Compiling and running a Hadoop C API program with gcc

gcc hdfs_cpp_demo.c -I $HADOOP_HOME/src/c++/libhdfs -I $JAVA_HOME/include -I $JAVA_HOME/include/linux/ -L $HADOOP_HOME/c++/Linux-i386-32/lib/ -l hdfs -L $JAVA_HOME/jre/lib/i386/server -ljvm -o hdfs_cpp_demo

The command above is for 32-bit Linux; note the Linux-i386-32 and i386 directory names.

On 64-bit Linux, use the following command instead:

gcc hdfs_cpp_demo.c \
    -I $HADOOP_HOME/src/c++/libhdfs \
    -I $JAVA_HOME/include \
    -I $JAVA_HOME/include/linux/ \
    -L $HADOOP_HOME/c++/Linux-amd64-64/lib/ -lhdfs \
    -L $JAVA_HOME/jre/lib/amd64/server -ljvm \
    -o hdfs_cpp_demo

When compilation finishes, it produces the hdfs_cpp_demo executable.

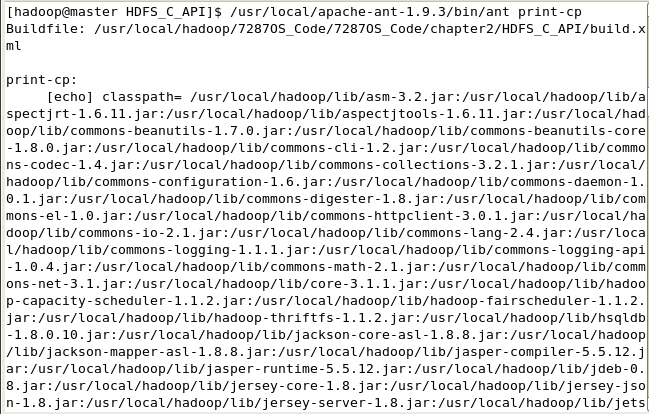
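The 32-bit and 64-bit commands differ only in two directory names. As a minimal sketch of that mapping, the C helper below builds the linker flags from a pointer width in bytes. The names `ArchDirs`, `arch_dirs_for` and `format_link_flags` are hypothetical and only illustrate the pattern; the directory names are the ones used in the commands above.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical pair of directory names that vary with the word size. */
typedef struct {
    const char *hadoop_dir;  /* under $HADOOP_HOME/c++/ */
    const char *jvm_dir;     /* under $JAVA_HOME/jre/lib/ */
} ArchDirs;

/* Maps a pointer width in bytes to the directory names from the two
 * gcc commands. Returns 0 on success, -1 for an unsupported width. */
int arch_dirs_for(size_t pointer_bytes, ArchDirs *out) {
    if (pointer_bytes == 8) {
        out->hadoop_dir = "Linux-amd64-64";
        out->jvm_dir = "amd64";
        return 0;
    }
    if (pointer_bytes == 4) {
        out->hadoop_dir = "Linux-i386-32";
        out->jvm_dir = "i386";
        return 0;
    }
    return -1;
}

/* Writes the -L/-l part of the gcc command into buf. Returns the number
 * of characters written, or -1 on an unsupported width or a short buffer. */
int format_link_flags(char *buf, size_t len, const char *hadoop_home,
                      const char *java_home, size_t pointer_bytes) {
    ArchDirs d;
    if (arch_dirs_for(pointer_bytes, &d) != 0) return -1;
    int n = snprintf(buf, len,
                     "-L %s/c++/%s/lib/ -lhdfs -L %s/jre/lib/%s/server -ljvm",
                     hadoop_home, d.hadoop_dir, java_home, d.jvm_dir);
    return (n < 0 || (size_t)n >= len) ? -1 : n;
}
```

Passing `sizeof(void *)` as `pointer_bytes` selects the directories for the toolchain that compiled the helper. This assumes the same toolchain will build hdfs_cpp_demo.c, which may not hold on a 64-bit system with a 32-bit compiler installed.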

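Next, run the executable. Before running it, set the CLASSPATH environment variable (note the uppercase name). We use ant to print the required classpath (the Ant package can be downloaded from the Apache website).

The command to print it is: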

[hadoop@master HDFS_C_API]$ /usr/local/apache-ant-1.9.3/bin/ant print-cp

This prints a long list of jar files.

We only need to export everything listed after classpath. The command is:

[hadoop@master HDFS_C_API]$ export CLASSPATH=/usr/local/hadoop/lib/asm-3.2.jar:/usr/local/hadoop/lib/aspectjrt-1.6.11.jar:/usr/local/hadoop/lib/aspectjtools-1.6.11.jar:/usr/local/hadoop/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/lib/commons-cli-1.2.jar:/usr/local/hadoop/lib/commons-codec-1.4.jar:/usr/local/hadoop/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/lib/commons-configuration-1.6.jar:/usr/local/hadoop/lib/commons-daemon-1.0.1.jar:/usr/local/hadoop/lib/commons-digester-1.8.jar:/usr/local/hadoop/lib/commons-el-1.0.jar:/usr/local/hadoop/lib/commons-httpclient-3.0.1.jar:/usr/local/hadoop/lib/commons-io-2.1.jar:/usr/local/hadoop/lib/commons-lang-2.4.jar:/usr/local/hadoop/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/lib/commons-logging-api-1.0.4.jar:/usr/local/hadoop/lib/commons-math-2.1.jar:/usr/local/hadoop/lib/commons-net-3.1.jar:/usr/local/hadoop/lib/core-3.1.1.jar:/usr/local/hadoop/lib/hadoop-capacity-scheduler-1.1.2.jar:/usr/local/hadoop/lib/hadoop-fairscheduler-1.1.2.jar:/usr/local/hadoop/lib/hadoop-thriftfs-1.1.2.jar:/usr/local/hadoop/lib/hsqldb-1.8.0.10.jar:/usr/local/hadoop/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/lib/jasper-compiler-5.5.12.jar:/usr/local/hadoop/lib/jasper-runtime-5.5.12.jar:/usr/local/hadoop/lib/jdeb-0.8.jar:/usr/local/hadoop/lib/jersey-core-1.8.jar:/usr/local/hadoop/lib/jersey-json-1.8.jar:/usr/local/hadoop/lib/jersey-server-1.8.jar:/usr/local/hadoop/lib/jets3t-0.6.1.jar:/usr/local/hadoop/lib/jetty-6.1.26.jar:/usr/local/hadoop/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/lib/jsch-0.1.42.jar:/usr/local/hadoop/lib/jsp-2.1/jsp-2.1.jar:/usr/local/hadoop/lib/jsp-2.1/jsp-api-2.1.jar:/usr/local/hadoop/lib/junit-4.5.jar:/usr/local/hadoop/lib/kfs-0.2.2.jar:/usr/local/hadoop/lib/log4j-1.2.15.jar:/usr/local/hadoop/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/lib/oro-2.0.8.jar:/usr/local/hadoop/lib/servlet-api-2.5-20081211.jar:/usr/local/hadoop/lib/slf4j-api-1.4.3.jar:/usr/local/hadoop/lib/slf4j-log4j12-1.4.3.jar:/usr/local/hadoop/lib/xmlenc-0.52.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter1/build/lib/hadoop-cookbook-chapter1.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter10/C10Samples.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter2/HDFS_Java_API/HDFSJavaAPI.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter3/build/lib/hadoop-cookbook-chapter1.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter3/build/lib/hadoop-cookbook-chapter3.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter4/C4LogProcessor.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter5/build/lib/hadoop-cookbook.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter6/build/lib/hadoop-cookbook-chapter6.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter8/build/lib/hadoop-cookbook-chapter6.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter8/build/lib/hadoop-cookbook-chapter8.jar:/usr/local/hadoop/7287OS_Code/7287OS_Code/chapter9/C9Samples.jar:/usr/local/hadoop/contrib/datajoin/hadoop-datajoin-1.1.2.jar:/usr/local/hadoop/contrib/failmon/hadoop-failmon-1.1.2.jar:/usr/local/hadoop/contrib/gridmix/hadoop-gridmix-1.1.2.jar:/usr/local/hadoop/contrib/hdfsproxy/hdfsproxy-2.0.jar:/usr/local/hadoop/contrib/index/hadoop-index-1.1.2.jar:/usr/local/hadoop/contrib/streaming/hadoop-streaming-1.1.2.jar:/usr/local/hadoop/contrib/vaidya/hadoop-vaidya-1.1.2.jar:/usr/local/hadoop/hadoop-ant-1.1.2.jar:/usr/local/hadoop/hadoop-client-1.1.2.jar:/usr/local/hadoop/hadoop-core-1.1.2.jar:/usr/local/hadoop/hadoop-examples-1.1.2.jar:/usr/local/hadoop/hadoop-minicluster-1.1.2.jar:/usr/local/hadoop/hadoop-test-1.1.2.jar:/usr/local/hadoop/hadoop-tools-1.1.2.jar:/usr/local/hadoop/hdfs1.jar:/usr/local/hadoop/hdfs2.jar:/usr/local/hadoop/ivy/ivy-2.1.0.jar:/usr/local/hadoop/mapreduce.jar:/usr/local/hadoop/minimapreduce.jar:/usr/local/hadoop/minimapreduce1.jar:/usr/local/hadoop/src/contrib/thriftfs/lib/hadoopthriftapi.jar:/usr/local/hadoop/src/contrib/thriftfs/lib/libthrift.jar:/usr/local/hadoop/src/test/lib/ftplet-api-1.0.0-SNAPSHOT.jar:/usr/local/hadoop/src/test/lib/ftpserver-core-1.0.0-SNAPSHOT.jar:/usr/local/hadoop/src/test/lib/ftpserver-server-1.0.0-SNAPSHOT.jar:/usr/local/hadoop/src/test/lib/mina-core-2.0.0-M2-20080407.124109-12.jar:/usr/local/hadoop/src/test/org/apache/hadoop/mapred/test.jar

Note that CLASSPATH must be written in uppercase and that there must be no spaces around the = sign.
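The exported value is a colon-separated list of jar paths. As a rough sketch of how such a list could be assembled without copying it by hand, the C code below collects the *.jar files in one directory and stores them in CLASSPATH. `join_jars` and `export_classpath` are hypothetical helper names, not part of libhdfs. The sketch assumes that libhdfs reads CLASSPATH when it starts the JVM, so the variable would have to be set before the first hdfsConnect call, and it covers only a single directory, whereas the list above spans several.

```c
#define _POSIX_C_SOURCE 200809L  /* for setenv() and glob() under strict -std modes */
#include <glob.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (not part of libhdfs): joins every *.jar found directly
 * inside `dir` with ':' separators. Returns a malloc'd string that the caller
 * frees, an empty string when nothing matches, or NULL on error. `dir` is
 * assumed to contain no glob metacharacters. */
char *join_jars(const char *dir) {
    char pattern[4096];
    int n = snprintf(pattern, sizeof pattern, "%s/*.jar", dir);
    if (n < 0 || (size_t)n >= sizeof pattern) return NULL;

    glob_t g;
    memset(&g, 0, sizeof g);
    int rc = glob(pattern, 0, NULL, &g);  /* matches come back sorted */
    if (rc != 0 && rc != GLOB_NOMATCH) {
        globfree(&g);
        return NULL;
    }
    size_t count = (rc == 0) ? g.gl_pathc : 0;

    size_t total = 1;  /* terminating NUL */
    for (size_t i = 0; i < count; i++)
        total += strlen(g.gl_pathv[i]) + 1;  /* path plus a ':' separator */

    char *out = malloc(total);
    if (out) {
        char *p = out;
        for (size_t i = 0; i < count; i++) {
            size_t len = strlen(g.gl_pathv[i]);
            if (i > 0) *p++ = ':';
            memcpy(p, g.gl_pathv[i], len);
            p += len;
        }
        *p = '\0';
    }
    globfree(&g);
    return out;
}

/* Sets CLASSPATH (uppercase) to the jars in `dir`, replacing any existing
 * value. Returns 0 on success, -1 if no jars were found or on error. */
int export_classpath(const char *dir) {
    char *cp = join_jars(dir);
    if (!cp || cp[0] == '\0') {
        free(cp);
        return -1;
    }
    int rc = setenv("CLASSPATH", cp, 1);
    free(cp);
    return rc;
}
```

A program could call, for example, `export_classpath("/usr/local/hadoop/lib")` at the top of `main`. To cover several directories, the strings returned by `join_jars` would need to be concatenated with ':' before calling `setenv`.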

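With the environment variable set, we can run the program using the command below. It is shown with the 32-bit paths; on 64-bit Linux, use the Linux-amd64-64 and amd64 directories instead.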

[hadoop@master HDFS_C_API]$ LD_LIBRARY_PATH=$HADOOP_HOME/c++/Linux-i386-32/lib:$JAVA_HOME/jre/lib/i386/server ./hdfs_cpp_demo

The output is:

Welcome to HDFS C API!!!

Done!

The test source is attached below:

[hadoop@master HDFS_C_API]$ cat hdfs_cpp_demo.c

// A libhdfs sample adapted from src/c++/libhdfs/hdfs_write.c in the Hadoop distribution.

#include <fcntl.h>   // O_WRONLY, O_CREAT, O_RDONLY
#include <stdio.h>   // fprintf
#include <stdlib.h>  // malloc, free, exit
#include <string.h>  // strlen

#include "hdfs.h"

int main(int argc, char **argv) {
    // Connect to the NameNode at master:9000.
    hdfsFS fs = hdfsConnect("master", 9000);
    if (!fs) {
        fprintf(stderr, "Cannot connect to HDFS.\n");
        exit(-1);
    }

    const char *fileName = "demo_c.txt";
    const char *message = "Welcome to HDFS C API!!!";
    int size = strlen(message);

    // hdfsExists returns 0 when the path exists.
    int exists = hdfsExists(fs, fileName);
    if (exists == 0) {
        fprintf(stdout, "File %s exists!\n", fileName);
    } else {
        // Create and open the file for writing.
        hdfsFile outFile = hdfsOpenFile(fs, fileName, O_WRONLY|O_CREAT, 0, 0, 0);
        if (!outFile) {
            fprintf(stderr, "Failed to open %s for writing!\n", fileName);
            exit(-2);
        }

        // Write the message to the file.
        if (hdfsWrite(fs, outFile, (void*)message, size) < 0) {
            fprintf(stderr, "Failed to write to %s!\n", fileName);
        }
        hdfsCloseFile(fs, outFile);
    }

    // Open the file for reading.
    hdfsFile inFile = hdfsOpenFile(fs, fileName, O_RDONLY, 0, 0, 0);
    if (!inFile) {
        fprintf(stderr, "Failed to open %s for reading!\n", fileName);
        exit(-2);
    }

    // Reserve one extra byte so the buffer can be NUL-terminated before printing.
    char *data = malloc(size + 1);
    if (!data) {
        fprintf(stderr, "Out of memory.\n");
        exit(-3);
    }

    // Read from the file.
    tSize readSize = hdfsRead(fs, inFile, (void*)data, size);
    if (readSize < 0) {
        fprintf(stderr, "Failed to read from %s!\n", fileName);
    } else {
        data[readSize] = '\0';
        fprintf(stdout, "%s\n", data);
    }

    free(data);
    hdfsCloseFile(fs, inFile);
    hdfsDisconnect(fs);
    return 0;
}