Advanced Disk Management on CentOS 7.0: A RAID 1+0 Setup

How to build the RAID + LVM layout that enterprises commonly use:

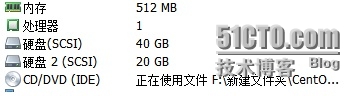

Software used: VMware Workstation 10

System environment:

[root@localhost ~]# cat /etc/centos-release

CentOS Linux release 7.0.1406 (Core)

[root@localhost ~]# rpm -qi mdadm

Name : mdadm

Version : 3.2.6

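The RAID 1+0 scheme

Overview

Disks are first mirrored, and the mirror groups are then striped together. For example, 8 disks are split into 4 base groups of 2 disks each; each group becomes a RAID 1 mirror, and the 4 mirrors are then striped as a RAID 0.

As a result:

Every base group may lose one disk, but no base group may lose both of its disks at the same time.

Advantages: good data safety. As long as the two disks of the same mirror pair do not fail together, the array keeps serving data. Recovery is fast, since only the failed disk's mirror partner has to be copied.

Disadvantage: write performance is slightly worse than RAID 0+1 (read performance is the same).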
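The failure rule above can be sketched as a small shell check. This is only an illustration: the function name `raid10_survives` and the numbering (disks 2k and 2k+1 form mirror pair k) are assumptions made for the example, and the disk numbers passed in are expected to be distinct.

```shell
# Hypothetical helper: does a RAID 1+0 array survive the given failed disks?
# Assumed numbering: disks 2k and 2k+1 form mirror pair k.
raid10_survives() {
    local seen="" d pair
    for d in "$@"; do
        pair=$(( d / 2 ))
        case " $seen " in
            *" $pair "*) echo "no"; return 1 ;;   # second failure in the same pair
        esac
        seen="$seen $pair"
    done
    echo "yes"
}

raid10_survives 0 2 4 6        # one disk lost in each of four pairs
raid10_survives 0 1 || true    # both disks of pair 0 lost
```

The first call loses one disk per pair and is survivable; the second loses a whole mirror pair, which is the one case RAID 1+0 cannot tolerate.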

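My approach

Create four 1 GiB partitions on the newly added disk: partitions 1, 2, 3 and 5.

Combine them in pairs into two RAID 1 volumes, /dev/md{1,2}.

Combine these two RAID 1 volumes into one RAID 0 volume, /dev/md0.

Turn the RAID 0 volume into a PV and then a VG.

Then create logical volumes (LVs) for the space you need.

That space then has RAID 1+0 characteristics.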
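Before touching the disk, it can help to print the whole plan as a dry run. The sketch below only echoes commands and executes nothing; the function name `print_raid10_plan` is made up for this example, and the defaults (/dev/sdb, a VG named first, an LV named data1 of 500M) are simply the values used later in this walkthrough.

```shell
# Dry run: print the commands for the plan above without executing them.
# Arguments (all optional): disk, volume group name, logical volume name.
print_raid10_plan() {
    local disk="${1:-/dev/sdb}" vg="${2:-first}" lv="${3:-data1}"
    echo "mdadm --create /dev/md1 --level=1 --raid-devices=2 ${disk}1 ${disk}2"
    echo "mdadm --create /dev/md2 --level=1 --raid-devices=2 ${disk}3 ${disk}5"
    echo "mdadm --create /dev/md0 --level=0 --raid-devices=2 /dev/md1 /dev/md2"
    echo "pvcreate /dev/md0"
    echo "vgcreate ${vg} /dev/md0"
    echo "lvcreate -L 500M -n ${lv} ${vg}"
}

print_raid10_plan
```

Note that the third command uses --level=0: the two mirrors are striped, not mirrored again.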

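Detailed procedure

Here we add a second hard disk to the virtual machine for the experiment. (Building the mirrors from partitions of a single disk is for practice only; real redundancy needs separate physical disks.)

To make the system see the new disk without rebooting, rescan the SCSI hosts with the following commands:

[root@localhost ~]# echo "- - -" > /sys/class/scsi_host/host0/scan

[root@localhost ~]# echo "- - -" > /sys/class/scsi_host/host1/scan

[root@localhost ~]# echo "- - -" > /sys/class/scsi_host/host2/scan

[root@localhost ~]# fdisk -l

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

The newly added disk shows up as sdb.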
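The three rescan commands can be generalized into a loop, so that you do not need to know how many SCSI hosts the machine has. This is a minimal sketch; the function name `rescan_scsi_hosts` and its optional base-directory argument are additions made for illustration (the argument also makes the function easy to try against a scratch directory).

```shell
# Write "- - -" to the scan file of every SCSI host under a base directory.
# Default base directory: /sys/class/scsi_host
rescan_scsi_hosts() {
    local base="${1:-/sys/class/scsi_host}" f
    for f in "$base"/host*/scan; do
        [ -e "$f" ] || continue      # skip if the glob matched nothing
        echo "- - -" > "$f"
    done
}

# As root on the real system:
# rescan_scsi_hosts
```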

Now the actual experiment begins.

[root@localhost ~]# fdisk /dev/sdb

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039): +1G

Partition 1 of type Linux and of size 1 GiB is set

Command (m for help): n

Partition type:

p primary (1 primary, 0 extended, 3 free)

e extended

Select (default p):

Using default response p

Partition number (2-4, default 2):

First sector (2099200-41943039, default 2099200):

Using default value 2099200

Last sector, +sectors or +size{K,M,G} (2099200-41943039, default 41943039): +1G

Partition 2 of type Linux and of size 1 GiB is set

Command (m for help): n

Partition type:

p primary (2 primary, 0 extended, 2 free)

e extended

Select (default p):

Using default response p

Partition number (3,4, default 3):

First sector (4196352-41943039, default 4196352):

Using default value 4196352

Last sector, +sectors or +size{K,M,G} (4196352-41943039, default 41943039): +1G

Partition 3 of type Linux and of size 1 GiB is set

Command (m for help): n

Partition type:

p primary (3 primary, 0 extended, 1 free)

e extended

Select (default e):

Using default response e

Selected partition 4

First sector (6293504-41943039, default 6293504):

Using default value 6293504

Last sector, +sectors or +size{K,M,G} (6293504-41943039, default 41943039):

Using default value 41943039

Partition 4 of type Extended and of size 17 GiB is set

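All remaining space goes to the extended partition; partitions created after this are logical partitions (type l) inside it.

Their numbers start at 5 and count upward.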

Command (m for help): n

All primary partitions are in use

Adding logical partition 5

First sector (6295552-41943039, default 6295552):

Using default value 6295552

Last sector, +sectors or +size{K,M,G} (6295552-41943039, default 41943039): +1G

Partition 5 of type Linux and of size 1 GiB is set

Command (m for help): p

Disk /dev/sdb: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0x8e9a9326

Device Boot Start End Blocks Id System

/dev/sdb1 2048 2099199 1048576 83 Linux

/dev/sdb2 2099200 4196351 1048576 83 Linux

/dev/sdb3 4196352 6293503 1048576 83 Linux

/dev/sdb4 6293504 41943039 17824768 5 Extended

/dev/sdb5 6295552 8392703 1048576 83 Linux

/dev/sdb6 8394752 10491903 1048576 83 Linux
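The Start and End columns follow directly from the +1G answers given to fdisk. As a quick sanity check, the arithmetic can be reproduced in the shell; the helper name `last_sector` is made up for this example, and it assumes the 512-byte sectors reported for /dev/sdb.

```shell
# Last sector of a partition that starts at START and is GIB gibibytes long,
# assuming 512-byte sectors.
last_sector() {
    local start="$1" gib="$2"
    echo $(( start + gib * 1024 * 1024 * 1024 / 512 - 1 ))
}

last_sector 2048 1       # /dev/sdb1
last_sector 6295552 1    # /dev/sdb5
```

The two calls print 2099199 and 8392703, matching the End column for /dev/sdb1 and /dev/sdb5 in the table.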

[root@localhost ~]# mdadm -C /dev/md1 -a yes -l 1 -n 2 /dev/sdb{1,2}

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array?

Continue creating array? (y/n) y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md1 started.

[root@localhost ~]# mdadm -C /dev/md2 -a yes -l 1 -n 2 /dev/sdb{3,5}

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array?

Continue creating array? (y/n) y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md2 started.

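[root@localhost ~]# mdadm -C /dev/md0 -a yes -l 0 -n 2 /dev/md{1,2}   (stripe the two RAID 1 arrays into a RAID 0)

Note: the level here must be 0. The session recorded below was actually run with -l 1, which mirrors md1 and md2 instead of striping them; that is why the volume group later offers only about 1 GiB. With -l 0 the array is about 2 GiB.

mdadm: Note: this array has metadata at the start and

may not be suitable as a boot device. If you plan to

store '/boot' on this device please ensure that

your boot-loader understands md/v1.x metadata, or use

--metadata=0.90

Continue creating array?

Continue creating array? (y/n) y

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.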
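After creating the arrays it is worth confirming their levels, since a wrong -l value is easy to miss. One way is to read /proc/mdstat, where each active array appears on a line such as "md1 : active raid1 sdb2[1] sdb1[0]". The sketch below prints just the array name and level; the function name `list_md_arrays` and its optional file argument are assumptions made for this example.

```shell
# Print "<array> <level>" for every active md array listed in an mdstat file.
# Default input: /proc/mdstat
list_md_arrays() {
    local file="${1:-/proc/mdstat}"
    awk '$2 == ":" && $3 == "active" { print $1, $4 }' "$file"
}

# Only runs where the md driver is loaded.
if [ -r /proc/mdstat ]; then
    list_md_arrays
fi
```

For the layout in this article, md1 and md2 should be reported as raid1 and md0 as raid0. mdadm --detail /dev/md0 gives a fuller report for a single array.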

[root@localhost ~]# pvcreate /dev/md0   (create the PV)

Physical volume "/dev/md0" successfully created

[root@localhost ~]# vgcreate first /dev/md0   (create the VG)

Volume group "first" successfully created

[root@localhost ~]# lvcreate -L 1G -n data1 first

Volume group "first" has insufficient free space (255 extents): 256 required.

[root@localhost ~]# lvcreate -L 500M -n data1 first   (allocate the size you want)

Logical volume "data1" created
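The "255 extents: 256 required" message is simple arithmetic: LVM allocates space in whole physical extents, 4 MiB each by default, and a little of the device is taken by metadata. A minimal sketch of the calculation follows; the helper name `extents_needed` is made up here, and the 4 MiB default is assumed because vgcreate was run without -s.

```shell
# Physical extents needed for a logical volume of SIZE_MIB mebibytes.
# Second argument: extent size in MiB (LVM default is 4).
extents_needed() {
    local size_mib="$1" pe_mib="${2:-4}"
    echo $(( (size_mib + pe_mib - 1) / pe_mib ))   # round up to whole extents
}

extents_needed 1024   # 1 GiB: 256 extents, one more than the 255 available
extents_needed 500    # 500 MiB: 125 extents, which fits
```

If you simply want all the free space, lvcreate -l 100%FREE -n data1 first avoids counting extents by hand.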

[root@localhost ~]# mkfs.ext4 /dev/first/data1   (format)

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=1024 (log=0)

Fragment size=1024 (log=0)

Stride=0 blocks, Stripe width=0 blocks

128016 inodes, 512000 blocks

25600 blocks (5.00%) reserved for the super user

First data block=1

Maximum filesystem blocks=34078720

63 block groups

8192 blocks per group, 8192 fragments per group

2032 inodes per group

Superblock backups stored on blocks:

8193, 24577, 40961, 57345, 73729, 204801, 221185, 401409

Allocating group tables: done

Writing inode tables: done

Creating journal (8192 blocks): done

Writing superblocks and filesystem accounting information: done

[root@localhost ~]# mkdir /lvm1

[root@localhost ~]# mount /dev/first/data1 /lvm1

[root@localhost ~]# cd /lvm1

[root@localhost lvm1]# ll

total 12

drwx------ 2 root root 12288 Aug 12 21:21 lost+found

Once mounted, the new space is ready to use.
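The mount above does not survive a reboot. One common way to make it persistent is an /etc/fstab entry plus a saved array definition. The sketch below only generates the fstab line; the helper name `fstab_line` and the choice of plain "defaults 0 0" options are assumptions for this example, not something the original setup specified.

```shell
# Print an /etc/fstab line for a device and mount point.
# Third argument: filesystem type (default ext4).
fstab_line() {
    local dev="$1" mnt="$2" fstype="${3:-ext4}"
    printf '%s %s %s defaults 0 0\n' "$dev" "$mnt" "$fstype"
}

fstab_line /dev/first/data1 /lvm1

# As root, to persist the setup:
# fstab_line /dev/first/data1 /lvm1 >> /etc/fstab
# mdadm --detail --scan >> /etc/mdadm.conf
```

Check the result with mount -a before rebooting, so that a typo in /etc/fstab does not stop the system from booting.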

Example

As an example, take 20 disks.

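Build RAID 1+0 as 10 mirror pairs of 2 disks each, striped together. If one disk fails, the other 19 keep working; a further failure is harmless as long as it does not hit the same mirror pair.

Recommendation: with many disks, the chance of simultaneous failures is higher, so prefer RAID 1+0 for its higher safety margin, even at a small cost in performance (in practice the difference is slight).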
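To put a number on the 20-disk example: if two disks fail and every pair of disks is equally likely to be the one that fails (a simplifying assumption), only the combinations that land on the same mirror pair are fatal. The helper name `two_disk_failure_odds` is made up for this sketch.

```shell
# For N disks arranged as N/2 mirror pairs, count all two-disk failure
# combinations and how many of them hit the same mirror pair.
two_disk_failure_odds() {
    local n="$1"
    local total=$(( n * (n - 1) / 2 ))   # all ways to pick 2 failed disks
    local fatal=$(( n / 2 ))             # one fatal combination per mirror pair
    echo "$fatal fatal out of $total"
}

two_disk_failure_odds 20
```

For 20 disks this prints "10 fatal out of 190", that is, 1 chance in 19 that a second failure takes the array down.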

Afterword:

I am a beginner; if you find any mistakes, corrections are welcome.