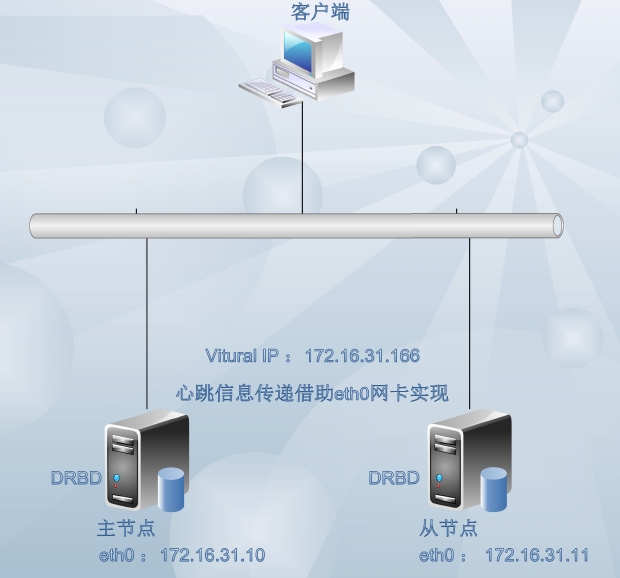

Building a highly available database server cluster with corosync + pacemaker + crmsh + DRBD

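DRBD (Distributed Replicated Block Device) is a distributed storage system for the Linux platform. It consists of a kernel module, several user-space management programs and shell scripts, and is typically used in high-availability (HA) clusters. DRBD is similar to RAID 1 (mirroring), except that RAID 1 mirrors disks inside one machine, whereas DRBD mirrors them over the network.

DRBD is free software distributed under the GPLv2 license.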

Lab architecture diagram:

I. Prerequisites for building the HA cluster

1. Make host names resolve on every node so the nodes can reach each other by name

[root@node1 ~]# vim /etc/hosts
127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
172.16.31.10 node1.stu31.com node1
172.16.31.11 node2.stu31.com node2

Copy the file to node2:

[root@node1 ~]# scp /etc/hosts node2:/etc/hosts
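Before going further, you can confirm that every node name is present in the hosts file. The sketch below reads the file directly. `hosts_lookup` and `check_cluster_hosts` are hypothetical helper names. To go through the system resolver instead, `getent hosts node2` is the standard tool.

```shell
#!/usr/bin/env bash
# Print the IP address that a hosts-format file maps to NAME (FQDN or alias).
# Prints nothing if the name is not found.
# Usage: hosts_lookup NAME [FILE]   (FILE defaults to /etc/hosts)
hosts_lookup() {
    local name=$1 file=${2:-/etc/hosts}
    awk -v n="$name" '
        /^[[:space:]]*#/ { next }            # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
    ' "$file"
}

# Fail (and report the first missing name) if any node is not in the file.
# Usage: check_cluster_hosts FILE NODE...
check_cluster_hosts() {
    local file=$1; shift
    local node
    for node in "$@"; do
        if [ -z "$(hosts_lookup "$node" "$file")" ]; then
            echo "missing hosts entry: $node" >&2
            return 1
        fi
    done
}
```

For example, run `check_cluster_hosts /etc/hosts node1 node2 node1.stu31.com node2.stu31.com && echo ok` on each node after copying the file.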

2. Set up key-based (passwordless) SSH between the nodes

Node 1:
[root@node1 ~]# ssh-keygen -t rsa -P ""
[root@node1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node2
Node 2:
[root@node2 ~]# ssh-keygen -t rsa -P ""
[root@node2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node1

Test passwordless SSH:

[root@node2 ~]# date ; ssh node1 'date'
Fri Jan 2 12:34:02 CST 2015
Fri Jan 2 12:34:02 CST 2015

The clocks are synchronized: both nodes report the same time.
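The comparison above is done by eye. It can also be scripted on epoch seconds. `clock_skew_ok` is a hypothetical helper, and the one-second default tolerance is an assumption. The SSH round trip itself adds some delay. A production cluster would keep the clocks in sync with NTP rather than rely on a one-off check.

```shell
#!/usr/bin/env bash
# Succeed if two Unix timestamps differ by at most MAX_SKEW seconds.
# Usage: clock_skew_ok LOCAL_EPOCH REMOTE_EPOCH [MAX_SKEW]   (default 1)
clock_skew_ok() {
    local a=$1 b=$2 max=${3:-1}
    local diff=$(( a - b ))
    (( diff < 0 )) && diff=$(( -diff ))   # absolute difference
    (( diff <= max ))
}
```

Typical use on node2: `clock_skew_ok "$(date +%s)" "$(ssh node1 'date +%s')" && echo "clocks in sync"`.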

II. Installing DRBD

1. Obtain the DRBD packages. The kernel version of CentOS 6.6 is 2.6.32-504:

[root@node1 ~]# uname -r
2.6.32-504.el6.x86_64

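DRBD has been part of the mainline Linux kernel since 2.6.33, so on those kernels only the management tools need to be installed. If the kernel is older than 2.6.33, you must also install the DRBD kernel module, and its version must match the management tools.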
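The kernel-version rule above can be checked with GNU `sort -V`. This is a minimal sketch and the helper names are hypothetical. It encodes only the 2.6.33 threshold stated here. Whatever the kernel, the DRBD module and the user-space tools must still be the same DRBD version.

```shell
#!/usr/bin/env bash
# Succeed if version A is strictly lower than version B (GNU sort -V ordering).
version_lt() {
    [ "$1" != "$2" ] &&
        [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}

# Succeed if a separately packaged DRBD kernel module (e.g. kmod-drbd84) is
# needed, i.e. the kernel's base version is older than 2.6.33.
# Usage: needs_drbd_kmod [RELEASE]   (defaults to `uname -r`)
needs_drbd_kmod() {
    local release=${1:-$(uname -r)}
    local base=${release%%-*}          # "2.6.32-504.el6.x86_64" -> "2.6.32"
    version_lt "$base" "2.6.33"
}
```

On the CentOS 6.6 nodes, `needs_drbd_kmod && echo "install kmod-drbd84 too"` prints the reminder.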

kmod-drbd84-8.4.5-504.1.el6.x86_64.rpm
drbd84-utils-8.9.1-1.el6.elrepo.x86_64.rpm

These packages were built from source; they can be downloaded from the attachment to this article:

2. Install the packages on both node 1 and node 2

The installation takes a long time:

[root@node1 ~]# rpm -ivh drbd84-utils-8.9.1-1.el6.elrepo.x86_64.rpm kmod-drbd84-8.4.5-504.1.el6.x86_64.rpm
warning: drbd84-utils-8.9.1-1.el6.elrepo.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID baadae52: NOKEY
Preparing...                ########################################### [100%]
   1:drbd84-utils           ########################################### [ 50%]
   2:kmod-drbd84            ########################################### [100%]
Working. This may take some time ...
Done.

3. Prepare the storage device on each node

Perform this on both node 1 and node 2:

# fdisk keystrokes: n = new, p = primary, 3 = partition number, Enter = default start, +1G = size, w = write
[root@node1 ~]# echo -n -e "n\np\n3\n\n+1G\nw\n" | fdisk /dev/sda
[root@node1 ~]# partx -a /dev/sda
BLKPG: Device or resource busy
error adding partition 1
BLKPG: Device or resource busy
error adding partition 2
BLKPG: Device or resource busy
error adding partition 3
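`partx -a` prints `BLKPG: Device or resource busy` for partitions that the kernel already has registered. Its error lines alone therefore do not show whether sda3 is now visible to the kernel. One way to confirm this is to look for the partition in `/proc/partitions`. `has_partition` below is a hypothetical helper that does exactly that.

```shell
#!/usr/bin/env bash
# Succeed if a /proc/partitions-format file lists partition NAME (e.g. sda3).
# Columns are: major minor #blocks name.
# Usage: has_partition NAME [FILE]   (FILE defaults to /proc/partitions)
has_partition() {
    local name=$1 file=${2:-/proc/partitions}
    awk -v n="$name" '$4 == n { found = 1 } END { exit !found }' "$file"
}
```

Running `has_partition sda3 || echo "reboot or re-read the partition table"` on each node catches a partition the kernel has not picked up yet.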

IV. Configuring DRBD

1. The DRBD configuration file:

[root@node1 ~]# vim /etc/drbd.conf
# You can find an example in /usr/share/doc/drbd.../drbd.conf.example
include "drbd.d/global_common.conf";
include "drbd.d/*.res";

All of DRBD is controlled from the configuration file /etc/drbd.conf. It normally contains the following:

include "/etc/drbd.d/global_common.conf";
include "/etc/drbd.d/*.res";

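Normally, /etc/drbd.d/global_common.conf holds the global and common sections of the DRBD configuration, and each .res file holds one resource section.

It is possible to keep the whole configuration in a single drbd.conf file. However, such a file quickly becomes cluttered and hard to manage, which is one reason the multi-file layout is preferred.

Whichever layout you choose, drbd.conf and every other configuration file must be identical on all cluster nodes.

2. Configure DRBD's global and common settings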

[root@node1 drbd.d]# cat global_common.conf
# DRBD is the result of over a decade of development by LINBIT.
# In case you need professional services for DRBD or have
# feature requests visit http://www.linbit.com

global {
    # usage-count: report version statistics to LINBIT's HTTP server whenever a new
    # DRBD version is installed. Enabled by default; disabled here.
    usage-count no;
    # minor-count dialog-refresh disable-ip-verification
}

common {
    handlers {
        # These are EXAMPLE handlers only.
        # They may have severe implications,
        # like hard resetting the node under certain circumstances.
        # Be careful when choosing your poison.

        # Node is Primary but its local data is inconsistent: notify, then force a reboot
        pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        # Primary that lost after split-brain recovery: notify, then force a reboot
        pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";
        # Local I/O error: notify, then force a shutdown
        local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";
        # fence-peer "/usr/lib/drbd/crm-fence-peer.sh";
        # split-brain "/usr/lib/drbd/notify-split-brain.sh root";
        # out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";
        # before-resync-target "/usr/lib/drbd/snapshot-resync-target-lvm.sh -p 15 -- -c 16k";
        # after-resync-target /usr/lib/drbd/unsnapshot-resync-target-lvm.sh;
    }

    startup {
        # wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb
    }

    options {
        # cpu-mask on-no-data-accessible
    }

    disk {
        # size on-io-error fencing disk-barrier disk-flushes
        # On a local disk I/O error, detach the backing device
        on-io-error detach;
        # disk-drain md-flushes resync-rate resync-after al-extents
        # c-plan-ahead c-delay-target c-fill-target c-max-rate
        # c-min-rate disk-timeout
    }

    net {
        # protocol timeout max-epoch-size max-buffers unplug-watermark
        # Use fully synchronous replication (Protocol C) unless specified otherwise:
        # a write counts as complete only after the remote host has acknowledged it.
        protocol C;
        # connect-int ping-int sndbuf-size rcvbuf-size ko-count
        # allow-two-primaries cram-hmac-alg shared-secret after-sb-0pri
        # Digest algorithm used to authenticate the peers
        cram-hmac-alg "sha1";
        # Shared secret for peer authentication
        shared-secret "password";
        # after-sb-1pri after-sb-2pri always-asbp rr-conflict
        # ping-timeout data-integrity-alg tcp-cork on-congestion
        # congestion-fill congestion-extents csums-alg verify-alg
        # use-rle
    }

    syncer {
        # Maximum bandwidth for resynchronization between the nodes (1000M, about 1000 MB/s).
        # The syncer section is legacy syntax in DRBD 8.4, whose equivalent is resync-rate in disk {}.
        rate 1000M;
    }
}

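3. Define the storage resource for the nodes

A DRBD device (/dev/drbdX) is called a "resource". Its definition contains the IP addresses of the primary and secondary nodes, the name of the underlying storage device, the device size, where the metadata is stored, the device name DRBD exposes, and so on.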

[root@node1 drbd.d]# vim mystore.res
resource mystore {
    # Each host section starts with "on" followed by the host name (as reported by uname -n);
    # the {} block that follows holds that host's settings.
    on node1.stu31.com {
        device    /dev/drbd0;
        disk      /dev/sda3;
        # Address and port DRBD listens on to communicate with the other host
        address   172.16.31.10:7789;
        meta-disk internal;
    }
    on node2.stu31.com {
        device    /dev/drbd0;
        disk      /dev/sda3;
        address   172.16.31.11:7789;
        meta-disk internal;
    }
}
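The two `on` sections differ only in host name and address. The resource file can therefore be generated from a few variables, which helps keep the nodes consistent. The sketch below is a hypothetical generator (`gen_drbd_res` is not part of DRBD) that prints the same layout as mystore.res above. Afterwards, `drbdadm dump mystore` shows whether DRBD parses the result.

```shell
#!/usr/bin/env bash
# Print a DRBD resource definition with one "on" section per HOST/IP pair.
# Usage: gen_drbd_res RES DEVICE DISK PORT HOST1 IP1 HOST2 IP2 [...]
gen_drbd_res() {
    local res=$1 dev=$2 disk=$3 port=$4
    shift 4
    printf 'resource %s {\n' "$res"
    while [ $# -ge 2 ]; do               # consume HOST IP pairs
        printf '    on %s {\n' "$1"
        printf '        device    %s;\n' "$dev"
        printf '        disk      %s;\n' "$disk"
        printf '        address   %s:%s;\n' "$2" "$port"
        printf '        meta-disk internal;\n'
        printf '    }\n'
        shift 2
    done
    printf '}\n'
}
```

Example: `gen_drbd_res mystore /dev/drbd0 /dev/sda3 7789 node1.stu31.com 172.16.31.10 node2.stu31.com 172.16.31.11 > /etc/drbd.d/mystore.res`.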

When you are done, copy both files to node 2:

[root@node1 drbd.d]# ls
global_common.conf  mystore.res
[root@node1 drbd.d]# scp * node2:/etc/drbd.d/
global_common.conf                100% 2105     2.1KB/s   00:00
mystore.res                       100%  318     0.3KB/s   00:00

4. Create the metadata

Before starting DRBD, create the metadata block in which DRBD records its state, on the sda partition of each host. Run this on both hosts:

[root@node1 drbd.d]# drbdadm create-md mystore
initializing activity log
NOT initializing bitmap
Writing meta data...
New drbd meta data block successfully created.
[root@node2 ~]# drbdadm create-md mystore
initializing activity log
NOT initializing bitmap
Writing meta data...
New drbd meta data block successfully created.

5. Start the DRBD service

[root@node1 ~]# /etc/init.d/drbd start
Starting DRBD resources: [
     create res: mystore
   prepare disk: mystore
    adjust disk: mystore
     adjust net: mystore
]
..........
***************************************************************
 DRBD's startup script waits for the peer node(s) to appear.
 - In case this node was already a degraded cluster before the
   reboot the timeout is 0 seconds. [degr-wfc-timeout]
 - If the peer was available before the reboot the timeout will
   expire after 0 seconds. [wfc-timeout]
   (These values are for resource 'mystore'; 0 sec -> wait forever)
 To abort waiting enter 'yes' [ 21]:
.
[root@node1 ~]#

Start drbd on node 2:

[root@node2 ~]# /etc/init.d/drbd start
Starting DRBD resources: [
     create res: mystore
   prepare disk: mystore
    adjust disk: mystore
     adjust net: mystore
]
.

6. Check the DRBD status on both hosts

[root@node1 ~]# cat /proc/drbd
version: 8.4.5 (api:1/proto:86-101)
GIT-hash: 1d360bde0e095d495786eaeb2a1ac76888e4db96 build by [email protected], 2015-01-02 12:06:20
 0: cs:Connected ro:Secondary/Secondary ds:Inconsistent/Inconsistent C r-----
    ns:0 nr:0 dw:0 dr:0 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:1059216

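The fields in the output mean the following:

ro: role. When DRBD starts for the first time, both nodes are Secondary by default.

ds: disk state. "Inconsistent/Inconsistent" means the data on the two nodes' disks is not yet consistent.

ns: network send, the amount of data sent to the peer over the network.

dw: disk write, the amount of data written to the local disk.

dr: disk read, the amount of data read from the local disk.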
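In scripts, these fields can be read out of `/proc/drbd` instead of by eye. The sketch assumes the DRBD 8.4 text format shown above. `drbd_field` is a hypothetical helper and returns the value for the first resource only. For `ro` and `ds`, the value is written as local/peer.

```shell
#!/usr/bin/env bash
# Print the value of one status field (cs, ro, ds, ns, dw, dr, oos, ...) from
# /proc/drbd-style text on stdin; the first match (first resource) wins.
# Usage: drbd_field FIELD < /proc/drbd
drbd_field() {
    awk -v k="$1:" '
        {
            for (i = 1; i <= NF; i++)
                if (index($i, k) == 1) { print substr($i, length(k) + 1); exit }
        }
    '
}
```

For example, `ro=$(drbd_field ro < /proc/drbd); echo "local role: ${ro%%/*}"` prints the role of the node the command runs on.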

7. Set the primary node

By default neither host is primary, so you must choose which host becomes the primary and run the following on it. Here node1 is the primary:

# Force this node to become the primary
[root@node1 ~]# drbdadm primary --force mystore

Watch the synchronization:

[root@node1 ~]# drbd-overview

0:mystore/0 SyncSource Primary/Secondary UpToDate/Inconsistent

[=====>..............] sync'ed: 32.1% (724368/1059216)K

[root@node1 ~]# watch -n1 'cat /proc/drbd'
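Instead of watching the progress manually, a script can wait until both disks report UpToDate. This is a minimal polling sketch. `wait_drbd_synced`, its default timeout and its polling interval are assumptions, and it looks only at the first resource in the file.

```shell
#!/usr/bin/env bash
# Poll a /proc/drbd-style file until the first resource's disk state is
# UpToDate/UpToDate; give up after TIMEOUT seconds.
# Usage: wait_drbd_synced [FILE] [TIMEOUT] [INTERVAL]
wait_drbd_synced() {
    local file=${1:-/proc/drbd} timeout=${2:-3600} interval=${3:-5}
    local waited=0 ds
    while :; do
        ds=$(awk '{ for (i = 1; i <= NF; i++) if ($i ~ /^ds:/) { print substr($i, 4); exit } }' "$file")
        [ "$ds" = "UpToDate/UpToDate" ] && return 0
        if (( waited >= timeout )); then
            echo "not in sync after ${timeout}s (ds=${ds:-unknown})" >&2
            return 1
        fi
        sleep "$interval"
        waited=$(( waited + interval ))
    done
}
```

On node1, `wait_drbd_synced && echo "initial sync finished"` blocks until the resync reported by drbd-overview completes.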

When the synchronization finishes, check the node status:

[root@node1 ~]# cat /proc/drbd
version: 8.4.5 (api:1/proto:86-101)
GIT-hash: 1d360bde0e095d495786eaeb2a1ac76888e4db96 build by [email protected], 2015-01-02 12:06:20
 0: cs:Connected ro:Primary/Secondary ds:UpToDate/UpToDate C r-----
    ns:1059216 nr:0 dw:0 dr:1059912 al:0 bm:0 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:0

8. Create a file system on the device

[root@node1 ~]# mke2fs -t ext4 /dev/drbd0

Mount it on a directory:
[root@node1 ~]# mount /dev/drbd0 /mnt
Copy a file to /mnt:
[root@node1 ~]# cp /etc/issue /mnt
Unmount the storage:
[root@node1 ~]# umount /mnt

9. Demote the primary to secondary and promote node2 to primary

Demote node 1 to secondary:
[root@node1 ~]# drbdadm secondary mystore
[root@node1 ~]# drbd-overview
  0:mystore/0  Connected Secondary/Secondary UpToDate/UpToDate
Promote node 2 to primary:
[root@node2 ~]# drbdadm primary mystore
[root@node2 ~]# drbd-overview
  0:mystore/0  Connected Primary/Secondary UpToDate/UpToDate

Mount the file system and check that the file is there:

[root@node2 ~]# mount /dev/drbd0 /mnt

[root@node2 ~]# ls /mnt

issue lost+found

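Notes:

(1) A DRBD device must be switched to the Primary role before it can be mounted.

(2) Of the two nodes, only one can be Primary at any given time; the other is Secondary.

(3) A server in the Secondary role cannot mount the DRBD device.

(4) The two synchronized partitions should ideally be the same size so that no disk space is wasted, because DRBD mirroring is effectively network RAID 1.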

10. Stop the drbd service and disable it at boot:

Node 1:
[root@node1 ~]# service drbd stop
Stopping all DRBD resources: .
[root@node1 ~]# chkconfig drbd off
Node 2:
[root@node2 ~]# service drbd stop
Stopping all DRBD resources: .
[root@node2 ~]# chkconfig drbd off

V. A highly available MariaDB cluster with corosync + pacemaker + drbd

1. Install the corosync and pacemaker packages on both node 1 and node 2

# yum install corosync pacemaker -y

2. Create and edit the configuration file

[root@node1 ~]# cd /etc/corosync/
[root@node1 corosync]# cp corosync.conf.example corosync.conf

[root@node1 corosync]# cat corosync.conf
# Please read the corosync.conf.5 manual page
compatibility: whitetank

totem {
    version: 2

    # secauth: Enable mutual node authentication. If you choose to
    # enable this ("on"), then do remember to create a shared
    # secret with "corosync-keygen".
    # Enable authentication
    secauth: on

    threads: 0

    # interface: define at least one interface to communicate
    # over. If you define more than one interface stanza, you must
    # also set rrp_mode.
    interface {
        # Rings must be consecutively numbered, starting at 0.
        ringnumber: 0
        # This is normally the *network* address of the
        # interface to bind to. This ensures that you can use
        # identical instances of this configuration file
        # across all your cluster nodes, without having to
        # modify this option.
        # Network address to bind to
        bindnetaddr: 172.16.31.0
        # However, if you have multiple physical network
        # interfaces configured for the same subnet, then the
        # network address alone is not sufficient to identify
        # the interface Corosync should bind to. In that case,
        # configure the *host* address of the interface
        # instead:
        # bindnetaddr: 192.168.1.1
        # When selecting a multicast address, consider RFC
        # 2365 (which, among other things, specifies that
        # 239.255.x.x addresses are left to the discretion of
        # the network administrator). Do not reuse multicast
        # addresses across multiple Corosync clusters sharing
        # the same network.
        # Multicast address
        mcastaddr: 239.224.131.31
        # Corosync uses the port you specify here for UDP
        # messaging, and also the immediately preceding
        # port. Thus if you set this to 5405, Corosync sends
        # messages over UDP ports 5405 and 5404.
        # Port used for cluster messaging
        mcastport: 5405
        # Time-to-live for cluster communication packets. The
        # number of hops (routers) that this ring will allow
        # itself to pass. Note that multicast routing must be
        # specifically enabled on most network routers.
        ttl: 1
    }
}

logging {
    # Log the source file and line where messages are being
    # generated. When in doubt, leave off. Potentially useful for
    # debugging.
    fileline: off
    # Log to standard error. When in doubt, set to no. Useful when
    # running in the foreground (when invoking "corosync -f")
    to_stderr: no
    # Log to a log file. When set to "no", the "logfile" option
    # must not be set.
    # Write the log to this file
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    # Log to the system log daemon. When in doubt, set to yes.
    #to_syslog: yes
    # Log debug messages (very verbose). When in doubt, leave off.
    debug: off
    # Log messages with time stamps. When in doubt, set to on
    # (unless you are only logging to syslog, where double
    # timestamps can be annoying).
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}

# Start pacemaker as a corosync plugin:
service {
    ver: 0
    name: pacemaker
}
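In this configuration, `bindnetaddr` is the network address of the cluster interface (here 172.16.31.0). `mcastaddr` must be an IPv4 multicast address (224.0.0.0 to 239.255.255.255). Both values can be computed or checked in plain bash. The helpers below are hypothetical and do no input validation. They take the prefix length as an argument.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer and back.
ip_to_int() {
    local IFS=.
    local -a o=($1)                    # split "a.b.c.d" on dots
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}
int_to_ip() {
    local n=$1
    echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print the network address for IP/PREFIX, e.g. a candidate bindnetaddr.
# Usage: network_addr IP PREFIX
network_addr() {
    local ip prefix=$2 mask
    ip=$(ip_to_int "$1")
    mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    int_to_ip $(( ip & mask ))
}

# Succeed if the address lies in 224.0.0.0/4 (IPv4 multicast).
is_multicast() {
    local first=${1%%.*}
    (( first >= 224 && first <= 239 ))
}
```

To feed it from the live interface, you could run `cidr=$(ip -o -4 addr show eth0 | awk '{print $4}'); network_addr "${cidr%/*}" "${cidr#*/}"`, assuming the interface is called eth0.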

3. Generate the authentication key file. corosync-keygen gathers 1024 bits of randomness from /dev/random. Producing that much entropy by typing on the keyboard is tedious, so instead generate disk and network activity (for example, by downloading packages) to fill the kernel's entropy pool:

[root@node1 corosync]# corosync-keygen
Corosync Cluster Engine Authentication key generator.
Gathering 1024 bits for key from /dev/random.
Press keys on your keyboard to generate entropy.
Press keys on your keyboard to generate entropy (bits = 128).
Press keys on your keyboard to generate entropy (bits = 192).
Press keys on your keyboard to generate entropy (bits = 256).
Press keys on your keyboard to generate entropy (bits = 320).
Press keys on your keyboard to generate entropy (bits = 384).
Press keys on your keyboard to generate entropy (bits = 448).
Press keys on your keyboard to generate entropy (bits = 512).
Press keys on your keyboard to generate entropy (bits = 576).
Press keys on your keyboard to generate entropy (bits = 640).
Press keys on your keyboard to generate entropy (bits = 704).
Press keys on your keyboard to generate entropy (bits = 768).
Press keys on your keyboard to generate entropy (bits = 832).
Press keys on your keyboard to generate entropy (bits = 896).
Press keys on your keyboard to generate entropy (bits = 960).
Writing corosync key to /etc/corosync/authkey.

Downloading anything at all will do.
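Once the key exists, you can check its size and permissions before copying it. In this sketch, `check_authkey` is a hypothetical helper. The 128-byte default matches the `authkey 100% 128` line in the scp output that follows. The owner-read-only mode (400) is an assumption about what corosync-keygen creates.

```shell
#!/usr/bin/env bash
# Succeed if FILE is exactly SIZE bytes (default 128) and has mode 400
# (readable by its owner only). Uses GNU stat.
# Usage: check_authkey [FILE] [SIZE]
check_authkey() {
    local file=${1:-/etc/corosync/authkey} want=${2:-128}
    local size mode
    size=$(stat -c %s "$file") || return 1
    mode=$(stat -c %a "$file")
    if [ "$size" -ne "$want" ]; then
        echo "$file: expected $want bytes, found $size" >&2
        return 1
    fi
    if [ "$mode" != "400" ]; then
        echo "$file: expected mode 400, found $mode" >&2
        return 1
    fi
}
```

Run `check_authkey` on node1 before the scp, and again on node2 afterwards.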

Then copy the configuration file and the key to node 2:

[root@node1 corosync]# scp authkey corosync.conf node2:/etc/corosync/
authkey                     100%  128     0.1KB/s   00:00
corosync.conf               100% 2724     2.7KB/s   00:00

4. Start the corosync service:

[root@node1 corosync]# service corosync start
Starting Corosync Cluster Engine (corosync):               [  OK  ]
[root@node2 ~]# service corosync start
Starting Corosync Cluster Engine (corosync):               [  OK  ]

5. Check the logs:

Check whether the corosync engine started normally:

Startup log on node 1:

[root@node1 corosync]# grep -e "Corosync Cluster Engine" -e "configuration file" /var/log/cluster/corosync.log
Jan 02 14:20:28 corosync [MAIN  ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.
Jan 02 14:20:28 corosync [MAIN  ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Startup log on node 2:

[root@node2 ~]# grep -e "Corosync Cluster Engine" -e "configuration file" /var/log/cluster/corosync.log
Jan 02 14:20:39 corosync [MAIN  ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.
Jan 02 14:20:39 corosync [MAIN  ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Search for the keyword TOTEM to check whether the initial membership notifications were sent:

[root@node1 corosync]# grep "TOTEM" /var/log/cluster/corosync.log
Jan 02 14:20:28 corosync [TOTEM ] Initializing transport (UDP/IP Multicast).
Jan 02 14:20:28 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).
Jan 02 14:20:28 corosync [TOTEM ] The network interface [172.16.31.10] is now up.
Jan 02 14:20:28 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.
Jan 02 14:20:37 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

Check whether port 5405 is listening:

[root@node1 ~]# ss -tunl | grep 5405
udp    UNCONN     0      0       172.16.31.10:5405     *:*
udp    UNCONN     0      0     239.224.131.31:5405     *:*
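The log checks above can be scripted and run on both nodes. The helper names are hypothetical. The strings they match are the corosync 1.4 messages quoted above and may differ in other versions.

```shell
#!/usr/bin/env bash
# Count the TOTEM "new membership" messages in a corosync 1.x log file.
# Usage: totem_membership_count [LOGFILE]
totem_membership_count() {
    local log=${1:-/var/log/cluster/corosync.log}
    grep -c 'TOTEM.*A processor joined or left the membership' "$log"
}

# Succeed if the log reports that the ring interface came up on IP.
# Usage: totem_iface_up IP [LOGFILE]
totem_iface_up() {
    local ip=$1 log=${2:-/var/log/cluster/corosync.log}
    grep -qF "The network interface [$ip] is now up." "$log"
}
```

On node1, `totem_iface_up 172.16.31.10 && [ "$(totem_membership_count)" -ge 1 ] && echo "ring is up"` summarizes what the grep output above shows.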

Check the error log:

[root@node1 ~]# grep ERROR /var/log/cluster/corosync.log
# Warnings about running pacemaker as a corosync plugin; they can be ignored
Jan 02 14:20:28 corosync [pcmk  ] ERROR: process_ais_conf: You have configured a cluster using the Pacemaker plugin for Corosync. The plugin is not supported in this environment and will be removed very soon.
Jan 02 14:20:28 corosync [pcmk  ] ERROR: process_ais_conf:  Please see Chapter 8 of 'Clusters from Scratch' (http://www.clusterlabs.org/doc) for details on using Pacemaker with CMAN
Jan 02 14:20:52 [6260] node1.stu31.com    pengine:   notice: process_pe_message: Configuration ERRORs found during PE processing.  Please run "crm_verify -L" to identify issues.
Jan 02 14:20:52 [6260] node1.stu31.com    pengine:   notice: process_pe_message: Configuration ERRORs found during PE processing.  Please run "crm_verify -L" to identify issues.

[root@node1 ~]# crm_verify -L -V
# Errors caused by having no STONITH device defined; they can be ignored for now
   error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined
   error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option
   error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity
Errors found during check: config not valid

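VI. Installing the cluster configuration tool crmsh

1. Configure the yum repositories (here a complete yum repository server is available on the local network):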

[root@node1 yum.repos.d]# vim centos6.6.repo
[base]
name=CentOS $releasever $basearch on local server 172.16.0.1
baseurl=http://172.16.0.1/cobbler/ks_mirror/CentOS-6.6-$basearch/
gpgcheck=0

[extra]
name=CentOS $releasever $basearch extras
baseurl=http://172.16.0.1/centos/$releasever/extras/$basearch/
gpgcheck=0

[epel]
name=Fedora EPEL for CentOS$releasever $basearch on local server 172.16.0.1
baseurl=http://172.16.0.1/fedora-epel/$releasever/$basearch/
gpgcheck=0

[corosync2]
name=corosync2
baseurl=ftp://172.16.0.1/pub/Sources/6.x86_64/corosync/
gpgcheck=0

Copy it to node 2:

[root@node1 ~]# scp /etc/yum.repos.d/centos6.6.repo node2:/etc/yum.repos.d/
centos6.6.repo              100%  521     0.5KB/s   00:00

2. Install crmsh on both nodes

# yum install -y crmsh
# rpm -qa crmsh
crmsh-2.1-1.6.x86_64

3. Clear the STONITH errors shown above:

[root@node1 ~]# crm
crm(live)# configure
crm(live)configure# property stonith-enabled=false
crm(live)configure# verify
# With only two nodes, losing one means losing quorum; ignore quorum loss (at the risk of split-brain)
crm(live)configure# property no-quorum-policy=ignore
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# show
node node1.stu31.com
node node2.stu31.com
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore

No errors are reported any more:

[root@node1 ~]# crm_verify -L -V
[root@node1 ~]#

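VII. Defining DRBD as a cluster service

1. Services managed by the cluster must not be started outside it. First make sure that the drbd service is stopped on both nodes and will not start automatically at boot: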

[root@node1 ~]# drbd-overview
  0:mystore/0  Unconfigured . .
[root@node1 ~]# chkconfig --list drbd
drbd            0:off   1:off   2:off   3:off   4:off   5:off   6:off

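2. Configure drbd as a cluster resource:

The resource agent (RA) for drbd is currently provided under the OCF provider linbit, at /usr/lib/ocf/resource.d/linbit/drbd. The following commands list this RA and show its metadata: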

[root@node1 ~]# crm ra classes
lsb
ocf / heartbeat linbit pacemaker
service
stonith
[root@node1 ~]# crm ra list ocf linbit
drbd

The following command shows detailed information:

[root@node1 ~]# crm ra info ocf:linbit:drbd

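(output omitted)

drbd must run on both nodes at the same time, but only one node can be Master (primary/secondary model) while the other is Slave. It is therefore a special kind of cluster resource: a multi-state clone. Its instances are split into Master and Slave, and both nodes must be in the Slave state when the service first starts.

Now define the cluster resources: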

[root@node1 ~]# crm configure
crm(live)configure# primitive mydrbd ocf:linbit:drbd params drbd_resource="mystore" op monitor role=Slave interval=20s timeout=20s op monitor role=Master interval=10s timeout=20s op start timeout=240s op stop timeout=100s
crm(live)configure# verify
# Turn the resource into a master/slave resource:
crm(live)configure# ms ms_mydrbd mydrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"
crm(live)configure# verify
crm(live)configure# show
node node1.stu31.com
node node2.stu31.com
primitive mydrbd ocf:linbit:drbd \
    params drbd_resource=mystore \
    op monitor role=Slave interval=20s timeout=20s \
    op monitor role=Master interval=10s timeout=20s \
    op start timeout=240s interval=0 \
    op stop timeout=100s interval=0
ms ms_mydrbd mydrbd \
    meta master-max=1 master-node-max=1 clone-max=2 clone-node-max=1 notify=true
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Last updated: Sat Jan 3 11:22:54 2015
Last change: Sat Jan 3 11:22:50 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ node1.stu31.com node2.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node2.stu31.com ]
     Slaves: [ node1.stu31.com ]

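# master-max: how many master instances may run; master-node-max: the maximum number of master instances on one node

# clone-max: how many clone instances may run; clone-node-max: the maximum number of clone instances on one node

# A master/slave resource is a kind of clone resource whose instances additionally have master and slave roles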

Check drbd's master/slave state:

[root@node1 ~]# drbd-overview
  0:mystore/0  Connected Secondary/Primary UpToDate/UpToDate
[root@node2 ~]# drbd-overview
  0:mystore/0  Connected Primary/Secondary UpToDate/UpToDate

Put node2 into standby (which demotes it), then bring it back online:

[root@node2 ~]# crm node standby
[root@node2 ~]# drbd-overview
  0:mystore/0  Unconfigured . .
[root@node2 ~]# crm node online
[root@node2 ~]# drbd-overview
  0:mystore/0  Connected Secondary/Primary UpToDate/UpToDate

node1 has now become the primary:

[root@node1 ~]# drbd-overview 0:mystore/0 Connected Primary/Secondary UpToDate/UpToDate
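To find out in a script which node holds the Master role, you can extract the `Masters:` line from `crm status`. `crm_masters` is a hypothetical helper. It assumes the pacemaker 1.1 status format shown in this article.

```shell
#!/usr/bin/env bash
# Print the node(s) listed on the "Masters: [ ... ]" line of crm status
# output read on stdin.
# Usage: crm status | crm_masters
crm_masters() {
    sed -n 's/^[[:space:]]*Masters:[[:space:]]*\[[[:space:]]*\(.*[^[:space:]]\)[[:space:]]*][[:space:]]*$/\1/p'
}
```

For example, `[ "$(crm status | crm_masters)" = "$(uname -n)" ] && echo "this node is Master"` should succeed on node1 after the switch above.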

3. Mount the DRBD storage automatically. The storage must follow the primary node, which requires constraints

crm(live)# configure
crm(live)configure# primitive myfs ocf:heartbeat:Filesystem params device=/dev/drbd0 directory=/mydata fstype="ext4" op monitor interval=20s timeout=40s op start timeout=60s op stop timeout=60s
crm(live)configure# verify
# Colocation constraint: the file system runs wherever the DRBD Master is
crm(live)configure# colocation myfs_with_ms_mydrbd_master inf: myfs ms_mydrbd:Master
# Order constraint: mount the file system only after the promotion to Master has completed
crm(live)configure# order ms_mydrbd_master_before_myfs inf: ms_mydrbd:promote myfs:start
crm(live)configure# verify
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Last updated: Sat Jan 3 11:34:23 2015
Last change: Sat Jan 3 11:34:12 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Online: [ node1.stu31.com node2.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node1.stu31.com ]
     Slaves: [ node2.stu31.com ]
 myfs   (ocf::heartbeat:Filesystem):    Started node1.stu31.com

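This shows that node1 is the primary and that the storage is mounted on node 1.

Check the mounted directory: the file is there, so the mount succeeded.

[root@node1 ~]# ls /mydata
issue  lost+found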
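The same check can be scripted against `/proc/mounts`. On the node that crm reports as Master, `/dev/drbd0` should be mounted on `/mydata`; on the Slave it should not be. `is_mounted` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Succeed if DEVICE is mounted on MOUNTPOINT according to a /proc/mounts-style
# file (fields: device mountpoint fstype options dump pass).
# Usage: is_mounted DEVICE MOUNTPOINT [FILE]
is_mounted() {
    awk -v d="$1" -v m="$2" '$1 == d && $2 == m { found = 1 } END { exit !found }' "${3:-/proc/mounts}"
}
```

Running `is_mounted /dev/drbd0 /mydata && echo "storage is here"` on both nodes should print the message only on node1.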

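The master/slave resource and the file system mount are in place; next, install the MariaDB database.

VIII. Installing MariaDB

1. MariaDB must be initialized on the primary node:

Create the mysql user that owns the database, and make mysql the owner of the data storage directory. The user must be created on both nodes: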

# groupadd -r -g 306 mysql
# useradd -r -g 306 -u 306 mysql

Obtain the MariaDB binary tarball:

mariadb-10.0.10-linux-x86_64.tar.gz

Extract it into /usr/local:

[root@node1 ~]# tar xf mariadb-10.0.10-linux-x86_64.tar.gz -C /usr/local/

Create a symbolic link:

[root@node1 ~]# cd /usr/local
[root@node1 local]# ln -sv mariadb-10.0.10-linux-x86_64/ mysql

Give the mysql user ownership of the mounted DRBD storage, where the database data directory will be created:

# chown -R mysql:mysql /mydata/

Enter the installation directory:

[root@node1 local]# cd mysql
[root@node1 mysql]# pwd
/usr/local/mysql
[root@node1 mysql]# chown -R root:mysql ./*

Initialize MariaDB:

[root@node1 mysql]# scripts/mysql_install_db --user=mysql --datadir=/mydata/data

When it finishes, check the data directory:

[root@node1 mysql]# ls /mydata/data/
aria_log.00000001  ibdata1      ib_logfile1  performance_schema
aria_log_control   ib_logfile0  mysql        test

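The installation succeeded.

Where to keep the MariaDB configuration file: if a change made on one node should also reach the standby node, the best place for the file is the DRBD storage.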

[root@node1 mysql]# mkdir /mydata/mysql/
[root@node1 mysql]# chown -R mysql:mysql /mydata/mysql/
[root@node1 mysql]# cp support-files/my-large.cnf /mydata/mysql/my.cnf
[root@node1 mysql]# vim /mydata/mysql/my.cnf
[mysqld]
port = 3306
datadir = /mydata/data
socket = /tmp/mysql.sock
skip-external-locking
key_buffer_size = 256M
max_allowed_packet = 1M
table_open_cache = 256
sort_buffer_size = 1M
read_buffer_size = 1M
read_rnd_buffer_size = 4M
myisam_sort_buffer_size = 64M
thread_cache_size = 8
query_cache_size = 16M
# Try number of CPU's*2 for thread_concurrency
thread_concurrency = 8
innodb_file_per_table = on
skip_name_resolve = on
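Both nodes will read this file through the /etc/mysql link. A quick way to confirm which data directory and socket mysqld will use is to read the values back from the [mysqld] section. `mycnf_get` is a hypothetical helper that handles only simple `key = value` lines, with no `!include` directives and no repeated sections. `my_print_defaults mysqld` from the MariaDB distribution gives the authoritative view.

```shell
#!/usr/bin/env bash
# Print the value of KEY from the [mysqld] section of a my.cnf-style file.
# Accepts "key = value" and "key=value"; skips comments and other sections.
# Usage: mycnf_get KEY [FILE]   (FILE defaults to /etc/mysql/my.cnf)
mycnf_get() {
    awk -v k="$1" '
        /^[[:space:]]*[#;]/ { next }                       # comment line
        /^[[:space:]]*\[/ { insec = ($0 ~ /^[[:space:]]*\[mysqld\]/); next }
        insec {
            line = $0
            sub(/^[[:space:]]+/, "", line)
            eq = index(line, "=")
            if (eq == 0) next                              # flag without value
            key = substr(line, 1, eq - 1); val = substr(line, eq + 1)
            gsub(/[[:space:]]+$/, "", key)
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", val)
            if (key == k) { print val; exit }
        }
    ' "${2:-/etc/mysql/my.cnf}"
}
```

For example, `mycnf_get datadir` should print /mydata/data on whichever node has the DRBD storage mounted.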

Create a local symbolic link that points to the configuration directory:

[root@node1 ~]# ln -sv /mydata/mysql /etc/mysql
`/etc/mysql' -> `/mydata/mysql'

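Create the service script, and keep it disabled at boot because the cluster will start mysqld:

[root@node1 mysql]# cp support-files/mysql.server /etc/init.d/mysqld
[root@node1 mysql]# chkconfig --add mysqld
[root@node1 mysql]# chkconfig mysqld off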

Start the service to test it:

[root@node1 mysql]# service mysqld start Starting MySQL. [ OK ]

Log in to mysql and create a database:

[root@node1 mysql]# /usr/local/mysql/bin/mysql
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 4
Server version: 10.0.10-MariaDB-log MariaDB Server
Copyright (c) 2000, 2014, Oracle, SkySQL Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]> create database testdb;
Query OK, 1 row affected (0.00 sec)
MariaDB [(none)]> \q
Bye

Stop the mysql server:

[root@node1 mysql]# service mysqld stop Shutting down MySQL.. [ OK ]

2. MariaDB must also be configured on node 2

Put node1 into standby (node2 takes over as primary):

[root@node1 ~]# crm node standby
[root@node1 ~]# crm status
Last updated: Sat Jan 3 12:21:38 2015
Last change: Sat Jan 3 12:21:34 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Node node1.stu31.com: standby
Online: [ node2.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node2.stu31.com ]
     Stopped: [ node1.stu31.com ]
 myfs   (ocf::heartbeat:Filesystem):    Started node2.stu31.com

Bring node1 back online as the secondary:

[root@node1 ~]# crm node online
[root@node1 ~]# crm status
Last updated: Sat Jan 3 12:21:52 2015
Last change: Sat Jan 3 12:21:48 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Online: [ node1.stu31.com node2.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node2.stu31.com ]
     Slaves: [ node1.stu31.com ]
 myfs   (ocf::heartbeat:Filesystem):    Started node2.stu31.com

Extract the MariaDB package:

[root@node2 ~]# tar xf mariadb-10.0.10-linux-x86_64.tar.gz -C /usr/local
[root@node2 ~]# cd /usr/local
[root@node2 local]# ln -sv mariadb-10.0.10-linux-x86_64/ mysql
`mysql' -> `mariadb-10.0.10-linux-x86_64/'
[root@node2 local]# cd mysql
[root@node2 mysql]# chown -R root:mysql ./*

No initialization is needed this time, because the data directory already exists on the DRBD storage.

Check whether the storage is mounted on node 2:

[root@node2 local]# ls /mydata/data/
aria_log.00000001  ib_logfile1        mysql-bin.index     testdb
aria_log_control   multi-master.info  mysql-bin.state
ibdata1            mysql              performance_schema
ib_logfile0        mysql-bin.000001   test

It is mounted.

Only the service script is still needed:

[root@node2 mysql]# cp support-files/mysql.server /etc/init.d/mysqld
[root@node2 mysql]# chkconfig --add mysqld
[root@node2 mysql]# chkconfig mysqld off

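Create a symbolic link that exposes the configuration file on the storage under /etc, so mysql can find it at startup:

[root@node2 ~]# ln -sv /mydata/mysql/ /etc/mysql
`/etc/mysql' -> `/mydata/mysql/'

Start the mysqld service: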

[root@node2 ~]# service mysqld start
Starting MySQL...                                          [  OK  ]
[root@node2 ~]# /usr/local/mysql/bin/mysql
Welcome to the MariaDB monitor.  Commands end with ; or \g.
Your MariaDB connection id is 4
Server version: 10.0.10-MariaDB-log MariaDB Server
Copyright (c) 2000, 2014, Oracle, SkySQL Ab and others.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
MariaDB [(none)]> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test               |
| testdb             |
+--------------------+
5 rows in set (0.04 sec)
MariaDB [(none)]> grant all on *.* to 'root'@'172.16.%.%' identified by 'oracle';
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> flush privileges;
Query OK, 0 rows affected (0.00 sec)
MariaDB [(none)]> \q
Bye

The testdb database is there, so the data was replicated. We also granted remote clients permission to log in.

MariaDB is now installed on both nodes.

IX. Defining the MariaDB cluster resources

[root@node2 ~]# crm
crm(live)# configure
# Define the virtual IP (VIP) of the database cluster
crm(live)configure# primitive myip ocf:heartbeat:IPaddr params ip="172.16.31.166" op monitor interval=10s timeout=20s
crm(live)configure# verify
# Define the mysqld service resource
crm(live)configure# primitive myserver lsb:mysqld op monitor interval=20s timeout=20s
crm(live)configure# verify
# Put the resources into one group so that they always run together.
# ms_mydrbd:Master cannot be a group member, hence the ERROR below; the resulting group
# contains myip, myfs and myserver, so "group myservice myip myfs myserver" is the clean form.
crm(live)configure# group myservice myip ms_mydrbd:Master myfs myserver
ERROR: myservice refers to missing object ms_mydrbd:Master
INFO: resource references in colocation:myfs_with_ms_mydrbd_master updated
INFO: resource references in order:ms_mydrbd_master_before_myfs updated
# Order constraint: start myserver only after myfs has started
crm(live)configure# order myfs_before_myserver inf: myfs:start myserver:start
crm(live)configure# verify
# Commit once everything is defined; mysqld may take a moment to start
crm(live)configure# commit
crm(live)configure# cd
crm(live)# status
Last updated: Sat Jan 3 13:42:13 2015
Last change: Sat Jan 3 13:41:48 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
5 Resources configured
Online: [ node1.stu31.com node2.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node2.stu31.com ]
     Slaves: [ node1.stu31.com ]
 Resource Group: myservice
     myip       (ocf::heartbeat:IPaddr):        Started node2.stu31.com
     myfs       (ocf::heartbeat:Filesystem):    Started node2.stu31.com
     myserver   (lsb:mysqld):   Started node2.stu31.com
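The group keeps myip, myfs and myserver together, so a health check can verify that all three are Started on the same node. `resources_node` is a hypothetical helper. It parses the `crm status` format shown above and may need adjusting for other pacemaker versions.

```shell
#!/usr/bin/env bash
# Read crm status output on stdin and print the node on which all the given
# resources are Started; fail if one is not started or they are split.
# Usage: crm status | resources_node RESOURCE...
resources_node() {
    local status node="" r line n
    status=$(cat)
    for r in "$@"; do
        line=$(printf '%s\n' "$status" |
            grep -E "^[[:space:]]*${r}[[:space:]]+\(.*\):[[:space:]]+Started[[:space:]]")
        line=${line%"${line##*[![:space:]]}"}     # trim trailing whitespace
        if [ -z "$line" ]; then
            echo "$r is not started" >&2
            return 1
        fi
        n=${line##*[[:space:]]}                  # last field is the node name
        if [ -n "$node" ] && [ "$n" != "$node" ]; then
            echo "$r runs on $n, not $node" >&2
            return 1
        fi
        node=$n
    done
    echo "$node"
}
```

After the commit above, `crm status | resources_node myip myfs myserver` should print node2.stu31.com.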

Once everything has started, connect to the database from a remote client to test it:

[root@nfs ~]# mysql -h 172.16.31.166 -uroot -poracle
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.5.5-10.0.10-MariaDB-log MariaDB Server
Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> show databases;
+--------------------+
| Database           |
+--------------------+
| information_schema |
| mysql              |
| performance_schema |
| test               |
| testdb             |
+--------------------+
5 rows in set (0.05 sec)
mysql> use testdb
Database changed
mysql> create table t1 (id int);
Query OK, 0 rows affected (0.18 sec)
mysql> show tables;
+------------------+
| Tables_in_testdb |
+------------------+
| t1               |
+------------------+
1 row in set (0.01 sec)
mysql> \q
Bye

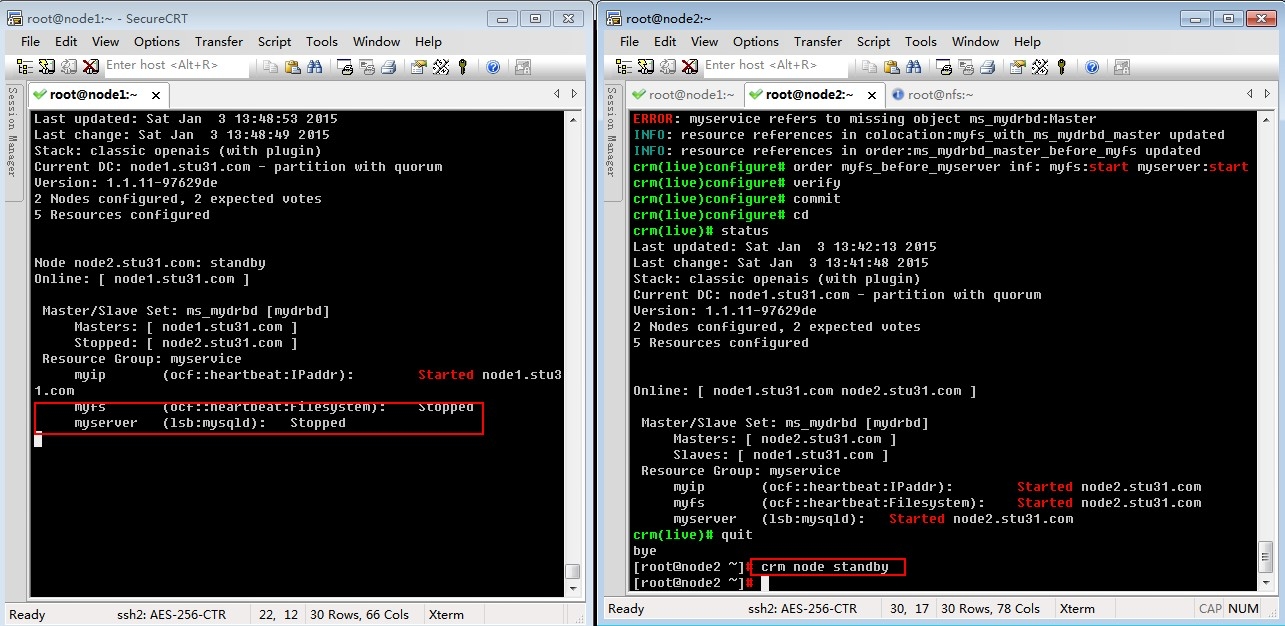

Put node 2 into standby so that node1 becomes the primary:

[root@node2 ~]# crm node standby

After issuing the command, watch node1 take over as the primary:

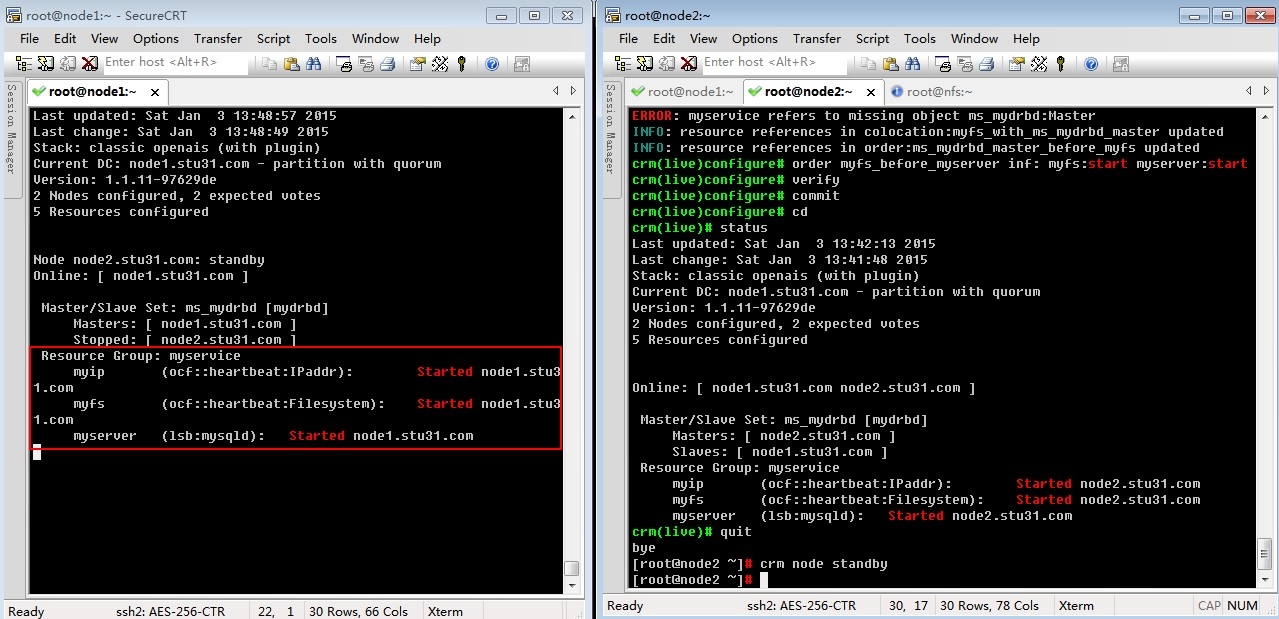

Check the cluster status on node 1:

[root@node1 ~]# crm status
Last updated: Sat Jan 3 13:59:38 2015
Last change: Sat Jan 3 13:48:49 2015
Stack: classic openais (with plugin)
Current DC: node1.stu31.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
5 Resources configured
Node node2.stu31.com: standby
Online: [ node1.stu31.com ]
 Master/Slave Set: ms_mydrbd [mydrbd]
     Masters: [ node1.stu31.com ]
     Stopped: [ node2.stu31.com ]
 Resource Group: myservice
     myip       (ocf::heartbeat:IPaddr):        Started node1.stu31.com
     myfs       (ocf::heartbeat:Filesystem):    Started node1.stu31.com
     myserver   (lsb:mysqld):   Started node1.stu31.com

Connect to the database remotely again:

[root@nfs ~]# mysql -h 172.16.31.166 -uroot -poracle
Welcome to the MySQL monitor.  Commands end with ; or \g.
Your MySQL connection id is 4
Server version: 5.5.5-10.0.10-MariaDB-log MariaDB Server
Copyright (c) 2000, 2013, Oracle and/or its affiliates. All rights reserved.
Oracle is a registered trademark of Oracle Corporation and/or its
affiliates. Other names may be trademarks of their respective owners.
Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.
mysql> use testdb;
Reading table information for completion of table and column names
You can turn off this feature to get a quicker startup with -A
Database changed
mysql> show tables;
+------------------+
| Tables_in_testdb |
+------------------+
| t1               |
+------------------+
1 row in set (0.00 sec)
mysql> \q
Bye

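The test succeeded: the table created through node2 is visible after failover to node1.

This completes the highly available database server cluster built with corosync + pacemaker + crmsh + DRBD.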