Linux High Availability (HA) Clusters: Installing and Using Corosync + Pacemaker

Reposted from http://freeloda.blog.51cto.com/2033581/1272417

I. Installing Corosync and Pacemaker

1. Environment

OS: CentOS 6.4 x86_64, minimal install

node1: 192.168.3.37

node2: 192.168.3.38

core-nfs: 192.168.3.39

vip: 192.168.3.40

2. Prerequisites

node1:

(1). Hostname resolution between the nodes

[root@node1 ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.3.37 node1.weyee.com node1
192.168.3.38 node2.weyee.com node2

(2). Time synchronization

[root@node1 ~]# yum install ntp -y
[root@node1 ~]# echo "*/10 * * * * /usr/sbin/ntpdate asia.pool.ntp.org &>/dev/null" >/var/spool/cron/root
[root@node1 ~]# ntpdate asia.pool.ntp.org
4 Jun 15:24:24 ntpdate[1454]: step time server 124.41.86.200 offset 156.480664 sec
[root@node1 ~]# hwclock -w
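Note that redirecting with `>` replaces the whole of /var/spool/cron/root, so any entries already in root's crontab are lost. As a minimal sketch (the helper name `add_cron_line` is hypothetical and not part of any tool used in this article), an idempotent variant could append the entry only when it is missing:

```shell
# Hypothetical helper: append a cron entry only if the exact line is absent.
# Usage: add_cron_line <crontab-file> <line>
add_cron_line() {
    local file=$1 line=$2
    touch "$file"
    # -F: fixed string, -x: match the whole line, -q: no output
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example call (commented out because it would modify root's crontab):
# add_cron_line /var/spool/cron/root '*/10 * * * * /usr/sbin/ntpdate asia.pool.ntp.org &>/dev/null'
```

Running the helper twice with the same line leaves a single entry, so it is safe to repeat on every node.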

(3). Passwordless SSH trust between the nodes

[root@node1 ~]# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Created directory '/root/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
5f:c2:ee:48:96:52:8e:0e:09:5e:66:2b:3b:d9:fe:33 root@node1.weyee.com
The key's randomart image is:
+--[ RSA 2048]----+
| |
| |
| |
| . |
| . + S o . |
| . = o + + o |
| oo+ o = o |
| oo.oE+ o |
| .o..oo. . |
+-----------------+
[root@node1 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node2

node2:

(1). Hostname resolution between the nodes

# Copy /etc/hosts over from node1
[root@node1 ~]# scp /etc/hosts node2:/etc
hosts 100% 228 0.2KB/s 00:00

(2). Time synchronization between the nodes

[root@node2 ~]# yum -y install ntp
[root@node2 ~]# echo "*/10 * * * * /usr/sbin/ntpdate asia.pool.ntp.org &>/dev/null" >/var/spool/cron/root
[root@node2 ~]# ntpdate asia.pool.ntp.org
4 Jun 15:27:58 ntpdate[1482]: step time server 80.241.0.72 offset 156.485766 sec
[root@node2 ~]# hwclock -w

(3). Passwordless SSH trust between the nodes

[root@node2 ~]# ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/root/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /root/.ssh/id_rsa.
Your public key has been saved in /root/.ssh/id_rsa.pub.
The key fingerprint is:
09:dc:d5:fe:18:93:dd:d1:ad:ab:2b:b8:e2:0c:9f:b6 root@node2.weyee.com
The key's randomart image is:
+--[ RSA 2048]----+
| .. o|
| . . . . .o|
| o . . o o.|
| . . = o .|
| S = . |
| . o |
| . . . |
| +o.. . . |
| oEo.. ... |
+-----------------+
[root@node2 ~]# ssh-copy-id -i .ssh/id_rsa.pub root@node1

3. Configure the yum repository (EPEL)

node1:

[root@node1 ~]# rpm -ivh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
Retrieving http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
warning: /var/tmp/rpm-tmp.cYQwcb: Header V3 RSA/SHA256 Signature, key ID 0608b895: NOKEY
Preparing... ########################################### [100%]
1:epel-release ########################################### [100%]
[root@node1 ~]# sed -i 's@#b@b@g' /etc/yum.repos.d/epel.repo
[root@node1 ~]# sed -i 's@mirrorlist@#mirrorlist@g' /etc/yum.repos.d/epel.repo

node2:

[root@node2 ~]# rpm -ivh http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
Retrieving http://dl.fedoraproject.org/pub/epel/6/x86_64/epel-release-6-8.noarch.rpm
warning: /var/tmp/rpm-tmp.EC9ILO: Header V3 RSA/SHA256 Signature, key ID 0608b895: NOKEY
Preparing... ########################################### [100%]
1:epel-release ########################################### [100%]
[root@node2 ~]# sed -i 's@#b@b@g' /etc/yum.repos.d/epel.repo
[root@node2 ~]# sed -i 's@mirrorlist@#mirrorlist@g' /etc/yum.repos.d/epel.repo

4. Disable the firewall and SELinux

node1:

[root@node1 ~]# service iptables stop
iptables: Flushing firewall rules: [ OK ]
iptables: Setting chains to policy ACCEPT: filter [ OK ]
iptables: Unloading modules: [ OK ]
[root@node1 ~]# getenforce
Disabled

node2:

[root@node2 ~]# service iptables stop
iptables: Flushing firewall rules: [ OK ]
iptables: Setting chains to policy ACCEPT: filter [ OK ]
iptables: Unloading modules: [ OK ]
[root@node2 ~]# getenforce
Disabled

5. Install corosync and pacemaker

node1:

[root@node1 ~]# yum install corosync* pacemaker* -y

node2:

[root@node2 ~]# yum install corosync* pacemaker* -y

II. Corosync Configuration in Detail

1. Provide a configuration file

[root@node1 ~]# cd /etc/corosync/
[root@node1 corosync]# ll
total 16
-rw-r--r-- 1 root root 2663 Oct 15 2014 corosync.conf.example
-rw-r--r-- 1 root root 1073 Oct 15 2014 corosync.conf.example.udpu
drwxr-xr-x 2 root root 4096 Oct 15 2014 service.d
drwxr-xr-x 2 root root 4096 Oct 15 2014 uidgid.d

Note: as you can see, a sample file named corosync.conf.example is provided.

[root@node1 corosync]# cp corosync.conf.example corosync.conf

2. Edit the configuration file

[root@node1 corosync]# egrep -v "^#|^$|^[[:space:]]+#" /etc/corosync/corosync.conf

compatibility: whitetank
totem {
    version: 2
    secauth: on
    threads: 2
    interface {
        ringnumber: 0
        bindnetaddr: 192.168.3.0
        mcastaddr: 239.255.1.1
        mcastport: 5405
        ttl: 1
    }
}
logging {
    fileline: off
    to_stderr: no
    to_logfile: yes
    logfile: /var/log/cluster/corosync.log
    to_syslog: no
    debug: off
    timestamp: on
    logger_subsys {
        subsys: AMF
        debug: off
    }
}
amf {
    mode: disabled
}
service {
    ver: 0
    name: pacemaker
}
aisexec {
    user: root
    group: root
}

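Note: run man corosync.conf to see what every option means.

3. Generate the authentication key

Note: corosync-keygen reads from the /dev/random device by default. If the system's interrupt-driven entropy pool runs low, key generation can block for a long time. To save time, we temporarily point /dev/random at /dev/urandom before generating the key (the original device is kept as /dev/random.bak so that it can be moved back afterwards).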

[root@node1 corosync]# mv /dev/{random,random.bak}

[root@node1 corosync]# ln -s /dev/urandom /dev/random

[root@node1 corosync]# corosync-keygen

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/random.

Press keys on your keyboard to generate entropy.

Writing corosync key to /etc/corosync/authkey.

4. Inspect the generated key file

[root@node1 corosync]# ll
total 24
-r-------- 1 root root 128 Jun 4 15:47 authkey    # the key we just generated
-rw-r--r-- 1 root root 2776 Jun 4 15:41 corosync.conf
-rw-r--r-- 1 root root 2663 Oct 15 2014 corosync.conf.example
-rw-r--r-- 1 root root 1073 Oct 15 2014 corosync.conf.example.udpu
drwxr-xr-x 2 root root 4096 Oct 15 2014 service.d
drwxr-xr-x 2 root root 4096 Oct 15 2014 uidgid.d
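The listing shows a 128-byte authkey (the 1024 bits gathered by corosync-keygen) that is readable by its owner only. A small check along the following lines could confirm both properties before the file is copied to the other node. This is only a sketch: `check_authkey` is a hypothetical helper name, and the expected size assumes the 1024-bit key shown above.

```shell
# Hypothetical sanity check for a corosync authkey file.
# Prints "ok" when the file is 128 bytes and has mode -r--------.
check_authkey() {
    local key=$1 size perms
    [ -f "$key" ] || { echo "missing: $key"; return 1; }
    size=$(wc -c < "$key" | tr -d ' ')
    # The first 10 characters of `ls -l` are the file type and permission bits
    perms=$(ls -l "$key" | cut -c1-10)
    if [ "$size" -eq 128 ] && [ "$perms" = "-r--------" ]; then
        echo "ok"
    else
        echo "unexpected: size=$size perms=$perms"
        return 1
    fi
}

# Example: check_authkey /etc/corosync/authkey
```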

5. Copy the key file authkey and the configuration file corosync.conf to node2

[root@node1 corosync]# scp authkey corosync.conf node2:/etc/corosync/
authkey 100% 128 0.1KB/s 00:00
corosync.conf 100% 2776 2.7KB/s 00:
[root@node2 ~]# ll /etc/corosync/
total 24
-r-------- 1 root root 128 Jun 4 15:48 authkey
-rw-r--r-- 1 root root 2776 Jun 4 15:48 corosync.conf
-rw-r--r-- 1 root root 2663 Oct 15 2014 corosync.conf.example
-rw-r--r-- 1 root root 1073 Oct 15 2014 corosync.conf.example.udpu
drwxr-xr-x 2 root root 4096 Oct 15 2014 service.d
drwxr-xr-x 2 root root 4096 Oct 15 2014 uidgid.d

Note: the corosync configuration is now complete. Next we start the service and test it.

III. Corosync Startup Information

1. Start corosync

[root@node1 corosync]# ssh node2 service corosync start
Starting Corosync Cluster Engine (corosync): [ OK ]
[root@node1 corosync]# service corosync start
Starting Corosync Cluster Engine (corosync): [ OK ]

2. Check the corosync startup messages

(1). Check that the corosync engine started correctly

[root@node1 corosync]# egrep "Corosync Cluster Engine|configuration file" /var/log/cluster/corosync.log

Jun 04 15:51:53 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.

Jun 04 15:51:53 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Jun 04 15:51:53 corosync [MAIN ] Corosync Cluster Engine exiting with status 13 at main.c:1609.

Jun 04 15:54:07 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.

Jun 04 15:54:07 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Jun 04 15:54:07 corosync [MAIN ] Corosync Cluster Engine exiting with status 13 at main.c:1609.

Jun 04 15:56:33 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.

Jun 04 15:56:33 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Jun 04 15:56:56 corosync [MAIN ] Corosync Cluster Engine exiting with status 0 at main.c:2055.

Jun 04 15:57:45 corosync [MAIN ] Corosync Cluster Engine ('1.4.7'): started and ready to provide service.

Jun 04 15:57:45 corosync [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

(2). Check that the initial membership notifications were sent

[root@node1 corosync]# grep TOTEM /var/log/cluster/corosync.log |tail -10
Jun 04 15:56:33 corosync [TOTEM ] waiting_trans_ack changed to 1
Jun 04 15:56:33 corosync [TOTEM ] entering OPERATIONAL state.
Jun 04 15:56:33 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.
Jun 04 15:56:33 corosync [TOTEM ] waiting_trans_ack changed to 0
Jun 04 15:56:56 corosync [TOTEM ] sending join/leave message
Jun 04 15:57:45 corosync [TOTEM ] Initializing transport (UDP/IP Multicast).
Jun 04 15:57:45 corosync [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).
Jun 04 15:57:45 corosync [TOTEM ] The network interface [192.168.3.37] is now up.
Jun 04 15:57:45 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.
Jun 04 15:57:45 corosync [TOTEM ] A processor joined or left the membership and a new membership was formed.

(3). Check for errors during startup

[root@node1 corosync]# grep ERROR: /var/log/cluster/corosync.log
Jun 04 15:56:33 corosync [pcmk ] ERROR: process_ais_conf: You have configured a cluster using the Pacemaker plugin for Corosync. The plugin is not supported in this environment and will be removed very soon.
Jun 04 15:56:33 corosync [pcmk ] ERROR: process_ais_conf: Please see Chapter 8 of 'Clusters from Scratch' (http://www.clusterlabs.org/doc) for details on using Pacemaker with CMAN

(4). Check that pacemaker started correctly

[root@node1 corosync]# grep pcmk_startup /var/log/cluster/corosync.log
Jun 04 15:56:33 corosync [pcmk ] info: pcmk_startup: CRM: Initialized
Jun 04 15:56:33 corosync [pcmk ] Logging: Initialized pcmk_startup
Jun 04 15:56:33 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615
Jun 04 15:56:33 corosync [pcmk ] info: pcmk_startup: Service: 9
Jun 04 15:56:33 corosync [pcmk ] info: pcmk_startup: Local hostname: node1.weyee.com
Jun 04 15:57:45 corosync [pcmk ] info: pcmk_startup: CRM: Initialized
Jun 04 15:57:45 corosync [pcmk ] Logging: Initialized pcmk_startup
Jun 04 15:57:45 corosync [pcmk ] info: pcmk_startup: Maximum core file size is: 18446744073709551615
Jun 04 15:57:45 corosync [pcmk ] info: pcmk_startup: Service: 9
Jun 04 15:57:45 corosync [pcmk ] info: pcmk_startup: Local hostname: node1.weyee.com
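The four grep checks above can be condensed into a quick summary. The following is a rough sketch only: `corosync_log_summary` is a hypothetical helper, and the patterns it counts are simply the strings that appear in the log excerpts above.

```shell
# Hypothetical helper: count engine starts and ERROR lines in a corosync log.
# Usage: corosync_log_summary /var/log/cluster/corosync.log
corosync_log_summary() {
    local log=$1 starts errors
    # grep -c prints 0 but exits non-zero when nothing matches, hence "|| true"
    starts=$(grep -c 'started and ready to provide service' "$log" || true)
    errors=$(grep -c 'ERROR:' "$log" || true)
    echo "starts=$starts errors=$errors"
}
```

A non-zero error count is not necessarily fatal: the ERROR lines shown above only warn that the Pacemaker plugin is deprecated.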

3. Check the cluster status

[root@node1 corosync]# crm_mon
Last updated: Thu Jun 4 16:02:19 2015
Last change: Thu Jun 4 15:56:55 2015
Stack: classic openais (with plugin)
Current DC: node1.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
0 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]

Note: as you can see, the cluster is running normally: node1 and node2 are both online and node1 is the DC. No resources are configured yet, however. Resources of all kinds are added through pacemaker.
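For scripted checks, the list of online nodes can be extracted from this status text. The sketch below assumes the "Online: [ ... ]" line format shown above; `online_nodes` is a hypothetical helper name.

```shell
# Hypothetical helper: print one online node per line.
# Reads crm_mon / crm status text on standard input.
online_nodes() {
    sed -n 's/^[[:space:]]*Online: \[ \(.*\) ][[:space:]]*$/\1/p' | tr ' ' '\n'
}

# Example: crm_mon -1 | online_nodes
```

Here `crm_mon -1` prints the status once and exits instead of refreshing continuously.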

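IV. Installing and Configuring crmsh for Pacemaker

1. Ways to configure Pacemaker resources

(1). Command-line tools

crmsh

pcs

(2). Graphical tools

pygui

hawk

LCMC

pcs

Note: this article focuses on crmsh.

2. Install the crmsh resource management tool

(1). crmsh official site

https://savannah.nongnu.org/forum/forum.php?forum_id=7672

(2). crmsh download location

http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/

(3). Install crmsh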

node1:

# Download the package
[root@node1 ~]# wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/RedHat_RHEL-6/x86_64/crmsh-2.1-1.2.x86_64.rpm
# Install the dependencies
[root@node1 ~]# yum install -y python-dateutil python-lxml
[root@node1 ~]# rpm -ivh crmsh-2.1-1.2.x86_64.rpm --nodeps
warning: crmsh-2.1-1.2.x86_64.rpm: Header V3 RSA/SHA1 Signature, key ID 17280ddf: NOKEY
Preparing... ########################################### [100%]
1:crmsh ########################################### [100%]
[root@node1 ~]# crm    # after installation, tab completion lists a crm command, which confirms the install
crm crm_attribute crm_error crm_master crm_node crm_resource crm_simulate crm_ticket
crmadmin crm_diff crm_failcount crm_mon crm_report crm_shadow crm_standby crm_verify
[root@node1 ~]# crm
crm(live)# help

node2:

[root@node1 ~]# scp crmsh-2.1-1.2.x86_64.rpm node2:/root
crmsh-2.1-1.2.x86_64.rpm 100% 602KB 602.1KB/s 00:00
[root@node2 ~]# yum install -y python-dateutil python-lxml
[root@node2 ~]# rpm -ivh crmsh-2.1-1.2.x86_64.rpm --nodeps
warning: crmsh-2.1-1.2.x86_64.rpm: Header V3 RSA/SHA1 Signature, key ID 17280ddf: NOKEY
Preparing... ########################################### [100%]
1:crmsh ########################################### [100%]

Note: the preparation is now complete. Next we configure a highly available web cluster, but first a brief introduction to using crmsh.

3. Using crmsh

Note: the best way to approach a new command is to read its man page first, get a basic feel for it, and then experiment step by step.

[root@node1 ~]# crm    # run crm to enter the crmsh interactive mode
Cannot change active directory to /var/lib/pacemaker/cores/root: No such file or directory (2)
crm(live)# help    # type help to list the available subcommands
This is crm shell, a Pacemaker command line interface.
Available commands:
    cib manage shadow CIBs
    resource resources management
    configure CRM cluster configuration
    node nodes management
    options user preferences
    history CRM cluster history
    site Geo-cluster support
    ra resource agents information center
    status show cluster status
    help,? show help (help topics for list of topics)
    end,cd,up go back one level
    quit,bye,exit exit the program
crm(live)# configure    # type configure to enter configure mode
crm(live)configure#    # press Tab twice to list every command available under configure
? default-timeouts group node rename simulate
bye delete help op_defaults role template
cd edit history order rsc_defaults up
cib end load primitive rsc_template upgrade
cibstatus erase location property rsc_ticket user
clone exit master ptest rsctest verify
collocation fencing_topology modgroup quit save xml
colocation filter monitor ra schema
commit graph ms refresh show
crm(live)configure# help node    # help followed by any command shows its usage and examples
The node command describes a cluster node. Nodes in the CIB are commonly created automatically by the CRM. Hence, you should not need to deal with nodes unless you also want to define node attributes. Note that it is also possible to manage node attributes at the `node` level.
Usage:
...............
node <uname>[:<type>]
  [attributes <param>=<value> [<param>=<value>...]]
  [utilization <param>=<value> [<param>=<value>...]]
type :: normal | member | ping
...............
Example:
...............
node node1
node big_node attributes memory=64
...............

Note: that is all for the introduction. In short, whenever a command is unclear, run help on it. Next we configure the highly available web cluster.

4. Configuring a highly available web cluster with crmsh

(1). View the default configuration

[root@node1 ~]# crm
crm(live)# configure
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2

(2). Check the configuration for errors

crm(live)configure# verify
error: unpack_resources: Resource start-up disabled since no STONITH resources have been defined
error: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option
error: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity
Errors found during check: config not vali
# Note: the check complains that no STONITH resources are defined. We have no STONITH device here, so we disable this property for now
crm(live)configure# property stonith-enabled=false
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false
crm(live)configure# verify    # no more errors

(3). List the resource agent classes supported by the cluster

crm(live)# ra
crm(live)ra# classes
lsb
ocf / heartbeat pacemaker
service
stonith

(4). List all resource agents in a given class

crm(live)ra# list lsb
abrt-ccpp abrt-oops abrtd acpid atd auditd blk-availability corosync corosync-notifyd cpuspeed crond haldaemon halt ip6tables iptables irqbalance kdump killall lvm2-lvmetad lvm2-monitor mdmonitor messagebus netconsole netfs network nfs nfslock ntpd ntpdate pacemaker pacemaker_remote postfix psacct quota_nld rdisc restorecond rngd rpcbind rpcgssd rpcidmapd rpcsvcgssd rsyslog sandbox saslauthd single smartd sshd sysstat udev-post winbind

(5). View the configuration details of a resource agent

crm(live)ra# info ocf:heartbeat:IPaddr

(6). Create an IP address resource for the web cluster (the IP is a primitive resource, so let's see how a primitive is defined)

crm(live)# configure
# Add a VIP resource
crm(live)configure# primitive vip ocf:heartbeat:IPaddr params ip=192.168.3.40 nic=eth0 cidr_netmask=24
crm(live)configure# property stonith-enabled=false
# Show the VIP that was just added
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false
# Check the configuration for errors
crm(live)configure# verify
# Commit the resource. On the command line, a configured resource does not take effect until it is committed; once committed, it is written to the cib.xml configuration file
crm(live)configure# commit
# Check the resource status: there is one resource, vip, running on node1
crm(live)# status
Last updated: Thu Jun 4 16:35:55 2015
Last change: Thu Jun 4 16:34:04 2015
Stack: classic openais (with plugin)
Current DC: node1.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
1 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
vip (ocf::heartbeat:IPaddr): Started node1.weyee.com
# Check the system's IP addresses
[root@node1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:25:7d:42 brd ff:ff:ff:ff:ff:ff
    inet 192.168.3.37/24 brd 192.168.3.255 scope global eth0
    inet 192.168.3.40/24 brd 192.168.3.255 scope global secondary eth0
    inet6 fe80::20c:29ff:fe25:7d42/64 scope link
       valid_lft forever preferred_lft forever

The IP listing on node1 shows that the vip is active. As a test, we now stop the corosync service on node1 from node2 and check the status again; node1 is then reported as offline.

[root@node2 ~]# ssh node1 service corosync stop
Signaling Corosync Cluster Engine (corosync) to terminate: [ OK ]
Waiting for corosync services to unload:.[ OK ]
[root@node2 ~]# crm status
Last updated: Thu Jun 4 16:47:43 2015
Last change: Thu Jun 4 16:34:04 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition WITHOUT quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
1 Resources configured
Online: [ node2.weyee.com ]
OFFLINE: [ node1.weyee.com ]

Important: the output above shows that node1.weyee.com is offline, yet the vip resource was not started on node2.weyee.com. The reason is that the cluster state is now "WITHOUT quorum": quorum has been lost, so the cluster no longer considers itself fit to run resources. For a two-node cluster this default is unreasonable, because losing either node always means losing quorum. We can therefore tell the cluster to ignore the loss of quorum with the following property: property no-quorum-policy=ignore
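To see why a two-node cluster loses quorum as soon as one node fails, consider the usual majority rule: a partition has quorum only when it holds strictly more than half of the expected votes. The sketch below models just that rule; `has_quorum` is a hypothetical helper, and the real decision is made by the cluster stack, not by a script like this.

```shell
# Simplified majority rule: quorum needs strictly more than half of the expected votes.
# Usage: has_quorum <expected-votes> <online-votes>; the exit status is the answer.
has_quorum() {
    local expected=$1 online=$2
    [ $(( online * 2 )) -gt "$expected" ]
}

# Two expected votes, one node left: 1 * 2 is not greater than 2, so quorum is lost
if has_quorum 2 1; then echo "with quorum"; else echo "WITHOUT quorum"; fi
```

With three expected votes, by contrast, two surviving nodes still hold a majority, which is why the issue is specific to two-node setups.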

[root@node2 ~]# crm
crm(live)# configure
crm(live)configure# property no-quorum-policy=ignore
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
crm(live)configure# verify
crm(live)configure# commit

With that verified, we start node1.weyee.com again.

[root@node2 ~]# ssh node1 service corosync start
Starting Corosync Cluster Engine (corosync): [ OK ]
[root@node2 ~]# crm status
Last updated: Thu Jun 4 16:51:18 2015
Last change: Thu Jun 4 16:50:39 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
1 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
vip (ocf::heartbeat:IPaddr): Started node2.weyee.com

After node1.weyee.com comes back, the vip resource may well move from node2.weyee.com back to node1.weyee.com, although it may also stay where it is. Every such move between nodes makes the resource unreachable for a short time. After a resource has failed over to another node, we therefore sometimes want it to stay there even once the original node has recovered. This is achieved by defining resource stickiness, which can be set when the resource is created or afterwards. Let's briefly review resource stickiness.

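(7). Resource stickiness

Resource stickiness expresses how strongly a resource prefers to stay on the node where it is currently running.

Stickiness value ranges and their effects:

0: the default. The resource is placed at the most suitable location in the system, which means it is moved when a "better" or less loaded node becomes available. This is roughly equivalent to automatic failback, except that the resource may move to a node other than the one it was previously active on;

Greater than 0: the resource prefers to stay where it is, but will move if a more suitable node becomes available. The higher the value, the stronger the preference to stay;

Less than 0: the resource prefers to move away from its current location. The larger the absolute value, the stronger the preference to leave;

INFINITY: the resource always stays where it is unless it is forced to move because the node can no longer run it (node shutdown, node standby, migration-threshold reached, or a configuration change). This is almost equivalent to disabling automatic failback completely;

-INFINITY: the resource always moves away from its current location;

Here we set a default stickiness for all resources with: rsc_defaults resource-stickiness=100

crm(live)configure# rsc_defaults resource-stickiness=100
crm(live)configure# verify
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# commit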
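The effect of stickiness can be pictured with a deliberately simplified model for a single resource: the stickiness is added to the score of the node the resource currently runs on, and the resource moves only if another node still scores higher. The function below is an illustration under those assumptions, not Pacemaker's actual placement algorithm; `placement_decision` is a hypothetical name, only plain integer scores are handled, and a tie is assumed to mean "stay".

```shell
# Simplified model: compare the other node's score with (current score + stickiness).
# Usage: placement_decision <current-node-score> <other-node-score> <stickiness>
placement_decision() {
    local current_score=$1 other_score=$2 stickiness=$3
    if [ "$other_score" -gt $(( current_score + stickiness )) ]; then
        echo "move"
    else
        echo "stay"
    fi
}

placement_decision 0 0 100     # no node preference: stickiness keeps the resource in place
placement_decision 0 200 100   # the other node is preferred by 200, which outweighs 100
```

Under this model, a stickiness of 100 keeps vip on node2 after node1 returns, while a location preference for node1 that is larger than 100 would pull it back.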

(8). Building on the IP address resource configured above, turn the cluster into an active/passive web (httpd) service cluster

node1:

[root@node1 ~]# yum install httpd -y
[root@node1 ~]# echo "ServerName localhost:80" >>/etc/httpd/conf/httpd.conf
[root@node1 ~]# echo "<h1>node1.weyee.com</h1>" >>/var/www/html/index.html

node2:

[root@node2 ~]# yum install httpd -y
[root@node2 ~]# echo "ServerName localhost:80" >>/etc/httpd/conf/httpd.conf
[root@node2 ~]# echo "<h1>node2.weyee.com</h1>" >>/var/www/html/index.html

Make sure that the httpd service is not running on node1 or node2 and that it is disabled at boot (on CentOS 6, for example, with service httpd stop and chkconfig httpd off), because the cluster will start and stop it from now on.

Next we add the httpd service as a cluster resource. Two kinds of resource agents are available for httpd: lsb and ocf:heartbeat. For simplicity we use the lsb type here:

First, view the syntax of the lsb-type httpd resource with the following command:

crm(live)# ra
crm(live)ra# info lsb:httpd
start and stop Apache HTTP Server (lsb:httpd)
The Apache HTTP Server is an efficient and extensible \
server implementing the current HTTP standards.
Operations' defaults (advisory minimum):
    start timeout=15
    stop timeout=15
    status timeout=15
    restart timeout=15
    force-reload timeout=15
    monitor timeout=15 interval=15

Now create the httpd resource:

crm(live)# configure
crm(live)configure# primitive httpd lsb:httpd
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# verify
crm(live)configure# commit

Check the resource status:

[root@node1 ~]# crm status
Last updated: Thu Jun 4 17:11:37 2015
Last change: Thu Jun 4 17:11:10 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
vip (ocf::heartbeat:IPaddr): Started node2.weyee.com
httpd (lsb:httpd): Started node1.weyee.com

The output shows that vip and httpd may end up running on different nodes. That does not work for a web service offered through this IP: both resources must run on the same node. There are two ways to ensure this. One is to define a resource group that contains both vip and httpd; the other is to define resource constraints. We start with the first method, the resource group.

(9). Define a resource group

crm(live)# configure
crm(live)configure# group webservice vip httpd
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
group webservice vip httpd    # the webservice group we just defined
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# verify
crm(live)configure# commit

Check the resource status again:

[root@node1 ~]# crm status
Last updated: Thu Jun 4 17:14:47 2015
Last change: Thu Jun 4 17:13:52 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started node2.weyee.com
    httpd (lsb:httpd): Started node2.weyee.com

As you can see, all resources now run on node2. Next we simulate a failure to test the group.

# Put the node into standby, on node2
[root@node2 ~]# crm
crm(live)# node
crm(live)node# standby
# Check the cluster status on node1
[root@node1 ~]# crm status
Last updated: Thu Jun 4 17:19:11 2015
Last change: Thu Jun 4 17:18:19 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Node node2.weyee.com: standby
Online: [ node1.weyee.com ]
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started node1.weyee.com
    httpd (lsb:httpd): Started node1.weyee.com
# Check the IP address and httpd
[root@node1 ~]# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:25:7d:42 brd ff:ff:ff:ff:ff:ff
    inet 192.168.3.37/24 brd 192.168.3.255 scope global eth0
    inet 192.168.3.40/24 brd 192.168.3.255 scope global secondary eth0
    inet6 fe80::20c:29ff:fe25:7d42/64 scope link
       valid_lft forever preferred_lft forever
[root@node1 ~]# netstat -anpt |grep http
tcp 0 0 :::80 :::* LISTEN 24319/httpd

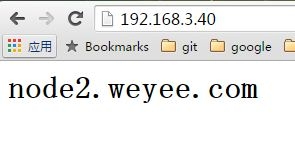

As you can see, when node2 is put into standby, all resources switch over to node1, and the web page remains reachable.

That concludes the demonstration of resource groups. Next we look at how to define resource constraints.

(10). Define resource constraints

First bring node2 back online, then delete the resource group.

# On node2
crm(live)node# online
crm(live)node# cd
crm(live)# status
Last updated: Thu Jun 4 17:21:51 2015
Last change: Thu Jun 4 17:21:47 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started node1.weyee.com
    httpd (lsb:httpd): Started node1.weyee.com

Delete the resource group:

[root@node1 ~]# crm
crm(live)# resource
crm(live)resource# show
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started
    httpd (lsb:httpd): Started
crm(live)resource# stop webservice    # stop the group
crm(live)resource# show
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Stopped
    httpd (lsb:httpd): Stopped
crm(live)resource# cleanup webservice    # clean up the resource state
Cleaning up vip on node1.weyee.com
Cleaning up vip on node2.weyee.com
Cleaning up httpd on node1.weyee.com
Cleaning up httpd on node2.weyee.com
Waiting for 4 replies from the CRMd.... OK
crm(live)resource# cd
crm(live)# configure
crm(live)configure# delete webservice    # delete the resource group
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com \
    attributes standby=off
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# commit
[root@node1 ~]# crm_mon
Last updated: Thu Jun 4 17:24:42 2015
Last change: Thu Jun 4 17:24:14 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Online: [ node1.weyee.com node2.weyee.com ]
vip (ocf::heartbeat:IPaddr): Started node1.weyee.com
httpd (lsb:httpd): Started node2.weyee.com

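As you can see, the resources are once again spread over two nodes. We now define constraints so that they run on the same node. First, a quick review of resource constraints: they specify on which cluster nodes resources run, in what order resources are loaded, and which other resources a given resource depends on. Pacemaker provides three kinds of resource constraints:

Resource Location: defines on which nodes a resource can, cannot, or should preferably run;

Resource Colocation: defines which cluster resources can or cannot run together on the same node;

Resource Order: defines the order in which cluster resources are started on a node;

Constraints also take a score. Scores of all kinds are central to how the cluster works: everything from migrating a resource to deciding which resource to stop in a degraded cluster is achieved by manipulating scores in some way. Scores are calculated per resource, and any node with a negative score for a resource cannot run that resource. After calculating the scores for a resource, the cluster picks the node with the highest score. INFINITY is currently defined as 1,000,000. Adding to or subtracting from it follows three basic rules:

Any value + INFINITY = INFINITY

Any value - INFINITY = -INFINITY

INFINITY - INFINITY = -INFINITY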
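The three rules can be written down as a small saturating addition. The following is a sketch only, using the value 1,000,000 quoted above; `score_add` and `score_to_num` are hypothetical helper names, not Pacemaker commands.

```shell
# Saturating score addition that follows the three INFINITY rules above.
INF=1000000

# Convert INFINITY / -INFINITY (or inf / -inf) to numbers; pass integers through.
score_to_num() {
    case $1 in
        INFINITY|+INFINITY|inf|+inf) printf '%s\n' "$INF" ;;
        -INFINITY|-inf)              printf '%s\n' "-$INF" ;;
        *)                           printf '%s\n' "$1" ;;
    esac
}

score_add() {
    local a b sum
    a=$(score_to_num "$1")
    b=$(score_to_num "$2")
    # -INFINITY is checked first, so INFINITY - INFINITY gives -INFINITY
    if [ "$a" -le "-$INF" ] || [ "$b" -le "-$INF" ]; then
        echo "-INFINITY"; return
    fi
    if [ "$a" -ge "$INF" ] || [ "$b" -ge "$INF" ]; then
        echo "INFINITY"; return
    fi
    sum=$(( a + b ))
    if [ "$sum" -ge "$INF" ]; then echo "INFINITY"
    elif [ "$sum" -le "-$INF" ]; then echo "-INFINITY"
    else echo "$sum"
    fi
}

score_add 100 INFINITY        # any value + INFINITY
score_add 100 -INFINITY       # any value - INFINITY
score_add INFINITY -INFINITY  # INFINITY - INFINITY
```

The order of the two checks is what makes -INFINITY win over INFINITY, which matches the third rule.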

Each constraint can also be given its own score, which is the value assigned to that constraint. Constraints with higher scores are applied before those with lower scores. By creating several location constraints with different scores for a given resource, you can specify the order of the nodes that the resource will fail over to. The earlier problem of vip and httpd possibly running on different nodes can therefore be solved with the following commands:

crm(live)# configure
crm(live)configure# colocation httpd-with-vip inf: httpd vip
crm(live)configure# show
node node1.weyee.com
node node2.weyee.com \
    attributes standby=off
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
colocation httpd-with-vip inf: httpd vip
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    last-lrm-refresh=1433409782
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# show xml
<rsc_colocation id="httpd-with-vip" score="INFINITY" rsc="httpd" with-rsc="vip"/>
crm(live)configure# verify
crm(live)configure# commit

Simulate a failure and test again.

On node1:

crm(live)# node
crm(live)node# standby
crm(live)node# show
node1.weyee.com: normal
    standby=on
node2.weyee.com: normal
    standby=off
# Check the cluster status
[root@node2 ~]# crm status
Last updated: Thu Jun 4 17:39:02 2015
Last change: Thu Jun 4 17:38:56 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
2 Resources configured
Node node1.weyee.com: standby
Online: [ node2.weyee.com ]
vip (ocf::heartbeat:IPaddr): Started node2.weyee.com
httpd (lsb:httpd): Started node2.weyee.com

As you can see, all resources have moved to node2.

Next, we also have to make sure that vip is started before httpd on a node. This can be done with the following commands:

crm(live)node# cd
crm(live)# configure
crm(live)configure# order httpd-after-vip mandatory: vip httpd
crm(live)configure# verify
crm(live)configure# show
node node1.weyee.com \
    attributes standby=on
node node2.weyee.com \
    attributes standby=off
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
colocation httpd-with-vip inf: httpd vip
order httpd-after-vip Mandatory: vip httpd
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    last-lrm-refresh=1433409782
crm(live)configure# show xml
<rsc_order id="httpd-after-vip" kind="Mandatory" first="vip" then="httpd"/>
crm(live)configure# commit

In addition, an HA cluster does not require its nodes to have identical or similar performance. We may therefore want the service to run on a more powerful node whenever that node is healthy. A location constraint achieves this:

crm(live)configure# location prefer-node1 vip 200: node1.weyee.com

That completes the basic configuration of the highly available web cluster. Next we add an NFS resource.

5. Configuring an NFS resource with crmsh

(1). Configure the NFS server

[root@nfs ~]# mkdir -p /web
[root@nfs ~]# cat /etc/exports
/web/ 192.168.3.0/24(ro,async)
[root@nfs ~]# echo '<h1>Cluster NFS Server</h1>' > /web/index.html
# Install the NFS service
[root@nfs ~]# yum install nfs-utils rpcbind -y
[root@nfs ~]# service rpcbind start
Starting rpcbind: [ OK ]
[root@nfs ~]# service nfs start
Starting NFS services: [ OK ]
Starting NFS quotas: [ OK ]
Starting NFS mountd: [ OK ]
Starting NFS daemon: [ OK ]
Starting RPC idmapd: [ OK ]
[root@nfs ~]# showmount -e 192.168.3.39
Export list for 192.168.3.39:
/web 192.168.3.0/24
[root@nfs ~]# service iptables stop
iptables: Flushing firewall rules: [ OK ]
iptables: Setting chains to policy ACCEPT: filter [ OK ]
iptables: Unloading modules: [ OK ]

(2). Test mounting from the nodes

node1:

[root@node1 ~]# mount -t nfs 192.168.3.39:/web /mnt
[root@node1 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/sda3 18G 1.4G 16G 9% /
tmpfs 495M 22M 473M 5% /dev/shm
/dev/sda1 194M 28M 156M 16% /boot
192.168.3.39:/web 18G 1.3G 16G 8% /mnt
[root@node1 ~]# umount /mnt/

node2:

[root@node2 ~]# mount -t nfs 192.168.3.39:/web /mnt
[root@node2 ~]# df -h
Filesystem Size Used Avail Use% Mounted on
/dev/sda3 18G 1.4G 16G 9% /
tmpfs 495M 37M 458M 8% /dev/shm
/dev/sda1 194M 28M 156M 16% /boot
192.168.3.39:/web 18G 1.3G 16G 8% /mnt
[root@node2 ~]# umount /mnt/

(3). Configure the vip, httpd and nfs resources

[root@node1 ~]# crm
crm(live)# configure
crm(live)configure# show
node node1.weyee.com \
    attributes standby=off
node node2.weyee.com \
    attributes standby=on
primitive httpd lsb:httpd
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
colocation httpd-with-vip inf: httpd vip
order httpd-after-vip Mandatory: vip httpd
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    last-lrm-refresh=1433409782
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# primitive nfs ocf:heartbeat:Filesystem params device=192.168.3.39:/web directory=/var/www/html fstype=nfs
crm(live)configure# verify
WARNING: nfs: default timeout 20s for start is smaller than the advised 60
WARNING: nfs: default timeout 20s for stop is smaller than the advised 60
crm(live)configure# show
node node1.weyee.com \
    attributes standby=off
node node2.weyee.com \
    attributes standby=on
primitive httpd lsb:httpd
primitive nfs Filesystem \
    params device="192.168.3.39:/web" directory="/var/www/html" fstype=nfs
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
colocation httpd-with-vip inf: httpd vip
order httpd-after-vip Mandatory: vip httpd
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    last-lrm-refresh=1433409782
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# commit
WARNING: nfs: default timeout 20s for start is smaller than the advised 60
WARNING: nfs: default timeout 20s for stop is smaller than the advised 60

Next we put the three resources into a single resource group.

(4). Define the resource group

crm(live)configure# group webservice vip nfs httpd
crm(live)configure# show
node node1.weyee.com \
    attributes standby=off
node node2.weyee.com \
    attributes standby=on
primitive httpd lsb:httpd
primitive nfs Filesystem \
    params device="192.168.3.39:/web" directory="/var/www/html" fstype=nfs
primitive vip IPaddr \
    params ip=192.168.3.40 nic=eth0 cidr_netmask=24
group webservice vip nfs httpd
property cib-bootstrap-options: \
    dc-version=1.1.11-97629de \
    cluster-infrastructure="classic openais (with plugin)" \
    expected-quorum-votes=2 \
    stonith-enabled=false \
    no-quorum-policy=ignore \
    last-lrm-refresh=1433409782
rsc_defaults rsc-options: \
    resource-stickiness=100
crm(live)configure# commit

Check the resource status: all resources are on node1. Next we test failover.

[root@node2 ~]# crm status
Last updated: Thu Jun 4 18:21:21 2015
Last change: Thu Jun 4 18:20:18 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Node node2.weyee.com: standby
Online: [ node1.weyee.com ]
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started node1.weyee.com
    nfs (ocf::heartbeat:Filesystem): Started node1.weyee.com
    httpd (lsb:httpd): Started node1.weyee.com
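The status reports the Filesystem resource as started. To double-check on the active node that the export really is mounted on /var/www/html, the kernel's mount table can be inspected. A minimal sketch follows; `is_nfs_mounted` is a hypothetical helper that reads /proc/mounts-style lines and accepts any filesystem type beginning with nfs (for example nfs or nfs4).

```shell
# Hypothetical helper: succeed if the given mount point is an NFS mount.
# Reads /proc/mounts-style lines (device, mount point, type, ...) on standard input.
is_nfs_mounted() {
    awk -v mp="$1" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }'
}

# Example, run on the node that currently holds the webservice group:
# is_nfs_mounted /var/www/html < /proc/mounts && echo "NFS export is mounted"
```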

(5). Finally, simulate a resource failure

Take node1 offline by putting it into standby.

On node1:

crm(live)# node
crm(live)node# standby
# Check the cluster status on node2
crm(live)# status
Last updated: Thu Jun 4 18:24:03 2015
Last change: Thu Jun 4 18:24:00 2015
Stack: classic openais (with plugin)
Current DC: node2.weyee.com - partition with quorum
Version: 1.1.11-97629de
2 Nodes configured, 2 expected votes
3 Resources configured
Node node1.weyee.com: standby
Online: [ node2.weyee.com ]
Resource Group: webservice
    vip (ocf::heartbeat:IPaddr): Started node2.weyee.com
    nfs (ocf::heartbeat:Filesystem): Started node2.weyee.com
    httpd (lsb:httpd): Started node2.weyee.com

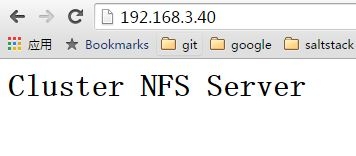

When node1 fails, all resources move to node2. Let's access the site once more.

Because the nfs resource runs together with vip and httpd, the httpd service remains reachable.