LVM 详解

大纲

一、概述

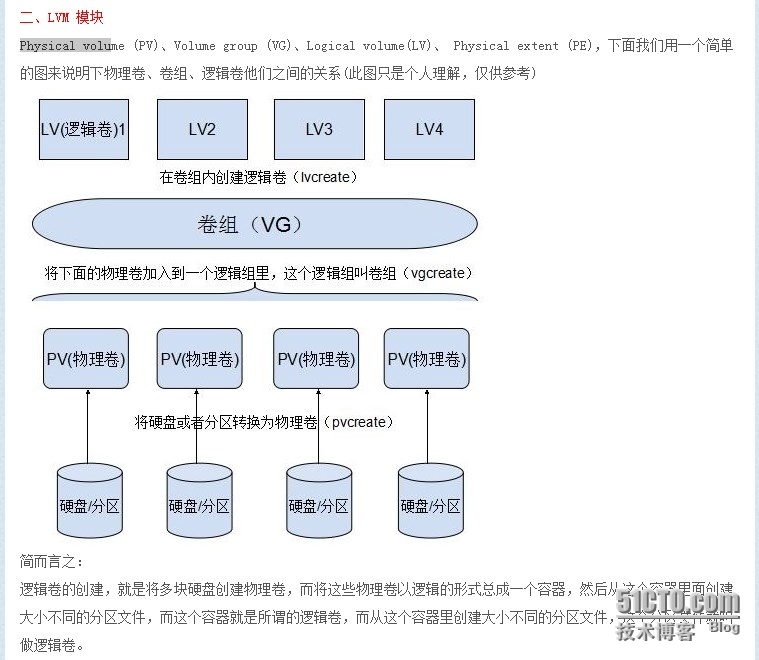

二、LVM 模块

三、具体操作

对添加的硬盘进行分区(fdisk /dev/[hs]d[a-z])

对创建的分区创建物理卷(pvcreate)

给逻辑卷创建逻辑容器(卷组)

在卷组创建大小不同的逻辑卷(lvcreate)

实现在线扩大LVM容量

实现缩减LVM容量(不支持在线缩减)

给以存在的卷组扩大容量

减小卷组容量

利用给LVM创建快照,并完成备份并还原数据

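I. Overview

LVM stands for Logical Volume Manager. It lets you resize file systems flexibly: several physical partitions can be pooled together, the pool can be divided up as needed, and file system space can be adjusted dynamically.

LVM exists in two versions: lvm (LVM1) and lvm2 (LVM2).

II. LVM Concepts

1. Physical volume (PV)

The physical volume is the lowest-level element of LVM; the physical partitions that make up LVM are the PVs.

2. Volume group (VG)

Independent PVs combined into a single pool of storage form a VG; the size of the VG is the total space available to LVM.

3. Logical volume (LV)

The LV is the object that the user finally formats, mounts, and stores data on. An LV resembles a partition, except that its capacity can be adjusted far more flexibly.

4. Physical extent (PE)

A PE is similar to a block on a partition: it is the smallest unit of storage in LVM, 4 MiB by default. The capacity of an LV is defined by the number of PEs assigned to it.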
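The relationship between capacity and extent count is plain integer arithmetic. The following is a minimal sketch, not part of LVM itself: `pe_count` is a hypothetical helper name, and it assumes sizes are given in MiB.

```shell
#!/bin/sh
# pe_count SIZE_MIB [PE_MIB]   (hypothetical helper, for illustration only)
# Print how many physical extents a volume of SIZE_MIB mebibytes occupies.
# PE_MIB defaults to 4, the default extent size mentioned above.
pe_count() {
    size_mib=$1
    pe_mib=${2:-4}
    # Round up: a partially used extent still occupies a whole PE.
    echo $(( (size_mib + pe_mib - 1) / pe_mib ))
}

pe_count $((4 * 1024 * 1024))   # a 4 TiB volume, expressed in MiB
pe_count 30                     # a 30 MiB volume
```

With the default 4 MiB extent, the first call prints 1048576 and the second prints 8 (that is, 32 MiB of extents for a 30 MiB request).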

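5. Snapshot

A snapshot preserves the original contents of data that later changes on the origin volume. Immediately after creation, the snapshot's contents are identical to the origin volume; only when data on the origin changes does the snapshot save a copy of the original data.

III. Creating LVM Logical Volumes Step by Step

1. Create the partitions and set their partition type to Linux LVM (type ID 8e when partitioning), as shown below: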

    [root@localhost ~]# fdisk -l

    Disk /dev/sda: 42.9 GB, 42949672960 bytes
    255 heads, 63 sectors/track, 5221 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x0007101d

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1               1        1530    12288000   83  Linux
    /dev/sda2   *        1530        2550     8192000   83  Linux
    /dev/sda3            2550        3315     6144000   83  Linux
    /dev/sda4            3315        5222    15318016    5  Extended
    /dev/sda5            3315        3576     2097152   82  Linux swap / Solaris
    /dev/sda6            3577        5222    13218816   83  Linux

    Disk /dev/sdb: 1099.5 GB, 1099511627776 bytes
    255 heads, 63 sectors/track, 133674 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x2acf481d

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1      133674  1073736373+  8e  Linux LVM

    WARNING: GPT (GUID Partition Table) detected on '/dev/sdc'! The util fdisk doesn't support GPT. Use GNU Parted.

    Disk /dev/sdc: 4398.0 GB, 4398046511104 bytes
    255 heads, 63 sectors/track, 534698 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1               1      267350  2147483647+  8e  Linux LVM

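The two partitions of type 8e above, /dev/sdb1 and /dev/sdc1, are the ones prepared for LVM.

2. Create the physical volumes (PVs). The PV-related commands are:

pvcreate: turns a physical partition into a physical volume (PV);

pvscan: lists every device on the system that holds a PV;

pvdisplay: shows the state of the PVs on the system;

pvremove: removes the PV label, so the partition is no longer a PV.

Creating the PVs is very simple: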

    [root@localhost ~]# pvcreate /dev/sdb1
      Physical volume "/dev/sdb1" successfully created
    [root@localhost ~]# umount /data
    [root@localhost ~]# pvcreate /dev/sdc1
      Physical volume "/dev/sdc1" successfully created
    [root@localhost ~]# pvs
      PV         VG   Fmt  Attr PSize    PFree
      /dev/sdb1       lvm2 a--  1023.99g 1023.99g
      /dev/sdc1       lvm2 a--     4.00t    4.00t
    [root@localhost ~]# pvscan
      PV /dev/sdb1         lvm2 [1023.99 GiB]
      PV /dev/sdc1         lvm2 [4.00 TiB]
      Total: 2 [5.00 TiB] / in use: 0 [0   ] / in no VG: 2 [5.00 TiB]
    [root@localhost ~]# pvdisplay
      "/dev/sdb1" is a new physical volume of "1023.99 GiB"
      --- NEW Physical volume ---
      PV Name               /dev/sdb1
      VG Name
      PV Size               1023.99 GiB
      Allocatable           NO
      PE Size               0
      Total PE              0
      Free PE               0
      Allocated PE          0
      PV UUID               wedNqw-DjD5-JM60-sK47-5CHD-rfIf-V2nTgb

      "/dev/sdc1" is a new physical volume of "4.00 TiB"
      --- NEW Physical volume ---
      PV Name               /dev/sdc1
      VG Name
      PV Size               4.00 TiB
      Allocatable           NO
      PE Size               0
      Total PE              0
      Free PE               0
      Allocated PE          0
      PV UUID               LczjeA-p9uS-XXET-ZJkk-Iq2s-9o2q-LM0JPX

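pvdisplay shows that until a PV joins a volume group, all of its PE values are 0.

3. Create the volume group (VG). The VG-related commands are:

vgcreate: creates a VG;

vgscan: checks whether any VG exists on the system;

vgdisplay: shows the state of the VGs on the system;

vgextend: adds PVs to a VG;

vgreduce: removes a PV from a VG;

vgchange: activates or deactivates a VG;

vgremove: deletes a VG;

vgrename: renames a VG.

Syntax: vgcreate [-s N[mgt]] VG_NAME PV_NAME...

Option: -s sets the PE size; the unit may be m, g, or t (upper or lower case). The default is 4 MB.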

    [root@localhost ~]# vgcreate 1vg /dev/sdb1 /dev/sdc1
      Volume group "1vg" successfully created
    [root@localhost ~]# vgs
      VG   #PV #LV #SN Attr   VSize VFree
      1vg    2   0   0 wz--n- 5.00t 5.00t
    [root@localhost ~]# vgscan
      Reading all physical volumes.  This may take a while...
      Found volume group "1vg" using metadata type lvm2
    [root@localhost ~]# vgdisplay
      --- Volume group ---
      VG Name               1vg
      System ID
      Format                lvm2
      Metadata Areas        2
      Metadata Sequence No  1
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                2
      Act PV                2
      VG Size               5.00 TiB
      PE Size               4.00 MiB
      Total PE              1310717
      Alloc PE / Size       0 / 0
      Free  PE / Size       1310717 / 5.00 TiB
      VG UUID               RtWcGz-voRL-yUeA-XrYK-1bLL-sMxA-VRRLrT
    [root@localhost ~]# pvdisplay
      --- Physical volume ---
      PV Name               /dev/sdb1
      VG Name               1vg
      PV Size               1023.99 GiB / not usable 2.68 MiB
      Allocatable           yes
      PE Size               4.00 MiB
      Total PE              262142
      Free PE               262142
      Allocated PE          0
      PV UUID               oStbSl-d3xZ-Z2My-0JUm-UpdU-CnAo-OqXkaJ

      --- Physical volume ---
      PV Name               /dev/sdc1
      VG Name               1vg
      PV Size               4.00 TiB / not usable 3.03 MiB
      Allocatable           yes
      PE Size               4.00 MiB
      Total PE              1048575
      Free PE               1048575
      Allocated PE          0
      PV UUID               LczjeA-p9uS-XXET-ZJkk-Iq2s-9o2q-LM0JPX

The output reads much like that of the pv commands shown earlier. The VG now exists and pools the space of the two PVs created before; the new VG is 5 TiB in size.

vgrename VG_NAME VG_NEWNAME (renames a VG)

    [root@localhost ~]# vgrename 1vg 1111vg
      Volume group "1vg" successfully renamed to "1111vg"
    [root@localhost ~]# vgs
      VG     #PV #LV #SN Attr   VSize VFree
      1111vg   2   0   0 wz--n- 5.00t 5.00t
    [root@localhost ~]# vgscan
      Reading all physical volumes.  This may take a while...
      Found volume group "1111vg" using metadata type lvm2
    [root@localhost ~]# vgdisplay
      --- Volume group ---
      VG Name               1111vg
      System ID
      Format                lvm2
      Metadata Areas        2
      Metadata Sequence No  2
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                2
      Act PV                2
      VG Size               5.00 TiB
      PE Size               4.00 MiB
      Total PE              1310717
      Alloc PE / Size       0 / 0
      Free  PE / Size       1310717 / 5.00 TiB
      VG UUID               RtWcGz-voRL-yUeA-XrYK-1bLL-sMxA-VRRLrT

vgremove VG_NAME (deletes a VG)

    [root@localhost ~]# vgremove 1111vg
      Volume group "1111vg" not found
      Skipping volume group 1111vg
    [root@localhost ~]# vgscan
      Reading all physical volumes.  This may take a while...
      No volume groups found
    [root@localhost ~]# vgdisplay
      No volume groups found

vgreduce VG_NAME PV_TO_REMOVE (removes a PV from a VG)

Note: before removing a PV, move any data stored on it to another PV.

For example, pvmove /dev/sdb2 /dev/sdb1 moves the data on /dev/sdb2 onto /dev/sdb1.

    [root@localhost ~]# vgreduce 1vg /dev/sdb1
      Removed "/dev/sdb1" from volume group "1vg"
    [root@localhost ~]# vgdisplay
      --- Volume group ---
      VG Name               1vg
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  2
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               4.00 TiB
      PE Size               4.00 MiB
      Total PE              1048575
      Alloc PE / Size       0 / 0
      Free  PE / Size       1048575 / 4.00 TiB
      VG UUID               HL3yBn-fEtu-f9wd-Y3NJ-2t5r-ptAY-28tQi0

vgextend VG_NAME PV_TO_ADD (adds a PV to a VG)

    [root@localhost ~]# vgextend 1vg /dev/sdb1
      Volume group "1vg" successfully extended
    [root@localhost ~]# vgscan
      Reading all physical volumes.  This may take a while...
      Found volume group "1vg" using metadata type lvm2
    [root@localhost ~]# vgdisplay
      --- Volume group ---
      VG Name               1vg
      System ID
      Format                lvm2
      Metadata Areas        2
      Metadata Sequence No  3
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                2
      Act PV                2
      VG Size               5.00 TiB
      PE Size               4.00 MiB
      Total PE              1310717
      Alloc PE / Size       0 / 0
      Free  PE / Size       1310717 / 5.00 TiB
      VG UUID               HL3yBn-fEtu-f9wd-Y3NJ-2t5r-ptAY-28tQi0

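4. Create the logical volumes (LVs). The LV-related commands are:

lvcreate: creates an LV;

lvscan: lists the LVs on the system;

lvdisplay: shows the state of the LVs on the system;

lvextend: adds capacity to an LV;

lvreduce: removes capacity from an LV;

lvremove: deletes an LV;

lvresize: adjusts the capacity of an LV.

Now let's create an LV.

Syntax: lvcreate [-L N[mgt]] [-n LV_NAME] VG_NAME

Options:

-L: the capacity, in units such as M, G, or T. The smallest unit of allocation is one PE, so the size should be a multiple of the PE size; if it is not, LVM rounds the request to a whole number of PEs.

-l: the number of PEs, rather than a capacity.

-n: the name of the LV.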
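Since -L takes a capacity and -l takes an extent count, the same request can be written either way. The sketch below only prints the two spellings and runs nothing; `lvcreate_forms` is a hypothetical helper, and it assumes the size is given in whole GiB and the PE size in MiB.

```shell
#!/bin/sh
# lvcreate_forms LV_NAME VG_NAME SIZE_GIB [PE_MIB]   (hypothetical helper)
# Print, without executing, the -L and -l spellings of the same request.
lvcreate_forms() {
    name=$1; vg=$2; size_gib=$3
    pe_mib=${4:-4}                                # default PE size: 4 MiB
    extents=$(( size_gib * 1024 / pe_mib ))       # GiB -> MiB -> extents
    echo "lvcreate -L ${size_gib}G -n $name $vg"
    echo "lvcreate -l $extents -n $name $vg"
}

lvcreate_forms lv_test 1vg 1023
```

For a 1023 GiB volume with 4 MiB extents, the second line printed is `lvcreate -l 261888 -n lv_test 1vg`, which is the same LE count that lvdisplay reports for lv_test in this walkthrough.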

Create one 4 TiB LV and one LV of about 1 TiB (1023 GiB in the session below):

    [root@localhost ~]# lvcreate -L 4T -n lv_data 1vg
      Logical volume "lv_data" created
    [root@localhost ~]# lvs
      LV      VG   Attr       LSize Pool Origin Data%  Move Log Cpy%Sync Convert
      lv_data 1vg  -wi-a----- 4.00t
    [root@localhost ~]# lvscan
      ACTIVE            '/dev/1vg/lv_data' [4.00 TiB] inherit
    [root@localhost ~]# lvdisplay
      --- Logical volume ---
      LV Path                /dev/1vg/lv_data
      LV Name                lv_data
      VG Name                1vg
      LV UUID                VbFeoK-4ZP1-zj32-BnsS-gEpB-LZTR-GxY3Bd
      LV Write Access        read/write
      LV Creation host, time localhost.localdomain, 2015-06-25 10:09:24 +0800
      LV Status              available
      # open                 0
      LV Size                4.00 TiB
      Current LE             1048576
      Segments               2
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           253:0
    [root@localhost ~]# lvcreate -L 1023G -n lv_test 1vg
      Logical volume "lv_test" created
    [root@localhost ~]# lvdisplay
      --- Logical volume ---
      LV Path                /dev/1vg/lv_data
      LV Name                lv_data
      VG Name                1vg
      LV UUID                VbFeoK-4ZP1-zj32-BnsS-gEpB-LZTR-GxY3Bd
      LV Write Access        read/write
      LV Creation host, time localhost.localdomain, 2015-06-25 10:09:24 +0800
      LV Status              available
      # open                 0
      LV Size                4.00 TiB
      Current LE             1048576
      Segments               2
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           253:0

      --- Logical volume ---
      LV Path                /dev/1vg/lv_test
      LV Name                lv_test
      VG Name                1vg
      LV UUID                7shO5Z-tYgd-Ttjn-Jtcd-rKA4-LZkv-Nk95cS
      LV Write Access        read/write
      LV Creation host, time localhost.localdomain, 2015-06-25 10:16:08 +0800
      LV Status              available
      # open                 0
      LV Size                1023.00 GiB
      Current LE             261888
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     256
      Block device           253:1

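The LVs are now created. Next we format and mount them; these are routine operations, so we go straight to the commands. An LV must be referred to by its full path, /dev/VG_NAME/LV_NAME, which here is for example /dev/1vg/lv_data. Double-check the path so that you do not format the wrong device.

5. Format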

    [root@localhost ~]# mkfs.ext4 /dev/1vg/lv_data && mkfs.ext4 /dev/1vg/lv_test
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=0 blocks, Stripe width=0 blocks
    268435456 inodes, 1073741824 blocks
    53687091 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=4294967296
    32768 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
            4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,
            102400000, 214990848, 512000000, 550731776, 644972544

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 24 mounts or
    180 days, whichever comes first.  Use tune2fs -c or -i to override.
    mke2fs 1.41.12 (17-May-2010)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=0 blocks, Stripe width=0 blocks
    67043328 inodes, 268173312 blocks
    13408665 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=4294967296
    8184 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,
            4096000, 7962624, 11239424, 20480000, 23887872, 71663616, 78675968,
            102400000, 214990848

    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    This filesystem will be automatically checked every 28 mounts or
    180 days, whichever comes first.  Use tune2fs -c or -i to override.

6. Mount

    [root@localhost ~]# fdisk -l

    Disk /dev/sda: 42.9 GB, 42949672960 bytes
    255 heads, 63 sectors/track, 5221 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x0007101d

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1               1        1530    12288000   83  Linux
    /dev/sda2   *        1530        2550     8192000   83  Linux
    /dev/sda3            2550        3315     6144000   83  Linux
    /dev/sda4            3315        5222    15318016    5  Extended
    /dev/sda5            3315        3576     2097152   82  Linux swap / Solaris
    /dev/sda6            3577        5222    13218816   83  Linux

    Disk /dev/sdb: 1099.5 GB, 1099511627776 bytes
    255 heads, 63 sectors/track, 133674 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x2acf481d

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdb1               1      133674  1073736373+  83  Linux

    WARNING: GPT (GUID Partition Table) detected on '/dev/sdc'! The util fdisk doesn't support GPT. Use GNU Parted.

    Disk /dev/sdc: 4398.0 GB, 4398046511104 bytes
    255 heads, 63 sectors/track, 534698 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdc1               1      267350  2147483647+  8e  Linux LVM

    Disk /dev/mapper/1vg-lv_data: 4398.0 GB, 4398046511104 bytes
    255 heads, 63 sectors/track, 534698 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    Disk /dev/mapper/1vg-lv_test: 1098.4 GB, 1098437885952 bytes
    255 heads, 63 sectors/track, 133544 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Sector size (logical/physical): 512 bytes / 512 bytes
    I/O size (minimum/optimal): 512 bytes / 512 bytes
    Disk identifier: 0x00000000

    [root@localhost ~]# df -HT
    Filesystem     Type   Size  Used Avail Use% Mounted on
    /dev/sda2      ext4   8.3G  2.7G  5.2G  34% /
    tmpfs          tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1      ext4    13G  165M   12G   2% /home
    /dev/sda6      ext4    14G  1.7G   11G  14% /usr
    /dev/sda3      ext4   6.2G  291M  5.6G   5% /var
    [root@localhost ~]# mount /dev/mapper/1vg-lv_data /data
    [root@localhost ~]# mount /dev/mapper/1vg-lv_test /test
    [root@localhost ~]# df -TH
    Filesystem              Type   Size  Used Avail Use% Mounted on
    /dev/sda2               ext4   8.3G  2.7G  5.2G  34% /
    tmpfs                   tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1               ext4    13G  165M   12G   2% /home
    /dev/sda6               ext4    14G  1.7G   11G  14% /usr
    /dev/sda3               ext4   6.2G  291M  5.6G   5% /var
    /dev/mapper/1vg-lv_data ext4   4.4T  204M  4.2T   1% /data
    /dev/mapper/1vg-lv_test ext4   1.1T  209M  1.1T   1% /test

7. Mount automatically at boot

    [root@localhost ~]# blkid
    /dev/sdb1: UUID="oStbSl-d3xZ-Z2My-0JUm-UpdU-CnAo-OqXkaJ" TYPE="LVM2_member"
    /dev/sda1: UUID="dbfbfa8d-65ca-48eb-9bb1-59fa3e929de6" TYPE="ext4"
    /dev/sda2: UUID="3ca94f19-885f-4ceb-a358-e517936c29f5" TYPE="ext4"
    /dev/sda3: UUID="a6e82449-fe6d-44cd-995f-7b6e27849b64" TYPE="ext4"
    /dev/sda5: UUID="6e9b5627-b72d-4dcf-9d4b-5d42fc0f6bd4" TYPE="swap"
    /dev/sda6: UUID="5098319e-c317-441a-8997-dc2f8cf84dfb" TYPE="ext4"
    /dev/sdc1: UUID="LczjeA-p9uS-XXET-ZJkk-Iq2s-9o2q-LM0JPX" TYPE="LVM2_member"
    /dev/mapper/1vg-lv_data: UUID="5993bbe2-7a00-406b-92f3-abe839d53d8b" TYPE="ext4"
    /dev/mapper/1vg-lv_test: UUID="3d2d4532-c3d0-4a04-aeb9-ec963eb1d06e" TYPE="ext4"
    [root@localhost ~]# cat -n /etc/fstab
         1
         2  #
         3  # /etc/fstab
         4  # Created by anaconda on Mon Jun  8 18:39:48 2015
         5  #
         6  # Accessible filesystems, by reference, are maintained under '/dev/disk'
         7  # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
         8  #
         9  UUID=3ca94f19-885f-4ceb-a358-e517936c29f5 /        ext4   defaults        1 1
        10  UUID=dbfbfa8d-65ca-48eb-9bb1-59fa3e929de6 /home    ext4   defaults        1 2
        11  UUID=5098319e-c317-441a-8997-dc2f8cf84dfb /usr     ext4   defaults        1 2
        12  UUID=a6e82449-fe6d-44cd-995f-7b6e27849b64 /var     ext4   defaults        1 2
        13  UUID=6e9b5627-b72d-4dcf-9d4b-5d42fc0f6bd4 swap     swap   defaults        0 0
        14  tmpfs                   /dev/shm                tmpfs   defaults        0 0
        15  devpts                  /dev/pts                devpts  gid=5,mode=620  0 0
        16  sysfs                   /sys                    sysfs   defaults        0 0
        17  proc                    /proc                   proc    defaults        0 0
    [root@localhost ~]# echo 'UUID=5993bbe2-7a00-406b-92f3-abe839d53d8b /data ext4 defaults 0 0' >> /etc/fstab
    [root@localhost ~]# echo 'UUID=3d2d4532-c3d0-4a04-aeb9-ec963eb1d06e /test ext4 defaults 0 0' >> /etc/fstab
    [root@localhost ~]# cat -n /etc/fstab
         1
         2  #
         3  # /etc/fstab
         4  # Created by anaconda on Mon Jun  8 18:39:48 2015
         5  #
         6  # Accessible filesystems, by reference, are maintained under '/dev/disk'
         7  # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
         8  #
         9  UUID=3ca94f19-885f-4ceb-a358-e517936c29f5 /        ext4   defaults        1 1
        10  UUID=dbfbfa8d-65ca-48eb-9bb1-59fa3e929de6 /home    ext4   defaults        1 2
        11  UUID=5098319e-c317-441a-8997-dc2f8cf84dfb /usr     ext4   defaults        1 2
        12  UUID=a6e82449-fe6d-44cd-995f-7b6e27849b64 /var     ext4   defaults        1 2
        13  UUID=6e9b5627-b72d-4dcf-9d4b-5d42fc0f6bd4 swap     swap   defaults        0 0
        14  tmpfs                   /dev/shm                tmpfs   defaults        0 0
        15  devpts                  /dev/pts                devpts  gid=5,mode=620  0 0
        16  sysfs                   /sys                    sysfs   defaults        0 0
        17  proc                    /proc                   proc    defaults        0 0
        18  UUID=5993bbe2-7a00-406b-92f3-abe839d53d8b /data ext4 defaults 0 0
        19  UUID=3d2d4532-c3d0-4a04-aeb9-ec963eb1d06e /test ext4 defaults 0 0
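The fstab lines appended above all have the same shape, so they can be generated rather than typed by hand. This is a small sketch only: `fstab_entry` is a hypothetical helper, and it hard-codes the same `defaults 0 0` fields used in this walkthrough.

```shell
#!/bin/sh
# fstab_entry UUID MOUNT_POINT [FSTYPE]   (hypothetical helper)
# Print one /etc/fstab line in the format used above; nothing is written.
fstab_entry() {
    uuid=$1; mount_point=$2
    fstype=${3:-ext4}
    printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mount_point" "$fstype"
}

# On a real system the UUID could be read with blkid, for example:
#   uuid=$(blkid -s UUID -o value /dev/mapper/1vg-lv_data)
fstab_entry 5993bbe2-7a00-406b-92f3-abe839d53d8b /data
```

Printing the line first makes it easy to review before appending it to /etc/fstab; running `mount -a` afterwards is a cheap way to catch a bad entry before the next reboot.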

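Next, two concepts: the physical boundary and the logical boundary.

Physical boundary: the boundary of a partition as laid out on the disk, in other words how much space the partition has been given. Creating a partition is the act of creating a physical boundary.

Logical boundary: the boundary formed when a partition is formatted with a file system. Creating a file system is the act of creating a logical boundary.

Normally, when a file system is created on a partition, the logical boundary sits flush against the physical boundary. While a logical volume is being extended or shrunk, however, the two no longer coincide.

How much of a logical volume is usable is determined jointly by the physical boundary and the logical boundary.

Because the logical boundary lies inside the physical boundary, resizing a logical volume must follow a fixed order:

Extending: grow the physical boundary first, then the logical boundary.

Shrinking: shrink the logical boundary first, then the physical boundary.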
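The ordering rule can be captured in a few lines of shell. The sketch below only prints the two boundary operations in the safe order and executes nothing; `resize_plan` is a hypothetical helper, and for shrinking it deliberately leaves out the unmount and file system check that must come first.

```shell
#!/bin/sh
# resize_plan grow|shrink LV_PATH SIZE   (hypothetical helper)
# Print, without executing, the boundary operations in the safe order.
resize_plan() {
    direction=$1; lv=$2; size=$3
    case $direction in
        grow)
            echo "lvextend -L $size $lv"   # physical boundary first
            echo "resize2fs $lv"           # then let the file system fill the LV
            ;;
        shrink)
            echo "resize2fs $lv $size"     # logical boundary first
            echo "lvreduce -L $size $lv"   # then the physical boundary
            ;;
        *)
            echo "usage: resize_plan grow|shrink LV_PATH SIZE" >&2
            return 1
            ;;
    esac
}

resize_plan grow /dev/111vg/lv_data +100G
resize_plan shrink /dev/111vg/lv_data 1024G
```

Doing it in the other order when shrinking would cut the LV below the end of the file system, which is why the file system is always resized while it still fits inside the volume.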

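1. Growing an LV online

With the groundwork laid, we can now extend and shrink logical volumes.

Extending a logical volume (this can be done online, that is, without unmounting):

1. Check the VG first and make sure it has enough free space to extend into (vgs or vgdisplay).

2. Extend the physical boundary.

3. Extend the logical boundary.

Before the walkthrough, a look at lvextend and resize2fs.

Extending the physical boundary: lvextend

Usage: lvextend -L [+]SIZE LV_PATH

(With a leading +, SIZE is added to the current size; without it, the LV is set to exactly SIZE. Use lvs, vgs, and lvdisplay beforehand to decide how much to extend.)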

    [root@localhost test]# df -TH
    Filesystem                Type   Size  Used Avail Use% Mounted on
    /dev/sda2                 ext4   8.3G  379M  7.5G   5% /
    tmpfs                     tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1                 ext4    13G  165M   12G   2% /home
    /dev/sda6                 ext4    14G  555M   13G   5% /usr
    /dev/sda3                 ext4   6.2G  211M  5.7G   4% /var
    /dev/mapper/111vg-lv_test ext4   6.0T  195M  5.7T   1% /1test
    /dev/mapper/111vg-lv_data ext4   2.2T  208M  2.1T   1% /data
    [root@localhost test]# lvscan
      ACTIVE            '/dev/111vg/lv_test' [5.50 TiB] inherit
      ACTIVE            '/dev/111vg/lv_data' [2.49 TiB] inherit
    [root@localhost test]# lvs
      LV      VG    Attr       LSize Pool Origin Data%  Move Log Cpy%Sync Convert
      lv_data 111vg -wi-ao---- 2.49t
      lv_test 111vg -wi-ao---- 5.50t
    [root@localhost test]# lvextend -L +100G /dev/111vg/lv_data
      Extending logical volume lv_data to 2.59 TiB
      Logical volume lv_data successfully resized
    [root@localhost test]# lvs
      LV      VG    Attr       LSize Pool Origin Data%  Move Log Cpy%Sync Convert
      lv_data 111vg -wi-ao---- 2.59t
      lv_test 111vg -wi-ao---- 5.50t
    [root@localhost test]# lvscan
      ACTIVE            '/dev/111vg/lv_test' [5.50 TiB] inherit
      ACTIVE            '/dev/111vg/lv_data' [2.59 TiB] inherit
    [root@localhost test]# df -TH
    Filesystem                Type   Size  Used Avail Use% Mounted on
    /dev/sda2                 ext4   8.3G  379M  7.5G   5% /
    tmpfs                     tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1                 ext4    13G  165M   12G   2% /home
    /dev/sda6                 ext4    14G  555M   13G   5% /usr
    /dev/sda3                 ext4   6.2G  211M  5.7G   4% /var
    /dev/mapper/111vg-lv_test ext4   6.0T  195M  5.7T   1% /1test
    /dev/mapper/111vg-lv_data ext4   2.2T  208M  2.1T   1% /data

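The output shows that lv_data has grown, yet the usable space reported by df has not changed.

Changing the logical boundary: resize2fs (resizes ext2, ext3, and ext4 file systems)

Usage:

resize2fs LV_PATH SIZE (sets the logical boundary, that is the file system, to SIZE)

resize2fs -p LV_PATH (with no SIZE given, the file system grows to fill the physical boundary; -p only prints progress)

The complete procedure for extending a logical volume:

Check the space available for the extension and the current size of the file system (lvs, vgs, df).

Extend the physical boundary with lvextend. lvs now shows a larger LV, but df shows no change in usable space.

Extend the logical boundary with resize2fs. df now shows that the usable space has grown.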

    [root@localhost ~]# resize2fs -p /dev/111vg/lv_data
    resize2fs 1.41.12 (17-May-2010)
    Filesystem at /dev/111vg/lv_data is mounted on /data; on-line resizing required
    old desc_blocks = 128, new_desc_blocks = 166
    Performing an on-line resize of /dev/111vg/lv_data to 694157312 (4k) blocks.
    The filesystem on /dev/111vg/lv_data is now 694157312 blocks long.

    [root@localhost ~]# df -h
    Filesystem                 Size  Used Avail Use% Mounted on
    /dev/sda2                  7.7G  362M  7.0G   5% /
    tmpfs                      491M     0  491M   0% /dev/shm
    /dev/sda1                   12G  158M   11G   2% /home
    /dev/sda6                   13G  529M   12G   5% /usr
    /dev/sda3                  5.8G  201M  5.3G   4% /var
    /dev/mapper/111vg-lv_test  5.5T  186M  5.2T   1% /1test
    /dev/mapper/111vg-lv_data  2.6T  202M  2.5T   1% /data

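Note: extending does not affect existing files and can be done online, without unmounting. Extend the physical boundary first and the logical boundary second: lvextend, then resize2fs -p.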

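2. Shrinking an LV (not possible online)

Shrinking the logical boundary (note: this carries risk)

Shrinking the physical boundary (unmount first; it cannot be done online):

lvreduce -L [-]SIZE LV_PATH

Notes:

1. Shrinking cannot be done online; unmount first.

2. Make sure the reduced size can still hold all of the existing data.

3. Force a check of the file system before shrinking, to make sure it is in a consistent state.

Forced file system check: e2fsck -f LV_PATH

Steps:

1. Check how far the volume can be shrunk (df -h).

2. Unmount (umount DIR).

3. Force a check (e2fsck -f LV_PATH).

4. Shrink the logical boundary (resize2fs).

5. Shrink the physical boundary (lvreduce).

6. Remount (if you use mount -a, /etc/fstab must contain the matching entry).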

    [root@localhost ~]# df -HT
    Filesystem                Type   Size  Used Avail Use% Mounted on
    /dev/sda2                 ext4   8.3G  379M  7.5G   5% /
    tmpfs                     tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1                 ext4    13G  165M   12G   2% /home
    /dev/sda6                 ext4    14G  555M   13G   5% /usr
    /dev/sda3                 ext4   6.2G  211M  5.7G   4% /var
    /dev/mapper/111vg-lv_test ext4   6.0T  195M  5.7T   1% /1test
    /dev/mapper/111vg-lv_data ext4   2.8T  212M  2.7T   1% /data
    [root@localhost ~]# ls /data
    lost+found  test
    [root@localhost ~]# umount /data
    [root@localhost ~]# ls /data
    [root@localhost ~]# lvs
      LV      VG    Attr       LSize Pool Origin Data%  Move Log Cpy%Sync Convert
      lv_data 111vg -wi-a----- 2.59t
      lv_test 111vg -wi-ao---- 5.50t
    [root@localhost ~]# lvscan
      ACTIVE            '/dev/111vg/lv_test' [5.50 TiB] inherit
      ACTIVE            '/dev/111vg/lv_data' [2.59 TiB] inherit
    [root@localhost ~]# resize2fs /dev/111vg/lv_data 1024G
    resize2fs 1.41.12 (17-May-2010)
    Please run 'e2fsck -f /dev/111vg/lv_data' first.

    [root@localhost ~]# e2fsck -f /dev/111vg/lv_data
    e2fsck 1.41.12 (17-May-2010)
    Pass 1: Checking inodes, blocks, and sizes
    Pass 2: Checking directory structure
    Pass 3: Checking directory connectivity
    Pass 4: Checking reference counts
    Pass 5: Checking group summary information
    /dev/111vg/lv_data: 13/173539328 files (0.0% non-contiguous), 10943902/694157312 blocks
    [root@localhost ~]# resize2fs /dev/111vg/lv_data 1024G
    resize2fs 1.41.12 (17-May-2010)
    Resizing the filesystem on /dev/111vg/lv_data to 268435456 (4k) blocks.
    The filesystem on /dev/111vg/lv_data is now 268435456 blocks long.

    [root@localhost ~]# lvs
      LV      VG    Attr       LSize Pool Origin Data%  Move Log Cpy%Sync Convert
      lv_data 111vg -wi-a----- 2.59t
      lv_test 111vg -wi-ao---- 5.50t
    [root@localhost ~]# lvscan
      ACTIVE            '/dev/111vg/lv_test' [5.50 TiB] inherit
      ACTIVE            '/dev/111vg/lv_data' [2.59 TiB] inherit
    [root@localhost ~]# mount -a
    [root@localhost ~]# df -TH
    Filesystem                Type   Size  Used Avail Use% Mounted on
    /dev/sda2                 ext4   8.3G  379M  7.5G   5% /
    tmpfs                     tmpfs  515M     0  515M   0% /dev/shm
    /dev/sda1                 ext4    13G  165M   12G   2% /home
    /dev/sda6                 ext4    14G  555M   13G   5% /usr
    /dev/sda3                 ext4   6.2G  211M  5.7G   4% /var
    /dev/mapper/111vg-lv_test ext4   6.0T  195M  5.7T   1% /1test
    /dev/mapper/111vg-lv_data ext4   1.1T  209M  1.1T   1% /data

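Note: three steps must always come before shrinking:

df -h, to see how small the volume can go

umount

e2fsck -f LV_PATH

The session above shrinks only the file system (resize2fs). The lvreduce step (step 5) is not shown, which is why lvs still reports 2.59t for lv_data.

3. Extending a VG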

    [root@ha-node2 ~]# pvcreate /dev/sdb1    # turn /dev/sdb1 into a PV
      Physical volume "/dev/sdb1" successfully created
    [root@ha-node2 ~]# pvs    # show PV information
      PV         VG   Fmt  Attr PSize  PFree
      /dev/sdb1       lvm2 a--  10.00g 10.00g
      /dev/sdc1  myvg lvm2 a--   5.01g  3.01g
      /dev/sdc2  myvg lvm2 a--   5.01g  5.01g
      /dev/sdc3  myvg lvm2 a--   9.97g  9.97g
    [root@ha-node2 ~]# vgextend myvg /dev/sdb1    # extend the volume group myvg
      Volume group "myvg" successfully extended
    [root@ha-node2 ~]# vgs
      VG   #PV #LV #SN Attr   VSize  VFree
      myvg   4   1   0 wz--n- 29.98g 27.98g

The size of myvg (VSize) has grown from the original 19.99g to 29.98g, of which 27.98g is free.

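4. Reducing a VG

When a disk or partition in the VG is underused and you want to take it out, proceed as follows:

pvmove /dev/sdc1 # move the data stored on /dev/sdc1 to the other PVs

vgreduce myvg /dev/sdc1 # remove /dev/sdc1 from the volume group myvg

pvremove /dev/sdc1 # wipe the PV label from /dev/sdc1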
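pvmove can only succeed if the remaining PVs have room for the extents being moved, so a quick comparison beforehand is worthwhile. The sketch below is plain arithmetic on two numbers you supply; `can_evacuate` is a hypothetical helper, and the figures in the example are made up for illustration. On a real system the extent counts can, as far as I know, be listed with `pvs -o pv_name,pv_pe_count,pv_pe_alloc_count`.

```shell
#!/bin/sh
# can_evacuate USED_PE_ON_PV FREE_PE_ELSEWHERE   (hypothetical helper)
# Succeeds when the extents allocated on the PV to be removed fit into
# the free extents of the VG's remaining PVs.
can_evacuate() {
    used=$1; free_elsewhere=$2
    [ "$used" -le "$free_elsewhere" ]
}

# Hypothetical figures: 256 extents in use on the PV, 3800 free elsewhere.
if can_evacuate 256 3800; then
    echo "pvmove can relocate the data"
else
    echo "not enough free extents on the other PVs"
fi
```

With the example figures the script prints "pvmove can relocate the data".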

In the session below, the PV taken out is /dev/sdb1:

    [root@ha-node2 ~]# pvs
      PV         VG   Fmt  Attr PSize PFree
      /dev/sdb1  myvg lvm2 a--  9.99g 9.99g
      /dev/sdc1  myvg lvm2 a--  5.01g 4.01g
      /dev/sdc2  myvg lvm2 a--  5.01g 5.01g
      /dev/sdc3  myvg lvm2 a--  9.97g 9.97g
    [root@ha-node2 ~]# pvmove /dev/sdb1    # move the data on /dev/sdb1 to the other PVs
      No data to move for myvg
    [root@ha-node2 ~]# vgreduce myvg /dev/sdb1    # remove /dev/sdb1 from myvg
      Removed "/dev/sdb1" from volume group "myvg"
    [root@ha-node2 ~]# pvremove /dev/sdb1    # wipe the PV label from /dev/sdb1
      Labels on physical volume "/dev/sdb1" successfully wiped
    [root@ha-node2 ~]# vgs
      VG   #PV #LV #SN Attr   VSize  VFree
      myvg   3   1   0 wz--n- 19.99g 18.99g
    [root@ha-node2 ~]# pvs
      PV         VG   Fmt  Attr PSize PFree
      /dev/sdc1  myvg lvm2 a--  5.01g 4.01g
      /dev/sdc2  myvg lvm2 a--  5.01g 5.01g
      /dev/sdc3  myvg lvm2 a--  9.97g 9.97g
    [root@ha-node2 ~]# df -h
    Filesystem              Size  Used Avail Use% Mounted on
    /dev/sda3                18G  2.6G   14G  16% /
    tmpfs                   495M     0  495M   0% /dev/shm
    /dev/sda1               190M   48M  133M  27% /boot
    /dev/mapper/myvg-mylv1 1008M   67M  891M   7% /mydata
    [root@ha-node2 ~]# ls /mydata/
    lost+found  test.txt

After the removal, myvg is back to 19.99g, of which 18.99g is free, and the data under /mydata is intact.

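5. Snapshots: backup and restore

We take a snapshot of the LV mounted at /mydata and archive the files from the snapshot. Then we empty /mydata and restore its contents from the archive.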

    [root@ha-node2 ~]# cat /mydata/test.txt
    lvm
    [root@ha-node2 ~]# lvcreate -L 30M -n backup -s -p r /dev/myvg/mylv1    # -L: snapshot size; -n: snapshot name; -p r: read-only permission; -s: create a snapshot
      Rounding up size to full physical extent 32.00 MiB
      Logical volume "backup" created
    [root@ha-node2 ~]# ll /mydata/
    total 20
    drwx------ 2 root root 16384 Jun 23 15:41 lost+found
    -rw-r--r-- 1 root root     4 Jun 23 16:12 test.txt
    [root@ha-node2 ~]# mkdir /tmp/backup    # create the mount point
    [root@ha-node2 ~]# mount /dev/myvg/backup /tmp/backup/    # mount the snapshot
    mount: block device /dev/mapper/myvg-backup is write-protected, mounting read-only
    [root@ha-node2 ~]# cd /tmp/backup/
    [root@ha-node2 backup]# ls
    lost+found  test.txt
    [root@ha-node2 backup]# mkdir /tmp/lvmbackup    # create the backup directory
    [root@ha-node2 backup]# tar zcf /tmp/lvmbackup/test.tar.gz test.txt    # archive test.txt
    [root@ha-node2 backup]# ll /tmp/lvmbackup/    # inspect the backup
    total 4
    -rw-r--r-- 1 root root 124 Jun 23 16:17 test.tar.gz
    [root@ha-node2 backup]# rm -rf /mydata/*    # delete everything under /mydata
    [root@ha-node2 backup]# ls /mydata/
    [root@ha-node2 backup]# tar xf /tmp/lvmbackup/test.tar.gz -C /mydata/
    [root@ha-node2 backup]# ls /mydata/
    test.txt
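The backup half of the session above can be summarized as a short command sequence. The sketch below only prints the commands and runs nothing; `snapshot_backup_plan` is a hypothetical helper. Two things differ from the session and are my own assumptions: it archives the whole snapshot directory rather than only test.txt, and it ends by unmounting and removing the snapshot, which the session does not show.

```shell
#!/bin/sh
# snapshot_backup_plan ORIGIN_LV SNAP_NAME SNAP_SIZE MOUNT_DIR ARCHIVE
# (hypothetical helper) Print, without executing, a snapshot-based backup.
snapshot_backup_plan() {
    origin=$1; snap=$2; size=$3; dir=$4; archive=$5
    vg_dir=${origin%/*}                       # /dev/myvg/mylv1 -> /dev/myvg
    echo "lvcreate -L $size -n $snap -s -p r $origin"   # read-only snapshot
    echo "mkdir -p $dir"
    echo "mount -o ro $vg_dir/$snap $dir"
    echo "tar zcf $archive -C $dir ."         # assumption: archive everything
    echo "umount $dir"                        # assumption: clean up afterwards
    echo "lvremove $vg_dir/$snap"
}

snapshot_backup_plan /dev/myvg/mylv1 backup 30M /tmp/backup /tmp/lvmbackup/test.tar.gz
```

Removing the snapshot once the archive exists is a design choice rather than a requirement: a snapshot keeps consuming space in the VG as the origin volume changes, so it is usually kept only as long as the backup takes.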