02 Ceph cluster expansion and management: hands-on

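Expanding the cluster:

Add 1 more OSD node,

add 2 more mon nodes,

add 1 MDS (metadata server),

and watch the OSDs rebalance data automatically.

Make sure the clock offset between nodes does not exceed 0.05 s.

Ready? Go!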

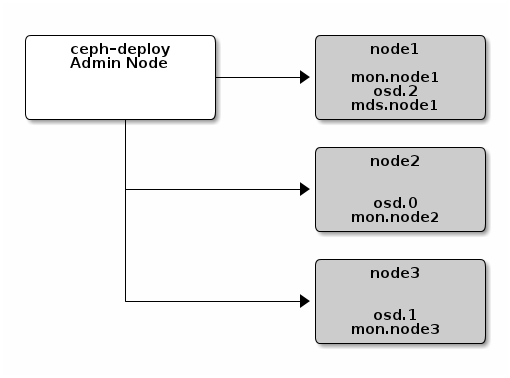

Add another OSD

Turn node1, which already runs a mon, into an OSD node as well

root@node1:~# mkdir /var/local/osd2

ceph-deploy osd prepare node1:/var/local/osd2

Prepare the OSD:

root@admin-node:~# cd /home/my-cluster/

root@admin-node:/home/my-cluster# ceph-deploy osd prepare node1:/var/local/osd2

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy osd prepare node1:/var/local/osd2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] disk : [('node1', '/var/local/osd2', None)]

[ceph_deploy.cli][INFO ] dmcrypt : False

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : prepare

[ceph_deploy.cli][INFO ] dmcrypt_key_dir : /etc/ceph/dmcrypt-keys

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fe341bc0fc8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] fs_type : xfs

[ceph_deploy.cli][INFO ] func : <function osd at 0x7fe342023758>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] zap_disk : False

[ceph_deploy.osd][DEBUG ] Preparing cluster ceph disks node1:/var/local/osd2:

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[node1][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 14.04 trusty

[ceph_deploy.osd][DEBUG ] Deploying osd to node1

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][INFO ] Running command: udevadm trigger --subsystem-match=block --action=add

[ceph_deploy.osd][DEBUG ] Preparing host node1 disk /var/local/osd2 journal None activate False

[node1][INFO ] Running command: ceph-disk -v prepare --cluster ceph --fs-type xfs -- /var/local/osd2

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mkfs_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mkfs_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_mount_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_fs_mount_options_xfs

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=osd_journal_size

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_cryptsetup_parameters

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_dmcrypt_key_size

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-conf --cluster=ceph --name=osd. --lookup osd_dmcrypt_type

[node1][WARNIN] DEBUG:ceph-disk:Preparing osd data dir /var/local/osd2

[node1][INFO ] checking OSD status...

[node1][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

[ceph_deploy.osd][DEBUG ] Host node1 is now ready for osd use.

Activate the OSD:

root@admin-node:/home/my-cluster# ceph-deploy osd activate node1:/var/local/osd2

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy osd activate node1:/var/local/osd2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : activate

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f477f019fc8>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function osd at 0x7f477f47c758>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : [('node1', '/var/local/osd2', None)]

[ceph_deploy.osd][DEBUG ] Activating cluster ceph disks node1:/var/local/osd2:

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[node1][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 14.04 trusty

[ceph_deploy.osd][DEBUG ] activating host node1 disk /var/local/osd2

[ceph_deploy.osd][DEBUG ] will use init type: upstart

[node1][INFO ] Running command: ceph-disk -v activate --mark-init upstart --mount /var/local/osd2

[node1][WARNIN] DEBUG:ceph-disk:Cluster uuid is 34e6e6b5-bb3e-4185-a8ee-01837c678db4

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster=ceph --show-config-value=fsid

[node1][WARNIN] DEBUG:ceph-disk:Cluster name is ceph

[node1][WARNIN] DEBUG:ceph-disk:OSD uuid is eb841029-51db-4b38-8734-28655b294308

[node1][WARNIN] DEBUG:ceph-disk:Allocating OSD id...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring osd create --concise eb841029-51db-4b38-8734-28655b294308

[node1][WARNIN] DEBUG:ceph-disk:OSD id is 2

[node1][WARNIN] DEBUG:ceph-disk:Initializing OSD...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring mon getmap -o /var/local/osd2/activate.monmap

[node1][WARNIN] got monmap epoch 1

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph-osd --cluster ceph --mkfs --mkkey -i 2 --monmap /var/local/osd2/activate.monmap --osd-data /var/local/osd2 --osd-journal /var/local/osd2/journal --osd-uuid eb841029-51db-4b38-8734-28655b294308 --keyring /var/local/osd2/keyring

[node1][WARNIN] 2015-10-22 13:26:57.892930 7f8b93f41900 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node1][WARNIN] 2015-10-22 13:26:57.942855 7f8b93f41900 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway

[node1][WARNIN] 2015-10-22 13:26:57.943503 7f8b93f41900 -1 filestore(/var/local/osd2) could not find 23c2fcde/osd_superblock/0//-1 in index: (2) No such file or directory

[node1][WARNIN] 2015-10-22 13:26:57.969759 7f8b93f41900 -1 created object store /var/local/osd2 journal /var/local/osd2/journal for osd.2 fsid 34e6e6b5-bb3e-4185-a8ee-01837c678db4

[node1][WARNIN] 2015-10-22 13:26:57.969845 7f8b93f41900 -1 auth: error reading file: /var/local/osd2/keyring: can't open /var/local/osd2/keyring: (2) No such file or directory

[node1][WARNIN] 2015-10-22 13:26:57.969943 7f8b93f41900 -1 created new key in keyring /var/local/osd2/keyring

[node1][WARNIN] DEBUG:ceph-disk:Marking with init system upstart

[node1][WARNIN] DEBUG:ceph-disk:Authorizing OSD key...

[node1][WARNIN] INFO:ceph-disk:Running command: /usr/bin/ceph --cluster ceph --name client.bootstrap-osd --keyring /var/lib/ceph/bootstrap-osd/ceph.keyring auth add osd.2 -i /var/local/osd2/keyring osd allow * mon allow profile osd

[node1][WARNIN] added key for osd.2

[node1][WARNIN] DEBUG:ceph-disk:ceph osd.2 data dir is ready at /var/local/osd2

[node1][WARNIN] DEBUG:ceph-disk:Creating symlink /var/lib/ceph/osd/ceph-2 -> /var/local/osd2

[node1][WARNIN] DEBUG:ceph-disk:Starting ceph osd.2...

[node1][WARNIN] INFO:ceph-disk:Running command: /sbin/initctl emit --no-wait -- ceph-osd cluster=ceph id=2

[node1][INFO ] checking OSD status...

[node1][INFO ] Running command: ceph --cluster=ceph osd stat --format=json

root@admin-node:/home/my-cluster#

watch live cluster changes

root@admin-node:/home/my-cluster# ceph -w

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_OK

monmap e1: 1 mons at {node1=172.16.66.142:6789/0}

election epoch 2, quorum 0 node1

osdmap e13: 3 osds: 3 up, 3 in

pgmap v24: 64 pgs, 1 pools, 0 bytes data, 0 objects

19765 MB used, 1376 GB / 1470 GB avail

64 active+clean
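The two lines that matter most in this summary are the osdmap line (all 3 OSDs up and in) and the PG state line (all 64 PGs active+clean). A minimal Python sketch for checking them, assuming the Hammer-era plain-text layout shown above; the helper names osds_all_up_in and pg_states are hypothetical, and for real scripts the JSON output of ceph -s --format json is usually the sturdier choice:

```python
import re

# Hypothetical helpers for the plain-text `ceph -s` / `ceph -w` summary.
OSDMAP_RE = re.compile(r"osdmap e(\d+): (\d+) osds: (\d+) up, (\d+) in")
# A PG state line is just "<count> <state>", e.g. "64 active+clean".
PG_STATE_RE = re.compile(r"^\s*(\d+) ([a-z_+]+)\s*$")


def osds_all_up_in(status_text: str) -> bool:
    """True if every OSD on the osdmap line is both up and in."""
    m = OSDMAP_RE.search(status_text)
    if m is None:
        raise ValueError("no osdmap line found")
    total, up, in_ = (int(m.group(i)) for i in (2, 3, 4))
    return total == up == in_


def pg_states(status_text: str) -> dict:
    """Sum the PG counts per state, e.g. {'active+clean': 64}."""
    states = {}
    for line in status_text.splitlines():
        m = PG_STATE_RE.match(line)
        if m:
            states[m.group(2)] = states.get(m.group(2), 0) + int(m.group(1))
    return states


# Copied from the `ceph -w` output above.
sample = """\
cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4
health HEALTH_OK
monmap e1: 1 mons at {node1=172.16.66.142:6789/0}
election epoch 2, quorum 0 node1
osdmap e13: 3 osds: 3 up, 3 in
pgmap v24: 64 pgs, 1 pools, 0 bytes data, 0 objects
19765 MB used, 1376 GB / 1470 GB avail
64 active+clean
"""

print(osds_all_up_in(sample))  # True
print(pg_states(sample))       # {'active+clean': 64}
```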

2015-10-22 13:27:06.305730 mon.0 [INF] pgmap v24: 64 pgs: 64 active+clean; 0 bytes data, 19765 MB used, 1376 GB / 1470 GB avail

2015-10-22 13:29:05.336898 mon.0 [INF] pgmap v25: 64 pgs: 64 active+clean; 0 bytes data, 19765 MB used, 1376 GB / 1470 GB avail

2015-10-22 13:29:06.339859 mon.0 [INF] pgmap v26: 64 pgs: 64 active+clean; 0 bytes data, 19765 MB used, 1376 GB / 1470 GB avail

2015-10-22 13:31:05.378266 mon.0 [INF] pgmap v27: 64 pgs: 64 active+clean; 0 bytes data, 19765 MB used, 1376 GB / 1470 GB avail

2015-10-22 13:33:05.427523 mon.0 [INF] pgmap v28: 64 pgs: 64 active+clean; 0 bytes data, 19765 MB used, 1376 GB / 1470 GB avail

Add a metadata server (MDS)

root@admin-node:/home/my-cluster# ceph-deploy mds create node1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy mds create node1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f56b51c9680>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function mds at 0x7f56b5613cf8>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] mds : [('node1', 'node1')]

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mds][DEBUG ] Deploying mds, cluster ceph hosts node1:node1

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.mds][INFO ] Distro info: Ubuntu 14.04 trusty

[ceph_deploy.mds][DEBUG ] remote host will use upstart

[ceph_deploy.mds][DEBUG ] deploying mds bootstrap to node1

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][DEBUG ] create path if it doesn't exist

[node1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node1 osd allow rwx mds allow mon allow profile mds -o /var/lib/ceph/mds/ceph-node1/keyring

[node1][INFO ] Running command: initctl emit ceph-mds cluster=ceph id=node1

root@admin-node:/home/my-cluster#

Add an RGW instance

[RGW: the S3/Swift gateway component of Ceph]

root@admin-node:/home/my-cluster# ceph-deploy rgw create node1

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy rgw create node1

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] rgw : [('node1', 'rgw.node1')]

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : create

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f78a906bb00>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function rgw at 0x7f78a992c5f0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.rgw][DEBUG ] Deploying rgw, cluster ceph hosts node1:rgw.node1

[node1][DEBUG ] connected to host: node1

[node1][DEBUG ] detect platform information from remote host

[node1][DEBUG ] detect machine type

[ceph_deploy.rgw][INFO ] Distro info: Ubuntu 14.04 trusty

[ceph_deploy.rgw][DEBUG ] remote host will use upstart

[ceph_deploy.rgw][DEBUG ] deploying rgw bootstrap to node1

[node1][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node1][DEBUG ] create path recursively if it doesn't exist

[node1][INFO ] Running command: ceph --cluster ceph --name client.bootstrap-rgw --keyring /var/lib/ceph/bootstrap-rgw/ceph.keyring auth get-or-create client.rgw.node1 osd allow rwx mon allow rw -o /var/lib/ceph/radosgw/ceph-rgw.node1/keyring

[node1][INFO ] Running command: initctl emit radosgw cluster=ceph id=rgw.node1

[ceph_deploy.rgw][INFO ] The Ceph Object Gateway (RGW) is now running on host node1 and default port 7480

root@admin-node:/home/my-cluster#

【This functionality is new with the Hammer release】

Edit the configuration file for RGW:

root@admin-node:/home/my-cluster# vim ceph.conf

[global]

fsid = 34e6e6b5-bb3e-4185-a8ee-01837c678db4

mon_initial_members = node1

mon_host = 172.16.66.142

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

filestore_xattr_use_omap = true

osd pool default size = 2

[client]

rgw frontends = civetweb port=80
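ceph.conf is an INI-style file, so Python's configparser can read it for quick sanity checks. The sketch below is an assumption-laden helper, not a Ceph tool: it normalizes spaces in option names to underscores (Ceph accepts both spellings, which is why "osd pool default size" and "mon_host" can sit side by side) and pulls the civetweb port out of "rgw frontends", falling back to 7480, the default port reported by ceph-deploy above. It assumes options are written without leading indentation, since configparser treats indented lines as continuations.

```python
import configparser

# The ceph.conf from the step above, one option per line.
CEPH_CONF = """\
[global]
fsid = 34e6e6b5-bb3e-4185-a8ee-01837c678db4
mon_initial_members = node1
mon_host = 172.16.66.142
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
filestore_xattr_use_omap = true
osd pool default size = 2

[client]
rgw frontends = civetweb port=80
"""


def load_ceph_conf(text: str) -> dict:
    """Parse ceph.conf, treating spaces and underscores in option names alike."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    conf = {}
    for section in parser.sections():
        conf[section] = {
            key.strip().replace(" ", "_"): value
            for key, value in parser.items(section)
        }
    return conf


def civetweb_port(conf: dict, default: int = 7480) -> int:
    """Port from an 'rgw_frontends = civetweb port=N' setting in [client]."""
    frontends = conf.get("client", {}).get("rgw_frontends", "")
    for token in frontends.split():
        if token.startswith("port="):
            return int(token.split("=", 1)[1])
    return default


print(civetweb_port(load_ceph_conf(CEPH_CONF)))  # 80
```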

Clocks must be synchronized:

root@admin-node:~# ntpdate cn.pool.ntp.org

root@node1:~# ntpdate cn.pool.ntp.org

root@node2:~# ntpdate cn.pool.ntp.org

root@node3:~# ntpdate cn.pool.ntp.org
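The 0.05 s limit from the top of this post corresponds to Ceph's "mon clock drift allowed" setting (0.05 s by default). A small sketch of the check, assuming you have already collected each node's offset in seconds against a common time source (for example the offset that ntpdate -q prints); the function name skewed_nodes and the sample offsets are made up for illustration:

```python
# Hypothetical helper: `offsets` maps each node to its clock offset in
# seconds against a common time source, e.g. from `ntpdate -q <server>`.
ALLOWED_DRIFT = 0.05  # seconds, the limit given at the top of this post


def skewed_nodes(offsets: dict, reference: str, allowed: float = ALLOWED_DRIFT) -> list:
    """Nodes whose clock differs from the reference node by more than `allowed`."""
    ref = offsets[reference]
    return sorted(
        node
        for node, offset in offsets.items()
        if node != reference and abs(offset - ref) > allowed
    )


# Made-up sample offsets, for illustration only.
sample = {"node1": 0.002, "node2": -0.010, "node3": 0.120}
print(skewed_nodes(sample, reference="node1"))  # ['node3']
```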

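Add 2 Monitors

Because ceph.conf on the admin node was just edited, ceph-deploy may refuse to overwrite the differing copies on the nodes and ask for the --overwrite-conf option:

ceph-deploy --overwrite-conf mon add node2

ceph-deploy --overwrite-conf mon add node3

root@admin-node:/home/my-cluster# ceph-deploy --overwrite-conf mon add node3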

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy --overwrite-conf mon add node3

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : add

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7fce6871c200>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['node3']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7fce68b81e60>

[ceph_deploy.cli][INFO ] address : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][INFO ] ensuring configuration of new mon host: node3

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node3

[node3][DEBUG ] connected to host: node3

[node3][DEBUG ] detect platform information from remote host

[node3][DEBUG ] detect machine type

[node3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.mon][DEBUG ] Adding mon to cluster ceph, host node3

[ceph_deploy.mon][DEBUG ] using mon address by resolving host: 172.16.66.140

[ceph_deploy.mon][DEBUG ] detecting platform for host node3 ...

[node3][DEBUG ] connected to host: node3

[node3][DEBUG ] detect platform information from remote host

[node3][DEBUG ] detect machine type

[node3][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: Ubuntu 14.04 trusty

[node3][DEBUG ] determining if provided host has same hostname in remote

[node3][DEBUG ] get remote short hostname

[node3][DEBUG ] adding mon to node3

[node3][DEBUG ] get remote short hostname

[node3][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node3][DEBUG ] create the mon path if it does not exist

[node3][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node3/done

[node3][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-node3/done

[node3][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-node3.mon.keyring

[node3][DEBUG ] create the monitor keyring file

[node3][INFO ] Running command: ceph mon getmap -o /var/lib/ceph/tmp/ceph.node3.monmap

[node3][WARNIN] got monmap epoch 1

[node3][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i node3 --monmap /var/lib/ceph/tmp/ceph.node3.monmap --keyring /var/lib/ceph/tmp/ceph-node3.mon.keyring

[node3][DEBUG ] ceph-mon: set fsid to 34e6e6b5-bb3e-4185-a8ee-01837c678db4

[node3][DEBUG ] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node3 for mon.node3

[node3][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-node3.mon.keyring

[node3][DEBUG ] create a done file to avoid re-doing the mon deployment

[node3][DEBUG ] create the init path if it does not exist

[node3][INFO ] Running command: ceph-mon -i node3 --public-addr 172.16.66.140

[node3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node3.asok mon_status

[node3][WARNIN] node3 is not defined in `mon initial members`

[node3][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node3.asok mon_status

[node3][DEBUG ] ********************************************************************************

[node3][DEBUG ] status for monitor: mon.node3

[node3][DEBUG ] {

[node3][DEBUG ] "election_epoch": 1,

[node3][DEBUG ] "extra_probe_peers": [],

[node3][DEBUG ] "monmap": {

[node3][DEBUG ] "created": "0.000000",

[node3][DEBUG ] "epoch": 2,

[node3][DEBUG ] "fsid": "34e6e6b5-bb3e-4185-a8ee-01837c678db4",

[node3][DEBUG ] "modified": "2015-10-22 14:37:01.386999",

[node3][DEBUG ] "mons": [

[node3][DEBUG ] {

[node3][DEBUG ] "addr": "172.16.66.140:6789/0",

[node3][DEBUG ] "name": "node3",

[node3][DEBUG ] "rank": 0

[node3][DEBUG ] },

[node3][DEBUG ] {

[node3][DEBUG ] "addr": "172.16.66.142:6789/0",

[node3][DEBUG ] "name": "node1",

[node3][DEBUG ] "rank": 1

[node3][DEBUG ] }

[node3][DEBUG ] ]

[node3][DEBUG ] },

[node3][DEBUG ] "name": "node3",

[node3][DEBUG ] "outside_quorum": [],

[node3][DEBUG ] "quorum": [],

[node3][DEBUG ] "rank": 0,

[node3][DEBUG ] "state": "electing",

[node3][DEBUG ] "sync_provider": []

[node3][DEBUG ] }

[node3][DEBUG ] ********************************************************************************

[node3][INFO ] monitor: mon.node3 is running

root@admin-node:/home/my-cluster# echo $?

0

root@admin-node:/home/my-cluster# ceph-deploy --overwrite-conf mon add node2

[ceph_deploy.conf][DEBUG ] found configuration file at: /root/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.28): /usr/bin/ceph-deploy --overwrite-conf mon add node2

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : True

[ceph_deploy.cli][INFO ] subcommand : add

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f9015ec3200>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] mon : ['node2']

[ceph_deploy.cli][INFO ] func : <function mon at 0x7f9016328e60>

[ceph_deploy.cli][INFO ] address : None

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.mon][INFO ] ensuring configuration of new mon host: node2

[ceph_deploy.admin][DEBUG ] Pushing admin keys and conf to node2

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[ceph_deploy.mon][DEBUG ] Adding mon to cluster ceph, host node2

[ceph_deploy.mon][DEBUG ] using mon address by resolving host: 172.16.66.141

[ceph_deploy.mon][DEBUG ] detecting platform for host node2 ...

[node2][DEBUG ] connected to host: node2

[node2][DEBUG ] detect platform information from remote host

[node2][DEBUG ] detect machine type

[node2][DEBUG ] find the location of an executable

[ceph_deploy.mon][INFO ] distro info: Ubuntu 14.04 trusty

[node2][DEBUG ] determining if provided host has same hostname in remote

[node2][DEBUG ] get remote short hostname

[node2][DEBUG ] adding mon to node2

[node2][DEBUG ] get remote short hostname

[node2][DEBUG ] write cluster configuration to /etc/ceph/{cluster}.conf

[node2][DEBUG ] create the mon path if it does not exist

[node2][DEBUG ] checking for done path: /var/lib/ceph/mon/ceph-node2/done

[node2][DEBUG ] done path does not exist: /var/lib/ceph/mon/ceph-node2/done

[node2][INFO ] creating keyring file: /var/lib/ceph/tmp/ceph-node2.mon.keyring

[node2][DEBUG ] create the monitor keyring file

[node2][INFO ] Running command: ceph mon getmap -o /var/lib/ceph/tmp/ceph.node2.monmap

[node2][WARNIN] got monmap epoch 2

[node2][INFO ] Running command: ceph-mon --cluster ceph --mkfs -i node2 --monmap /var/lib/ceph/tmp/ceph.node2.monmap --keyring /var/lib/ceph/tmp/ceph-node2.mon.keyring

[node2][DEBUG ] ceph-mon: set fsid to 34e6e6b5-bb3e-4185-a8ee-01837c678db4

[node2][DEBUG ] ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node2 for mon.node2

[node2][INFO ] unlinking keyring file /var/lib/ceph/tmp/ceph-node2.mon.keyring

[node2][DEBUG ] create a done file to avoid re-doing the mon deployment

[node2][DEBUG ] create the init path if it does not exist

[node2][INFO ] Running command: ceph-mon -i node2 --public-addr 172.16.66.141

[node2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status

[node2][WARNIN] node2 is not defined in `mon initial members`

[node2][INFO ] Running command: ceph --cluster=ceph --admin-daemon /var/run/ceph/ceph-mon.node2.asok mon_status

[node2][DEBUG ] ********************************************************************************

[node2][DEBUG ] status for monitor: mon.node2

[node2][DEBUG ] {

[node2][DEBUG ] "election_epoch": 1,

[node2][DEBUG ] "extra_probe_peers": [

[node2][DEBUG ] "172.16.66.140:6789/0"

[node2][DEBUG ] ],

[node2][DEBUG ] "monmap": {

[node2][DEBUG ] "created": "0.000000",

[node2][DEBUG ] "epoch": 3,

[node2][DEBUG ] "fsid": "34e6e6b5-bb3e-4185-a8ee-01837c678db4",

[node2][DEBUG ] "modified": "2015-10-22 14:37:19.313321",

[node2][DEBUG ] "mons": [

[node2][DEBUG ] {

[node2][DEBUG ] "addr": "172.16.66.140:6789/0",

[node2][DEBUG ] "name": "node3",

[node2][DEBUG ] "rank": 0

[node2][DEBUG ] },

[node2][DEBUG ] {

[node2][DEBUG ] "addr": "172.16.66.141:6789/0",

[node2][DEBUG ] "name": "node2",

[node2][DEBUG ] "rank": 1

[node2][DEBUG ] },

[node2][DEBUG ] {

[node2][DEBUG ] "addr": "172.16.66.142:6789/0",

[node2][DEBUG ] "name": "node1",

[node2][DEBUG ] "rank": 2

[node2][DEBUG ] }

[node2][DEBUG ] ]

[node2][DEBUG ] },

[node2][DEBUG ] "name": "node2",

[node2][DEBUG ] "outside_quorum": [],

[node2][DEBUG ] "quorum": [],

[node2][DEBUG ] "rank": 1,

[node2][DEBUG ] "state": "electing",

[node2][DEBUG ] "sync_provider": []

[node2][DEBUG ] }

[node2][DEBUG ] ********************************************************************************

[node2][INFO ] monitor: mon.node2 is running

Once you have added your new Ceph Monitors, Ceph will begin synchronizing the monitors and forming a quorum. You can check the quorum status by running:

root@admin-node:/home/my-cluster# ceph quorum_status --format json-pretty

{

"election_epoch": 8,

"quorum": [

0,

1,

2

],

"quorum_names": [

"node3",

"node2",

"node1"

],

"quorum_leader_name": "node3",

"monmap": {

"epoch": 3,

"fsid": "34e6e6b5-bb3e-4185-a8ee-01837c678db4",

"modified": "2015-10-22 14:37:19.313321",

"created": "0.000000",

"mons": [

{

"rank": 0,

"name": "node3",

"addr": "172.16.66.140:6789\/0"

},

{

"rank": 1,

"name": "node2",

"addr": "172.16.66.141:6789\/0"

},

{

"rank": 2,

"name": "node1",

"addr": "172.16.66.142:6789\/0"

}

]

}

}
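The same JSON is easy to consume from a script. A minimal sketch (summarize_quorum is a hypothetical helper) that reports the leader, how many mons are in quorum and which ones are missing; note that json.loads already turns the escaped "\/" in the addresses back into "/":

```python
import json

# Trimmed copy of the `ceph quorum_status --format json-pretty` output above.
QUORUM_STATUS = r"""
{"election_epoch": 8, "quorum": [0, 1, 2],
 "quorum_names": ["node3", "node2", "node1"],
 "quorum_leader_name": "node3",
 "monmap": {"epoch": 3, "mons": [
   {"rank": 0, "name": "node3", "addr": "172.16.66.140:6789\/0"},
   {"rank": 1, "name": "node2", "addr": "172.16.66.141:6789\/0"},
   {"rank": 2, "name": "node1", "addr": "172.16.66.142:6789\/0"}]}}
"""


def summarize_quorum(raw: str) -> dict:
    """Leader, quorum size and out-of-quorum mons from quorum_status JSON."""
    status = json.loads(raw)  # decodes the "\/" escapes to "/"
    mons = status["monmap"]["mons"]
    in_quorum = set(status["quorum"])  # ranks of the mons in quorum
    return {
        "leader": status["quorum_leader_name"],
        "in_quorum": len(in_quorum),
        "total": len(mons),
        "out_of_quorum": sorted(m["name"] for m in mons if m["rank"] not in in_quorum),
        "addrs": {m["name"]: m["addr"] for m in mons},
    }


print(summarize_quorum(QUORUM_STATUS)["leader"])  # node3
```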

watch live cluster changes

root@admin-node:~# ceph -w

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_OK

monmap e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}

election epoch 42, quorum 0,1,2 node3,node2,node1

osdmap e63: 3 osds: 3 up, 3 in

pgmap v918: 104 pgs, 6 pools, 848 bytes data, 43 objects

19831 MB used, 1376 GB / 1470 GB avail

104 active+clean

2015-10-23 12:58:26.605512 mon.0 [INF] pgmap v918: 104 pgs: 104 active+clean; 848 bytes data, 19831 MB used, 1376 GB / 1470 GB avail

-s, --status show cluster status

-w, --watch watch live cluster changes

--watch-debug watch debug events

--watch-info watch info events

--watch-sec watch security events

--watch-warn watch warn events

--watch-error watch error events

--version, -v display version

--verbose make verbose

--concise make less verbose

【Cluster management】

【Cluster usage statistics】

%USED: the pool's usage as a percentage of the whole cluster's capacity

root@node3:~# ceph df

GLOBAL:

SIZE      AVAIL     RAW USED     %RAW USED

1470G     1375G     19961M       1.33

POOLS:

NAME             ID     USED       %USED     MAX AVAIL     OBJECTS

rbd              0      0          0         687G          0

.rgw.root        1      848        0         687G          3

.rgw.control     2      0          0         687G          8

.rgw             3      0          0         687G          0

.rgw.gc          4      0          0         687G          32

.users.uid       5      0          0         687G          0

test             6      44892k     0         687G          1

root@node3:~# ceph df detail

GLOBAL:

SIZE      AVAIL     RAW USED     %RAW USED     OBJECTS

1470G     1375G     19961M       1.33          44

POOLS:

NAME             ID     CATEGORY     USED       %USED     MAX AVAIL     OBJECTS     DIRTY     READ     WRITE

rbd              0      -            0          0         687G          0           0         0        0

.rgw.root        1      -            848        0         687G          3           3         0        3

.rgw.control     2      -            0          0         687G          8           8         0        0

.rgw             3      -            0          0         687G          0           0         0        0

.rgw.gc          4      -            0          0         687G          32          32        1997     1344

.users.uid       5      -            0          0         687G          0           0         0        0

test             6      -            44892k     0         687G          1           1         0        11

root@node3:~#
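The numbers in this output are related by simple arithmetic. A sketch under stated assumptions: binary units (1 G = 1024 M), and a deliberately simplified MAX AVAIL that just divides AVAIL by the replica count of 2 from ceph.conf. Ceph's real MAX AVAIL also depends on the CRUSH rule and on the fullest OSD, so treat the second figure only as a rough cross-check of the 687G column.

```python
# Figures copied from the `ceph df` output above.
SIZE_GB, AVAIL_GB, RAW_USED_MB = 1470, 1375, 19961
POOL_OBJECTS = {
    "rbd": 0, ".rgw.root": 3, ".rgw.control": 8, ".rgw": 0,
    ".rgw.gc": 32, ".users.uid": 0, "test": 1,
}
REPLICAS = 2  # "osd pool default size = 2" in ceph.conf


def raw_used_percent(raw_used_mb: float, size_gb: float) -> float:
    """%RAW USED = raw space used / total raw size (assuming 1 G = 1024 M)."""
    return round(100 * raw_used_mb / (size_gb * 1024), 2)


def rough_max_avail_gb(avail_gb: float, replicas: int) -> float:
    """Simplified estimate: every byte written to a replicated pool consumes
    `replicas` bytes of raw space. Not Ceph's exact MAX AVAIL formula."""
    return avail_gb / replicas


print(raw_used_percent(RAW_USED_MB, SIZE_GB))  # 1.33
print(rough_max_avail_gb(AVAIL_GB, REPLICAS))  # 687.5
print(sum(POOL_OBJECTS.values()))              # 44, the GLOBAL OBJECTS column
```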

【MON: quorum_leader failover demo】

There are now 3 mons.

Cluster mon status:

The current quorum_leader is node3:

root@admin-node:/home/my-cluster# ceph quorum_status

{"election_epoch":60,"quorum":[0,1,2],"quorum_names":["node3","node2","node1"],

"quorum_leader_name":"node3",

"monmap":{"epoch":3,"fsid":"34e6e6b5-bb3e-4185-a8ee-01837c678db4",

"modified":"2015-10-22 14:37:19.313321","created":"0.000000",

"mons":[

{"rank":0,"name":"node3","addr":"172.16.66.140:6789\/0"},

{"rank":1,"name":"node2","addr":"172.16.66.141:6789\/0"},

{"rank":2,"name":"node1","addr":"172.16.66.142:6789\/0"}]}}

"quorum_leader_name":"node3"

Stop the mon on node3:

root@node3:~# stop ceph-mon id=node3

ceph-mon stop/waiting

Health check:

root@node3:~# ceph health

HEALTH_WARN 1 mons down, quorum 1,2 node2,node1

root@node3:~# ceph health detail

HEALTH_WARN 1 mons down, quorum 1,2 node2,node1

mon.node3 (rank 0) addr 172.16.66.140:6789/0 is down (out of quorum)

root@node3:~# ceph -s

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_WARN

1 mons down, quorum 1,2 node2,node1

monmap e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}

election epoch 62, quorum 1,2 node2,node1

osdmap e89: 3 osds: 3 up, 3 in

pgmap v2010: 114 pgs, 7 pools, 44892 kB data, 44 objects

19961 MB used, 1375 GB / 1470 GB avail

114 active+clean

Check the cluster mon status

The quorum_leader has now moved to node2:

root@node3:~# ceph quorum_status

{"election_epoch":62,"quorum":[1,2],"quorum_names":["node2","node1"],"quorum_leader_name":"node2","monmap":{"epoch":3,"fsid":"34e6e6b5-bb3e-4185-a8ee-01837c678db4","modified":"2015-10-22 14:37:19.313321","created":"0.000000","mons":[{"rank":0,"name":"node3","addr":"172.16.66.140:6789\/0"},{"rank":1,"name":"node2","addr":"172.16.66.141:6789\/0"},{"rank":2,"name":"node1","addr":"172.16.66.142:6789\/0"}]}}

root@node3:~#

Start the mon again:

root@node3:~# start ceph-mon id=node3

ceph-mon (ceph/node3) start/running, process 2459

Check the cluster mon status

The quorum_leader is back on node3:

root@node3:~# ceph quorum_status

{"election_epoch":64,"quorum":[0,1,2],"quorum_names":["node3","node2","node1"],"quorum_leader_name":"node3","monmap":{"epoch":3,"fsid":"34e6e6b5-bb3e-4185-a8ee-01837c678db4","modified":"2015-10-22 14:37:19.313321","created":"0.000000","mons":[{"rank":0,"name":"node3","addr":"172.16.66.140:6789\/0"},{"rank":1,"name":"node2","addr":"172.16.66.141:6789\/0"},{"rank":2,"name":"node1","addr":"172.16.66.142:6789\/0"}]}}
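The node3 → node2 → node3 sequence is what Ceph's classic monitor election predicts: the lowest-ranked monitor that is in quorum becomes leader (in this monmap the ranks follow IP address order, so node3 at .140 is rank 0). A small sketch, with the hypothetical helper expected_leader, that checks this rule against the two quorum_status outputs above:

```python
import json


def expected_leader(raw_status: str) -> str:
    """Name of the lowest-ranked monitor that is currently in quorum."""
    status = json.loads(raw_status)
    rank_to_name = {m["rank"]: m["name"] for m in status["monmap"]["mons"]}
    return rank_to_name[min(status["quorum"])]


# Trimmed from the two `ceph quorum_status` outputs above.
MONS = ('"monmap":{"mons":[{"rank":0,"name":"node3"},'
        '{"rank":1,"name":"node2"},{"rank":2,"name":"node1"}]}')
NODE3_STOPPED = '{"quorum":[1,2],"quorum_leader_name":"node2",' + MONS + '}'
NODE3_BACK = '{"quorum":[0,1,2],"quorum_leader_name":"node3",' + MONS + '}'

for raw in (NODE3_STOPPED, NODE3_BACK):
    reported = json.loads(raw)["quorum_leader_name"]
    print(reported, expected_leader(raw) == reported)  # "node2 True", then "node3 True"
```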

Health check:

root@node3:~# ceph -s

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_OK

monmap e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}

election epoch 64, quorum 0,1,2 node3,node2,node1

osdmap e89: 3 osds: 3 up, 3 in

pgmap v2023: 114 pgs, 7 pools, 44892 kB data, 44 objects

19965 MB used, 1375 GB / 1470 GB avail

114 active+clean

【Mon quorum: a strict majority of the mons (more than half, not literally 51%) must be reachable】

The cluster is currently healthy, with 3 mons:

root@admin-node:/home/my-cluster# ceph -s

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_OK

monmap e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}

election epoch 64, quorum 0,1,2 node3,node2,node1

osdmap e89: 3 osds: 3 up, 3 in

pgmap v2034: 114 pgs, 7 pools, 44892 kB data, 44 objects

19960 MB used, 1375 GB / 1470 GB avail

114 active+clean

Stop the mon on node1:

root@node1:~# stop ceph-mon id=node1

ceph-mon stop/waiting

Then stop the mon on node2:

root@node2:~# stop ceph-mon id=node2

ceph-mon stop/waiting

root@admin-node:/home/my-cluster# ceph -s

2015-10-26 15:28:43.221625 7fc2f84f1700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2f405d280 sd=3 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2f40594a0).fault

2015-10-26 15:28:46.221965 7fc2f83f0700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2e8000c00 sd=4 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2e8004ef0).fault

2015-10-26 15:28:49.222358 7fc2f84f1700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2e80081b0 sd=4 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2e800c450).fault

2015-10-26 15:28:52.222802 7fc2f83f0700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2e8000c00 sd=4 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2e8006610).fault

2015-10-26 15:28:55.223324 7fc2f84f1700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2e80081b0 sd=4 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2e80058b0).fault

2015-10-26 15:28:58.223841 7fc2f83f0700 0 -- :/1003429 >> 172.16.66.142:6789/0 pipe(0x7fc2e8000c00 sd=4 :0 s=1 pgs=0 cs=0 l=1 c=0x7fc2e8006f80).fault

At this point only node3's mon is running, and 1 of 3 mons cannot form a quorum. The client can no longer reach the cluster and keeps printing these fault messages endlessly.

Start the mons that were just stopped and the cluster returns to normal. (Strictly, restarting either one of them is already enough, because 2 of 3 mons form a quorum.)
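The "51%" rule is really a strict majority: floor(n/2) + 1 mons of the monmap must be up. The sketch below (function names are hypothetical) reproduces the demo: with 3 mons, 2 running keeps the quorum and 1 does not. It also shows why an even mon count buys nothing: 4 mons tolerate only 1 failure, the same as 3, which is why odd counts are the usual recommendation.

```python
def quorum_size(num_mons: int) -> int:
    """Smallest strict majority of the monmap: floor(n / 2) + 1."""
    return num_mons // 2 + 1


def has_quorum(num_mons: int, num_running: int) -> bool:
    """True if the running mons form a strict majority."""
    return num_running >= quorum_size(num_mons)


def tolerated_failures(num_mons: int) -> int:
    """How many mons can be down while a quorum is still possible."""
    return num_mons - quorum_size(num_mons)


for running in (3, 2, 1):
    print(running, "of 3 mons up -> quorum:", has_quorum(3, running))
```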

On node1:

root@node1:~# start ceph-mon id=node1

ceph-mon (ceph/node1) start/running, process 3028

On node2:

root@node2:~# start ceph-mon id=node2

ceph-mon (ceph/node2) start/running, process 2305

The cluster immediately returns to HEALTH_OK:

root@admin-node:/home/my-cluster# ceph -w

cluster 34e6e6b5-bb3e-4185-a8ee-01837c678db4

health HEALTH_OK

monmap e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}

election epoch 90, quorum 0,1,2 node3,node2,node1

osdmap e89: 3 osds: 3 up, 3 in

pgmap v2052: 114 pgs, 7 pools, 44892 kB data, 44 objects

19964 MB used, 1375 GB / 1470 GB avail

114 active+clean

Check the cluster mon status

The quorum_leader is back on node3:

root@admin-node:/home/my-cluster# ceph quorum_status

{"election_epoch":90,"quorum":[0,1,2],"quorum_names":["node3","node2","node1"],"quorum_leader_name":"node3","monmap":{"epoch":3,"fsid":"34e6e6b5-bb3e-4185-a8ee-01837c678db4","modified":"2015-10-22 14:37:19.313321","created":"0.000000","mons":[{"rank":0,"name":"node3","addr":"172.16.66.140:6789\/0"},{"rank":1,"name":"node2","addr":"172.16.66.141:6789\/0"},{"rank":2,"name":"node1","addr":"172.16.66.142:6789\/0"}]}}

Mon status:

root@admin-node:/home/my-cluster# ceph mon stat

e3: 3 mons at {node1=172.16.66.142:6789/0,node2=172.16.66.141:6789/0,node3=172.16.66.140:6789/0}, election epoch 90, quorum 0,1,2 node3,node2,node1

Detailed mon status:

fsid: the cluster's unique ID

last_changed: time of the last modification

root@admin-node:/home/my-cluster# ceph mon dump

dumped monmap epoch 3

epoch 3

fsid 34e6e6b5-bb3e-4185-a8ee-01837c678db4

last_changed 2015-10-22 14:37:19.313321

created 0.000000

0: 172.16.66.140:6789/0 mon.node3

1: 172.16.66.141:6789/0 mon.node2

2: 172.16.66.142:6789/0 mon.node1
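The plain-text mon dump has two kinds of lines: "key value" header lines and one "rank: address mon.name" line per monitor. A minimal parser sketch (parse_mon_dump is a hypothetical helper, written against the Hammer output above):

```python
import re

# One monitor per line: "<rank>: <addr> mon.<name>"
MON_LINE = re.compile(r"^(\d+): (\S+) mon\.(\S+)$")
HEADER_KEYS = ("epoch", "fsid", "last_changed", "created")


def parse_mon_dump(text: str) -> dict:
    """Parse plain-text `ceph mon dump` output into headers and (rank, name, addr)."""
    info = {"mons": []}
    for line in text.splitlines():
        line = line.strip()
        m = MON_LINE.match(line)
        if m:
            info["mons"].append((int(m.group(1)), m.group(3), m.group(2)))
            continue
        key, _, value = line.partition(" ")
        if key in HEADER_KEYS:  # skips "dumped monmap epoch 3"
            info[key] = value
    return info


DUMP = """\
dumped monmap epoch 3
epoch 3
fsid 34e6e6b5-bb3e-4185-a8ee-01837c678db4
last_changed 2015-10-22 14:37:19.313321
created 0.000000
0: 172.16.66.140:6789/0 mon.node3
1: 172.16.66.141:6789/0 mon.node2
2: 172.16.66.142:6789/0 mon.node1
"""

print(parse_mon_dump(DUMP)["mons"][0])  # (0, 'node3', '172.16.66.140:6789/0')
```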

【OSD management】

Show OSD details: location, ID, weight and host

root@admin-node:/home/my-cluster# ceph osd tree

ID WEIGHT  TYPE NAME      UP/DOWN REWEIGHT PRIMARY-AFFINITY

-1 1.43999 root default

-2 0.48000     host node2

0  0.48000         osd.0       up  1.00000          1.00000

-3 0.48000     host node3

1  0.48000         osd.1       up  1.00000          1.00000

-4 0.48000     host node1

2  0.48000         osd.2       up  1.00000
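In this tree, negative IDs are CRUSH buckets (the root and one host per node) and non-negative IDs are OSDs belonging to the host listed just above them. A sketch of a parser built on that observation (parse_osd_tree is hypothetical, and it only handles the flat root/host/osd layout shown here); the host weights add up to 1.44, matching the root's 1.43999 up to rounding:

```python
def parse_osd_tree(text: str) -> dict:
    """Map each host bucket to its CRUSH weight and its OSDs' up/down status."""
    hosts = {}
    current_host = None
    for line in text.splitlines():
        fields = line.split()
        if not fields or fields[0] == "ID":
            continue  # skip blank lines and the header row
        item_id, weight = int(fields[0]), float(fields[1])
        if item_id < 0:  # negative IDs are CRUSH buckets (root, host, ...)
            if fields[2] == "host":
                current_host = fields[3]
                hosts[current_host] = {"weight": weight, "osds": {}}
        else:  # non-negative IDs are OSDs under the most recent host
            hosts[current_host]["osds"][fields[2]] = fields[3]
    return hosts


TREE = """\
ID WEIGHT  TYPE NAME      UP/DOWN REWEIGHT PRIMARY-AFFINITY
-1 1.43999 root default
-2 0.48000     host node2
 0 0.48000         osd.0       up  1.00000          1.00000
-3 0.48000     host node3
 1 0.48000         osd.1       up  1.00000          1.00000
-4 0.48000     host node1
 2 0.48000         osd.2       up  1.00000
"""

hosts = parse_osd_tree(TREE)
print(hosts["node1"])  # {'weight': 0.48, 'osds': {'osd.2': 'up'}}
print(round(sum(h["weight"] for h in hosts.values()), 2))  # 1.44
```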

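An OSD is always in one of 4 states:

up,in: the OSD is running normally and holds data for at least one PG. This is the normal state.

up,out: the OSD is running normally but holds no PGs.

down,in: the OSD has failed but still holds data for at least one PG. This is an abnormal state.

down,out: the OSD has failed completely and no longer holds any PGs.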
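The four states are just the combinations of two independent flags, and each OSD line of ceph osd dump starts with exactly those flags (for example "osd.0 down out weight 0 ..."). A sketch mapping a dump line onto the four cases above; both function names are hypothetical:

```python
def osd_state_from_dump_line(line: str) -> tuple:
    """Read name, up/down and in/out from a `ceph osd dump` OSD line."""
    name, updown, inout = line.split()[:3]
    return name, updown == "up", inout == "in"


def describe_osd_state(up: bool, is_in: bool) -> str:
    """Map the two flags onto the four cases listed above."""
    if up and is_in:
        return "up,in: running and holding PG data (normal)"
    if up:
        return "up,out: running but holding no PGs"
    if is_in:
        return "down,in: failed but still holding PG data (abnormal)"
    return "down,out: failed and holding no PGs"


line = "osd.0 down out weight 0 up_from 0 up_thru 0 down_at 0"
name, up, is_in = osd_state_from_dump_line(line)
print(name, "->", describe_osd_state(up, is_in))
```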

【up,in --> up,out】

Mark osd.2 out:

root@node1:~# ceph osd out osd.2

osd.2 is already out.

To return it to the normal state (up,out --> up,in), run:

ceph osd in osd.2

【Remove an OSD】

root@ceph-node0-admin0:~# ceph osd dump

epoch 15

fsid 78f1fdb8-d1ec-419d-be06-9861a6c942d0

created 2015-11-12 08:46:21.378927

modified 2015-11-12 12:52:25.377917

flags sortbitwise

pool 0 'rbd' replicated size 3 min_size 2 crush_ruleset 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 1 flags hashpspool stripe_width 0

max_osd 4

osd.0 down out weight 0 up_from 0 up_thru 0 down_at 0 last_clean_interval [0,0) :/0 :/0 :/0 :/0 exists,new 073a0b01-158e-4387-8785-ca3b36e53965

osd.1 up in weight 1 up_from 9 up_thru 14 down_at 0 last_clean_interval [0,0) 192.168.10.65:6800/1965 192.168.10.65:6801/1965 192.168.10.65:6802/1965 192.168.10.65:6803/1965 exists,up d228c73b-15d2-4015-8373-5561b4b91816

osd.2 up in weight 1 up_from 6 up_thru 14 down_at 0 last_clean_interval [0,0) 192.168.10.62:6800/3859 192.168.10.62:6801/3859 192.168.10.62:6802/3859 192.168.10.62:6803/3859 exists,up 521a39a6-b912-4043-b926-e059020178a3

osd.3 up in weight 1 up_from 14 up_thru 14 down_at 0 last_clean_interval [0,0) 192.168.10.66:6800/1781 192.168.10.66:6801/1781 192.168.10.66:6802/1781 192.168.10.66:6803/1781 exists,up 3b9cfeb8-e5da-4700-a7f4-f1b3601307fb

[Remove the OSD from the CRUSH map]

root@ceph-node0-admin0:~# ceph osd crush remove osd.0

device 'osd.0' does not appear in the crush map

[Remove the OSD authentication key]

root@ceph-node0-admin0:~# ceph auth del osd.0

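entity osd.0 does not exist

Both commands report that osd.0 does not exist because the dump above lists it as down out with the flags exists,new. Its OSD id was allocated, but the OSD was never activated, so it had no CRUSH entry and no key to remove.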

[Remove the OSD.]

root@ceph-node0-admin0:~# ceph osd rm 0

removed osd.0
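You can wrap the three cleanup commands above in a function so they always run in the same order for a given OSD id. This sketch makes some assumptions. remove_osd and DRY_RUN are hypothetical names, and by default the function only prints the commands. It also assumes the OSD has already been marked out, the cluster has rebalanced, and the OSD's ceph-osd daemon has been stopped, for example with stop ceph-osd id={id} on Upstart.

```shell
#!/bin/sh
# remove_osd <id>  (hypothetical helper)
# Prints (default, DRY_RUN=1) or runs (DRY_RUN=0) the cleanup steps shown above.
# Assumes the OSD is already out and its ceph-osd daemon is stopped.
remove_osd() {
  local id=$1 run=echo
  case $id in
    '' | *[!0-9]*)
      echo "usage: remove_osd <numeric-osd-id>" >&2
      return 2 ;;
  esac
  if [ "${DRY_RUN:-1}" = 0 ]; then run=; fi
  $run ceph osd crush remove "osd.$id"  # remove it from the CRUSH map
  $run ceph auth del "osd.$id"          # delete its authentication key
  $run ceph osd rm "$id"                # remove it from the OSD map
}

# remove_osd 0            # print the commands for osd.0
# DRY_RUN=0 remove_osd 0  # actually run them
```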

root@ceph-node0-admin0:~# ceph -s

cluster 78f1fdb8-d1ec-419d-be06-9861a6c942d0

health HEALTH_OK

monmap e1: 1 mons at {cephnode1mon0=192.168.10.61:6789/0}

election epoch 1, quorum 0 cephnode1mon0

osdmap e16: 3 osds: 3 up, 3 in

flags sortbitwise

pgmap v43: 64 pgs, 1 pools, 0 bytes data, 0 objects

16520 MB used, 870 GB / 892 GB avail

64 active+clean
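After you remove an OSD, the osdmap line of ceph -s gives a quick check that every remaining OSD is both up and in. This sketch assumes the "osdmap eN: X osds: Y up, Z in" wording shown above; other Ceph releases may format ceph -s differently. osdmap_all_up_in is a hypothetical name.

```shell
#!/bin/sh
# osdmap_all_up_in "<osdmap line from ceph -s>"  (hypothetical helper)
# Prints the three counts and returns 0 only if every OSD is up and in.
osdmap_all_up_in() {
  local counts
  counts=$(printf '%s\n' "$1" |
    sed -n 's/.*: \([0-9]*\) osds: \([0-9]*\) up, \([0-9]*\) in.*/\1 \2 \3/p')
  [ -n "$counts" ] || return 2
  set -- $counts  # $1 = total, $2 = up, $3 = in
  echo "total=$1 up=$2 in=$3"
  [ "$2" -eq "$1" ] && [ "$3" -eq "$1" ]
}

# osdmap_all_up_in "$(ceph -s | grep osdmap)"
```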

View detailed OSD information. The output shows each OSD's ID and addresses, and the number of PGs (pg_num) in each pool:

root@admin-node:/home/my-cluster# ceph osd dump

epoch 89

fsid 34e6e6b5-bb3e-4185-a8ee-01837c678db4

created 2015-10-22 12:14:52.060418

modified 2015-10-26 10:46:10.360876

flags

pool 0 'rbd' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 64 pgp_num 64 last_change 1 flags hashpspool stripe_width 0

pool 1 '.rgw.root' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 14 owner 18446744073709551615 flags hashpspool stripe_width 0

pool 2 '.rgw.control' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 16 owner 18446744073709551615 flags hashpspool stripe_width 0

pool 3 '.rgw' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 18 owner 18446744073709551615 flags hashpspool stripe_width 0

pool 4 '.rgw.gc' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 19 owner 18446744073709551615 flags hashpspool stripe_width 0

pool 5 '.users.uid' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 8 pgp_num 8 last_change 20 owner 18446744073709551615 flags hashpspool stripe_width 0

pool 6 'test' replicated size 2 min_size 1 crush_ruleset 0 object_hash rjenkins pg_num 10 pgp_num 10 last_change 64 flags hashpspool stripe_width 0

max_osd 3

osd.0 up in weight 1 up_from 88 up_thru 88 down_at 87 last_clean_interval [52,86) 172.16.66.141:6800/1273 172.16.66.141:6801/1273 172.16.66.141:6802/1273 172.16.66.141:6803/1273 exists,up 305b777a-8376-41a2-a11b-4bd51de4bfe2

osd.1 up in weight 1 up_from 88 up_thru 88 down_at 87 last_clean_interval [51,86) 172.16.66.140:6800/1340 172.16.66.140:6801/1340 172.16.66.140:6802/1340 172.16.66.140:6803/1340 exists,up a7fc8f11-699e-41ec-8dec-20b2747c898e

osd.2 up in weight 1 up_from 88 up_thru 88 down_at 87 last_clean_interval [51,86) 172.16.66.142:6801/1323 172.16.66.142:6802/1323 172.16.66.142:6803/1323 172.16.66.142:6804/1323 exists,up eb841029-51db-4b38-8734-28655b294308

From this you can see which host IP each OSD runs on:

osd.0 172.16.66.141

osd.1 172.16.66.140

osd.2 172.16.66.142
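You can extract the same OSD-to-IP mapping with awk, because ceph osd dump prints one osd.N entry per line. This sketch takes the first IP:port/nonce field of each entry as the OSD's public address, which matches the field order shown above. It skips OSDs that have no address yet, such as the ":/0" entries of an OSD that was never started. osd_public_ips is a hypothetical name.

```shell
#!/bin/sh
# osd_public_ips (hypothetical helper): read `ceph osd dump` output on stdin
# and print "osd.N <ip>" using the first IP:port/nonce field of each OSD line.
# OSDs without an address yet (":/0") produce no output.
osd_public_ips() {
  awk '/^osd\.[0-9]+ / {
    for (i = 2; i <= NF; i++) {
      if ($i ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+:[0-9]+\//) {
        split($i, addr, ":")
        print $1, addr[1]
        break
      }
    }
  }'
}

# ceph osd dump | osd_public_ips
```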

List the pools:

root@admin-node:/home/my-cluster# ceph osd lspools

0 rbd,1 .rgw.root,2 .rgw.control,3 .rgw,4 .rgw.gc,5 .users.uid,6 test,
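ceph osd lspools prints comma-separated "<id> <name>" pairs. The pool id is the prefix of every PG id in that pool: pool test has id 6, so its PGs are named 6.x. The sketch below splits the list and looks up a pool's id by name. split_lspools and pool_id are hypothetical names.

```shell
#!/bin/sh
# split_lspools (hypothetical): read `ceph osd lspools` output on stdin and
# print one "<id> <name>" pair per line. Also accepts one-pool-per-line output.
split_lspools() {
  tr ',' '\n' | awk 'NF == 2 { print $1, $2 }'
}

# pool_id <name> (hypothetical): print the numeric id of the named pool.
pool_id() {
  split_lspools | awk -v name="$1" '$2 == name { print $1 }'
}

# ceph osd lspools | pool_id test
```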

Delete an object (my-object in the test pool):

root@admin-node:/home/my-cluster# rados rm my-object --pool=test

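Set the number of copies (size) the pool keeps of each object. If size is larger than the number of OSDs, the cluster reports health HEALTH_WARN: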

root@admin-node:/home/my-cluster# ceph osd pool set test size 4

set pool 6 size to 4
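Before you raise size, it helps to compare it with the number of OSDs available. check_pool_size is a hypothetical name. It compares against a plain OSD count, which matches this cluster's one-OSD-per-host layout. The default CRUSH rule places replicas on different hosts, so in general the real limit is the number of hosts.

```shell
#!/bin/sh
# check_pool_size <size> <osd_count>  (hypothetical helper)
# Returns 1 and prints a warning when the requested replica count cannot be
# satisfied by the available OSDs (the situation that leads to HEALTH_WARN).
check_pool_size() {
  if [ "$1" -gt "$2" ]; then
    echo "WARN: size $1 > $2 OSDs; expect HEALTH_WARN"
    return 1
  fi
  echo "OK: size $1 <= $2 OSDs"
}

# size=$(ceph osd pool get test size | awk '{print $2}')
# check_pool_size "$size" 3
```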

Get the pool's copy count:

root@admin-node:/home/my-cluster# ceph osd pool get test size

size: 4

Get the pool's pg_num and pgp_num:

root@admin-node:/home/my-cluster# ceph osd pool get test pg_num

pg_num: 10

root@admin-node:/home/my-cluster# ceph osd pool get test pgp_num

pgp_num: 10
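pgp_num is normally kept equal to pg_num. After pg_num is increased, the new PGs are not redistributed across OSDs until pgp_num is raised to match. The sketch below parses the "key: value" lines printed above and compares the two values. pool_get_value and check_pg_pgp are hypothetical names.

```shell
#!/bin/sh
# pool_get_value (hypothetical): print the value from one "key: value" line,
# e.g. the "pg_num: 10" printed by `ceph osd pool get test pg_num`.
pool_get_value() {
  awk -F': ' 'NR == 1 { print $2 }'
}

# check_pg_pgp <pg_num> <pgp_num> (hypothetical): returns 1 if they differ.
check_pg_pgp() {
  if [ "$1" -ne "$2" ]; then
    echo "pg_num=$1 pgp_num=$2 differ; raise pgp_num to $1"
    return 1
  fi
  echo "pg_num=$1 pgp_num=$2 OK"
}

# pg=$(ceph osd pool get test pg_num | pool_get_value)
# pgp=$(ceph osd pool get test pgp_num | pool_get_value)
# check_pg_pgp "$pg" "$pgp"
```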

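Actual number of PGs of a pool in the Ceph cluster: on every OSD disk, count the folders that belong to the pool id, excluding the temp folders. Divide each count by the pool's copy count (size), and add the results:

$$N_{\mathrm{pg}} = \frac{d_1}{\mathrm{size}} + \frac{d_2}{\mathrm{size}} + \cdots + \frac{d_n}{\mathrm{size}} = \frac{1}{\mathrm{size}}\sum_{i=1}^{n} d_i$$

where $d_i$ is the number of folders for the pool id on OSD $i$ and $n$ is the number of OSDs.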
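The counting rule above can be scripted. This sketch rests on several assumptions. It assumes FileStore OSDs, whose current/ directory holds one "<pool id>.<pg>_head" directory per PG copy, plus _TEMP directories, which are skipped. It also assumes every PG has all size copies placed. estimate_pg_count is a hypothetical name. The function only sees the directories passed to it. On a multi-node cluster, run it with size 1 on each node to get the raw count, for example for /var/local/osd2, then divide the sum by the pool size yourself.

```shell
#!/bin/sh
# estimate_pg_count <pool_id> <size> <osd_data_dir>...  (hypothetical helper)
# Counts "<pool_id>.*_head" PG directories under each <osd_data_dir>/current
# (FileStore layout assumed; *_TEMP directories are not matched) and divides
# the total by the pool's replica count.
estimate_pg_count() {
  local pool_id=$1 size=$2 total=0 dir d
  shift 2
  for dir in "$@"; do
    for d in "$dir/current/$pool_id".*_head; do
      # If nothing matches, the pattern stays literal and fails the -d test.
      if [ -d "$d" ]; then total=$((total + 1)); fi
    done
  done
  echo $((total / size))
}

# estimate_pg_count 6 1 /var/local/osd2   # raw count of pool 6 dirs on node1
```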

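Each node runs at most one mon process.

Each node in this cluster also runs only one osd process, because each node hosts a single OSD. Ceph runs one ceph-osd process per OSD, so a node with several OSDs runs several.

root@node3:~# ps aux |grep ceph-mon

root@node3:~# ps aux |grep ceph-osd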

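Starting all daemons (Upstart)

To start/stop all Ceph daemons on a node, regardless of type (use either start or stop):

start/stop ceph-all

To start/stop all Ceph daemons of a particular type on a node:

start/stop ceph-osd-all

start/stop ceph-mon-all

start/stop ceph-mds-all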

Starting/stopping a single daemon

To start/stop a specific daemon instance on a Ceph node, run one of the following:

start/stop ceph-osd id={id}

start/stop ceph-mon id={hostname}

start/stop ceph-mds id={hostname}
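You can combine the per-instance Upstart commands above into a rolling restart, so that only one OSD is down at a time. rolling_restart_osds and DRY_RUN are hypothetical names. Setting the noout flag stops Ceph from marking the stopped OSDs out and moving data during the restart. In a real run you would also wait for HEALTH_OK between OSDs.

```shell
#!/bin/sh
# rolling_restart_osds <id>...  (hypothetical helper)
# Prints (default, DRY_RUN=1) or runs (DRY_RUN=0) a one-at-a-time restart of
# the given OSD ids with the Upstart commands above, wrapped in the noout flag.
rolling_restart_osds() {
  local run=echo id
  if [ "${DRY_RUN:-1}" = 0 ]; then run=; fi
  $run ceph osd set noout      # keep stopped OSDs from being marked out
  for id in "$@"; do
    $run stop ceph-osd "id=$id"
    $run start ceph-osd "id=$id"
    # In a real run, wait here until `ceph health` reports HEALTH_OK again.
  done
  $run ceph osd unset noout
}

# rolling_restart_osds 2   # print the plan for osd.2 on node1
```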

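Starting/stopping all daemons (sysvinit script)

To start/stop all Ceph daemons with the sysvinit script (-a applies the command to every host listed in ceph.conf):

/etc/init.d/ceph -a start/stop

To start/stop all Ceph daemons of a particular type on another node, use the following syntax: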

sudo /etc/init.d/ceph -a start/stop {daemon-type}

sudo /etc/init.d/ceph -a start/stop osd