RHCS Configuration on CentOS 5.8

Outline

I. Environment

II. Preparation

III. Highly available service with cman + rgmanager + NFS + httpd

IV. Command-line configuration (supplement)

I. Environment

System environment

CentOS5.8 x86_64

node1.network.com node1 172.16.1.101

node2.network.com node2 172.16.1.105

node3.network.com node3 172.16.1.106

NFS Server /www 172.16.1.102

Software versions

cman-2.0.115-124.el5.x86_64.rpm

rgmanager-2.0.52-54.el5.centos.x86_64.rpm

system-config-cluster-1.0.57-17.noarch.rpm

Topology diagram

II. Preparation

1. Time synchronization

    [root@node1 ~]# ntpdate s2c.time.edu.cn
    [root@node2 ~]# ntpdate s2c.time.edu.cn
    [root@node3 ~]# ntpdate s2c.time.edu.cn

If needed, define a crontab entry on each node:

    [root@node1 ~]# which ntpdate
    /sbin/ntpdate
    [root@node1 ~]# echo "*/5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null" >> /var/spool/cron/root
    [root@node1 ~]# crontab -l
    */5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null
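Appending with `>>` adds another copy of the line every time the command is re-run. Below is a minimal sketch of an idempotent variant. It assumes GNU grep and that editing the spool file directly is acceptable, as in the step above (`crontab -e` is the more usual route). `add_line_once` is a hypothetical helper and is not part of any package.

```sh
#!/bin/sh
# Hypothetical helper: append LINE to FILE only if FILE does not already
# contain exactly that line. A missing FILE is created.
add_line_once() {
    file=$1
    line=$2
    # -x: match the whole line, -F: fixed string (no regex), -q: no output
    if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$file"
    fi
}

# Usage on each node (path and NTP server taken from the step above):
# add_line_once /var/spool/cron/root "*/5 * * * * /sbin/ntpdate s2c.time.edu.cn &> /dev/null"
```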

2. Each hostname must match the output of uname -n and must resolve through /etc/hosts

node1:

    [root@node1 ~]# hostname node1.network.com
    [root@node1 ~]# uname -n
    node1.network.com
    [root@node1 ~]# sed -i 's@\(HOSTNAME=\).*@\1node1.network.com@g' /etc/sysconfig/network

node2:

    [root@node2 ~]# hostname node2.network.com
    [root@node2 ~]# uname -n
    node2.network.com
    [root@node2 ~]# sed -i 's@\(HOSTNAME=\).*@\1node2.network.com@g' /etc/sysconfig/network

node3:

    [root@node3 ~]# hostname node3.network.com
    [root@node3 ~]# uname -n
    node3.network.com
    [root@node3 ~]# sed -i 's@\(HOSTNAME=\).*@\1node3.network.com@g' /etc/sysconfig/network

The hostname command changes the name right away. The sed edit to /etc/sysconfig/network keeps the change across reboots.

Add the hosts entries on node1:

    [root@node1 ~]# vim /etc/hosts
    [root@node1 ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    172.16.1.101 node1.network.com node1
    172.16.1.105 node2.network.com node2
    172.16.1.110 node3.network.com node3

Note: the environment table above lists node3 as 172.16.1.106. This hosts file, and the scp output below, use 172.16.1.110 instead. That is the same address used later as the service VIP, so make sure node addresses and the VIP do not collide in your own setup.

Copy this hosts file to node2:

    [root@node1 ~]# scp /etc/hosts node2:/etc/
    The authenticity of host 'node2 (172.16.1.105)' can't be established.
    RSA key fingerprint is 13:42:92:7b:ff:61:d8:f3:7c:97:5f:22:f6:71:b3:24.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'node2,172.16.1.105' (RSA) to the list of known hosts.
    root@node2's password:
    hosts                                   100%  233     0.2KB/s   00:00

Copy this hosts file to node3:

    [root@node1 ~]# scp /etc/hosts node3:/etc/
    The authenticity of host 'node3 (172.16.1.110)' can't be established.
    RSA key fingerprint is 13:42:92:7b:ff:61:d8:f3:7c:97:5f:22:f6:71:b3:24.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'node3,172.16.1.110' (RSA) to the list of known hosts.
    hosts                                   100%  320     0.3KB/s   00:00
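The node3 address differs between the environment table (172.16.1.106) and /etc/hosts (172.16.1.110). Checking each node's entry against the intended address catches this kind of mismatch early. Below is a small sketch that assumes awk and the standard hosts-file layout. `check_host` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: succeed if HOSTS_FILE maps NAME to EXPECTED_IP.
# Comment lines are skipped; NAME may appear as any name/alias on the line.
check_host() {
    hosts_file=$1
    name=$2
    expected_ip=$3
    actual_ip=$(awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) { print $1; exit } }
    ' "$hosts_file")
    if [ "$actual_ip" = "$expected_ip" ]; then
        return 0
    fi
    echo "$name: expected $expected_ip, found ${actual_ip:-nothing}" >&2
    return 1
}

# Usage with the addresses from the environment table:
# check_host /etc/hosts node1.network.com 172.16.1.101
# check_host /etc/hosts node2.network.com 172.16.1.105
# check_host /etc/hosts node3.network.com 172.16.1.106
```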

3. SSH mutual trust (passwordless root login between nodes)

node1:

    [root@node1 ~]# ssh-keygen -t rsa -f ~/.ssh/id_rsa -P ''
    Generating public/private rsa key pair.
    /root/.ssh/id_rsa already exists.
    Overwrite (y/n)? n          # the key pair was already generated earlier
    [root@node1 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub node2
    root@node2's password:
    Now try logging into the machine, with "ssh 'node2'", and check in:
      .ssh/authorized_keys
    to make sure we haven't added extra keys that you weren't expecting.
    [root@node1 ~]# setenforce 0
    [root@node1 ~]# ssh node2 'ifconfig'
    eth0      Link encap:Ethernet  HWaddr 00:0C:29:D6:03:52
              inet addr:172.16.1.105  Bcast:255.255.255.255  Mask:255.255.255.0
              inet6 addr: fe80::20c:29ff:fed6:352/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:9881 errors:0 dropped:0 overruns:0 frame:0
              TX packets:11220 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:5898514 (5.6 MiB)  TX bytes:1850217 (1.7 MiB)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              inet6 addr: ::1/128 Scope:Host
              UP LOOPBACK RUNNING  MTU:16436  Metric:1
              RX packets:16 errors:0 dropped:0 overruns:0 frame:0
              TX packets:16 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:1112 (1.0 KiB)  TX bytes:1112 (1.0 KiB)

Repeat the same mutual-trust setup from node1 to node3, and from node2 and node3 to the other nodes. The steps are identical, so they are not shown again here.
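With three nodes, every node needs a key pair and must copy its public key to the other two. Below is a sketch of that loop. It assumes the short names node1 to node3 resolve through /etc/hosts and that `ssh-keygen` has already been run on the node. `push_keys`, `NODES` and the `RUN=echo` dry-run switch are illustrative additions, not part of the original procedure.

```sh
#!/bin/sh
# Hypothetical helper: copy this node's root public key to every other node.
# Set RUN=echo to print the commands instead of running them (dry run).
NODES="node1 node2 node3"

push_keys() {
    self=$1   # short name of the node this runs on, e.g. node1
    for n in $NODES; do
        [ "$n" = "$self" ] && continue
        ${RUN:-} ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$n"
    done
}

# Run once on each node after ssh-keygen, e.g. on node2:
# push_keys node2
```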

4. Disable iptables and SELinux

node1

    [root@node1 ~]# service iptables stop
    [root@node1 ~]# vim /etc/sysconfig/selinux
    [root@node1 ~]# cat /etc/sysconfig/selinux
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #       enforcing - SELinux security policy is enforced.
    #       permissive - SELinux prints warnings instead of enforcing.
    #       disabled - SELinux is fully disabled.
    #SELINUX=permissive
    SELINUX=disabled
    # SELINUXTYPE= type of policy in use. Possible values are:
    #       targeted - Only targeted network daemons are protected.
    #       strict - Full SELinux protection.
    SELINUXTYPE=targeted

node2

    [root@node2 ~]# service iptables stop
    [root@node2 ~]# vim /etc/sysconfig/selinux
    [root@node2 ~]# cat /etc/sysconfig/selinux
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #       enforcing - SELinux security policy is enforced.
    #       permissive - SELinux prints warnings instead of enforcing.
    #       disabled - SELinux is fully disabled.
    #SELINUX=permissive
    SELINUX=disabled
    # SELINUXTYPE= type of policy in use. Possible values are:
    #       targeted - Only targeted network daemons are protected.
    #       strict - Full SELinux protection.
    SELINUXTYPE=targeted

node3

    [root@node3 ~]# service iptables stop
    [root@node3 ~]# vim /etc/sysconfig/selinux
    [root@node3 ~]# cat /etc/sysconfig/selinux
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    #       enforcing - SELinux security policy is enforced.
    #       permissive - SELinux prints warnings instead of enforcing.
    #       disabled - SELinux is fully disabled.
    #SELINUX=permissive
    SELINUX=disabled
    # SELINUXTYPE= type of policy in use. Possible values are:
    #       targeted - Only targeted network daemons are protected.
    #       strict - Full SELinux protection.
    SELINUXTYPE=targeted
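The steps above leave out two things:

- `service iptables stop` only lasts until the next reboot. `chkconfig iptables off` keeps iptables off.
- The SELINUX=disabled edit only takes effect after a reboot. `setenforce 0` switches to permissive mode immediately.

On CentOS 5, /etc/sysconfig/selinux is normally a symlink to /etc/selinux/config. GNU `sed -i` replaces a symlink with a regular file, so the sketch below edits the real file instead. It is a minimal per-node sketch that assumes root and the stock file layout. `disable_selinux_conf`, `prepare_node` and `RUN=echo` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: set SELINUX=disabled in the given config file.
# Only the uncommented SELINUX= line changes; '#SELINUX=...' and
# 'SELINUXTYPE=...' lines are left alone.
disable_selinux_conf() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Hypothetical helper: apply the firewall/SELinux settings on the local node.
# Set RUN=echo to print the commands instead of running them (dry run).
prepare_node() {
    ${RUN:-} service iptables stop
    ${RUN:-} chkconfig iptables off                     # stay off after reboot
    ${RUN:-} setenforce 0                               # permissive now; 'disabled' needs a reboot
    ${RUN:-} disable_selinux_conf /etc/selinux/config   # real file, not the symlink
}
```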

III. Highly available service with cman + rgmanager + NFS + httpd

1. Start system-config-cluster

    [root@node1 ~]# system-config-cluster &
    [1] 3987

Enter a cluster name of your choice (this article uses tcluster).

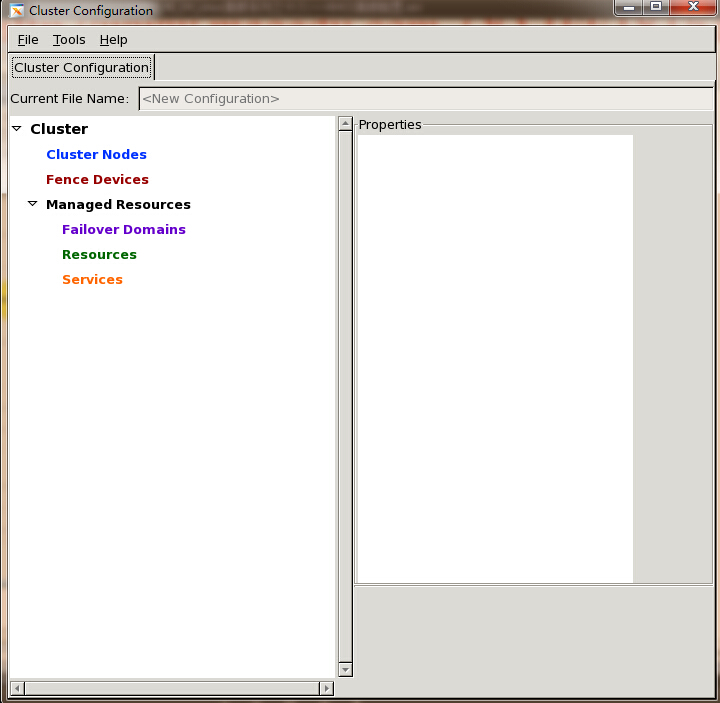

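Click OK to open the screen below.

Add the three nodes node1, node2 and node3. The cluster node names must match the output of uname -n (for example node1.network.com).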

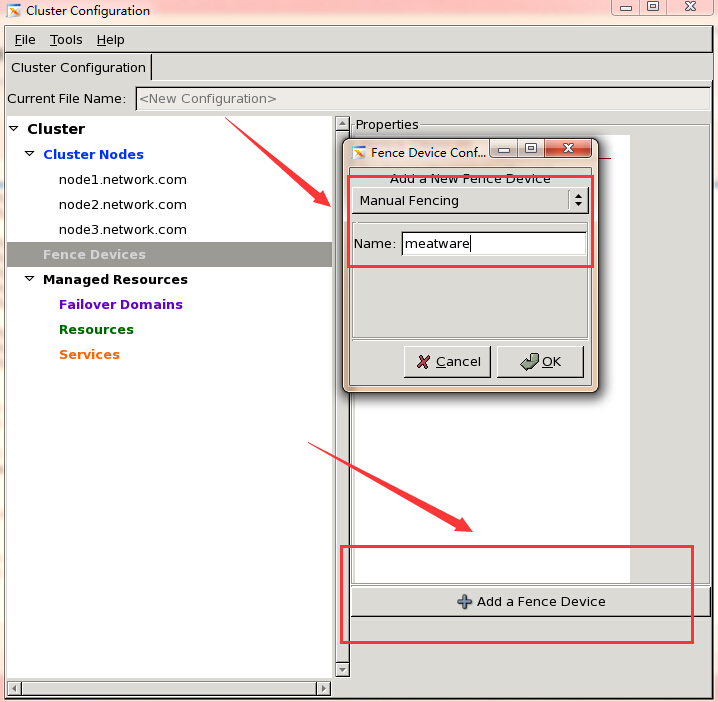

After adding the three nodes, add a fence device.

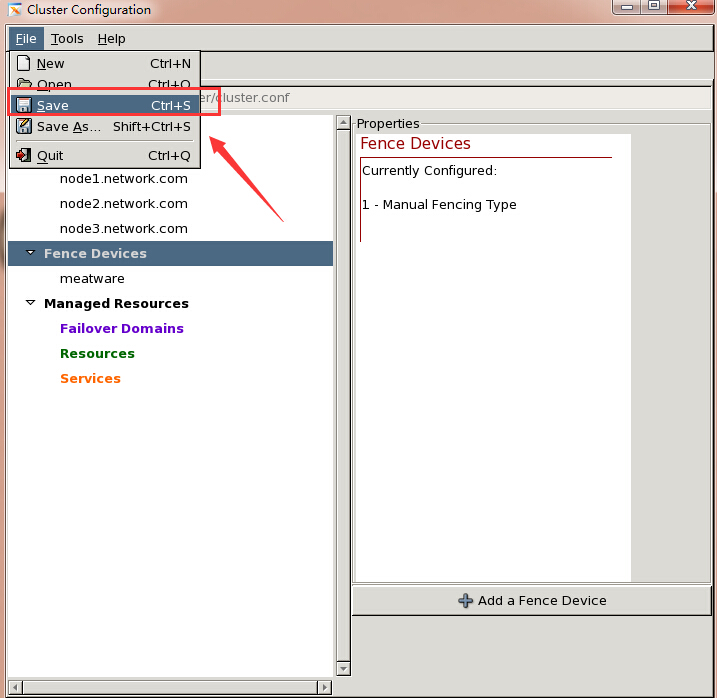

Click File --> Save.

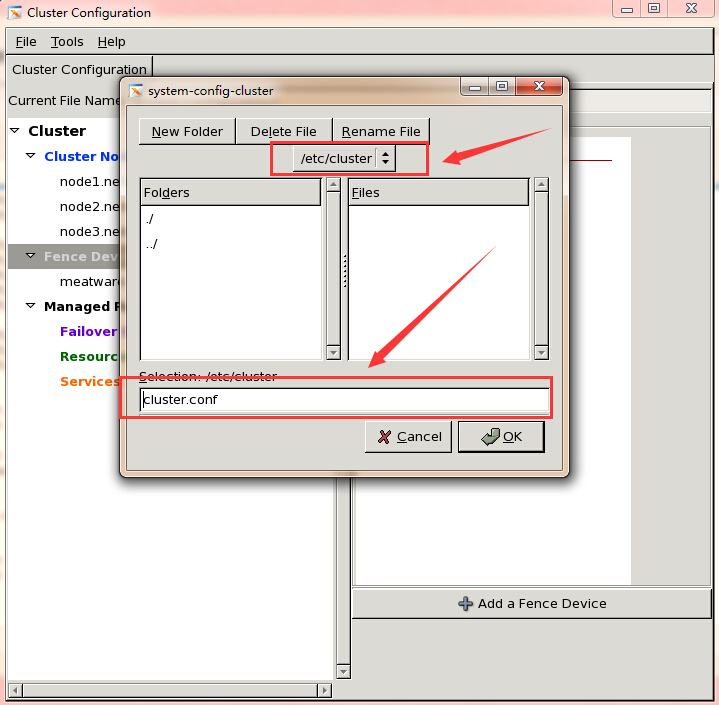

By default the file is saved as /etc/cluster/cluster.conf.

Once it is saved, you can close the GUI.
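The article doesn't show how cluster.conf reaches node2 and node3 before cman is started there. For a brand-new cluster, one common approach is simply to copy the file over. Below is a sketch that assumes the SSH trust from step 3. `push_cluster_conf`, `OTHERS` and the `RUN=echo` dry-run switch are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: copy the locally saved cluster.conf to the other nodes
# before running 'service cman start' on them. Relies on SSH trust (step 3).
# Set RUN=echo to print the commands instead of running them (dry run).
CONF=/etc/cluster/cluster.conf
OTHERS="node2 node3"

push_cluster_conf() {
    [ -r "$CONF" ] || { echo "missing $CONF" >&2; return 1; }
    for n in $OTHERS; do
        ${RUN:-} ssh "$n" mkdir -p /etc/cluster
        ${RUN:-} scp "$CONF" "$n:/etc/cluster/"
    done
}

# Usage on node1:
# push_cluster_conf
```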

2. Start the cman and rgmanager services on all three nodes

node1:

    [root@node1 ~]# service cman start
    Starting cluster:
       Loading modules... done
       Mounting configfs... done
       Starting ccsd... done
       Starting cman... done
       Starting daemons... done
       Starting fencing... done
       Tuning DLM... done
                                                               [  OK  ]
    [root@node1 ~]# service rgmanager start
    Starting Cluster Service Manager:                          [  OK  ]

node2:

    [root@node2 cluster]# service cman start
    Starting cluster:
       Loading modules... done
       Mounting configfs... done
       Starting ccsd... done
       Starting cman... done
       Starting daemons... done
       Starting fencing... done
       Tuning DLM... done
                                                               [  OK  ]
    [root@node2 cluster]# service rgmanager start
    Starting Cluster Service Manager:                          [  OK  ]

node3:

    [root@node3 ~]# service cman start
    Starting cluster:
       Loading modules... done
       Mounting configfs... done
       Starting ccsd... done
       Starting cman... done
       Starting daemons... done
       Starting fencing... done
       Tuning DLM... done
                                                               [  OK  ]
    [root@node3 ~]# service rgmanager start
    Starting Cluster Service Manager:                          [  OK  ]

Check the status of the cluster nodes:

    [root@node1 ~]# cman_tool status
    Version: 6.2.0
    Config Version: 2
    Cluster Name: tcluster
    Cluster Id: 28212
    Cluster Member: Yes
    Cluster Generation: 260
    Membership state: Cluster-Member
    Nodes: 3
    Expected votes: 3
    Total votes: 3
    Node votes: 1
    Quorum: 2
    Active subsystems: 8
    Flags: Dirty
    Ports Bound: 0 177
    Node name: node1.network.com
    Node ID: 1
    Multicast addresses: 239.192.110.162
    Node addresses: 172.16.1.101

clustat shows the same information:

    [root@node1 ~]# clustat
    Cluster Status for tcluster @ Mon Jan 11 21:44:04 2016
    Member Status: Quorate

     Member Name                 ID   Status
     ------ ----                 ---- ------
     node1.network.com              1 Online, Local, rgmanager
     node2.network.com              2 Online, rgmanager
     node3.network.com              3 Online, rgmanager

I ran into a problem with this today. I added the IP resource first and then httpd, and the service simply would not start. A full day of searching Baidu and Google turned up nothing. It just kept failing with the following errors:

    Jan 11 21:22:45 node1 clurgmgrd[5425]: <notice> Reconfiguring
    Jan 11 21:22:46 node1 clurgmgrd[5425]: <notice> Initializing service:webservice
    Jan 11 21:22:48 node1 clurgmgrd[5425]: <notice> Recovering failed service service:webservice
    Jan 11 21:22:48 node1 clurgmgrd[5425]: <notice> start on ip "172.16.1.110/16" returned 1 (generic error)
    Jan 11 21:22:48 node1 clurgmgrd[5425]: <warning> #68: Failed to start service:webservice; return value: 1
    Jan 11 21:22:48 node1 clurgmgrd[5425]: <notice> Stopping service service:webservice
    Jan 11 21:22:49 node1 clurgmgrd[5425]: <notice> Service service:webservice is recovering
    Jan 11 21:22:50 node1 ccsd[3455]: Update of cluster.conf complete (version 15 -> 18).
    Jan 11 21:22:59 node1 gconfd (root-6662): GConf server is not in use, shutting down.
    Jan 11 21:22:59 node1 gconfd (root-6662): Exiting

Later I tried starting only httpd, without the IP, and the service came up. Then I added the IP back, and it worked. Annoying: an unexplained cause cost me a whole day.

Thinking it over, the likely cause is that the IP I added was not in the same subnet as the hosts. The nodes' eth0 uses Mask:255.255.255.0 (/24), but the VIP was given as 172.16.1.110/16. After I changed it to 172.16.1.110/24, everything worked.
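The fix above comes down to subnet arithmetic. The nodes' eth0 has Mask:255.255.255.0, so with a /24 prefix both 172.16.1.110 and 172.16.1.101 fall in the network 172.16.1.0. Below is a rough sketch that checks this with POSIX shell arithmetic. `ip2int` and `same_subnet` are hypothetical helpers. The sketch only checks the arithmetic. That rgmanager's ip resource agent applies the same rule when it picks an interface for the VIP is an assumption, not something verified against the agent's source.

```sh
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address (no leading zeros)
# into a 32-bit integer.
ip2int() {
    old_ifs=$IFS
    IFS=.
    set -- $1
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed if IP_A and IP_B share the same network
# for the given PREFIX length (0-32).
same_subnet() {
    a=$(ip2int "$1")
    b=$(ip2int "$2")
    prefix=$3
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Example: VIP vs. node1's address with the nodes' /24 mask
# same_subnet 172.16.1.110 172.16.1.101 24 && echo "same subnet"
```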

Note: make sure the openais service is disabled at boot on every node.

    [root@node1 ~]# chkconfig --list openais
    openais         0:off   1:off   2:off   3:off   4:off   5:off   6:off
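To check and set this on all three nodes from one place, here is a sketch that assumes the SSH trust from step 3. `all_off` parses a single `chkconfig --list` line like the one above. `all_off`, `disable_openais_everywhere`, `NODES` and `RUN=echo` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: read 'chkconfig --list SERVICE' output on stdin and
# succeed only if no runlevel field is 'N:on'.
all_off() {
    awk '{ for (i = 2; i <= NF; i++) if ($i ~ /:on$/) bad = 1 } END { exit bad }'
}

# Hypothetical helper: disable openais at boot on every node.
# Set RUN=echo to print the commands instead of running them (dry run).
NODES="node1 node2 node3"
disable_openais_everywhere() {
    for n in $NODES; do
        ${RUN:-} ssh "$n" chkconfig openais off
    done
}

# Check one node:
# ssh node2 chkconfig --list openais | all_off && echo "openais off on node2"
```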

3. Install httpd and give each node a different page to make testing easier

node1:

    [root@node1 ~]# yum install -y httpd
    [root@node1 ~]# echo "<h1>node1.network.com</h1>" > /var/www/html/index.html

node2:

    [root@node2 ~]# yum install -y httpd
    [root@node2 ~]# echo "<h1>node2.network.com</h1>" > /var/www/html/index.html

node3:

    [root@node3 ~]# yum install -y httpd
    [root@node3 ~]# echo "<h1>node3.network.com</h1>" > /var/www/html/index.html

On each of the three nodes, test that httpd serves its page (node1 is shown as the example). Once the test succeeds, stop httpd and disable it at boot. From now on the cluster, not init, starts it.

    [root@node1 ~]# service httpd start
    Starting httpd:                                            [  OK  ]
    [root@node1 ~]# curl http://172.16.1.101
    <h1>node1.network.com</h1>
    [root@node1 ~]# service httpd stop
    Stopping httpd:                                            [  OK  ]
    [root@node1 ~]# chkconfig httpd off
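The per-node commands above can also be run from node1 in one loop. Below is a sketch that assumes the SSH trust from step 3 and working yum repositories on every node. `setup_test_pages`, `NODES` and the `RUN=echo` dry run are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: install httpd, write a per-node test page, and keep
# httpd from starting at boot (rgmanager starts it instead).
# The remote command is one quoted string, so '>' runs on the remote node.
# Set RUN=echo to print the commands instead of running them (dry run).
NODES="node1 node2 node3"

setup_test_pages() {
    for n in $NODES; do
        ${RUN:-} ssh "$n" "yum install -y httpd && echo '<h1>$n.network.com</h1>' > /var/www/html/index.html && chkconfig httpd off"
    done
}

# Usage on node1:
# setup_test_pages
```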

4. Configure the resources and the service

    [root@node1 ~]# system-config-cluster &
    [1] 4605

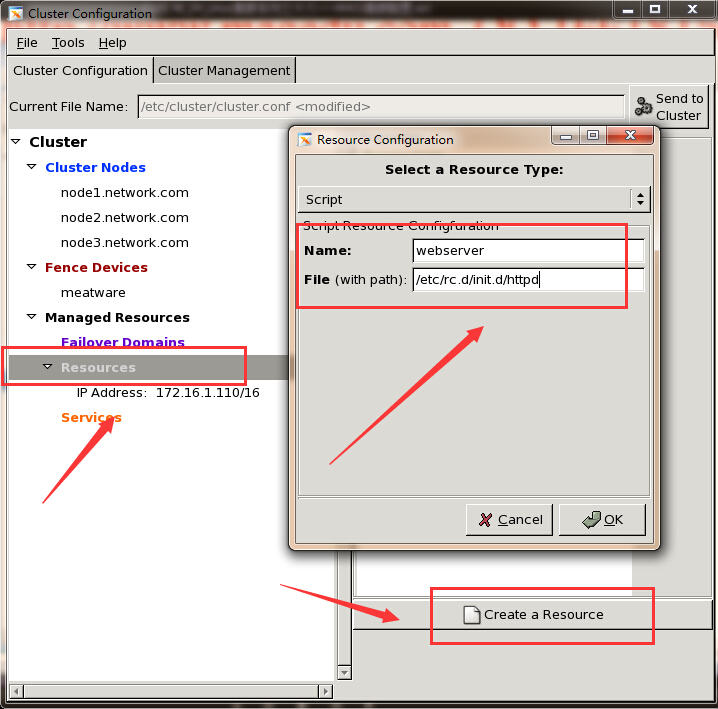

Next, configure the webserver resource.

Click File --> Save.

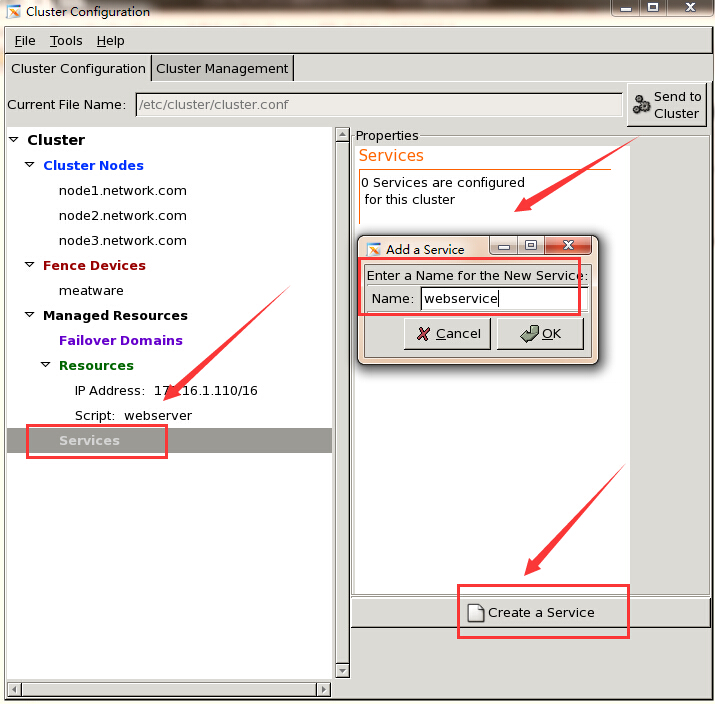

With both resources configured, you can now configure the service.

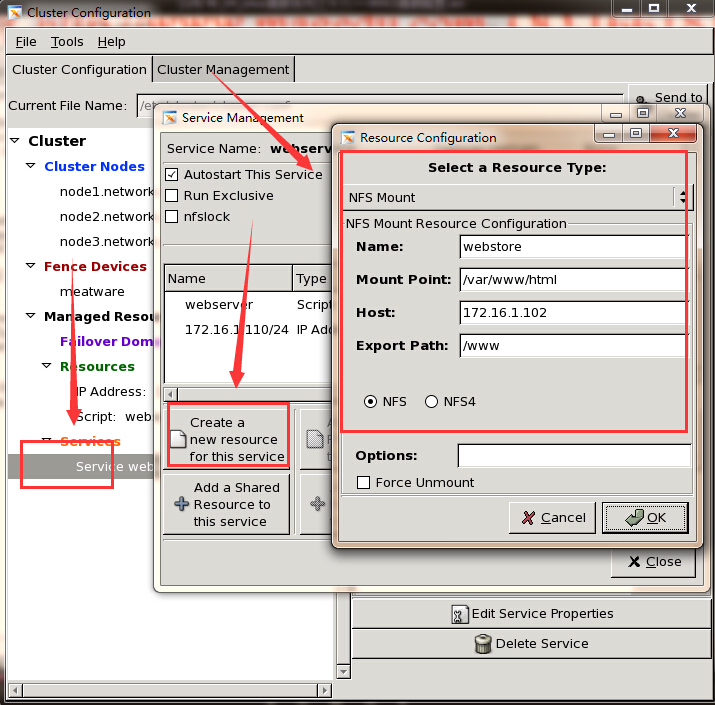

Click Add a Shared Resource to this service and add the two resources configured above.

After the service is configured, sync the configuration to the other nodes.
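In system-config-cluster this sync is normally done with the Send to Cluster button once cman is running. From the shell, the RHEL 5 tools for the same job are `ccs_tool update` and `cman_tool version -r`, and the file's config_version must be raised first. Below is a sketch that assumes GNU sed and a file with a single config_version attribute. `bump_config_version`, `propagate_conf` and `RUN=echo` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: increment config_version="N" in a cluster.conf file
# and print the new N.
bump_config_version() {
    conf=$1
    old=$(sed -n 's/.*config_version="\([0-9][0-9]*\)".*/\1/p' "$conf" | head -n 1)
    [ -n "$old" ] || { echo "no config_version in $conf" >&2; return 1; }
    new=$((old + 1))
    sed -i "s/config_version=\"$old\"/config_version=\"$new\"/" "$conf"
    echo "$new"
}

# Hypothetical wrapper: after a manual edit, bump the version and push it out.
# Set RUN=echo to print the cluster commands instead of running them.
propagate_conf() {
    conf=${1:-/etc/cluster/cluster.conf}
    new=$(bump_config_version "$conf") || return 1
    ${RUN:-} ccs_tool update "$conf"
    ${RUN:-} cman_tool version -r "$new"
}
```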

5. Check the service status

    [root@node1 ~]# clustat
    Cluster Status for tcluster @ Mon Jan 11 21:49:32 2016
    Member Status: Quorate

     Member Name                 ID   Status
     ------ ----                 ---- ------
     node1.network.com              1 Online, Local, rgmanager
     node2.network.com              2 Online, rgmanager
     node3.network.com              3 Online, rgmanager

     Service Name            Owner (Last)            State
     ------- ----            ----- ------            -----
     service:webservice      node1.network.com       started

I tested with curl rather than a browser and didn't bother with screenshots. That unexplained problem above had worn me out for the day.

    [root@node1 ~]# curl http://172.16.1.110
    <h1>node1.network.com</h1>

Test whether the service can be relocated:

    [root@node1 ~]# clusvcadm -r webservice -m node3.network.com
    Trying to relocate service:webservice to node3.network.com...Success

Check the cluster service status again:

    [root@node1 ~]# clustat
    Cluster Status for tcluster @ Mon Jan 11 21:49:32 2016
    Member Status: Quorate

     Member Name                 ID   Status
     ------ ----                 ---- ------
     node1.network.com              1 Online, Local, rgmanager
     node2.network.com              2 Online, rgmanager
     node3.network.com              3 Online, rgmanager

     Service Name            Owner (Last)            State
     ------- ----            ----- ------            -----
     service:webservice      node3.network.com       started
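When you script relocation tests like the one above, you can read the owner from the clustat table instead of checking by eye. Below is a sketch that assumes the column layout shown in the output above. `service_owner` is a hypothetical helper.

```sh
#!/bin/sh
# Hypothetical helper: read 'clustat' output on stdin and print the Owner
# column for SERVICE (e.g. service:webservice). Prints nothing if absent.
service_owner() {
    awk -v s="$1" '$1 == s { print $2; exit }'
}

# Check after a relocation:
# [ "$(clustat | service_owner service:webservice)" = node3.network.com ] && echo relocated
```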

This time, test access with a browser.

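6. Add a filesystem resource (the NFS export) as the storage location for the web pages

First prepare the NFS server. The procedure is not repeated here; it is covered in an earlier article.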
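Since the NFS server setup isn't shown, here is a rough sketch of what it usually involves on CentOS 5. It assumes /www on 172.16.1.102 is exported read-only to the 172.16.1.0/24 network and that the test page content is arbitrary. `has_export` is a hypothetical helper. The server-side commands are left as comments because they need root on the NFS server.

```sh
#!/bin/sh
# On the NFS server (172.16.1.102), roughly (assumed export options):
#   mkdir -p /www && echo '<h1>NFS Server</h1>' > /www/index.html   # hypothetical test page
#   echo '/www 172.16.1.0/24(ro)' >> /etc/exports
#   service portmap start; service nfs start; exportfs -rv

# Hypothetical helper: read 'showmount -e SERVER' output on stdin and succeed
# if EXPORT_PATH is listed (the first line is the "Export list for" header).
has_export() {
    awk -v p="$1" 'NR > 1 && $1 == p { found = 1 } END { exit !found }'
}

# On each cluster node, before adding the resource:
#   showmount -e 172.16.1.102 | has_export /www && echo "export visible"
```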

Then relocate the service manually once more:

    [root@node1 ~]# clusvcadm -r webservice -m node1.network.com
    Trying to relocate service:webservice to node1.network.com...Success

Access it again.

That completes a very simple high-availability service built on cman + rgmanager + NFS + httpd.

IV. Command-line configuration (supplement: an alternative approach)

1. Generate the configuration file

First make sure that cman and rgmanager have just been installed on all three nodes and that no configuration file exists yet.

    [root@node1 ~]# ccs_tool create tcluster

View the generated configuration file:

    [root@node1 ~]# cat /etc/cluster/cluster.conf
    <?xml version="1.0"?>
    <cluster name="tcluster" config_version="1">
      <clusternodes/>
      <fencedevices/>
      <rm>
        <failoverdomains/>
        <resources/>
      </rm>
    </cluster>

2. Configure the fence device

    [root@node1 ~]# ccs_tool addfence meatware fence_manual
    running ccs_tool update...

List the fence devices:

    [root@node1 ~]# ccs_tool lsfence
    Name             Agent
    meatware         fence_manual

3. Add the nodes

Here -v sets the node's votes, -n its node ID, and -f the fence device:

    [root@node1 ~]# ccs_tool addnode -v 1 -n 1 -f meatware node1.network.com
    [root@node1 ~]# ccs_tool addnode -v 1 -n 2 -f meatware node2.network.com
    [root@node1 ~]# ccs_tool addnode -v 1 -n 3 -f meatware node3.network.com

List the added nodes:

    [root@node1 ~]# ccs_tool lsnode

    Cluster name: tcluster, config_version: 30

    Nodename                        Votes Nodeid Fencetype
    node1.network.com                  1    1    meatware
    node2.network.com                  1    2    meatware
    node3.network.com                  1    3    meatware
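The three addnode calls follow one pattern, so they can also be written as a loop. The sketch assumes node names of the form nodeN.network.com and the meatware fence device from step 2. `add_nodes` and `RUN=echo` are hypothetical names.

```sh
#!/bin/sh
# Hypothetical helper: add node1..nodeCOUNT with one vote each, node ID = N,
# fenced by the 'meatware' device.
# Set RUN=echo to print the commands instead of running them (dry run).
add_nodes() {
    count=$1
    i=1
    while [ "$i" -le "$count" ]; do
        ${RUN:-} ccs_tool addnode -v 1 -n "$i" -f meatware "node$i.network.com"
        i=$((i + 1))
    done
}

# Usage on node1:
# add_nodes 3
```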

For now, editing resources still means editing the configuration file by hand, which is rather a headache.
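One way to take some of the pain out of the manual edit is to script it. The sketch below makes these assumptions:

- The file was just created by `ccs_tool create`, so it still contains an empty `<resources/>` line.
- GNU sed is available.
- The element and attribute names follow the rgmanager ip and script resource agents.
- The values mirror this article: VIP 172.16.1.110/24, resource webserver, service webservice.

`add_web_service` is a hypothetical helper, and the sketch has not been tested against a live cluster.

```sh
#!/bin/sh
# Hypothetical helper: replace the empty <resources/> line created by
# 'ccs_tool create' with an IP + httpd script resource and a service using them.
add_web_service() {
    conf=$1
    frag=$(mktemp) || return 1
    cat > "$frag" <<'EOF'
    <resources>
      <ip address="172.16.1.110/24" monitor_link="1"/>
      <script file="/etc/init.d/httpd" name="webserver"/>
    </resources>
    <service autostart="1" name="webservice">
      <ip ref="172.16.1.110/24"/>
      <script ref="webserver"/>
    </service>
EOF
    # GNU sed: 'r' queues the fragment for output, 'd' drops the original line.
    sed -i -e "/<resources\/>/r $frag" -e '/<resources\/>/d' "$conf"
    rm -f "$frag"
}

# Usage on node1:
# add_web_service /etc/cluster/cluster.conf
```

After the edit, raise config_version by one and push the file out with `ccs_tool update /etc/cluster/cluster.conf`.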