A Brief Analysis of the Linux Block Device Subsystem (2.6.26)

Author: guolele, 2011.3.20

Blog: http://blog.csdn.net/guolele2010

This article only outlines roughly what each function does, as a way of understanding how the block device subsystem works.

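During system initialization the kernel runs the registered initcalls to bring up each subsystem. For block devices, the entry point is registered in block/genhd.c:

subsys_initcall(genhd_device_init);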

genhd_device_init does the following:

    static int __init genhd_device_init(void)
    {
        int error = class_register(&block_class);
        …..
        bdev_map = kobj_map_init(base_probe, &block_class_lock);
        blk_dev_init();

    #ifndef CONFIG_SYSFS_DEPRECATED
        /* create top-level block dir */
        block_depr = kobject_create_and_add("block", NULL);
    #endif
        return 0;
    }

class_register(&block_class); // creates the block device class under /sys/class

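kobj_map_init(base_probe, &block_class_lock); creates a kobject mapping domain. A kobj_map is a hash table with 255 buckets, indexed by major device number (major % 255). When kobj_map() is called for a device, the device's number range is inserted into the bucket for its major number, together with a get callback. When a device number is later looked up, the matching entry's get callback is invoked. Initially every bucket holds only a catch-all base entry whose get is base_probe.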
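To make the bucket layout concrete, here is a minimal userspace sketch of such a table. It is not the kernel code: every `km_` name is hypothetical, the 20-bit minor split is an assumption made for the model, and locking, module reference counting and the kernel's retry logic are all left out.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace model only; all km_* names are hypothetical. */
#define KM_BUCKETS 255
#define KM_MKDEV(ma, mi) (((unsigned long)(ma) << 20) | (unsigned long)(mi))
#define KM_MAJOR(dev)    ((unsigned long)(dev) >> 20)

typedef const char *(*km_probe_fn)(unsigned long dev);

struct km_probe {
    struct km_probe *next;
    unsigned long dev;    /* first device number covered */
    unsigned long range;  /* how many device numbers are covered */
    km_probe_fn get;      /* callback run when a lookup hits this entry */
};

struct km_map {
    struct km_probe *probes[KM_BUCKETS];
    struct km_probe base; /* catch-all entry shared by every bucket */
};

/* Stand-in for base_probe: nothing is registered, so nothing is found. */
static const char *km_base_probe(unsigned long dev) { (void)dev; return NULL; }

/* Hypothetical driver callback used in the usage example. */
static const char *km_demo_probe(unsigned long dev) { (void)dev; return "demo_disk"; }

static void km_map_init(struct km_map *m)
{
    m->base.next = NULL;
    m->base.dev = 1;
    m->base.range = ~0UL; /* covers (almost) every device number */
    m->base.get = km_base_probe;
    for (int i = 0; i < KM_BUCKETS; i++)
        m->probes[i] = &m->base;
}

/* Insert in front of the first entry with an equal or wider range,
 * so that narrower (more specific) ranges are tried first. */
static void km_map_add(struct km_map *m, struct km_probe *p)
{
    assert(p->range > 0);
    struct km_probe **s = &m->probes[KM_MAJOR(p->dev) % KM_BUCKETS];
    while (*s && (*s)->range < p->range)
        s = &(*s)->next;
    p->next = *s;
    *s = p;
}

/* Walk one bucket and call the first entry whose range contains dev. */
static const char *km_lookup(struct km_map *m, unsigned long dev)
{
    struct km_probe *p;
    for (p = m->probes[KM_MAJOR(dev) % KM_BUCKETS]; p; p = p->next) {
        if (p->dev > dev || p->dev + p->range - 1 < dev)
            continue;
        return p->get(dev);
    }
    return NULL;
}
```

In this model, registering a probe for major 8 with 16 minors makes `km_lookup(&m, KM_MKDEV(8, 3))` reach `km_demo_probe`, while a device number outside that range falls through to the base entry.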

    static struct kobject *base_probe(dev_t devt, int *part, void *data)
    {
        if (request_module("block-major-%d-%d", MAJOR(devt), MINOR(devt)) > 0)
            /* Make old-style 2.4 aliases work */
            request_module("block-major-%d", MAJOR(devt));
        return NULL;
    }

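The main job of this function is to load a kernel module: when a device number is looked up and no driver has registered it, the kernel tries to load the module that handles it.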

kobject_create_and_add("block", NULL); creates the block directory under /sys.

blk_dev_init();

    int __init blk_dev_init(void)
    {
        int i;

        kblockd_workqueue = create_workqueue("kblockd");
        if (!kblockd_workqueue)
            panic("Failed to create kblockd\n");

        request_cachep = kmem_cache_create("blkdev_requests",
                sizeof(struct request), 0, SLAB_PANIC, NULL);

        blk_requestq_cachep = kmem_cache_create("blkdev_queue",
                sizeof(struct request_queue), 0, SLAB_PANIC, NULL);

        for_each_possible_cpu(i)
            INIT_LIST_HEAD(&per_cpu(blk_cpu_done, i));

        open_softirq(BLOCK_SOFTIRQ, blk_done_softirq, NULL);
        register_hotcpu_notifier(&blk_cpu_notifier);

        return 0;
    }

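kblockd_workqueue = create_workqueue("kblockd"); creates a workqueue together with the kernel threads that serve it. The workqueue is used by blk_unplug_timeout, the timeout handler installed when the request queue is set up: when the timer expires, the handler calls kblockd_schedule_work(&q->unplug_work);. The q->unplug_work item is itself set up during blk_init_queue. This jumps ahead to a call sequence that is only covered later, but it is needed to explain what the workqueue is for. The point to remember is that the workqueue restarts request processing after a queue has been temporarily plugged, that is, after requests have been held back in the queue instead of being handed to the driver. See blk_plug_device() and blk_remove_plug().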
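The plug and unplug behaviour can be sketched as a small userspace state machine. This is only a model under stated assumptions: the `pl_` names are hypothetical, the threshold value is chosen for illustration, and the timer plus kblockd work item are collapsed into one function call.

```c
#include <assert.h>
#include <stdbool.h>

/* Userspace model only; all pl_* names are hypothetical. */
#define PL_UNPLUG_THRESH 4   /* assumed threshold, for illustration */

struct pl_queue {
    bool plugged;     /* true while requests are being held back */
    int  pending;     /* requests waiting in the queue */
    int  dispatched;  /* requests already handed to the "driver" */
};

/* Plays the role of q->request_fn(): the driver drains the queue. */
static void pl_request_fn(struct pl_queue *q)
{
    q->dispatched += q->pending;
    q->pending = 0;
}

/* Plays the role of blk_plug_device(): start holding requests back. */
static void pl_plug(struct pl_queue *q) { q->plugged = true; }

/* Plays the role of unplugging: remove the plug and run the driver. */
static void pl_unplug(struct pl_queue *q)
{
    q->plugged = false;
    if (q->pending > 0)
        pl_request_fn(q);
}

/* Adding to an empty queue plugs it; enough pending requests unplug it. */
static void pl_add_request(struct pl_queue *q)
{
    assert(q->pending >= 0);
    if (q->pending == 0 && !q->plugged)
        pl_plug(q);
    q->pending++;
    if (q->pending >= PL_UNPLUG_THRESH)
        pl_unplug(q);
}

/* Stands in for the unplug timer firing and the kblockd work item running. */
static void pl_unplug_timeout(struct pl_queue *q)
{
    if (q->plugged)
        pl_unplug(q);
}
```

A single request sits in the plugged queue until `pl_unplug_timeout` runs, whereas a burst of four requests is dispatched at once without waiting for the timer. Holding requests back briefly is what gives later bios a chance to be merged into them.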

kmem_cache_create creates the slab caches from which requests and request queues are allocated.

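open_softirq(BLOCK_SOFTIRQ, blk_done_softirq, NULL); registers the handler for a software interrupt. Once a request has been processed, raise_softirq_irqoff(BLOCK_SOFTIRQ); raises the softirq, blk_done_softirq runs, and it finally calls the completion function registered for the queue (softirq_done_fn) to finish the command, for example Xxx_finish_command(cmd);.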
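The deferral pattern described here (queue the finished request, raise a flag, run the completion callbacks later) can be modelled in userspace as follows. The `sq_` names are hypothetical, a single struct stands in for the per-CPU state, and unlike the kernel list this model does not preserve completion order.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace model only; all sq_* names are hypothetical. */
struct sq_request {
    struct sq_request *next;
    void (*done_fn)(struct sq_request *rq); /* driver's completion callback */
    int completed;
};

struct sq_cpu {
    struct sq_request *done_list; /* finished requests awaiting completion */
    int softirq_pending;          /* set when the "softirq" has been raised */
};

/* Called from the "interrupt handler": queue the request and raise the flag.
 * Requests are pushed at the head, so order is not preserved here. */
static void sq_complete_request(struct sq_cpu *cpu, struct sq_request *rq)
{
    assert(rq->done_fn != NULL);
    rq->next = cpu->done_list;
    cpu->done_list = rq;
    cpu->softirq_pending = 1;
}

/* Plays the role of blk_done_softirq(): detach the list, then run each
 * request's completion callback. Returns how many requests were completed. */
static int sq_done_softirq(struct sq_cpu *cpu)
{
    struct sq_request *rq = cpu->done_list;
    int n = 0;

    cpu->done_list = NULL;
    cpu->softirq_pending = 0;
    while (rq) {
        struct sq_request *next = rq->next;
        rq->done_fn(rq);
        rq = next;
        n++;
    }
    return n;
}

/* Hypothetical stand-in for a driver's Xxx_finish_command(). */
static void sq_finish_command(struct sq_request *rq) { rq->completed = 1; }
```

The interrupt-side function only queues the request, so the potentially expensive completion work runs later, outside the hard interrupt handler.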

register_hotcpu_notifier(&blk_cpu_notifier); registers a notifier so that the block layer supports CPU hotplug, i.e. it is told when a CPU goes offline.

With the subsystem initialized, the next question is how a device driver hooks into it.

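The first thing a block device driver does is call register_blkdev(). It does very little: it mainly makes the driver show up in /proc/devices (and allocates a major number if 0 is passed).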

Next comes alloc_disk(), which allocates a struct gendisk and initializes the necessary fields.

Then comes add_disk():

    void add_disk(struct gendisk *disk)
    {
        struct backing_dev_info *bdi;

        disk->flags |= GENHD_FL_UP;
        blk_register_region(MKDEV(disk->major, disk->first_minor),
                            disk->minors, NULL, exact_match, exact_lock, disk);
        register_disk(disk);
        blk_register_queue(disk);

        bdi = &disk->queue->backing_dev_info;
        bdi_register_dev(bdi, MKDEV(disk->major, disk->first_minor));
        sysfs_create_link(&disk->dev.kobj, &bdi->dev->kobj, "bdi");
    }

The function looks simple, but a lot happens underneath.

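blk_register_region(MKDEV(disk->major, disk->first_minor), disk->minors, NULL, exact_match, exact_lock, disk);

This call comes first. It records the disk's device-number range, together with its callbacks, in bdev_map, the kobj_map that the subsystem created during initialization.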

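register_disk(disk); mainly creates sysfs directories and creates and initializes the struct block_device. Note that the attribute files stored in default_attrs, which is part of blk_queue_ktype, describe the request queue; they are created when the queue is registered by blk_register_queue.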

blk_register_queue(disk); registers the request queue (which the driver allocated with blk_init_queue) and then registers the I/O scheduler, the so-called elevator.

bdi_register_dev(bdi, MKDEV(disk->major, disk->first_minor)); registers the disk's backing_dev_info and generates some informational files for it.

Having seen what the driver does, how do the two sides connect?

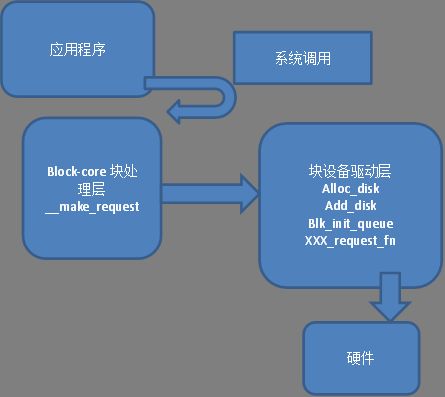

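An application first issues a read or write system call. The call passes through whichever file system is involved and, on the direct I/O path, eventually reaches __blockdev_direct_IO >> direct_io_worker >> dio_bio_submit >> submit_bio >> generic_make_request >> __generic_make_request >> ret = q->make_request_fn(q, bio);, which finally calls the queue's make_request function.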

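This is the role of the registered make_request function. If the driver does not define its own, then blk_init_queue, which the driver calls, contains blk_queue_make_request(q, __make_request); and so installs __make_request as the default.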
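The dispatch through a function pointer can be illustrated with a short userspace model. All `mr_` names are hypothetical; the model only shows how a default handler is installed at queue initialization and how a driver could replace it with its own.

```c
#include <assert.h>
#include <stddef.h>

/* Userspace model only; all mr_* names are hypothetical. */
struct mr_bio { unsigned long sector; };
struct mr_queue;
typedef int (*mr_make_request_fn)(struct mr_queue *q, struct mr_bio *bio);

struct mr_queue {
    mr_make_request_fn make_request_fn;
    int queued;            /* bios turned into queued requests */
    int handled_directly;  /* bios served by a driver-specific handler */
};

/* Plays the role of __make_request: put the bio on the request queue. */
static int mr_default_make_request(struct mr_queue *q, struct mr_bio *bio)
{
    (void)bio;
    q->queued++;
    return 0;
}

/* Hypothetical driver handler that serves the bio immediately. */
static int mr_custom_make_request(struct mr_queue *q, struct mr_bio *bio)
{
    (void)bio;
    q->handled_directly++;
    return 0;
}

/* Plays the role of blk_queue_make_request(): install the handler. */
static void mr_queue_make_request(struct mr_queue *q, mr_make_request_fn fn)
{
    q->make_request_fn = fn;
}

/* Plays the role of blk_init_queue(): the default handler is installed here. */
static void mr_init_queue(struct mr_queue *q)
{
    q->queued = 0;
    q->handled_directly = 0;
    mr_queue_make_request(q, mr_default_make_request);
}

/* Plays the role of generic_make_request(): call whatever is installed. */
static int mr_generic_make_request(struct mr_queue *q, struct mr_bio *bio)
{
    assert(q->make_request_fn != NULL);
    return q->make_request_fn(q, bio);
}
```

After `mr_init_queue`, every submitted bio goes through the default handler. A driver that calls `mr_queue_make_request` with its own function bypasses the request queue entirely, which is the choice that drivers without a meaningful seek cost tend to make.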

    static int __make_request(struct request_queue *q, struct bio *bio)
    {
        ……
        /*
         * low level driver can indicate that it wants pages above a
         * certain limit bounced to low memory (ie for highmem, or even
         * ISA dma in theory)
         */
        blk_queue_bounce(q, &bio);
        ……
        el_ret = elv_merge(q, &req, bio);
        switch (el_ret) {
        case ELEVATOR_BACK_MERGE:
            BUG_ON(!rq_mergeable(req));

            if (!ll_back_merge_fn(q, req, bio))
                break;

            blk_add_trace_bio(q, bio, BLK_TA_BACKMERGE);

            req->biotail->bi_next = bio;
            req->biotail = bio;
            req->nr_sectors = req->hard_nr_sectors += nr_sectors;
            req->ioprio = ioprio_best(req->ioprio, prio);
            drive_stat_acct(req, 0);
            if (!attempt_back_merge(q, req))
                elv_merged_request(q, req, el_ret);
            goto out;

        case ELEVATOR_FRONT_MERGE:
            BUG_ON(!rq_mergeable(req));

            if (!ll_front_merge_fn(q, req, bio))
                break;

            blk_add_trace_bio(q, bio, BLK_TA_FRONTMERGE);

            bio->bi_next = req->bio;
            req->bio = bio;

            /*
             * may not be valid. if the low level driver said
             * it didn't need a bounce buffer then it better
             * not touch req->buffer either...
             */
            req->buffer = bio_data(bio);
            req->current_nr_sectors = bio_cur_sectors(bio);
            req->hard_cur_sectors = req->current_nr_sectors;
            req->sector = req->hard_sector = bio->bi_sector;
            req->nr_sectors = req->hard_nr_sectors += nr_sectors;
            req->ioprio = ioprio_best(req->ioprio, prio);
            drive_stat_acct(req, 0);
            if (!attempt_front_merge(q, req))
                elv_merged_request(q, req, el_ret);
            goto out;

        /* ELV_NO_MERGE: elevator says don't/can't merge. */
        default:
            ;
        }

    get_rq:
        /*
         * This sync check and mask will be re-done in init_request_from_bio(),
         * but we need to set it earlier to expose the sync flag to the
         * rq allocator and io schedulers.
         */
        rw_flags = bio_data_dir(bio);
        if (sync)
            rw_flags |= REQ_RW_SYNC;

        /*
         * Grab a free request. This is might sleep but can not fail.
         * Returns with the queue unlocked.
         */
        req = get_request_wait(q, rw_flags, bio);

        /*
         * After dropping the lock and possibly sleeping here, our request
         * may now be mergeable after it had proven unmergeable (above).
         * We don't worry about that case for efficiency. It won't happen
         * often, and the elevators are able to handle it.
         */
        init_request_from_bio(req, bio);

        spin_lock_irq(q->queue_lock);
        if (elv_queue_empty(q))
            blk_plug_device(q);
        add_request(q, req);
    out:
        if (sync)
            __generic_unplug_device(q);

        spin_unlock_irq(q->queue_lock);
        return 0;

    end_io:
        bio_endio(bio, err);
        return 0;
    }

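el_ret = elv_merge(q, &req, bio); asks the elevator whether the bio can be merged into a request that is already queued. If it can, the bio is attached to the back or the front of that request, so that adjacent I/O ends up in a single request, and control then jumps to:

    out:
        if (sync)
            __generic_unplug_device(q);

        spin_unlock_irq(q->queue_lock);
        return 0;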

__generic_unplug_device(q); mainly calls q->request_fn(q);, that is, the request handling function supplied by the driver.

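If the elevator reports that no merge is possible, a free request is obtained with get_request_wait, the bio is filled into it with init_request_from_bio, and the request is added to the queue. For a sync bio, __generic_unplug_device(q) is then called as well.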
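The merge decision can be reduced to sector arithmetic. The sketch below is a userspace model with hypothetical `mg_` names; it assumes a merge is possible whenever the bio is exactly adjacent to the request, and it ignores the size limits, data direction and mergeability flags that the kernel also checks.

```c
#include <assert.h>

/* Userspace model only; all mg_* names are hypothetical. */
enum mg_merge { MG_NO_MERGE, MG_FRONT_MERGE, MG_BACK_MERGE };

struct mg_bio     { unsigned long sector; unsigned long nr_sectors; };
struct mg_request { unsigned long sector; unsigned long nr_sectors; };

/* Decide whether the bio touches the end or the start of the request. */
static enum mg_merge mg_try_merge(const struct mg_request *rq,
                                  const struct mg_bio *bio)
{
    assert(bio->nr_sectors > 0);
    if (rq->sector + rq->nr_sectors == bio->sector)
        return MG_BACK_MERGE;   /* bio starts where the request ends */
    if (bio->sector + bio->nr_sectors == rq->sector)
        return MG_FRONT_MERGE;  /* bio ends where the request starts */
    return MG_NO_MERGE;
}

/* Apply the decision, mirroring the field updates in the two merge cases. */
static enum mg_merge mg_submit(struct mg_request *rq, const struct mg_bio *bio)
{
    enum mg_merge ret = mg_try_merge(rq, bio);

    switch (ret) {
    case MG_BACK_MERGE:
        rq->nr_sectors += bio->nr_sectors;
        break;
    case MG_FRONT_MERGE:
        rq->sector = bio->sector;
        rq->nr_sectors += bio->nr_sectors;
        break;
    default:
        /* No merge: the caller would allocate a new request here. */
        break;
    }
    return ret;
}
```

For a request covering sectors 100 to 107, a bio starting at sector 108 is a back merge and a bio covering 92 to 99 is a front merge. A bio at sector 500 leaves the request untouched and would need a request of its own.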

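The request is then handed to the driver. A bio is finished with bio_endio(bio, err);, but __make_request only does this on its error path, so on success the driver has to complete the I/O itself. A driver that uses the default make_request usually handles requests in one of two ways. The first is to process one request at a time and finish it with end_request. The second is to walk rq_for_each_bio >> bio_for_each_segment and process a run of consecutive bios in one go, finishing with end_that_request_first >> blkdev_dequeue_request >> end_that_request_last.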

Finally, a diagram of the overall block device workflow: