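Extracting the Audio from a Video in iOS

A recent project needed to extract the audio from videos, so I'm writing down how I did it.

The approach follows the raywenderlich.com article How to Play, Record, and Edit Videos in iOS.

The article has a very good introduction to AVFoundation, which I quote below.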

A Brief Intro to AVFoundation

Now that your video playback and recording is up and running, let’s move on to something a bit more complex: AVFoundation.

Since iOS 4.0, the iOS SDK provides a number of video editing APIs in the AVFoundation framework. With these APIs, you can apply any kind of CGAffineTransform to a video and merge multiple video and audio files together into a single video.

These last few sections of the tutorial will walk you through merging two videos into a single video and adding a background audio track.

Before diving into the code, let’s discuss some theory first.

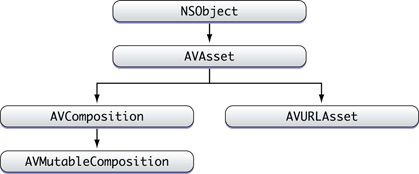

AVAsset

This is an abstract class that represents timed audiovisual media such as video and audio. Each asset contains a collection of tracks intended to be presented or processed together, each of a uniform media type, including but not limited to audio, video, text, closed captions, and subtitles.

An AVAsset object defines the collective properties of the tracks that comprise the asset. A track is represented by an instance of AVAssetTrack.

In a typical simple case, one track represents the audio component and another represents the video component; in a complex composition, there may be multiple overlapping tracks of audio and video. You will represent the video and audio files you’ll merge together as AVAsset objects.
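To make the asset/track relationship concrete, here is a small sketch that counts an asset's tracks by media type. It is not from the tutorial. The function name `TrackCountsByMediaType` is made up for illustration, and it reads the synchronous `tracks` property, which newer SDKs deprecate in favour of asynchronous loading.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: counts an asset's tracks per media type.
// Keys are AVMediaType strings such as AVMediaTypeAudio and AVMediaTypeVideo;
// values are NSNumber counts. Uses the synchronous `tracks` property for
// brevity (deprecated in newer SDKs, but still available).
static NSDictionary<NSString *, NSNumber *> *TrackCountsByMediaType(AVAsset *asset) {
    NSMutableDictionary<NSString *, NSNumber *> *counts = [NSMutableDictionary dictionary];
    for (AVAssetTrack *track in asset.tracks) {
        // A missing key yields nil, and messaging nil returns 0
        NSUInteger soFar = [counts[track.mediaType] unsignedIntegerValue];
        counts[track.mediaType] = @(soFar + 1);
    }
    return [counts copy];
}
```

For an ordinary video file opened with `[AVURLAsset URLAssetWithURL:videoURL options:nil]`, you would typically expect one `AVMediaTypeVideo` track and one `AVMediaTypeAudio` track, which is the simple case described above.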

AVComposition

An AVComposition object combines media data from multiple file-based sources in a custom temporal arrangement in order to present or process it together. All file-based audiovisual assets are eligible to be combined, regardless of container type.

At its top level, an AVComposition is a collection of tracks, each presenting media of a specific type such as audio or video, according to a timeline. Each track is represented by an instance of AVCompositionTrack.

AVMutableComposition and AVMutableCompositionTrack

A higher-level interface for constructing compositions is also presented by AVMutableComposition and AVMutableCompositionTrack. These objects offer insertion, removal, and scaling operations without direct manipulation of the trackSegment arrays of composition tracks.

AVMutableComposition and AVMutableCompositionTrack make use of higher-level constructs such as AVAsset and AVAssetTrack. This means the client can make use of the same references to candidate sources that it would have created in order to inspect or preview them prior to inclusion in a composition.

In short, you have an AVMutableComposition and you can add multiple AVMutableCompositionTrack instances to it. Each AVMutableCompositionTrack will have a separate media asset.
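Applied to this post's goal, the mutable API needs only a few calls to pull the audio out of a single video: create a composition, add one audio `AVMutableCompositionTrack`, and insert the source's audio track into it. This is a minimal sketch, not the tutorial's code. `AudioOnlyComposition` and the error domain are hypothetical names, and it copies the audio track's own `timeRange` rather than the asset's `duration`, on the assumption that the audio may be slightly shorter than the video.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical error domain used only by this sketch.
static NSString *const AudioExtractErrorDomain = @"AudioExtractErrorDomain";

// Builds a composition that holds only the first audio track of `source`.
// Returns nil (and fills *error) if the source has no audio or insertion fails.
static AVMutableComposition *AudioOnlyComposition(AVAsset *source, NSError **error) {
    AVAssetTrack *sourceAudio = [source tracksWithMediaType:AVMediaTypeAudio].firstObject;
    if (sourceAudio == nil) {
        if (error != NULL) {
            *error = [NSError errorWithDomain:AudioExtractErrorDomain
                                         code:1
                                     userInfo:@{NSLocalizedDescriptionKey: @"Source has no audio track"}];
        }
        return nil;
    }

    AVMutableComposition *composition = [AVMutableComposition composition];
    AVMutableCompositionTrack *audioTrack =
        [composition addMutableTrackWithMediaType:AVMediaTypeAudio
                                 preferredTrackID:kCMPersistentTrackID_Invalid];

    // Copy the whole audio track to the start of the new composition track.
    if (![audioTrack insertTimeRange:sourceAudio.timeRange
                             ofTrack:sourceAudio
                              atTime:kCMTimeZero
                               error:error]) {
        return nil;
    }
    return composition;
}
```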

And the Rest

In order to apply a CGAffineTransform to a track, you will make use of AVVideoCompositionInstruction and AVVideoComposition. An AVVideoCompositionInstruction object represents an operation to be performed by a compositor. The object contains multiple AVMutableVideoCompositionLayerInstruction objects.

You use an AVVideoCompositionLayerInstruction object to modify the transform and opacity ramps to apply to a given track in an AV composition. AVMutableVideoCompositionLayerInstruction is a mutable subclass of AVVideoCompositionLayerInstruction.

An AVVideoComposition object maintains an array of instructions to perform its composition, and an AVMutableVideoComposition object represents a mutable video composition.
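A minimal sketch of how these three classes nest, assuming a single video track that should be transformed (for example rotated) for its whole duration. The helper name and the 30 fps frame duration are illustrative choices, not values from the tutorial. Extracting audio does not need any of this; it only matters when the video itself is edited.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: applies `transform` to one video track for the whole
// `duration`, rendering at `renderSize` and 30 frames per second.
static AVMutableVideoComposition *TransformedVideoComposition(AVAssetTrack *videoTrack,
                                                              CMTime duration,
                                                              CGAffineTransform transform,
                                                              CGSize renderSize) {
    // Layer instruction: the per-track transform (and opacity ramps, if any)
    AVMutableVideoCompositionLayerInstruction *layerInstruction =
        [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoTrack];
    [layerInstruction setTransform:transform atTime:kCMTimeZero];

    // Instruction: a time range plus the layer instructions active during it
    AVMutableVideoCompositionInstruction *instruction =
        [AVMutableVideoCompositionInstruction videoCompositionInstruction];
    instruction.timeRange = CMTimeRangeMake(kCMTimeZero, duration);
    instruction.layerInstructions = @[layerInstruction];

    // Video composition: the array of instructions plus render settings
    AVMutableVideoComposition *videoComposition = [AVMutableVideoComposition videoComposition];
    videoComposition.instructions = @[instruction];
    videoComposition.frameDuration = CMTimeMake(1, 30);
    videoComposition.renderSize = renderSize;
    return videoComposition;
}
```

The result would be attached to an export with `exporter.videoComposition = videoComposition;` before calling `exportAsynchronouslyWithCompletionHandler:`.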

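The code below is based on the tutorial's sample view controller. The first two actions let the user pick two videos from the saved photos album:

```objc
// Imports needed by the view controller code in this post
#import <AVFoundation/AVFoundation.h>
#import <MobileCoreServices/MobileCoreServices.h> // kUTTypeMovie

// The view controller is assumed to declare these instance variables:
//   AVAsset *firstAsset, *secondAsset, *audioAsset;
//   BOOL isSelectingAssetOne;

// Select the first asset
- (IBAction)loadAssetOne:(UIButton *)sender {
    if (![UIImagePickerController isSourceTypeAvailable:UIImagePickerControllerSourceTypeSavedPhotosAlbum]) {
        UIAlertController *alert = [UIAlertController alertControllerWithTitle:@"Error"
                                                                       message:@"No Saved Album Found"
                                                                preferredStyle:UIAlertControllerStyleAlert];
        [alert addAction:[UIAlertAction actionWithTitle:@"OK" style:UIAlertActionStyleDefault handler:nil]];
        [self presentViewController:alert animated:YES completion:nil];
    } else {
        isSelectingAssetOne = YES;
        [self startMediaBrowserFromViewController:self usingDelegate:self];
    }
}
```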

```objc
// Select the second asset
- (IBAction)loadAssetTwo:(UIButton *)sender {
    if (![UIImagePickerController isSourceTypeAvailable:UIImagePickerControllerSourceTypeSavedPhotosAlbum]) {
        UIAlertController *alert = [UIAlertController alertControllerWithTitle:@"Error"
                                                                       message:@"No Saved Album Found"
                                                                preferredStyle:UIAlertControllerStyleAlert];
        [alert addAction:[UIAlertAction actionWithTitle:@"OK" style:UIAlertActionStyleDefault handler:nil]];
        [self presentViewController:alert animated:YES completion:nil];
    } else {
        isSelectingAssetOne = NO;
        [self startMediaBrowserFromViewController:self usingDelegate:self];
    }
}
```

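mergeAndSave: is where this post departs from the tutorial: instead of merging two videos, it takes only the audio track of each selected video, places the second clip's audio right after the first on a single audio track, and exports the result.

```objc
- (IBAction)mergeAndSave:(UIButton *)sender {
    if (firstAsset == nil || secondAsset == nil) {
        return;
    }

    // 1 - Create an AVMutableComposition. It will hold the AVMutableCompositionTrack instances.
    AVMutableComposition *mixComposition = [[AVMutableComposition alloc] init];

    // 2 - Audio track: add one composition track of type AVMediaTypeAudio
    AVMutableCompositionTrack *audioTrack =
        [mixComposition addMutableTrackWithMediaType:AVMediaTypeAudio
                                    preferredTrackID:kCMPersistentTrackID_Invalid];

    // Take the first audio track of each video; firstObject returns nil
    // when a video has no sound
    AVAssetTrack *firstAudio = [firstAsset tracksWithMediaType:AVMediaTypeAudio].firstObject;
    AVAssetTrack *secondAudio = [secondAsset tracksWithMediaType:AVMediaTypeAudio].firstObject;
    if (firstAudio == nil || secondAudio == nil) {
        NSLog(@"One of the selected videos has no audio track");
        return;
    }

    NSError *error = nil;
    // Insert the audio of firstAsset at time zero, then the audio of
    // secondAsset right after it (at firstAsset.duration)
    BOOL inserted =
        [audioTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, firstAsset.duration)
                            ofTrack:firstAudio
                             atTime:kCMTimeZero
                              error:&error] &&
        [audioTrack insertTimeRange:CMTimeRangeMake(kCMTimeZero, secondAsset.duration)
                            ofTrack:secondAudio
                             atTime:firstAsset.duration
                              error:&error];
    if (!inserted) {
        NSLog(@"Could not insert audio: %@", error);
        return;
    }

    // 3 - Get path: build the output path in Documents. The export fails if a
    // file already exists at the URL, so remove any leftover from earlier runs.
    NSString *documentsDirectory =
        NSSearchPathForDirectoriesInDomains(NSDocumentDirectory, NSUserDomainMask, YES).firstObject;
    NSString *myPathDocs = [documentsDirectory stringByAppendingPathComponent:
                            [NSString stringWithFormat:@"mergeVideo-%u.mov", arc4random_uniform(1000)]];
    NSURL *url = [NSURL fileURLWithPath:myPathDocs];
    [[NSFileManager defaultManager] removeItemAtURL:url error:nil];

    // 4 - Create the exporter
    AVAssetExportSession *exporter =
        [[AVAssetExportSession alloc] initWithAsset:mixComposition
                                         presetName:AVAssetExportPresetHighestQuality];
    exporter.outputURL = url;
    exporter.outputFileType = AVFileTypeQuickTimeMovie; // @"com.apple.quicktime-movie"
    exporter.shouldOptimizeForNetworkUse = YES;
    [exporter exportAsynchronouslyWithCompletionHandler:^{
        // The completion handler is not called on the main queue; UI work must hop there
        dispatch_async(dispatch_get_main_queue(), ^{
            [self exportDidFinish:exporter];
        });
    }];
}
```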
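mergeAndSave: writes a QuickTime .mov that happens to contain only audio. If a plain audio file is the goal, one option is the `AVAssetExportPresetAppleM4A` preset with `AVFileTypeAppleM4A`, which writes an audio-only .m4a; because that preset drops video, the original video asset could also be passed in directly. This is an alternative sketch, not what the tutorial does, and `ExportAudioAsM4A` and `UniqueM4AOutputURL` are hypothetical helper names.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: a fresh .m4a URL in the temporary directory. A UUID
// avoids name collisions; the export fails if the output file already exists.
static NSURL *UniqueM4AOutputURL(void) {
    NSString *name = [NSString stringWithFormat:@"audio-%@.m4a", [NSUUID UUID].UUIDString];
    return [NSURL fileURLWithPath:[NSTemporaryDirectory() stringByAppendingPathComponent:name]];
}

// Hypothetical helper: exports only the audio of `asset` as an .m4a file.
// Returns NO if no export session could be created for this asset/preset;
// otherwise `completion` runs on the main queue with nil on success or an error.
static BOOL ExportAudioAsM4A(AVAsset *asset, NSURL *outputURL,
                             void (^completion)(NSError *error)) {
    AVAssetExportSession *exporter =
        [[AVAssetExportSession alloc] initWithAsset:asset presetName:AVAssetExportPresetAppleM4A];
    if (exporter == nil) {
        return NO;
    }
    exporter.outputURL = outputURL;
    exporter.outputFileType = AVFileTypeAppleM4A;
    [exporter exportAsynchronouslyWithCompletionHandler:^{
        NSError *error = nil;
        if (exporter.status != AVAssetExportSessionStatusCompleted) {
            // Failed or cancelled: report the session's error, or a generic one
            error = exporter.error
                ?: [NSError errorWithDomain:AVFoundationErrorDomain code:AVErrorUnknown userInfo:nil];
        }
        dispatch_async(dispatch_get_main_queue(), ^{
            completion(error);
        });
    }];
    return YES;
}
```

For example, calling `ExportAudioAsM4A(firstAsset, UniqueM4AOutputURL(), completion)` with a completion block that checks `error` would save the sound of the first picked video as an .m4a file.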

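```objc
// Requires #import <AVKit/AVKit.h> for AVPlayerViewController
- (void)exportDidFinish:(AVAssetExportSession *)session {
    // status is an NSInteger-based enum, so cast it to match %ld
    NSLog(@"Export status: %ld", (long)session.status);

    if (session.status == AVAssetExportSessionStatusCompleted) {
        // Play the exported file with AVKit's player UI
        AVPlayerViewController *playerController = [[AVPlayerViewController alloc] init];
        playerController.player = [AVPlayer playerWithURL:session.outputURL];
        [self presentViewController:playerController animated:YES completion:^{
            [playerController.player play];
        }];
    } else if (session.status == AVAssetExportSessionStatusFailed) {
        NSLog(@"Export failed: %@", session.error);
    }

    // Reset the selection for the next run
    audioAsset = nil;
    firstAsset = nil;
    secondAsset = nil;
}
```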
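exportDidFinish: logs the raw status number. A small hypothetical helper can make that log readable; the cases below are the values of the `AVAssetExportSessionStatus` enum.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: maps AVAssetExportSessionStatus to a readable string
static NSString *DescribeExportStatus(AVAssetExportSessionStatus status) {
    switch (status) {
        case AVAssetExportSessionStatusUnknown:   return @"unknown";
        case AVAssetExportSessionStatusWaiting:   return @"waiting";
        case AVAssetExportSessionStatusExporting: return @"exporting";
        case AVAssetExportSessionStatusCompleted: return @"completed";
        case AVAssetExportSessionStatusFailed:    return @"failed";
        case AVAssetExportSessionStatusCancelled: return @"cancelled";
    }
    // Values added in future SDKs fall through to the raw number
    return [NSString stringWithFormat:@"status %ld", (long)status];
}
```

In exportDidFinish: the log line could then read `NSLog(@"Export %@, error: %@", DescribeExportStatus(session.status), session.error);`.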

```objc
- (BOOL)startMediaBrowserFromViewController:(UIViewController *)controller usingDelegate:(id)delegate {
    // 1 - Validation
    if (![UIImagePickerController isSourceTypeAvailable:UIImagePickerControllerSourceTypeSavedPhotosAlbum]
        || delegate == nil
        || controller == nil) {
        return NO;
    }

    // 2 - Create an image picker that only lists movies
    UIImagePickerController *mediaUI = [[UIImagePickerController alloc] init];
    mediaUI.sourceType = UIImagePickerControllerSourceTypeSavedPhotosAlbum;
    mediaUI.mediaTypes = @[(NSString *)kUTTypeMovie];
    // YES shows the controls for trimming movies (or moving and scaling
    // pictures); use NO to hide them.
    mediaUI.allowsEditing = YES;
    mediaUI.delegate = delegate;

    // 3 - Display the image picker
    [controller presentViewController:mediaUI animated:YES completion:nil];
    return YES;
}
```

```objc
- (void)imagePickerController:(UIImagePickerController *)picker didFinishPickingMediaWithInfo:(NSDictionary *)info {
    // 1 - Get the media type and the picked file's URL
    NSString *mediaType = info[UIImagePickerControllerMediaType];
    NSURL *mediaURL = info[UIImagePickerControllerMediaURL];

    // 2 - Handle video selection: store the picked video in firstAsset or
    //     secondAsset, depending on which button opened the picker
    NSString *message = nil;
    if ([mediaType isEqualToString:(NSString *)kUTTypeMovie] && mediaURL != nil) {
        if (isSelectingAssetOne) {
            firstAsset = [AVAsset assetWithURL:mediaURL];
            message = @"Video One Loaded";
        } else {
            secondAsset = [AVAsset assetWithURL:mediaURL];
            message = @"Video Two Loaded";
        }
        NSLog(@"%@", message);
    }

    // 3 - Dismiss the picker, then confirm the selection once it is gone
    [picker dismissViewControllerAnimated:NO completion:^{
        if (message == nil) {
            return;
        }
        UIAlertController *alert = [UIAlertController alertControllerWithTitle:@"Asset Loaded"
                                                                       message:message
                                                                preferredStyle:UIAlertControllerStyleAlert];
        [alert addAction:[UIAlertAction actionWithTitle:@"OK" style:UIAlertActionStyleDefault handler:nil]];
        [self presentViewController:alert animated:YES completion:nil];
    }];
}
```
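The picker callback creates assets with `+[AVAsset assetWithURL:]`. Because mergeAndSave: inserts the second clip at `firstAsset.duration`, it may be worth asking AVFoundation for precise timing; Apple's AVFoundation documentation suggests this when an asset will be inserted into an `AVMutableComposition`. A sketch with a hypothetical helper name; for QuickTime and MPEG-4 files the option may make little difference, and for other formats it can make loading slower.

```objc
#import <Foundation/Foundation.h>
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: creates an asset for a picked movie URL and requests
// precise duration and timing, so values such as `duration` are exact.
static AVURLAsset *PreciseAssetWithURL(NSURL *url) {
    NSDictionary<NSString *, id> *options = @{AVURLAssetPreferPreciseDurationAndTimingKey: @YES};
    return [AVURLAsset URLAssetWithURL:url options:options];
}
```

In the delegate callback this would replace the `assetWithURL:` calls, e.g. `firstAsset = PreciseAssetWithURL(mediaURL);`.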