Kinect运用OpenNI产生点云

点云数据是由depthmap得到,利用OpenNI中depthgenerator node获取depth map,再将depth map转为real world的坐标值。

OpenNI的depth map的像素值代表的是实际空间位置到Kinect所在平面的深度值,即到该Kinect平面的距离,单位为mm;

利用DepthGenerator 中GetDepthMap或者getMetaData均可获得深度图像的像素值;GetMetaData函数是将像素值填充到DepthMetaData的对象中,GetDepthMap是直接读取像素值。

XnPoint3D是保持类型为float的x、y、z的结构,为将像素值转为点云,须利用DepthGenerator的ConvertProjectToRealWorld函数:

XnStatus ConvertProjectiveToRealWorld (XnUInt32 nCount, const XnPoint3D aProjective[], XnPoint3D aRealWorld[]) const

其中nCount表示点数数目,aProjective表示投影坐标下所有(x,y,z)的值,aRealWorld表示实际空间坐标值,该坐标系的坐标原点是:depth map的中心投影到Kinect所在平面上的点。x-y平面即为Kinect所在平面:

z轴方向代表了Kinect镜头所对方向。OpenNI获得的深度值即为实际坐标空间的z值,也就是通过ConvertProjectToRealWorld函数是不改变z值得,x,y是depth map的索引位置(x:为column y:为row),须将x,y转为实际坐标空间的x,y值;围观OpenNI的源码,ConvertProjectToRealWorld函数实现是通过单位换算得到的:

- 获得field of view(视角)值:获取horizontal和vertical的的视角值,即Kinect水平和垂直视角;

- 单位换算比例:α表示水平视角角度值 、β表示垂直视角角度值,换算关系RealWorldXtoZ=2tan(α/2) RealWorldYtoZ = 2tan(β/2),即得到了depth map的宽度值w/高度值h与距离d的比值

- 求出实际的x,y值:NormalizedX = x / outputMode.nXRes - 0.5,即深度图像左侧在x轴负方向上,右侧在x轴正方向上;同样NormalizedY = 0.5 - y / outputMode.nYRes,上侧在y轴正方向上,下侧在y轴负方向上

最后x = NormalizedX * Z * RealWorldXtoZ y = NormalizedX * Z * RealWorldXtoZ

#include <XnCppWrapper.h>

#include <stdio.h>

using namespace xn;

/**

* use 1.create object 2.set output mode 3.initilization 4.update and processing

* ex.. 1.DepthmapPointCloud cloud; 2.cloud.setOutputMode(getDefaultOutputMode()); 3.cloud.init(); 4. ... ;

*/

class DepthmapPointCloud

{

public:

DepthmapPointCloud(Context &contex, DepthGenerator &depthGenerator)

: m_hContex(contex), m_hDepthGenerator(depthGenerator), m_nStatus(XN_STATUS_OK),

m_pProjecttiveCloud(0), m_pRealworldCloud(0) {}

~DepthmapPointCloud()

{

stop();

}

void init();

void stop();

void setOutputMode(const XnMapOutputMode &outputMode);

void updataPointCloud(); // updata the point cloud datas

inline XnPoint3D* getPointCloudData()

{

return m_pRealworldCloud;

}

inline const XnUInt32 getPointCloudNum() const

{

return m_nOutputMode.nXRes*m_nOutputMode.nYRes;

}

inline const XnMapOutputMode& getXYRes() const

{

return m_nOutputMode;

}

inline const XnMapOutputMode getDefaultOutputMode()

{

// set the depth map output mode

XnMapOutputMode outputMode = {XN_VGA_X_RES, XN_VGA_Y_RES, 30};

return outputMode;

}

/**

* test: return projective point cloud data

*/

inline XnPoint3D* getRawPointData()

{

return m_pProjecttiveCloud;

}

/**

* test: get field of view

*/

inline void getFieldofView(XnFieldOfView &FOV)const

{

m_hDepthGenerator.GetFieldOfView(FOV);

}

private:

Context &m_hContex;

DepthGenerator &m_hDepthGenerator;

XnStatus m_nStatus;

XnMapOutputMode m_nOutputMode;

XnPoint3D *m_pProjecttiveCloud;

XnPoint3D *m_pRealworldCloud;

inline void printError()

{

if (m_nStatus != XN_STATUS_OK)

{

printf("Error: %s", xnGetStatusString(m_nStatus));

exit(-1);

}

}

DepthmapPointCloud(const DepthmapPointCloud &rhs); // don't define copy constructor

DepthmapPointCloud& operator=(const DepthmapPointCloud &rhs); // don't define assignment operator

};

void DepthmapPointCloud::setOutputMode(const XnMapOutputMode &outputMode)

{

m_nOutputMode.nFPS = outputMode.nFPS;

m_nOutputMode.nXRes= outputMode.nXRes;

m_nOutputMode.nYRes = outputMode.nYRes;

}

void DepthmapPointCloud::init()

{

m_nStatus = m_hContex.Init();

printError();

m_nStatus = m_hDepthGenerator.Create(m_hContex);

printError();

// set output mode

m_nStatus = m_hDepthGenerator.SetMapOutputMode(m_nOutputMode);

printError();

// start generating

m_nStatus = m_hContex.StartGeneratingAll();

printError();

// allocate memory to projective point cloud and real world point cloud

m_pProjecttiveCloud = new XnPoint3D[getPointCloudNum()];

m_pRealworldCloud = new XnPoint3D[getPointCloudNum()];

}

void DepthmapPointCloud::stop()

{

m_hContex.Release();

delete [] m_pProjecttiveCloud;

delete [] m_pRealworldCloud;

}

void DepthmapPointCloud::updataPointCloud()

{

try

{

// update to next frame data

m_nStatus = m_hContex.WaitOneUpdateAll(m_hDepthGenerator);

// printf("DataSize: %d\n", m_hDepthGenerator.GetDataSize()); // test

// store depthmap data to projective cloudpoint data

XnUInt32 index, shiftStep;

for (XnUInt32 row=0; row<m_nOutputMode.nYRes; ++row)

{

shiftStep = row*m_nOutputMode.nXRes;

for (XnUInt32 column=0; column<m_nOutputMode.nXRes; ++column)

{

index = shiftStep + column;

m_pProjecttiveCloud[index].X = (XnFloat)column;

m_pProjecttiveCloud[index].Y = (XnFloat)row;

m_pProjecttiveCloud[index].Z = m_hDepthGenerator.GetDepthMap()[index]*0.001f; // mm -> m

}

}

if (m_nStatus != XN_STATUS_OK) throw m_nStatus;

// convert projective pointcloud to real world pointcloud

m_hDepthGenerator.ConvertProjectiveToRealWorld(m_nOutputMode.nXRes*m_nOutputMode.nYRes, m_pProjecttiveCloud, m_pRealworldCloud);

}

catch (...)

{

printError();

stop();

}

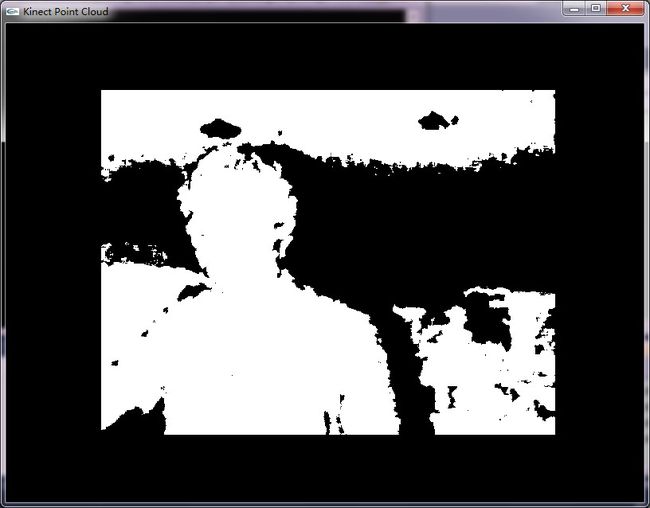

} 最后看看OpenGL下点云显示效果:..(*^__^*) ..

现在数据还是原始数据,比较粗糙,准备继续研究怎么将数据过滤平滑,让显示更好,呵呵,本身要做的事确是怎么识别手,跟踪手势,当然这是我最终目标了,

搞成点成果,后面继续努力吧...![]()

附件下载