OpenCV image affine transforms: computing the new coordinates of feature points after rotation, scaling, and translation

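We often need to apply an affine transform to an image. Afterwards, the coordinates of feature points in the original image usually have to be recomputed. For example, we may want the new position of the eye pupils so that the pupil coordinates can still be used in the transformed image.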

Affine transforms themselves are explained very clearly in this blog post: http://blog.csdn.net/xiaowei_cqu/article/details/7616044. Please refer to it for a detailed introduction.

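Here I mainly show how to compute the coordinates of feature points after an affine transform when their coordinates in the original image are already known, and then add a few supplementary notes.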

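** The original post uses cvXXX functions in many places, for example cv2DRotationMatrix( center, degree,1, &M);. These belong to the old API. From OpenCV 2 onward the newer functions should be preferred, so my code uses the newer functions and the Mat type throughout instead of CvMat. **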

1. Computing the new coordinates of a feature point

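Suppose we already have the coordinates of a feature point in the original image, CvPoint point;. Its position in the new image after the affine transform can be computed with the following function: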

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint &center, double angle)

{

CvPoint dst;

int x = src.x - center.x;

int y = src.y - center.y;

dst.x = cvRound(x * cos(angle) + y * sin(angle) + center.x);

dst.y = cvRound(-x * sin(angle) + y * cos(angle) + center.y);

return dst;

}

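Note in particular that, when computing the transformed coordinates of a pixel from the original image, you must follow the basic principle of the affine transform: first subtract the rotation center from the original coordinates, apply the rotation, and then add the rotation center back. Only then do you get the correct coordinates of the point in the transformed image.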
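The subtract, rotate, add-back rule can be checked against the matrix that getRotationMatrix2D returns. The symbols below are notation introduced here for illustration: $(c_x, c_y)$ is the rotation center, $\theta$ the angle, $s$ the scale, $\alpha = s\cos\theta$ and $\beta = s\sin\theta$. According to the OpenCV documentation the matrix is

$$
M = \begin{bmatrix} \alpha & \beta & (1-\alpha)\,c_x - \beta\,c_y \\ -\beta & \alpha & \beta\,c_x + (1-\alpha)\,c_y \end{bmatrix}
$$

Applying $M$ to $(x, y, 1)^T$ and regrouping the terms gives

$$
\begin{aligned}
x' &= \alpha x + \beta y + (1-\alpha)\,c_x - \beta\,c_y = \alpha\,(x - c_x) + \beta\,(y - c_y) + c_x \\
y' &= -\beta x + \alpha y + \beta\,c_x + (1-\alpha)\,c_y = -\beta\,(x - c_x) + \alpha\,(y - c_y) + c_y
\end{aligned}
$$

With $s = 1$ these are the two assignments in getPointAffinedPos. Note that getRotationMatrix2D takes the angle in degrees, while cos and sin expect radians, which is why the function should be called with angle * CV_PI / 180.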

The following sample code computes the positions of the pupil coordinates after rotation:

// AffineTransformation.cpp : Defines the entry point for the console application.

//

#include "stdafx.h"

#include "stdio.h"

#include "iostream"

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint &center, double angle);

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle);

Mat ImageRotate2NewSize(Mat& src, const CvPoint &_center, double angle);

int _tmain(int argc, _TCHAR* argv[])

{

string image_path = "D:/lena.jpg";

Mat img = imread(image_path);

cvtColor(img, img, CV_BGR2GRAY);

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

//

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // degrees

Mat dst = ImageRotate(img, center, angle);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

return 0;

}

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle)

{

CvPoint2D32f center;

center.x = float(_center.x);

center.y = float(_center.y);

// Compute the 2D rotation affine matrix

Mat M = getRotationMatrix2D(center, angle, 1);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(src.cols, src.rows), CV_INTER_LINEAR);

return dst;

}

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint &center, double angle)

{

CvPoint dst;

int x = src.x - center.x;

int y = src.y - center.y;

dst.x = cvRound(x * cos(angle) + y * sin(angle) + center.x);

dst.y = cvRound(-x * sin(angle) + y * cos(angle) + center.y);

return dst;

}

Here we first locate the pupil coordinates by hand, then compute the pupil coordinates after the image has been rotated.

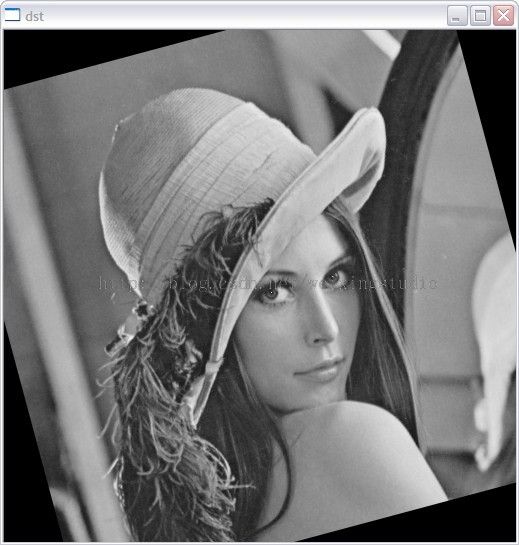

The results are shown in the figures:

Original image:

Image after rotation:

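2. Effect of the rotation center on the rotation

Next, let us look at how the choice of rotation center affects the rotated image. Normally we choose the image center as the rotation center of the affine transform, and the rotated image has the same size as the original.

Code: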

int _tmain(int argc, _TCHAR* argv[])

{

string image_path = "D:/lena.jpg";

Mat img = imread(image_path);

cvtColor(img, img, CV_BGR2GRAY);

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

//

/*CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;*/

CvPoint center;

center.x = 0;

center.y = 0;

double angle = 15.0; // degrees

Mat dst = ImageRotate(img, center, angle);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

return 0;

}
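Because the mapped coordinates depend on the rotation center, a point that was inside the original image can land outside the output image, and writing to it with dst.at would then be out of bounds. The sketch below is one way to guard against that, using only the standard library. PixelPos, rotateAbout and insideImage are hypothetical names introduced here, not OpenCV types or functions, and std::lround stands in for cvRound (the two may differ on exact half values).

```cpp
#include <cmath>

// Hypothetical helper type for illustration; not an OpenCV type.
struct PixelPos {
    int x;
    int y;
};

// Same mapping as getPointAffinedPos: rotate p about center by angleRad (radians).
PixelPos rotateAbout(PixelPos p, PixelPos center, double angleRad) {
    const double x = p.x - center.x;
    const double y = p.y - center.y;
    PixelPos out;
    out.x = static_cast<int>(std::lround(x * std::cos(angleRad) + y * std::sin(angleRad) + center.x));
    out.y = static_cast<int>(std::lround(-x * std::sin(angleRad) + y * std::cos(angleRad) + center.y));
    return out;
}

// True when p is a valid pixel of an image with the given number of columns and rows.
bool insideImage(PixelPos p, int cols, int rows) {
    return p.x >= 0 && p.x < cols && p.y >= 0 && p.y < rows;
}
```

In the listings of this post, a check such as insideImage(l2, dst.cols, dst.rows) before each dst.at write would skip points that were rotated out of the frame.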

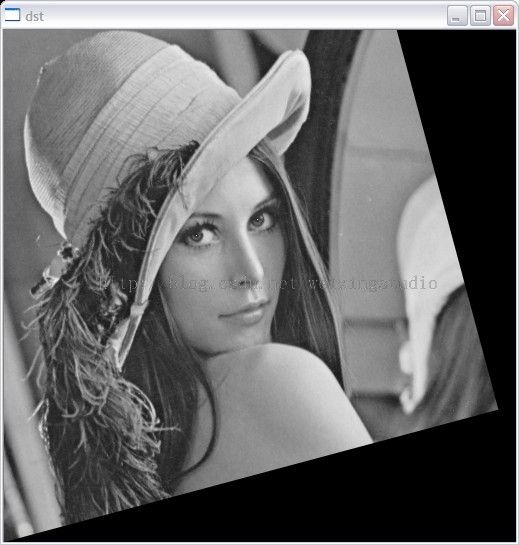

Here the image is rotated about the point (0,0). Image after rotation:

Rotating about the lower-left corner:

CvPoint center; center.x = 0; center.y = img.rows;

Image after rotation:

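3. Effect of the scale factor on the rotated image

None of the code above includes scaling. We now modify it slightly to add a scale parameter and look at how to compute the corresponding new coordinates.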

#include "stdafx.h"

#include "stdio.h"

#include "iostream"

#include "opencv2/opencv.hpp"

using namespace std;

using namespace cv;

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint &center, double angle, double scale);

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle, double scale);

Mat ImageRotate2NewSize(Mat& src, const CvPoint &_center, double angle, double scale);

int _tmain(int argc, _TCHAR* argv[])

{

string image_path = "D:/lena.jpg";

Mat img = imread(image_path);

cvtColor(img, img, CV_BGR2GRAY);

double scale = 0.5;

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

//

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // degrees

Mat dst = ImageRotate(img, center, angle, scale);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(Leye, center, angle * CV_PI / 180, scale);

CvPoint r2 = getPointAffinedPos(Reye, center, angle * CV_PI / 180, scale);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

//Mat dst = ImageRotate2NewSize(img, center, angle);

imshow("dst", dst);

waitKey(0);

return 0;

}

Mat ImageRotate(Mat & src, const CvPoint &_center, double angle, double scale)

{

CvPoint2D32f center;

center.x = float(_center.x);

center.y = float(_center.y);

// Compute the 2D rotation affine matrix

Mat M = getRotationMatrix2D(center, angle, scale);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(src.cols, src.rows), CV_INTER_LINEAR);

return dst;

}

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(const CvPoint &src, const CvPoint &center, double angle, double scale)

{

CvPoint dst;

int x = src.x - center.x;

int y = src.y - center.y;

dst.x = cvRound(x * cos(angle) * scale + y * sin(angle) * scale + center.x);

dst.y = cvRound(-x * sin(angle) * scale + y * cos(angle) * scale + center.y);

return dst;

}

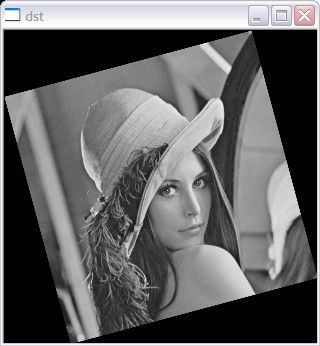

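With a scale factor of 0.5, the program produces the result shown in the figure:

4. Computing a new, suitably sized output image from the rotation angle and scale

In all the computations above, the image produced by the affine transform has the same size as the original. Since rotation and scaling can make the content larger or smaller, we modify the code once more so that the size of the output image is recomputed before the affine transform is applied.

The size is computed as follows: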

double angle2 = angle * CV_PI / 180;

int width = src.cols;

int height = src.rows;

double alpha = cos(angle2) * scale;

double beta = sin(angle2) * scale;

int new_width = (int)(width * fabs(alpha) + height * fabs(beta));

int new_height = (int)(width * fabs(beta) + height * fabs(alpha));
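The size formula is the bounding box of the scaled and rotated rectangle: each side of the original image contributes its projection onto the new horizontal and vertical axes. As a rough sketch, the same computation can be wrapped in a function that needs only the standard library. ImageSize and rotatedBounds are hypothetical names used for illustration.

```cpp
#include <cmath>

// Hypothetical helper type for illustration; not an OpenCV type.
struct ImageSize {
    int width;
    int height;
};

// Size of the axis-aligned box that contains a width x height image
// after rotating it by angleDeg (degrees) and scaling it by scale.
ImageSize rotatedBounds(int width, int height, double angleDeg, double scale) {
    const double pi = std::acos(-1.0);
    const double rad = angleDeg * pi / 180.0;
    const double alpha = std::cos(rad) * scale;
    const double beta = std::sin(rad) * scale;
    ImageSize out;
    // Truncation toward zero, matching the (int) casts in the text.
    out.width = static_cast<int>(width * std::fabs(alpha) + height * std::fabs(beta));
    out.height = static_cast<int>(width * std::fabs(beta) + height * std::fabs(alpha));
    return out;
}
```

For instance, a 512 x 512 input (an assumed size, chosen only for the arithmetic) with a 15 degree angle and a scale of 0.5 gives 512 * (0.48296 + 0.12941) = 313.5, truncated to 313 in each dimension.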

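In addition, because the rotation is performed about the center of the original image, once we have the affine matrix we need to add a translation to it based on the size of the newly generated image.

Put another way, the translation makes the original image rotate about the center of the new image.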

// Compute the 2D rotation affine matrix

Mat M = getRotationMatrix2D(center, angle, scale);

// Add the translation to the computed rotation matrix

M.at<double>(0, 2) += (int)((new_width - width )/2);

M.at<double>(1, 2) += (int)((new_height - height )/2);

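Also note that if you have feature point coordinates in the original image, mapping them onto the new image requires adding the same translation on top of the earlier method.

// Get the new coordinates of a given pixel after the affine transform (angle in radians)

CvPoint getPointAffinedPos(Mat & src, Mat & dst, const CvPoint &src_p, const CvPoint &center, double angle, double scale)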

{

double alpha = cos(angle) * scale;

double beta = sin(angle) * scale;

int width = src.cols;

int height = src.rows;

CvPoint dst_p;

int x = src_p.x - center.x;

int y = src_p.y - center.y;

dst_p.x = cvRound(x * alpha + y * beta + center.x);

dst_p.y = cvRound(-x * beta + y * alpha + center.y);

int new_width = dst.cols;

int new_height = dst.rows;

int movx = (int)((new_width - width)/2);

int movy = (int)((new_height - height)/2);

dst_p.x += movx;

dst_p.y += movy;

return dst_p;

}
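An alternative to repeating the rotation and the shift by hand is to apply the final 2x3 matrix directly to the feature point, so that pixels and points always go through the same transform. The sketch below illustrates this with the standard library only. Affine2x3, PointD, rotationMatrix, shifted and applyAffine are hypothetical names, and rotationMatrix reproduces the matrix layout that the OpenCV documentation gives for getRotationMatrix2D.

```cpp
#include <cmath>

// Hypothetical helper types for illustration; not OpenCV types.
struct Affine2x3 {
    double m[2][3];
};

struct PointD {
    double x;
    double y;
};

// Matrix layout documented for getRotationMatrix2D (angle in degrees).
Affine2x3 rotationMatrix(PointD center, double angleDeg, double scale) {
    const double pi = std::acos(-1.0);
    const double alpha = scale * std::cos(angleDeg * pi / 180.0);
    const double beta = scale * std::sin(angleDeg * pi / 180.0);
    Affine2x3 M = {{{alpha, beta, (1.0 - alpha) * center.x - beta * center.y},
                    {-beta, alpha, beta * center.x + (1.0 - alpha) * center.y}}};
    return M;
}

// Same effect as M.at<double>(0, 2) += tx; M.at<double>(1, 2) += ty;
Affine2x3 shifted(Affine2x3 M, double tx, double ty) {
    M.m[0][2] += tx;
    M.m[1][2] += ty;
    return M;
}

// Map a point through the 2x3 matrix: [x' y']^T = M * [x y 1]^T.
PointD applyAffine(const Affine2x3& M, PointD p) {
    PointD out;
    out.x = M.m[0][0] * p.x + M.m[0][1] * p.y + M.m[0][2];
    out.y = M.m[1][0] * p.x + M.m[1][1] * p.y + M.m[1][2];
    return out;
}
```

With this approach the feature point function no longer needs the source and destination images: it only needs the matrix that was passed to warpAffine. The result still has to be rounded before it is used as a pixel index.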

The code of our affine transform function:

Mat ImageRotate2NewSize(Mat& src, const CvPoint &_center, double angle, double scale)

{

double angle2 = angle * CV_PI / 180;

int width = src.cols;

int height = src.rows;

double alpha = cos(angle2) * scale;

double beta = sin(angle2) * scale;

int new_width = (int)(width * fabs(alpha) + height * fabs(beta));

int new_height = (int)(width * fabs(beta) + height * fabs(alpha));

CvPoint2D32f center;

center.x = float(width / 2);

center.y = float(height / 2);

// Compute the 2D rotation affine matrix

Mat M = getRotationMatrix2D(center, angle, scale);

// Add the translation to the computed rotation matrix

M.at<double>(0, 2) += (int)((new_width - width )/2);

M.at<double>(1, 2) += (int)((new_height - height )/2);

// rotate

Mat dst;

warpAffine(src, dst, M, cvSize(new_width, new_height), CV_INTER_LINEAR);

return dst;

}

Main function:

int _tmain(int argc, _TCHAR* argv[])

{

string image_path = "D:/lena.jpg";

Mat img = imread(image_path);

cvtColor(img, img, CV_BGR2GRAY);

double scale = 0.5;

Mat src;

img.copyTo(src);

CvPoint Leye;

Leye.x = 265;

Leye.y = 265;

CvPoint Reye;

Reye.x = 328;

Reye.y = 265;

// draw pupil

src.at<unsigned char>(Leye.y, Leye.x) = 255;

src.at<unsigned char>(Reye.y, Reye.x) = 255;

imshow("src", src);

//

CvPoint center;

center.x = img.cols / 2;

center.y = img.rows / 2;

double angle = 15.0; // degrees

//Mat dst = ImageRotate(img, center, angle, scale);

Mat dst = ImageRotate2NewSize(img, center, angle, scale);

// Compute the coordinates of the original feature points in the rotated image

CvPoint l2 = getPointAffinedPos(src, dst, Leye, center, angle * CV_PI / 180, scale);

CvPoint r2 = getPointAffinedPos(src, dst, Reye, center, angle * CV_PI / 180, scale);

// draw pupil

dst.at<unsigned char>(l2.y, l2.x) = 255;

dst.at<unsigned char>(r2.y, r2.x) = 255;

imshow("dst", dst);

waitKey(0);

return 0;

}

Result of the affine transform together with the recomputed pupil coordinates:

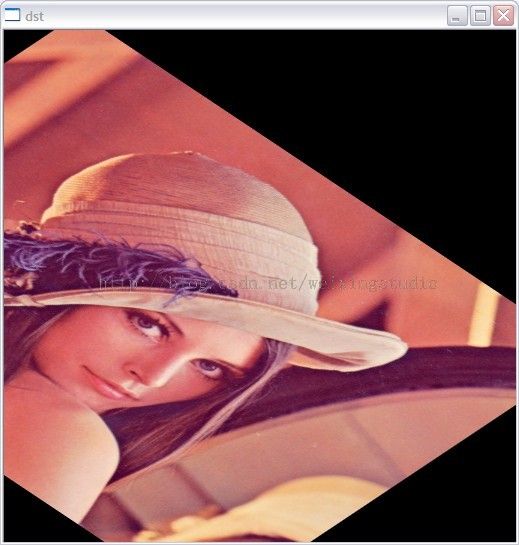

5. Affine transform from three points
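Three non-collinear point pairs determine the six entries of a 2x3 affine matrix: each output coordinate is a linear function a*x + b*y + c of the input point, and three pairs give three equations for each row. The sketch below solves those equations with Cramer's rule using only the standard library, as an illustration of what a function like getAffineTransform has to compute. It is not OpenCV's implementation, and Point2D, AffineMatrix and affineFromThreePoints are hypothetical names.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>

// Hypothetical helper types for illustration; not OpenCV types.
struct Point2D {
    double x;
    double y;
};

using AffineMatrix = std::array<std::array<double, 3>, 2>;

// Solve for the 2x3 matrix that maps s[i] to d[i] for i = 0, 1, 2.
AffineMatrix affineFromThreePoints(const std::array<Point2D, 3>& s,
                                   const std::array<Point2D, 3>& d) {
    // Determinant of the 3x3 system matrix with rows [x_i, y_i, 1].
    const double det = s[0].x * (s[1].y - s[2].y) - s[0].y * (s[1].x - s[2].x) +
                       (s[1].x * s[2].y - s[2].x * s[1].y);
    if (std::fabs(det) < 1e-12) {
        throw std::invalid_argument("source points are collinear");
    }
    // Cramer's rule for one row (a, b, c) with a*x_i + b*y_i + c = u_i.
    auto solveRow = [&](double u0, double u1, double u2) {
        std::array<double, 3> row;
        row[0] = (u0 * (s[1].y - s[2].y) - s[0].y * (u1 - u2) +
                  (u1 * s[2].y - u2 * s[1].y)) / det;
        row[1] = (s[0].x * (u1 - u2) - u0 * (s[1].x - s[2].x) +
                  (s[1].x * u2 - s[2].x * u1)) / det;
        row[2] = (s[0].x * (s[1].y * u2 - s[2].y * u1) -
                  s[0].y * (s[1].x * u2 - s[2].x * u1) +
                  u0 * (s[1].x * s[2].y - s[2].x * s[1].y)) / det;
        return row;
    };
    AffineMatrix M;
    M[0] = solveRow(d[0].x, d[1].x, d[2].x);
    M[1] = solveRow(d[0].y, d[1].y, d[2].y);
    return M;
}
```

For the point pairs used in the listing that follows, solving by hand gives a first row of (1, -0.75, 75) and a second row of (2/3, 0.5, -50/3).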

int _tmain(int argc, _TCHAR* argv[])

{

string image_path = "D:/lena.jpg";

Mat img = imread(image_path);

Point2f src_points[3];

src_points[0] = Point2f(100, 100);

src_points[1] = Point2f(400, 100);

src_points[2] = Point2f(250, 300);

Point2f dst_points[3];

dst_points[0] = Point2f(100, 100);

dst_points[1] = Point2f(400, 300);

dst_points[2] = Point2f(100, 300);

Mat M1 = getAffineTransform(src_points, dst_points);

Mat dst;

warpAffine(img, dst, M1, cvSize(img.cols, img.rows), INTER_LINEAR);

imshow("dst", dst);

//cvtColor(img, img, CV_BGR2GRAY);

//double scale = 1.5;

//Mat src;

//img.copyTo(src);

//CvPoint Leye;

//Leye.x = 265;

//Leye.y = 265;

//CvPoint Reye;

//Reye.x = 328;

//Reye.y = 265;

//// draw pupil

//src.at<unsigned char>(Leye.y, Leye.x) = 255;

//src.at<unsigned char>(Reye.y, Reye.x) = 255;

//imshow("src", src);

////

//CvPoint center;

//center.x = img.cols / 2;

//center.y = img.rows / 2;

//double angle = 15L;

////Mat dst = ImageRotate(img, center, angle, scale);

//Mat dst = ImageRotate2NewSize(img, center, angle, scale);

//// Compute the coordinates of the original feature points in the rotated image

//CvPoint l2 = getPointAffinedPos(src, dst, Leye, center, angle * CV_PI / 180, scale);

//CvPoint r2 = getPointAffinedPos(src, dst, Reye, center, angle * CV_PI / 180, scale);

//// draw pupil

//dst.at<unsigned char>(l2.y, l2.x) = 255;

//dst.at<unsigned char>(r2.y, r2.x) = 255;

//imshow("dst", dst);

waitKey(0);

return 0;

}

Result: