scikit-learn(工程中用的相对较多的模型介绍):1.11. Ensemble methods

参考:http://scikit-learn.org/stable/modules/ensemble.html

在实际项目中,我们真的很少用到那些简单的模型,比如LR、kNN、NB等,虽然经典,但在工程中确实不实用。

今天我们关注在工程中用的相对较多的Ensemble methods。

Ensemble methods(集成方法)主要是综合多个estimators加权或不加权的投票结果来产生最终结果。主要有两大类:

-

In averaging methods(平均方法), the driving principle is to build several estimatorsindependently and then toaverage their predictions. On average, the combined estimator is usually better than any of the single base estimator because its variance is reduced.

Examples: Bagging methods, Forests of randomized trees, ...

-

By contrast, in boosting methods(提升方法), base estimators are builtsequentially and one tries to reduce the bias of the combined estimator(the former estimator). The motivation is to combine several weak models to produce a powerful ensemble.

Examples: AdaBoost, Gradient Tree Boosting, ...

接下来主要讲:

1、Bagging meta-estimator

注意bagging和boosting的区别:bagging methods work best with strong and complex models (e.g., fully developed decision trees), in contrast with boosting methods which usually work best with weak models (e.g., shallow decision trees).

不同bagging方法的区别:产生random subsets的方式,有些是随机子样本集,有些是随机子features集,有些是随机子样本/features集,还有一些是有放回的抽样(samples、features可重复)。

scikit-learn提供了a unified BaggingClassifier meta-estimator (resp. BaggingRegressor),同时由参数max_samples和max_features决定子集大小、由bootstrap和bootstrap_features决定子集产生过程是否有替换。小例子:

- Single estimator versus bagging: bias-variance decomposition

2、Forests of ranomized trees

两种算法:RandomForest algorithm and the Extra-Trees method。最终结果是average prediction of the individual classifiers。给个简单例子:

Like decision trees, forests of trees also extend to multi-output problems (if Y is an array of size [n_samples, n_outputs]).

RandomForest algorithm :

有两个class,分别处理分类和回归,RandomForestClassifier and RandomForestRegressor classes。样本提取时允许replacement(a bootstrap sample),在随机选取的部分(而不是全部的)features上进行划分,与原论文的vote方法不同,scikit-learn通过平均每个分类器的预测概率(averaging their probabilistic prediction)来生成最终结果。

Extremely Randomized Trees :

有两个class,分别处理分类和回归, ExtraTreesClassifier and ExtraTreesRegressor classes。默认使用所有样本,但划分时features随机选取部分。

给个比较例子:

几点说明:

1)参数:最主要的调节参数是 n_estimators and max_features ,经验最好数据是,回归问题设置 max_features=n_features ,分类问题设置max_features=sqrt(n_features)(n_features是数据集的features个数).; 设置max_depth=None 并且结合min_samples_split=1 (i.e., when fully developing the trees)经常导致好的结果;但切记,最好的参数还是通过CV调出来的。

2)默认机制:random forests, bootstrap samples are used by default (bootstrap=True) while the default strategy for extra-trees is to use the whole dataset (bootstrap=False).

3)并行:设置n_jobs=k 保证使用机器的k个cores;设置 n_jobs=-1 使用所有可用的cores。

4)特征重要性评估:一个决策树,节点在越高的分支,相应的特征对最终预测结果的contribute越大。这里的大,是指影响输入数据集的比例比较大(the fraction of the input samples is large)。所以,对于某一个randomized tree,可以通过 The expected fraction of the samples they contribute to can thus be used as an estimate of the relative importance of the features.,然后对于 n_estimators 个randomized tree,通过averaging those expected activity rates over several randomized trees,达到区分特征重要性、特征选择的目的。但上面的叙述没什么X用,属性 feature_importances_ 已经保留了该重要性记录。。。。

最后还是几个例子:

- Plot the decision surfaces of ensembles of trees on the iris dataset

- Pixel importances with a parallel forest of trees

- Face completion with a multi-output estimators

- Pixel importances with a parallel forest of trees

- Feature importances with forests of trees

3、AdaBoost

重要的还是不翻译:The core principle of AdaBoost is to fit a sequence of weak learners (i.e., models that are only slightly better than random guessing, such as small decision trees) on repeatedly modified versions of the data. The predictions from all of them are then combined through a weighted majority vote (or sum) to produce the final prediction. The data modifications at each so-called boosting iteration consist of applying weights ![]() ,

, ![]() , ...,

, ..., ![]() to each of the training samples. Initially, those weights are all set to

to each of the training samples. Initially, those weights are all set to ![]() , so that the first step simply trains a weak learner on the original data. For each successive iteration, the sample weights are individually modified and the learning algorithm is reapplied to the reweighted data. At a given step, those training examples that were incorrectly predicted by the boosted model induced at the previous step have their weights increased, whereas the weights are decreased for those that were predicted correctly. As iterations proceed, examples that are difficult to predict receive ever-increasing influence. Each subsequent weak learner is thereby forced to concentrate on the examples that are missed by the previous ones in the sequence [HTF].

, so that the first step simply trains a weak learner on the original data. For each successive iteration, the sample weights are individually modified and the learning algorithm is reapplied to the reweighted data. At a given step, those training examples that were incorrectly predicted by the boosted model induced at the previous step have their weights increased, whereas the weights are decreased for those that were predicted correctly. As iterations proceed, examples that are difficult to predict receive ever-increasing influence. Each subsequent weak learner is thereby forced to concentrate on the examples that are missed by the previous ones in the sequence [HTF].

给个小例子:

AdaBoost同样是分类回归双可用:

- Discrete versus Real AdaBoost compares the classification error of a decision stump, decision tree, and a boosted decision stump using AdaBoost-SAMME and AdaBoost-SAMME.R.

- Multi-class AdaBoosted Decision Trees shows the performance of AdaBoost-SAMME and AdaBoost-SAMME.R on a multi-class problem.

- Two-class AdaBoost shows the decision boundary and decision function values for a non-linearly separable two-class problem using AdaBoost-SAMME.

- Decision Tree Regression with AdaBoost demonstrates regression with the AdaBoost.R2 algorithm.

4、Gradient Tree Boosting

Gradient Tree Boosting or Gradient Boosted Regression Trees (GBRT) is an accurate and effective off-the-shelf procedure that can be used for both regression and classification problems.

优点:

- Natural handling of data of mixed type (= heterogeneous features)

- Predictive power

- Robustness to outliers in output space (via robust loss functions)

1)分类:

GradientBoostingClassifier supports both binary and multi-class classification.

Note

Classification with more than 2 classes requires the induction of n_classes regression trees at each iteration, thus, the total number of induced trees equals n_classes * n_estimators. For datasets with a large number of classes we strongly recommend to use RandomForestClassifier as an alternative to GradientBoostingClassifier .

2)回归:

GradientBoostingRegressor supports a number of different loss functions for regression which can be specified via the argument loss; the default loss function for regression is least squares ('ls',least squares loss).

- Gradient Boosting regression

- Gradient Boosting Out-of-Bag estimates

3)在原模型的基础上再增加一些weak-learners:

Both GradientBoostingRegressor and GradientBoostingClassifier support warm_start=True which allows you to add more estimators to an already fitted model.

4)解释模型效果:

由于有多棵树组成,不能像单独的决策树一样画树结构,但是还有有些办法来summarize and interpret gradient boosting models.

features importance,The feature importance scores of a fit gradient boosting model can be accessed via the feature_importances_ property:

- Gradient Boosting regression

partial dependence,Partial dependence plots (PDP) show the dependence between the target response and a set of ‘target’ features。

下面重要的翻译:

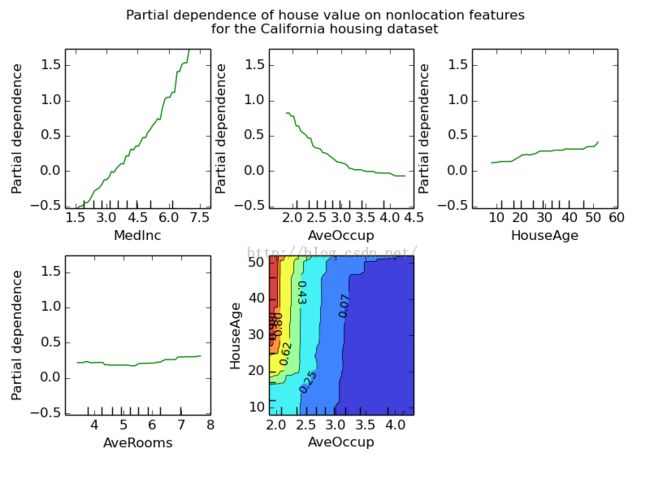

The Figure below shows four one-way and one two-way partial dependence plots for the California housing dataset:

One-way PDPs tell us about the interaction between the target response and the target feature (e.g. linear, non-linear). The upper left plot in the above Figure shows the effect of the median income in a district on the median house price; we can clearly see a linear relationship among them.

PDPs with two target features show the interactions among the two features. For example, the two-variable PDP in the above Figure shows the dependence of median house price on joint values of house age and avg. occupants per household. We can clearly see an interaction between the two features: For an avg. occupancy greater than two, the house price is nearly independent of the house age, whereas for values less than two there is a strong dependence on age.

The module partial_dependence provides a convenience function plot_partial_dependence to create one-way and two-way partial dependence plots. In the below example we show how to create a grid of partial dependence plots: two one-way PDPs for the features 0 and 1 and a two-way PDP between the two features:

For multi-class models, you need to set the class label for which the PDPs should be created via the label argument:

If you need the raw values of the partial dependence function rather than the plots you can use the partial_dependence function:

- Partial Dependence Plots

最后推荐大家看一下。。。。XgBoost:http://blog.csdn.net/mmc2015/article/details/47304779

xgboost简介:(摘自其他)xgboost的全称是eXtreme Gradient Boosting。正如其名,它是Gradient Boosting Machine的一个c++实现,作者为正在华盛顿大学研究机器学习的大牛陈天奇。他在研究中深感自己受制于现有库的计算速度和精度,因此在一年前开始着手搭建xgboost项目,并在去年夏天逐渐成型。xgboost最大的特点在于,它能够自动利用CPU的多线程进行并行,同时在算法上加以改进提高了精度。它的处女秀是Kaggle的希格斯子信号识别竞赛,因为出众的效率与较高的预测准确度在比赛论坛中引起了参赛选手的广泛关注,在1700多支队伍的激烈竞争中占有一席之地。随着它在Kaggle社区知名度的提高,最近也有队伍借助xgboost在比赛中夺得第一。