struct nf_conn {

/* Usage count in here is 1 for hash table/destruct timer, 1 per skb, plus 1 for any connection(s) we are `master' for */

struct nf_conntrack ct_general; /* reference count of this tracked connection */

spinlock_t lock;

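/* Connection tracking (conntrack) tracks and records information about each connection

(currently only connections over IP are supported). Each connection is uniquely identified

by a "tuple", and what the tuple contains depends on the protocol. For TCP and UDP it is the

5-tuple (source IP, source port, destination IP, destination port, protocol number); for ICMP

it is (source IP, destination IP, id, type, code), where id, type and code are fields of the

ICMP message. Connection tracking is the basis of stateful inspection in the firewall, and many

features, such as NAT and fast forwarding, depend on it. */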

struct nf_conntrack_tuple_hash tuplehash[IP_CT_DIR_MAX];

unsigned long status; /* bit flags taken from enum ip_conntrack_status */

struct nf_conn *master; /* if this connection is a child of another connection, master points to its parent */

/* Timer function; drops refcnt when it goes off. */

struct timer_list timeout;

union nf_conntrack_proto proto; /* private data of the individual protocols */

/* Extensions */

struct nf_ct_ext *ext; /* extension area */

};

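This structure is quite simple. Its most important members are tuplehash (which tracks the packets of both directions of a connection) and status (which records the connection state); these are also the main functions of connection tracking.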
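To make the role of tuplehash and status more concrete, here is a minimal user-space sketch. It is not the kernel's layout: the `demo_*` types, the `DEMO_*` flag values and the helper functions are all hypothetical stand-ins invented for illustration. It only shows the idea that one connection holds an original and a reply tuple, and that source NAT changes the destination of the reply tuple and sets a status bit.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified stand-ins for the kernel types (not the real layout). */
enum demo_dir { DEMO_DIR_ORIGINAL = 0, DEMO_DIR_REPLY = 1, DEMO_DIR_MAX = 2 };

enum demo_status {
    DEMO_SRC_NAT  = 1u << 0,                    /* illustrative bit values only */
    DEMO_DST_NAT  = 1u << 1,
    DEMO_NAT_MASK = DEMO_SRC_NAT | DEMO_DST_NAT
};

struct demo_tuple {
    uint32_t src_ip, dst_ip;      /* host byte order, to keep the demo simple */
    uint16_t src_port, dst_port;
    uint8_t  protonum;
};

struct demo_conn {
    struct demo_tuple tuplehash[DEMO_DIR_MAX];  /* one tuple per direction */
    unsigned long status;
};

/* Build the reply tuple by swapping the endpoints of the original tuple. */
static struct demo_tuple demo_invert(const struct demo_tuple *t)
{
    struct demo_tuple r;

    r.src_ip   = t->dst_ip;
    r.dst_ip   = t->src_ip;
    r.src_port = t->dst_port;
    r.dst_port = t->src_port;
    r.protonum = t->protonum;
    return r;
}

/* Create a connection entry from the tuple of the first packet. */
static struct demo_conn demo_conn_new(struct demo_tuple orig)
{
    struct demo_conn c;

    c.tuplehash[DEMO_DIR_ORIGINAL] = orig;
    c.tuplehash[DEMO_DIR_REPLY]    = demo_invert(&orig);
    c.status = 0;
    return c;
}

/* Source NAT: replies are now addressed to the translated address and port. */
static void demo_apply_snat(struct demo_conn *c, uint32_t ip, uint16_t port)
{
    assert(c != NULL);
    c->tuplehash[DEMO_DIR_REPLY].dst_ip   = ip;
    c->tuplehash[DEMO_DIR_REPLY].dst_port = port;
    c->status |= DEMO_SRC_NAT;
}
```

After `demo_apply_snat()`, the original tuple still holds the private source address while the reply tuple's destination holds the translated one, which is the same pair of fields the module below reads for its SNAT case.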

#include <linux/kernel.h>

#include <linux/module.h>

#include <linux/autoconf.h>

#include <linux/kthread.h>

#include <linux/udp.h>

#include <linux/rculist_nulls.h>

#include <net/netfilter/nf_conntrack.h>

#include <net/netfilter/nf_conntrack_acct.h>

#include <linux/delay.h>

#include <linux/init.h>

#include <linux/version.h>

#include <linux/skbuff.h>

#include <linux/in.h>

#include <linux/ip.h>

#include <linux/tcp.h>

#include <linux/icmp.h>

#include <linux/if_arp.h>

#include <linux/if_ether.h>

#include <linux/if_packet.h>

#include <linux/types.h>

#include <linux/kmod.h>

#include <linux/proc_fs.h>

#include <linux/bitops.h>

#include <linux/socket.h>

#include <linux/netdevice.h>

#include <linux/netfilter.h>

#include <linux/netfilter_ipv4.h>

#include <linux/netfilter_arp.h>

#include <linux/netfilter_ipv4/ip_tables.h>

#include <linux/netfilter_ipv4/ipt_multiport.h>

#include <linux/netfilter_ipv4/ipt_iprange.h>

#include <net/checksum.h>

#include <net/route.h>

#include <linux/netfilter/nf_conntrack_common.h>

typedef u8 __a_uint8_t;

typedef s8 __a_int8_t;

typedef u16 __a_uint16_t;

typedef s16 __a_int16_t;

typedef u32 __a_uint32_t;

typedef s32 __a_int32_t;

typedef u64 __a_uint64_t;

typedef s64 __a_int64_t;

typedef __a_uint8_t a_uint8_t;

typedef __a_int8_t a_int8_t;

typedef __a_uint16_t a_uint16_t;

typedef __a_int16_t a_int16_t;

typedef __a_uint32_t a_uint32_t;

typedef __a_int32_t a_int32_t;

typedef __a_uint64_t a_uint64_t;

typedef __a_int64_t a_int64_t;

typedef a_uint32_t fal_ip4_addr_t;

#define dprintf printk

#define NAPT_AGE 0xe

static struct task_struct *test_TaskStruct;

typedef struct

{

a_uint32_t entry_id;

a_uint32_t flags;

a_uint32_t status;

a_uint32_t src_addr;

a_uint32_t dst_addr;

a_uint16_t src_port;

a_uint16_t dst_port;

a_uint32_t trans_addr;

a_uint16_t trans_port;

} napt_entry_t;

/*

void cmd_print(char *fmt, ...)

{

va_list args;

va_start(args, fmt);

// if(out_fd)

// vfprintf(out_fd, fmt, args);

// else

vfprintf(stdout, fmt, args);

va_end(args);

}

*/

void cmd_data_print_ip4addr(char * param_name, a_uint32_t * buf, a_uint32_t size)

{

a_uint32_t i;

fal_ip4_addr_t ip4;

/* tuple addresses are stored in network byte order; convert before shifting */

ip4 = ntohl(*((fal_ip4_addr_t *) buf));

printk("%s", param_name);

for (i = 0; i < 3; i++) {

printk("%d.", (ip4 >> (24 - i * 8)) & 0xff);

}

printk("%d", (ip4 & 0xff));

}
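cmd_data_print_ip4addr extracts the four octets by shifting a 32-bit value, which only gives the expected order when that value is in host byte order. Conntrack tuples store addresses in network byte order, so on a little-endian machine a conversion with ntohl() is needed first. The following user-space sketch shows the same formatting logic; `demo_format_ip4` and `demo_self_check` are hypothetical names used only here.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/*
 * Format an IPv4 address given in network byte order as dotted decimal.
 * Returns the number of characters snprintf() would have written.
 */
static int demo_format_ip4(uint32_t net_addr, char *out, size_t len)
{
    uint32_t host = ntohl(net_addr);   /* network -> host byte order */

    return snprintf(out, len, "%u.%u.%u.%u",
                    (unsigned)((host >> 24) & 0xff),
                    (unsigned)((host >> 16) & 0xff),
                    (unsigned)((host >> 8) & 0xff),
                    (unsigned)(host & 0xff));
}

/* Self-check: 192.168.1.1 in network order must format as "192.168.1.1". */
static void demo_self_check(void)
{
    char buf[16];

    demo_format_ip4(htonl(0xC0A80101u), buf, sizeof(buf));
    assert(strcmp(buf, "192.168.1.1") == 0);
}
```

Because the conversion is done explicitly, the result is the same on big-endian and little-endian hosts.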

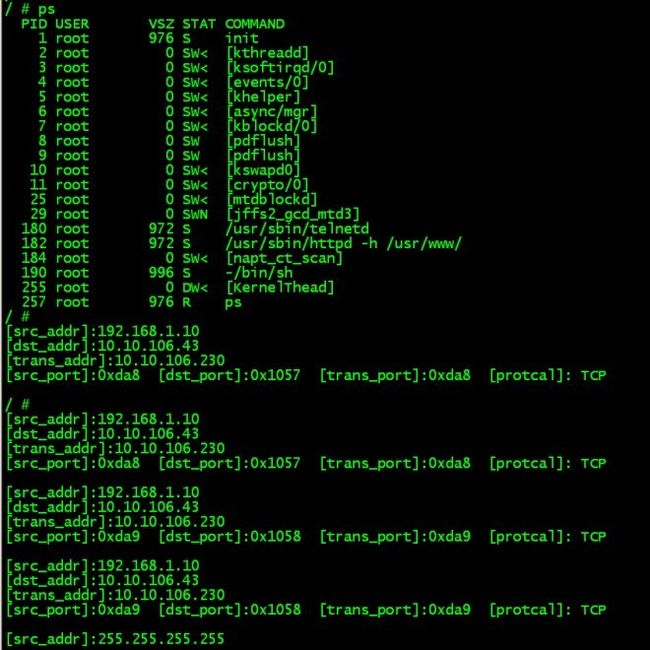

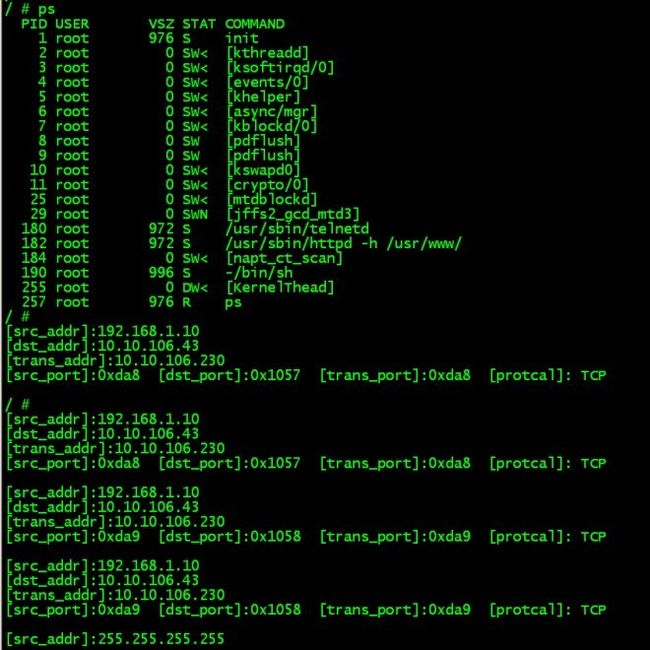

/* Take the conntrack pointer directly; squeezing it through uint32_t would truncate it on 64-bit kernels */

void napt_entry_show(struct nf_conn *ct)

{

napt_entry_t realnapt = {0};

napt_entry_t *entry=&realnapt;

struct nf_conntrack_tuple *org_tuple, *rep_tuple;

if(!ct)

{

return;

}

if ((ct->status & IPS_NAT_MASK) == IPS_SRC_NAT) //snat

{

org_tuple = &(ct->tuplehash[IP_CT_DIR_ORIGINAL].tuple);

rep_tuple = &(ct->tuplehash[IP_CT_DIR_REPLY].tuple);

}

else //dnat

{

org_tuple = &(ct->tuplehash[IP_CT_DIR_REPLY].tuple);

rep_tuple = &(ct->tuplehash[IP_CT_DIR_ORIGINAL].tuple);

}

uint8_t protonum = org_tuple->dst.protonum;

entry->src_addr = org_tuple->src.u3.ip;

entry->src_port = org_tuple->src.u.all;

entry->dst_addr = org_tuple->dst.u3.ip;

entry->dst_port = org_tuple->dst.u.all;

entry->trans_addr = rep_tuple->dst.u3.ip;

entry->trans_port = rep_tuple->dst.u.all;

entry->status = NAPT_AGE;

cmd_data_print_ip4addr("\n[src_addr]:",(a_uint32_t *) & (entry->src_addr),sizeof (fal_ip4_addr_t));

cmd_data_print_ip4addr("\n[dst_addr]:",(a_uint32_t *) & (entry->dst_addr),sizeof (fal_ip4_addr_t));

cmd_data_print_ip4addr("\n[trans_addr]:",(a_uint32_t *) & (entry->trans_addr),sizeof (fal_ip4_addr_t));

/* ports in the tuple are in network byte order; convert them for display */

dprintf("\n[src_port]:0x%x [dst_port]:0x%x [trans_port]:0x%x ", ntohs(entry->src_port), ntohs(entry->dst_port), ntohs(entry->trans_port));

if(org_tuple->src.l3num == AF_INET)

{

if(protonum == IPPROTO_TCP)

{

printk("[protocol]: TCP \n");

}

else if(protonum == IPPROTO_UDP)

{

printk("[protocol]: UDP \n");

}

}

}

uint32_t napt_entry_list(uint32_t *hash, uint32_t *iterate)

{

struct net *net = &init_net;

struct nf_conntrack_tuple_hash *h = NULL;

struct nf_conn *ct = NULL;

struct hlist_nulls_node *pos = (struct hlist_nulls_node *) (*iterate);

while(*hash < nf_conntrack_htable_size)

{

if(pos == 0)

{

/*get head for list*/

pos = rcu_dereference((&net->ct.hash[*hash])->first);

}

hlist_nulls_for_each_entry_from(h, pos, hnnode)

{

(*iterate) = (uint32_t)(pos->next);

ct = nf_ct_tuplehash_to_ctrack(h);

//return (uint32_t) ct;

napt_entry_show(ct);

msleep(100);

}

++(*hash);

pos = 0;

}

return 0; /* every bucket has been visited */

}

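/* kthread_create() expects a thread function of type int (*)(void *) */

int threadTask(void *data)

{

uint32_t hash = 0, iterate = 0;

while(1)

{

/* restart the walk from the first bucket on every pass */

hash = 0;

iterate = 0;

if(kthread_should_stop())

{

printk("threadTask: kthread_should_stop\n");

break;

}

napt_entry_list(&hash,&iterate);

{

// schedule_timeout(80000*HZ); /* yield the CPU so that other threads can run */

// alternatively, use msleep(), which is built on top of schedule_timeout()

msleep(2000);

}

}

return 0;

}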

static int __init init_nat_test_module(void)

{

test_TaskStruct=kthread_create(threadTask,NULL,"KernelThead");

if(IS_ERR(test_TaskStruct))

{

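printk("kthread_create error\n");

/* fail the module load so that cleanup never calls kthread_stop() on an error pointer */

return PTR_ERR(test_TaskStruct);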

}

else

{

wake_up_process(test_TaskStruct);

}

return 0;

}

static void __exit cleanup_anat_test_module(void)

{

kthread_stop(test_TaskStruct);

}

module_init(init_nat_test_module);

module_exit(cleanup_anat_test_module);

MODULE_LICENSE("GPL");

MODULE_AUTHOR("suiyuan from comba");

MODULE_DESCRIPTION("Led driver for nat test");
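The walk in napt_entry_list keeps two pieces of state between steps: the current bucket index (hash) and the position inside that bucket (iterate). As a rough user-space picture of that idea, the sketch below iterates a chained hash table with a resumable cursor. All `demo_*` names are hypothetical, and the sketch deliberately ignores what the kernel code has to care about: RCU protection, nulls markers and entries being freed while the walker sleeps.

```c
#include <assert.h>
#include <stddef.h>

struct demo_node {
    int id;
    struct demo_node *next;
};

struct demo_cursor {
    size_t bucket;            /* plays the role of *hash */
    struct demo_node *pos;    /* plays the role of *iterate */
    int in_bucket;            /* non-zero once the head of the bucket was loaded */
};

/* Return the next entry of the table, or NULL once every bucket is exhausted. */
static struct demo_node *demo_next(struct demo_node **table, size_t nbuckets,
                                   struct demo_cursor *cur)
{
    assert(table != NULL && cur != NULL);

    while (cur->bucket < nbuckets) {
        if (!cur->in_bucket) {            /* entering a new bucket: load its head */
            cur->pos = table[cur->bucket];
            cur->in_bucket = 1;
        }
        if (cur->pos != NULL) {
            struct demo_node *n = cur->pos;

            cur->pos = n->next;           /* remember where to resume */
            return n;
        }
        cur->bucket++;                    /* bucket exhausted, move to the next one */
        cur->in_bucket = 0;
    }
    return NULL;
}
```

A caller starts with a zeroed cursor and calls `demo_next()` until it returns NULL; zeroing the cursor again restarts the walk from the first bucket, which is why both hash and iterate need to be reset before each full pass.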