Installing Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV (Part 5)

Installing Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV (Part 1)

Installing Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV (Part 2)

Installing Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV (Part 3)

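Check cluster health

All Oracle instances

Node application status

Node application configuration

Database configuration

ASM status

ASM configuration

TNS listener status

TNS listener configuration

Node application configuration: VIP, GSD, ONS, listener

SCAN status

SCAN configuration

Verify clock synchronization across all cluster nodes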


Installing Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV (Part 4)

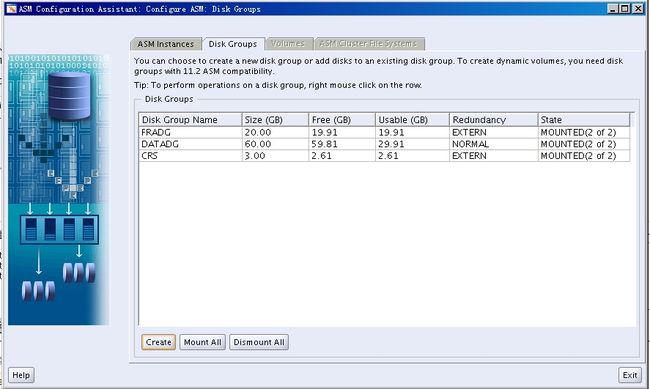

As the grid user, run asmca and create the ASM disk groups DATADG and FRADG.

Click Create

Click Exit

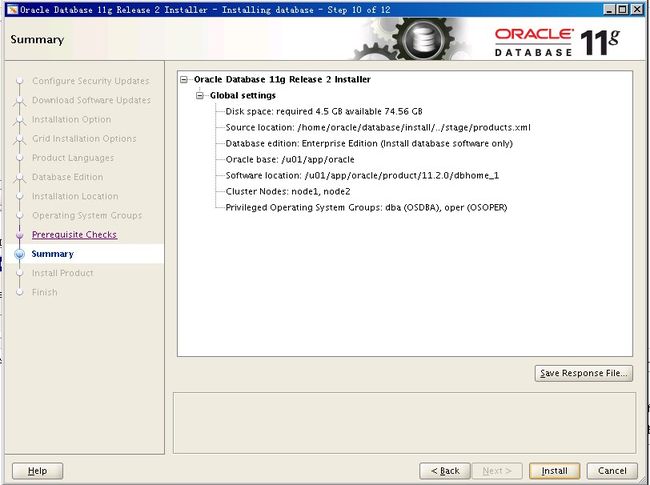

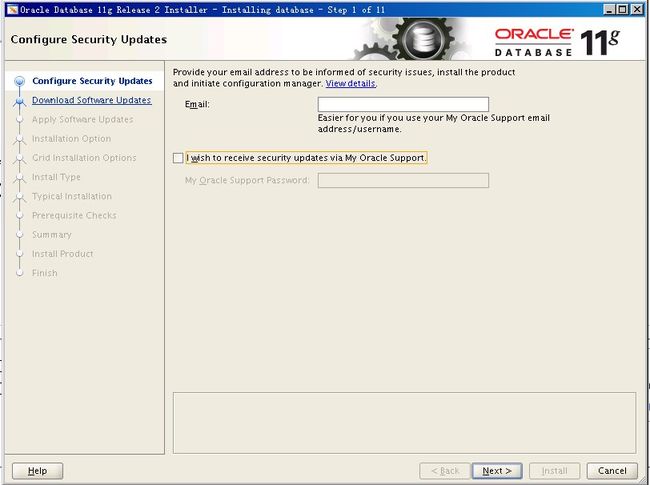

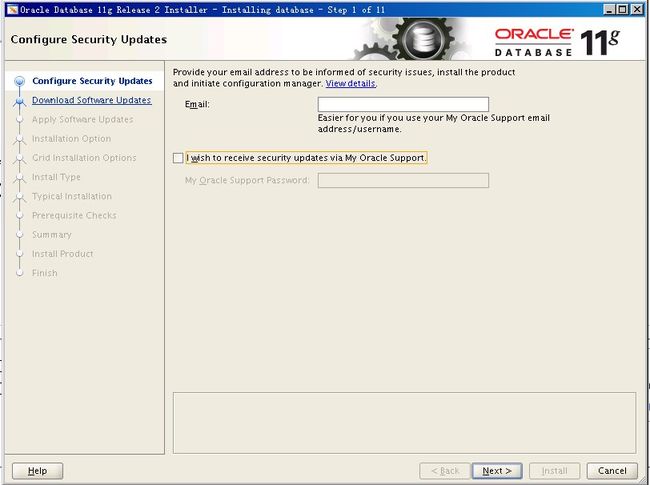

As the oracle user, install the Oracle Database software for Oracle Real Application Clusters.

Unzip the first two files of the 11.2.0.3 patch set, which contain the Oracle Database software:

p10404530_112030_Linux-x86-64_1of7.zip

p10404530_112030_Linux-x86-64_2of7.zip

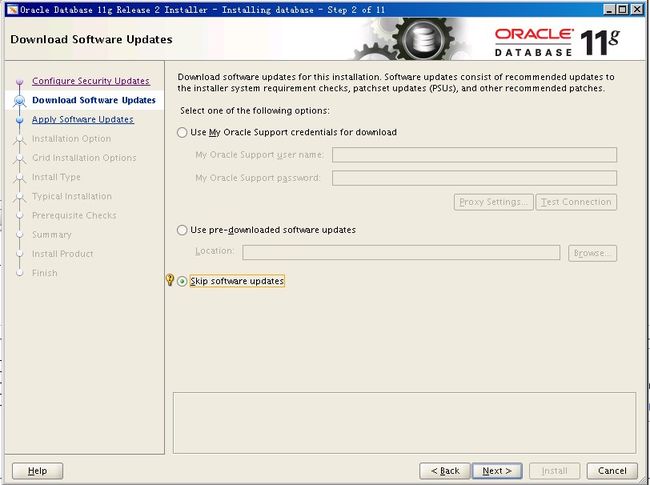

[oracle@node1 database]$ ./runInstaller
Starting Oracle Universal Installer...
Checking Temp space: must be greater than 120 MB.   Actual 76592 MB    Passed
Checking swap space: must be greater than 150 MB.   Actual 6134 MB    Passed
Checking monitor: must be configured to display at least 256 colors.    Actual 16777216    Passed
Preparing to launch Oracle Universal Installer from /tmp/OraInstall2012-12-29_11-05-25AM. Please wait ...
[oracle@node1 database]$

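Skip Software Updates

Select Install database software only

Select Real Application Clusters database installation

If SSH user equivalence has not yet been configured for the oracle user, it can be set up on this screen; the procedure is the same as in the Grid Infrastructure installation and is not repeated here.

Add Simplified Chinese to the product languages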

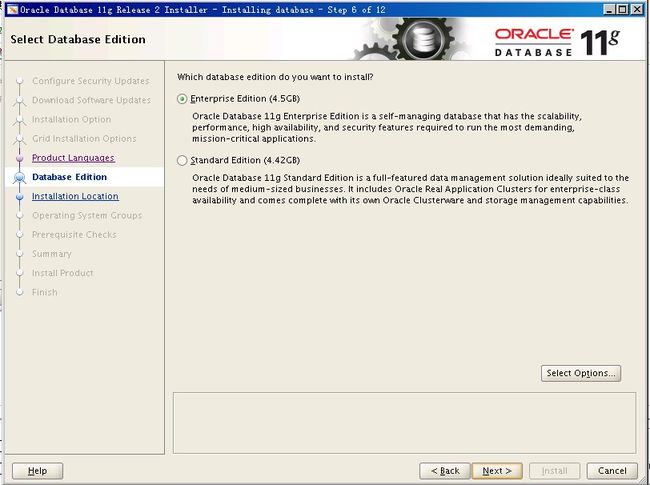

Select Enterprise Edition

Ignore the DNS-related error reported by the prerequisite checks

Click Install

ssh node2

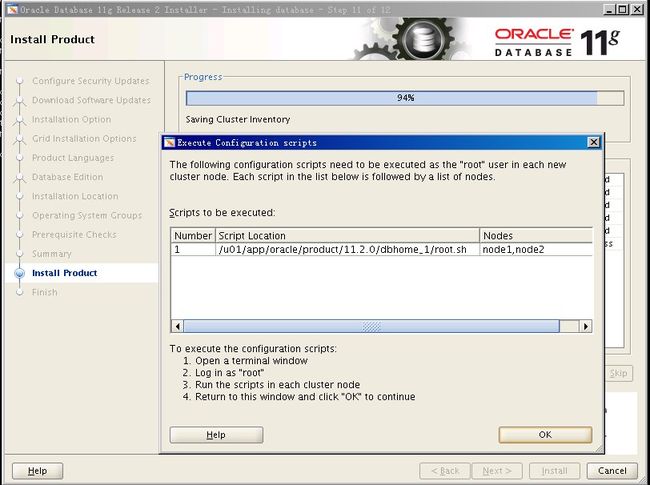

As root, run root.sh from the database Oracle home (/u01/app/oracle/product/11.2.0/dbhome_1) on node1 and on node2.

Click Exit

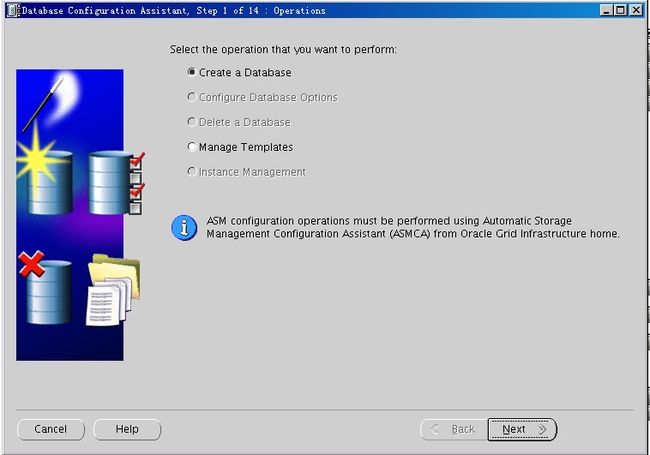

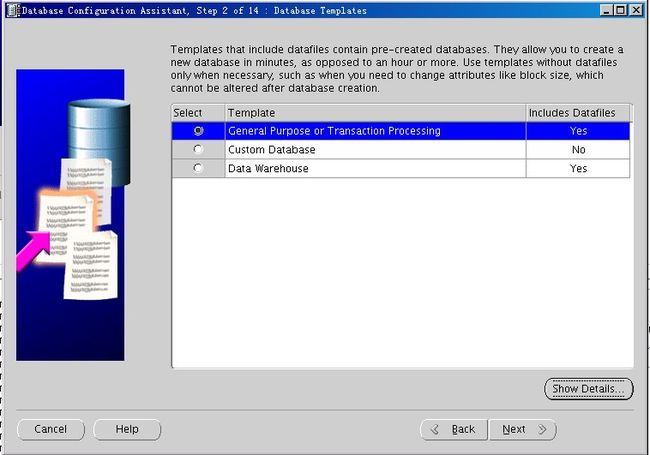

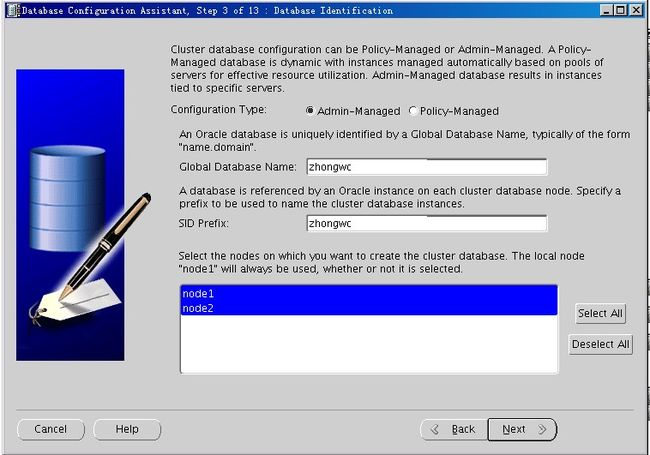

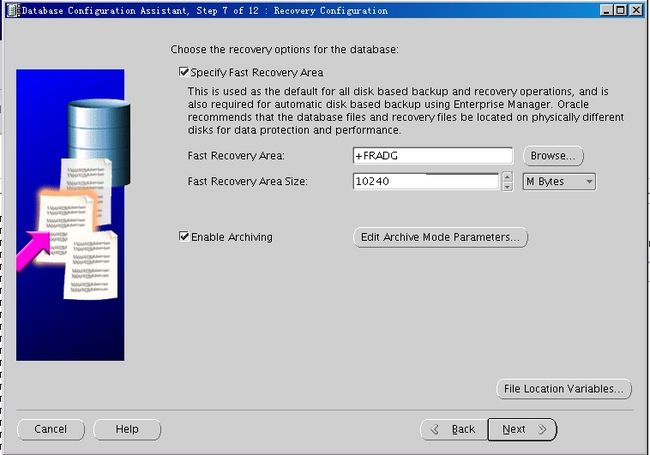

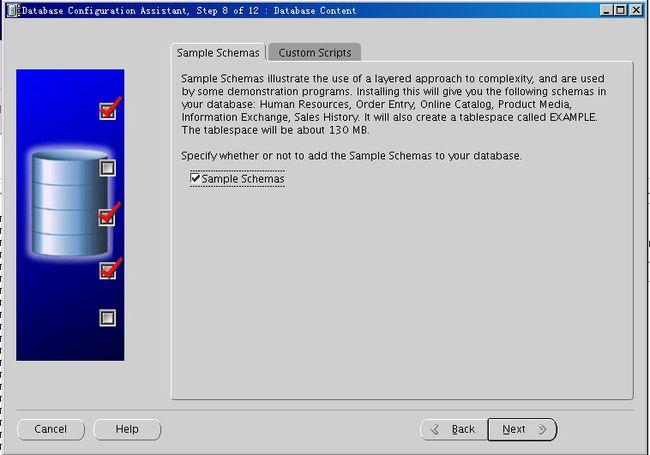

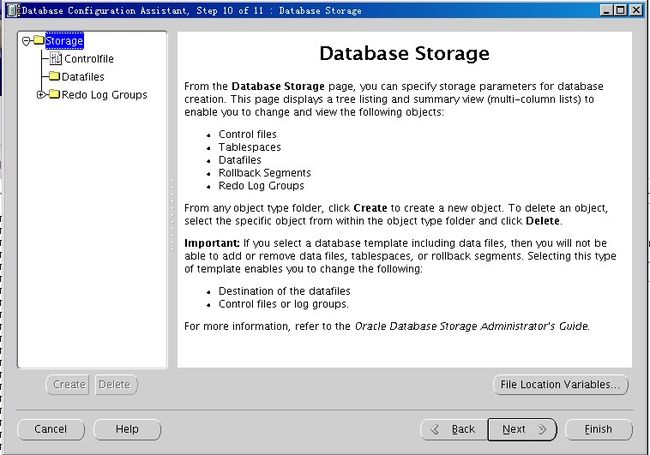

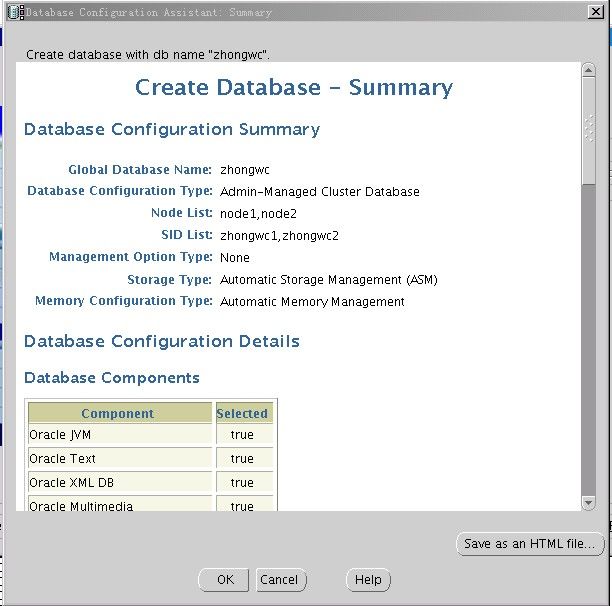

On any node, run dbca to create the clustered database.

Select Oracle Real Application Clusters database.

Verify that the clustered database is open:

[grid@node1 ~]$ crsctl stat res -t
--------------------------------------------------------------------------------
NAME           TARGET  STATE        SERVER                   STATE_DETAILS
--------------------------------------------------------------------------------
Local Resources
--------------------------------------------------------------------------------
ora.CRS.dg
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
ora.DATADG.dg
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
ora.FRADG.dg
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
ora.LISTENER.lsnr
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
ora.asm
               ONLINE  ONLINE       node1                    Started
               ONLINE  ONLINE       node2                    Started
ora.gsd
               OFFLINE OFFLINE      node1
               OFFLINE OFFLINE      node2
ora.net1.network
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
ora.ons
               ONLINE  ONLINE       node1
               ONLINE  ONLINE       node2
--------------------------------------------------------------------------------
Cluster Resources
--------------------------------------------------------------------------------
ora.LISTENER_SCAN1.lsnr
      1        ONLINE  ONLINE       node2
ora.LISTENER_SCAN2.lsnr
      1        ONLINE  ONLINE       node1
ora.LISTENER_SCAN3.lsnr
      1        ONLINE  ONLINE       node1
ora.cvu
      1        ONLINE  ONLINE       node1
ora.node1.vip
      1        ONLINE  ONLINE       node1
ora.node2.vip
      1        ONLINE  ONLINE       node2
ora.oc4j
      1        ONLINE  ONLINE       node1
ora.scan1.vip
      1        ONLINE  ONLINE       node2
ora.scan2.vip
      1        ONLINE  ONLINE       node1
ora.scan3.vip
      1        ONLINE  ONLINE       node1
ora.zhongwc.db
      1        ONLINE  ONLINE       node1                    Open
      2        ONLINE  ONLINE       node2                    Open
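Rather than reading this table by eye, the check can be scripted. The following is a minimal bash/awk sketch, not an Oracle-supplied tool: the function name `res_mismatches` is hypothetical, and it assumes the 11.2 `crsctl stat res -t` layout shown above (a resource name line followed by one row per server or instance). It prints every row whose STATE differs from its TARGET.

```bash
#!/usr/bin/env bash
# res_mismatches (hypothetical helper): read `crsctl stat res -t` output on stdin
# and print "<resource> <server> TARGET=<t> STATE=<s>" for every row whose STATE
# differs from its TARGET. Assumes the 11.2 tabular layout: a resource name line
# ("ora....") followed by rows of either
#   TARGET STATE [SERVER ...]              (local resources) or
#   CARDINALITY TARGET STATE [SERVER ...]  (cluster resources).
# Returns 1 if any mismatch was printed, 0 otherwise.
res_mismatches() {
  awk '
    {
      if ($1 ~ /^ora\./) { name = $1; next }
      if ($1 ~ /^[0-9]+$/ && NF >= 3) {
        t = $2; s = $3; srv = (NF >= 4 ? $4 : "-")
      } else if ($1 ~ /^(ONLINE|OFFLINE)$/ && NF >= 2) {
        t = $1; s = $2; srv = (NF >= 3 ? $3 : "-")
      } else {
        next   # headers, separator lines, section titles, prompts
      }
      if (t != s) {
        printf "%s %s TARGET=%s STATE=%s\n", name, srv, t, s
        bad++
      }
    }
    END { exit (bad > 0) }
  '
}
```

For example, `crsctl stat res -t | res_mismatches && echo OK`. With the output above nothing is printed: `ora.gsd` is OFFLINE on both nodes, but its TARGET is also OFFLINE, because GSD is disabled in this cluster (it is disabled by default in 11g Release 2).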

Check cluster health

[grid@node1 ~]$ crsctl check cluster
CRS-4537: Cluster Ready Services is online
CRS-4529: Cluster Synchronization Services is online
CRS-4533: Event Manager is online
[grid@node1 ~]$
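The three messages above cover Cluster Ready Services, Cluster Synchronization Services and the Event Manager. A minimal bash sketch that turns this into a pass/fail check, assuming exactly the message codes shown (`CRS-4537`, `CRS-4529`, `CRS-4533`); `cluster_stack_online` is a hypothetical name:

```bash
#!/usr/bin/env bash
# cluster_stack_online (hypothetical helper): read `crsctl check cluster` output
# on stdin and succeed only if CRS (CRS-4537), CSS (CRS-4529) and EVM (CRS-4533)
# all report "is online". The message codes are taken from the output above.
cluster_stack_online() {
  local out code rc=0
  out=$(cat)
  for code in CRS-4537 CRS-4529 CRS-4533; do
    if ! grep -q "^${code}:.*is online" <<< "$out"; then
      echo "not online: ${code}" >&2   # name the missing component on stderr
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `crsctl check cluster | cluster_stack_online && echo 'stack online'`. A missing or changed message makes it return 1 and report the code on stderr.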

All Oracle instances

[grid@node1 ~]$ srvctl status database -d zhongwc
Instance zhongwc1 is running on node node1
Instance zhongwc2 is running on node node2

[grid@node1 ~]$ srvctl status instance -d zhongwc -i zhongwc1
Instance zhongwc1 is running on node node1
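To confirm that every instance is up, the `srvctl status database` lines can be counted. A minimal sketch, assuming the 11.2 wording "Instance <name> is running on node <node>" shown above; `running_instances` and `expect_instances` are hypothetical helper names. A line reporting an instance as not running does not match the pattern and is not counted.

```bash
#!/usr/bin/env bash
# running_instances (hypothetical helper): read `srvctl status database -d <db>`
# output on stdin and print "<instance> <node>" for each running instance.
running_instances() {
  sed -n 's/^Instance \([^ ]*\) is running on node \([^ ]*\)[[:space:]]*$/\1 \2/p'
}

# expect_instances (hypothetical helper): succeed only if exactly $1 instances
# are reported as running in the srvctl output on stdin.
expect_instances() {
  local want=$1 got
  got=$(running_instances | wc -l)
  [ "$got" -eq "$want" ]
}
```

For the two-node database above, `srvctl status database -d zhongwc | expect_instances 2` would succeed.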

Node application status

[grid@node1 ~]$ srvctl status nodeapps
VIP node1-vip is enabled
VIP node1-vip is running on node: node1
VIP node2-vip is enabled
VIP node2-vip is running on node: node2
Network is enabled
Network is running on node: node1
Network is running on node: node2
GSD is disabled
GSD is not running on node: node1
GSD is not running on node: node2
ONS is enabled
ONS daemon is running on node: node1
ONS daemon is running on node: node2

Node application configuration

[grid@node1 ~]$ srvctl config nodeapps
Network exists: 1/192.168.0.0/255.255.0.0/eth0, type static
VIP exists: /node1-vip/192.168.1.151/192.168.0.0/255.255.0.0/eth0, hosting node node1
VIP exists: /node2-vip/192.168.1.152/192.168.0.0/255.255.0.0/eth0, hosting node node2
GSD exists
ONS exists: Local port 6100, remote port 6200, EM port 2016
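Each VIP line packs the VIP name, address, subnet, netmask and interface into one slash-separated field. A minimal awk sketch that pulls out the name, address and hosting node, assuming the line format shown above; `vip_addresses` is a hypothetical name. This is handy for cross-checking the VIPs against /etc/hosts or DNS.

```bash
#!/usr/bin/env bash
# vip_addresses (hypothetical helper): read `srvctl config nodeapps` output on
# stdin and print "<vip-name> <ip> <hosting-node>" for each line of the form
#   VIP exists: /<name>/<ip>/<subnet>/<netmask>/<interface>, hosting node <node>
vip_addresses() {
  awk -F'/' '/^VIP exists:/ {
    n = split($0, words, " ")   # whitespace split; the last word is the node
    print $2, $3, words[n]
  }'
}
```

For this cluster, `srvctl config nodeapps | vip_addresses` would print `node1-vip 192.168.1.151 node1` and `node2-vip 192.168.1.152 node2`.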

Database configuration

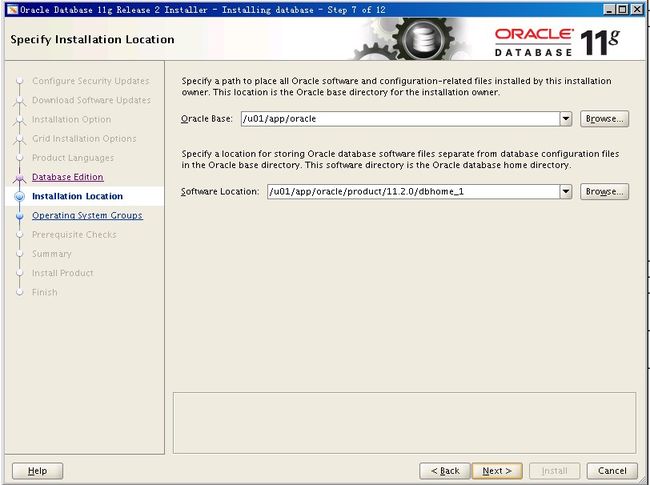

[grid@node1 ~]$ srvctl config database -d zhongwc -a
Database unique name: zhongwc
Database name: zhongwc
Oracle home: /u01/app/oracle/product/11.2.0/dbhome_1
Oracle user: oracle
Spfile: +DATADG/zhongwc/spfilezhongwc.ora
Domain:
Start options: open
Stop options: immediate
Database role: PRIMARY
Management policy: AUTOMATIC
Server pools: zhongwc
Database instances: zhongwc1,zhongwc2
Disk Groups: DATADG,FRADG
Mount point paths:
Services:
Type: RAC
Database is enabled
Database is administrator managed
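Individual fields of `srvctl config database -a` are easy to extract when scripting checks. A minimal sketch, assuming the "Name: value" layout shown above; `config_value` is a hypothetical name, and it returns 1 when the requested field is absent.

```bash
#!/usr/bin/env bash
# config_value (hypothetical helper): read `srvctl config database -d <db> -a`
# output on stdin and print the value of the field named $1, i.e. the text after
# "<name>: ". A field with an empty value (e.g. "Domain:") prints an empty line.
config_value() {
  local key=$1
  awk -v key="$key" '
    index($0, key ": ") == 1 { print substr($0, length(key) + 3); found = 1; exit }
    $0 == key ":"            { print ""; found = 1; exit }
    END { exit !found }
  '
}
```

For example, `srvctl config database -d zhongwc -a | config_value 'Disk Groups'` would print `DATADG,FRADG`.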

ASM status

[grid@node1 ~]$ srvctl status asm
ASM is running on node2,node1

ASM configuration

[grid@node1 ~]$ srvctl config asm -a
ASM home: /u01/app/11.2.0/grid
ASM listener: LISTENER
ASM is enabled.

TNS listener status

[grid@node1 ~]$ srvctl status listener
Listener LISTENER is enabled
Listener LISTENER is running on node(s): node2,node1

TNS listener configuration

[grid@node1 ~]$ srvctl config listener -a
Name: LISTENER
Network: 1, Owner: grid
Home: <CRS home>
  /u01/app/11.2.0/grid on node(s) node2,node1
End points: TCP:1521

Node application configuration: VIP, GSD, ONS, listener

[grid@node1 ~]$ srvctl config nodeapps -a -g -s -l
Warning:-l option has been deprecated and will be ignored.
Network exists: 1/192.168.0.0/255.255.0.0/eth0, type static
VIP exists: /node1-vip/192.168.1.151/192.168.0.0/255.255.0.0/eth0, hosting node node1
VIP exists: /node2-vip/192.168.1.152/192.168.0.0/255.255.0.0/eth0, hosting node node2
GSD exists
ONS exists: Local port 6100, remote port 6200, EM port 2016
Name: LISTENER
Network: 1, Owner: grid
Home: <CRS home>
  /u01/app/11.2.0/grid on node(s) node2,node1
End points: TCP:1521

SCAN status

[grid@node1 ~]$ srvctl status scan
SCAN VIP scan1 is enabled
SCAN VIP scan1 is running on node node2
SCAN VIP scan2 is enabled
SCAN VIP scan2 is running on node node1
SCAN VIP scan3 is enabled
SCAN VIP scan3 is running on node node1
[grid@node1 ~]$

SCAN configuration

[grid@node1 ~]$ srvctl config scan
SCAN name: cluster-scan.localdomain, Network: 1/192.168.0.0/255.255.0.0/eth0
SCAN VIP name: scan1, IP: /cluster-scan.localdomain/192.168.1.57
SCAN VIP name: scan2, IP: /cluster-scan.localdomain/192.168.1.58
SCAN VIP name: scan3, IP: /cluster-scan.localdomain/192.168.1.59
[grid@node1 ~]$
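The SCAN name cluster-scan.localdomain maps to three SCAN VIPs. A minimal sed sketch that lists each SCAN VIP with its address, assuming the line format shown above; `scan_vip_ips` is a hypothetical name.

```bash
#!/usr/bin/env bash
# scan_vip_ips (hypothetical helper): read `srvctl config scan` output on stdin
# and print "<scan-vip> <ip>" for each line of the form
#   SCAN VIP name: scan1, IP: /cluster-scan.localdomain/192.168.1.57
scan_vip_ips() {
  sed -n 's|^SCAN VIP name: \([^,]*\), IP: /[^/]*/\([0-9.]*\).*$|\1 \2|p'
}
```

Here `srvctl config scan | scan_vip_ips` prints `scan1 192.168.1.57`, `scan2 192.168.1.58` and `scan3 192.168.1.59`. If the SCAN name is resolved through DNS, a lookup of cluster-scan.localdomain should return these same three addresses.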

Verify clock synchronization across all cluster nodes

[grid@node1 ~]$ cluvfy comp clocksync -verbose
Verifying Clock Synchronization across the cluster nodes
Checking if Clusterware is installed on all nodes...
Check of Clusterware install passed
Checking if CTSS Resource is running on all nodes...
Check: CTSS Resource running on all nodes
  Node Name                             Status
  ------------------------------------  ------------------------
  node1                                 passed
Result: CTSS resource check passed
Querying CTSS for time offset on all nodes...
Result: Query of CTSS for time offset passed
Check CTSS state started...
Check: CTSS state
  Node Name                             State
  ------------------------------------  ------------------------
  node1                                 Active
CTSS is in Active state. Proceeding with check of clock time offsets on all nodes...
Reference Time Offset Limit: 1000.0 msecs
Check: Reference Time Offset
  Node Name     Time Offset               Status
  ------------  ------------------------  ------------------------
  node1         0.0                       passed
Time offset is within the specified limits on the following set of nodes:
"[node1]"
Result: Check of clock time offsets passed
Oracle Cluster Time Synchronization Services check passed
Verification of Clock Synchronization across the cluster nodes was successful.
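The key figures in this report are the offset limit (1000.0 msecs) and the per-node offsets. A minimal awk sketch that re-checks them from `cluvfy comp clocksync -verbose` output, assuming the table layout shown above (node name, offset, status); `clock_offsets_ok` is a hypothetical name. It fails if any absolute offset reaches the limit, or if no limit or offset rows are found.

```bash
#!/usr/bin/env bash
# clock_offsets_ok (hypothetical helper): read `cluvfy comp clocksync -verbose`
# output on stdin, take the "Reference Time Offset Limit" value and check every
# "<node> <offset> <status>" row of the "Check: Reference Time Offset" table.
# Prints offending rows; returns 1 on any violation or if nothing was parsed.
clock_offsets_ok() {
  awk '
    /Reference Time Offset Limit:/  { limit = $5 + 0 }   # "... Limit: 1000.0 msecs"
    /^Check: Reference Time Offset/ { in_table = 1; next }
    /^Result:/ || NF == 0           { in_table = 0 }
    in_table && NF == 3 && $2 ~ /^-?[0-9]+(\.[0-9]+)?$/ {
      off = ($2 < 0) ? -$2 : $2       # absolute offset in msecs
      rows++
      if (off >= limit) { print $1, $2, "exceeds", limit; bad++ }
    }
    END { exit (bad > 0 || rows == 0 || limit == 0) }
  '
}
```

Usage: `cluvfy comp clocksync -verbose | clock_offsets_ok && echo 'offsets within limit'`.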

[oracle@node1 ~]$ sqlplus / as sysdba
SQL*Plus: Release 11.2.0.3.0 Production on Sat Dec 29 14:30:08 2012
Copyright (c) 1982, 2011, Oracle. All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production
With the Partitioning, Real Application Clusters, Automatic Storage Management, OLAP,
Data Mining and Real Application Testing options
SQL> col host_name format a20
SQL> set linesize 200
SQL> select INSTANCE_NAME,HOST_NAME,VERSION,STARTUP_TIME,STATUS,ACTIVE_STATE,INSTANCE_ROLE,DATABASE_STATUS from gv$INSTANCE;
INSTANCE_NAME    HOST_NAME            VERSION           STARTUP_TIME            STATUS       ACTIVE_ST INSTANCE_ROLE      DATABASE_STATUS
---------------- -------------------- ----------------- ----------------------- ------------ --------- ------------------ -----------------
zhongwc1         node1.localdomain    11.2.0.3.0        29-DEC-2012 13:55:55    OPEN         NORMAL    PRIMARY_INSTANCE   ACTIVE
zhongwc2         node2.localdomain    11.2.0.3.0        29-DEC-2012 13:56:07    OPEN         NORMAL    PRIMARY_INSTANCE   ACTIVE

[grid@node1 ~]$ sqlplus / as sysasm
SQL*Plus: Release 11.2.0.3.0 Production on Sat Dec 29 14:31:04 2012
Copyright (c) 1982, 2011, Oracle. All rights reserved.
Connected to:
Oracle Database 11g Enterprise Edition Release 11.2.0.3.0 - 64bit Production
With the Real Application Clusters and Automatic Storage Management options
SQL> select name from v$asm_diskgroup;
NAME
------------------------------------------------------------
CRS
DATADG
FRADG
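The query confirms that the CRS, DATADG and FRADG disk groups exist. A minimal bash sketch that checks a list of expected names against that query output; `missing_diskgroups` is a hypothetical name, and it assumes one name per line after the `----` ruler, as in the SQL*Plus output above.

```bash
#!/usr/bin/env bash
# missing_diskgroups (hypothetical helper): read SQL*Plus output of
#   select name from v$asm_diskgroup;
# on stdin and print every disk group name given as an argument that does not
# appear in the result. Returns 1 if anything is missing, 0 otherwise.
missing_diskgroups() {
  local out dg rc=0
  # Keep single-word lines after the "----" ruler, stopping at the first blank line.
  out=$(awk 'seen && NF == 0 { exit } seen && NF == 1 { print $1 } /^-+$/ { seen = 1 }')
  for dg in "$@"; do
    if ! grep -qx -- "$dg" <<< "$out"; then
      echo "$dg"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, feed it the output of `sqlplus -s / as sysasm` running `select name from v$asm_diskgroup;` and call `missing_diskgroups CRS DATADG FRADG`; no output and exit status 0 mean all three disk groups are present.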

This completes the installation of Oracle 11g RAC (11.2.0.3) on Oracle Linux 6 with UDEV.