Building FFmpeg on Windows with MinGW

Preface:

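The open-source FFmpeg project does not provide a ready-made way to build it on Windows. Since I needed FFmpeg on Windows to decode H.264 video files, I collected material from around the web and used MinGW to build FFmpeg into *.lib and *.dll libraries usable on Windows, instead of the *.a and *.so libraries a Linux build produces.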

1. Download the latest FFmpeg source (I downloaded it on 2012-02-07).

See http://www.ffmpeg.org/download.html

git clone git://source.ffmpeg.org/ffmpeg.git ffmpeg

If git is not installed, install it first; on Fedora Linux, use: yum install git

2. Download the latest MinGW installer, mingw-get-inst-20111118.exe:

http://www.mingw.org/

http://sourceforge.net/projects/mingw/files/

3. On Windows XP, run a full installation with mingw-get-inst-20111118.exe, checking every option (make sure you have a working Internet connection).

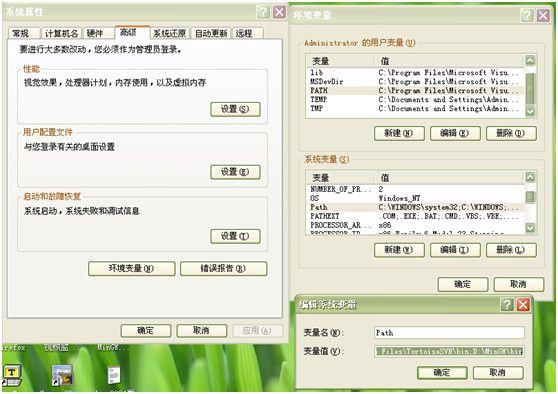

After installation, set the PATH environment variable:

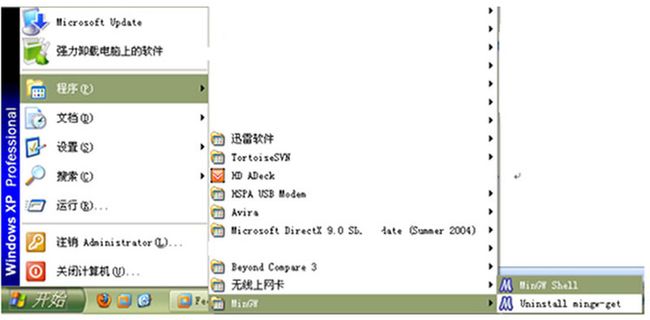

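4. After installation, start the MinGW Shell.

5. Check that gcc, make, automake and the other tools are installed (for example with gcc --version, make --version and automake --version).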

6. Copy the FFmpeg source tree to D:\MinGW\msys\1.0\home (adjust this to your own MinGW installation directory; the default is C:\MinGW).

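7. Edit D:\MinGW\msys\1.0\msys.bat and add the following line at the very top (adjust the path to your Visual Studio 2008 or other VS installation). The call keyword lets msys.bat continue after vcvars32.bat finishes, and the quotes are needed because the path contains a space:

call "D:\Program Files\Microsoft Visual Studio 9.0\VC\bin\vcvars32.bat"

Restart the MinGW Shell and run lib.exe. If it starts and prints its usage banner instead of a "command not found" error, the configuration works; FFmpeg's build uses lib.exe to generate the *.lib import libraries.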

8. In the FFmpeg source directory, run ./configure --enable-shared

If configure stops because yasm is missing (reference: http://ffmpeg.org/trac/ffmpeg/wiki/MingwCompilationGuide), download a working yasm from http://yasm.tortall.net/Download.html.

I downloaded yasm-1.2.0-win32.exe, renamed it to yasm.exe and put it in D:\MinGW\bin, then reran ./configure --enable-shared.

If configure then reports that pkg-config is not installed, fix it as follows (reference: http://ffmpeg.org/trac/ffmpeg/wiki/MingwCompilationGuide):

From http://www.gtk.org/download/win32.php, download GLib (Run-time), gettext-runtime (Run-time) and pkg-config (tool). Unpack them, put the extracted *.dll and *.exe files in D:\MinGW\bin, and rerun ./configure --enable-shared.

9. Run make to compile.

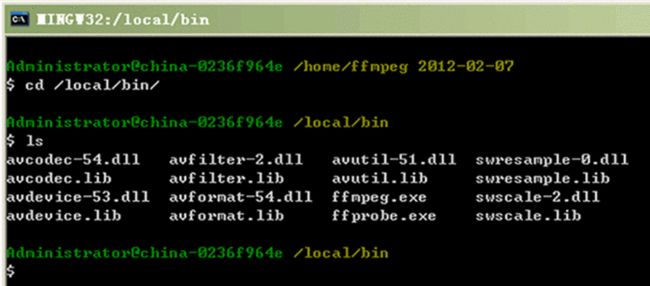

10. Run make install to install the libraries.

The generated library files are placed in D:\MinGW\msys\1.0\local\bin.

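Interim summary:

At this point we have built the open-source FFmpeg code into libraries that can be used on Windows. OK, that is a big step forward; next I will show how to use these libraries.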

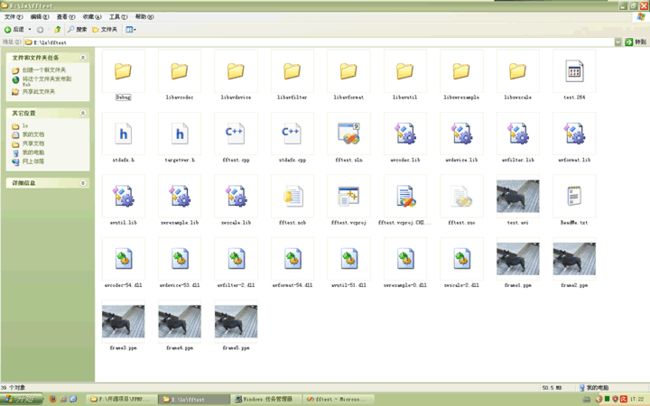

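I. In Visual Studio 2008, create a console application project named fftest. Be sure to uncheck the precompiled header option.

II. Copy all *.lib and *.dll files produced in the first part (in D:\MinGW\msys\1.0\local\bin) into the fftest project directory.

Likewise, copy the headers produced in the first part (the contents of D:\MinGW\msys\1.0\local\include, including subdirectories such as libavcodec and libavformat, since the code includes them as "libavcodec/avcodec.h") into the fftest project directory.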

III. Use the following code:

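#include "stdafx.h"
// The FFmpeg headers are plain C headers, so declare them with C linkage
extern "C" {
#ifdef __cplusplus
// FFmpeg's headers use INT64_C()/UINT64_C(); older C++ compilers only define
// these in <stdint.h> when __STDC_CONSTANT_MACROS is defined first
#define __STDC_CONSTANT_MACROS
// If <stdint.h> was already pulled in (e.g. via stdafx.h), drop MinGW's include
// guard so it is read again with the constant macros enabled
#ifdef _STDINT_H
#undef _STDINT_H
#endif
// VS2008 does not ship <stdint.h>; a copy (e.g. MinGW's) must be on the include path
#include <stdint.h>
#endif
#include "libavcodec/avcodec.h"
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
}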

#include <stdio.h>
// Link the import libraries generated during the MinGW build (MSVC-specific pragma)
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "swscale.lib")


// Write one RGB24 frame to frameN.ppm as a binary PPM (P6) image
void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame)
{
    FILE *pFile;
    char szFilename[32];
    int y;
    // Open file
    sprintf(szFilename, "frame%d.ppm", iFrame);
    pFile = fopen(szFilename, "wb");
    if (pFile == NULL)
        return;
    // Write header
    fprintf(pFile, "P6\n%d %d\n255\n", width, height);
    // Write pixel data row by row; linesize[0] may be larger than width*3
    for (y = 0; y < height; y++)
        fwrite(pFrame->data[0] + y * pFrame->linesize[0], 1, width * 3, pFile);
    // Close file
    fclose(pFile);
}


// avcodec_decode_video() is no longer available in the FFmpeg version used here;
// this wrapper rebuilds the old signature on top of avcodec_decode_video2()
int avcodec_decode_video(AVCodecContext *avctx, AVFrame *picture, int *got_picture_ptr, uint8_t *buf, int buf_size)
{
    AVPacket avpkt;
    av_init_packet(&avpkt);
    avpkt.data = buf;
    avpkt.size = buf_size;
    // HACK for CorePNG to decode as normal PNG by default
    avpkt.flags = AV_PKT_FLAG_KEY;
    return avcodec_decode_video2(avctx, picture, got_picture_ptr, &avpkt);
}

int main(int argc, char* argv[])
{
    AVFormatContext *pFormatCtx;
    int i, videoStream;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame;
    AVFrame *pFrameRGB;
    AVPacket packet;
    int frameFinished;
    int numBytes;
    uint8_t *buffer;
    struct SwsContext *img_convert_ctx;
    if (argc < 2) {
        printf("Please provide a movie file\n");
        return -1;
    }

    // Register all formats and codecs
    av_register_all();
    pFormatCtx = avformat_alloc_context();
    // Open video file
    //if(av_open_input_file(&pFormatCtx, argv[1], NULL, 0, NULL)!=0)
    if (avformat_open_input(&pFormatCtx, argv[1], NULL, NULL) < 0)
        return -1; // Couldn't open file
    // Retrieve stream information
    //if(av_find_stream_info(pFormatCtx)<0)
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1; // Couldn't find stream information
    // Find the first video stream
    videoStream = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        //if(pFormatCtx->streams[i]->codec->codec_type==CODEC_TYPE_VIDEO)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = i;
            break;
        }
    if (videoStream == -1)
        return -1; // Didn't find a video stream
    // Get a pointer to the codec context for the video stream
    pCodecCtx = pFormatCtx->streams[videoStream]->codec;
    // Find the decoder for the video stream
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1; // Codec not found
    }
    // Open codec (later FFmpeg versions replace avcodec_open with avcodec_open2)
    if (avcodec_open(pCodecCtx, pCodec) < 0)
        return -1; // Could not open codec
    // Allocate video frame
    pFrame = avcodec_alloc_frame();
    // Allocate an AVFrame structure for the RGB image
    pFrameRGB = avcodec_alloc_frame();
    if (pFrame == NULL || pFrameRGB == NULL)
        return -1;
    // Determine required buffer size and allocate buffer
    numBytes = avpicture_get_size(PIX_FMT_RGB24, pCodecCtx->width, pCodecCtx->height);
    buffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));
    // Assign appropriate parts of buffer to image planes in pFrameRGB
    // Note that pFrameRGB is an AVFrame, but AVFrame is a superset of AVPicture
    avpicture_fill((AVPicture *)pFrameRGB, buffer, PIX_FMT_RGB24, pCodecCtx->width, pCodecCtx->height);
    // Read frames and save first five frames to disk
    i = 0;
    while (av_read_frame(pFormatCtx, &packet) >= 0) {
        // Is this a packet from the video stream?
        if (packet.stream_index == videoStream) {
            // Decode video frame
            avcodec_decode_video(pCodecCtx, pFrame, &frameFinished, packet.data, packet.size);
            // Did we get a video frame?
            if (frameFinished) {
                // Convert the image from its native format to RGB
                //img_convert((AVPicture *)pFrameRGB, PIX_FMT_RGB24,(AVPicture*)pFrame, pCodecCtx->pix_fmt, pCodecCtx->width, pCodecCtx->height);
                // img_convert has been removed from FFmpeg; sws_scale replaces it
                img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt, pCodecCtx->width, pCodecCtx->height, PIX_FMT_RGB24, SWS_BICUBIC, NULL, NULL, NULL);
                if (img_convert_ctx != NULL) {
                    sws_scale(img_convert_ctx, (const uint8_t* const*)pFrame->data, pFrame->linesize, 0, pCodecCtx->height, pFrameRGB->data, pFrameRGB->linesize);
                    sws_freeContext(img_convert_ctx);
                    // Save the frame to disk
                    if (++i <= 5)
                        SaveFrame(pFrameRGB, pCodecCtx->width, pCodecCtx->height, i);
                } else {
                    // Skip the frame instead of passing a NULL context to sws_scale
                    fprintf(stderr, "sws_getContext failed\n");
                }
            }
        }
        // Free the packet that was allocated by av_read_frame
        av_free_packet(&packet);
    }
    // Free the RGB image
    av_free(buffer);
    av_free(pFrameRGB);
    // Free the YUV frame
    av_free(pFrame);
    // Close the codec
    avcodec_close(pCodecCtx);
    // Close the video file (later FFmpeg versions use avformat_close_input)
    av_close_input_file(pFormatCtx);
    return 0;
}


Thanks to the American author who provided this code.

This work is licensed under the Creative Commons Attribution-Share Alike 2.5 License. To view a copy of this license, visit http://creativecommons.org/licenses/by-sa/2.5/ or send a letter to Creative Commons, 543 Howard Street, 5th Floor, San Francisco, California, 94105, USA.

Code examples are based off of FFplay, Copyright (c) 2003 Fabrice Bellard, and a tutorial by Martin Bohme.


Because the code was written for an older version of FFmpeg, I made a few small changes so that it works with the current FFmpeg.

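IV. Prepare an *.avi video file or an H.264-encoded video file and put it in the fftest project directory.

V. Set the command arguments of the VC project fftest (Project Properties > Configuration Properties > Debugging > Command Arguments) to test.avi, the name of the AVI file used for testing.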

VI. Build and run. The program writes the first five decoded frames to frame1.ppm through frame5.ppm.