audioflinger

If you repost this article, please credit the original author, Guoguo (蝈蝈).

1. Overview

This document focuses mainly on the Jelly Bean AudioFlinger. The next chapter introduces Audio Policy Service and Audio Hardware; if you are already an audio veteran, you can jump straight to the Audio Flinger chapter. Below is an overview of all Audio Flinger classes for reference.

2. Audio Policy and Audio Hardware

The chart below briefly describes the flow of control commands and audio data.

As in UNIX, Android audio treats the audio hardware as an I/O device whose basic operations are open, close, read and write. The audio hardware can be thought of as a file that has I/O streams, and the Audio Hardware module is responsible for providing the interfaces that manipulate that file and its streams. Audio Flinger is the main player and the one that directly manipulates the Audio Hardware; Audio Policy Service, Audio System, AudioRecord and AudioTrack are all clients of AudioFlinger.

2.1 Audio Hardware Device

Audio Hardware has basic functionality for:

- Opening and closing audio input/output streams.
- Enabling and disabling audio devices such as EARPIECE, SPEAKER and BLUETOOTH_SCO.
- Setting the volume for voice calls.
- Muting the microphone.
- Changing the audio mode: AUDIO_MODE_NORMAL for standard audio playback, AUDIO_MODE_IN_CALL when a call is in progress, and AUDIO_MODE_RINGTONE when a ringtone is playing.

Each input/output stream should provide functions to:

- Write/read data.
- Get/set the sample rate.
- Get the channels and buffer size of the current stream.
- Get/set the current format, e.g. AUDIO_FORMAT_PCM_16_BIT.
- Put the stream into standby mode.
- Add/remove an audio effect on the stream.
- Get/set parameters, for example to switch the audio path.
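As a rough illustration of the stream operations listed above, the following C++ sketch models an output stream as an abstract interface with a trivial in-memory implementation. The names `OutputStream` and `NullOutputStream` are hypothetical and exist only for this example; the real HAL is a C interface made of function pointers, so treat this as a simplified picture rather than the actual API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Hypothetical, simplified model of an audio output stream.
// It mirrors the operations listed in the text, not the real HAL signatures.
class OutputStream {
public:
    virtual ~OutputStream() = default;
    virtual uint32_t sampleRate() const = 0;
    virtual uint32_t channelCount() const = 0;
    virtual size_t bufferSizeBytes() const = 0;
    virtual int standby() = 0;
    virtual int setParameters(const std::string& keyValuePairs) = 0;
    virtual long write(const void* buffer, size_t bytes) = 0;
};

// Trivial implementation that only counts the bytes it is given.
class NullOutputStream : public OutputStream {
public:
    uint32_t sampleRate() const override { return 44100; }
    uint32_t channelCount() const override { return 2; }
    size_t bufferSizeBytes() const override { return 4096; }

    int standby() override {
        mStandby = true;
        return 0;
    }

    int setParameters(const std::string& keyValuePairs) override {
        mLastParameters = keyValuePairs;  // e.g. a routing request
        return 0;
    }

    long write(const void* buffer, size_t bytes) override {
        assert(buffer != nullptr);
        mStandby = false;  // writing wakes the stream up from standby
        mBytesWritten += bytes;
        return static_cast<long>(bytes);
    }

    bool inStandby() const { return mStandby; }
    size_t bytesWritten() const { return mBytesWritten; }
    const std::string& lastParameters() const { return mLastParameters; }

private:
    bool mStandby = true;
    size_t mBytesWritten = 0;
    std::string mLastParameters;
};
```

A client such as AudioFlinger would write buffers in a loop and put the stream into standby when no track is active.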

2.2 Audio Policy Service

AudioPolicyService is an assistant to AudioFlinger. Its job is to control the activity and configuration of audio input and output streams. A default output is created when AudioPolicyService is initialized.

To let vendors implement their own audio policy, AudioPolicyManager in the audio HAL acts as a bridge and does most of the work, so we can treat AudioPolicyManager as a part of AudioPolicyService.

2.3 Audio Policy Manager

The platform specific audio policy manager is in charge of the audio routing and volume control policies for a given platform.

The main roles of this module are:

1. Keep track of current system state (removable device connections, phone state, user requests...). System state changes and user actions are notified to audio policy manager with methods of the audio policy.

2. Process get_output() queries received when AudioTrack objects are created: those queries return a handle to an output that has been selected, configured and opened by the audio policy manager and that must be used by the AudioTrack when registering with AudioFlinger through the createTrack() method. When the AudioTrack object is released, a release_output() query is received and the audio policy manager can decide to close or reconfigure the output depending on the other streams using this output and the current system state.

3. Similarly, process get_input() and release_input() queries received from AudioRecord objects and configure audio inputs.

4. Process volume control requests: the stream volume is converted from an index value (received from UI) to a float value applicable to each output as a function of platform specific settings and current output route (destination device). It also makes sure that streams are not muted if not allowed (e.g. camera shutter sound in some countries).
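The index-to-float conversion in item 4 can be sketched as follows. This is a minimal example that assumes a single linear-in-dB curve; the function name `volumeIndexToAmplitude`, its parameters and the muting of the lowest index are assumptions made for illustration. A real policy manager uses platform-specific curves that also depend on the output device.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical curve: indexMax maps to 0 dB (amplitude 1.0), the lowest index
// is treated as mute, and indices in between are spread linearly over [minDb, 0].
inline float volumeIndexToAmplitude(int index, int indexMin, int indexMax, float minDb) {
    assert(indexMax > indexMin);
    if (index <= indexMin) return 0.0f;  // assumption: lowest index means silence
    if (index >= indexMax) return 1.0f;
    float fraction = static_cast<float>(index - indexMin) /
                     static_cast<float>(indexMax - indexMin);
    float db = minDb * (1.0f - fraction);  // attenuation in decibels, <= 0
    return std::pow(10.0f, db / 20.0f);    // decibels to linear amplitude
}
```

With a range of 0..15 and a floor of -50 dB, for example, the returned amplitude grows monotonically from silence at index 0 to full scale at index 15.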

3. Audio Flinger

3.1 Fast Track Mixer

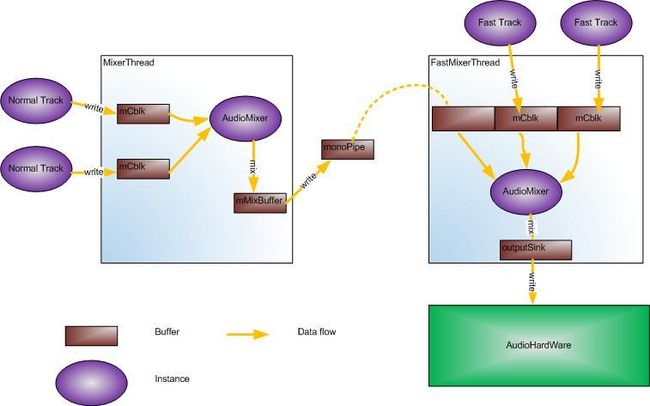

Below is Google’s official explanation of the fast mixer design. In short, to take advantage of multi-core CPUs, part of the normal mixer thread’s work is moved to the fast mixer thread.

ToneGenerator and SoundPool use fast tracks. The flags for creating an audio track are listed below.

A fast track’s buffer is mixed in the fast mixer thread. To enable the fast mixer, the period time of the audio hardware must be less than 20 ms.

For normal tracks, the buffer is mixed by the mixer thread, which then writes the mixed buffer to the MonoPipe. The fast mixer thread reads the MonoPipe and mixes its contents with the buffers of the fast tracks; inside the fast mixer thread, the MonoPipe is treated as just another FastTrack buffer.

Once the fast mixer finishes mixing, the mixed buffer is written to the audio hardware.

The chart below illustrates the fast mixer.

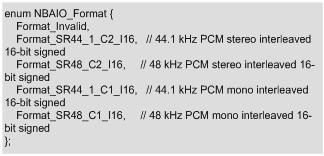

Both the MonoPipe and the OutputSink are created in MixerThread::MixerThread(). The MonoPipe transfers data from the mixer thread to the fast mixer thread, while the OutputSink is used by the fast mixer thread to write audio data to the audio hardware; both can be thought of as buffers. MonoPipe and OutputSink are derived from NBAIO (non-blocking audio input/output), which supports only the formats below.

As a result, the fast mixer does not support phone output, whose sample rate is 8 kHz.
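To make the hand-off between the two threads more concrete, here is a minimal sketch of a FIFO whose read and write calls never wait: each call transfers as many samples as it can and returns the count. `SimplePipe` is a hypothetical class written for this example, not the real MonoPipe, and it omits the atomic indices that real cross-thread use would need.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical single-writer/single-reader FIFO of 16-bit samples.
// Not thread-safe as written; it only illustrates short reads and writes.
class SimplePipe {
public:
    explicit SimplePipe(size_t capacity) : mBuf(capacity) {
        assert(capacity > 0);
    }

    // Copies as many samples as fit and returns how many were accepted.
    size_t write(const int16_t* src, size_t count) {
        size_t space = mBuf.size() - (mWrite - mRead);
        size_t n = count < space ? count : space;
        for (size_t i = 0; i < n; ++i) {
            mBuf[(mWrite + i) % mBuf.size()] = src[i];
        }
        mWrite += n;
        return n;
    }

    // Copies as many samples as are available and returns how many were read.
    size_t read(int16_t* dst, size_t count) {
        size_t avail = mWrite - mRead;
        size_t n = count < avail ? count : avail;
        for (size_t i = 0; i < n; ++i) {
            dst[i] = mBuf[(mRead + i) % mBuf.size()];
        }
        mRead += n;
        return n;
    }

private:
    std::vector<int16_t> mBuf;
    size_t mRead = 0;   // total samples read so far
    size_t mWrite = 0;  // total samples written so far
};
```

In this picture the normal mixer thread is the writer and the fast mixer thread is the reader, which treats whatever it reads as one more track to mix.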

The Audio Mixer’s mixing logic is as follows.

The audio signal is represented in two’s complement (minimum 0x8000, maximum 0x7FFF). Each 16-bit sample is multiplied by a 16-bit volume (4-bit integer part, 12-bit fraction part); the product is stored in a 32-bit buffer with a 20-bit integer part and a 12-bit fraction part.

Volume 1 (4 integer, 12 fraction) * signal 1 (16 integer) = mul 1 on 32 bits (20 integer, 12 fraction)

Volume 2 (4 integer, 12 fraction) * signal 2 (16 integer) = mul 2 on 32 bits (20 integer, 12 fraction)

The Audio Mixer adds the two products into a 32-bit buffer and then does the “dither” simply by dropping the 12-bit fraction part. After that it clamps the result: values above 0x7FFF (32767) are replaced with 0x7FFF, and values below 0x8000 (-32768) are replaced with 0x8000. You can think of it as truncating the signal to the 16-bit range.

The Audio Mixer cannot yet mix multi-channel audio beyond stereo; a down mixer is required for that. The down mixer is a type of audio effect, introduced in the next section.
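The arithmetic above can be written out as a short sketch. The helper names `clamp16` and `mixTwo` and the constant `kUnityGainQ12` are hypothetical, and the code assumes each volume is at most unity gain (0x1000) so that the sum of the two products fits in 32 bits.

```cpp
#include <cassert>
#include <cstdint>

// 1.0 expressed as a volume with a 12-bit fraction part.
constexpr int32_t kUnityGainQ12 = 1 << 12;

// Saturate a 32-bit value to the signed 16-bit range.
inline int16_t clamp16(int32_t v) {
    if (v > INT16_MAX) return INT16_MAX;
    if (v < INT16_MIN) return INT16_MIN;
    return static_cast<int16_t>(v);
}

// Mix two samples, each scaled by its own fixed-point volume.
// Assumption: 0 <= volume <= kUnityGainQ12, so the accumulator cannot overflow.
inline int16_t mixTwo(int16_t s1, int32_t v1, int16_t s2, int32_t v2) {
    assert(v1 >= 0 && v1 <= kUnityGainQ12);
    assert(v2 >= 0 && v2 <= kUnityGainQ12);
    int32_t acc = s1 * v1 + s2 * v2;  // 20-bit integer part, 12-bit fraction
    // Drop the fraction part. The shift is arithmetic on mainstream compilers
    // and guaranteed to be so since C++20.
    return clamp16(acc >> 12);
}
```

For instance, mixing two full-volume samples of 30000 each would give 60000, which is clamped to 32767.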

3.2 Audio Effect

Each playback/record thread holds an EffectChain and processes the audio effects before writing audio to the audio hardware or after reading audio from it. For playback, audio effects can be applied in both AudioFlinger and the audio hardware; for recording, they can only be applied in the audio hardware, presumably because it is more convenient to do echo cancellation there.

Below is an overview of audio effects for playback/record.

3.2.1 Playback Audio Effect

Adding an audio effect is simple: just create the audio effect instance, and the audio effect module is created in AudioFlinger through its private AudioEffectsFactory instance. AudioEffectsFactory is initialized by reading the configuration file audio_effects.conf and is responsible for creating audio effect module instances. AudioFlinger then pushes the newly created audio effect instance onto the effect chain owned by each output thread.


All currently supported audio effect types are listed below; each is identified by a UUID.

| EFFECT CONSTANT | UUID |
| --- | --- |
| EFFECT_TYPE_ENV_REVERB | c2e5d5f0-94bd-4763-9cac-4e234d06839e |
| EFFECT_TYPE_PRESET_REVERB | 47382d60-ddd8-11db-bf3a-0002a5d5c51b |
| EFFECT_TYPE_EQUALIZER | 0bed4300-ddd6-11db-8f34-0002a5d5c51b |
| EFFECT_TYPE_BASS_BOOST | 0634f220-ddd4-11db-a0fc-0002a5d5c51b |
| EFFECT_TYPE_VIRTUALIZER | 37cc2c00-dddd-11db-8577-0002a5d5c51b |
| EFFECT_TYPE_AGC | 0a8abfe0-654c-11e0-ba26-0002a5d5c51b |
| EFFECT_TYPE_AEC | 7b491460-8d4d-11e0-bd61-0002a5d5c51b |
| EFFECT_TYPE_NS | 58b4b260-8e06-11e0-aa8e-0002a5d5c51b |

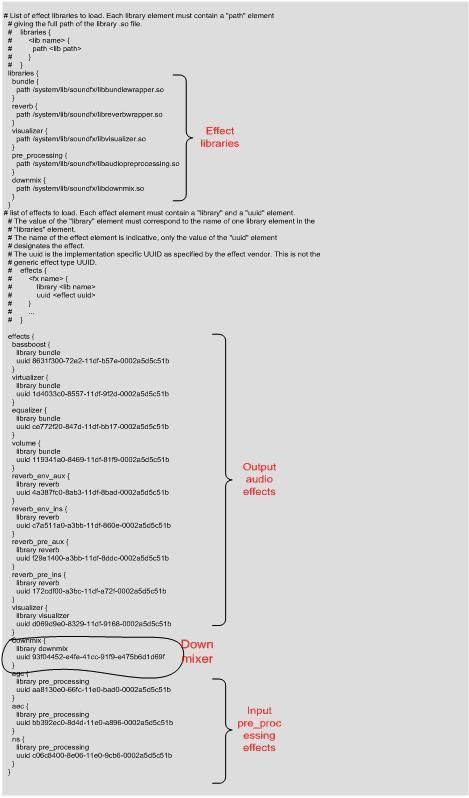

When the effects factory is initialized, AudioFlinger parses audio_effects.conf and builds a map from each audio effect to its library; for example, the reverb effect is created by libreverbwrapper.so. Each audio effect library must at least provide a process() function that implements the effect.

Below is a sample that adds an audio effect for an AudioTrack; the effect is applied to the whole mixed buffer of the selected output thread.

Correspondingly, the audio effect module is removed from the chain when the audio effect instance is destroyed.
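The life cycle described above (add a module to the chain, run each module's process() on the mixed buffer, remove the module when the effect is destroyed) can be sketched as follows. The types `Effect`, `HalveGain` and `EffectChain` are hypothetical and written only for this illustration; they are not the AudioFlinger classes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical effect interface: every module provides process().
struct Effect {
    virtual ~Effect() = default;
    virtual void process(int16_t* buffer, size_t samples) = 0;
};

// Toy effect that halves the amplitude of every sample.
struct HalveGain : Effect {
    void process(int16_t* buffer, size_t samples) override {
        for (size_t i = 0; i < samples; ++i) {
            buffer[i] = static_cast<int16_t>(buffer[i] / 2);
        }
    }
};

// Hypothetical chain owned by one output thread.
class EffectChain {
public:
    void add(std::shared_ptr<Effect> effect) {
        assert(effect);
        mEffects.push_back(std::move(effect));
    }

    void remove(const std::shared_ptr<Effect>& effect) {
        mEffects.erase(std::remove(mEffects.begin(), mEffects.end(), effect),
                       mEffects.end());
    }

    // Runs every module, in insertion order, over the mixed buffer in place.
    void process(int16_t* buffer, size_t samples) {
        for (auto& effect : mEffects) {
            effect->process(buffer, samples);
        }
    }

    size_t size() const { return mEffects.size(); }

private:
    std::vector<std::shared_ptr<Effect>> mEffects;
};
```

Because the chain processes the thread's mixed buffer, every track routed to that output is affected, which matches the remark above about the whole mixed buffer.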

Below is the calling sequence for adding and removing an effect module.

Google provides default effect libraries and a configuration file at /system/etc/audio_effects.conf. Vendors can override it and provide their own libraries by creating a configuration file at /vendor/etc/audio_effects.conf. Below is Google’s default audio_effects.conf.

The down mixer is one type of AudioEffect, and Google also provides a default down mixer library.

For an AudioTrack with more than two channels, AudioFlinger creates a down mixer instance for the track in the track creation function. For such an AudioTrack, the Audio Mixer takes its input buffer from the down mixer rather than from mCblk before mixing.

3.2.2 Record Audio Effect

For recording, audio effects are processed in the audio hardware rather than in AudioFlinger, where effect processing is disabled by setting the input and output buffers to NULL.

The effect module simply ignores such NULL buffers when processing.

As shown in the figure above, AudioPolicyService loads audio_effects.conf and registers the audio effect information in an EffectDesc array. AudioFlinger creates the audio effect modules in getInput() based on the EffectDesc passed by AudioPolicyService. Starting the record thread adds the effect handles to the audio hardware via add_audio_effect, and stopping it removes them again. AudioFlinger only passes the effect handles down; processing the audio effect is the audio hardware’s responsibility.

Below is a part of audio_effects.conf which indicates the input audio effects.

Below is the sequence.

3.3 Watch Dog

The watchdog thread monitors CPU usage and runs only when the fast mixer thread is enabled, with priority ANDROID_PRIORITY_URGENT_AUDIO (-19). The mechanism is simple: AudioFlinger triggers the watchdog in MixerThread::threadLoop_write() before each write, and if the interval between two consecutive writes is larger than 100 ms, the watchdog thread reports the warning “Insufficient CPU for load”.
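The interval check described above can be sketched as a small helper. `WriteWatchdog` and its `kick()` method are hypothetical names, and the sketch covers only the timing comparison, not the separate thread or its priority. Timestamps are passed in explicitly so the behaviour is easy to reason about.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical helper: flags gaps between consecutive writes that exceed a limit.
class WriteWatchdog {
public:
    using Clock = std::chrono::steady_clock;

    explicit WriteWatchdog(
            std::chrono::milliseconds limit = std::chrono::milliseconds(100))
        : mLimit(limit) {}

    // Call before each write. Returns true when the time since the previous
    // call is larger than the limit, i.e. when a warning would be reported.
    bool kick(Clock::time_point now) {
        bool late = mHasLast && (now - mLast) > mLimit;
        mLast = now;
        mHasLast = true;
        if (late) ++mWarnings;
        return late;
    }

    int warnings() const { return mWarnings; }

private:
    std::chrono::milliseconds mLimit;
    Clock::time_point mLast{};
    bool mHasLast = false;
    int mWarnings = 0;
};
```

In a mixer loop, the caller would pass `WriteWatchdog::Clock::now()` right before each write and log a warning whenever `kick()` returns true.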

3.4 Soaker

Soaker is a thread for performance testing. It is disabled by default and can be enabled with the SOAKER macro in AudioFlinger’s Android.mk. It is created together with the mixer thread, with priority PRIORITY_LOWEST. From the code below you can see that it runs forever.

So here is the message from Google: don’t run Android on a single core!

3.5 Timed Audio Track

Timed audio tracks are used by streaming media; the aah RTP library creates them. Class TimedTrack derives from class Track and supports buffers that carry a timestamp. For RTP the timestamp is the most important part, and AudioMixer fetches buffers from TimedTrack according to the timestamp. If TimedTrack has no buffer for the required timestamp, the buffer returned to AudioMixer is filled with zeros.
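The zero-fill behaviour can be illustrated with a heavily simplified sketch. `TimedBufferQueue` is a hypothetical class that looks buffers up by an exact timestamp key; a real implementation has to map media time to local time and deal with late or overlapping buffers, none of which is modelled here.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <utility>
#include <vector>

// Hypothetical queue of timestamped sample buffers.
class TimedBufferQueue {
public:
    void queue(int64_t pts, std::vector<int16_t> samples) {
        mBuffers[pts] = std::move(samples);
    }

    // Returns the buffer queued for `pts`, padded or cut to `samples` entries.
    // If nothing was queued for that timestamp, returns silence instead.
    std::vector<int16_t> getBuffer(int64_t pts, size_t samples) {
        auto it = mBuffers.find(pts);
        if (it == mBuffers.end()) {
            return std::vector<int16_t>(samples, 0);  // zero-filled buffer
        }
        std::vector<int16_t> out = std::move(it->second);
        mBuffers.erase(it);
        out.resize(samples, 0);
        return out;
    }

private:
    std::map<int64_t, std::vector<int16_t>> mBuffers;
};
```

The mixer side can therefore always consume a full buffer, and a missing timestamp simply shows up as silence.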

3.6 Audio Recording Trigger

Android vendors may add a delay of several hundred milliseconds before recording starts, to avoid recording the audio played by the playback thread. Google added an official fix in AudioFlinger that synchronizes the playback thread and the record thread with a syncEvent mechanism.

Two types of sync events are defined:

- SYNC_EVENT_NONE: equivalent to calling the corresponding non-synchronized method.
- SYNC_EVENT_PRESENTATION_COMPLETE: the corresponding action is triggered only when the presentation is completed.

To use this feature, start the AudioRecord with the parameter SYNC_EVENT_PRESENTATION_COMPLETE. The first two periods (~50 ms) of recorded data after the audio playback track stops are then discarded; this is hardcoded in AudioFlinger.

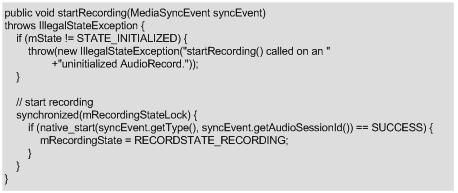

The code of startRecording is shown below; it is in frameworks/base/media/java/android/media/AudioRecord.java.

When an AudioRecord is started, it first checks whether there are active playback threads. If there are, a syncEvent instance is created, and the record thread discards what it reads until either a 30-second timeout expires or a syncEvent of type SYNC_EVENT_PRESENTATION_COMPLETE is sent when an AudioTrack is destroyed. In the latter case, the record thread discards two more periods after receiving the syncEvent. The syncEvent thus acts as a command to stop discarding buffers.

If there are no active playback threads when the record thread starts, the record thread behaves as normal, i.e. without discarding any buffers.
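The discard logic described in this section can be modelled as a small state machine. `RecordStartGate` and its members are hypothetical names; the 30-second timeout and the two extra periods come from the text, and the rest is a simplified sketch rather than the AudioFlinger code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical gate deciding whether a recorded period is kept or discarded.
class RecordStartGate {
public:
    static constexpr int64_t kTimeoutMs = 30000;  // 30-second timeout from the text
    static constexpr int kExtraPeriods = 2;       // periods dropped after the event

    // playbackActive: whether a playback thread was active when recording started.
    explicit RecordStartGate(bool playbackActive) : mWaiting(playbackActive) {}

    // Called when the playback track signals that its presentation is complete.
    void onPresentationComplete() {
        if (mWaiting) {
            mWaiting = false;
            mPeriodsToDrop = kExtraPeriods;
        }
    }

    // Called once per period read by the record thread.
    // elapsedMs is the time since recording was started.
    // Returns true if the period should be delivered to the client.
    bool shouldKeep(int64_t elapsedMs) {
        if (mWaiting) {
            if (elapsedMs < kTimeoutMs) return false;  // still waiting: discard
            mWaiting = false;                          // timed out: stop waiting
        }
        if (mPeriodsToDrop > 0) {
            --mPeriodsToDrop;
            return false;
        }
        return true;
    }

private:
    bool mWaiting;
    int mPeriodsToDrop = 0;
};
```

With no active playback the gate keeps every period from the start, which corresponds to the normal case without buffer discarding.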

[Note]: libstagefright does not use this feature in JB, so the default Sound Recorder does not use it either.