Multi-target tracking with Single Moving Camera

Source: http://www.eecs.umich.edu/vision/mttproject.html

- Wongun Choi, Caroline Pantofaru, Silvio Savarese, "A General Framework for Tracking Multiple People from a Moving Camera", PAMI (under revision, accepted with minor revision).

- Wongun Choi and Silvio Savarese, "Multiple Target Tracking in World Coordinate with Single, Minimally Calibrated Camera", ECCV 2010 (pdf).

- Wongun Choi, Caroline Pantofaru, Silvio Savarese, "Detecting and Tracking People using an RGB-D Camera via Multiple Detector Fusion", CORP (in conjunction with ICCV) 2011 (pdf).

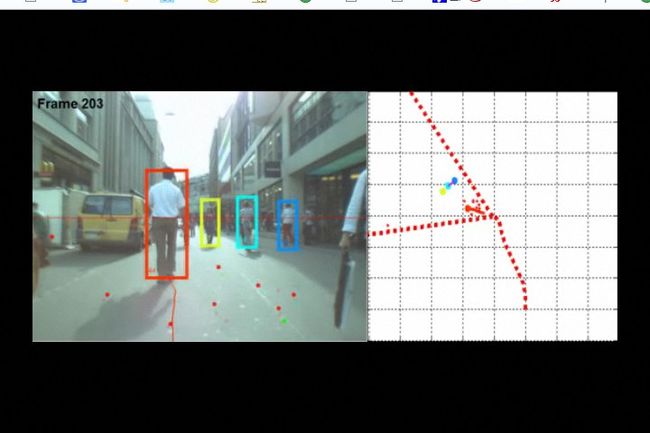

In this project, we aim to solve the challenging problem of multi-target tracking under unknown camera motion. To meet the most general conditions of tracking applications, we do not rely on a stereo camera or an SfM algorithm. To cope with unknown camera motion as well as frequent occlusions between targets, the targets are tracked in 3D coordinates and the camera's parameters are estimated jointly with them. Specifically, our goals are to: i) solve the multi-object tracking problem using a single uncalibrated moving camera; ii) handle complex scenes where multiple pedestrians move at the same time and occlude each other; iii) estimate the 2D/3D temporal trajectories within the camera reference system.

The key contribution of our work is that we simultaneously estimate the camera parameters (such as focal length and camera pose) and track objects (such as pedestrians) as they move in the scene. Tracks provide cues for estimating camera parameters through their scale and velocity in the image plane; at the same time, camera parameters help track objects more robustly because they supply critical prior information. This, in turn, allows us to estimate 3D object trajectories in the camera reference system. We use a simplified camera model that allows us to find a compact (but powerful) relationship between the variables (targets and camera parameters) via camera projection constraints. Identifying a handful of feature tracks associated with the static background allows us to add further constraints to the camera model. Finally, we frame our problem as a maximum a posteriori (MAP) estimation problem in the joint variable space. To reduce the otherwise extremely large search space caused by the high dimensionality of the representation, we incorporate an MCMC particle filtering algorithm, which finds the best explanation in a sequential fashion. Note that, unlike previous methods using MCMC, our method is the first to use MCMC for efficiently solving the joint camera estimation and multi-target tracking problem.
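To make the link between track scale and camera parameters concrete, here is a minimal Python sketch of one common simplified ground-plane camera model. It is an illustration under stated assumptions, not the model from the papers or the released C++ code: the camera has negligible roll and small pitch, its pitch is summarized by the image row of the horizon, people stand upright on a flat ground plane, and a typical person height of 1.7 m is assumed. The names `Camera`, `project`, `depth_from_box`, and `camera_height_from_box` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    """Assumed simplified camera: no roll, small pitch, flat ground."""
    focal: float    # focal length in pixels
    height: float   # camera height above the ground plane in meters
    horizon: float  # image row of the horizon in pixels (rows grow downward)


def project(cam, depth, person_height):
    """Project a person standing `depth` meters away onto the image.

    Returns (foot_row, pixel_height): the image row of the feet and the
    height of the person's bounding box in pixels.
    """
    foot_row = cam.horizon + cam.focal * cam.height / depth
    pixel_height = cam.focal * person_height / depth
    return foot_row, pixel_height


def depth_from_box(cam, pixel_height, person_height=1.7):
    """Camera -> target cue: recover depth from the box height,
    given the camera and an assumed person height."""
    return cam.focal * person_height / pixel_height


def camera_height_from_box(horizon, foot_row, pixel_height, person_height=1.7):
    """Target -> camera cue: recover the camera height from one box,
    given the horizon row and an assumed person height."""
    return person_height * (foot_row - horizon) / pixel_height


if __name__ == "__main__":
    cam = Camera(focal=800.0, height=1.5, horizon=240.0)
    foot_row, pixel_height = project(cam, depth=10.0, person_height=1.7)
    print("foot row:", foot_row, "box height:", pixel_height)
    print("recovered depth:", depth_from_box(cam, pixel_height))
    print("recovered camera height:",
          camera_height_from_box(cam.horizon, foot_row, pixel_height))
```

The two inverse functions show why joint estimation is attractive: each detection constrains the camera, and a known camera constrains each target's 3D position. In a full system these constraints would be combined probabilistically over many targets and frames rather than inverted one box at a time.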

The second key contribution is that we obtain robust and stable tracking results (i.e. we uniquely associate an object identity with each track) by incorporating interaction models. Interactions between targets have been largely ignored in the object tracking literature because of the difficulty of modeling moving targets and the resulting computational cost. The independence assumption is reasonable when the scene is sparse (only a few objects exist in the scene). In a crowded scene, however, an independent motion model often fails to account for a target's deviation from its prediction: if a collision is expected, for example, targets change their velocity and direction rapidly to avoid it. Modeling interactions therefore allows us to disambiguate occlusions between targets and better associate object labels with the underlying trajectories. This capability is further enhanced by the fact that our trajectories are estimated in 3D rather than in the image plane. Our interaction models are coherently integrated into the graphical model introduced above.
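The effect of an interaction term can be illustrated with a small Python sketch. This is a hypothetical example, not the interaction model defined in the papers: it assumes targets are 2D points on the ground plane, uses a Gaussian independent motion prior around each target's predicted position, and multiplies in a pairwise repulsion factor that approaches 0 when two targets coincide. The function names and the `sigma` values are assumptions chosen for illustration.

```python
import math
from itertools import combinations


def squared_distance(p, q):
    """Squared Euclidean distance between two ground-plane points (meters)."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def independent_prior(position, predicted, sigma=0.3):
    """Unnormalized Gaussian score: high when a target is near its prediction."""
    return math.exp(-squared_distance(position, predicted) / (2.0 * sigma ** 2))


def repulsion(p, q, sigma=0.5):
    """Assumed pairwise factor: 0 for coincident targets, close to 1 when far apart."""
    return 1.0 - math.exp(-squared_distance(p, q) / (2.0 * sigma ** 2))


def joint_score(positions, predictions):
    """Score a joint hypothesis: per-target motion priors times pairwise repulsion."""
    score = 1.0
    for position, predicted in zip(positions, predictions):
        score *= independent_prior(position, predicted)
    for p, q in combinations(positions, 2):
        score *= repulsion(p, q)
    return score


if __name__ == "__main__":
    # Two targets whose independent predictions nearly collide.
    predictions = [(0.0, 0.0), (0.2, 0.0)]
    follow_prediction = [(0.0, 0.0), (0.2, 0.0)]
    step_aside = [(-0.2, 0.0), (0.4, 0.0)]
    print("follow prediction:", joint_score(follow_prediction, predictions))
    print("step aside:", joint_score(step_aside, predictions))
```

With these assumed parameters, the hypothesis in which both targets deviate slightly to avoid each other scores higher than the one that follows the independent predictions into a near collision, which is the behavior an independent motion model cannot express.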

We validate our theoretical results in a number of experiments using our own dataset (provided below) and the publicly available ETH dataset. In addition, a new RGB-D dataset collected using a Kinect camera mounted on a robot (to be made available soon) is used to evaluate our algorithm. Please see the ECCV paper and the workshop paper for more details. The poster presented at ECCV is available as well.

Dataset

The semi-static datasets used in our research can be found here. All the videos were taken by a person holding a single hand-held camera. The videos contain multiple targets, camera panning, sudden camera shakes, etc.

Result Video

Result Image

Source Code

Launch an SVN client and check out the code (e.g. svn co link):

- Version 0.5 release (August 7th, 2012): C++ code with a demo at http://mtt-umich.googlecode.com/svn/tags/release-0.5

Bibtex

@InProceedings{Wongun_iccvw_2011,

author = {Wongun Choi and Caroline Pantofaru and Silvio Savarese},

title = {Detecting and Tracking People using an RGB-D Camera via Multiple Detector Fusion},

booktitle = {CORP in conjunction with ICCV},

year = {2011},

}

author = {Wongun Choi and Silvio Savarese},

title = {Multiple Target Tracking in World Coordinate with Single, Minimally Calibrated Camera},

booktitle = {European Conference on Computer Vision, ECCV},

year = {2010},

}

Acknowledgement

This project is sponsored by NSF EAGER (award #1052762) and Toyota. This work is in collaboration with Dr. Caroline Pantofaru (Willow Garage).

Last Updated: September 12th, 2012

Contact : wgchoi at umich dot edu