论文上对GPU的讨论之《矩阵式FDTD算法图形加速实现》

原文标题:《IMPLEMENTATION OF MATRIX-TYPE FDTD ALGORITHM ON A GRAPHICS ACCELERATOR》

首位两段就不加介绍了,主要翻译正文:

稀疏矩阵的压缩

So far the efficiency of GPUs has been demonstrated on algorithms such as FDTD [2] and MRTD [5].

迄今为止,在GPU上已经可以有效运行诸如FDTD,MRTD的算法。

In all these algorithms computations can be carried out without the need to arrange the data in matrices.

在这些算法中计算时不需要用矩阵存储数据。

On the other hand, many techniques used nowadays in computational electromagnetics rely on processing matrices.

另一方面,当今许多计算电磁学技术还是依赖于矩阵的。

For that reason in this paper we shall concentrate on an alternative representation of the FDTD technique, in which the curl operators are represented by sparse matrices.

基于上述原因,此篇文章我们将要集中(解决)在FDTD技术替代的表示方法,其中,旋度算符用稀疏矩阵表示。

Using a C-like language notation the matrix version of one FDTD step can be written as :

使用类C语言标记一步FDTD的矩阵形式,写成:

[e] += [RH] * [h] (2.1)

[h] += [RE] * [e] (2.2)

Analyzing (2.1) and (2.2), one can draw a conclusion that it is the matrix and vector multiplication that is a key to arriving at an effective computation.

分析2.1和2.2式可以得出结论,高效得计算关键取决于矩阵和向量乘法。

Moreover, being aware of the fact that matrices are highly sparse [6].

此外,矩阵RH和RE 稀疏度高,有必要选择一个恰当的矩阵存储方案。

The results of test carried out on and for three matrix storage schemes CCS (Compressed Column Storage), BCRS (Block Compressed Row Storage) and CRS (Compressed Column Storage) are presented in Tab.1.

在CPU (Intel Core2 Duo E6400 [2,14 GHz])和GPU (Nvidia GeForce 8600 GT [1,188 GHz])上所做的测试,三个矩阵存储方案:CCS (Compressed Column Storage压缩列存储), BCRS (Block Compressed Row Storage) 和 CRS (Compressed Row Storage) 如表1所示。

Table 1. Results for a problem with one sub-diagonal, one diagonal and one super-diagonal.

A very simple was used in this test.

用以测试的是一个简单的三对角线矩阵。

The results indicate that depending on the assumed matrix storage scheme, ) or lag behind the same operation executed on a CPU.

结果表明,假定的矩阵存储方案不同,在GPU上执行一个矩阵乘以向量运算领先(对于CRS和 BCRS)或者落后CPU执行相同的操作。

To explain poor performance of the CCS, one has to note that during the tests on CCS that the column compression was subject to,

为了解释CCS拙劣的性能,需要指出来的是,在CCS执行测试的时候,列是受压缩的,看来,该算法的提出形式,即一个稀疏矩阵乘一个向量陷入两个主要的困难。

The first of them occurred on attempts to write data to the same address in global memory by different threads, which results from an imperfection of the architecture for float type.

第一个在于不同的线程往全局内存写入数据的时候,这个问题是由于单精度类型的不完美的体系结构上的。

The second occurs when columns have a different number of elements. So far,

第二个出现在当列有不同数目的元素的时候。到此为止,解说了在GPU执行程序速度比CPU上慢的问题所在。

BCRS is effective only for those problems where of non-zero elements occur.

BSRS 仅对这些问题有效:局部非零元素凝聚情况出现。

The best results were obtained for CRS.

最好的结果有CRS获得。使得这种压缩比前几个更好的原因在于,相对于CCS,它没有想要写入相同(内存)地址的线程。

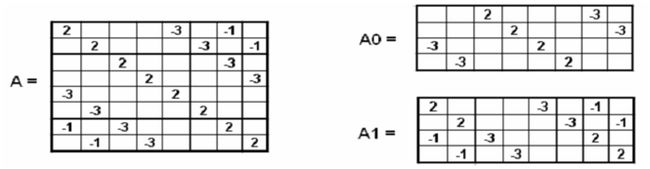

在一行有不同数目的元素的问题可以这样解决:将原问题分解为有相同数目元素的子问题。如图1所示。

Figure 1. Dividing a problem A to sub-problems A0 and A1.

is divided to kernels and each of them is a sub-problem.

从这个角度看一个程序,只需要将一个核分解为一对核,并且每个核负责解决一个子问题。

of CRS implementations with and without dividing a problem to sub-problems for problem dimension is showed in Table 2.

对一个尺寸为n = 98304的问题,有没有将问题分解为子问题的CRS实现的比较如表2所示。

Table 2. Results for a problem with one sub-diagonal, diagonal and super-diagonal.

(2:CRS* - implementation with subdivision)

3. CRS subdivision in a matrix version of FDTD method.

FDTD方法的CRS矩阵分解形式

上一节中介绍的实验表明,在CRS上再进行细分使其每一行拥有相同数目的元素,可以达到最好的结果。

本节介绍,使用了式2.1和2.2的矩阵式的FDTD方法,在一个空的谐振腔内进行测试,以计算一个三维问题的电场矢量和磁场矢量(图2.2)。

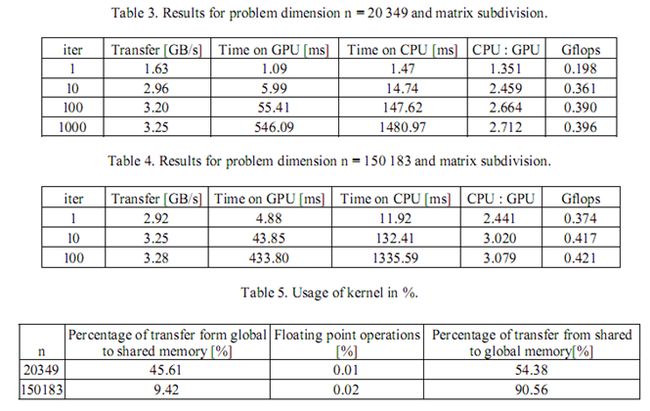

The tests were performed for different problem dimensions n = 20 349 and n = 150 183.

测试演示了不同的问题规模n = 20 349 和 n = 150 183.

Table 3 and Table 4 present results where matrixes compressed with dividing them to sub-problems (showed in fig.1) for different problem`s dimensions.

表2和表4描述了对于不同问题规模,RE 和 RH 矩阵使用细分子问题法(如图1所示)压缩。

Table 5 s percentage of three basic operations performed in kernels at each step.

表5描述了三个基本的运算在核内的迭代过程所占的百分比。

观察3和表4收集到的数据,可以推导随着问题规模的增加,效率随之提高。

Additionally, the due to kernel start up has to be taken into account if the number of iterations is small.

此外,如果迭代数目很小……必须考虑在内。

While this is a rare situation in FDTD, other techniques such as FDFD where iterative matrix solvers are often used, may suffer from this problem.

尽管这是FDTD中很少用的仿真,其他技术,诸如FDTD,迭代矩阵求解也经常使用到,可以从此问题中获得启发。

Theoretically, even better efficiency can be achieved if transfer from global memory to shared memory is coalesced and usage of data from shared memory is of conflicts [7].

理论上,可以获得跟好的效率,如果从全局内存移动(数据)到共享内存接合,并且使用共享内存数据没有冲突。

An analysis of kernels executed on GPU in one iteration proves that for calculations takes up only 0.01% of the total execution time, transfer from global memory to shared memory 45.61% and transfer from shared memory to global memory consumes 54.38% of kernel`s.

分析在GPU内核执行一次迭代证明了,对于n = 20 349的计算量,花费总的执行时间仅仅0.01%,从全局内存传递数据到共享内存45.61%,从共享内存传递数据到全局内存花费54.38% 。

It means that basic floating point computations based on (2.1) and (2.2) are very efficient, but the transfer between memories still needs to be optimized

这就意味着,基本2.1和2.2的单精度浮点数运算是非常有效的,但是内存之间的移动仍然有待于优化.

The reason why transfer from shared memory to global memory grows with problem is due to that are done in order to receive write es in vectors after dividing problem to sub-problems.

从共享内存中移动到全局内存增加了问题的规模原因在于,在分解问题为子问题后,为了接受向量中的写索引,进行了排列(操作)。

References

[1] General-Purpose Computation Using Graphics Hardware (GPGPU), Web site [online] http://www.gpgpu.org/

[2] A. Taflove and S. C. Hagness, Computational Electrodynamics: The Finite-Difference Time-Domain

Method, 3rd ed. Norwood, MA: Artech House, 2005. Chapter 20,R. Schneider, L.Turner, M.Okoniewski

Advances in Hardware Acceleration for FDTD

[3] Acceleware, Web site [online] : http://www.acceleware.com

[4] NVIDIA CUDA Programming Guide_1.0,

Web site [online] : http://www.nvidia.com/object/cuda_develop.html

[5] Baron, G.; Fiume, E.; Sarris, C.D. Accelerated Implementation of the S-MRTD Technique Using Graphics

Processor Units; Microwave Symposium Digest, 2006. IEEE MTT-S International

11-16 June 2006 Page(s):1073 - 1076

[6] Shahnaz, Rukhsana; Usman, Anila; Chughtai, Imran R., Review of Storage Techniques for Sparse Matrices;

9th International Multitopic Conference, IEEE INMIC 2005,Dec. 2005 Page(s):1 - 7

[7] Mark Harris, Data Parallel Algorithms in CUDA; Web site [online] http://www.gpgpu.org/sc2007/