[stagefrightplayer] 5 Audio Output: An Introduction to AudioPlayer

Overview

stagefrightplayer uses the AudioPlayer class for audio output.

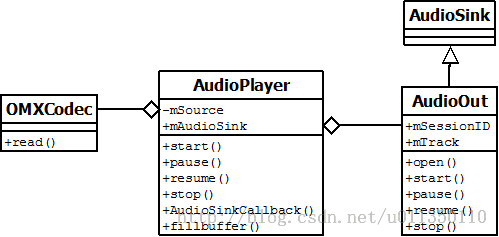

The figure above is the class diagram for AudioPlayer and its related classes.

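When I described the structure of AwesomePlayer earlier, I drew a diagram showing that the input of mAudioPlayer is mAudioSource, i.e. the OMXCodec of the audio decoder. It is handed to AudioPlayer through setSource() and stored in the member mSource. AudioPlayer can be seen as a wrapper around AudioOutput (MediaPlayerService::AudioOutput): the actual PCM playback is carried out through the AudioOutput class.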

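AudioOutput derives from AudioSink. AudioSink is an abstract class that only defines the interface. The actual work is done by an AudioTrack, which is stored in the member mTrack. AudioTrack will be covered in detail later, together with AudioFlinger.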
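The following minimal C++ sketch makes this layering concrete. It shows the delegation pattern under heavily simplified, assumed signatures: AudioPlayer holds only a pointer to the abstract AudioSink interface, and a concrete output class implements that interface. std::shared_ptr stands in for Android's sp<>. FakeAudioOutput is a hypothetical stand-in for MediaPlayerService::AudioOutput that records calls instead of driving an AudioTrack.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Illustrative interface only: the real MediaPlayerBase::AudioSink::open()
// takes more parameters (format, buffer count, callback, cookie, ...).
class AudioSink {
public:
    virtual ~AudioSink() = default;
    virtual bool open(unsigned sampleRate, int channelCount) = 0;
    virtual void start() = 0;
};

// Hypothetical stand-in for MediaPlayerService::AudioOutput. The real class
// creates and drives an AudioTrack; this fake only records what it was asked.
class FakeAudioOutput : public AudioSink {
public:
    bool open(unsigned sampleRate, int channelCount) override {
        openedRate = sampleRate;
        openedChannels = channelCount;
        return true;
    }
    void start() override { started = true; }

    unsigned openedRate = 0;
    int openedChannels = 0;
    bool started = false;
};

// Stand-in for AudioPlayer: it only ever talks to the abstract interface.
class AudioPlayer {
public:
    explicit AudioPlayer(std::shared_ptr<AudioSink> sink)
        : mAudioSink(std::move(sink)) {}

    // Returns false when there is no sink; the real AudioPlayer::start()
    // would instead fall back to creating an AudioTrack itself.
    bool start(unsigned sampleRate, int channelCount) {
        if (!mAudioSink || !mAudioSink->open(sampleRate, channelCount)) {
            return false;
        }
        mAudioSink->start();
        return true;
    }

private:
    std::shared_ptr<AudioSink> mAudioSink;  // plays the role of sp<AudioSink>
};
```

Swapping FakeAudioOutput for a different AudioSink implementation requires no change to AudioPlayer. That is the point of routing every playback call through the interface.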

This article covers the following:

1 Preparation

2 Timestamp calculation

3 Seek

Below we walk through the whole process in the code.

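1 Preparation

In AwesomePlayer, the statements related to AudioPlayer are mainly:

```cpp
mAudioPlayer = new AudioPlayer(mAudioSink, allowDeepBuffering, this);
mAudioPlayer->setSource(mAudioSource);
startAudioPlayer_l();
```

They are called from AwesomePlayer::play_l(). The listing below comes from an older Android version than the three lines above. In that version the constructor takes only the sink, and start() is called directly rather than through startAudioPlayer_l():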

```cpp
status_t AwesomePlayer::play_l() {
    // ...

    if (mAudioSource != NULL) {
        if (mAudioPlayer == NULL) {
            if (mAudioSink != NULL) {
                mAudioPlayer = new AudioPlayer(mAudioSink);
                mAudioPlayer->setSource(mAudioSource);

                // We've already started the MediaSource in order to enable
                // the prefetcher to read its data.
                status_t err = mAudioPlayer->start(
                        true /* sourceAlreadyStarted */);

                if (err != OK) {
                    delete mAudioPlayer;
                    mAudioPlayer = NULL;

                    mFlags &= ~(PLAYING | FIRST_FRAME);

                    return err;
                }

                delete mTimeSource;
                mTimeSource = mAudioPlayer;

                deferredAudioSeek = true;

                mWatchForAudioSeekComplete = false;
                mWatchForAudioEOS = true;
            }
        } else {
            mAudioPlayer->resume();
        }

        postCheckAudioStatusEvent_l();
    }

    // ...
}
```

Let's go through these calls one by one.

1.1 Constructor

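First, a word about mAudioSink. When mAudioSink is not NULL, AwesomePlayer passes it to the AudioPlayer constructor. All playback operations in AudioPlayer are then carried out through it.

As the class diagram above shows, mAudioSink here is the AudioOutput object registered by MediaPlayerService. The code is in MediaPlayerService: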

```cpp
status_t MediaPlayerService::Client::setDataSource(/* ... */)
{
    // ...
    mAudioOutput = new AudioOutput();
    static_cast<MediaPlayerInterface*>(p.get())->setAudioSink(mAudioOutput);
    // ...
}
```

This call goes through StagefrightPlayer::setAudioSink and ends up in AwesomePlayer:

```cpp
void AwesomePlayer::setAudioSink(
        const sp<MediaPlayerBase::AudioSink> &audioSink) {
    Mutex::Autolock autoLock(mLock);

    mAudioSink = audioSink;
}
```

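The AudioPlayer is constructed with this mAudioSink member. When analysing the operations on the mAudioSink passed in, remember that the actual object is an AudioOutput defined in MediaPlayerService.

Now the actual constructor: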

```cpp
AudioPlayer::AudioPlayer(const sp<MediaPlayerBase::AudioSink> &audioSink)
    : mAudioTrack(NULL),
      mInputBuffer(NULL),
      mSampleRate(0),
      mLatencyUs(0),
      mFrameSize(0),
      mNumFramesPlayed(0),
      mPositionTimeMediaUs(-1),
      mPositionTimeRealUs(-1),
      mSeeking(false),
      mReachedEOS(false),
      mFinalStatus(OK),
      mStarted(false),
      mIsFirstBuffer(false),
      mFirstBufferResult(OK),
      mFirstBuffer(NULL),
      mAudioSink(audioSink) {
}
```

It only initializes the members and stores the audioSink argument in the member mAudioSink.

1.2 setSource

In AwesomePlayer the call is mAudioPlayer->setSource(mAudioSource);

mAudioSource is the OMXCodec object, i.e. the decoder whose output MediaBuffers AudioPlayer will read. The implementation in AudioPlayer is:

```cpp
void AudioPlayer::setSource(const sp<MediaSource> &source) {
    CHECK_EQ(mSource, NULL);
    mSource = source;
}
```

It simply stores the source in the member mSource.

1.3 startAudioPlayer_l()

In AwesomePlayer, this essentially calls status_t err = mAudioPlayer->start(true /* sourceAlreadyStarted */);

Here is the AudioPlayer implementation:

```cpp
status_t AudioPlayer::start(bool sourceAlreadyStarted) {
    CHECK(!mStarted);
    CHECK(mSource != NULL);

    status_t err;
    if (!sourceAlreadyStarted) {
        err = mSource->start();

        if (err != OK) {
            return err;
        }
    }

    // We allow an optional INFO_FORMAT_CHANGED at the very beginning
    // of playback, if there is one, getFormat below will retrieve the
    // updated format, if there isn't, we'll stash away the valid buffer
    // of data to be used on the first audio callback.

    CHECK(mFirstBuffer == NULL);
    mFirstBufferResult = mSource->read(&mFirstBuffer);
    if (mFirstBufferResult == INFO_FORMAT_CHANGED) {
        LOGV("INFO_FORMAT_CHANGED!!!");

        CHECK(mFirstBuffer == NULL);
        mFirstBufferResult = OK;
        mIsFirstBuffer = false;
    } else {
        mIsFirstBuffer = true;
    }

    sp<MetaData> format = mSource->getFormat();
    const char *mime;
    bool success = format->findCString(kKeyMIMEType, &mime);
    CHECK(success);
    CHECK(!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_RAW));

    success = format->findInt32(kKeySampleRate, &mSampleRate);
    CHECK(success);

    int32_t numChannels;
    success = format->findInt32(kKeyChannelCount, &numChannels);
    CHECK(success);

    if (mAudioSink.get() != NULL) {
        status_t err = mAudioSink->open(
                mSampleRate, numChannels, AudioSystem::PCM_16_BIT,
                DEFAULT_AUDIOSINK_BUFFERCOUNT,
                &AudioPlayer::AudioSinkCallback, this);
        if (err != OK) {
            if (mFirstBuffer != NULL) {
                mFirstBuffer->release();
                mFirstBuffer = NULL;
            }

            if (!sourceAlreadyStarted) {
                mSource->stop();
            }

            return err;
        }

        mLatencyUs = (int64_t)mAudioSink->latency() * 1000;
        mFrameSize = mAudioSink->frameSize();

        mAudioSink->start();
    } else {
        mAudioTrack = new AudioTrack(
                AudioSystem::MUSIC, mSampleRate, AudioSystem::PCM_16_BIT,
                (numChannels == 2)
                    ? AudioSystem::CHANNEL_OUT_STEREO
                    : AudioSystem::CHANNEL_OUT_MONO,
                0, 0, &AudioCallback, this, 0);

        if ((err = mAudioTrack->initCheck()) != OK) {
            delete mAudioTrack;
            mAudioTrack = NULL;

            if (mFirstBuffer != NULL) {
                mFirstBuffer->release();
                mFirstBuffer = NULL;
            }

            if (!sourceAlreadyStarted) {
                mSource->stop();
            }

            return err;
        }

        mLatencyUs = (int64_t)mAudioTrack->latency() * 1000;
        mFrameSize = mAudioTrack->frameSize();

        mAudioTrack->start();
    }

    mStarted = true;

    return OK;
}
```

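The main parts of this code are:

Call mSource->read to start decoding. As explained in the OMXCodec article, decoding the first frame effectively starts the decoding loop. The buffer returned is kept in mFirstBuffer for the first fill callback, unless the read reports INFO_FORMAT_CHANGED.

Get the audio parameters: sample rate, channel count and sample format (only PCM_16_BIT is supported here).

Start the output: if mAudioSink is non-NULL, it is opened and started for output. Otherwise an AudioTrack is constructed directly for audio output. AudioTrack is the lower-level interface, and AudioOutput is a wrapper around AudioTrack.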
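The first-buffer handling in start() fits in a few lines. The sketch below is hypothetical, not the AOSP code: Status, ReadResult, FakeSource and the two helper functions are invented for illustration, and an int stands in for a MediaBuffer. It follows the rule stated in the code comment. After INFO_FORMAT_CHANGED there is nothing to keep. Otherwise the buffer is stashed, and the first fill callback hands it out before any further read.

```cpp
#include <cassert>
#include <deque>
#include <optional>
#include <utility>

// Hypothetical stand-ins for OK / INFO_FORMAT_CHANGED.
enum class Status { Ok, FormatChanged };

struct ReadResult {
    Status status;
    std::optional<int> buffer;  // an int stands in for a MediaBuffer
};

// Fake decoder: returns scripted read results in order.
class FakeSource {
public:
    explicit FakeSource(std::deque<ReadResult> script) : mScript(std::move(script)) {}
    ReadResult read() {
        ReadResult r = mScript.front();
        mScript.pop_front();
        return r;
    }
private:
    std::deque<ReadResult> mScript;
};

// Mirrors mFirstBuffer / mIsFirstBuffer.
struct FirstBufferState {
    std::optional<int> firstBuffer;
    bool isFirstBuffer = false;
};

// Like the first read in AudioPlayer::start().
FirstBufferState primeFirstBuffer(FakeSource &source) {
    FirstBufferState s;
    ReadResult r = source.read();
    if (r.status == Status::FormatChanged) {
        assert(!r.buffer.has_value());  // a format change carries no data
        s.isFirstBuffer = false;
    } else {
        s.firstBuffer = r.buffer;       // stash it for the first callback
        s.isFirstBuffer = true;
    }
    return s;
}

// Like the start of fillBuffer(): use the stashed buffer once, then read.
int nextBufferForFill(FirstBufferState &s, FakeSource &source) {
    if (s.isFirstBuffer) {
        s.isFirstBuffer = false;
        int b = *s.firstBuffer;
        s.firstBuffer.reset();
        return b;
    }
    return *source.read().buffer;
}
```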

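In start(), the work is mainly delegated to mAudioSink. The key code is shown below. It comes from a newer Android version than the start() listing above, which is why open() takes the extra channelMask and flags arguments: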

```cpp
status_t err = mAudioSink->open(
        mSampleRate, numChannels, channelMask, AUDIO_FORMAT_PCM_16_BIT,
        DEFAULT_AUDIOSINK_BUFFERCOUNT,
        &AudioPlayer::AudioSinkCallback,
        this,
        (mAllowDeepBuffering ?
                AUDIO_OUTPUT_FLAG_DEEP_BUFFER :
                AUDIO_OUTPUT_FLAG_NONE));

mAudioSink->start();
```

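As mentioned before, mAudioSink is an AudioOutput object. Let's look at the actual implementation in mediaplayerservice.cpp.

First, mAudioSink->open. Note that one of its arguments is the function pointer AudioPlayer::AudioSinkCallback. While AudioOutput is playing PCM, it calls this callback periodically to get more data. We'll go through the implementation piece by piece: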

```cpp
status_t MediaPlayerService::AudioOutput::open(
        uint32_t sampleRate, int channelCount, audio_channel_mask_t channelMask,
        audio_format_t format, int bufferCount,
        AudioCallback cb, void *cookie,
        audio_output_flags_t flags)
{
    mCallback = cb;
    mCallbackCookie = cookie;

    // Check argument "bufferCount" against the minimum buffer count
    if (bufferCount < mMinBufferCount) {
        ALOGD("bufferCount (%d) is too small and increased to %d", bufferCount, mMinBufferCount);
        bufferCount = mMinBufferCount;
    }
    ALOGV("open(%u, %d, 0x%x, %d, %d, %d)", sampleRate, channelCount, channelMask,
            format, bufferCount, mSessionId);
    int afSampleRate;
    int afFrameCount;
    uint32_t frameCount;

    if (AudioSystem::getOutputFrameCount(&afFrameCount, mStreamType) != NO_ERROR) {
        return NO_INIT;
    }
    if (AudioSystem::getOutputSamplingRate(&afSampleRate, mStreamType) != NO_ERROR) {
        return NO_INIT;
    }

    frameCount = (sampleRate*afFrameCount*bufferCount)/afSampleRate;

    if (channelMask == CHANNEL_MASK_USE_CHANNEL_ORDER) {
        channelMask = audio_channel_out_mask_from_count(channelCount);
        if (0 == channelMask) {
            ALOGE("open() error, can\'t derive mask for %d audio channels", channelCount);
            return NO_INIT;
        }
    }
    // ... (continued below)
```

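The code above mainly processes the arguments. The callback is saved in mCallback, and cookie is the AudioPlayer object pointer.

It then computes frameCount from the requested sample rate, the output's frame count (afFrameCount), the output's sample rate (afSampleRate) and bufferCount. If needed, it also derives a channel mask from the channel count.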
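Here is a worked example of the frameCount formula. The sketch evaluates it with illustrative numbers: a 44.1 kHz track, and a mixer assumed to run at 48 kHz with 1024-frame buffers, with bufferCount = 4. The formula converts bufferCount mixer buffers (afFrameCount frames at afSampleRate) into frames at the track's own sample rate, so the track buffer holds about the same duration of audio. Here that is 4 × 1024 / 48000 s ≈ 85.3 ms, which at 44100 Hz is 3763 frames after integer truncation. Widening to 64 bits is my own precaution; the original uses 32-bit arithmetic.

```cpp
#include <cassert>
#include <cstdint>

// Evaluates frameCount = sampleRate * afFrameCount * bufferCount / afSampleRate,
// the expression used in AudioOutput::open(). Inputs are illustrative.
uint32_t computeFrameCount(uint32_t sampleRate, uint32_t afFrameCount,
                           uint32_t bufferCount, uint32_t afSampleRate) {
    assert(afSampleRate != 0);
    // Widen to 64 bits so the intermediate product cannot overflow.
    uint64_t product = uint64_t(sampleRate) * afFrameCount * bufferCount;
    return static_cast<uint32_t>(product / afSampleRate);  // truncates
}
```

When the track rate equals the mixer rate, the result is simply afFrameCount × bufferCount (4096 frames for the numbers above).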

```cpp
    AudioTrack *t;
    CallbackData *newcbd = NULL;
    if (mCallback != NULL) {
        newcbd = new CallbackData(this);
        t = new AudioTrack(
                mStreamType,
                sampleRate,
                format,
                channelMask,
                frameCount,
                flags,
                CallbackWrapper,
                newcbd,
                0,  // notification frames
                mSessionId);
    } else {
        t = new AudioTrack(
                mStreamType,
                sampleRate,
                format,
                channelMask,
                frameCount,
                flags,
                NULL,
                NULL,
                0,
                mSessionId);
    }
```

This constructs the AudioTrack object. As mentioned before, the actual playback interface is implemented by wrapping AudioTrack.

```cpp
    mCallbackData = newcbd;
    ALOGV("setVolume");
    t->setVolume(mLeftVolume, mRightVolume);

    mSampleRateHz = sampleRate;
    mFlags = flags;
    mMsecsPerFrame = mPlaybackRatePermille / (float) sampleRate;
    uint32_t pos;
    if (t->getPosition(&pos) == OK) {
        mBytesWritten = uint64_t(pos) * t->frameSize();
    }
    mTrack = t;

    status_t res = t->setSampleRate(mPlaybackRatePermille * mSampleRateHz / 1000);
    if (res != NO_ERROR) {
        return res;
    }
    t->setAuxEffectSendLevel(mSendLevel);
    return t->attachAuxEffect(mAuxEffectId);
}
```

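Finally, the AudioTrack object is stored in the member mTrack.

There is another important detail here. When the AudioTrack is constructed, CallbackWrapper is passed in as its callback, with newcbd (a CallbackData wrapping this AudioOutput) as the cookie.

Whenever the AudioTrack needs data, it calls this function. Here is the implementation: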

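```cpp
void MediaPlayerService::AudioOutput::CallbackWrapper(
        int event, void *cookie, void *info) {
    //ALOGV("callbackwrapper");
    if (event != AudioTrack::EVENT_MORE_DATA) {
        return;
    }

    CallbackData *data = (CallbackData*)cookie;
    AudioOutput *me = data->getOutput();
    // For EVENT_MORE_DATA, info points to the AudioTrack::Buffer to fill.
    AudioTrack::Buffer *buffer = (AudioTrack::Buffer *)info;

    // ... (other details omitted)

    size_t actualSize = (*me->mCallback)(
            me, buffer->raw, buffer->size, me->mCallbackCookie);

    // Tell AudioTrack how many bytes were actually written.
    buffer->size = actualSize;
}
```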

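Only the important code is listed. When event == EVENT_MORE_DATA, i.e. the track needs more data, mCallback is called to fill the buffer.

mCallback here is the AudioSinkCallback function pointer passed to open(). Its code is: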

```cpp
size_t AudioPlayer::AudioSinkCallback(
        MediaPlayerBase::AudioSink *audioSink,
        void *buffer, size_t size, void *cookie) {
    AudioPlayer *me = (AudioPlayer *)cookie;

    return me->fillBuffer(buffer, size);
}
```

So what actually gets called is fillBuffer. I won't list the whole of fillBuffer here; its core is a single statement:

```cpp
err = mSource->read(&mInputBuffer, &options);
```

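That is, it fills the buffer with MediaBuffers read from the decoder. fillBuffer also does some playback-position bookkeeping, which we'll look at when discussing synchronization.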
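The whole pull chain (AudioTrack → CallbackWrapper → AudioSinkCallback → fillBuffer) relies on plain function pointers plus a void * cookie that carries the object pointer. The self-contained sketch below reproduces that pattern with hypothetical types (TrackBuffer, Output, Player) rather than the real Android classes. For brevity, the cookie given to CallbackWrapper is the Output itself instead of a CallbackData.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstring>
#include <utility>
#include <vector>

// Hypothetical stand-ins for AudioTrack::EVENT_MORE_DATA and AudioTrack::Buffer.
enum { EVENT_MORE_DATA = 0, EVENT_OTHER = 1 };

struct TrackBuffer {
    void *raw;    // where the callee should write PCM
    size_t size;  // in: capacity in bytes; out: bytes actually written
};

class Output;
// Shaped like AudioSink::AudioCallback: (sink, buffer, size, cookie) -> bytes written.
using SinkCallback = size_t (*)(Output *, void *, size_t, void *);

// Plays the role of MediaPlayerService::AudioOutput.
class Output {
public:
    void open(SinkCallback cb, void *cookie) {
        mCallback = cb;
        mCallbackCookie = cookie;
    }

    // Plays the role of CallbackWrapper: the track calls it with opaque pointers.
    static void CallbackWrapper(int event, void *cookie, void *info) {
        if (event != EVENT_MORE_DATA) {
            return;
        }
        Output *me = static_cast<Output *>(cookie);
        TrackBuffer *buffer = static_cast<TrackBuffer *>(info);
        size_t actualSize =
            (*me->mCallback)(me, buffer->raw, buffer->size, me->mCallbackCookie);
        buffer->size = actualSize;  // report how much was really filled
    }

private:
    SinkCallback mCallback = nullptr;
    void *mCallbackCookie = nullptr;
};

// Plays the role of AudioPlayer; a byte vector stands in for decoded MediaBuffers.
class Player {
public:
    explicit Player(std::vector<unsigned char> pcm) : mPcm(std::move(pcm)) {}

    // Like AudioPlayer::AudioSinkCallback: recover 'this' from the cookie.
    static size_t AudioSinkCallback(Output *, void *buffer, size_t size, void *cookie) {
        return static_cast<Player *>(cookie)->fillBuffer(buffer, size);
    }

    size_t fillBuffer(void *data, size_t size) {
        size_t n = std::min(size, mPcm.size() - mPos);
        if (n > 0) {
            std::memcpy(data, mPcm.data() + mPos, n);
            mPos += n;
        }
        return n;
    }

private:
    std::vector<unsigned char> mPcm;
    size_t mPos = 0;
};
```

After out.open(&Player::AudioSinkCallback, &player), each call to Output::CallbackWrapper(EVENT_MORE_DATA, &out, &buf) pulls bytes from the player into buf.raw and sets buf.size to the number actually written. A started AudioTrack does the same thing periodically.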

Finally, let's look at start():

```cpp
void MediaPlayerService::AudioOutput::start()
{
    ALOGV("start");
    if (mCallbackData != NULL) {
        mCallbackData->endTrackSwitch();
    }
    if (mTrack) {
        mTrack->setVolume(mLeftVolume, mRightVolume);
        mTrack->setAuxEffectSendLevel(mSendLevel);
        mTrack->start();
    }
}
```

It is quite simple: it just calls mTrack->start(). Once the AudioTrack has started, it periodically calls the callback to pull data from the decoder.

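2 Timestamp calculation

This section explains how AudioPlayer updates its timestamps. AwesomePlayer uses the audio timestamps for A/V synchronization.

As described before, AwesomePlayer drives the whole playback through onVideoEvent, which contains the following code: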

```cpp
TimeSource *ts =
    ((mFlags & AUDIO_AT_EOS) || !(mFlags & AUDIOPLAYER_STARTED))
        ? &mSystemTimeSource : mTimeSource;

if (mFlags & FIRST_FRAME) {
    modifyFlags(FIRST_FRAME, CLEAR);
    mSinceLastDropped = 0;
    mTimeSourceDeltaUs = ts->getRealTimeUs() - timeUs;
}

int64_t realTimeUs, mediaTimeUs;
if (!(mFlags & AUDIO_AT_EOS) && mAudioPlayer != NULL
    && mAudioPlayer->getMediaTimeMapping(&realTimeUs, &mediaTimeUs)) {
    mTimeSourceDeltaUs = realTimeUs - mediaTimeUs;
}

int64_t nowUs = ts->getRealTimeUs() - mTimeSourceDeltaUs;

int64_t latenessUs = nowUs - timeUs;

// ======================

if (latenessUs > 500000ll
        && mAudioPlayer != NULL
        && mAudioPlayer->getMediaTimeMapping(
            &realTimeUs, &mediaTimeUs)) {
    if (mWVMExtractor == NULL) {
        ALOGI("we're much too late (%.2f secs), video skipping ahead",
             latenessUs / 1E6);

        mVideoBuffer->release();
        mVideoBuffer = NULL;

        mSeeking = SEEK_VIDEO_ONLY;
        mSeekTimeUs = mediaTimeUs;

        postVideoEvent_l();
        return;
    } else {
        // The widevine extractor doesn't deal well with seeking
        // audio and video independently. We'll just have to wait
        // until the decoder catches up, which won't be long at all.
        ALOGI("we're very late (%.2f secs)", latenessUs / 1E6);
    }
}

if (latenessUs < -10000) {
    // We're more than 10ms early.
    postVideoEvent_l(10000);
    return;
}
```

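The key part is the code above the ====== line, which computes latenessUs. Based on its value, the player then decides whether to drop frames, or to play faster or slower. The code below the ====== line performs the actual response.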
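Once latenessUs is known, the two thresholds in the listing above reduce to a small decision. The sketch below simplifies under stated assumptions. VideoAction and decideVideoAction are hypothetical names, and isWidevine stands for mWVMExtractor != NULL. The frame-dropping and rendering code that follows in the real onVideoEvent is collapsed into Continue.

```cpp
#include <cassert>
#include <cstdint>

enum class VideoAction {
    SkipAhead,    // drop this frame and seek video only (SEEK_VIDEO_ONLY) to the audio position
    RepostLater,  // more than 10 ms early: post the video event again 10 ms later
    Continue      // fall through to the rest of onVideoEvent (omitted here)
};

// haveAudioMapping corresponds to getMediaTimeMapping() returning true.
VideoAction decideVideoAction(int64_t latenessUs, bool haveAudioMapping,
                              bool isWidevine) {
    if (latenessUs > 500000 && haveAudioMapping && !isWidevine) {
        return VideoAction::SkipAhead;
    }
    if (latenessUs < -10000) {
        return VideoAction::RepostLater;
    }
    return VideoAction::Continue;
}
```

With Widevine content, a frame that is more than 500 ms late is only logged and then handled like any other late frame. This matches the else branch in the listing.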

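We'll focus on the upper part. When the AudioPlayer is created, the following statement is executed:

```cpp
mTimeSource = mAudioPlayer;
```

That is, AudioPlayer serves as the reference clock. In the code above, timeUs is obtained with CHECK(mVideoBuffer->meta_data()->findInt64(kKeyTime, &timeUs));

so timeUs is the timestamp of the next video frame.

mTimeSourceDeltaUs = ts->getRealTimeUs() - timeUs; is evaluated when the first frame is displayed. It gives the difference between the current audio playback time and the timestamp of the first video frame.

Next comes mTimeSourceDeltaUs = realTimeUs - mediaTimeUs;. Both variables are obtained from mAudioPlayer->getMediaTimeMapping(&realTimeUs, &mediaTimeUs).

[Question] This overwrites the value computed for the first frame. Is the first-frame computation only needed as a special case, for example for video-only playback? If anyone knows, please explain. To me it looks useless here.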

Let's look at how realTimeUs and mediaTimeUs are computed. The actual code is:

```cpp
bool AudioPlayer::getMediaTimeMapping(
        int64_t *realtime_us, int64_t *mediatime_us) {
    Mutex::Autolock autoLock(mLock);

    *realtime_us = mPositionTimeRealUs;
    *mediatime_us = mPositionTimeMediaUs;

    return mPositionTimeRealUs != -1 && mPositionTimeMediaUs != -1;
}
```

So the values returned are mPositionTimeRealUs and mPositionTimeMediaUs.

AudioPlayer updates these two members mainly in fillBuffer:

```cpp
size_t AudioPlayer::fillBuffer(void *data, size_t size)
{
    // ...
    mSource->read(&mInputBuffer, ...);
    mInputBuffer->meta_data()->findInt64(kKeyTime, &mPositionTimeMediaUs);
    mPositionTimeRealUs = ((mNumFramesPlayed + size_done / mFrameSize) * 1000000) / mSampleRate;
    // ...
}
```

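As the code shows, mPositionTimeMediaUs is the timestamp of the start of the PCM buffer just read.

mPositionTimeRealUs is a finer-grained time computed from how much data has been consumed so far: the frame count mNumFramesPlayed + size_done / mFrameSize, converted to microseconds at mSampleRate.

The difference between the two indicates how much of this PCM buffer has already been played.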
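To put numbers on the mPositionTimeRealUs expression, take 16-bit stereo PCM at 44100 Hz, so mFrameSize is 4 bytes. Suppose one second (44100 frames) has already been played and 4096 bytes (1024 frames) have been filled in the current call. The result is then 45124 × 1000000 / 44100 = 1023219 µs after integer division. The helper below just evaluates the same expression; its name and parameters are illustrative.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Same expression as in fillBuffer():
//   ((mNumFramesPlayed + size_done / mFrameSize) * 1000000) / mSampleRate
// i.e. frames handed to the sink so far, converted to microseconds.
int64_t positionTimeRealUs(int64_t numFramesPlayed, size_t sizeDone,
                           size_t frameSize, int32_t sampleRate) {
    int64_t frames = numFramesPlayed + static_cast<int64_t>(sizeDone / frameSize);
    return (frames * 1000000) / sampleRate;  // integer division truncates
}
```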

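Finally, the following statement is evaluated:

```cpp
int64_t nowUs = ts->getRealTimeUs() - mTimeSourceDeltaUs;
```

ts->getRealTimeUs() returns the actual playback time, taking into account the data still buffered in the AudioTrack. nowUs can be regarded as the timestamp of the start of the PCM buffer currently being read.

```cpp
int64_t latenessUs = nowUs - timeUs;
```

This compares the timestamp of the start of the PCM buffer with the timestamp of the video frame, and uses the difference for synchronization.

Note that the A/V comparison uses the timestamp at the start of the audio buffer, not the actual audio playback progress (which may not be very accurate).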
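The arithmetic of these two statements can be checked with made-up numbers. Suppose the audio mapping says media time 12,000,000 µs corresponds to real time 2,000,000 µs, so mTimeSourceDeltaUs = -10,000,000 µs. If ts->getRealTimeUs() now returns 2,050,000 µs, nowUs is 12,050,000 µs. A frame stamped 12,040,000 µs is then 10 ms late. A frame stamped 12,100,000 µs is 50 ms early, which the listing would re-post 10 ms later. The AvSync struct below is invented for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper mirroring the two statements in onVideoEvent().
struct AvSync {
    int64_t timeSourceDeltaUs = 0;

    // mTimeSourceDeltaUs = realTimeUs - mediaTimeUs;
    void updateFromAudio(int64_t realTimeUs, int64_t mediaTimeUs) {
        timeSourceDeltaUs = realTimeUs - mediaTimeUs;
    }

    // nowUs = ts->getRealTimeUs() - mTimeSourceDeltaUs; latenessUs = nowUs - timeUs;
    int64_t latenessUs(int64_t tsRealTimeUs, int64_t videoTimeUs) const {
        int64_t nowUs = tsRealTimeUs - timeSourceDeltaUs;
        return nowUs - videoTimeUs;
    }
};
```

A positive latenessUs means the video frame is late relative to the audio clock; a negative value means it is early.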

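3 Seek

When I studied the extractor and decoder earlier, I skipped seeking because I didn't understand some of the details. Here is a brief analysis.

When a seek command arrives: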

```cpp
status_t AwesomePlayer::seekTo_l(int64_t timeUs) {
    if ((mFlags & PLAYING) && mVideoSource != NULL && (mFlags & VIDEO_AT_EOS)) {
        // Video playback completed before, there's no pending
        // video event right now. In order for this new seek
        // to be honored, we need to post one.

        postVideoEvent_l();
    }

    mSeeking = SEEK;
    mSeekNotificationSent = false;
    mSeekTimeUs = timeUs;
    modifyFlags((AT_EOS | AUDIO_AT_EOS | VIDEO_AT_EOS), CLEAR);

    seekAudioIfNecessary_l();

    return OK;
}
```

In AwesomePlayer, this mainly sets the seek flag and the seek target time.

For the extractor, again taking TS as the example, the seek is handled in read():

```cpp
status_t MPEG2TSSource::read(
        MediaBuffer **out, const ReadOptions *options) {
    *out = NULL;

    int64_t seekTimeUs;
    ReadOptions::SeekMode seekMode;
    if (mSeekable && options && options->getSeekTo(&seekTimeUs, &seekMode)) {
        mExtractor->seekTo(seekTimeUs);
    }

    status_t finalResult;
    while (!mImpl->hasBufferAvailable(&finalResult)) {
        if (finalResult != OK) {
            return ERROR_END_OF_STREAM;
        }

        status_t err = mExtractor->feedMore();
        if (err != OK) {
            mImpl->signalEOS(err);
        }
    }

    return mImpl->read(out, options);
}
```

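If a seek is requested, mExtractor->seekTo(seekTimeUs) is called first to move to the position for that time.

The next packet returned is then already data from after the seek point.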
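The key idea in MPEG2TSSource::read() is that a seek request travels with a read() call through ReadOptions, and the source repositions before it returns any data. The sketch below models this with hypothetical types. An std::optional field replaces ReadOptions::getSeekTo(). The rule "resume at the first packet whose timestamp is at or after the target" is my simplifying assumption, not the TS extractor's actual seek behaviour.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <utility>
#include <vector>

struct ReadOptions {
    std::optional<int64_t> seekToUs;  // stand-in for ReadOptions::getSeekTo()
};

struct Packet {
    int64_t timeUs;
};

class FakeTsSource {
public:
    explicit FakeTsSource(std::vector<Packet> packets) : mPackets(std::move(packets)) {}

    // Returns false at end of stream. A pending seek is applied before reading,
    // so the packet returned by this same call comes from the new position.
    bool read(Packet *out, const ReadOptions *options = nullptr) {
        if (options && options->seekToUs) {
            seekTo(*options->seekToUs);
        }
        if (mNext >= mPackets.size()) {
            return false;
        }
        *out = mPackets[mNext++];
        return true;
    }

private:
    // Assumed rule: resume at the first packet with timeUs >= the target.
    void seekTo(int64_t timeUs) {
        mNext = 0;
        while (mNext < mPackets.size() && mPackets[mNext].timeUs < timeUs) {
            ++mNext;
        }
    }

    std::vector<Packet> mPackets;
    size_t mNext = 0;
};
```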

For the decoder, the reading happens in OMXCodec:

```cpp
status_t OMXCodec::read(
        MediaBuffer **buffer, const ReadOptions *options) {
    // ...
    // (seeking, seekTimeUs and seekMode are set from options->getSeekTo())

    if (seeking) {
        CODEC_LOGV("seeking to %lld us (%.2f secs)", seekTimeUs, seekTimeUs / 1E6);

        mSignalledEOS = false;

        CHECK(seekTimeUs >= 0);
        mSeekTimeUs = seekTimeUs;
        mSeekMode = seekMode;

        mFilledBuffers.clear();

        CHECK_EQ((int)mState, (int)EXECUTING);

        bool emulateInputFlushCompletion = !flushPortAsync(kPortIndexInput);
        bool emulateOutputFlushCompletion = !flushPortAsync(kPortIndexOutput);

        if (emulateInputFlushCompletion) {
            onCmdComplete(OMX_CommandFlush, kPortIndexInput);
        }

        if (emulateOutputFlushCompletion) {
            onCmdComplete(OMX_CommandFlush, kPortIndexOutput);
        }

        while (mSeekTimeUs >= 0) {
            if ((err = waitForBufferFilled_l()) != OK) {
                return err;
            }
        }
    }

    // ...
}
```

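As you can see, when a seek is requested, all data already read is discarded first: mFilledBuffers is cleared and both ports are flushed. The codec then waits for the extractor to supply new data. Once a buffer has been filled, the seek is complete.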
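The decoder side can be modelled in a single thread. In this hypothetical sketch (FakeDecoder is not an AOSP class), a queue of decoded frames plays the role of mFilledBuffers. A seek clears the queue and skips upstream data before the target. read() then returns only once a frame from the new position is available, much like the while (mSeekTimeUs >= 0) loop. In the real OMXCodec, the repositioning is done by the extractor when the seek is forwarded to it, and the waiting involves asynchronous OMX port flushes.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <optional>
#include <utility>

struct Frame {
    int64_t timeUs;
};

class FakeDecoder {
public:
    // 'upstream' holds the timestamps the source would deliver, in order.
    explicit FakeDecoder(std::deque<int64_t> upstream) : mUpstream(std::move(upstream)) {}

    std::optional<Frame> read(std::optional<int64_t> seekTimeUs = std::nullopt) {
        if (seekTimeUs) {
            mFilled.clear();  // discard stale output (cf. mFilledBuffers.clear())
            while (!mUpstream.empty() && mUpstream.front() < *seekTimeUs) {
                mUpstream.pop_front();  // source repositions past earlier data
            }
        }
        if (mFilled.empty()) {
            decodeOne();  // "wait" until the next buffer is filled
        }
        if (mFilled.empty()) {
            return std::nullopt;  // end of stream
        }
        Frame f = mFilled.front();
        mFilled.pop_front();
        return f;
    }

    // Pretend the codec decoded ahead, so frames pile up in the queue.
    void decodeAhead(int n) {
        for (int i = 0; i < n; ++i) decodeOne();
    }
    size_t queued() const { return mFilled.size(); }

private:
    void decodeOne() {
        if (mUpstream.empty()) return;
        mFilled.push_back(Frame{mUpstream.front()});
        mUpstream.pop_front();
    }

    std::deque<int64_t> mUpstream;
    std::deque<Frame> mFilled;  // stand-in for mFilledBuffers
};
```

Without the clear() on seek, read(60000) would first return the stale frames at 0 and 20000 µs that were decoded before the seek. Flushing exists to prevent exactly that.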

[End]