DataStage tip: using job parameters without losing your mind

A job parameter in the ETL environment is much like a parameter in other products: it lets you change the way your jobs behave at run time without editing them. This ability to keep changing values can either ease your support burden or drive your support staff mad.

In this blog post we will look at project-specific environment variables and how they become happy job parameters. This is also my first blog post with diagrams to show the steps.

This is a happy parameter method because an administrator or support person can modify the value of a parameter, such as a database password, and every job in production will run with the new value. Yippee!

If you have trouble viewing the pictures below, you can read the alternative text (which isn't nearly as good) or go straight to the photo album.

Objectives

A job parameter is a way to change a property within a job without having to alter and recompile the job. The trick is to manage job parameters so their values are easy to maintain and there are few manual maintenance steps that could leave a job running with incorrect values.

Creating Project Specific Environment Variables

Finding the place to set these variables is a bit of a Minotaur's maze. We can only hope that DataStage Hawk gives admin staff a shortcut to this screen. Here is the sequence of buttons:

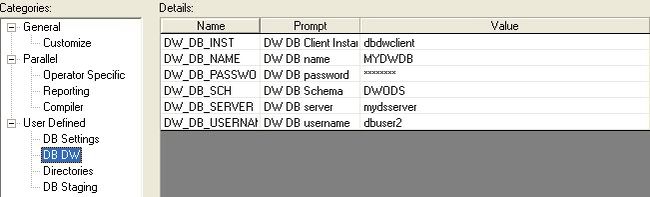

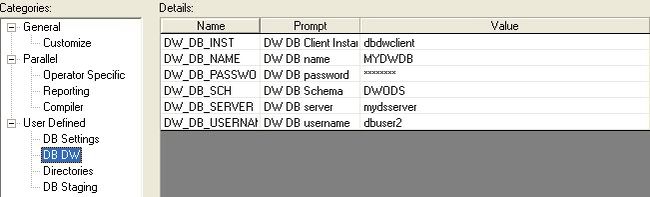

Type in all the job parameters that will be shared between jobs. Note that the passwords are set to the Encrypted type, so they show up only as asterisks here and in any job logs:

* Database login values are job parameters because they change as you move from development through to production, and database passwords should be changed on a regular schedule (though some sites never change them!).

* File directories change when you move from development to production, and they can also change when new disk partitions are added.

* DB2 settings such as transaction size can be changed by support to test different performance settings or to match modifications to the database setup.
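To make the Encrypted type concrete, here is a small Python sketch (not DataStage code) of a set of shared project parameters, one or more per category above, where anything marked encrypted is masked before it reaches a log. Apart from DW_DB_INST, the variable names and values are made up for illustration, and the fixed eight-asterisk mask is an assumption of this sketch rather than a claim about exactly what DataStage prints.

```python
from dataclasses import dataclass

# Fixed-width mask (an assumption of this sketch): hides the value and its length.
MASK = "********"


@dataclass(frozen=True)
class ProjectParam:
    """A hypothetical model of one project-specific environment variable."""
    name: str
    value: str
    encrypted: bool = False  # True for the "Encrypted" type, e.g. passwords

    def shown(self) -> str:
        """The value as it should appear on screen or in a job log."""
        return MASK if self.encrypted else self.value


def log_lines(params: list[ProjectParam]) -> list[str]:
    """Render parameters for a job log without exposing encrypted values."""
    return [f"{p.name} = {p.shown()}" for p in params]


# Hypothetical shared parameters covering the categories in the list above.
SHARED = [
    ProjectParam("DW_DB_INST", "DWDEV"),                       # database login
    ProjectParam("DW_DB_USER", "etl_user"),                    # database login
    ProjectParam("DW_DB_PASSWORD", "s3cret", encrypted=True),  # database login
    ProjectParam("DW_SOURCE_DIR", "/data/dev/source"),         # file directory
    ProjectParam("DW_DB2_TXN_SIZE", "5000"),                   # DB2 setting
]

if __name__ == "__main__":
    for line in log_lines(SHARED):
        print(line)
```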

The problem with these settings is that they all sit in one folder, which makes them a bit cumbersome to administer and to find from within a job. Folder names can be added by hacking the DSParams file found in the project home directory.

This looks a lot neater. Note that now only the value field can be modified: moving these variables into folders protects them from being accidentally deleted by administrators. This is a risky modification to attempt directly in production because you may corrupt your DSParams file, so always take a backup first. The modification is done by adding a folder name to the variable's entry in the file:

[EnvVarDefns]

DW_DB_INST/User Defined/-1/String//0/Project/DW DB Client Instance

Changed to:

[EnvVarDefns]

DW_DB_INST/User Defined/DB DW/-1/String//0/Project/DW DB Client Instance

The hack is the folder name "DB DW", which has been inserted into the second field on that line to create a subfolder called "DB DW" under the "User Defined" folder. I do not think IBM support will be too thrilled with this hack; it would really help if the next version had folder support built in.
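If you have several variables to move, a small script with a built-in backup is safer than hand-editing. The Python sketch below makes the same change as the before and after lines above. It assumes the entries are separated by "/" exactly as printed in this post (check the layout of your own DSParams file before trusting that), and the function names are hypothetical, not part of any IBM tool.

```python
import shutil
from pathlib import Path

SEP = "/"  # field separator as the entries are printed above; check your own file


def add_folder(line: str, var_name: str, folder: str) -> str:
    """Insert `folder` under 'User Defined' in one EnvVarDefns entry.

    Entries for other variables, and entries that already have the
    folder, are returned unchanged.
    """
    old = f"{var_name}{SEP}User Defined{SEP}"
    new = f"{var_name}{SEP}User Defined{SEP}{folder}{SEP}"
    if line.startswith(new) or not line.startswith(old):
        return line
    return new + line[len(old):]


def patch_text(text: str, var_name: str, folder: str) -> str:
    """Apply add_folder only to lines inside the [EnvVarDefns] section."""
    out = []
    in_defns = False
    for line in text.splitlines(keepends=True):  # keepends preserves CRLF or LF
        stripped = line.strip()
        if stripped.startswith("[") and stripped.endswith("]"):
            in_defns = stripped == "[EnvVarDefns]"
        elif in_defns:
            line = add_folder(line, var_name, folder)
        out.append(line)
    return "".join(out)


def patch_dsparams(path: Path, var_name: str, folder: str) -> None:
    """Back up DSParams, then rewrite it with the folder added."""
    shutil.copy2(path, path.with_name(path.name + ".bak"))  # always take a backup
    # latin-1 round-trips every byte, so nothing else in the file is altered
    text = path.read_bytes().decode("latin-1")
    path.write_bytes(patch_text(text, var_name, folder).encode("latin-1"))
```

For example, `patch_dsparams(Path("DSParams"), "DW_DB_INST", "DB DW")` run from the project home directory. Try it on a copy in development first; the `.bak` file is there so you can restore the original if anything goes wrong.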

Adding to a job

Now that we have our variables for the project, we can add them to our jobs. They are added from the standard job parameters form, using the verbose but slightly misleading "Add Environment Variable..." button, which doesn't create an environment variable but instead brings an existing one into your job as a job parameter.

The job parameters need to be set to the magic word "$PROJDEF" so that they are set dynamically each time the job runs. Think of it as the DataStage equivalent of abracadabra. When the job runs and finds a job parameter value of $PROJDEF, it looks up the value in the DSParams file and uses it. Even the password is set to $PROJDEF; paste it into the encrypted password entry field rather than typing it, to avoid mistakes.
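The $PROJDEF lookup can be modelled in a few lines. This Python sketch illustrates the behaviour described above and is not DataStage's actual implementation: a parameter whose value is $PROJDEF takes the project-level value, and anything else is used as entered. Environment variable parameters carry a leading $ in the job, so the sketch strips it before the lookup; raising KeyError when the project has no value is this sketch's own choice, and the variable names are hypothetical.

```python
PROJDEF = "$PROJDEF"  # the magic word


def resolve(job_params: dict[str, str], project_values: dict[str, str]) -> dict[str, str]:
    """Return the values a job actually runs with.

    job_params maps parameter names as they appear in the job (environment
    variable parameters start with "$") to the values entered on the job.
    project_values maps variable names to the values the administrator has
    entered for the project (the values kept in DSParams).
    """
    resolved = {}
    for name, value in job_params.items():
        if value == PROJDEF:
            var = name.removeprefix("$")  # "$DW_DB_USER" -> "DW_DB_USER"
            if var not in project_values:
                raise KeyError(f"{name} is $PROJDEF but the project has no value for {var}")
            resolved[name] = project_values[var]
        else:
            resolved[name] = value  # entered directly on the job, used as-is
    return resolved


if __name__ == "__main__":
    project = {"DW_DB_USER": "etl_user", "DW_DB_PASSWORD": "s3cret"}
    job = {"$DW_DB_USER": PROJDEF, "$DW_DB_PASSWORD": PROJDEF, "BATCH_SIZE": "100"}
    print(resolve(job, project)["$DW_DB_USER"])  # etl_user
```

Because every job resolves against the same project values, changing DW_DB_PASSWORD once in the project is enough for every job set to $PROJDEF to pick up the new password on its next run, which is what keeps administration low.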

These job parameters can now be used in a DB2 database stage.
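In a stage property, a job parameter is referenced by wrapping its name in # signs, so an environment variable parameter looks like #$DW_DB_USER#. The Python sketch below mimics that substitution on a few hypothetical DB2 stage properties; the property names and the regular expression are assumptions made for illustration, and the parameter values are assumed to have already been resolved from $PROJDEF.

```python
import re

# A parameter reference: the parameter name wrapped in # signs, e.g. #$DW_DB_USER#
PARAM_REF = re.compile(r"#(\$?[A-Za-z_][A-Za-z0-9_]*)#")

# Hypothetical DB2 stage properties; the property names are illustrative only.
STAGE_PROPS = {
    "Instance": "#$DW_DB_INST#",
    "User": "#$DW_DB_USER#",
    "Transaction size": "#$DW_DB2_TXN_SIZE#",
}


def expand(prop: str, params: dict[str, str]) -> str:
    """Replace every #name# reference in a property with its run-time value.

    An unknown parameter name raises KeyError rather than passing through.
    """
    return PARAM_REF.sub(lambda m: params[m.group(1)], prop)


if __name__ == "__main__":
    resolved = {"$DW_DB_INST": "DWDEV", "$DW_DB_USER": "etl_user", "$DW_DB2_TXN_SIZE": "5000"}
    for prop, template in STAGE_PROPS.items():
        print(f"{prop}: {expand(template, resolved)}")
```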

Low Administration

The job now picks up its parameter values at run time from whatever the administrator has entered for each parameter, so the parameter values on the individual jobs never need to be changed.

The View Data Drawback

In the current version you cannot easily use the "View Data" option on database or sequential file stages, because it fails on the magic word $PROJDEF: it treats it as an ordinary word and does not do the magic substitution. This becomes a pain, as you find yourself manually swapping the magic word for real values every time you want to View Data. Since I use View Data all the time in development (it is a fast way to make sure a stage is set up correctly), I use normal job parameters at the parallel/server job level and environment variable job parameters at the sequence job level.

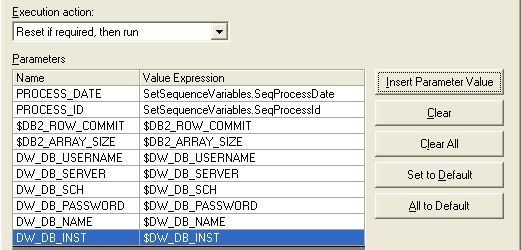

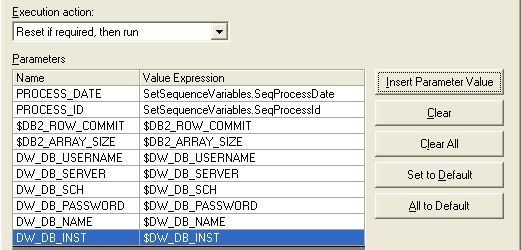

The sequence job maps project-specific environment variables into normal job parameters.

In this example, the database login values that View Data needs have been turned into normal job parameters within the parallel job. The two DB2 settings are still $ parameters within the job because they do not affect View Data. PROCESS_ID and PROCESS_DATE are both fully dynamic parameters, set by some BASIC code in the sequence job's User Variables stage to a counter ID and the current processing date respectively.
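The sequence-level mapping can be sketched the same way. This is Python rather than DataStage BASIC, and every parameter name except PROCESS_ID and PROCESS_DATE is hypothetical (only one of the two DB2 settings is shown). It assumes the sequence job has already resolved its own $PROJDEF parameters, models the counter as a Python iterator, and formats the processing date as ISO 8601, which is an assumption rather than anything the original setup specifies.

```python
from collections.abc import Iterator
from datetime import date
from itertools import count

# Hypothetical names: normal parameter in the parallel job -> project variable.
LOGIN_MAP = {
    "DB_INST": "DW_DB_INST",
    "DB_USER": "DW_DB_USER",
    "DB_PASSWORD": "DW_DB_PASSWORD",
}


def child_job_params(project_values: dict[str, str], run_ids: Iterator[int],
                     run_date: date) -> dict[str, str]:
    """Parameters the sequence job passes to the parallel job on one run."""
    # Login values become normal parameters, so View Data works in the parallel job.
    params = {child: project_values[var] for child, var in LOGIN_MAP.items()}
    # DB2 settings do not affect View Data, so they stay $ parameters set to $PROJDEF.
    params["$DW_DB2_TXN_SIZE"] = "$PROJDEF"
    # Fully dynamic values, standing in for the BASIC code in the User Variables stage.
    params["PROCESS_ID"] = str(next(run_ids))
    params["PROCESS_DATE"] = run_date.isoformat()
    return params


if __name__ == "__main__":
    ids = count(1)  # hypothetical run counter
    project = {"DW_DB_INST": "DWDEV", "DW_DB_USER": "etl_user", "DW_DB_PASSWORD": "s3cret"}
    print(child_job_params(project, ids, date.today())["PROCESS_ID"])
```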

The restriction of this design is that users should run jobs only through a sequence job; if they run a job directly, its normal job parameters may hold incorrect default values.

Disclaimer: The opinions expressed herein are my own personal opinions and do not represent my employer's view in any way.
