Moses运行过程记录---Moses结果和评测(四)

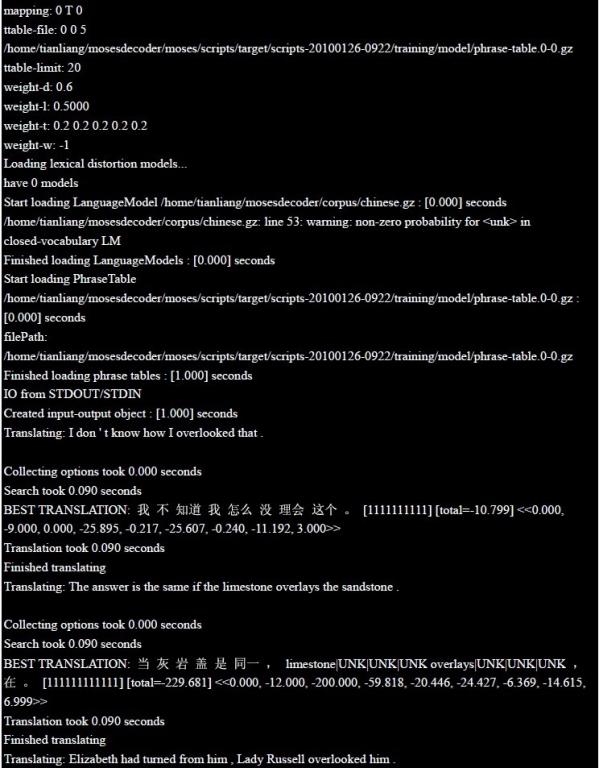

接下来的是Moses运行的结果和评测部分,具体的运行过程如下:

1. Copy the executable program moses to ~/scripts-20100126-0922/training/model.

2. Make a input file containing the sentences that wanting to be translated ~model/input .

I don ' t know how I overlooked that .

The answer is the same if the limestone overlays the sandstone .

Elizabeth had turned from him , Lady Russell overlooked him .

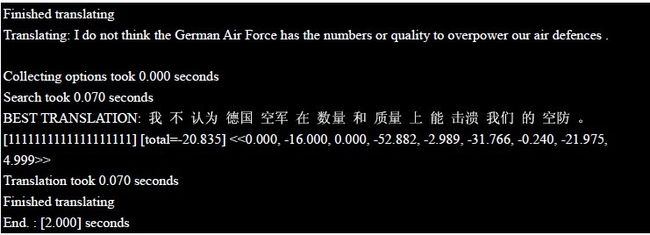

I do not think the German Air Force has the numbers or quality to overpower our air defences .

3. Translate the sentences using command like below:

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training/model$ ./moses -f

moses.ini <input> output

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training/model$ cat output

PART VI – Evaluation

There are two scripts perl that we can use to evaluate the translation quality---multi-bleu.perl and mteval-v11b.pl. The simpler one is the multi-bleu.perl.

multi-bleu.perl reference < machine translation-output

Reference file and system output have to be sentence-aligned (line X in the reference file corresponds to line X in the system output). If multiple reference

translation exists, these have to be stored in separate files and named reference0, reference1, reference2, etc.

All the texts need to be tokenized.

I choose 30 English sentences to be translated and named the output file “output”, and then I get the reference

translation of Chinese named “reference” .Now I can get the result:

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training/model$ ./multi-bleu.perl reference < output

The other popular script to score translations with BLEU is the NIST mteval script. It requires that text is

wrapped into a SGML format. This format is used for instance by the NIST evaluation and the WMT

Shared Task evaluations. Here I won’t talk too much about it .The following below is the grammar:

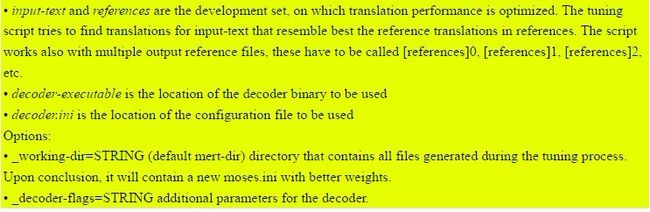

Parameters:

The command I that I will use is like below, here the “clean.lowercased.eng” is the English lowercased

sentences and “clean.chn” is the Chinese corpus.

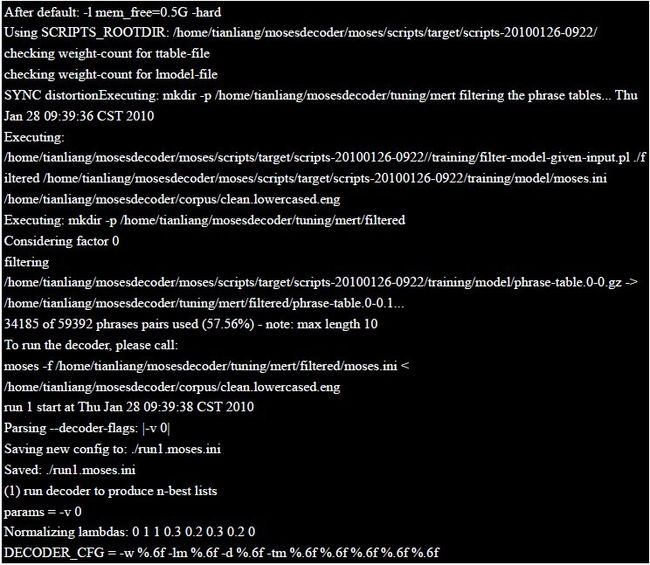

tianliang@ubuntu:~/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training$ ./mert-moses.pl

/home/tianliang/mosesdecoder/corpus/clean.lowercased.eng /home/tianliang/mosesdecoder/corpus/clean.chn

/home/tianliang/mosesdecoder/moses/moses-cmd/src/moses

/home/tianliang/mosesdecoder/moses/scripts/target/scripts-20100126-0922/training/model/moses.ini

--working-dir /home/tianliang/mosesdecoder/tuning/mert --root-dir

/home/tianliang/mosesdecoder/moses/scripts/target/scripts-20100126-0922/ --decoder-flags "-v 0"

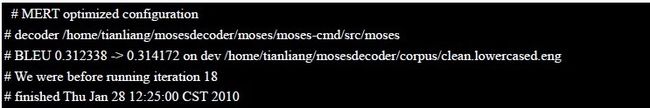

The above is just the first iteration; the others are nearly the same. After a long running process

(18 iterations),we will get a configure file called “mose.ini”, we can get the BLEU score from the

first line as below:

I am amusing that Moses have so good translation. What you should know is that I didn't use a lot of

additional function, for example, the generation table, distortion parameters and so on. Although Moses

still can't translate very well on those that it hasn't met, just consider my corpus is just 1500 sentences.

You should understand it. People can not understand a word if he or she has not seen before! I am sure

Moses will have a better translation with large corpus. Of course, if I consider all the translation output,

the BUEU score will be lower.30 sentences are too small! But it doesn’t matter, here I just want to give

the operating steps!

I am preparing a large corpus about 100,000 parallel sentences with the help of my classmates and

some software. I will have a try when finishing it. During the preparing, I begin to feel that we should

let the machine to “learn” as people do. Consider our learning process, we first learn one word or one

sentence and then we can use it, maybe we cannot express it very well at first, but better after we can

learn grammar or rules. I think machine should have this process. What I want to do is to collect the

data from different fields, from the Peoples ' names to special terms, from short sentence to long sentences.

I do by step by step and let machine to learn step by step too.