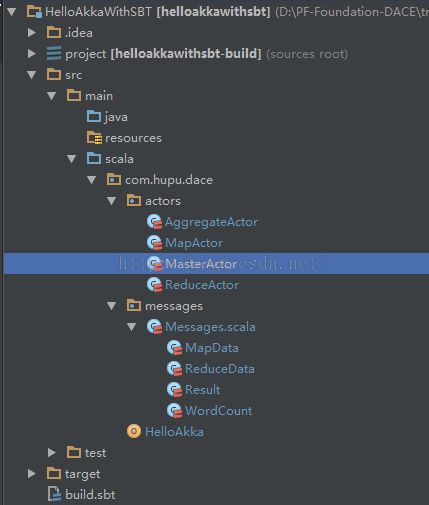

Scala Akka word count program

Message.scala:

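```scala
package com.hupu.dace.messages

// Messages exchanged between the actors.
sealed case class Result()                                  // ask for the final counts
sealed case class WordCount(word: String, count: Int)
sealed case class MapData(data: List[WordCount])            // output of MapActor
sealed case class ReduceData(data: List[WordCount])         // output of ReduceActor
```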

MasterActor:

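```scala
package com.hupu.dace.actors

import akka.actor.{Actor, Props}
import com.hupu.dace.messages.Result

/**
 * Created by xiaojun on 2015/10/12.
 */
class MasterActor extends Actor {

  // The pipeline is built back to front so each stage gets a reference to the next:
  // map -> reduce (local combine) -> agg (global aggregation).
  val aggregateActor = context.actorOf(Props[AggregateActor], name = "agg")
  val reduceActor = context.actorOf(Props(classOf[ReduceActor], aggregateActor), name = "reduce")
  val mapActor = context.actorOf(Props(classOf[MapActor], reduceActor), name = "map")

  override def receive: Receive = {
    // A line of input text: hand it to the map stage.
    case msg: String =>
      mapActor ! msg
    // Request for the final result: forward it to the aggregator.
    case Result() =>
      aggregateActor ! Result()
    // Report anything else as unhandled instead of silently dropping it.
    case msg => unhandled(msg)
  }
}
```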

MapActor:

```scala
package com.hupu.dace.actors

import java.util.StringTokenizer

import akka.actor.{Actor, ActorRef}
import com.hupu.dace.messages.{MapData, WordCount}

import scala.collection.mutable.ArrayBuffer

/**
 * Created by xiaojun on 2015/10/12.
 */
class MapActor(reduceActor: ActorRef) extends Actor {

  override def receive: Receive = {
    case msg: String =>
      val mapData = getMapData(msg)
      reduceActor ! mapData
    case msg => unhandled(msg)
  }

  // Split the line on whitespace and emit WordCount(word, 1) for every lower-cased token.
  private def getMapData(msg: String): MapData = {
    val list = ArrayBuffer[WordCount]()
    val tokenizer = new StringTokenizer(msg)
    while (tokenizer.hasMoreTokens) {
      val word = tokenizer.nextToken.toLowerCase
      list += WordCount(word, 1)
    }
    MapData(list.toList)
  }
}
```

ReduceActor:

```scala
package com.hupu.dace.actors

import akka.actor.{Actor, ActorRef}
import com.hupu.dace.messages.{MapData, ReduceData, WordCount}

/**
 * Created by xiaojun on 2015/10/12.
 */
class ReduceActor(aggregateActor: ActorRef) extends Actor {

  override def receive: Receive = {
    case MapData(data) =>
      // Local aggregation (combiner): sum the counts of each word within this one message.
      val combined = data.groupBy(_.word).map {
        case (word, wcs) => WordCount(word, wcs.foldLeft(0)((count, wc) => count + wc.count))
      }.toList
      aggregateActor ! ReduceData(combined)
    case msg => unhandled(msg)
  }
}
```
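The point of combining inside ReduceActor is that repeated words within one line collapse into a single record before anything is sent to the aggregator. Below is a minimal pure-Scala sketch of just that step, with no Akka involved. The object name `CombinerSketch` is hypothetical, and `WordCount` is redeclared only so the block compiles on its own.

```scala
// Mirrors WordCount from Message.scala so this sketch is self-contained.
case class WordCount(word: String, count: Int)

object CombinerSketch {
  // Same logic as ReduceActor's MapData branch: group records by word, then sum their counts.
  def combine(data: List[WordCount]): List[WordCount] =
    data.groupBy(_.word).map { case (word, wcs) =>
      WordCount(word, wcs.map(_.count).sum)
    }.toList
}
```

For example, the map output for "hello hello world" has three records, and `combine` shrinks it to two, `WordCount("hello", 2)` and `WordCount("world", 1)`, in no guaranteed order because `groupBy` returns a `Map`.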

AggregateActor:

```scala
package com.hupu.dace.actors

import akka.actor.Actor
import com.hupu.dace.messages.{Result, ReduceData}

import scala.collection.mutable

/**
 * Created by xiaojun on 2015/10/12.
 */
class AggregateActor extends Actor {

  // Global word -> count table. Only this actor touches it, so a mutable map is safe here.
  val finalData = mutable.HashMap[String, Int]()

  override def receive: Receive = {
    // Merge one partial result into the global table.
    case ReduceData(data) =>
      data.foreach { wc =>
        finalData.put(wc.word, finalData.getOrElse(wc.word, 0) + wc.count)
      }
    // Print the totals collected so far.
    case Result() =>
      println(finalData)
    case msg => unhandled(msg)
  }
}
```

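HelloAkka:

```scala
package com.hupu.dace

import akka.actor.{ActorSystem, Props}
import com.hupu.dace.actors.MasterActor
import com.hupu.dace.messages.Result

/**
 * Created by xiaojun on 2015/10/10.
 */
object HelloAkka {

  def main(args: Array[String]): Unit = {
    val system = ActorSystem("HelloAkka")
    val master = system.actorOf(Props[MasterActor], "master")

    master ! "hello world hadoop"
    master ! "hello hadoop"
    master ! " hello spark world"

    // Result() travels master -> agg directly, while the counts travel
    // master -> map -> reduce -> agg, so nothing guarantees Result() arrives last.
    // The sleep is a crude way to let the pipeline drain first.
    Thread.sleep(1000)
    master ! Result()

    Thread.sleep(1000)
    // terminate() replaces shutdown(), deprecated since Akka 2.4; on Akka 2.3 call system.shutdown().
    system.terminate()
  }
}
```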

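ReduceActor performs local aggregation, much like the combiner in Hadoop's WordCount, and then sends all of its partial results to AggregateActor, which performs the global aggregation to produce the final result.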
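To make the split between local combining and global aggregation concrete without starting an ActorSystem, here is a pure-Scala sketch of the same data flow over the three sample lines that HelloAkka sends. Each line is tokenized (the MapActor role), combined locally (the ReduceActor role), and the partial results are then summed into one table (the AggregateActor role). The object and method names are hypothetical, and plain `(String, Int)` pairs stand in for the `WordCount` messages.

```scala
import java.util.StringTokenizer

object PipelineSketch {
  // Map step (MapActor): emit (word, 1) for every whitespace-separated, lower-cased token.
  def mapLine(line: String): List[(String, Int)] = {
    val tokenizer = new StringTokenizer(line)
    val buf = List.newBuilder[(String, Int)]
    while (tokenizer.hasMoreTokens()) {
      buf += ((tokenizer.nextToken().toLowerCase, 1))
    }
    buf.result()
  }

  // Combine step (ReduceActor): sum counts per word within one line's output.
  def combine(pairs: List[(String, Int)]): Map[String, Int] =
    pairs.groupBy(_._1).map { case (w, ps) => w -> ps.map(_._2).sum }

  // Global step (AggregateActor): add every partial map into a single result.
  def aggregate(partials: Seq[Map[String, Int]]): Map[String, Int] =
    partials.foldLeft(Map.empty[String, Int]) { (acc, part) =>
      part.foldLeft(acc) { case (m, (w, c)) => m.updated(w, m.getOrElse(w, 0) + c) }
    }

  // Whole pipeline: one partial result per input line, then one global merge.
  def run(lines: Seq[String]): Map[String, Int] =
    aggregate(lines.map(line => combine(mapLine(line))))
}
```

Run on `Seq("hello world hadoop", "hello hadoop", " hello spark world")`, this yields hello 3, world 2, hadoop 2 and spark 1, which are the counts AggregateActor should print once every ReduceData message has arrived (the HashMap print order may differ).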