ffmpeg (1): Environment Setup + tutorial01.c

Environment setup

1) Add ffmpeg-20121125-git-26c531c-win32-shared\bin to the PATH environment variable, so that the program can find the ffmpeg DLLs at run time.

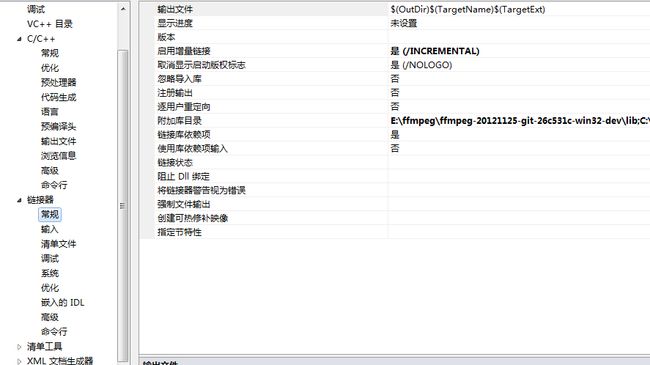

2) Add SDL-1.2.15\include and ffmpeg-20121125-git-26c531c-win32-dev\include to the project's include directories, as shown in the figure:

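3) Add the following .lib files under Additional Dependencies (Linker > Input), and make sure the folders containing them are listed under Additional Library Directories: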

SDL.lib

SDLmain.lib

avcodec.lib

avformat.lib

avdevice.lib

avfilter.lib

avutil.lib

swscale.lib

swresample.lib

postproc.lib
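If you prefer to keep the linker inputs in the source file instead of the project settings, MSVC also accepts `#pragma comment(lib, "...")`, which asks the linker to add the named library. This is a sketch that assumes the Visual C++ toolchain used above and that the library directories are already configured; the `_MSC_VER` guard hides the pragmas from other compilers.

```c
/* MSVC only: each line is equivalent to listing the .lib file
   under Linker > Input > Additional Dependencies. */
#ifdef _MSC_VER
#pragma comment(lib, "SDL.lib")
#pragma comment(lib, "SDLmain.lib")
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "avdevice.lib")
#pragma comment(lib, "avfilter.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "swscale.lib")
#pragma comment(lib, "swresample.lib")
#pragma comment(lib, "postproc.lib")
#endif
```

tutorial01.c itself only needs avformat, avcodec, avutil and swscale; SDL is used by the later tutorials.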

4) Add tutorial01.c to the project:

```c
// tutorial01.c
// Code based on a tutorial by Martin Bohme
// Tested on Gentoo, CVS version 5/01/07 compiled with GCC 4.1.1
// A small sample program that shows how to use libavformat and libavcodec to
// read video from a file.
//
// Use
//
// gcc -o tutorial01 tutorial01.c -lavformat -lavcodec -lswscale -lavutil -lz
//
// to build (assuming libavformat, libavcodec and libswscale are correctly
// installed on your system).
//
// Run using
//
// tutorial01 myvideofile.mpg
//
// to write the first five frames from "myvideofile.mpg" to disk in PPM
// format.

// The ffmpeg headers are C; wrap them so the file also builds as C++.
#ifdef __cplusplus
extern "C" {
#endif
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#ifdef __cplusplus
}
#endif

#include <stdio.h>
#include <stdlib.h>

void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame)
{
    FILE *pFile;
    char szFilename[32];
    int y;

    // Open file
    sprintf(szFilename, "frame%d.ppm", iFrame);
    pFile = fopen(szFilename, "wb");
    if (pFile == NULL)
        return;

    // Write header
    fprintf(pFile, "P6\n%d %d\n255\n", width, height);

    // Write pixel data row by row: linesize[0] may be larger than width*3
    for (y = 0; y < height; y++)
        fwrite(pFrame->data[0] + y * pFrame->linesize[0], 1, width * 3, pFile);

    // Close file
    fclose(pFile);
}

int main(int argc, char *argv[])
{
    // avformat_open_input() requires pFormatCtx to be NULL or a context
    // allocated with avformat_alloc_context(); an uninitialized pointer
    // makes that call fail or crash.
    AVFormatContext *pFormatCtx = avformat_alloc_context();
    unsigned int i;
    int videoStream;
    AVCodecContext *pCodecCtx;
    AVCodec *pCodec;
    AVFrame *pFrame;
    AVFrame *pFrameRGB;
    AVPacket packet;
    int frameFinished;
    int numBytes;
    int frameCount = 0;
    uint8_t *buffer;
    struct SwsContext *img_convert_ctx;
    // Use the file named on the command line, else a fixed default path
    const char *filename = (argc > 1) ? argv[1] : "c:\\1.mp4";

    // Register all formats and codecs
    av_register_all();

    // Open video file
    if (avformat_open_input(&pFormatCtx, filename, NULL, NULL) != 0)
        return -1; // Couldn't open file

    // Retrieve stream information (replaces the deprecated av_find_stream_info)
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1; // Couldn't find stream information

    // Dump information about file onto standard error
    av_dump_format(pFormatCtx, 0, filename, 0);

    // Find the first video stream
    videoStream = -1;
    for (i = 0; i < pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = (int)i;
            break;
        }
    if (videoStream == -1)
        return -1; // Didn't find a video stream

    // Get a pointer to the codec context for the video stream
    pCodecCtx = pFormatCtx->streams[videoStream]->codec;

    // Find the decoder for the video stream
    pCodec = avcodec_find_decoder(pCodecCtx->codec_id);
    if (pCodec == NULL) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1; // Codec not found
    }
    // Open codec (replaces the deprecated avcodec_open)
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
        return -1; // Could not open codec

    // Allocate the decoded (native format) frame and the RGB frame
    pFrame = avcodec_alloc_frame();
    pFrameRGB = avcodec_alloc_frame();
    if (pFrame == NULL || pFrameRGB == NULL)
        return -1;

    // Determine required buffer size and allocate buffer
    numBytes = avpicture_get_size(PIX_FMT_RGB24, pCodecCtx->width, pCodecCtx->height);
    buffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));
    if (buffer == NULL)
        return -1;

    // Assign appropriate parts of buffer to image planes in pFrameRGB
    // Note that pFrameRGB is an AVFrame, but AVFrame is a superset
    // of AVPicture
    avpicture_fill((AVPicture *)pFrameRGB, buffer, PIX_FMT_RGB24,
                   pCodecCtx->width, pCodecCtx->height);

    // Create the scaler once, before the read loop. The destination format
    // must be PIX_FMT_RGB24 to match the buffer above and the PPM writer.
    img_convert_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height, pCodecCtx->pix_fmt,
                                     pCodecCtx->width, pCodecCtx->height, PIX_FMT_RGB24,
                                     SWS_BILINEAR, NULL, NULL, NULL);
    if (img_convert_ctx == NULL) {
        fprintf(stderr, "Cannot initialize the conversion context\n");
        return -1;
    }

    // Read frames and save first five frames to disk
    while (av_read_frame(pFormatCtx, &packet) >= 0) {
        // Is this a packet from the video stream?
        if (packet.stream_index == videoStream) {
            // Decode video frame
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

            // Did we get a video frame?
            if (frameFinished) {
                // Convert the image from its native format to RGB24
                sws_scale(img_convert_ctx, (const uint8_t * const *)pFrame->data,
                          pFrame->linesize, 0, pCodecCtx->height,
                          pFrameRGB->data, pFrameRGB->linesize);

                // Save the frame to disk
                if (++frameCount <= 5)
                    SaveFrame(pFrameRGB, pCodecCtx->width, pCodecCtx->height, frameCount);
            }
        }
        // Free every packet that av_read_frame allocated
        av_free_packet(&packet);
    }

    // Free the SwsContext
    sws_freeContext(img_convert_ctx);
    // Free the RGB image
    av_free(buffer);
    av_free(pFrameRGB);
    // Free the YUV frame
    av_free(pFrame);
    // Close the codec
    avcodec_close(pCodecCtx);
    // Close the video file
    avformat_close_input(&pFormatCtx);

    return 0;
}
```
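SaveFrame writes one row at a time because a frame's `linesize[0]` (the byte distance between the starts of consecutive rows) can be larger than `width * 3` when rows are padded for alignment. The standalone sketch below shows the same technique without ffmpeg, using a hypothetical `write_ppm_rgb24` helper that takes a raw buffer instead of an `AVFrame`. It assumes packed RGB24 input (3 bytes per pixel), which is also why the scaler's destination format has to be an RGB24 format and not a 2-byte format such as RGB565.

```c
#include <stdio.h>
#include <stdint.h>

/* Hypothetical helper: writes a packed RGB24 image as a binary PPM (P6).
   Rows start at data + y * linesize; only width * 3 bytes of each row
   are pixels, so any padding after them is skipped.
   Returns 0 on success, -1 on error. */
int write_ppm_rgb24(const char *path, const uint8_t *data, int linesize,
                    int width, int height)
{
    size_t row_bytes = (size_t)width * 3;
    int y;
    FILE *f = fopen(path, "wb");

    if (f == NULL)
        return -1;

    /* Header: magic, dimensions, maximum sample value */
    fprintf(f, "P6\n%d %d\n255\n", width, height);

    for (y = 0; y < height; y++) {
        if (fwrite(data + (size_t)y * linesize, 1, row_bytes, f) != row_bytes) {
            fclose(f);
            return -1;
        }
    }
    return fclose(f) == 0 ? 0 : -1;
}
```

For example, a 2x2 image stored with `linesize = 8` carries 2 padding bytes per row; the helper writes the 11-byte header `P6\n2 2\n255\n` followed by exactly 12 pixel bytes.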

Summary:

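1) The code a friend gave me had many errors when I debugged it, so it is better to download the code from the official site yourself. Also, the ffmpeg build used here is a recent one, and ffmpeg has changed many of its interfaces: for example, `av_find_stream_info()` has been superseded by `avformat_find_stream_info()`, and `avcodec_open()` by `avcodec_open2()`.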

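2) tutorial01 demonstrates the basic operations for working with video: opening a file, finding the video stream, opening its decoder, reading and decoding packets, and converting frames with libswscale. Any program that processes video needs these steps.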
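One calling convention among these basic functions is the allocate-if-NULL output parameter of `avformat_open_input()`. Its documentation says `*ps` may point to NULL, in which case the function allocates the context itself, or to a context from `avformat_alloc_context()`; a user-supplied context is freed on failure. An uninitialized `AVFormatContext *` therefore hands a garbage pointer to the call. The sketch below imitates that contract with a hypothetical `Ctx` type and `ctx_open()` function (not part of ffmpeg), where a NULL `url` stands in for a file that cannot be opened.

```c
#include <stdlib.h>

/* Hypothetical stand-in for AVFormatContext (illustration only). */
typedef struct Ctx {
    const char *url;
} Ctx;

/* Hypothetical open function mimicking the avformat_open_input() contract:
   *ps is either NULL (this function allocates) or a caller-allocated
   context. Returns 0 on success, -1 on failure. */
int ctx_open(Ctx **ps, const char *url)
{
    Ctx *s = *ps;   /* reads *ps: undefined behavior if the caller never initialized it */

    if (s == NULL) {
        s = (Ctx *)calloc(1, sizeof *s);
        if (s == NULL)
            return -1;
    }
    if (url == NULL) {      /* simulated "cannot open file" */
        free(s);            /* a caller-supplied context is freed as well */
        *ps = NULL;         /* do not leave a dangling pointer behind */
        return -1;
    }
    s->url = url;
    *ps = s;
    return 0;
}
```

Initializing the pointer to NULL before the call is enough, which is why the real `avformat_open_input()` works with either NULL or a context from `avformat_alloc_context()`.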

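Next I will debug tutorial2. These basics are only practice for getting familiar with ffmpeg; the ultimate goal is my own encoder/decoder and a small video player.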