#R_packages#10 R packages I wish I knew about earlier

10 R packages I wish I knew about earlier

February 10, 2013 by

I started using R about 3 years ago. It was slow going at first. R had tricky and less intuitive syntax than languages I was used to, and it took a while to get accustomed to the nuances. It wasn't immediately clear to me that the power of the language was bound up with the community and the diverse packages available.

R can be more prickly and obscure than other languages like Python or Java. The good news is that there are tons of packages which provide simple and familiar interfaces on top of Base R. This post is about ten packages I love and use everyday and ones I wish I knew about earlier.

-

sqldf

install.packages("sqldf")One of the steepest parts of the R learning curve is the syntax. It took me a while to get over using

<-instead of=. I hear people say a lot of times How do I just do aVLOOKUP?!? R is great for general data munging tasks, but it takes a while to master. I think it's safe to say thatsqldfwas my R "training wheels".sqldflet's you perform SQL queries on your R data frames. People coming over from SAS will find it very familiar and anyone with basic SQL skills will have no trouble using it--sqldfuses SQLite syntax.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 library (sqldf )sqldf ( "SELECTday, avg(temp) as avg_tempFROM beaver2GROUP BYday;" )# day avg_temp#1 307 37.57931#2 308 37.71308#beavers1 and beavers2 come with base Rbeavers <- sqldf ( "select * from beaver1union allselect * from beaver2;" )#head(beavers)# day time temp activ#1 346 840 36.33 0#2 346 850 36.34 0#3 346 900 36.35 0#4 346 910 36.42 0#5 346 920 36.55 0#6 346 930 36.69 0movies <- data.frame (title =c ( "The Great Outdoors" , "Caddyshack" , "Fletch" , "Days of Thunder" , "Crazy Heart" ),year =c ( 1988 , 1980 , 1985 , 1990 , 2009 ))boxoffice <- data.frame (title =c ( "The Great Outdoors" , "Caddyshack" , "Fletch" , "Days of Thunder" , "Top Gun" ),revenue =c ( 43455230 , 39846344 , 59600000 , 157920733 , 353816701 ))sqldf ( "SELECTm.*, b.revenueFROMmovies mINNER JOINboxoffice bON m.title = b.title;" )# title year revenue#1 The Great Outdoors 1988 43455230#2 Caddyshack 1980 39846344#3 Fletch 1985 59600000#4 Days of Thunder 1990 157920733 -

forecast

install.packages("forecast")I don't do time series analysis very often, but when I do

forecastis my library of choice.forecastmakes it incredibly easy to fit time series models like ARIMA, ARMA, AR, Exponential Smoothing, etc.1 2 3 4 5 6 7 8 9 10 11 12 library (forecast )# mdeaths: Monthly Deaths from Lung Diseases in the UKfit <- auto.arima (mdeaths )#customize your confidence intervalsforecast (fit , level =c ( 80 , 95 , 99 ), h = 3 )# Point Forecast Lo 80 Hi 80 Lo 95 Hi 95 Lo 99 Hi 99#Jan 1980 1822.863 1564.192 2081.534 1427.259 2218.467 1302.952 2342.774#Feb 1980 1923.190 1635.530 2210.851 1483.251 2363.130 1345.012 2501.368#Mar 1980 1789.153 1495.048 2083.258 1339.359 2238.947 1198.023 2380.283plot (forecast (fit ), shadecols = "oldstyle" )

My favorite feature is the resulting forecast plot.

-

plyr

install.packages("plyr")When I first started using R, I was using basic control operations for manipulating data (for, if, while, etc.). I quickly learned that this was an amateur move, and that there was a better way to do it.

In R, the

applyfamily of functions is the preferred way to call a function on each element of a list or vector. While Base R has this out of the box, its usage can be tricky to master. I've found theplyrpackage to be an easy to use substitute forsplit,apply,combinefunctionality in Base R.plyrgives you several functions (ddply,daply,dlply,adply,ldply) following a common blueprint: Split a data structure into groups, apply a function on each group, return the results in a data structure.ddplysplits a data frame and returns a data frame (hence the dd).daplysplits a data frame and results an array (hence the da). Hopefully you're getting the idea here.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 library (plyr )# split a data frame by Species, summarize it, then convert the results# into a data frameddply (iris , . (Species ), summarise ,mean_petal_length =mean (Petal.Length ))# Species mean_petal_length#1 setosa 1.462#2 versicolor 4.260#3 virginica 5.552# split a data frame by Species, summarize it, then convert the results# into an arrayunlist (daply (iris [, 4 : 5 ], . (Species ), colwise (mean )))# setosa.Petal.Width versicolor.Petal.Width virginica.Petal.Width# 0.246 1.326 2.026 -

stringr

install.packages("stringr")I find base R's string functionality to be extremely difficult and cumbersome to use. Another package written by Hadley Wickham,

stringr, provides some much needed string operators in R. Many of the functions use data strcutures that aren't commonly used when doing basic analysis.

stringris remarkably easy to use. Nearly all of the functions (and all of the important ones) are prefixed with "str" so they're very easy to remember.1 2 3 4 5 6 7 8 9 10 11 12 library (stringr )names (iris )#[1] "Sepal.Length" "Sepal.Width" "Petal.Length" "Petal.Width" "Species"names (iris ) <- str_replace_all (names (iris ), "[.]" , "_" )names (iris )#[1] "Sepal_Length" "Sepal_Width" "Petal_Length" "Petal_Width" "Species"s <- c ( "Go to Heaven for the climate, Hell for the company." )str_extract_all (s , "[H][a-z]+ " )#[[1]]#[1] "Heaven " "Hell " -

The database driver package of your choice

install.packages("RPostgreSQL") install.packages("RMySQL") install.packages("RMongo") install.packages("RODBC") install.packages("RSQLite")Everyone does it when they first start (myself included). You've just written an awesome query in your preferred SQL editor. Everything is perfect - the column names are all snake case, the dates have the right datatype, you finally debugged the

"must appear in the GROUP BY clause or be used in an aggregate function"issue. You're ready to do some analysis in R, so you run the query in your SQL editor, copy the results to a csv (or...God forbid... .xlsx) and read into R. You don't have to do this!R has great drivers for nearly every conceivable database. On the off chance you're using a database which doesn't have a standalone driver (SQL Server), you can always use

RODBC.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 library (RPostgreSQL )drv <- dbDriver ( "PostgreSQL" )db <- dbConnect (drv , dbname = "ncaa" ,user = "YOUR USER NAME" , password = "YOUR PASSWORD" )q <- "SELECT*FROMgame_scores;"data <- dbGetQuery (db , q )head (data )#id school game_date spread school_score opponent opp_score was_home#1 45111 Boston College 1985-11-16 6.0 21 Syracuse 41 False#2 45112 Boston College 1985-11-02 13.5 12 Penn State 16 False#3 45113 Boston College 1985-10-26 -11.0 17 Cincinnati 24 False#4 45114 Boston College 1985-10-12 -2.0 14 Army 45 False#5 45115 Boston College 1985-09-28 5.0 10 Miami 45 True#6 45116 Boston College 1985-09-21 6.5 29 Pittsburgh 22 Falsenrow (data )#[1] 30932ncol (data )#[1] 8Next time you've got that perfect query written, just paste it into R and execute it using

RPostgreSQL,RMySQL,RMongo,RMongo, orRODBC. In addition to preventing you from having tens of hundreds of CSV files sitting arround, running the query in R saves you time both in I/O but also in converting datatypes. Dates, times, and datetimes will be automatically set to their R equivalent. It also makes your R script reproducible, so you or someone else on your team can easily produce the same results. -

lubridate

install.packages("lubridate")I've never had great luck with dates in R. I've never fully grasped the idiosyncracies of working with POSIXs vs. R Dates. Enter

lubridate.lubridateis one of those magical libraries that just seems to do exactly what you expect it to. The functions all have obvious names likeyear,month,ymd, andymd_hms. It's similar toMoment.jsfor those familiar with javascript.1 2 3 4 5 6 7 8 9 library (lubridate )year ( "2012-12-12" )#[1] 2012day ( "2012-12-12" )#[1] 12ymd ( "2012-12-12" )#1 parsed with %Y-%m-%d#[1] "2012-12-12 UTC"Here's a really handy reference card that I found in a paper. It covers just about everything you might conveivably want to do to a date. I've also found this Date Cheat Sheet to be a handy reference.

-

ggplot2

install.packages("ggplot2")Another Hadley Wickham pacakge and probably his most widely known one.

ggplot2ranks high on everyone's list of favorite R pacakges. It's easy to use and it produces some great looking plots. It's a great way to present your work, and there are many resources available to help you get started.- Elegant Graphics for Data Analysis by Hadley Wickham (Amazon)

- A Rosetta Stone for Excel to

ggplot(Yaksis Blog) - Hadley Wickham ggplot2 Presentation at Google (youtube)

- R Graphics Cookbook by Winston Chang (Amazon)

-

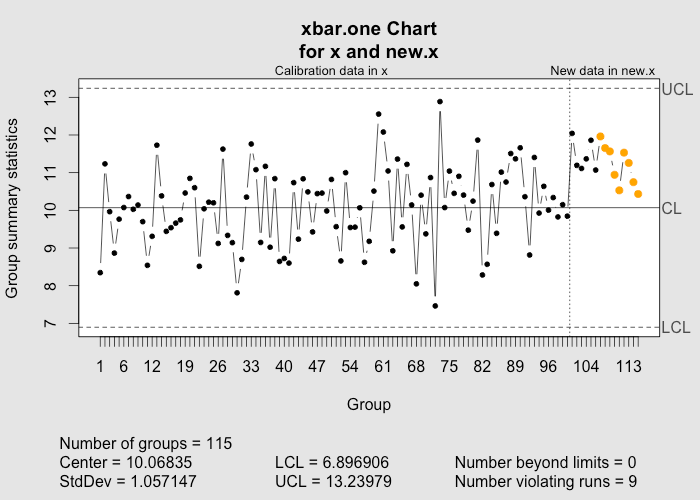

qcc

install.packages("qcc")qccis a library for statistical quality control. Back in the 1950s, the now defunct Western Electric Company was looking for a better way to detect problems with telephone and eletrical lines. They came up with a set of rules to help them identify problematic lines. The rules look at the historical mean of a series of datapoints and based on the standard deviation, the rules help judge whether a new set of points is experiencing a mean shift.The classic example is monitoring a machine that produces lug nuts. Let's say the machine is supposed to produce 2.5 inch long lug nuts. We measure a series of lug nuts: 2.48, 2.47, 2.51, 2.52, 2.54, 2.42, 2.52, 2.58, 2.51. Is the machine broken? Well it's hard to tell, but the Western Electric Rules can help.

1 2 3 4 5 6 7 8 library (qcc )# series of value w/ mean of 10 with a little random noise added inx <- rep ( 10 , 100 ) + rnorm ( 100 )# a test series w/ a mean of 11new.x <- rep ( 11 , 15 ) + rnorm ( 15 )# qcc will flag the new pointsqcc (x , newdata =new.x , type = "xbar.one" )While you might not be monitoring telephone lines,

qcccan help you monitor transaction volumes, visitors or logins on your website, database operations, and lots of other processes.

-

reshape2

install.packages("reshape2")I always find that the hardest part of any sort of analysis is getting the data into the right format.

reshape2is yet another package by Hadley Wickham that specializes in converting data from wide to long format and vice versa. I use it all the time in conjunction withggplot2andplyr.1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 library (reshape2 )# generate a unique id for each row; this let's us go back to wide format lateriris $id <- 1 :nrow (iris )iris.lng <- melt (iris , id =c ( "id" , "Species" ))head (iris.lng )# id Species variable value#1 1 setosa Sepal.Length 5.1#2 2 setosa Sepal.Length 4.9#3 3 setosa Sepal.Length 4.7#4 4 setosa Sepal.Length 4.6#5 5 setosa Sepal.Length 5.0#6 6 setosa Sepal.Length 5.4iris.wide <- dcast (iris.lng , id + Species ~ variable )head (iris.wide )# id Species Sepal.Length Sepal.Width Petal.Length Petal.Width#1 1 setosa 5.1 3.5 1.4 0.2#2 2 setosa 4.9 3.0 1.4 0.2#3 3 setosa 4.7 3.2 1.3 0.2#4 4 setosa 4.6 3.1 1.5 0.2#5 5 setosa 5.0 3.6 1.4 0.2#6 6 setosa 5.4 3.9 1.7 0.4library (ggplot2 )# plots a histogram for each numeric column in the datasetp <- ggplot (aes (x =value , fill =Species ), data =iris.lng )p + geom_histogram () +facet_wrap ( ~variable , scales = "free" )It's a great way to quickly take a look at a dataset and get your bearings. You can use the

meltfunction to convert wide data to long data, anddcastto go from long to wide. -

randomForest

install.packages("randomForest")This list wouldn't be complete without including at least one machine learning package you can impress your friends with. Random Forest is a great algorithm to start with. It's easy to use, can do supervised or unsupervised learning, it can be used with many differnet types of datasets, but most importantly it's effective! Here's how it works in R.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 library (randomForest )# download Titanic Survivors datadata <- read.table ( "http://math.ucdenver.edu/RTutorial/titanic.txt" , h = T , sep = "\t" )# make survived into a yes/nodata $Survived <- as.factor (ifelse (data $Survived == 1 , "yes" , "no" ))# split into a training and test setidx <- runif (nrow (data )) <= .75data.train <- data [idx ,]data.test <- data [ -idx ,]# train a random forestrf <- randomForest (Survived ~ PClass + Age + Sex ,data =data.train , importance = TRUE , na.action =na.omit )# how important is each variable in the modelimp <- importance (rf )o <- order (imp [, 3 ], decreasing = T )imp [o ,]# no yes MeanDecreaseAccuracy MeanDecreaseGini#Sex 51.49855 53.30255 55.13458 63.46861#PClass 25.48715 24.12522 28.43298 22.31789#Age 20.08571 14.07954 24.64607 19.57423# confusion matrix [[True Neg, False Pos], [False Neg, True Pos]]table (data.test $Survived , predict (rf , data.test ), dnn =list ( "actual" , "predicted" ))# predicted#actual no yes# no 427 16# yes 117 195