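I recently needed to reduce English words to their base forms (stemming, or more precisely lemmatization). I had heard good things about CoreNLP, so I gave it a try.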

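CoreNLP is a natural language processing toolkit developed at Stanford University. It is easy to use and powerful, offering named entity recognition, part-of-speech tagging, lemmatization, parse tree construction, and coreference resolution, among other features.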

CoreNLP is written in Java and needs JDK 1.8 to run; JDK 1.7 does not appear to be supported, so keep that in mind.
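Since the JDK version matters, a program can check it before it tries to load CoreNLP. The following is only a minimal sketch: the class `JavaVersionCheck` and its methods are hypothetical helpers written for this post, and the threshold of 8 simply reflects the JDK 1.8 requirement mentioned above.

```java
// Minimal sketch: check that the running JVM is Java 8 or newer.
// JavaVersionCheck and its method names are hypothetical, not part of CoreNLP.
final class JavaVersionCheck {

    // Turns a specification version such as "1.7", "1.8", "9" or "17"
    // into a single major version number.
    static int majorVersion(String specVersion) {
        String[] parts = specVersion.split("\\.");
        int first = Integer.parseInt(parts[0]);
        // Before Java 9 the format was "1.x", so the major version is the second field.
        if (first == 1 && parts.length > 1) {
            return Integer.parseInt(parts[1]);
        }
        return first;
    }

    // Assumption: Java 8 is the minimum, as stated in the text above.
    static boolean isSupported(String specVersion) {
        return majorVersion(specVersion) >= 8;
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.specification.version");
        if (!isSupported(version)) {
            throw new IllegalStateException("Java 8 or newer is required, found " + version);
        }
        System.out.println("Java version OK: " + version);
    }
}
```

Failing early like this gives a clearer message than the class-version error that would otherwise appear when the CoreNLP classes are loaded on an older JVM.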

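There is not much official documentation, but the bundled example files are enough to work out how to use it. I experimented with it for a while and found it quite pleasant to work with.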

Environment:

Windows 7, 64-bit

JDK 1.8

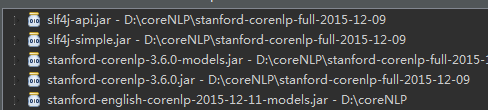

JAR files to add to the project:

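Note: I only tested English here, so I used stanford-corenlp-3.6.0-models.jar. To process Chinese, download the corresponding Chinese models JAR from the official site and add it to the project.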

The code is the easiest way to see how it works:

package com.luchi.corenlp;

import java.util.List;

import java.util.Map;

import java.util.Properties;

import edu.stanford.nlp.hcoref.CorefCoreAnnotations.CorefChainAnnotation;

import edu.stanford.nlp.hcoref.data.CorefChain;

import edu.stanford.nlp.ling.CoreAnnotations;

import edu.stanford.nlp.ling.CoreAnnotations.LemmaAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.NamedEntityTagAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.PartOfSpeechAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.SentencesAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.TextAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.TokensAnnotation;

import edu.stanford.nlp.ling.CoreLabel;

import edu.stanford.nlp.pipeline.Annotation;

import edu.stanford.nlp.pipeline.StanfordCoreNLP;

import edu.stanford.nlp.semgraph.SemanticGraph;

import edu.stanford.nlp.semgraph.SemanticGraphCoreAnnotations;

import edu.stanford.nlp.trees.Tree;

import edu.stanford.nlp.trees.TreeCoreAnnotations.TreeAnnotation;

import edu.stanford.nlp.util.CoreMap;

public class TestNLP {

public void test(){

// Build a StanfordCoreNLP object and configure which annotators to run, e.g. lemma for lemmatization, ner for named entity recognition

Properties props = new Properties();

props.setProperty("annotators", "tokenize, ssplit, pos, lemma, ner, parse, dcoref");

StanfordCoreNLP pipeline = new StanfordCoreNLP(props);

// Text to process

String text = "judy has been to china . she likes people there . and she went to Beijing ";// Add your text here!

// Create an Annotation that holds just the given text

Annotation document = new Annotation(text);

// Run all the configured annotators on the text

pipeline.annotate(document);

// Get the results, one CoreMap per sentence

List<CoreMap> sentences = document.get(SentencesAnnotation.class);

for(CoreMap sentence: sentences) {

// traversing the words in the current sentence

// a CoreLabel is a CoreMap with additional token-specific methods

for (CoreLabel token: sentence.get(TokensAnnotation.class)) {

// The text of the token (the word produced by tokenization)

String word = token.get(TextAnnotation.class);

System.out.println(word);

// Part-of-speech tag

String pos = token.get(PartOfSpeechAnnotation.class);

System.out.println(pos);

// Named entity tag

String ne = token.get(NamedEntityTagAnnotation.class);

System.out.println(ne);

// Lemma (base form of the word)

String lemma = token.get(LemmaAnnotation.class);

System.out.println(lemma);

}

// Parse tree of the sentence

Tree tree = sentence.get(TreeAnnotation.class);

tree.pennPrint();

// Dependency graph of the sentence

SemanticGraph graph = sentence.get(SemanticGraphCoreAnnotations.CollapsedCCProcessedDependenciesAnnotation.class);

System.out.println(graph.toString(SemanticGraph.OutputFormat.LIST));

}

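// Coreference chains

// Each chain holds the set of mentions that refer to the same entity

// Sentence numbers and token indices both count from 1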

Map<Integer, CorefChain> corefChains = document.get(CorefChainAnnotation.class);

if (corefChains == null) { return; }

for (Map.Entry<Integer,CorefChain> entry: corefChains.entrySet()) {

System.out.println("Chain " + entry.getKey() + " ");

for (CorefChain.CorefMention m : entry.getValue().getMentionsInTextualOrder()) {

// We need to subtract one since the indices count from 1 but the Lists start from 0

List<CoreLabel> tokens = sentences.get(m.sentNum - 1).get(CoreAnnotations.TokensAnnotation.class);

// We subtract two for end: one for 0-based indexing, and one because we want last token of mention not one following.

System.out.println(" " + m + ", i.e., 0-based character offsets [" + tokens.get(m.startIndex - 1).beginPosition() +

", " + tokens.get(m.endIndex - 2).endPosition() + ")");

}

}

}

public static void main(String[]args){

TestNLP nlp=new TestNLP();

nlp.test();

}

}

The comments explain each step, so the output is enough to see what the code does:

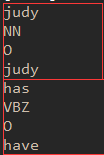

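Results for each token:

From the original sentence:

judy is tagged NN (noun), its named entity tag is O (not part of any entity), and its lemma is Judy.

Note that has was correctly reduced to its lemma, "have".

Beijing gets the named entity tag LOCATION, which means it was recognized as a place name.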
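The tags in the output (NN, O and so on) are short codes, so a small legend can help when reading them. The sketch below is a hand-written lookup for a few common Penn Treebank part-of-speech tags plus the convention that O means "not an entity". `TagLegend` is a hypothetical helper, not a CoreNLP class, and the table is deliberately incomplete.

```java
import java.util.HashMap;
import java.util.Map;

// Minimal sketch: a hand-written legend for a few Penn Treebank POS tags.
// TagLegend is a hypothetical helper, not part of CoreNLP.
final class TagLegend {

    private static final Map<String, String> POS = new HashMap<>();

    static {
        POS.put("NN", "noun, singular or mass");
        POS.put("NNS", "noun, plural");
        POS.put("NNP", "proper noun, singular");
        POS.put("PRP", "personal pronoun");
        POS.put("VBD", "verb, past tense");
        POS.put("VBN", "verb, past participle");
        POS.put("VBZ", "verb, 3rd person singular present");
        POS.put("RB", "adverb");
    }

    // Returns a human-readable description, or "unknown tag" if the tag is not in the table.
    static String describePos(String tag) {
        return POS.getOrDefault(tag, "unknown tag");
    }

    // The tag "O" marks a token that is outside any named entity.
    static boolean isEntity(String nerTag) {
        return nerTag != null && !"O".equals(nerTag);
    }

    public static void main(String[] args) {
        System.out.println("NN = " + describePos("NN"));
        System.out.println("O is an entity? " + isEntity("O"));
        System.out.println("LOCATION is an entity? " + isEntity("LOCATION"));
    }
}
```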

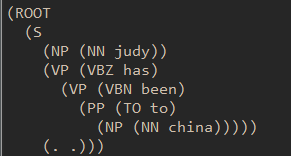

Next, the parse tree (taking the first sentence as an example):

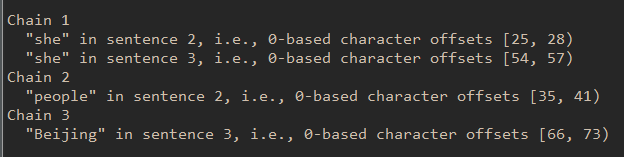

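Finally, the coreference results:

Each chain groups the mentions that refer to the same thing. In chain 1, for example, the two occurrences of she appear in different places, but both refer to "Judy" in the first sentence. That matches the meaning of the text, so the resolution is correct. The offsets printed for each mention are its 0-based character positions in the text.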
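The index arithmetic in the coreference loop (`startIndex - 1` and `endIndex - 2`) is easy to get wrong, so here it is in isolation. This sketch uses plain string lists instead of CoreNLP objects. It assumes, as the comments in the code above describe, that mention indices count from 1 and that the end index points one past the last token. `MentionSpan` is a hypothetical helper, not a CoreNLP class.

```java
import java.util.Arrays;
import java.util.List;

// Minimal sketch of the 1-based, end-exclusive index convention described above.
// MentionSpan is a hypothetical helper, not part of CoreNLP.
final class MentionSpan {

    // All tokens of the mention: shift both 1-based bounds down by one
    // to get a 0-based, end-exclusive range.
    static List<String> tokensOf(List<String> sentenceTokens, int startIndex, int endIndex) {
        return sentenceTokens.subList(startIndex - 1, endIndex - 1);
    }

    // Last token of the mention: minus one for 0-based indexing,
    // minus one more because endIndex points past the mention.
    static String lastToken(List<String> sentenceTokens, int endIndex) {
        return sentenceTokens.get(endIndex - 2);
    }

    public static void main(String[] args) {
        List<String> tokens = Arrays.asList("she", "likes", "people", "there", ".");
        // A one-token mention covering "she": startIndex = 1, endIndex = 2
        System.out.println(tokensOf(tokens, 1, 2));
        // A two-token mention covering "people there": startIndex = 3, endIndex = 5
        System.out.println(tokensOf(tokens, 3, 5) + " ends with " + lastToken(tokens, 5));
    }
}
```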

I only needed CoreNLP's lemmatization, though, so a small change to the code above is enough. The test code is:

package com.luchi.corenlp;

import java.util.List;

import java.util.Properties;

import edu.stanford.nlp.ling.CoreLabel;

import edu.stanford.nlp.ling.CoreAnnotations.LemmaAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.SentencesAnnotation;

import edu.stanford.nlp.ling.CoreAnnotations.TokensAnnotation;

import edu.stanford.nlp.pipeline.Annotation;

import edu.stanford.nlp.pipeline.StanfordCoreNLP;

import edu.stanford.nlp.util.CoreMap;

public class Lemma {

// Lemmatization

public String stemmed(String inputStr) {

Properties props = new Properties();

// lemma only depends on tokenize, ssplit and pos; ner, parse and dcoref are not needed here

props.setProperty("annotators", "tokenize, ssplit, pos, lemma");

StanfordCoreNLP pipeline = new StanfordCoreNLP(props);

Annotation document = new Annotation(inputStr);

pipeline.annotate(document);

List<CoreMap> sentences = document.get(SentencesAnnotation.class);

String outputStr = "";

for (CoreMap sentence : sentences) {

// traversing the words in the current sentence

// a CoreLabel is a CoreMap with additional token-specific methods

for (CoreLabel token : sentence.get(TokensAnnotation.class)) {

String lemma = token.get(LemmaAnnotation.class);

outputStr += lemma + " ";

}

}

return outputStr;

}

public static void main(String[]args){

Lemma lemma=new Lemma();

String input="jack had been to china there months ago. he likes china very much,and he is falling love with this country";

String output=lemma.stemmed(input);

System.out.print("Original:   ");

System.out.println(input);

System.out.print("Lemmatized: ");

System.out.println(output);

}

}

Output:

The results are quite accurate.
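One small detail in the `stemmed` method above: appending each lemma followed by a space leaves a trailing space at the end of the result. If that matters, the lemmas can be collected into a list and joined instead. The sketch below shows only the joining step on a hand-written list; the sample values are typed in for illustration and are not CoreNLP output, and `LemmaJoiner` is a hypothetical helper.

```java
import java.util.ArrayList;
import java.util.List;

// Minimal sketch: join lemmas with single spaces and no trailing space.
// LemmaJoiner is a hypothetical helper, not part of CoreNLP.
final class LemmaJoiner {

    static String join(List<String> lemmas) {
        // String.join puts the separator only between elements.
        return String.join(" ", lemmas);
    }

    public static void main(String[] args) {
        // Hand-written sample values; in the real method these would come
        // from token.get(LemmaAnnotation.class) inside the loop.
        List<String> lemmas = new ArrayList<>();
        lemmas.add("jack");
        lemmas.add("have");
        lemmas.add("be");
        System.out.println("[" + join(lemmas) + "]");
    }
}
```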