Virtualized Networking with Open vSwitch (Part 3)

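The previous article used Open vSwitch with the GRE protocol to build a virtual network spanning multiple hosts. This article uses VXLAN tunnels to build a virtual network across multiple hosts, and takes a deeper look at what Open vSwitch can do.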

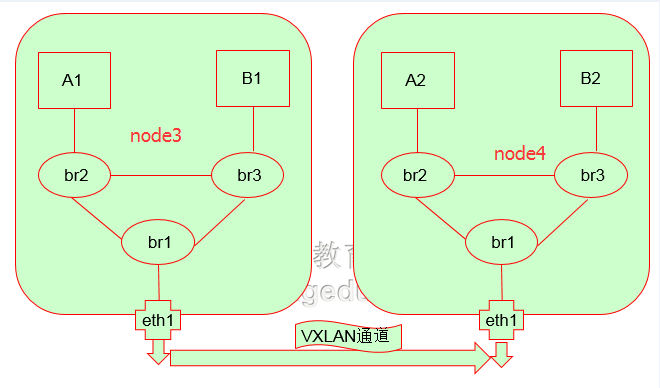

I. Lab Topology

IP address assignment:

A1:192.168.10.1/24

A2:192.168.10.10/24

B1:192.168.10.2/24

B2:192.168.10.20/24

eth1: 10.10.10.1/24 (left host in the figure, node3)

eth1: 10.10.10.2/24 (right host in the figure, node4)

Operating system:

CentOS 6.6 x86_64

II. Lab Steps

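1) Modify the kernel parameters. Do this first: if you set the kernel parameters after creating the network namespaces, the parameters will not take effect.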

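Set the following kernel parameters, for example in /etc/sysctl.conf, and load them with sysctl -p. ip_forward enables packet forwarding in the kernel. The two rp_filter settings disable reverse-path filtering.

    net.ipv4.ip_forward = 1
    net.ipv4.conf.all.rp_filter = 0
    net.ipv4.conf.default.rp_filter = 0

Then stop the firewall and put SELinux into permissive mode:

    # /etc/init.d/iptables stop
    # setenforce 0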
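It can help to confirm that the values actually took effect. Below is a minimal Python sketch. It assumes the standard /proc/sys layout, where each dotted sysctl name maps to a file path. The helper names are hypothetical. Dotted names whose components themselves contain dots are not handled (for example, per-interface settings for a device named a1.2).

```python
from pathlib import Path

# Values required by step 1 of this lab.
EXPECTED = {
    "net.ipv4.ip_forward": "1",
    "net.ipv4.conf.all.rp_filter": "0",
    "net.ipv4.conf.default.rp_filter": "0",
}


def sysctl_path(name, root="/proc/sys"):
    """Map a dotted sysctl name to its file, e.g. net.ipv4.ip_forward ->
    /proc/sys/net/ipv4/ip_forward. Names whose parts contain dots are not supported."""
    return Path(root, *name.split("."))


def read_sysctl(name, root="/proc/sys"):
    """Return the current value as a string, or None if it cannot be read."""
    try:
        return sysctl_path(name, root).read_text().strip()
    except OSError:
        return None


def check_sysctls(expected, read=read_sysctl):
    """Return {name: (expected, actual)} for every parameter that differs."""
    mismatches = {}
    for name, want in expected.items():
        got = read(name)
        if got != want:
            mismatches[name] = (want, got)
    return mismatches


if __name__ == "__main__":
    problems = check_sysctls(EXPECTED)
    for name, (want, got) in problems.items():
        print(f"{name}: expected {want}, found {got}")
    print("all kernel parameters OK" if not problems else f"{len(problems)} mismatch(es)")
```

The `read` parameter is injectable, so the comparison logic can be checked without touching the real /proc.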

2) Prepare the yum repository

Create a repo file under /etc/yum.repos.d/ with the following contents:

    [openswitch]
    name=openswitch
    baseurl=https://repos.fedorapeople.org/openstack/EOL/openstack-icehouse/epel-6/
    enabled=1
    gpgcheck=0
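If the repo file is generated by a provisioning script rather than typed by hand, Python's configparser can render it. This is a sketch only. The function name and the target file name mentioned in the comment are hypothetical. Keeping gpgcheck=0, as the article does, means yum skips package signature checks.

```python
import configparser
import io


def render_repo(repo_id, name, baseurl, enabled=True, gpgcheck=False):
    """Render a single-section yum .repo file as INI text."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg[repo_id] = {
        "name": name,
        "baseurl": baseurl,
        "enabled": "1" if enabled else "0",
        "gpgcheck": "1" if gpgcheck else "0",
    }
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)
    return buf.getvalue()


if __name__ == "__main__":
    text = render_repo(
        "openswitch",
        "openswitch",
        "https://repos.fedorapeople.org/openstack/EOL/openstack-icehouse/epel-6/",
    )
    # Save as e.g. /etc/yum.repos.d/openvswitch.repo (hypothetical file name).
    print(text)
```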

3) Install Open vSwitch

    # yum install openvswitch    # install on both hosts

4) Start Open vSwitch

    # /etc/init.d/openvswitch start
    Starting ovsdb-server                   [ OK ]   # starts the Open vSwitch database server
    Configuring Open vSwitch system IDs     [ OK ]   # configures the Open vSwitch system ID
    Starting ovs-vswitchd                   [ OK ]   # starts the Open vSwitch daemon
    Enabling remote OVSDB managers          [ OK ]   # enables remote OVSDB management

5) Update the iproute package, which is used to create the network namespaces

    # yum update iproute    # update iproute
    # rpm -q iproute        # the updated package should carry a netns suffix; if it does not, update again
    iproute-2.6.32-130.el6ost.netns.2.x86_64

6) Configure the virtual network on node3

    [root@node3 ~]# ip netns add A1    # create network namespace A1
    [root@node3 ~]# ip netns add B1    # create network namespace B1
    [root@node3 ~]# ip netns show      # list the two namespaces just created
    A1
    B1
    [root@node3 ~]# ovs-vsctl add-br br1    # create bridge br1 with Open vSwitch
    [root@node3 ~]# ovs-vsctl add-br br2    # create bridge br2
    [root@node3 ~]# ovs-vsctl add-br br3    # create bridge br3
    [root@node3 ~]# ovs-vsctl show          # inspect the bridges
    a6979a5b-bf54-48ac-b725-9beaa9be6c10
        Bridge "br2"
            Port "br2"
                Interface "br2"
                    type: internal
        Bridge "br1"
            Port "br1"
                Interface "br1"
                    type: internal
        Bridge "br3"
            Port "br3"
                Interface "br3"
                    type: internal
        ovs_version: "2.1.3"
    [root@node3 ~]# ip link add name a1.1 type veth peer name a1.2    # veth pair connecting namespace A1 to br2
    [root@node3 ~]# ip link set a1.1 up
    [root@node3 ~]# ip link set a1.2 up
    [root@node3 ~]# ip link add name b1.1 type veth peer name b1.2    # veth pair connecting namespace B1 to br3
    [root@node3 ~]# ip link set b1.2 up
    [root@node3 ~]# ip link set b1.1 up
    [root@node3 ~]# ip link add name b12.1 type veth peer name b12.2  # veth pair connecting br2 and br1
    [root@node3 ~]# ip link set b12.1 up
    [root@node3 ~]# ip link set b12.2 up
    [root@node3 ~]# ip link add name b13.1 type veth peer name b13.2  # veth pair connecting br3 and br1
    [root@node3 ~]# ip link set b13.1 up
    [root@node3 ~]# ip link set b13.2 up
    [root@node3 ~]# ip link add name b23.1 type veth peer name b23.2  # veth pair connecting br2 and br3
    [root@node3 ~]# ip link set b23.1 up
    [root@node3 ~]# ip link set b23.2 up
    [root@node3 ~]# ovs-vsctl add-port br2 a1.1     # attach a1.1 to br2
    [root@node3 ~]# ip link set a1.2 netns A1       # move a1.2 into namespace A1; after the move it no longer appears on the host
    [root@node3 ~]# ovs-vsctl add-port br3 b1.1     # attach b1.1 to br3
    [root@node3 ~]# ip link set b1.2 netns B1       # move b1.2 into namespace B1
    [root@node3 ~]# ovs-vsctl add-port br2 b23.2    # attach b23.2 to br2
    [root@node3 ~]# ovs-vsctl add-port br3 b23.1    # attach b23.1 to br3
    [root@node3 ~]# ip netns exec A1 ip link set a1.2 up                    # bring up the interface moved into A1
    [root@node3 ~]# ip netns exec A1 ip addr add 192.168.10.1/24 dev a1.2   # assign an IP address inside A1
    [root@node3 ~]# ip netns exec A1 ifconfig                               # check the address
    a1.2      Link encap:Ethernet  HWaddr 2A:1F:79:47:7D:DC
              inet addr:192.168.10.1  Bcast:0.0.0.0  Mask:255.255.255.0
              inet6 addr: fe80::281f:79ff:fe47:7ddc/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:6 errors:0 dropped:0 overruns:0 frame:0
              TX packets:12 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:468 (468.0 b)  TX bytes:936 (936.0 b)
    [root@node3 ~]# ip netns exec B1 ip link set b1.2 up                    # bring up the interface moved into B1
    [root@node3 ~]# ip netns exec B1 ip addr add 192.168.10.2/24 dev b1.2   # then assign its IP address
    [root@node3 ~]# ip netns exec B1 ifconfig                               # check the address
    b1.2      Link encap:Ethernet  HWaddr BA:E8:B0:20:1C:05
              inet addr:192.168.10.2  Bcast:0.0.0.0  Mask:255.255.255.0
              inet6 addr: fe80::b8e8:b0ff:fe20:1c05/64 Scope:Link
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:6 errors:0 dropped:0 overruns:0 frame:0
              TX packets:12 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1000
              RX bytes:468 (468.0 b)  TX bytes:936 (936.0 b)
    [root@node3 ~]# ip netns exec B1 ping 192.168.10.1    # B1 can reach A1 (br2 and br3 are joined by b23)
    PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
    64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=2.66 ms
    64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=0.051 ms
    64 bytes from 192.168.10.1: icmp_seq=3 ttl=64 time=0.054 ms
    [root@node3 ~]# ip netns exec A1 ping 192.168.10.2    # A1 can reach B1
    PING 192.168.10.2 (192.168.10.2) 56(84) bytes of data.
    64 bytes from 192.168.10.2: icmp_seq=1 ttl=64 time=1.52 ms
    64 bytes from 192.168.10.2: icmp_seq=2 ttl=64 time=0.075 ms
    [root@node3 ~]# ovs-vsctl add-port br1 b12.2    # attach b12.2 to br1
    [root@node3 ~]# ovs-vsctl add-port br1 b13.2    # attach b13.2 to br1
    [root@node3 ~]# ovs-vsctl add-port br2 b12.1    # attach b12.1 to br2
    [root@node3 ~]# ovs-vsctl add-port br3 b13.1    # attach b13.1 to br3
    [root@node3 ~]# ovs-vsctl set Bridge br1 stp_enable=true    # br1, br2 and br3 now form a loop, so enable STP on each
    [root@node3 ~]# ovs-vsctl set Bridge br2 stp_enable=true    # (enabling STP before the last bridge-to-bridge port is added
    [root@node3 ~]# ovs-vsctl set Bridge br3 stp_enable=true    #  avoids a brief window in which the loop is active)
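STP is needed because the three bridge-to-bridge veth pairs (b12, b13, b23) form a triangle. The sketch below is illustrative and is not part of Open vSwitch. It models each bridge as a graph node and each veth pair as an edge. It then uses union-find to report the first link that closes a cycle. The function name is hypothetical.

```python
# Bridge-to-bridge veth links created on node3: (bridge, bridge, veth pair prefix).
LINKS = [("br1", "br2", "b12"), ("br1", "br3", "b13"), ("br2", "br3", "b23")]


def loop_closing_link(links):
    """Return the first link that closes a cycle, or None if the graph is loop-free.

    Uses union-find: a link whose two ends are already connected closes a loop.
    """
    parent = {}

    def find(x):
        parent.setdefault(x, x)
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b, name in links:
        ra, rb = find(a), find(b)
        if ra == rb:
            return name
        parent[ra] = rb
    return None


if __name__ == "__main__":
    print(loop_closing_link(LINKS))      # the full triangle contains a loop
    print(loop_closing_link(LINKS[:2]))  # without b23 the bridges form a tree
```

Which link closes the loop depends only on list order here. The port STP actually blocks is decided by bridge and port IDs.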

7) Configure the virtual network on node4

Modify the kernel parameters, as on node3:

    net.ipv4.ip_forward = 1
    net.ipv4.conf.default.rp_filter = 0
    net.ipv4.conf.all.rp_filter = 0

Stop the firewall and put SELinux into permissive mode:

    # /etc/init.d/iptables stop
    # setenforce 0

Create the bridges:

    [root@node4 ~]# ovs-vsctl add-br br1
    [root@node4 ~]# ovs-vsctl add-br br2
    [root@node4 ~]# ovs-vsctl add-br br3

Create the network namespaces:

    [root@node4 ~]# ip netns add A2
    [root@node4 ~]# ip netns add B2

Create the veth pairs that connect the namespaces to the bridges and the bridges to one another:

    [root@node4 ~]# ip link add a1.1 type veth peer name a1.2
    [root@node4 ~]# ip link set a1.1 up
    [root@node4 ~]# ip link set a1.2 up
    [root@node4 ~]# ip link add b1.1 type veth peer name b1.2
    [root@node4 ~]# ip link set b1.1 up
    [root@node4 ~]# ip link set b1.2 up
    [root@node4 ~]# ip link add b12.1 type veth peer name b12.2
    [root@node4 ~]# ip link set b12.1 up
    [root@node4 ~]# ip link set b12.2 up
    [root@node4 ~]# ip link add b13.1 type veth peer name b13.2
    [root@node4 ~]# ip link set b13.1 up
    [root@node4 ~]# ip link set b13.2 up
    [root@node4 ~]# ip link add name b23.1 type veth peer name b23.2
    [root@node4 ~]# ip link set b23.1 up
    [root@node4 ~]# ip link set b23.2 up
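Both hosts use the same five veth pairs, and each pair follows one naming pattern (prefix.1 / prefix.2). Because of that, the commands can be generated rather than typed. This is a hypothetical dry-run helper: it only prints the commands, which you would review and then run as root.

```python
# Veth pair prefixes used on each host; each prefix P yields interfaces P.1 and P.2.
PAIRS = ["a1", "b1", "b12", "b13", "b23"]


def veth_pair_cmds(prefix):
    """Commands to create one veth pair and bring both ends up."""
    a, b = f"{prefix}.1", f"{prefix}.2"
    return [
        f"ip link add name {a} type veth peer name {b}",
        f"ip link set {a} up",
        f"ip link set {b} up",
    ]


def all_veth_cmds(pairs=PAIRS):
    """Flatten the commands for every pair, in order."""
    return [cmd for prefix in pairs for cmd in veth_pair_cmds(prefix)]


if __name__ == "__main__":
    # Dry run: print the commands; review them, then pipe into sh as root to apply.
    print("\n".join(all_veth_cmds()))
```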

Connect br2 to namespace A2:

    [root@node4 ~]# ip link set a1.1 netns A2
    [root@node4 ~]# ovs-vsctl add-port br2 a1.2

Connect br3 to namespace B2:

    [root@node4 ~]# ip link set b1.1 netns B2
    [root@node4 ~]# ovs-vsctl add-port br3 b1.2

Connect br2 and br3:

    [root@node4 ~]# ovs-vsctl add-port br2 b23.1
    [root@node4 ~]# ovs-vsctl add-port br3 b23.2

Configure the IP address in namespace A2:

    [root@node4 ~]# ip netns exec A2 ip link set a1.1 up
    [root@node4 ~]# ip netns exec A2 ip addr add 192.168.10.10/24 dev a1.1

Configure the IP address in namespace B2:

    [root@node4 ~]# ip netns exec B2 ip link set b1.1 up
    [root@node4 ~]# ip netns exec B2 ip addr add 192.168.10.20/24 dev b1.1

Test connectivity between namespaces A2 and B2:

    [root@node4 ~]# ip netns exec B2 ping 192.168.10.10
    PING 192.168.10.10 (192.168.10.10) 56(84) bytes of data.
    64 bytes from 192.168.10.10: icmp_seq=1 ttl=64 time=1.68 ms
    64 bytes from 192.168.10.10: icmp_seq=2 ttl=64 time=0.065 ms
    [root@node4 ~]# ip netns exec A2 ping 192.168.10.20
    PING 192.168.10.20 (192.168.10.20) 56(84) bytes of data.
    64 bytes from 192.168.10.20: icmp_seq=1 ttl=64 time=2.80 ms
    64 bytes from 192.168.10.20: icmp_seq=2 ttl=64 time=0.048 ms
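The manual pings can be turned into a small connectivity matrix. In this sketch the namespace-to-address table comes from section I. The function names are hypothetical. It assumes iputils ping, where -c sets the packet count and -W the reply timeout in seconds. run_matrix actually executes the pings and needs root on the host.

```python
import subprocess

# Namespace -> address, from the address plan in section I.
HOSTS = {
    "A1": "192.168.10.1",
    "B1": "192.168.10.2",
    "A2": "192.168.10.10",
    "B2": "192.168.10.20",
}


def ping_argv(ns, ip, count=1, timeout_s=1):
    """argv for one ping sent from inside namespace ns."""
    return ["ip", "netns", "exec", ns, "ping", "-c", str(count), "-W", str(timeout_s), ip]


def ping_matrix(local_namespaces, hosts=HOSTS):
    """{(src_ns, dst_ns): argv} for every destination other than the source itself."""
    return {
        (src, dst): ping_argv(src, ip)
        for src in local_namespaces
        for dst, ip in hosts.items()
        if dst != src
    }


def run_matrix(matrix):
    """Run each ping and return {(src, dst): reachable}, based on ping's exit status."""
    results = {}
    for key, argv in matrix.items():
        proc = subprocess.run(argv, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        results[key] = proc.returncode == 0
    return results
```

On node4, run_matrix(ping_matrix(["A2", "B2"])) should at this point report only the A2/B2 pairs as reachable. The node3 namespaces become reachable once the tunnel from step 8 is in place.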

Connect br2 and br1:

    [root@node4 ~]# ovs-vsctl add-port br1 b12.1
    [root@node4 ~]# ovs-vsctl add-port br2 b12.2

Connect br3 and br1:

    [root@node4 ~]# ovs-vsctl add-port br1 b13.1
    [root@node4 ~]# ovs-vsctl add-port br3 b13.2

To prevent a bridging loop, enable STP on the bridges:

    [root@node4 ~]# ovs-vsctl set Bridge br1 stp_enable=true
    [root@node4 ~]# ovs-vsctl set Bridge br2 stp_enable=true
    [root@node4 ~]# ovs-vsctl set Bridge br3 stp_enable=true
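Roughly, STP elects the bridge with the lowest bridge ID as root and keeps one loop-free path from it to every other bridge. Redundant links are blocked. The sketch below is a deliberately simplified model, not the real protocol: it ignores path costs, port IDs and timers. The integer bridge IDs are hypothetical. Real bridge IDs combine a priority with a MAC address, and the ovs-vswitchd.conf.db(5) documentation describes an other_config:stp-priority setting that can be used to influence which bridge becomes root.

```python
from collections import deque

# Bridge-to-bridge links on each host: (bridge, bridge, veth pair prefix).
LINKS = [("br1", "br2", "b12"), ("br1", "br3", "b13"), ("br2", "br3", "b23")]


def simplified_stp(bridge_ids, links):
    """Pick the root (lowest bridge ID), grow a shortest-hop tree from it with BFS,
    and report links outside the tree as blocked.

    This ignores real STP details such as path costs, port IDs and timers.
    """
    root = min(bridge_ids, key=bridge_ids.get)
    adjacency = {b: [] for b in bridge_ids}
    for a, b, name in links:
        adjacency[a].append((b, name))
        adjacency[b].append((a, name))

    visited, forwarding = {root}, set()
    queue = deque([root])
    while queue:
        node = queue.popleft()
        # Visit neighbours in bridge-ID order so ties favour the lower ID.
        for nbr, name in sorted(adjacency[node], key=lambda e: bridge_ids[e[0]]):
            if nbr not in visited:
                visited.add(nbr)
                forwarding.add(name)
                queue.append(nbr)
    blocked = {name for _, _, name in links} - forwarding
    return root, forwarding, blocked
```

In a triangle with equal link costs, the link between the two non-root bridges ends up blocked. With br1 as root, that is b23.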

8) Configure the VXLAN tunnel

First assign IP addresses to eth1, the interface that carries the VXLAN tunnel:

    [root@node3 ~]# ifconfig eth1 10.10.10.1/24 up
    [root@node4 ~]# ifconfig eth1 10.10.10.2/24 up
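No router sits between the hosts in this lab, so the two tunnel endpoints must be in the same subnet to reach each other directly over eth1. Python's standard ipaddress module can check this. The helper name is hypothetical.

```python
import ipaddress


def same_subnet(a, b):
    """True if two interface addresses in CIDR form belong to the same network."""
    return ipaddress.ip_interface(a).network == ipaddress.ip_interface(b).network


if __name__ == "__main__":
    # eth1 on node3 and node4
    print(same_subnet("10.10.10.1/24", "10.10.10.2/24"))
```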

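Test connectivity:

    [root@node3 ~]# ping 10.10.10.1
    PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.
    64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=0.066 ms
    [root@node4 ~]# ping 10.10.10.1
    PING 10.10.10.1 (10.10.10.1) 56(84) bytes of data.
    64 bytes from 10.10.10.1: icmp_seq=1 ttl=64 time=2.94 ms
    64 bytes from 10.10.10.1: icmp_seq=2 ttl=64 time=0.305 ms

The node3 command pings node3's own eth1 address, so it only shows that the local address is up. The ping from node4 to 10.10.10.1 is what verifies the link between the hosts.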

On each host, add a port named vxlan to br1 to carry the VXLAN encapsulation:

    [root@node3 ~]# ovs-vsctl add-port br1 vxlan
    [root@node4 ~]# ovs-vsctl add-port br1 vxlan

Set the new port's interface type to vxlan and point it at the other host's eth1 address:

    [root@node3 ~]# ovs-vsctl set Interface vxlan type=vxlan options:remote_ip=10.10.10.2
    [root@node4 ~]# ovs-vsctl set Interface vxlan type=vxlan options:remote_ip=10.10.10.1
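The two ovs-vsctl calls above can also be combined into one transaction. ovs-vsctl accepts several commands separated by "--", so the port is never left without its type. This sketch only builds the argument list. The helper names are hypothetical. The optional key sets options:key, which for VXLAN is the VNI. If you use it, both ends must use the same value.

```python
import subprocess


def vxlan_port_argv(bridge, port, remote_ip, key=None):
    """argv that adds a VXLAN port and configures it in one ovs-vsctl transaction."""
    argv = [
        "ovs-vsctl", "add-port", bridge, port,
        "--", "set", "Interface", port, "type=vxlan", f"options:remote_ip={remote_ip}",
    ]
    if key is not None:
        argv.append(f"options:key={key}")  # VXLAN network identifier (VNI)
    return argv


def apply(argv):
    """Run the command; raises CalledProcessError if ovs-vsctl fails."""
    subprocess.run(argv, check=True)
```

On node3 this would be apply(vxlan_port_argv("br1", "vxlan", "10.10.10.2")). On node4 the remote IP would be 10.10.10.1.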

Test connectivity between the namespaces on the two hosts. On node3:

    [root@node3 ~]# ip netns exec A1 ping 192.168.10.2
    PING 192.168.10.2 (192.168.10.2) 56(84) bytes of data.
    64 bytes from 192.168.10.2: icmp_seq=1 ttl=64 time=2.30 ms
    ^C
    --- 192.168.10.2 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 917ms
    rtt min/avg/max/mdev = 2.306/2.306/2.306/0.000 ms
    [root@node3 ~]# ip netns exec A1 ping 192.168.10.10
    PING 192.168.10.10 (192.168.10.10) 56(84) bytes of data.
    64 bytes from 192.168.10.10: icmp_seq=1 ttl=64 time=3.66 ms
    64 bytes from 192.168.10.10: icmp_seq=2 ttl=64 time=0.759 ms
    ^C
    --- 192.168.10.10 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1263ms
    rtt min/avg/max/mdev = 0.759/2.211/3.664/1.453 ms
    [root@node3 ~]# ip netns exec A1 ping 192.168.10.20
    PING 192.168.10.20 (192.168.10.20) 56(84) bytes of data.
    64 bytes from 192.168.10.20: icmp_seq=1 ttl=64 time=4.79 ms
    64 bytes from 192.168.10.20: icmp_seq=2 ttl=64 time=0.442 ms
    ^C
    --- 192.168.10.20 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1285ms
    rtt min/avg/max/mdev = 0.442/2.619/4.797/2.178 ms

On node4:

    [root@node4 ~]# ip netns exec A2 ping 192.168.10.1
    PING 192.168.10.1 (192.168.10.1) 56(84) bytes of data.
    64 bytes from 192.168.10.1: icmp_seq=1 ttl=64 time=6.75 ms
    64 bytes from 192.168.10.1: icmp_seq=2 ttl=64 time=1.53 ms
    ^C
    --- 192.168.10.1 ping statistics ---
    2 packets transmitted, 2 received, 0% packet loss, time 1376ms
    rtt min/avg/max/mdev = 1.536/4.146/6.756/2.610 ms
    [root@node4 ~]# ip netns exec A2 ping 192.168.10.3    # 192.168.10.3 is not assigned to any namespace, so this ping fails
    PING 192.168.10.3 (192.168.10.3) 56(84) bytes of data.
    ^C
    --- 192.168.10.3 ping statistics ---
    2 packets transmitted, 0 received, 100% packet loss, time 1381ms
    [root@node4 ~]# ip netns exec A2 ping 192.168.10.2
    PING 192.168.10.2 (192.168.10.2) 56(84) bytes of data.
    64 bytes from 192.168.10.2: icmp_seq=1 ttl=64 time=5.68 ms
    ^C
    --- 192.168.10.2 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 769ms
    rtt min/avg/max/mdev = 5.680/5.680/5.680/0.000 ms
    [root@node4 ~]# ip netns exec A2 ping 192.168.10.20
    PING 192.168.10.20 (192.168.10.20) 56(84) bytes of data.
    64 bytes from 192.168.10.20: icmp_seq=1 ttl=64 time=2.50 ms
    ^C
    --- 192.168.10.20 ping statistics ---
    1 packets transmitted, 1 received, 0% packet loss, time 913ms
    rtt min/avg/max/mdev = 2.502/2.502/2.502/0.000 ms
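When these tests are scripted, the summary line printed by ping is the easiest thing to check. Here is a minimal regex-based parser. Its name and return format are hypothetical. It allows for optional fields such as "+2 errors" before the loss figure, and for fractional loss percentages.

```python
import re

# Matches e.g. "2 packets transmitted, 0 received, 100% packet loss, time 1381ms".
# The lazy ".*?" skips optional fields such as "+2 errors" before the loss figure.
_SUMMARY = re.compile(
    r"(\d+) packets transmitted, (\d+) received.*?(\d+(?:\.\d+)?)% packet loss"
)


def parse_ping_summary(text):
    """Return {'transmitted', 'received', 'loss_pct'} from ping output, or None."""
    m = _SUMMARY.search(text)
    if m is None:
        return None
    sent, received, loss = m.groups()
    return {"transmitted": int(sent), "received": int(received), "loss_pct": float(loss)}
```

Applied to the output above, the ping to 192.168.10.3 yields 100% loss and every other ping yields 0%.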

Ping a namespace on node3 from node4, and capture packets on node3:

    [root@node3 ~]# tcpdump -nn -i eth1
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on eth1, link-type EN10MB (Ethernet), capture size 65535 bytes
    10:34:12.799191 IP 10.10.10.1.58588 > 10.10.10.2.4789: UDP, length 60
    10:34:13.578197 IP 10.10.10.2.56807 > 10.10.10.1.4789: UDP, length 106
    10:34:13.578264 IP 10.10.10.1.38137 > 10.10.10.2.4789: UDP, length 106

This capture shows that VXLAN connects the virtual networks on the two hosts by encapsulating their traffic in UDP datagrams sent to port 4789.

    [root@node3 ~]# ip netns exec A1 tcpdump -nn icmp -i a1.2
    tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
    listening on a1.2, link-type EN10MB (Ethernet), capture size 65535 bytes
    10:35:33.692847 IP 192.168.10.10 > 192.168.10.1: ICMP echo request, id 42539, seq 93, length 64
    10:35:33.692875 IP 192.168.10.1 > 192.168.10.10: ICMP echo reply, id 42539, seq 93, length 64
    10:35:34.693605 IP 192.168.10.10 > 192.168.10.1: ICMP echo request, id 42539, seq 94, length 64

This capture, taken inside A1, shows the ICMP traffic between A2 (192.168.10.10) and A1 (192.168.10.1).
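The "length 106" in the eth1 capture can be accounted for byte by byte. That length appears to be the UDP payload. A default ping carries 56 data bytes, so the inner frame is 14 (Ethernet) + 20 (IPv4) + 8 (ICMP header) + 56 = 98 bytes. Adding the 8-byte VXLAN header defined in RFC 7348 gives 106. The sketch below reproduces that arithmetic and builds the header. It assumes VNI 0, since the article sets no options:key. It also computes the largest inner IP packet that fits in a 1500-byte underlay without fragmentation. That figure matters because the namespace interfaces above report MTU:1500. Function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN port, as seen in the capture


def vxlan_header(vni):
    """8-byte VXLAN header (RFC 7348): flags byte with the I bit (0x08),
    24 reserved bits, 24-bit VNI, 8 reserved bits."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", 0x08 << 24, vni << 8)


# Inner Ethernet frame for one default ping (56 data bytes) between namespaces.
INNER_FRAME = {"ethernet": 14, "ipv4": 20, "icmp_header": 8, "icmp_data": 56}


def udp_payload_len(inner=INNER_FRAME, vni=0):
    """Bytes after the outer UDP header: VXLAN header plus the whole inner frame."""
    return len(vxlan_header(vni)) + sum(inner.values())


def max_inner_ip_mtu(underlay_ip_mtu=1500):
    """Largest inner IP packet that avoids fragmenting the outer packet:
    subtract outer IPv4 (20, no options) + UDP (8) + VXLAN (8) + inner Ethernet (14)."""
    return underlay_ip_mtu - 20 - 8 - 8 - 14


if __name__ == "__main__":
    print(udp_payload_len())   # compare with "UDP, length 106" in the capture
    print(max_inner_ip_mtu())
```

Small pings fit easily. Full-size 1500-byte inner packets would not, and lowering the inner MTU is a common workaround.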

9) The lab is complete: the virtual network spanning the two hosts is now up.

For more on the GRE protocol, see the following links; we won't go into detail here:

http://www.tuicool.com/articles/zyiuIzU

http://www.tuicool.com/articles/6zMJRn