Papers about DL

Reading some papers about DL

- Reading some papers about DL
  - SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation
    - Encoder network
    - Decoder network
    - Training
    - Analysis
    - Personal thoughts
  - Do Convnets Learn Correspondence?
    - Ideas
    - Method 1
    - Method 2
    - Personal thoughts
  - FCN
    - Semantic Segmentation vs. Spatial Segmentation
    - Fully Convolutional Networks for Semantic Segmentation
    - What are receptive fields?
    - Stride
    - How to achieve dense prediction?
      - Option 1: stitching together coarse outputs (from shifted inputs)
      - Option 2: reduce the stride, e.g. set the sampling step to 1
      - Option 3: upsampling with learned parameters
    - Combining what and where
    - Some tricks
  - OverFeat
    - Ideas
    - Model Design and Training
    - Multi-Scale Classification (multi-view voting: at each location and at multiple scales)
    - Classification
    - Localization
  - References


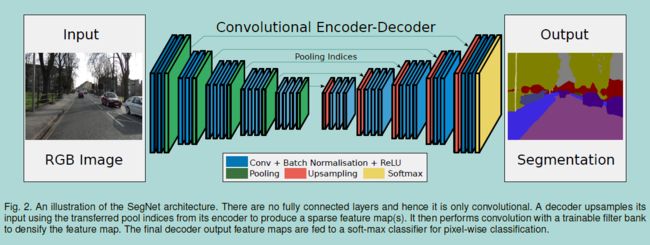

SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation

- The encoder network is topologically identical to the 13 convolutional layers of the VGG16 network: it is based on VGG with the fully connected layers removed

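- It stores the max-pooling indices of the feature maps and uses them in its decoder network to achieve good performance: during downsampling it records where each selected value lies in the feature map, and during (non-linear) upsampling it uses these positions to restore the data as faithfully as possible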

- Training: end-to-end, with stochastic gradient descent

The key learning module is an encoder-decoder network. An encoder consists of convolution with a filter bank, element-wise tanh non-linearity, max-pooling and sub-sampling to obtain the feature maps. For each sample, the indices of the max locations computed during pooling are stored and passed to the decoder. The decoder upsamples the feature maps by using the stored pooled indices. It convolves this upsampled map using a trainable decoder filter bank to reconstruct the input image.
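The index-storing pooling and the index-based upsampling described above can be sketched in plain Python. This is a minimal, dependency-free sketch that assumes a single-channel 2-D feature map stored as nested lists; the function names are hypothetical, and the trainable decoder convolution that SegNet applies to the sparse upsampled map is omitted.

```python
def max_pool_2x2_with_indices(fmap):
    """2x2 max-pooling with stride 2 on a 2-D list of numbers.

    Returns the pooled map and, for every pooling window, the flat index
    (row * width + col) of the maximum in the input map.
    """
    h, w = len(fmap), len(fmap[0])
    pooled, indices = [], []
    for i in range(0, h - 1, 2):
        pooled_row, index_row = [], []
        for j in range(0, w - 1, 2):
            # (value, flat index) for the four positions of the window
            window = [(fmap[i + di][j + dj], (i + di) * w + (j + dj))
                      for di in (0, 1) for dj in (0, 1)]
            value, flat_index = max(window, key=lambda pair: pair[0])
            pooled_row.append(value)
            index_row.append(flat_index)
        pooled.append(pooled_row)
        indices.append(index_row)
    return pooled, indices


def max_unpool(pooled, indices, out_h, out_w):
    """Write each pooled value back to its recorded position; every other position is 0."""
    out = [[0] * out_w for _ in range(out_h)]
    for pooled_row, index_row in zip(pooled, indices):
        for value, flat_index in zip(pooled_row, index_row):
            row, col = divmod(flat_index, out_w)
            out[row][col] = value
    return out


fmap = [[1, 3, 2, 0],
        [4, 2, 1, 5],
        [0, 1, 7, 2],
        [2, 6, 3, 1]]
pooled, indices = max_pool_2x2_with_indices(fmap)
print(pooled)    # [[4, 5], [6, 7]]
print(indices)   # [[4, 7], [13, 10]]
print(max_unpool(pooled, indices, 4, 4))
# [[0, 0, 0, 0], [4, 0, 0, 5], [0, 0, 7, 0], [0, 6, 0, 0]]
```

Only one small integer per pooling window has to be kept, which is why storing indices is much cheaper than keeping the encoder feature maps themselves.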

Encoder network

- The first 13 convolutional layers of the VGG16 network; the fully connected layer parameters are discarded

- Batch normalization is used

- ReLU is applied

- Max-pooling with a 2*2 window and stride 2 (non-overlapping windows)

- Only the max-pooling indices are stored, i.e. the location of the maximum feature value in each pooling window

Decoder network

- Each encoder layer has a corresponding decoder layer, and hence the decoder network has 13 layers

- The final decoder output is fed to a multi-class soft-max classifier to produce class probabilities for each pixel independently

Batch normalization

Each decoder filter has the same number of channels as the number of upsampled feature maps. A smaller variant is one where the decoder filters are single-channel, i.e. they only convolve their corresponding upsampled feature map.

- The FCN decoder model, by contrast, requires storing the encoder feature maps during inference

Training

- Stochastic gradient descent (SGD)

- fixed learning rate of 0.1

- mini-batch (12 images)

- cross-entropy loss

- Median frequency balancing: the weight assigned to a class in the loss function is the median of the class frequencies computed on the entire training set divided by that class's frequency. This implies that larger classes in the training set get a weight smaller than 1, and the smallest classes get the highest weights.

- Label images must be single-channel, with each pixel labelled with its class
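A minimal sketch of median frequency balancing in plain Python. The exact definition of the class frequency is an assumption here: freq(c) is taken as the number of pixels of class c divided by the total number of pixels in the training images where c is present. The function name is hypothetical.

```python
from statistics import median


def median_frequency_weights(label_images, num_classes):
    """label_images: list of 2-D lists of integer class ids.

    freq(c)   = pixels of class c / total pixels of the images in which c appears
    weight(c) = median(freq) / freq(c)
    """
    class_pixels = [0] * num_classes   # pixels labelled c over the whole training set
    image_pixels = [0] * num_classes   # total pixels of the images that contain c
    for image in label_images:
        flat = [label for row in image for label in row]
        for c in set(flat):
            image_pixels[c] += len(flat)
        for label in flat:
            class_pixels[label] += 1
    freq = [class_pixels[c] / image_pixels[c] if image_pixels[c] else None
            for c in range(num_classes)]
    med = median([f for f in freq if f is not None])
    # classes never seen in training get weight 0 here (an arbitrary choice)
    return [med / f if f is not None else 0.0 for f in freq]


labels = [[[0, 0], [0, 1]],
          [[0, 0], [2, 2]]]
print(median_frequency_weights(labels, 3))   # class 0 (largest) < 1, class 1 (smallest) highest
```

The resulting weights would multiply the per-pixel cross-entropy terms of the corresponding classes.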

Analysis

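- G: global accuracy, the percentage of pixels correctly classified in the dataset

- C: class average accuracy, the mean of the predictive accuracy over all classes

- IU: mean intersection over union, i.e. for each class the pixels that are in both the prediction and the ground truth divided by the pixels in either, averaged over classes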
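All three metrics can be computed from a confusion matrix. This sketch assumes `conf[i][j]` counts pixels of true class i predicted as class j, and (as an assumption) skips classes that never occur in the ground truth when averaging; the function name is hypothetical.

```python
def segmentation_metrics(conf):
    """Return (G, C, mean IU) from a square confusion matrix of pixel counts."""
    n = len(conf)
    total = sum(sum(row) for row in conf)
    correct = sum(conf[i][i] for i in range(n))
    global_acc = correct / total                  # G
    class_acc, ious = [], []
    for i in range(n):
        gt = sum(conf[i])                         # pixels whose true class is i
        pred = sum(conf[r][i] for r in range(n))  # pixels predicted as class i
        if gt == 0:
            continue                              # class absent from the ground truth
        class_acc.append(conf[i][i] / gt)
        ious.append(conf[i][i] / (gt + pred - conf[i][i]))  # intersection / union
    return global_acc, sum(class_acc) / len(class_acc), sum(ious) / len(ious)


print(segmentation_metrics([[6, 2],
                            [1, 1]]))
```

In this example G = 0.7 and C = 0.625, while mean IU is only about 0.46, because IU also penalizes the pixels wrongly predicted as each class.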

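Personal thoughts:

- Could a decoder trained as a CNN be used to upscale low-resolution images?

- Reduce the model size, mainly by cutting the fully connected layer parameters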

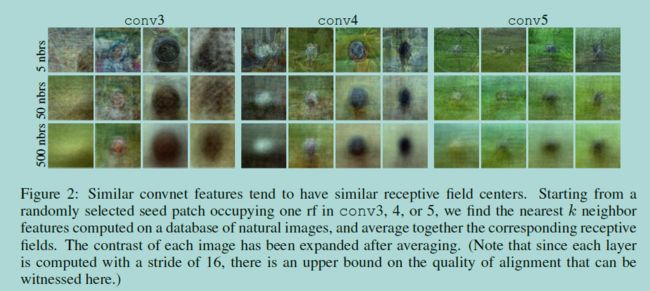

Do Convnets Learn Correspondence?

Ideas

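Visualize the spatial properties of convnet features to verify whether they correspond to spatial locations in the input image. *Are the modern convnets that excel at classification and detection also able to find precise correspondences between object parts?*

- With large pooling regions and training on whole images, can convnets still capture fine local image structure? (Intuitively, large receptive fields might pool this information away.)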

Methods 1

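The paper presents a novel visual investigation of convnet features: the original image is reconstructed from convnet features (using simple top-k nearest neighbors and averaging them).

Goal: as layers get deeper and the receptive fields of convnet features grow, verify whether the features still correspond precisely to the local spatial structure of the original image.

Conclusion: although the receptive fields of convnet features keep growing, the features still carry local information at a finer scale.

- In the figure, the yellow box in the top-left corner is the input patch, and the black boxes outside it are the progressively larger receptive fields.

- In the rightmost column, the inputs are obtained by choosing input patches uniformly at random from conv3-sized neighborhoods: the patch is cut at a random position within the receptive field rather than from the center.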

Method 2

Instead of fusing the top-k cropped patches, average the entire receptive fields of the neighbors.
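Both methods reduce to the same averaging step, sketched below under stated assumptions: features are compared with Euclidean distance, patches are flattened lists of pixel values, and the function name is hypothetical. For Method 1, `db_patches` would hold the patches cut from the neighbors; for Method 2, it would hold their entire receptive fields.

```python
import math


def knn_reconstruct(query_feature, db_features, db_patches, k):
    """Average the pixel patches of the k database entries whose features are
    closest (Euclidean distance) to query_feature."""
    distances = [math.dist(query_feature, f) for f in db_features]
    nearest = sorted(range(len(db_features)), key=lambda i: distances[i])[:k]
    num_pixels = len(db_patches[nearest[0]])
    return [sum(db_patches[i][p] for i in nearest) / k for p in range(num_pixels)]


features = [[0, 0], [1, 0], [5, 5]]              # toy convnet features of 3 patches
patches = [[0, 0, 0], [2, 4, 6], [9, 9, 9]]      # the corresponding pixel patches
print(knn_reconstruct([0.2, 0.0], features, patches, k=2))   # [1.0, 2.0, 3.0]
```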

Personal thoughts

- Could the spatial correspondence between feature maps and the image be used for image alignment or image stitching?

- How can a CNN be used for keypoint prediction?

FCN

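Semantic Segmentation vs. Spatial Segmentation

Semantic segmentation separates objects by their meaning, so it involves an element of recognition: boundaries are drawn not from simple cues such as texture gradients but according to semantic category.

- “Global information resolves what while local information resolves where.”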

Fully Convolutional Networks for Semantic Segmentation

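- Image segmentation can be viewed as an end-to-end, pixels-to-pixels, spatially dense prediction task.

- The fully connected layers are recast as convolutions whose kernels cover the entire feature map (these fully connected layers can also be viewed as convolutions with kernels that cover their entire input regions).

- Upsampling uses bilinear interpolation, with the interpolation parameters obtained by learning.

- A “skip architecture” fuses global information (deep, coarse layers: semantics) with local information (shallow, fine layers: appearance) to improve the segmentation.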
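The fully-connected-to-convolution equivalence can be checked numerically. This single-channel sketch (no bias, hypothetical helper names) reshapes each row of an FC weight matrix into an h×w kernel: on an input of exactly that size the convolution reproduces the FC output, and on a larger input it slides and yields a spatial map of FC outputs, one per window.

```python
def fc_layer(x, weights):
    """Fully connected layer: flatten the 2-D input row-major and take dot products."""
    flat = [v for row in x for v in row]
    return [sum(w * v for w, v in zip(w_row, flat)) for w_row in weights]


def fc_to_kernels(weights, h, w):
    """Reshape each FC weight row (length h*w) into an h x w kernel."""
    return [[w_row[r * w:(r + 1) * w] for r in range(h)] for w_row in weights]


def conv_valid(x, kernels):
    """'Valid' cross-correlation with stride 1 (what CNN libraries call convolution).

    Returns out[k][i][j] for kernel k at output position (i, j).
    """
    kh, kw = len(kernels[0]), len(kernels[0][0])
    rows, cols = len(x) - kh + 1, len(x[0]) - kw + 1
    return [[[sum(kernel[a][b] * x[i + a][j + b]
                  for a in range(kh) for b in range(kw))
              for j in range(cols)]
             for i in range(rows)]
            for kernel in kernels]


weights = [[1, 0, 0, 1],
           [1, 1, 1, 1]]                 # 2 outputs, input of size 2x2
kernels = fc_to_kernels(weights, 2, 2)
x = [[1, 2], [3, 4]]
print(fc_layer(x, weights))              # [5, 10]
print(conv_valid(x, kernels))            # [[[5]], [[10]]]

big = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # larger input: a 2x2 map of FC outputs
print(conv_valid(big, kernels))
```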

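What are receptive fields?

Locations in higher layers correspond to the locations in the image they are path-connected to, which are called their receptive fields.

- The receptive field is the region of the input image that a neuron in a deep layer "sees". Intuitively, because convolution is locally connected and each layer looks at a neighborhood of the layer below, deeper neurons have larger receptive fields than shallow ones.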
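Receptive field size can be computed layer by layer with the standard recurrence r_l = r_{l-1} + (k_l - 1) * j_{l-1} and j_l = j_{l-1} * s_l, where k is the kernel size, s the stride, and j the distance between adjacent units in input pixels. The sketch assumes plain (non-dilated) convolution and pooling layers, ignores padding, and uses a hypothetical function name.

```python
def receptive_field(layers):
    """layers: sequence of (kernel_size, stride) pairs, from input to output.

    Returns (rf, jump): the receptive field of one unit in the last layer and the
    distance between adjacent units of that layer, both in input pixels.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # the kernel spans (kernel - 1) more steps of size `jump`
        jump *= stride              # striding spreads adjacent units further apart
    return rf, jump


# three stacked 3x3 stride-1 convolutions
print(receptive_field([(3, 1)] * 3))    # (7, 1)
# VGG-like: conv3, conv3, pool2, conv3, conv3, pool2
print(receptive_field([(3, 1), (3, 1), (2, 2), (3, 1), (3, 1), (2, 2)]))   # (16, 4)
```

The receptive field grows with every layer, and it grows faster after each strided layer because later kernels step over inputs that are already `jump` pixels apart.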

Stride

The stride is the sampling step. If the convolution window is w and the stride is n (n ≤ w), the inputs of two adjacent neurons overlap by w - n positions.

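How to achieve dense prediction?

Option 1: stitching together coarse outputs (from shifted inputs)

- Shift the input image to obtain coarse outputs (note that the network is now an FCN without fully connected layers, so its outputs correspond to spatial coordinates in the image).

- Stitch these outputs together to obtain a dense prediction.

- If the output is downsampled by a factor of f, the input is shifted x pixels to the right and y pixels down for every 0 ≤ x, y < f, so f*f outputs have to be computed.

- Although this achieves dense prediction without modifying the network, it cannot capture finer information (the paper's explanation, which feels somewhat forced: “but the filters are prohibited from accessing information at a finer scale than their original design”).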
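A toy version of shift-and-stitch. The "network" here is a hypothetical stand-in that simply keeps every f-th pixel (total output stride f), and the shift is implemented by reading the input starting at offset (dy, dx), which is one reading of the shift directions above. Each of the f*f coarse outputs is written back to the positions it covers; with this toy network the stitched result equals the full-resolution input.

```python
def coarse_net(image, f):
    """Hypothetical stand-in for a network with total output stride f: keeps every f-th pixel."""
    return [row[::f] for row in image[::f]]


def shift(image, dy, dx):
    """Read the input starting at offset (dy, dx); positions past the border become 0."""
    h, w = len(image), len(image[0])
    return [[image[i + dy][j + dx] if i + dy < h and j + dx < w else 0
             for j in range(w)] for i in range(h)]


def shift_and_stitch(image, f):
    h, w = len(image), len(image[0])
    dense = [[None] * w for _ in range(h)]
    for dy in range(f):              # f * f shifted copies in total
        for dx in range(f):
            coarse = coarse_net(shift(image, dy, dx), f)
            for i, row in enumerate(coarse):
                for j, value in enumerate(row):
                    y, x = i * f + dy, j * f + dx   # interleave back into the dense grid
                    if y < h and x < w:
                        dense[y][x] = value
    return dense


image = [[r * 4 + c for c in range(4)] for r in range(4)]
print(coarse_net(image, 2))                  # only 2x2 coarse values
print(shift_and_stitch(image, 2) == image)   # True
```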

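Option 2: reduce the stride, e.g. set the sampling step to 1

- This does give dense prediction, but the output differs from shift-and-stitch: the receptive fields of later neurons shrink, so different features are extracted, and computation takes longer.

- Another strategy is to reduce the stride while enlarging the filters of the following layers: the original weights are kept at the positions corresponding to the original inputs and the extra positions are set to 0, so the kernel of every subsequent convolutional layer grows (roughly doubling in size for a stride of 2).

- The aim is to keep the receptive fields unchanged, but this clearly increases the cost of the later layers.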
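The filter-enlarging trick can be checked in 1-D. In this sketch (hypothetical names, no padding), a stride-2 layer is modeled as keeping every second sample; setting that stride to 1 and enlarging the next filter by inserting zeros between its taps (f'[i] = f[i / s] when s divides i, otherwise 0) gives a dense output whose every second sample matches the original coarse output.

```python
def conv1d(x, kernel):
    """'Valid' 1-D cross-correlation with stride 1."""
    n = len(kernel)
    return [sum(kernel[t] * x[i + t] for t in range(n)) for i in range(len(x) - n + 1)]


def rarefy(kernel, s):
    """Enlarge a filter by inserting s - 1 zeros between taps: f'[i] = f[i // s] if s divides i, else 0."""
    size = (len(kernel) - 1) * s + 1
    return [kernel[i // s] if i % s == 0 else 0 for i in range(size)]


x = list(range(1, 11))
kernel = [1, 2, 3]
coarse = conv1d(x[::2], kernel)          # original: stride-2 layer, then the filter
dense = conv1d(x, rarefy(kernel, 2))     # stride set to 1, filter enlarged
print(rarefy(kernel, 2))                 # [1, 0, 2, 0, 3]
print(coarse)                            # [22, 34, 46]
print(dense[::2])                        # [22, 34, 46]
```

The dense output also contains the in-between samples, which is exactly the extra resolution, paid for with a larger filter.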

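Option 3: upsampling with learned parameters

- Upsampling uses simple bilinear interpolation, with the interpolation parameters obtained by learning.

- End-to-end backpropagation learns these parameters quickly, so the approach is fast and effective.

- In effect this learns the parameters of a convolution (interpolation) kernel; if so, is it still bilinear?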
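Learned upsampling of this kind is usually a transposed convolution (deconvolution) with stride f whose kernel starts out as bilinear interpolation. The sketch below, in 1-D with hypothetical names, builds that bilinear kernel and applies a stride-2 transposed convolution; the 2-D kernel is the outer product of the 1-D one. Once backpropagation updates the kernel it is, in general, no longer exactly bilinear: bilinear interpolation is the starting point, not a constraint.

```python
def bilinear_weights_1d(factor):
    """1-D bilinear interpolation kernel for upsampling by an integer factor."""
    size = 2 * factor - factor % 2
    center = factor - 1 if size % 2 == 1 else factor - 0.5
    return [1 - abs(i - center) / factor for i in range(size)]


def bilinear_kernel_2d(factor):
    """The 2-D kernel is the outer product of the 1-D one."""
    w = bilinear_weights_1d(factor)
    return [[a * b for b in w] for a in w]


def transposed_conv1d(x, kernel, stride):
    """1-D transposed convolution: each input value scatters a scaled copy of the kernel."""
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, value in enumerate(x):
        for t, weight in enumerate(kernel):
            out[i * stride + t] += value * weight
    return out


print(bilinear_weights_1d(2))                                  # [0.25, 0.75, 0.75, 0.25]
print(transposed_conv1d([0, 4, 8], bilinear_weights_1d(2), 2))
# [0.0, 0.0, 1.0, 3.0, 5.0, 7.0, 6.0, 2.0]
```

The interior samples 1, 3, 5, 7 lie on the straight line through the inputs 0, 4, 8; the first and last samples are affected by the implicit zeros beyond the border, which is why such outputs are usually cropped.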

Combining what and where

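- Shallow feature maps contain more detailed, local information.

- Deep feature maps contain more semantic, global information.

- The paper implements the fusion by controlling the output stride and the upsampling size: the deeper layer's output stride is twice that of the shallower layer (e.g. 32 vs. 16), so the deeper prediction is upsampled 2× and then fused with the shallower one.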
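The 2× fusion step can be sketched on single-class score maps. As an assumption to keep it short, the 2× upsampling here is nearest-neighbour, whereas FCN uses a learned upsampling layer; the two maps are then fused by element-wise summation. Names are hypothetical.

```python
def upsample2x_nearest(score):
    """Nearest-neighbour 2x upsampling (stand-in for FCN's learned upsampling layer)."""
    out = []
    for row in score:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def fuse(deep_score, shallow_score):
    """Upsample the coarser (deeper) score map 2x and add the finer (shallower) one element-wise."""
    up = upsample2x_nearest(deep_score)
    return [[a + b for a, b in zip(up_row, sh_row)]
            for up_row, sh_row in zip(up, shallow_score)]


deep = [[1, 2]]                      # e.g. stride-32 scores, 1x2
shallow = [[0, 0, 1, 1],             # e.g. stride-16 scores, 2x4
           [1, 1, 0, 0]]
print(fuse(deep, shallow))           # [[1, 1, 3, 3], [2, 2, 2, 2]]
```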

Some tricks

- A minibatch size of 20 images and fixed learning rates

- Dropout

- Found class balancing unnecessary (even though about three quarters of the data is background)

- Augmenting the training data by randomly mirroring and “jittering” the images by translating them up to 32 pixels

OverFeat

Ideas

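Proposes an integrated framework that uses a convnet for recognition, localization and detection: one network (the same framework, a shared feature learning base) serves several tasks, and the tasks reinforce each other.

- Training a convolutional network to simultaneously classify, locate and detect objects in images can boost the classification accuracy and the detection and localization accuracy of all tasks.

Explains how a convnet can be used for localization and detection:

- Multi-scale sliding windows are used to predict object boundaries.

- Bounding boxes are accumulated to increase detection confidence; this also avoids training on background samples, which improves accuracy.

Improving classification accuracy (the main difficulty being large intra-class variation):

- Sliding windows at different scales

- Avoiding view windows that contain only part of an object (which leads to poor localization and detection)

- Training the network to produce not only a class distribution for each sliding window, but also the location and size of the object's bounding box relative to a view window that contains part of it

- Accumulating the per-category confidence over these different locations and sizes (accumulate the evidence for each category at each location and size)

How segmentation is implemented

- Compute the label of the center pixel of each view window.

- Advantage: semantic boundaries between objects can be determined (the objects do not need to have clearly delineated boundaries).

- Disadvantage: it requires dense pixel-level estimation.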

Model Design and Training

- Downsampling: each image is downsampled so that its smallest dimension is 256 pixels

- Extract 5 random crops (and their horizontal flips) of size 221*221 pixels

- Present these to the network in mini-batches of size 128

- Initialize the network randomly from N(0, 0.01); the learning rate starts at 0.05 and is successively decreased by a factor of 0.5 after (30, 50, 60, 70, 80) epochs

- Dropout with a rate of 0.5 is employed on the fully connected layers in the classifier

- Rectification (ReLU) non-linearities

- No contrast normalization is used

Pooling regions are non-overlapping
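The learning-rate schedule above can be written as a step function. Treating "after N epochs" as "from epoch N onward" (0-indexed) is an assumption, and the function name is hypothetical.

```python
def overfeat_lr(epoch, base_lr=0.05, milestones=(30, 50, 60, 70, 80), factor=0.5):
    """Step schedule: multiply base_lr by `factor` once for every milestone already reached."""
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * factor ** drops


for epoch in (0, 29, 30, 50, 85):
    print(epoch, overfeat_lr(epoch))
```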

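While the sliding window approach may be computationally prohibitive for certain types of model, it is inherently efficient in the case of ConvNets. Why?

- Compared with cutting the image into overlapping patches and feeding each patch in separately, feeding the whole image as input avoids recomputing the overlapping regions.

- In fact, sliding the network over the whole image gives the same result as processing the patches one by one; the two are equivalent.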
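A rough count illustrates the saving. This 1-D sketch (stride 1 everywhere, hypothetical names) counts how many first-layer convolution outputs are computed when a classifier that needs `window` first-layer outputs is evaluated at every position: once with overlapping input patches, and once on the whole input, where each output is computed a single time and shared by all windows that use it.

```python
def first_layer_outputs_patchwise(length, kernel, window):
    """Each classifier position gets its own input patch and recomputes its layer-1 outputs."""
    patch = window + kernel - 1          # input pixels needed for `window` layer-1 outputs
    num_positions = length - patch + 1   # classifier positions at stride 1
    return num_positions * window


def first_layer_outputs_whole(length, kernel):
    """Whole-input pass: every layer-1 output is computed exactly once and shared."""
    return length - kernel + 1


print(first_layer_outputs_patchwise(100, 3, 10))   # 890
print(first_layer_outputs_whole(100, 3))           # 98
```

Both approaches feed the classifier the same values at every position; only the amount of repeated work differs.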

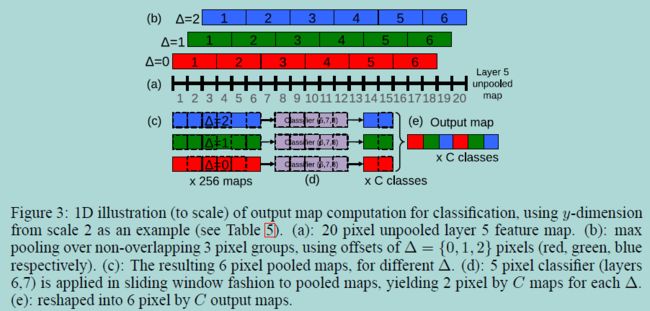

Multi-Scale Classification (multi-view voting: at each location and at multiple scales)

Apply the last subsampling operation at every offset – resolution augmentation

- For the rightmost (last) pooling layer (subsampling ratio 3), pooling is performed at every offset, i.e. (0, 1, 2), giving one pooled feature map per offset; the classifier is applied to each of these maps and the outputs are interleaved.

For a single image, at a given scale, we start with the unpooled layer 5 feature maps

- Each unpooled map undergoes a 3*3 max pooling operation (non-overlapping regions), repeated 3*3 times for (Δx, Δy) pixel offsets of {0, 1, 2}, producing a set of pooled feature maps

- The classifier (layers 6, 7, 8) has a fixed input size of 5*5 and produces a C-dimensional output vector for each location within the pooled maps. The classifier is applied in sliding-window fashion to the pooled maps

- The output maps for different offset combinations are reshaped into a single 3D output map
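The offset pooling and interleaving can be illustrated in 1-D. In this sketch the "classifier" is a hypothetical stand-in (max over a window of 5 pooled values), pooling is non-overlapping with size 3, and the names are illustrative only. Each offset yields its own pooled map, the classifier slides over each, and the outputs are interleaved so that output i of offset `off` lands at position 3 * i + off.

```python
def offset_max_pool_1d(x, pool=3):
    """Non-overlapping max-pooling of x, repeated for every start offset in range(pool).

    Returns {offset: pooled list}.
    """
    pooled = {}
    for offset in range(pool):
        shifted = x[offset:]
        n = len(shifted) // pool
        pooled[offset] = [max(shifted[i * pool:(i + 1) * pool]) for i in range(n)]
    return pooled


def sliding_classifier(pooled, window, classifier):
    """Apply `classifier` to every window of consecutive pooled values (stride 1)."""
    return [classifier(pooled[i:i + window]) for i in range(len(pooled) - window + 1)]


def interleave(outputs_by_offset):
    """Merge per-offset outputs: output i of offset off goes to position i * pool + off."""
    pool = len(outputs_by_offset)
    n = min(len(v) for v in outputs_by_offset.values())
    return [outputs_by_offset[off][i] for i in range(n) for off in range(pool)]


layer5 = list(range(20))                     # toy unpooled 1-D feature map
pooled = offset_max_pool_1d(layer5, pool=3)
outputs = {off: sliding_classifier(p, 5, max) for off, p in pooled.items()}
print(outputs)               # {0: [14, 17], 1: [15, 18], 2: [16, 19]}
print(interleave(outputs))   # [14, 15, 16, 17, 18, 19]
```

Each offset alone gives outputs 3 pixels apart; after interleaving, adjacent outputs are only 1 pixel apart, which is the resolution gain the offsets are meant to provide.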

Classification

- Taking the spatial max for each class, at each scale and flip

- Averaging the resulting C-dimensional vectors from different scales and flips

- Taking the top-n elements from the mean class vector (depending on the model version)
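The three classification steps can be sketched as follows. Each score map is assumed to be a list of spatial locations, each holding C class scores, with one map per (scale, flip) view; names are hypothetical.

```python
def classify(score_maps, n=5):
    """score_maps: one map per (scale, flip) view; each map is a list of spatial
    locations, each location a list of C class scores.

    Returns (top-n class ids, mean class vector).
    """
    num_classes = len(score_maps[0][0])
    # 1. spatial max per class, separately for each view
    per_view = [[max(location[c] for location in smap) for c in range(num_classes)]
                for smap in score_maps]
    # 2. average the C-dimensional vectors over views
    mean = [sum(v[c] for v in per_view) / len(per_view) for c in range(num_classes)]
    # 3. top-n classes of the mean vector
    top = sorted(range(num_classes), key=lambda c: mean[c], reverse=True)[:n]
    return top, mean


views = [
    [[1, 7, 2], [6, 3, 1]],   # view A: 2 locations, 3 classes
    [[2, 2, 9], [1, 5, 4]],   # view B
]
print(classify(views, n=2))   # ([1, 2], [4.0, 6.0, 5.5])
```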

Localization

- Combine the regression predictions, along with the classification results at each location

- Since these share the same feature extraction layers, only the final regression layers need to be recomputed after computing the classification network

- The whole image is the input and the classification layers are applied as a sliding window, giving a score for each object class at every location.

References:

SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation

Do Convnets Learn Correspondence?

Fully Convolutional Networks for Semantic Segmentation

OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks