- 如何将Docker容器打包并在其他服务器上运行

IT小辉同学

技巧性工具栏分布式云部署搜索引擎docker服务器容器

如何将Docker容器打包并在其他服务器上运行我会幻想很多次我们的相遇,你穿着合身的T恤,一个素色的外套,搭配一条蓝色的牛仔裤,干净的像那天空中的云朵,而我,还是一个的傻傻的少年,我们相识而笑,默默不语,如此甚好!Docker容器使得应用程序的部署和管理变得更加简单和高效。有时,我们可能需要将一个运行中的Docker容器打包,并在其他服务器上运行。本文将详细介绍如何实现这一过程。1.提交容器为镜像

- python读取zip包内文件_Python模块学习:zipfile zip文件操作

weixin_40001634

python读取zip包内文件

最近在写一个网络客户端下载程序,用于下载服务器上的数据。有些数据(如文本,office文档)如果直接传输的话,将会增加通信的数据量,使下载时间变长。服务器在传输这些数据之前先对其进行压缩,客户端接收到数据之后进行解压,这样可以减小网通传输数据的通信量,缩短下载的时间,从而增加客户体验。以前用C#做类似应用程序的时候,我会用SharpZipLib这个开源组件,现在用Python做类似的工作,只要使用

- Ubuntu之12.04常用快捷键——记住这些你就是高手啦!

码莎拉蒂 .

Linux/Unix积累ubuntu快捷键

桌面ALT+F1:聚焦到桌面左侧任务导航栏,可按上下键导航。ALT+F2:运行命令ALT+F4:关闭窗口ALT+TAB:切换程序窗口ALT+空格:打开窗口菜单PRINT:桌面截图SUPER:打开Dash面板,可搜索或浏览项目,默认有个搜索框,按“下”方向键进入浏览区域(SUPER键指Win键或苹果电脑的command键)在Dash面板中按CTRL+TAB:切换到下一个子面板(可搜索不同类型项目,如

- Java JVM性能优化与调优

卖血买老婆

Java专栏javajvm性能优化

优化Java应用的性能通常需要深入理解JVM(JavaVirtualMachine)的工作原理和运行机制,因为JVM直接决定了Java程序的运行时表现。以下是JVM性能优化与调优的要点和详细指导,涵盖常见问题、调优工具及策略。一、常见性能问题内存相关问题堆内存不足(OutOfMemoryError:Javaheapspace)元空间(Metaspace)不足频繁的垃圾回收导致长时间停顿内存泄漏(对

- C++(23):lambda可以省略()

风静如云

C/C++c++

C++越来越多的使用了lambda,C++23也进一步的放宽了对lambda的限制,这一次,如果lambda没有参数列表,那么可以直接省略掉():#includeusingnamespacestd;voidfunc(){autof=[]{cout<<"inf"<<endl;};f();}intmain(){func();return0;}允许程序输出:inf

- 网站小程序app怎么查有没有备案?

wayuncn

小程序

网站小程序app怎么查有没有备案?只需要官方一个网址就可以,工信部备案查询官网地址有且只有一个,百度搜索"ICP备案查询"找到官方gov.cn网站即可查询!注:网站小程序app备案查询,可通过输入单位名称或域名或备案号查询,请勿使用子域名或者带http://www等字符的网址查询,网站,APP,小程序,快应用备案也可以此查询。

- 2分钟学会编写maven插件

聪明马的博客

Javamavenjavaspring

什么是Maven插件Maven是Java项目中常用的构建工具,可以自动化构建、测试、打包和发布Java应用程序。Maven插件是Maven的一项重要功能,它可以在Maven构建过程中扩展Maven的功能,实现自定义的构建逻辑。Maven插件可以提供很多不同的功能,例如:生成代码、打包文件、部署应用程序等。插件通常是在Maven构建生命周期中的某个阶段执行,例如:编译、测试、打包、安装和部署。Mav

- Git Submodule用的多吗?

Eleven

git全栈工程师

接上篇文章,再来一起学习下gitsubmodule。我之前在项目中遇到过这种情况:多团队开发微信小程序,一个主包有很多分包的,做法是在主包里用一个脚本文件管理各分包的情况。主包在编译前,需执行一下这个脚本文件,已便于update各分包。GitSubmodule是Git提供的一种管理项目依赖的方式,允许你将一个Git仓库作为另一个Git仓库的子目录。这种方式非常适合管理项目依赖的第三方库或模块化开发

- Maven 与 Docker 集成:构建 Docker 镜像并与容器化应用集成

drebander

dockermavendocker

在现代软件开发中,容器化已成为一种流行的部署和运行应用程序的方式。通过将应用程序及其所有依赖打包成Docker镜像,开发者可以确保应用能够在不同的环境中一致地运行。而Maven是广泛使用的构建工具,能够帮助管理项目的构建、依赖和发布。本文将介绍如何使用Maven构建Docker镜像,并将其与容器化应用集成,以便于自动化部署和管理。1.Maven与Docker集成概述Maven可以通过插件来构建Do

- 【系统架构设计师】系统性能之性能指标

王佑辉

系统架构设计师系统架构

目录1.说明2.计算机的性能指标3.路由器的性能指标4.交换机的性能指标5.网络的性能指标6.操作系统的性能指标7.数据库管理系统的性能指标8.Web服务器的性能指标9.例题9.1例题11.说明1.性能指标是软、硬件的性能指标的集成。2.在硬件中,包括计算机、各种通信交换设备、各类网络设备等;在软件中,包括操作系统、数据库、网络协议以及应用程序等。2.计算机的性能指标1.评价计算机的主要性能指标有

- Node.js 中的 Event 模块详解

小灰灰学编程

Node.jsnode.js前端

Node.js中的Event模块是实现事件驱动编程的核心模块。它基于观察者模式,允许对象(称为“事件发射器”)发布事件,而其他对象(称为“事件监听器”)可以订阅并响应这些事件。这种模式非常适合处理异步操作和事件驱动的场景。1.概念1.1事件驱动编程事件驱动编程是一种编程范式,程序的执行流程由事件(如用户输入、文件读取完成、网络请求响应等)决定。Node.js的核心设计理念就是基于事件驱动的非阻塞I

- Spring IoC容器的两大功能

Mr_Zerone

SpringFrameworkspringjava后端

1.控制反转(1)没有控制反转的情况下常规思路下,也就是在没有控制反转的情况下,程序员需要通过编写应用程序来创建(new关键字)和使用对象。(2)存在控制反转的情况下控制反转主要是针对对象的创建和调用控制而言的。应用程序需要使用一个对象时,不再是由程序员写的应用程序通过new关键字来直接创建该对象,而是由SpringIoC容器来创建和管理,即创建和管理对象的控制权由应用程序转移到IoC容器。我们的

- 事件驱动-事件驱动应用于软件开发

海水天涯

事件驱动驱动开发

一、前言1.1软件开发概述软件开发是一个涉及计算机科学、工程学、设计和项目管理等领域的广泛概念。它指的是创建、部署和维护软件应用程序或系统的整个过程。这包括从最初的构思和需求分析,到设计、编码、测试、部署,以及后续的维护和更新。在软件开发过程中,通常会遵循一定的方法论或开发模型,如瀑布模型、敏捷开发等,以确保项目能按时、按质完成。软件开发工具如集成开发环境(IDE)、版本控制系统等,也在这个过程中

- 深入理解Spring Boot中的事件驱动架构

省赚客APP开发者@聚娃科技

springboot架构java

深入理解SpringBoot中的事件驱动架构大家好,我是微赚淘客系统3.0的小编,也是冬天不穿秋裤,天冷也要风度的程序猿!1.引言事件驱动架构在现代软件开发中越来越受欢迎,它能够提高系统的松耦合性和可扩展性。SpringBoot作为一个流行的Java框架,提供了强大的事件驱动支持。本文将深入探讨SpringBoot中事件驱动架构的实现原理和最佳实践。2.SpringFramework中的事件模型在

- RT-Thread I2C 驱动框架学习笔记

DgHai

RT-Threadmcu单片机

RT-ThreadI2C驱动框架(5.1.0)II2C驱动包括两大部分,I2C驱动总线驱动和I2C设备驱动。I2C总线驱动负责控制I2C总线的硬件,包括发送和接收数据的时序控制,以及处理总线冲突等。它与嵌入式系统的硬件层交互,实现对I2C总线的底层操作,使得应用程序可以通过I2C总线与外部设备进行通信。I2C设备驱动负责管理和控制连接在I2C总线上的具体外部设备。它与I2C总线驱动和嵌入式系统的驱

- XML的介绍及使用DOM,DOM4J解析xml文件

late summer182

xmljava

1XML简介XML(可扩展标记语言,ExtensibleMarkupLanguage)是一种用于定义文档结构和数据存储的标记语言。它主要用于在不同的系统之间传输和存储数据。作用:数据交互配置应用程序和网站Ajax基石特点XML与操作系统、编程语言的开发平台无关实现不同系统之间的数据交换2XML文档结构王珊.NET高级编程包含C#框架和网络编程等李明明XML基础编程包含XML基础概念和基本作用2.1

- 【微信小程序】3D效果轮播图

cdgogo

小程序微信小程序

效果图:

- 小程序类毕业设计选题题目推荐 (29)

初尘屿风

毕业设计后端小程序课程设计springboot微信后端学习

基于微信小程序的设备故障报修管理系统设计与实现,SpringBoot+Vue+毕业论文基于微信小程序的设备故障报修管理系统设计与实现,SSM+Vue+毕业论文基于微信小程序的电影院购票小程序系统,SpringBoot+Vue+毕业论文+指导搭建视频基于微信小程序的宿舍报修管理系统设计与实现,SpringBoot(15500字)+Vue+毕业论文+指导搭建视频基于微信小程序的电影院订票选座系统的设计

- C语言流程控制学习笔记

前端熊猫

C语言c语言学习笔记

1.顺序结构顺序结构是程序中最基本的控制结构,代码按从上到下的顺序依次执行。大多数C语言程序都是由顺序结构组成的。2.选择结构选择结构根据条件的真假来决定执行哪一段代码。在C语言中,选择结构主要有以下几种:2.1if语句if语句用于根据条件的真假来执行相应的代码块。if(condition){//当条件为真时执行的代码}2.2if-else语句if-else语句用于在条件为真时执行一段代码,为假时

- C++ 多线程

lly202406

开发语言

C++多线程引言在计算机科学中,多线程是一种常用的技术,它允许一个程序同时执行多个任务。C++作为一门强大的编程语言,提供了多种多线程编程的机制。本文将详细介绍C++多线程编程的相关知识,包括多线程的概念、线程的创建与同步、互斥锁的使用等。一、多线程的概念1.1什么是多线程?多线程指的是在同一程序中,可以同时运行多个线程,每个线程都是程序的一个执行流。这些线程可以并行执行,从而提高程序的执行效率。

- 消息队列MQ技术的原理和IBM MQ的基本操作

Chelseady

pythonpython

消息队列技术是分布式应用间交换信息的一种技术。消息队列可驻留在内存或磁盘上,队列存储消息直到它们被应用程序读走。通过消息队列,应用程序可独立地执行--它们不需要知道彼此的位置、或在继续执行前不需要等待接收程序接收此消息。消息中间件概述消息队列技术是分布式应用间交换信息的一种技术。消息队列可驻留在内存或磁盘上,队列存储消息直到它们被应用程序读走。通过消息队列,应用程序可独立地执行--它们不需要知道彼

- 微信小程序之自定义轮播图实例 —— 微信小程序实战系列(3)

2401_84910072

程序员微信小程序小程序

由于微信小程序,整个项目编译后的大小不能超过1M查看做轮播图功能的一张图片大小都已经有100+k了那么我们可以把图片放在服务器上,发送请求来获取。index.wxml:这里使用小程序提供的组件autoplay:自动播放interval:自动切换时间duration:滑动动画的时长current:当前所在的页面bindchange:current改变时会触发change事件由于组件提供的指示点样式比

- 前端基础入门:HTML、CSS 和 JavaScript

阿绵

前端前端htmlcssjs

在现代网页开发中,前端技术扮演着至关重要的角色。无论是个人网站、企业官网,还是复杂的Web应用程序,前端开发的基础技术HTML、CSS和JavaScript都是每个开发者必须掌握的核心技能。本文将详细介绍这三者的基本概念及其应用一、HTML——网页的骨架HTML(HyperTextMarkupLanguage)是构建网页的基础语言。它是网页的结构和内容的标记语言,决定了网页上的文本、图像、表单等元

- Android 10 创建不了文件夹

燕满天

Android10改变了文件的存储方式可以在Androidmainfest里面的application添加android:requestLegacyExternalStorage="true"使用原来的存储方式或者,不要自己创文件夹了AndroidQ为每个应用程序提供了一个独立的在外部存储设备的存储沙箱,没有其他应用可以直接访问您应用的沙盒文件。由于文件是私有的,因此访问这些文件不再需要任何权限。

- JVM内存模型分区

Lionel·

java基础javajvm

JVM内存模型划分根据JVM规范,JVM内存共分为Java虚拟机栈,本地方法栈,堆,方法区,程序计数器,五个部分。1.Java堆(线程共享)Java堆是被所有线程共享的一块内存区域,在虚拟机启动时创建。此内存区域的唯一目的就是存放对象实例,几乎所有的对象实例以及数组都要在堆上分配。Java堆是垃圾收集器管理的主要区域,因此很多时候也被称做“GC堆”。从内存回收的角度看,由于现在收集器基本都采用分代

- 使用yii自带发邮件功能发送邮件

原克技术

yiiphp

邮件组件的配置取决于您选择的扩展名。通常,您的应用程序配置应如下所示:在配置文件中配置dirname(dirname(__DIR__)).'/vendor','timeZone'=>'Asia/Chongqing','components'=>['db'=>['class'=>'yii\db\Connection','dsn'=>'mysql:host=localhost;dbname=root'

- cmake linux模板 多目录_【转载】CMake 简介和 CMake 模板

weixin_39790738

cmakelinux模板多目录

如果你用Linux操作系统,使用cmake会简单很多,可以参考一个很好的教程:CMake入门实战|HaHack。如果你用Linux操作系统,而且只是运行一些小程序,可以看看我的另一篇博客:你就编译一个cpp,用CMake还不如用pkg-config呢。但如果你用Windows,很大的可能你会使用图形界面的CMake(cmake-gui.exe)和VisualStudio。本文先简单介绍使用CMak

- 运维分级发布_运维必备制度:故障分级和处罚规范

weixin_39599046

运维分级发布

正文互联网产品提供7*24小时服务,而因人为操作、程序BUG等原因导致服务不可用是影响服务持续运行的重要原因,为了提高各业务产品的运维和运营质量,规范各业务线的服务、故障响应,拟定和发布“故障分级和处罚规范”是非常必要的。故障分级标准运营故障中,对非不可抗力所造成的故障归类为“故障”,对于故障将追究故障的分级,故障责任人,及故障处理结果。下面将就各类故障级别进行定义说明,由于故障可能在多方面体现影

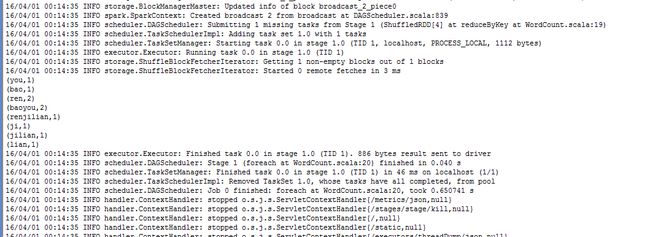

- 如果MLlib 中没有所需要的模型,如何使用 Spark 进行分布式训练?

是纯一呀

WSLDockerAIspark分布式mllib

如果MLlib中没有你所需要的模型,并且不打算结合更强大的框架(如TensorFlowOnSpark或Horovod),仍然可以使用Spark进行分布式训练,但需要手动处理训练任务的分配、数据准备、模型训练、结果合并和模型更新等过程。模型训练阶段将模型的训练任务分配到Spark集群的各个节点。数据并行:每个节点会处理数据的不同部分,并计算该部分的梯度或模型参数。自定义算法:如果使用的是自定义算法(

- 如何用 python 获取实时的股票数据?_python efinance(2)

元点三

2024年程序员学习pythonjavalinux

先自我介绍一下,小编浙江大学毕业,去过华为、字节跳动等大厂,目前阿里P7深知大多数程序员,想要提升技能,往往是自己摸索成长,但自己不成体系的自学效果低效又漫长,而且极易碰到天花板技术停滞不前!因此收集整理了一份《2024年最新Python全套学习资料》,初衷也很简单,就是希望能够帮助到想自学提升又不知道该从何学起的朋友。既有适合小白学习的零基础资料,也有适合3年以上经验的小伙伴深入学习提升的进阶课

- 多线程编程之存钱与取钱

周凡杨

javathread多线程存钱取钱

生活费问题是这样的:学生每月都需要生活费,家长一次预存一段时间的生活费,家长和学生使用统一的一个帐号,在学生每次取帐号中一部分钱,直到帐号中没钱时 通知家长存钱,而家长看到帐户还有钱则不存钱,直到帐户没钱时才存钱。

问题分析:首先问题中有三个实体,学生、家长、银行账户,所以设计程序时就要设计三个类。其中银行账户只有一个,学生和家长操作的是同一个银行账户,学生的行为是

- java中数组与List相互转换的方法

征客丶

JavaScriptjavajsonp

1.List转换成为数组。(这里的List是实体是ArrayList)

调用ArrayList的toArray方法。

toArray

public T[] toArray(T[] a)返回一个按照正确的顺序包含此列表中所有元素的数组;返回数组的运行时类型就是指定数组的运行时类型。如果列表能放入指定的数组,则返回放入此列表元素的数组。否则,将根据指定数组的运行时类型和此列表的大小分

- Shell 流程控制

daizj

流程控制if elsewhilecaseshell

Shell 流程控制

和Java、PHP等语言不一样,sh的流程控制不可为空,如(以下为PHP流程控制写法):

<?php

if(isset($_GET["q"])){

search(q);}else{// 不做任何事情}

在sh/bash里可不能这么写,如果else分支没有语句执行,就不要写这个else,就像这样 if else if

if 语句语

- Linux服务器新手操作之二

周凡杨

Linux 简单 操作

1.利用关键字搜寻Man Pages man -k keyword 其中-k 是选项,keyword是要搜寻的关键字 如果现在想使用whoami命令,但是只记住了前3个字符who,就可以使用 man -k who来搜寻关键字who的man命令 [haself@HA5-DZ26 ~]$ man -k

- socket聊天室之服务器搭建

朱辉辉33

socket

因为我们做的是聊天室,所以会有多个客户端,每个客户端我们用一个线程去实现,通过搭建一个服务器来实现从每个客户端来读取信息和发送信息。

我们先写客户端的线程。

public class ChatSocket extends Thread{

Socket socket;

public ChatSocket(Socket socket){

this.sock

- 利用finereport建设保险公司决策分析系统的思路和方法

老A不折腾

finereport金融保险分析系统报表系统项目开发

决策分析系统呈现的是数据页面,也就是俗称的报表,报表与报表间、数据与数据间都按照一定的逻辑设定,是业务人员查看、分析数据的平台,更是辅助领导们运营决策的平台。底层数据决定上层分析,所以建设决策分析系统一般包括数据层处理(数据仓库建设)。

项目背景介绍

通常,保险公司信息化程度很高,基本上都有业务处理系统(像集团业务处理系统、老业务处理系统、个人代理人系统等)、数据服务系统(通过

- 始终要页面在ifream的最顶层

林鹤霄

index.jsp中有ifream,但是session消失后要让login.jsp始终显示到ifream的最顶层。。。始终没搞定,后来反复琢磨之后,得到了解决办法,在这儿给大家分享下。。

index.jsp--->主要是加了颜色的那一句

<html>

<iframe name="top" ></iframe>

<ifram

- MySQL binlog恢复数据

aigo

mysql

1,先确保my.ini已经配置了binlog:

# binlog

log_bin = D:/mysql-5.6.21-winx64/log/binlog/mysql-bin.log

log_bin_index = D:/mysql-5.6.21-winx64/log/binlog/mysql-bin.index

log_error = D:/mysql-5.6.21-win

- OCX打成CBA包并实现自动安装与自动升级

alxw4616

ocxcab

近来手上有个项目,需要使用ocx控件

(ocx是什么?

http://baike.baidu.com/view/393671.htm)

在生产过程中我遇到了如下问题.

1. 如何让 ocx 自动安装?

a) 如何签名?

b) 如何打包?

c) 如何安装到指定目录?

2.

- Hashmap队列和PriorityQueue队列的应用

百合不是茶

Hashmap队列PriorityQueue队列

HashMap队列已经是学过了的,但是最近在用的时候不是很熟悉,刚刚重新看以一次,

HashMap是K,v键 ,值

put()添加元素

//下面试HashMap去掉重复的

package com.hashMapandPriorityQueue;

import java.util.H

- JDK1.5 returnvalue实例

bijian1013

javathreadjava多线程returnvalue

Callable接口:

返回结果并且可能抛出异常的任务。实现者定义了一个不带任何参数的叫做 call 的方法。

Callable 接口类似于 Runnable,两者都是为那些其实例可能被另一个线程执行的类设计的。但是 Runnable 不会返回结果,并且无法抛出经过检查的异常。

ExecutorService接口方

- angularjs指令中动态编译的方法(适用于有异步请求的情况) 内嵌指令无效

bijian1013

JavaScriptAngularJS

在directive的link中有一个$http请求,当请求完成后根据返回的值动态做element.append('......');这个操作,能显示没问题,可问题是我动态组的HTML里面有ng-click,发现显示出来的内容根本不执行ng-click绑定的方法!

- 【Java范型二】Java范型详解之extend限定范型参数的类型

bit1129

extend

在第一篇中,定义范型类时,使用如下的方式:

public class Generics<M, S, N> {

//M,S,N是范型参数

}

这种方式定义的范型类有两个基本的问题:

1. 范型参数定义的实例字段,如private M m = null;由于M的类型在运行时才能确定,那么我们在类的方法中,无法使用m,这跟定义pri

- 【HBase十三】HBase知识点总结

bit1129

hbase

1. 数据从MemStore flush到磁盘的触发条件有哪些?

a.显式调用flush,比如flush 'mytable'

b.MemStore中的数据容量超过flush的指定容量,hbase.hregion.memstore.flush.size,默认值是64M 2. Region的构成是怎么样?

1个Region由若干个Store组成

- 服务器被DDOS攻击防御的SHELL脚本

ronin47

mkdir /root/bin

vi /root/bin/dropip.sh

#!/bin/bash/bin/netstat -na|grep ESTABLISHED|awk ‘{print $5}’|awk -F:‘{print $1}’|sort|uniq -c|sort -rn|head -10|grep -v -E ’192.168|127.0′|awk ‘{if($2!=null&a

- java程序员生存手册-craps 游戏-一个简单的游戏

bylijinnan

java

import java.util.Random;

public class CrapsGame {

/**

*

*一个简单的赌*博游戏,游戏规则如下:

*玩家掷两个骰子,点数为1到6,如果第一次点数和为7或11,则玩家胜,

*如果点数和为2、3或12,则玩家输,

*如果和为其它点数,则记录第一次的点数和,然后继续掷骰,直至点数和等于第一次掷出的点

- TOMCAT启动提示NB: JAVA_HOME should point to a JDK not a JRE解决

开窍的石头

JAVA_HOME

当tomcat是解压的时候,用eclipse启动正常,点击startup.bat的时候启动报错;

报错如下:

The JAVA_HOME environment variable is not defined correctly

This environment variable is needed to run this program

NB: JAVA_HOME shou

- [操作系统内核]操作系统与互联网

comsci

操作系统

我首先申明:我这里所说的问题并不是针对哪个厂商的,仅仅是描述我对操作系统技术的一些看法

操作系统是一种与硬件层关系非常密切的系统软件,按理说,这种系统软件应该是由设计CPU和硬件板卡的厂商开发的,和软件公司没有直接的关系,也就是说,操作系统应该由做硬件的厂商来设计和开发

- 富文本框ckeditor_4.4.7 文本框的简单使用 支持IE11

cuityang

富文本框

<html xmlns="http://www.w3.org/1999/xhtml">

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8" />

<title>知识库内容编辑</tit

- Property null not found

darrenzhu

datagridFlexAdvancedpropery null

When you got error message like "Property null not found ***", try to fix it by the following way:

1)if you are using AdvancedDatagrid, make sure you only update the data in the data prov

- MySQl数据库字符串替换函数使用

dcj3sjt126com

mysql函数替换

需求:需要将数据表中一个字段的值里面的所有的 . 替换成 _

原来的数据是 site.title site.keywords ....

替换后要为 site_title site_keywords

使用的SQL语句如下:

updat

- mac上终端起动MySQL的方法

dcj3sjt126com

mysqlmac

首先去官网下载: http://www.mysql.com/downloads/

我下载了5.6.11的dmg然后安装,安装完成之后..如果要用终端去玩SQL.那么一开始要输入很长的:/usr/local/mysql/bin/mysql

这不方便啊,好想像windows下的cmd里面一样输入mysql -uroot -p1这样...上网查了下..可以实现滴.

打开终端,输入:

1

- Gson使用一(Gson)

eksliang

jsongson

转载请出自出处:http://eksliang.iteye.com/blog/2175401 一.概述

从结构上看Json,所有的数据(data)最终都可以分解成三种类型:

第一种类型是标量(scalar),也就是一个单独的字符串(string)或数字(numbers),比如"ickes"这个字符串。

第二种类型是序列(sequence),又叫做数组(array)

- android点滴4

gundumw100

android

Android 47个小知识

http://www.open-open.com/lib/view/open1422676091314.html

Android实用代码七段(一)

http://www.cnblogs.com/over140/archive/2012/09/26/2611999.html

http://www.cnblogs.com/over140/arch

- JavaWeb之JSP基本语法

ihuning

javaweb

目录

JSP模版元素

JSP表达式

JSP脚本片断

EL表达式

JSP注释

特殊字符序列的转义处理

如何查找JSP页面中的错误

JSP模版元素

JSP页面中的静态HTML内容称之为JSP模版元素,在静态的HTML内容之中可以嵌套JSP

- App Extension编程指南(iOS8/OS X v10.10)中文版

啸笑天

ext

当iOS 8.0和OS X v10.10发布后,一个全新的概念出现在我们眼前,那就是应用扩展。顾名思义,应用扩展允许开发者扩展应用的自定义功能和内容,能够让用户在使用其他app时使用该项功能。你可以开发一个应用扩展来执行某些特定的任务,用户使用该扩展后就可以在多个上下文环境中执行该任务。比如说,你提供了一个能让用户把内容分

- SQLServer实现无限级树结构

macroli

oraclesqlSQL Server

表结构如下:

数据库id path titlesort 排序 1 0 首页 0 2 0,1 新闻 1 3 0,2 JAVA 2 4 0,3 JSP 3 5 0,2,3 业界动态 2 6 0,2,3 国内新闻 1

创建一个存储过程来实现,如果要在页面上使用可以设置一个返回变量将至传过去

create procedure test

as

begin

decla

- Css居中div,Css居中img,Css居中文本,Css垂直居中div

qiaolevip

众观千象学习永无止境每天进步一点点css

/**********Css居中Div**********/

div.center {

width: 100px;

margin: 0 auto;

}

/**********Css居中img**********/

img.center {

display: block;

margin-left: auto;

margin-right: auto;

}

- Oracle 常用操作(实用)

吃猫的鱼

oracle

SQL>select text from all_source where owner=user and name=upper('&plsql_name');

SQL>select * from user_ind_columns where index_name=upper('&index_name'); 将表记录恢复到指定时间段以前

- iOS中使用RSA对数据进行加密解密

witcheryne

iosrsaiPhoneobjective c

RSA算法是一种非对称加密算法,常被用于加密数据传输.如果配合上数字摘要算法, 也可以用于文件签名.

本文将讨论如何在iOS中使用RSA传输加密数据. 本文环境

mac os

openssl-1.0.1j, openssl需要使用1.x版本, 推荐使用[homebrew](http://brew.sh/)安装.

Java 8

RSA基本原理

RS