Linux shedule 的发展历史.

慢慢来吧~~

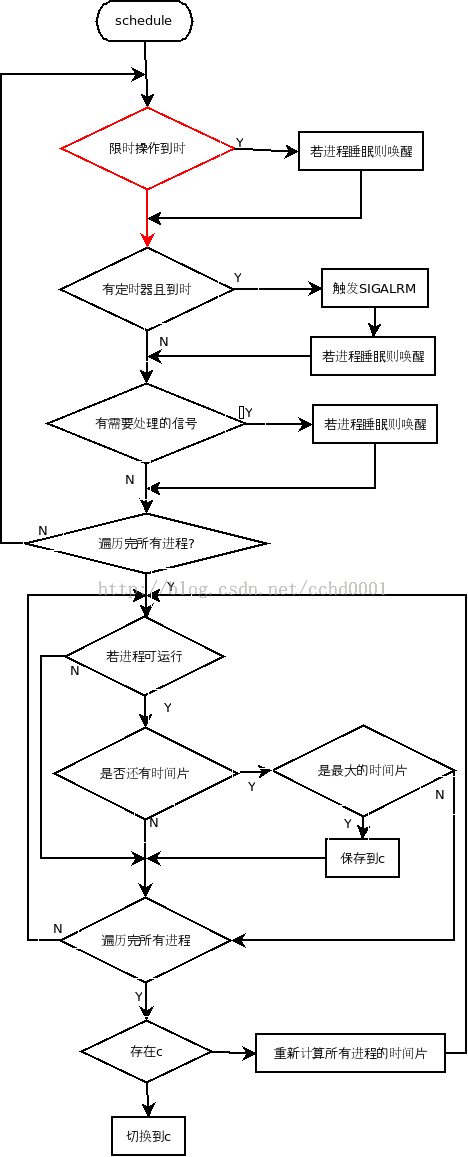

Linux V0.11

支持定时器和信号

流程图:

源码:

void schedule(void)

{

int i,next,c;

struct task_struct ** p;

/* check alarm, wake up any interruptible tasks that have got a signal */

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p) {

if ((*p)->alarm && (*p)->alarm < jiffies) {

(*p)->signal |= (1<<(SIGALRM-1));

(*p)->alarm = 0;

}

if (((*p)->signal & ~(_BLOCKABLE & (*p)->blocked)) &&

(*p)->state==TASK_INTERRUPTIBLE)

(*p)->state=TASK_RUNNING;

}

/* this is the scheduler proper: */

while (1) {

c = -1;

next = 0;

i = NR_TASKS;

p = &task[NR_TASKS];

while (--i) {

if (!*--p)

continue;

if ((*p)->state == TASK_RUNNING && (*p)->counter > c)

c = (*p)->counter, next = i;

}

if (c) break;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p)

(*p)->counter = ((*p)->counter >> 1) +

(*p)->priority;

}

switch_to(next);

}

重新计算时间片:

time = oldtime / 2 + priority

相关接口:

1. pause , 暂时放弃CPU . 可打断.

int sys_pause(void)

{

current->state = TASK_INTERRUPTIBLE;

schedule();

return 0;

}

2. sleep_on 睡眠等待.不可打断. 比如等到某种资源的时候

void sleep_on(struct task_struct **p)

{

struct task_struct *tmp;

if (!p)

return;

if (current == &(init_task.task))

panic("task[0] trying to sleep");

tmp = *p;

*p = current;

current->state = TASK_UNINTERRUPTIBLE;

schedule();

if (tmp)

tmp->state=0;

}3 . 可打断睡眠 , 多用于多进程等待同一个资源的时候. 可以形成等待队列.

void interruptible_sleep_on(struct task_struct **p)

{

struct task_struct *tmp;

if (!p)

return;

if (current == &(init_task.task))

panic("task[0] trying to sleep");

tmp=*p;

*p=current;

repeat: current->state = TASK_INTERRUPTIBLE;

schedule();

if (*p && *p != current) {

(**p).state=0;

goto repeat;

}

*p=NULL;

if (tmp)

tmp->state=0;

}

4. 唤醒进程 . 不论进程处于何种状态都唤醒它.

void wake_up(struct task_struct **p)

{

if (p && *p) {

(**p).state=0;

*p=NULL;

}

}

5. nice . 降低优先级. 更愿意出让CPU . 但是设置的increment 必须不大于当前的优先级数值.

int sys_nice(long increment)

{

if (current->priority-increment>0)

current->priority -= increment;

return 0;

}

scheduel 的调用者: (system_call.s)

reschedule: pushl $ret_from_sys_call jmp _schedule _system_call: cmpl $nr_system_calls-1,%eax # 检查系统调用号 ja bad_sys_call ... # 各种压栈 call _sys_call_table(,%eax,4) # 调用对应接口 pushl %eax movl _current,%eax # 拿到scheduel后的进程结构体指针 cmpl $0,state(%eax) # 如果不是运行态 就回去重新调度 jne reschedule cmpl $0,counter(%eax) # 如果时间片刚好消耗没了, 就回去重新调度 je reschedule

相关结构体:

保存进程指针的数组. 所有的进程保存在一个固定大小的数组中,所以Linux系统支持的最大进程数目是固定的.

进程之间有指针组成链表.

struct task_struct * task[NR_TASKS] = {&(init_task.task), };

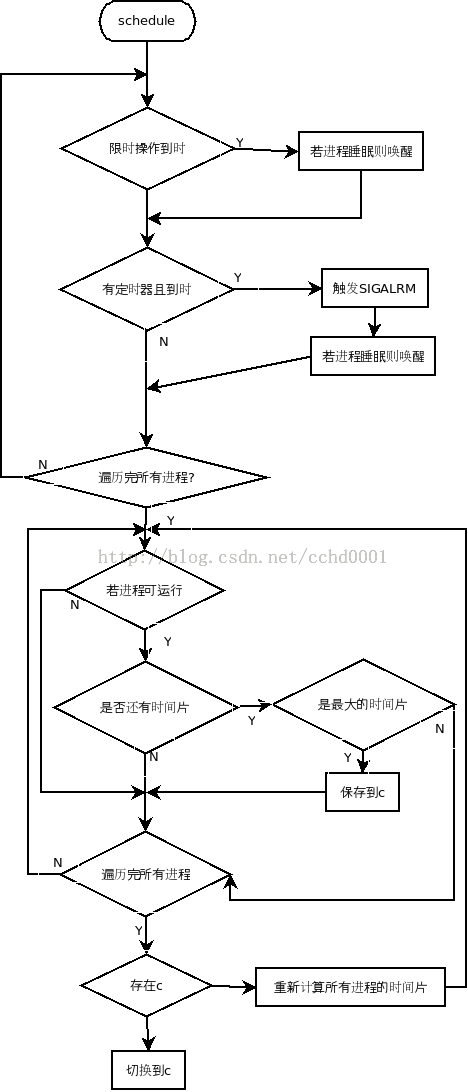

Linux V0.12

支持限时操作, 比如 select 支持最长等待时间.

schedual流程图:

源代码:

void schedule(void)

{

int i,next,c;

struct task_struct ** p;

/* check alarm, wake up any interruptible tasks that have got a signal */

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p) {

if ((*p)->timeout && (*p)->timeout < jiffies) {

(*p)->timeout = 0;

if ((*p)->state == TASK_INTERRUPTIBLE)

(*p)->state = TASK_RUNNING;

}

if ((*p)->alarm && (*p)->alarm < jiffies) {

(*p)->signal |= (1<<(SIGALRM-1));

(*p)->alarm = 0;

}

if (((*p)->signal & ~(_BLOCKABLE & (*p)->blocked)) &&

(*p)->state==TASK_INTERRUPTIBLE)

(*p)->state=TASK_RUNNING;

}

/* this is the scheduler proper: */

while (1) {

c = -1;

next = 0;

i = NR_TASKS;

p = &task[NR_TASKS];

while (--i) {

if (!*--p)

continue;

if ((*p)->state == TASK_RUNNING && (*p)->counter > c)

c = (*p)->counter, next = i;

}

if (c) break;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p)

(*p)->counter = ((*p)->counter >> 1) +

(*p)->priority;

}

switch_to(next);

}

相关函数修改:

1. 添加接口 __sleep_on , 睡眠等待和可打断睡眠均直接调用此接口

static inline void __sleep_on(struct task_struct **p, int state)

{

struct task_struct *tmp;

if (!p)

return;

if (current == &(init_task.task))

panic("task[0] trying to sleep");

tmp = *p;

*p = current;

current->state = state;

repeat: schedule();

if (*p && *p != current) {

(**p).state = 0;

current->state = TASK_UNINTERRUPTIBLE;

goto repeat;

}

if (!*p)

printk("Warning: *P = NULL\n\r");

if (*p = tmp)

tmp->state=0;

}

void interruptible_sleep_on(struct task_struct **p)

{

__sleep_on(p,TASK_INTERRUPTIBLE);

}

void sleep_on(struct task_struct **p)

{

__sleep_on(p,TASK_UNINTERRUPTIBLE);

}

2. 唤醒进程的时候对已经停止的进程和僵尸进程进行警告

void wake_up(struct task_struct **p)

{

if (p && *p) {

if ((**p).state == TASK_STOPPED)

printk("wake_up: TASK_STOPPED");

if ((**p).state == TASK_ZOMBIE)

printk("wake_up: TASK_ZOMBIE");

(**p).state=0;

}

}

Linux V0.95

仅仅修改了一点, 关于signal 屏蔽位:

if (((*p)->signal & ~(_BLOCKABLE & (*p)->blocked)) && (*p)->state==TASK_INTERRUPTIBLE) (*p)->state=TASK_RUNNING;

==>

if (((*p)->signal & ~(*p)->blocked) && (*p)->state==TASK_INTERRUPTIBLE) (*p)->state=TASK_RUNNING;

因为V0.95 版本在sys_ssetmask函数中已经去掉了SIGKILL 和 SIGSTOP :

int sys_ssetmask(int newmask)

{

int old=current->blocked;

current->blocked = newmask & ~(1<<(SIGKILL-1)) & ~(1<<(SIGSTOP-1));

return old;

}

相关接口修改:

1. 暂时出让CPU的进程对SIG_IGN信号进行了屏蔽 :

int sys_pause(void)

{

unsigned long old_blocked;

unsigned long mask;

struct sigaction * sa = current->sigaction;

old_blocked = current->blocked;

for (mask=1 ; mask ; sa++,mask += mask)

if (sa->sa_handler == SIG_IGN)

current->blocked |= mask;

current->state = TASK_INTERRUPTIBLE;

schedule();

current->blocked = old_blocked;

return -EINTR;

}

2. __sleep_on 接口对标志寄存器进行了保护, 使用CTL STI 指令保证原子操作性.

static inline void __sleep_on(struct task_struct **p, int state)

{

struct task_struct *tmp;

unsigned int flags;

if (!p)

return;

if (current == &(init_task.task))

panic("task[0] trying to sleep");

__asm__("pushfl ; popl %0":"=r" (flags));

tmp = *p;

*p = current;

current->state = state;

/* make sure interrupts are enabled: there should be no more races here */

sti();

repeat: schedule();

if (*p && *p != current) {

current->state = TASK_UNINTERRUPTIBLE;

(**p).state = 0;

goto repeat;

}

if (*p = tmp)

tmp->state=0;

__asm__("pushl %0 ; popfl"::"r" (flags));

}3. nice函数处理了increment 比当前优先值大的情况: 直接减成 1

int sys_nice(long increment)

{

if (increment < 0 && !suser())

return -EPERM;

if (increment > current->priority)

increment = current->priority-1;

current->priority -= increment;

return 0;

}

Linux V0.95a

修改对限时操作的BUG:

if ((*p)->timeout && (*p)->timeout < jiffies) {

(*p)->timeout = 0;

if ((*p)->state == TASK_INTERRUPTIBLE)

(*p)->state = TASK_RUNNING;

}

==>

if ((*p)->timeout && (*p)->timeout < jiffies)

if ((*p)->state == TASK_INTERRUPTIBLE) {

(*p)->timeout = 0;

(*p)->state = TASK_RUNNING;

}

相关的接口修改:

1. 将next_wait 加入结构体 task_strcut. 从而使用它来维护睡眠等待列表, 不再唤醒僵尸进程和终止进程.

void wake_up(struct task_struct **p)

{

struct task_struct * wakeup_ptr, * tmp;

if (p && *p) {

wakeup_ptr = *p;

*p = NULL;

while (wakeup_ptr && wakeup_ptr != task[0]) {

if (wakeup_ptr->state == TASK_STOPPED)

printk("wake_up: TASK_STOPPED\n");

else if (wakeup_ptr->state == TASK_ZOMBIE)

printk("wake_up: TASK_ZOMBIE\n");

else

wakeup_ptr->state = TASK_RUNNING;

tmp = wakeup_ptr->next_wait;

wakeup_ptr->next_wait = task[0];

wakeup_ptr = tmp;

}

}

}

static inline void __sleep_on(struct task_struct **p, int state)

{

unsigned int flags;

if (!p)

return;

if (current == task[0])

panic("task[0] trying to sleep");

__asm__("pushfl ; popl %0":"=r" (flags));

current->next_wait = *p;

task[0]->next_wait = NULL;

*p = current;

current->state = state;

sti();

schedule();

if (current->next_wait != task[0])

wake_up(p);

current->next_wait = NULL;

__asm__("pushl %0 ; popfl"::"r" (flags));

}

Linux V0.95c

无相关修改

Linux V0.96a

添加了一项高优先级抢占功能:

当新唤醒的进程更优先的时候, 就重新调度.

实现:

1. scheduel 函数仅仅在开始加上一句代码:

need_resched = 0;

2. wake_up 函数检查新唤醒的进程优先级是否大于当前的. 是则设置need_resched = 1

void wake_up(struct task_struct **p)

{

struct task_struct * wakeup_ptr, * tmp;

if (p && *p) {

wakeup_ptr = *p;

*p = NULL;

while (wakeup_ptr && wakeup_ptr != task[0]) {

if (wakeup_ptr->state == TASK_ZOMBIE)

printk("wake_up: TASK_ZOMBIE\n");

else if (wakeup_ptr->state != TASK_STOPPED) {

wakeup_ptr->state = TASK_RUNNING;

if (wakeup_ptr->counter > current->counter)

need_resched = 1;

}

tmp = wakeup_ptr->next_wait;

wakeup_ptr->next_wait = task[0];

wakeup_ptr = tmp;

}

}

}

3. system_call 检查need_resched, 若非0 就重新调度:

reschedule: pushl $ret_from_sys_call jmp _schedule .align 2 _system_call: pushl %eax # save orig_eax SAVE_ALL cmpl _NR_syscalls,%eax jae bad_sys_call call _sys_call_table(,%eax,4) movl %eax,EAX(%esp) # save the return value ret_from_sys_call: cmpw $0x0f,CS(%esp) # was old code segment supervisor ? jne 2f cmpw $0x17,OLDSS(%esp) # was stack segment = 0x17 ? jne 2f 1: movl _current,%eax cmpl _task,%eax # task[0] cannot have signals je 2 cmpl $0,_need_resched # 检查need_resched, 若非0 就重新调度 jne reschedule cmpl $0,state(%eax) # state jne reschedule cmpl $0,counter(%eax) # counter je reschedule

相关接口修改:

1.修改nice 接口 ,保证优先级不会被改成0

int sys_nice(long increment)

{

if (increment < 0 && !suser())

return -EPERM;

if (increment >= current->priority) // 原先是 increment > current->priority

increment = current->priority-1;

current->priority -= increment;

return 0;

}

2. 修改system_call , 若进程调度后仍为同一进程则不再检查状态和时间片

1: movl _current,%eax cmpl _task,%eax # task[0] cannot have signals je 2f cmpl $0,_need_resched jne reschedule cmpl $0,state(%eax) # state jne reschedule cmpl $0,counter(%eax) # counter je reschedule

Linux V0.96b

将定时器部分益处scheduel, 这部分功能由 do_timer 接管.

流程图:

源代码:

void schedule(void)

{

int i,next,c;

struct task_struct ** p;

/* check alarm, wake up any interruptible tasks that have got a signal */

need_resched = 0;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p) {

if ((*p)->timeout && (*p)->timeout < jiffies)

if ((*p)->state == TASK_INTERRUPTIBLE) {

(*p)->timeout = 0;

(*p)->state = TASK_RUNNING;

}

if (((*p)->signal & ~(*p)->blocked) &&

(*p)->state==TASK_INTERRUPTIBLE)

(*p)->state=TASK_RUNNING;

}

/* this is the scheduler proper: */

while (1) {

c = -1;

next = 0;

i = NR_TASKS;

p = &task[NR_TASKS];

while (--i) {

if (!*--p)

continue;

if ((*p)->state == TASK_RUNNING && (*p)->counter > c)

c = (*p)->counter, next = i;

}

if (c) break;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p)

(*p)->counter = ((*p)->counter >> 1) +

(*p)->priority;

}

switch_to(next);

}

Linux V0.96c

无相关修改

Linux V0.97

添加 wake_one_task接口 ,修了scheduel的BUG , 使得调度的时候被唤醒的高优先级的进程也可以抢占.1. 新的schedule

void schedule(void)

{

int i,next,c;

struct task_struct ** p;

/* check alarm, wake up any interruptible tasks that have got a signal */

need_resched = 0;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p) {

if ((*p)->timeout && (*p)->timeout < jiffies)

if ((*p)->state == TASK_INTERRUPTIBLE) {

(*p)->timeout = 0;

wake_one_task(*p); // 原来直接改变p->state

}

if (((*p)->signal & ~(*p)->blocked) &&

(*p)->state==TASK_INTERRUPTIBLE)

wake_one_task(*p); // 原来直接改变p->state

}

/* this is the scheduler proper: */

while (1) {

c = -1;

next = 0;

i = NR_TASKS;

p = &task[NR_TASKS];

while (--i) {

if (!*--p)

continue;

if ((*p)->state == TASK_RUNNING && (*p)->counter > c)

c = (*p)->counter, next = i;

}

if (c)

break;

for(p = &LAST_TASK ; p > &FIRST_TASK ; --p)

if (*p)

(*p)->counter = ((*p)->counter >> 1) +

(*p)->priority;

}

sti(); // 保证原子操作

switch_to(next);

}

辅助接口 :

void wake_one_task(struct task_struct * p)

{

p->state = TASK_RUNNING;

if (p->counter > current->counter)

need_resched = 1;

}

2. 定义了 wait_queue .在 sched.h 里面添加了接口 : add_wait_queue 和 remove_wait_queue . 使得这个环状链表的操作更美观.

接口代码:

extern inline void add_wait_queue(struct wait_queue ** p, struct wait_queue * wait)

{

unsigned long flags;

struct wait_queue * tmp;

__asm__ __volatile__("pushfl ; popl %0 ; cli":"=r" (flags));

wait->next = *p;

tmp = wait;

while (tmp->next)

if ((tmp = tmp->next)->next == *p)

break;

*p = tmp->next = wait;

__asm__ __volatile__("pushl %0 ; popfl"::"r" (flags));

}

extern inline void remove_wait_queue(struct wait_queue ** p, struct wait_queue * wait)

{

unsigned long flags;

struct wait_queue * tmp;

__asm__ __volatile__("pushfl ; popl %0 ; cli":"=r" (flags));

if (*p == wait)

if ((*p = wait->next) == wait)

*p = NULL;

tmp = wait;

while (tmp && tmp->next != wait)

tmp = tmp->next;

if (tmp)

tmp->next = wait->next;

wait->next = NULL;

__asm__ __volatile__("pushl %0 ; popfl"::"r" (flags));

}

然后对对应的 __sleep_on 接口进行了修改

static inline void __sleep_on(struct wait_queue **p, int state)

{

unsigned long flags;

if (!p)

return;

if (current == task[0])

panic("task[0] trying to sleep");

if (current->wait.next)

printk("__sleep_on: wait->next exists\n");

__asm__ __volatile__("pushfl ; popl %0 ; cli":"=r" (flags));

current->state = state;

add_wait_queue(p,¤t->wait);

sti();

schedule();

remove_wait_queue(p,¤t->wait);

__asm__("pushl %0 ; popfl"::"r" (flags));

}

3. wake_up 函数也有改变, 但是只是将 while 改成了 do .. . while .

Linux V1.0

首先, scheduel函数重新接管了定时器, 当然代码更加复杂了. 但是流程图回到了 V1.2 版.

其次, 为了产生更高效率的机器码, 使用 for(;;) 代替while , 使用goto 代替if

最后, 不再依靠遍历task数组来遍历所有的进程,改为遍历环状链表.

源代码:

asmlinkage void schedule(void)

{

int c;

struct task_struct * p;

struct task_struct * next;

unsigned long ticks;

/* check alarm, wake up any interruptible tasks that have got a signal */

cli();

ticks = itimer_ticks;

itimer_ticks = 0;

itimer_next = ~0;

sti();

need_resched = 0;

p = &init_task;

for (;;) {

if ((p = p->next_task) == &init_task)

goto confuse_gcc1;

if (ticks && p->it_real_value) {

if (p->it_real_value <= ticks) {

send_sig(SIGALRM, p, 1);

if (!p->it_real_incr) {

p->it_real_value = 0;

goto end_itimer;

}

do {

p->it_real_value += p->it_real_incr;

} while (p->it_real_value <= ticks);

}

p->it_real_value -= ticks;

if (p->it_real_value < itimer_next)

itimer_next = p->it_real_value;

}

end_itimer:

if (p->state != TASK_INTERRUPTIBLE)

continue;

if (p->signal & ~p->blocked) {

p->state = TASK_RUNNING;

continue;

}

if (p->timeout && p->timeout <= jiffies) {

p->timeout = 0;

p->state = TASK_RUNNING;

}

}

confuse_gcc1:

/* this is the scheduler proper: */

#if 0

/* give processes that go to sleep a bit higher priority.. */

/* This depends on the values for TASK_XXX */

/* This gives smoother scheduling for some things, but */

/* can be very unfair under some circumstances, so.. */

if (TASK_UNINTERRUPTIBLE >= (unsigned) current->state &&

current->counter < current->priority*2) {

++current->counter;

}

#endif

c = -1;

next = p = &init_task;

for (;;) {

if ((p = p->next_task) == &init_task)

goto confuse_gcc2;

if (p->state == TASK_RUNNING && p->counter > c)

c = p->counter, next = p;

}

confuse_gcc2:

if (!c) {

for_each_task(p)

p->counter = (p->counter >> 1) + p->priority;

}

if(current != next)

kstat.context_swtch++;

switch_to(next);

/* Now maybe reload the debug registers */

if(current->debugreg[7]){

loaddebug(0);

loaddebug(1);

loaddebug(2);

loaddebug(3);

loaddebug(6);

};

}

相关函数修改:

1. wake_up 对环状列表的所有睡眠的进程进行唤醒

void wake_up(struct wait_queue **q)

{

struct wait_queue *tmp;

struct task_struct * p;

if (!q || !(tmp = *q))

return;

do {

if ((p = tmp->task) != NULL) {

if ((p->state == TASK_UNINTERRUPTIBLE) ||

(p->state == TASK_INTERRUPTIBLE)) {

p->state = TASK_RUNNING;

if (p->counter > current->counter)

need_resched = 1;

}

}

if (!tmp->next) {

printk("wait_queue is bad (eip = %08lx)\n",((unsigned long *) q)[-1]);

printk(" q = %p\n",q);

printk(" *q = %p\n",*q);

printk(" tmp = %p\n",tmp);

break;

}

tmp = tmp->next;

} while (tmp != *q);

}

2. 加入接口wake_up_interruptible, 仅唤醒可中断睡眠的进行

void wake_up_interruptible(struct wait_queue **q)

{

struct wait_queue *tmp;

struct task_struct * p;

if (!q || !(tmp = *q))

return;

do {

if ((p = tmp->task) != NULL) {

if (p->state == TASK_INTERRUPTIBLE) {

p->state = TASK_RUNNING;

if (p->counter > current->counter)

need_resched = 1;

}

}

if (!tmp->next) {

printk("wait_queue is bad (eip = %08lx)\n",((unsigned long *) q)[-1]);

printk(" q = %p\n",q);

printk(" *q = %p\n",*q);

printk(" tmp = %p\n",tmp);

break;

}

tmp = tmp->next;

} while (tmp != *q);

}

3. 为了保护竞争资源加入了__down接口实现了计数的信号量机制

void __down(struct semaphore * sem)

{

struct wait_queue wait = { current, NULL };

add_wait_queue(&sem->wait, &wait);

current->state = TASK_UNINTERRUPTIBLE;

while (sem->count <= 0) {

schedule();

current->state = TASK_UNINTERRUPTIBLE;

}

current->state = TASK_RUNNING;

remove_wait_queue(&sem->wait, &wait);

}

4. 通过nice接口, 限制优先级数值为1-35 .

asmlinkage int sys_nice(long increment)

{

int newprio;

if (increment < 0 && !suser())

return -EPERM;

newprio = current->priority - increment;

if (newprio < 1)

newprio = 1;

if (newprio > 35)

newprio = 35;

current->priority = newprio;

return 0;

}

Linux V1.1

加入了个对中断的输出:

if (intr_count) {

printk("Aiee: scheduling in interrupt\n");

intr_count = 0;

}

Linux V1.2

删除了system_call.s 文件. 添加了arch文件夹, 系统跳用移动到对应内核的entry.S

Linux V1.3

1. 添加了对 scheduel时处理tq_scheduler的支持.

run_task_queue(&tq_scheduler);

2. 每次统计当前运行的进程数目

nr_running = 0; ... nr_running++;

Linux V2.0

大量的修改 , 先看源码 ,再一一解释:

asmlinkage void schedule(void)

{

int c;

struct task_struct * p;

struct task_struct * prev, * next;

unsigned long timeout = 0;

int this_cpu=smp_processor_id();

/* check alarm, wake up any interruptible tasks that have got a signal */

if (intr_count)

goto scheduling_in_interrupt;

if (bh_active & bh_mask) {

intr_count = 1;

do_bottom_half(); // 1. 添加了对 buttom half 的支持.

intr_count = 0;

}

run_task_queue(&tq_scheduler);

need_resched = 0;

prev = current;

cli();

/* move an exhausted RR process to be last.. */

if (!prev->counter && prev->policy == SCHED_RR) {

prev->counter = prev->priority;

move_last_runqueue(prev); //2. 加入了runquene的概念

}

switch (prev->state) {

case TASK_INTERRUPTIBLE:

if (prev->signal & ~prev->blocked)

goto makerunnable;

timeout = prev->timeout;

if (timeout && (timeout <= jiffies)) {

prev->timeout = 0;

timeout = 0;

makerunnable:

prev->state = TASK_RUNNING;

break;

}

default:

del_from_runqueue(prev);

case TASK_RUNNING:

}

p = init_task.next_run;

sti();

#ifdef __SMP__

/*

* This is safe as we do not permit re-entry of schedule()

*/

prev->processor = NO_PROC_ID;

#define idle_task (task[cpu_number_map[this_cpu]])

#else

#define idle_task (&init_task)

#endif

/*

* Note! there may appear new tasks on the run-queue during this, as

* interrupts are enabled. However, they will be put on front of the

* list, so our list starting at "p" is essentially fixed.

*/

/* this is the scheduler proper: */

c = -1000;

next = idle_task;

while (p != &init_task) {

int weight = goodness(p, prev, this_cpu); //3. 新的优先级计算方式

if (weight > c)

c = weight, next = p;

p = p->next_run;

}

/* if all runnable processes have "counter == 0", re-calculate counters */

if (!c) {

for_each_task(p)

p->counter = (p->counter >> 1) + p->priority;

}

#ifdef __SMP__ // 4. 多CPU支持

/*

* Allocate process to CPU

*/

next->processor = this_cpu;

next->last_processor = this_cpu;

#endif

#ifdef __SMP_PROF__

/* mark processor running an idle thread */

if (0==next->pid)

set_bit(this_cpu,&smp_idle_map);

else

clear_bit(this_cpu,&smp_idle_map);

#endif

if (prev != next) {

struct timer_list timer;

kstat.context_swtch++;

if (timeout) {

init_timer(&timer);

timer.expires = timeout;

timer.data = (unsigned long) prev;

timer.function = process_timeout;

add_timer(&timer);

}

get_mmu_context(next);

switch_to(prev,next);

if (timeout)

del_timer(&timer);

}

return;

scheduling_in_interrupt:

printk("Aiee: scheduling in interrupt %p\n",

__builtin_return_address(0));

}

1. 添加了对 buttom half 的支持.

利用 bh_active 和 bh_mask 两个掩码来记录软中断信息. 每次scheduel统一执行之 .

if (bh_active & bh_mask) {

intr_count = 1;

do_bottom_half();

intr_count = 0;

}

2. 定义了runquene的概念(task_struct 加入俩指针) , 加入接口 add_to_runqueue , del_from_runqueue, move_last_runqueue , 来支持对runquene的支持.

其中除了双向环状链表的操作, 就是对多CPU的支持.

static inline void add_to_runqueue(struct task_struct * p)

{

#ifdef __SMP__

int cpu=smp_processor_id();

#endif

#if 1 /* sanity tests */

if (p->next_run || p->prev_run) {

printk("task already on run-queue\n");

return;

}

#endif

if (p->counter > current->counter + 3)

need_resched = 1;

nr_running++;

(p->prev_run = init_task.prev_run)->next_run = p;

p->next_run = &init_task;

init_task.prev_run = p;

#ifdef __SMP__

/* this is safe only if called with cli()*/

while(set_bit(31,&smp_process_available))

{

while(test_bit(31,&smp_process_available))

{

if(clear_bit(cpu,&smp_invalidate_needed))

{

local_flush_tlb();

set_bit(cpu,&cpu_callin_map[0]);

}

}

}

smp_process_available++;

clear_bit(31,&smp_process_available);

if ((0!=p->pid) && smp_threads_ready)

{

int i;

for (i=0;i<smp_num_cpus;i++)

{

if (0==current_set[cpu_logical_map[i]]->pid)

{

smp_message_pass(cpu_logical_map[i], MSG_RESCHEDULE, 0L, 0);

break;

}

}

}

#endif

}

static inline void del_from_runqueue(struct task_struct * p)

{

struct task_struct *next = p->next_run;

struct task_struct *prev = p->prev_run;

#if 1 /* sanity tests */

if (!next || !prev) {

printk("task not on run-queue\n");

return;

}

#endif

if (p == &init_task) {

static int nr = 0;

if (nr < 5) {

nr++;

printk("idle task may not sleep\n");

}

return;

}

nr_running--;

next->prev_run = prev;

prev->next_run = next;

p->next_run = NULL;

p->prev_run = NULL;

}

static inline void move_last_runqueue(struct task_struct * p)

{

struct task_struct *next = p->next_run;

struct task_struct *prev = p->prev_run;

/* remove from list */

next->prev_run = prev;

prev->next_run = next;

/* add back to list */

p->next_run = &init_task;

prev = init_task.prev_run;

init_task.prev_run = p;

p->prev_run = prev;

prev->next_run = p;

}

3. 加入新的优先级计算方式.

加入了进程调度测略概念:

/* * Scheduling policies */ #define SCHED_OTHER 0 //一般的进程 #define SCHED_FIFO 1 // 实时进程, 一个进程执行完才执行另一个 #define SCHED_RR 2 // 实时进程, 固定执行时间片,轮转依次执行

添加了优先级计算接口 goodness

/*

* This is the function that decides how desirable a process is..

* You can weigh different processes against each other depending

* on what CPU they've run on lately etc to try to handle cache

* and TLB miss penalties.

*

* Return values:

* -1000: never select this

* 0: out of time, recalculate counters (but it might still be

* selected)

* +ve: "goodness" value (the larger, the better)

* +1000: realtime process, select this.

*/

static inline int goodness(struct task_struct * p, struct task_struct * prev, int this_cpu)

{

int weight;

#ifdef __SMP__

/* We are not permitted to run a task someone else is running */

if (p->processor != NO_PROC_ID)

return -1000; // CPU 不支持直接

#ifdef PAST_2_0

/* This process is locked to a processor group */

if (p->processor_mask && !(p->processor_mask & (1<<this_cpu))

return -1000; // 绑定特定CPU , 不需要当前CPU

#endif

#endif

/*

* Realtime process, select the first one on the

* runqueue (taking priorities within processes

* into account).

*/

if (p->policy != SCHED_OTHER)

return 1000 + p->rt_priority; // 实时程序, 立刻执行

/*

* Give the process a first-approximation goodness value

* according to the number of clock-ticks it has left.

*

* Don't do any other calculations if the time slice is

* over..

*/

weight = p->counter;

if (weight) {

#ifdef __SMP__

/* Give a largish advantage to the same processor... */

/* (this is equivalent to penalizing other processors) */

if (p->last_processor == this_cpu)

weight += PROC_CHANGE_PENALTY; // 同一CPU

#endif

/* .. and a slight advantage to the current process */

if (p == prev)

weight += 1; // 还是上一个进程

}

return weight;

}

4. 支持多CPU调度

相关接口修改:

加入接口 wake_up_process , 利用add_runquene , 被wake_up 和 wake_up_interruputible 取代p->state = TASK_RUNNING调用.

inline void wake_up_process(struct task_struct * p)

{

unsigned long flags;

save_flags(flags);

cli();

p->state = TASK_RUNNING;

if (!p->next_run)

add_to_runqueue(p);

restore_flags(flags);

}

nice接口策略修改, 限定 优先值 0 - DEF_PRIORITY*2

asmlinkage int sys_nice(int increment)

{

unsigned long newprio;

int increase = 0;

newprio = increment;

if (increment < 0) {

if (!suser())

return -EPERM;

newprio = -increment;

increase = 1;

}

if (newprio > 40)

newprio = 40;

/*

* do a "normalization" of the priority (traditionally

* unix nice values are -20..20, linux doesn't really

* use that kind of thing, but uses the length of the

* timeslice instead (default 150 msec). The rounding is

* why we want to avoid negative values.

*/

newprio = (newprio * DEF_PRIORITY + 10) / 20;

increment = newprio;

if (increase)

increment = -increment;

newprio = current->priority - increment;

if (newprio < 1)

newprio = 1;

if (newprio > DEF_PRIORITY*2)

newprio = DEF_PRIORITY*2;

current->priority = newprio;

return 0;

}

Linux V2.1

相关函数修改:

唤醒函数修改,统一使用wait_quene

void wake_up(struct wait_queue **q)

{

struct wait_queue *next;

struct wait_queue *head;

if (!q || !(next = *q))

return;

head = WAIT_QUEUE_HEAD(q);

while (next != head) {

struct task_struct *p = next->task;

next = next->next;

if (p != NULL) {

if ((p->state == TASK_UNINTERRUPTIBLE) ||

(p->state == TASK_INTERRUPTIBLE))

wake_up_process(p);

}

if (!next)

goto bad;

}

return;

bad:

printk("wait_queue is bad (eip = %p)\n",

__builtin_return_address(0));

printk(" q = %p\n",q);

printk(" *q = %p\n",*q);

}

void wake_up_interruptible(struct wait_queue **q)

{

struct wait_queue *next;

struct wait_queue *head;

if (!q || !(next = *q))

return;

head = WAIT_QUEUE_HEAD(q);

while (next != head) {

struct task_struct *p = next->task;

next = next->next;

if (p != NULL) {

if (p->state == TASK_INTERRUPTIBLE)

wake_up_process(p);

}

if (!next)

goto bad;

}

return;

bad:

printk("wait_queue is bad (eip = %p)\n",

__builtin_return_address(0));

printk(" q = %p\n",q);

printk(" *q = %p\n",*q);

}

对 sleep_on 接口加入原子保护

修改nice接口的一个bug .

if (newprio < 1)

==>

if ((signed) newprio < 1)

Linux V2.2

修改较多.

1. 添加CPU的的更多支持.

2. 对每个队列操作添加了信号量保护

源码:

asmlinkage void schedule(void)

{

struct schedule_data * sched_data;

struct task_struct * prev, * next;

int this_cpu;

prev = current;

this_cpu = prev->processor;

/*

* 'sched_data' is protected by the fact that we can run

* only one process per CPU.

*/

sched_data = & aligned_data[this_cpu].schedule_data;

if (in_interrupt())

goto scheduling_in_interrupt;

release_kernel_lock(prev, this_cpu);

/* Do "administrative" work here while we don't hold any locks */

if (bh_active & bh_mask)

do_bottom_half();

run_task_queue(&tq_scheduler);

spin_lock(&scheduler_lock);

spin_lock_irq(&runqueue_lock);

/* move an exhausted RR process to be last.. */

prev->need_resched = 0;

if (!prev->counter && prev->policy == SCHED_RR) {

prev->counter = prev->priority;

move_last_runqueue(prev);

}

switch (prev->state) {

case TASK_INTERRUPTIBLE:

if (signal_pending(prev)) {

prev->state = TASK_RUNNING;

break;

}

default:

del_from_runqueue(prev);

case TASK_RUNNING:

}

sched_data->prevstate = prev->state;

{

struct task_struct * p = init_task.next_run;

/*

* This is subtle.

* Note how we can enable interrupts here, even

* though interrupts can add processes to the run-

* queue. This is because any new processes will

* be added to the front of the queue, so "p" above

* is a safe starting point.

* run-queue deletion and re-ordering is protected by

* the scheduler lock

*/

spin_unlock_irq(&runqueue_lock);

#ifdef __SMP__

prev->has_cpu = 0;

#endif

/*

* Note! there may appear new tasks on the run-queue during this, as

* interrupts are enabled. However, they will be put on front of the

* list, so our list starting at "p" is essentially fixed.

*/

/* this is the scheduler proper: */

{

int c = -1000;

next = idle_task;

while (p != &init_task) {

if (can_schedule(p)) {

int weight = goodness(p, prev, this_cpu);

if (weight > c)

c = weight, next = p;

}

p = p->next_run;

}

/* Do we need to re-calculate counters? */

if (!c) {

struct task_struct *p;

read_lock(&tasklist_lock);

for_each_task(p)

p->counter = (p->counter >> 1) + p->priority;

read_unlock(&tasklist_lock);

}

}

}

/*

* maintain the per-process 'average timeslice' value.

* (this has to be recalculated even if we reschedule to

* the same process) Currently this is only used on SMP:

*/

#ifdef __SMP__

{

cycles_t t, this_slice;

t = get_cycles();

this_slice = t - sched_data->last_schedule;

sched_data->last_schedule = t;

/*

* Simple, exponentially fading average calculation:

*/

prev->avg_slice = this_slice + prev->avg_slice;

prev->avg_slice >>= 1;

}

/*

* We drop the scheduler lock early (it's a global spinlock),

* thus we have to lock the previous process from getting

* rescheduled during switch_to().

*/

prev->has_cpu = 1;

next->has_cpu = 1;

next->processor = this_cpu;

spin_unlock(&scheduler_lock);

#endif /* __SMP__ */

if (prev != next) {

#ifdef __SMP__

sched_data->prev = prev;

#endif

kstat.context_swtch++;

get_mmu_context(next);

switch_to(prev,next);

__schedule_tail();

}

reacquire_kernel_lock(current);

return;

scheduling_in_interrupt:

printk("Scheduling in interrupt\n");

*(int *)0 = 0;

}

相关接口修改:

添加接口reschedule_idle, 对唤醒进程后重新调度之前做了更过工作

void wake_up_process(struct task_struct * p)

{

unsigned long flags;

spin_lock_irqsave(&runqueue_lock, flags);

p->state = TASK_RUNNING;

if (!p->next_run) {

add_to_runqueue(p);

reschedule_idle(p);

}

spin_unlock_irqrestore(&runqueue_lock, flags);

}主要是对多CPU的支持. 同时对优先级更高有了新的定义 : 至少大于3

static inline void reschedule_idle(struct task_struct * p)

{

if (p->policy != SCHED_OTHER || p->counter > current->counter + 3) {

current->need_resched = 1;

return;

}

#ifdef __SMP__

/*

* ("wakeup()" should not be called before we've initialized

* SMP completely.

* Basically a not-yet initialized SMP subsystem can be

* considered as a not-yet working scheduler, simply dont use

* it before it's up and running ...)

*

* SMP rescheduling is done in 2 passes:

* - pass #1: faster: 'quick decisions'

* - pass #2: slower: 'lets try and find another CPU'

*/

/*

* Pass #1

*

* There are two metrics here:

*

* first, a 'cutoff' interval, currently 0-200 usecs on

* x86 CPUs, depending on the size of the 'SMP-local cache'.

* If the current process has longer average timeslices than

* this, then we utilize the idle CPU.

*

* second, if the wakeup comes from a process context,

* then the two processes are 'related'. (they form a

* 'gang')

*

* An idle CPU is almost always a bad thing, thus we skip

* the idle-CPU utilization only if both these conditions

* are true. (ie. a 'process-gang' rescheduling with rather

* high frequency should stay on the same CPU).

*

* [We can switch to something more finegrained in 2.3.]

*/

if ((current->avg_slice < cacheflush_time) && related(current, p))

return;

reschedule_idle_slow(p);

#endif /* __SMP__ */

}

添加了新的睡眠接口,支持定时睡眠

signed long schedule_timeout(signed long timeout)

{

struct timer_list timer;

unsigned long expire;

switch (timeout)

{

case MAX_SCHEDULE_TIMEOUT:

/*

* These two special cases are useful to be comfortable

* in the caller. Nothing more. We could take

* MAX_SCHEDULE_TIMEOUT from one of the negative value

* but I' d like to return a valid offset (>=0) to allow

* the caller to do everything it want with the retval.

*/

schedule();

goto out;

default:

/*

* Another bit of PARANOID. Note that the retval will be

* 0 since no piece of kernel is supposed to do a check

* for a negative retval of schedule_timeout() (since it

* should never happens anyway). You just have the printk()

* that will tell you if something is gone wrong and where.

*/

if (timeout < 0)

{

printk(KERN_ERR "schedule_timeout: wrong timeout "

"value %lx from %p\n", timeout,

__builtin_return_address(0));

goto out;

}

}

expire = timeout + jiffies;

init_timer(&timer);

timer.expires = expire;

timer.data = (unsigned long) current;

timer.function = process_timeout;

add_timer(&timer);

schedule();

del_timer(&timer);

timeout = expire - jiffies;

out:

return timeout < 0 ? 0 : timeout;

}

/*

* This one aligns per-CPU data on cacheline boundaries.

*/

static union {

struct schedule_data {

struct task_struct * prev;

long prevstate;

cycles_t last_schedule;

} schedule_data;

char __pad [L1_CACHE_BYTES];

} aligned_data [NR_CPUS] __cacheline_aligned = { {{&init_task,0}}};

static inline void __schedule_tail (void)

{

#ifdef __SMP__

struct schedule_data * sched_data;

/*

* We might have switched CPUs:

*/

sched_data = & aligned_data[smp_processor_id()].schedule_data;

/*

* Subtle. In the rare event that we got a wakeup to 'prev' just

* during the reschedule (this is possible, the scheduler is pretty

* parallel), we should do another reschedule in the next task's

* context. schedule() will do the right thing next time around.

* this is equivalent to 'delaying' the wakeup until the reschedule

* has finished.

*/

if (sched_data->prev->state != sched_data->prevstate)

current->need_resched = 1;

/*

* Release the previous process ...

*

* We have dropped all locks, and we must make sure that we

* only mark the previous process as no longer having a CPU

* after all other state has been seen by other CPU's. Thus

* the write memory barrier!

*/

wmb();

sched_data->prev->has_cpu = 0;

#endif /* __SMP__ */

}

void interruptible_sleep_on(struct wait_queue **p)

{

SLEEP_ON_VAR

current->state = TASK_INTERRUPTIBLE;

SLEEP_ON_HEAD

schedule();

SLEEP_ON_TAIL

}

long interruptible_sleep_on_timeout(struct wait_queue **p, long timeout)

{

SLEEP_ON_VAR

current->state = TASK_INTERRUPTIBLE;

SLEEP_ON_HEAD

timeout = schedule_timeout(timeout);

SLEEP_ON_TAIL

return timeout;

}

void sleep_on(struct wait_queue **p)

{

SLEEP_ON_VAR

current->state = TASK_UNINTERRUPTIBLE;

SLEEP_ON_HEAD

schedule();

SLEEP_ON_TAIL

}

long sleep_on_timeout(struct wait_queue **p, long timeout)

{

SLEEP_ON_VAR

current->state = TASK_UNINTERRUPTIBLE;

SLEEP_ON_HEAD

timeout = schedule_timeout(timeout);

SLEEP_ON_TAIL

return timeout;

}

Linux V2.3

1. 对多CPU支持进行了更多修改, 这里不讨论.

2. scheduel函数本身逻辑并无太大修改,但是大量使用goto 替换原来的if { ... } \

3. 更多的使用信号量保护.

源码:

asmlinkage void schedule(void)

{

struct schedule_data * sched_data;

struct task_struct *prev, *next, *p;

int this_cpu, c;

if (tq_scheduler)

goto handle_tq_scheduler;

tq_scheduler_back:

prev = current;

this_cpu = prev->processor;

if (in_interrupt())

goto scheduling_in_interrupt;

release_kernel_lock(prev, this_cpu);

/* Do "administrative" work here while we don't hold any locks */

if (bh_mask & bh_active)

goto handle_bh;

handle_bh_back:

/*

* 'sched_data' is protected by the fact that we can run

* only one process per CPU.

*/

sched_data = & aligned_data[this_cpu].schedule_data;

spin_lock_irq(&runqueue_lock);

/* move an exhausted RR process to be last.. */

if (prev->policy == SCHED_RR)

goto move_rr_last;

move_rr_back:

switch (prev->state) {

case TASK_INTERRUPTIBLE:

if (signal_pending(prev)) {

prev->state = TASK_RUNNING;

break;

}

default:

del_from_runqueue(prev);

case TASK_RUNNING:

}

prev->need_resched = 0;

repeat_schedule:

/*

* this is the scheduler proper:

*/

p = init_task.next_run;

/* Default process to select.. */

next = idle_task(this_cpu);

c = -1000;

if (prev->state == TASK_RUNNING)

goto still_running;

still_running_back:

/*

* This is subtle.

* Note how we can enable interrupts here, even

* though interrupts can add processes to the run-

* queue. This is because any new processes will

* be added to the front of the queue, so "p" above

* is a safe starting point.

* run-queue deletion and re-ordering is protected by

* the scheduler lock

*/

/*

* Note! there may appear new tasks on the run-queue during this, as

* interrupts are enabled. However, they will be put on front of the

* list, so our list starting at "p" is essentially fixed.

*/

while (p != &init_task) {

if (can_schedule(p)) {

int weight = goodness(prev, p, this_cpu);

if (weight > c)

c = weight, next = p;

}

p = p->next_run;

}

/* Do we need to re-calculate counters? */

if (!c)

goto recalculate;

/*

* from this point on nothing can prevent us from

* switching to the next task, save this fact in

* sched_data.

*/

sched_data->curr = next;

#ifdef __SMP__

next->has_cpu = 1;

next->processor = this_cpu;

#endif

spin_unlock_irq(&runqueue_lock);

if (prev == next)

goto same_process;

#ifdef __SMP__

/*

* maintain the per-process 'average timeslice' value.

* (this has to be recalculated even if we reschedule to

* the same process) Currently this is only used on SMP,

* and it's approximate, so we do not have to maintain

* it while holding the runqueue spinlock.

*/

{

cycles_t t, this_slice;

t = get_cycles();

this_slice = t - sched_data->last_schedule;

sched_data->last_schedule = t;

/*

* Exponentially fading average calculation, with

* some weight so it doesnt get fooled easily by

* smaller irregularities.

*/

prev->avg_slice = (this_slice*1 + prev->avg_slice*1)/2;

}

/*

* We drop the scheduler lock early (it's a global spinlock),

* thus we have to lock the previous process from getting

* rescheduled during switch_to().

*/

#endif /* __SMP__ */

kstat.context_swtch++;

get_mmu_context(next);

switch_to(prev, next, prev);

__schedule_tail(prev);

same_process:

reacquire_kernel_lock(current);

return;

recalculate:

{

struct task_struct *p;

spin_unlock_irq(&runqueue_lock);

read_lock(&tasklist_lock);

for_each_task(p)

p->counter = (p->counter >> 1) + p->priority;

read_unlock(&tasklist_lock);

spin_lock_irq(&runqueue_lock);

goto repeat_schedule;

}

still_running:

c = prev_goodness(prev, prev, this_cpu);

next = prev;

goto still_running_back;

handle_bh:

do_bottom_half();

goto handle_bh_back;

handle_tq_scheduler:

run_task_queue(&tq_scheduler);

goto tq_scheduler_back;

move_rr_last:

if (!prev->counter) {

prev->counter = prev->priority;

move_last_runqueue(prev);

}

goto move_rr_back;

scheduling_in_interrupt:

printk("Scheduling in interrupt\n");

*(int *)0 = 0;

return;

}

相关函数修改:

唤醒函数

void __wake_up(struct wait_queue **q, unsigned int mode)

{

struct task_struct *p;

struct wait_queue *head, *next;

if (!q)

goto out;

/*

* this is safe to be done before the check because it

* means no deference, just pointer operations.

*/

head = WAIT_QUEUE_HEAD(q);

read_lock(&waitqueue_lock);

next = *q;

if (!next)

goto out_unlock;

while (next != head) {

p = next->task;

next = next->next;

if (p->state & mode) {

/*

* We can drop the read-lock early if this

* is the only/last process.

*/

if (next == head) {

read_unlock(&waitqueue_lock);

wake_up_process(p);

goto out;

}

wake_up_process(p);

}

}

out_unlock:

read_unlock(&waitqueue_lock);

out:

return;

}

同时 __schedule_tail 接口也开始调用 reschedule_idle . 仍然是针对多CPU支持

/*

* schedule_tail() is getting called from the fork return path. This

* cleans up all remaining scheduler things, without impacting the

* common case.

*/

static inline void __schedule_tail (struct task_struct *prev)

{

#ifdef __SMP__

if ((prev->state == TASK_RUNNING) &&

(prev != idle_task(smp_processor_id())))

reschedule_idle(prev);

wmb();

prev->has_cpu = 0;

#endif /* __SMP__ */

}

void schedule_tail (struct task_struct *prev)

{

__schedule_tail(prev);

}

Linux V2.4

1. scheduel添加了对内存的判断, 进程至少要有有效内存

if (!current->active_mm) BUG();

2. 添加了 prepare_to_switch 部分.

prepare_to_switch();

{

struct mm_struct *mm = next->mm;

struct mm_struct *oldmm = prev->active_mm;

if (!mm) {

if (next->active_mm) BUG();

next->active_mm = oldmm;

atomic_inc(&oldmm->mm_count);

enter_lazy_tlb(oldmm, next, this_cpu);

} else {

if (next->active_mm != mm) BUG();

switch_mm(oldmm, mm, next, this_cpu);

}

if (!prev->mm) {

prev->active_mm = NULL;

mmdrop(oldmm);

}

}

scheduel 源码 :

asmlinkage void schedule(void)

{

struct schedule_data * sched_data;

struct task_struct *prev, *next, *p;

struct list_head *tmp;

int this_cpu, c;

if (!current->active_mm) BUG();

need_resched_back:

prev = current;

this_cpu = prev->processor;

if (in_interrupt())

goto scheduling_in_interrupt;

release_kernel_lock(prev, this_cpu);

/* Do "administrative" work here while we don't hold any locks */

if (softirq_active(this_cpu) & softirq_mask(this_cpu))

goto handle_softirq;

handle_softirq_back:

/*

* 'sched_data' is protected by the fact that we can run

* only one process per CPU.

*/

sched_data = & aligned_data[this_cpu].schedule_data;

spin_lock_irq(&runqueue_lock);

/* move an exhausted RR process to be last.. */

if (prev->policy == SCHED_RR)

goto move_rr_last;

move_rr_back:

switch (prev->state) {

case TASK_INTERRUPTIBLE:

if (signal_pending(prev)) {

prev->state = TASK_RUNNING;

break;

}

default:

del_from_runqueue(prev);

case TASK_RUNNING:

}

prev->need_resched = 0;

/*

* this is the scheduler proper:

*/

repeat_schedule:

/*

* Default process to select..

*/

next = idle_task(this_cpu);

c = -1000;

if (prev->state == TASK_RUNNING)

goto still_running;

still_running_back:

list_for_each(tmp, &runqueue_head) {

p = list_entry(tmp, struct task_struct, run_list);

if (can_schedule(p, this_cpu)) {

int weight = goodness(p, this_cpu, prev->active_mm);

if (weight > c)

c = weight, next = p;

}

}

/* Do we need to re-calculate counters? */

if (!c)

goto recalculate;

/*

* from this point on nothing can prevent us from

* switching to the next task, save this fact in

* sched_data.

*/

sched_data->curr = next;

#ifdef CONFIG_SMP

next->has_cpu = 1;

next->processor = this_cpu;

#endif

spin_unlock_irq(&runqueue_lock);

if (prev == next)

goto same_process;

#ifdef CONFIG_SMP

/*

* maintain the per-process 'last schedule' value.

* (this has to be recalculated even if we reschedule to

* the same process) Currently this is only used on SMP,

* and it's approximate, so we do not have to maintain

* it while holding the runqueue spinlock.

*/

sched_data->last_schedule = get_cycles();

/*

* We drop the scheduler lock early (it's a global spinlock),

* thus we have to lock the previous process from getting

* rescheduled during switch_to().

*/

#endif /* CONFIG_SMP */

kstat.context_swtch++;

/*

* there are 3 processes which are affected by a context switch:

*

* prev == .... ==> (last => next)

*

* It's the 'much more previous' 'prev' that is on next's stack,

* but prev is set to (the just run) 'last' process by switch_to().

* This might sound slightly confusing but makes tons of sense.

*/

prepare_to_switch();

{

struct mm_struct *mm = next->mm;

struct mm_struct *oldmm = prev->active_mm;

if (!mm) {

if (next->active_mm) BUG();

next->active_mm = oldmm;

atomic_inc(&oldmm->mm_count);

enter_lazy_tlb(oldmm, next, this_cpu);

} else {

if (next->active_mm != mm) BUG();

switch_mm(oldmm, mm, next, this_cpu);

}

if (!prev->mm) {

prev->active_mm = NULL;

mmdrop(oldmm);

}

}

/*

* This just switches the register state and the

* stack.

*/

switch_to(prev, next, prev);

__schedule_tail(prev);

same_process:

reacquire_kernel_lock(current);

if (current->need_resched)

goto need_resched_back;

return;

recalculate:

{

struct task_struct *p;

spin_unlock_irq(&runqueue_lock);

read_lock(&tasklist_lock);

for_each_task(p)

p->counter = (p->counter >> 1) + NICE_TO_TICKS(p->nice);

read_unlock(&tasklist_lock);

spin_lock_irq(&runqueue_lock);

}

goto repeat_schedule;

still_running:

c = goodness(prev, this_cpu, prev->active_mm);

next = prev;

goto still_running_back;

handle_softirq:

do_softirq();

goto handle_softirq_back;

move_rr_last:

if (!prev->counter) {

prev->counter = NICE_TO_TICKS(prev->nice);

move_last_runqueue(prev);

}

goto move_rr_back;

scheduling_in_interrupt:

printk("Scheduling in interrupt\n");

BUG();

return;

}

相关代码修改:

1. 修改了对runquene的操作. 双向链表操作抽象在list.h中 , 提供各种接口

使用例子:

static inline void add_to_runqueue(struct task_struct * p)

{

list_add(&p->run_list, &runqueue_head);

nr_running++;

}

static inline void move_last_runqueue(struct task_struct * p)

{

list_del(&p->run_list);

list_add_tail(&p->run_list, &runqueue_head);

}

static inline void move_first_runqueue(struct task_struct * p)

{

list_del(&p->run_list);

list_add(&p->run_list, &runqueue_head);

}

2. reschedule_idle对多CPU做了更多的工作.

3. 添加异步唤醒接口, 唤醒后不去判断是否重新调度.

static inline void wake_up_process_synchronous(struct task_struct * p)

{

unsigned long flags;

/*

* We want the common case fall through straight, thus the goto.

*/

spin_lock_irqsave(&runqueue_lock, flags);

p->state = TASK_RUNNING;

if (task_on_runqueue(p))

goto out;

add_to_runqueue(p);

out:

spin_unlock_irqrestore(&runqueue_lock, flags);

}

4. 添加一系列唤醒接口, 可以根据不同的模式,选择CPU ,选择唤醒方式.

static inline void __wake_up_common (wait_queue_head_t *q, unsigned int mode,

unsigned int wq_mode, const int sync)

{

struct list_head *tmp, *head;

struct task_struct *p, *best_exclusive;

unsigned long flags;

int best_cpu, irq;

if (!q)

goto out;

best_cpu = smp_processor_id();

irq = in_interrupt();

best_exclusive = NULL;

wq_write_lock_irqsave(&q->lock, flags);

#if WAITQUEUE_DEBUG

CHECK_MAGIC_WQHEAD(q);

#endif

head = &q->task_list;

#if WAITQUEUE_DEBUG

if (!head->next || !head->prev)

WQ_BUG();

#endif

tmp = head->next;

while (tmp != head) {

unsigned int state;

wait_queue_t *curr = list_entry(tmp, wait_queue_t, task_list);

tmp = tmp->next;

#if WAITQUEUE_DEBUG

CHECK_MAGIC(curr->__magic);

#endif

p = curr->task;

state = p->state;

if (state & mode) {

#if WAITQUEUE_DEBUG

curr->__waker = (long)__builtin_return_address(0);

#endif

/*

* If waking up from an interrupt context then

* prefer processes which are affine to this

* CPU.

*/

if (irq && (curr->flags & wq_mode & WQ_FLAG_EXCLUSIVE)) {

if (!best_exclusive)

best_exclusive = p;

if (p->processor == best_cpu) {

best_exclusive = p;

break;

}

} else {

if (sync)

wake_up_process_synchronous(p);

else

wake_up_process(p);

if (curr->flags & wq_mode & WQ_FLAG_EXCLUSIVE)

break;

}

}

}

if (best_exclusive) {

if (sync)

wake_up_process_synchronous(best_exclusive);

else

wake_up_process(best_exclusive);

}

wq_write_unlock_irqrestore(&q->lock, flags);

out:

return;

}

void __wake_up(wait_queue_head_t *q, unsigned int mode, unsigned int wq_mode)

{

__wake_up_common(q, mode, wq_mode, 0);

}

void __wake_up_sync(wait_queue_head_t *q, unsigned int mode, unsigned int wq_mode)

{

__wake_up_common(q, mode, wq_mode, 1);

}

Linux V2.5

1. 不再大量的使用 goto , 作为替代对 if 使用 unlikely .

源码:

asmlinkage void schedule(void)

{

struct schedule_data * sched_data;

struct task_struct *prev, *next, *p;

struct list_head *tmp;

int this_cpu, c;

spin_lock_prefetch(&runqueue_lock);

if (!current->active_mm) BUG();

need_resched_back:

prev = current;

this_cpu = prev->processor;

if (unlikely(in_interrupt())) {

printk("Scheduling in interrupt\n");

BUG();

}

release_kernel_lock(prev, this_cpu);

/*

* 'sched_data' is protected by the fact that we can run

* only one process per CPU.

*/

sched_data = & aligned_data[this_cpu].schedule_data;

spin_lock_irq(&runqueue_lock);

/* move an exhausted RR process to be last.. */

if (unlikely(prev->policy == SCHED_RR))

if (!prev->counter) {

prev->counter = NICE_TO_TICKS(prev->nice);

move_last_runqueue(prev);

}

switch (prev->state) {

case TASK_INTERRUPTIBLE:

if (signal_pending(prev)) {

prev->state = TASK_RUNNING;

break;

}

default:

del_from_runqueue(prev);

case TASK_RUNNING:;

}

prev->need_resched = 0;

/*

* this is the scheduler proper:

*/

repeat_schedule:

/*

* Default process to select..

*/

next = idle_task(this_cpu);

c = -1000;

list_for_each(tmp, &runqueue_head) {

p = list_entry(tmp, struct task_struct, run_list);

if (can_schedule(p, this_cpu)) {

int weight = goodness(p, this_cpu, prev->active_mm);

if (weight > c)

c = weight, next = p;

}

}

/* Do we need to re-calculate counters? */

if (unlikely(!c)) {

struct task_struct *p;

spin_unlock_irq(&runqueue_lock);

read_lock(&tasklist_lock);

for_each_task(p)

p->counter = (p->counter >> 1) + NICE_TO_TICKS(p->nice);

read_unlock(&tasklist_lock);

spin_lock_irq(&runqueue_lock);

goto repeat_schedule;

}

/*

* from this point on nothing can prevent us from

* switching to the next task, save this fact in

* sched_data.

*/

sched_data->curr = next;

task_set_cpu(next, this_cpu);

spin_unlock_irq(&runqueue_lock);

if (unlikely(prev == next)) {

/* We won't go through the normal tail, so do this by hand */

prev->policy &= ~SCHED_YIELD;

goto same_process;

}

#ifdef CONFIG_SMP

/*

* maintain the per-process 'last schedule' value.

* (this has to be recalculated even if we reschedule to

* the same process) Currently this is only used on SMP,

* and it's approximate, so we do not have to maintain

* it while holding the runqueue spinlock.

*/

sched_data->last_schedule = get_cycles();

/*

* We drop the scheduler lock early (it's a global spinlock),

* thus we have to lock the previous process from getting

* rescheduled during switch_to().

*/

#endif /* CONFIG_SMP */

kstat.context_swtch++;

/*

* there are 3 processes which are affected by a context switch:

*

* prev == .... ==> (last => next)

*

* It's the 'much more previous' 'prev' that is on next's stack,

* but prev is set to (the just run) 'last' process by switch_to().

* This might sound slightly confusing but makes tons of sense.

*/

prepare_to_switch();

{

struct mm_struct *mm = next->mm;

struct mm_struct *oldmm = prev->active_mm;

if (!mm) {

if (next->active_mm) BUG();

next->active_mm = oldmm;

atomic_inc(&oldmm->mm_count);

enter_lazy_tlb(oldmm, next, this_cpu);

} else {

if (next->active_mm != mm) BUG();

switch_mm(oldmm, mm, next, this_cpu);

}

if (!prev->mm) {

prev->active_mm = NULL;

mmdrop(oldmm);

}

}

/*

* This just switches the register state and the

* stack.

*/

switch_to(prev, next, prev);

__schedule_tail(prev);

same_process:

reacquire_kernel_lock(current);

if (current->need_resched)

goto need_resched_back;

return;

}

相关函数修改:

1. 唤醒函数整合, 添加了参数有效性代码

static inline int try_to_wake_up(struct task_struct * p, int synchronous)

{

unsigned long flags;

int success = 0;

/*

* We want the common case fall through straight, thus the goto.

*/

spin_lock_irqsave(&runqueue_lock, flags);

p->state = TASK_RUNNING;

if (task_on_runqueue(p))

goto out;

add_to_runqueue(p);

if (!synchronous || !(p->cpus_allowed & (1 << smp_processor_id())))

reschedule_idle(p);

success = 1;

out:

spin_unlock_irqrestore(&runqueue_lock, flags);

return success;

}

inline int wake_up_process(struct task_struct * p)

{

return try_to_wake_up(p, 0);

}

oid __wake_up(wait_queue_head_t *q, unsigned int mode, int nr)

{

if (q) {

unsigned long flags;

wq_read_lock_irqsave(&q->lock, flags);

__wake_up_common(q, mode, nr, 0);

wq_read_unlock_irqrestore(&q->lock, flags);

}

}

void __wake_up_sync(wait_queue_head_t *q, unsigned int mode, int nr)

{

if (q) {

unsigned long flags;

wq_read_lock_irqsave(&q->lock, flags);

__wake_up_common(q, mode, nr, 1);

wq_read_unlock_irqrestore(&q->lock, flags);

}

}