I: Preface

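I have been swamped lately. I really wanted to write up notes on the Kafka I have been learning, but production work kept getting in the way. Over the past few days I put in some overtime to write them, so here is a record of what I learned.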

II: Introduction to Kafka

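Kafka is a distributed publish-subscribe messaging system written in Scala that later became part of the Apache project. Kafka is a distributed, partitioned, multi-subscriber, replicated, persistent log service.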

III: Features of Kafka

1: Distributed

Kafka's producers, consumers and brokers are all distributed and can be scaled out horizontally without downtime.

2: Persistence

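Kafka persists its log to disk. Writing messages to disk, combined with its replication mechanism, protects against data loss. A topic usually has several partitions spread across different servers, and each partition can have several replicas. This improves parallelism and reliability at the same time: more partitions mean more consumers can read the topic in parallel, and replicas keep a copy of the data available if one server fails.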

3: High throughput

Kafka reads and writes the disk sequentially rather than randomly, which greatly speeds up read and write requests.

IV: Core concepts of Kafka

Producer: the producer of messages.

Consumer: the consumer of messages.

Consumer Group: a group of consumers that can consume the messages in a topic's partitions in parallel.

Broker: a single server in a Kafka cluster is called a broker.

Topic: the category of messages Kafka handles; each category of messages is a topic.

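Partition: a partition of a topic. A topic can be split into several partitions, and each partition is an ordered queue: messages are appended in the order they are received, and every message in a partition is assigned a sequential id (the offset).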

Producers: the producers of messages and data. Publishing messages to a Kafka topic is the job of producers.

Consumers: the consumers of messages and data. Subscribing to topics and processing the messages published to them is the job of consumers.
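The Topic / Partition / offset relationship above can be sketched in a few lines of Python. This is a toy in-memory model with obvious simplifications (no disk, no replication); Topic and Partition are hypothetical classes written for illustration, not part of any Kafka client.

```python
class Partition:
    """Hypothetical in-memory partition: an append-only, ordered list of messages."""

    def __init__(self):
        self._log = []  # position in this list == offset of the message

    def append(self, message):
        """Append at the tail and return the offset assigned to the message."""
        self._log.append(message)
        return len(self._log) - 1

    def read(self, offset, max_messages=10):
        """Return up to max_messages (offset, message) pairs starting at offset."""
        end = min(offset + max_messages, len(self._log))
        return [(o, self._log[o]) for o in range(offset, end)]


class Topic:
    """Hypothetical topic: a name plus a fixed number of independent partitions."""

    def __init__(self, name, num_partitions):
        self.name = name
        self.partitions = [Partition() for _ in range(num_partitions)]


topic = Topic("first_topic", num_partitions=3)
p0 = topic.partitions[0]
print([p0.append(m) for m in ("a", "b", "c")])  # [0, 1, 2]
print(p0.read(1))                               # [(1, 'b'), (2, 'c')]
print(topic.partitions[1].append("x"))          # 0: offsets are per partition
```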

4.1 Kafka producers

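In Kafka, producers send messages to a topic. A producer can decide which partition of the topic a message goes to, either through configuration or by specifying the partition explicitly in the API. Producers also support asynchronous batch sending, which improves throughput: messages are buffered first and then sent to the topic in batches, reducing network I/O.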
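A minimal sketch of the producer-side choices described above, assuming a message-count batch threshold for simplicity. choose_partition and BatchingProducer are hypothetical names; zlib.crc32 only stands in for Kafka's own hash (the Java client's default partitioner uses murmur2), so the partition numbers it produces will not match a real cluster.

```python
import itertools
import zlib
from collections import defaultdict


def choose_partition(key, num_partitions, rr_counter):
    """Keyed messages: hash the key; unkeyed messages: rotate round-robin.

    zlib.crc32 is a stand-in hash, not the hash a real Kafka client uses.
    """
    if key is not None:
        return zlib.crc32(key.encode("utf-8")) % num_partitions
    return next(rr_counter) % num_partitions


class BatchingProducer:
    """Hypothetical producer that buffers messages per partition and sends a
    partition's buffer as one batch once it holds batch_size messages."""

    def __init__(self, num_partitions, batch_size, send_fn):
        self.num_partitions = num_partitions
        self.batch_size = batch_size
        self.send_fn = send_fn            # called as send_fn(partition, messages)
        self.buffers = defaultdict(list)  # partition -> pending messages
        self._rr = itertools.count()

    def send(self, value, key=None, partition=None):
        # An explicitly chosen partition wins over the partitioner.
        if partition is None:
            partition = choose_partition(key, self.num_partitions, self._rr)
        self.buffers[partition].append(value)
        if len(self.buffers[partition]) >= self.batch_size:
            self.flush(partition)

    def flush(self, partition=None):
        """Send the pending messages of one partition, or of all partitions."""
        targets = [partition] if partition is not None else list(self.buffers)
        for p in targets:
            if self.buffers[p]:
                self.send_fn(p, self.buffers[p])  # one "network request" per batch
                self.buffers[p] = []


sent = []
producer = BatchingProducer(3, batch_size=2, send_fn=lambda p, msgs: sent.append((p, msgs)))
for i in range(5):
    producer.send(f"m{i}", partition=1)
producer.flush()
print(sent)  # [(1, ['m0', 'm1']), (1, ['m2', 'm3']), (1, ['m4'])]
```

Hashing a key sends every message with that key to the same partition, which is what makes the per-partition ordering guarantee in 4.2 usable in practice.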

4.2 Kafka consumers

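In Kafka, within one consumer group each partition is consumed by only one consumer, but one consumer can consume several partitions. If a group contains several consumers, Kafka spreads the topic's partitions across them (for example round-robin) to balance the load. If consumers belong to different consumer groups, each group receives every message: this works like a publish-subscribe model, where consumers in different groups can consume the same partition at the same time.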
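What a group actually distributes is partitions, not individual messages. The sketch below uses a hypothetical round-robin helper to show the two rules from the paragraph above: inside one group each partition has exactly one owner, and every group independently receives all partitions. The ZooKeeper-based consumer picks its real strategy with partition.assignment.strategy (range by default, or roundrobin).

```python
def assign_round_robin(partitions, consumers):
    """Deal the partitions of a topic out to the consumers of ONE group.

    Every partition goes to exactly one consumer in the group; a consumer may
    get several partitions, or none if there are more consumers than partitions.
    """
    ordered = sorted(consumers)
    assignment = {c: [] for c in ordered}
    for i, p in enumerate(sorted(partitions)):
        assignment[ordered[i % len(ordered)]].append(p)
    return assignment


partitions = [0, 1, 2]
# Two groups reading the same topic: each group independently gets every partition.
print(assign_round_robin(partitions, ["a1", "a2"]))              # {'a1': [0, 2], 'a2': [1]}
print(assign_round_robin(partitions, ["b1"]))                    # {'b1': [0, 1, 2]}
print(assign_round_robin(partitions, ["c1", "c2", "c3", "c4"]))  # c4 gets []
```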

Note that Kafka only guarantees that messages within a single partition are consumed in order; it does not guarantee any ordering across partitions.
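A small simulation of why ordering holds per partition only: a consumer reading two partitions interleaves them, so messages keep their relative order inside each partition but not globally. The alternating fetch order in consume_alternating (a hypothetical helper) is an assumption; in practice the interleaving depends on timing.

```python
def consume_alternating(by_partition):
    """Return (partition, message) pairs as a consumer might see them if it
    alternated between partitions one message at a time."""
    cursors = {p: 0 for p in by_partition}
    seen = []
    while any(cursors[p] < len(msgs) for p, msgs in by_partition.items()):
        for p, msgs in by_partition.items():
            if cursors[p] < len(msgs):
                seen.append((p, msgs[cursors[p]]))
                cursors[p] += 1
    return seen


# Produced in the order e1, e2, e3: e1 and e2 went to partition 0, e3 to partition 1.
by_partition = {0: ["e1", "e2"], 1: ["e3"]}
seen = consume_alternating(by_partition)
print([m for _, m in seen])            # ['e1', 'e3', 'e2']: global order is lost
print([m for p, m in seen if p == 0])  # ['e1', 'e2']: order inside partition 0 holds
```

Messages that must stay in order are therefore usually given the same key so that they land in one partition.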

4.3 Kafka brokers

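You can think of one machine as one broker. The messages we send are appended to the broker's log, and the broker buffers them first. Depending on our configuration, once the number of buffered messages or the time since the last flush reaches the configured value (log.flush.interval.messages and log.flush.interval.ms), the broker flushes them to disk in one go, which reduces the number of disk I/O calls per message.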
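The flush rule can be sketched as follows, assuming two thresholds that mirror log.flush.interval.messages and log.flush.interval.ms. FlushingLog is a hypothetical class: the disk list only stands in for an fsync, the clock is injectable so the example is deterministic, and checking the time only on append is a simplification (the broker also runs a periodic flush scheduler).

```python
import time


class FlushingLog:
    """Hypothetical broker log: appends go to a buffer that is flushed when
    either threshold is reached (message count or elapsed time)."""

    def __init__(self, flush_messages=10000, flush_ms=1000, clock=time.monotonic):
        self.flush_messages = flush_messages  # like log.flush.interval.messages
        self.flush_ms = flush_ms              # like log.flush.interval.ms
        self.clock = clock                    # returns seconds
        self.buffer = []
        self.disk = []                        # stands in for data that has been fsync'ed
        self.flush_count = 0
        self.last_flush = clock()

    def append(self, message):
        self.buffer.append(message)
        elapsed_ms = (self.clock() - self.last_flush) * 1000
        if len(self.buffer) >= self.flush_messages or elapsed_ms >= self.flush_ms:
            self.flush()

    def flush(self):
        self.disk.extend(self.buffer)  # one "disk write" for the whole buffer
        self.buffer.clear()
        self.flush_count += 1
        self.last_flush = self.clock()


now = [0.0]  # fake clock, in seconds
log = FlushingLog(flush_messages=3, flush_ms=1000, clock=lambda: now[0])
for i in range(7):
    log.append(i)
print(log.flush_count, log.disk, log.buffer)  # 2 [0, 1, 2, 3, 4, 5] [6]
now[0] = 2.0  # two seconds later, the time threshold fires on the next append
log.append(7)
print(log.flush_count, log.buffer)  # 3 []
```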

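Kafka brokers are stateless in the sense that they do not track consumption: how far each consumer has read (its offset) is recorded on the consumer side (the console consumer used later keeps it in ZooKeeper), not in the broker. The data on a broker still matters, though: if a broker goes down, messages that exist only on that broker (not replicated, or not yet flushed) can be lost. Messages are deleted automatically once the configured retention time has passed, whether or not they have been consumed; the default is 7 days (log.retention.hours=168).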
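The retention rule can be sketched as a function that decides which segment files to delete. It is a simplified model of log.retention.hours (default 168) and log.retention.bytes (default -1, meaning no size limit); Segment and segments_to_delete are hypothetical names, and always keeping the newest segment is a simplification of the broker's behaviour. Nothing in it looks at whether a message has been consumed.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    base_offset: int        # offset of the first message in the segment
    size_bytes: int
    last_modified_s: float  # time of the newest write, in seconds


def segments_to_delete(segments, now_s, retention_hours=168, retention_bytes=-1):
    """Base offsets of the segments a retention check would delete.

    Consumption plays no role. The newest (active) segment is always kept.
    """
    ordered = sorted(segments, key=lambda s: s.base_offset)
    closed = ordered[:-1]
    # Time-based rule: delete segments older than the retention period.
    doomed = {s.base_offset for s in closed
              if now_s - s.last_modified_s > retention_hours * 3600}
    # Size-based rule (-1 disables it): drop the oldest segments as long as the
    # remaining segments do not fall below retention_bytes.
    if retention_bytes >= 0:
        total = sum(s.size_bytes for s in ordered if s.base_offset not in doomed)
        for s in closed:
            if s.base_offset in doomed:
                continue
            if total - s.size_bytes < retention_bytes:
                break
            doomed.add(s.base_offset)
            total -= s.size_bytes
    return sorted(doomed)


DAY = 24 * 3600
segs = [Segment(0, 500, 0), Segment(100, 500, 6 * DAY), Segment(200, 500, 8 * DAY)]
print(segments_to_delete(segs, now_s=8 * DAY))                       # [0]
print(segments_to_delete(segs, now_s=8 * DAY, retention_bytes=500))  # [0, 100]
print(segments_to_delete(segs, now_s=8 * DAY, retention_hours=24))   # [0, 100]
```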

4.4 Kafka messages

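In Kafka, a message consists of several parts: offset is the message's offset, MessageSize is its size, and data is the actual content. While writing messages, Kafka also adds an index entry every so many bytes. When a consumer needs a specific message, Kafka binary-searches the index for the nearest entry and reads forward from there to the message you asked for.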
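The offset lookup can be sketched with Python's bisect module. SparseIndexedSegment is a hypothetical class: the index interval plays the role of log.index.interval.bytes, the 12-byte per-record overhead (8-byte offset plus 4-byte MessageSize) is an assumption of the sketch, and an in-memory list stands in for the .log file.

```python
import bisect


class SparseIndexedSegment:
    """Hypothetical log segment with a sparse offset index."""

    RECORD_OVERHEAD = 12  # assumed: 8-byte offset + 4-byte MessageSize per record

    def __init__(self, index_interval_bytes=4096):
        self.index_interval_bytes = index_interval_bytes  # like log.index.interval.bytes
        self.records = []        # (offset, data); stands in for the .log file
        self.index_offsets = []  # sorted offsets that got an index entry
        self.index_slots = []    # where each indexed offset sits in self.records
        self._bytes_since_index = 0

    def append(self, offset, data):
        # Index the first record, then one record roughly every index_interval_bytes.
        if not self.records or self._bytes_since_index >= self.index_interval_bytes:
            self.index_offsets.append(offset)
            self.index_slots.append(len(self.records))
            self._bytes_since_index = 0
        self.records.append((offset, data))
        self._bytes_since_index += self.RECORD_OVERHEAD + len(data)

    def find(self, target_offset):
        # 1) Binary search for the last index entry with offset <= target_offset.
        i = bisect.bisect_right(self.index_offsets, target_offset) - 1
        if i < 0:
            return None
        # 2) Scan forward from that entry until the offset is found or passed.
        for slot in range(self.index_slots[i], len(self.records)):
            offset, data = self.records[slot]
            if offset == target_offset:
                return data
            if offset > target_offset:
                break
        return None


seg = SparseIndexedSegment(index_interval_bytes=100)
for off in range(50):
    seg.append(off, f"msg-{off:02d}".encode())  # 18 bytes per record
print(seg.index_offsets[:3])  # [0, 6, 12]
print(seg.find(37))           # b'msg-37'
```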

V: Setting up a pseudo-distributed environment

[root@hadoop config]# cat server.properties
# see kafka.server.KafkaConfig for additional details and defaults

############################# Server Basics #############################

# The id of the broker. This must be set to a unique integer for each broker.
# broker.id: each Kafka process is one instance, so the id must not repeat
broker.id=0

############################# Socket Server Settings #############################

listeners=PLAINTEXT://:9092

# The port the socket server listens on
port=9092

# Hostname the broker will bind to. If not set, the server will bind to all interfaces
# In a pseudo-distributed setup this must not be set to localhost
host.name=ip

# Hostname the broker will advertise to producers and consumers. If not set, it uses the
# value for "host.name" if configured. Otherwise, it will use the value returned from
# java.net.InetAddress.getCanonicalHostName().
advertised.host.name=ip

# The port to publish to ZooKeeper for clients to use. If this is not set,
# it will publish the same port that the broker binds to.
#advertised.port=<port accessible by clients>

# The number of threads handling network requests
# Number of threads the broker uses to process requests; usually the number of CPU cores
num.network.threads=3

# The number of threads doing disk I/O
# Number of threads the broker uses for disk I/O
num.io.threads=8

# The send buffer (SO_SNDBUF) used by the socket server
socket.send.buffer.bytes=102400

# The receive buffer (SO_RCVBUF) used by the socket server
socket.receive.buffer.bytes=102400

# The maximum size of a request that the socket server will accept (protection against OOM)
socket.request.max.bytes=104857600

############################# Log Basics #############################

# A comma separated list of directories under which to store log files
log.dirs=/kafka_2.11-0.9.0.1/kafka-logs-1

# The default number of log partitions per topic. More partitions allow greater
# parallelism for consumption, but this will also result in more files across
# the brokers.
# Default number of partitions per topic
num.partitions=2

# The number of threads per data directory to be used for log recovery at startup and flushing at shutdown.
# This value is recommended to be increased for installations with data dirs located in RAID array.
num.recovery.threads.per.data.dir=1

############################# Log Flush Policy #############################

# Messages are immediately written to the filesystem but by default we only fsync() to sync
# the OS cache lazily. The following configurations control the flush of data to disk.
# There are a few important trade-offs here:
#    1. Durability: Unflushed data may be lost if you are not using replication.
#    2. Latency: Very large flush intervals may lead to latency spikes when the flush does occur as there will be a lot of data to flush.
#    3. Throughput: The flush is generally the most expensive operation, and a small flush interval may lead to excessive seeks.
# The settings below allow one to configure the flush policy to flush data after a period of time or
# every N messages (or both). This can be done globally and overridden on a per-topic basis.

# The number of messages to accept before forcing a flush of data to disk
#log.flush.interval.messages=10000

# The maximum amount of time a message can sit in a log before we force a flush
#log.flush.interval.ms=1000

############################# Log Retention Policy #############################

# The following configurations control the disposal of log segments. The policy can
# be set to delete segments after a period of time, or after a given size has accumulated.
# A segment will be deleted whenever *either* of these criteria are met. Deletion always happens
# from the end of the log.

# The minimum age of a log file to be eligible for deletion
# How long the broker keeps log data (24 hours here; Kafka's own default is 168)
log.retention.hours=24
log.cleaner.enable = true
log.cleanup.policy=delete

# A size-based retention policy for logs. Segments are pruned from the log as long as the remaining
# segments don't drop below log.retention.bytes.
# Maximum size of each topic partition's log; older segments are deleted once it is exceeded
log.retention.bytes=1073741824

# The maximum size of a log segment file. When this size is reached a new log segment will be created.
# A topic partition is stored as a series of segment files; this controls the size of each segment
log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can be deleted according
# to the retention policies
log.retention.check.interval.ms=300000

############################# Zookeeper #############################

# Zookeeper connection string (see zookeeper docs for details).
# This is a comma separated host:port pairs, each corresponding to a zk
# server. e.g. "127.0.0.1:3000,127.0.0.1:3001,127.0.0.1:3002".
# You can also append an optional chroot string to the urls to specify the
# root directory for all kafka znodes.
zookeeper.connect=ip:2181,ip:2182,ip:2183

# Timeout in ms for connecting to zookeeper
zookeeper.connection.timeout.ms=6000

For a pseudo-distributed setup, simply copy server.properties a few times (here server-1.properties and server-2.properties) and start each copy. Note that broker.id, the listening port and log.dirs must be different in every copy.
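Since the three brokers differ only in a few settings, the copies can be derived from one base file. A minimal sketch, assuming a simple key=value format without line continuations or escapes; parse_properties, derive_broker_config and check_unique are hypothetical helpers, ports 9092 to 9094 match the --broker-list used in the producer test, and the kafka-logs-2 / kafka-logs-3 directory names are made up for illustration.

```python
def parse_properties(text):
    """Minimal .properties reader: key=value lines, '#' comment lines ignored."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        props[key.strip()] = value.strip()
    return props


def derive_broker_config(base_text, broker_id, port, log_dir):
    """Copy a base server.properties, overriding the per-broker settings."""
    props = parse_properties(base_text)
    props.update({
        "broker.id": str(broker_id),
        "port": str(port),
        "listeners": f"PLAINTEXT://:{port}",
        "log.dirs": log_dir,
    })
    return props


def check_unique(configs, keys=("broker.id", "port", "log.dirs")):
    """Raise ValueError if two broker configs share a value that must be unique."""
    for key in keys:
        values = [c.get(key) for c in configs]
        dupes = {v for v in values if values.count(v) > 1}
        if dupes:
            raise ValueError(f"duplicate {key}: {sorted(dupes)}")


base = """broker.id=0
port=9092
listeners=PLAINTEXT://:9092
log.dirs=/kafka_2.11-0.9.0.1/kafka-logs-1
zookeeper.connect=ip:2181,ip:2182,ip:2183
"""
configs = [
    derive_broker_config(base, i, 9092 + i, f"/kafka_2.11-0.9.0.1/kafka-logs-{i + 1}")
    for i in range(3)
]
check_unique(configs)  # no error: ids 0 to 2, ports 9092 to 9094, distinct log dirs
print([c["port"] for c in configs])  # ['9092', '9093', '9094']
```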

After configuring Kafka, start ZooKeeper first (one instance per config file):

bin/zkServer.sh start zoo.cfg

bin/zkServer.sh start zoo2.cfg

bin/zkServer.sh start zoo3.cfg

Then start Kafka:

bin/kafka-server-start.sh config/server.properties &

bin/kafka-server-start.sh config/server-1.properties &

bin/kafka-server-start.sh config/server-2.properties &

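Check that everything started (three Kafka processes and three QuorumPeerMain ZooKeeper processes should be listed):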

[root@hadoop zookeeper-3.4.7]# jps
2717 Kafka
2285 QuorumPeerMain
2679 Kafka
2696 Kafka
2158 QuorumPeerMain
2622 ZooKeeperMain
2199 QuorumPeerMain
2908 Jps

OK! Your local pseudo-distributed Kafka environment is now set up. Let's take a quick look at it in action.

Test the Kafka cluster with Kafka's command-line tools:

1: Create a topic

bin/kafka-topics.sh --create --zookeeper 192.168.8.88:2181 --replication-factor 3 --partitions 3 --topic first_topic

2: Start a consumer

bin/kafka-console-consumer.sh --zookeeper 192.168.8.88:2181 --topic first_topic --from-beginning

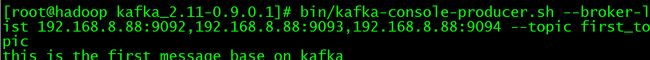

3: Start a producer

bin/kafka-console-producer.sh --broker-list 192.168.8.88:9092,192.168.8.88:9093,192.168.8.88:9094 --topic first_topic

After running the steps above, you will see that every message typed into the producer is consumed by the consumer immediately.

VI: Kafka's node structure in ZooKeeper

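ZooKeeper plays a crucial role in Kafka: information about Kafka's brokers, consumers and so on is stored in ZooKeeper nodes, and ZooKeeper also underpins Kafka's dynamic load balancing. Let's go through these one by one.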

6.1: Broker registration

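In Kafka, whenever a broker starts, it stores its information in a ZooKeeper node (/brokers/ids/<broker.id>). This is an ephemeral node (if you are not familiar with ZooKeeper node types, see the official ZooKeeper documentation), so when a broker goes down, its ephemeral node disappears.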
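The register-and-disappear behaviour of ephemeral nodes can be modelled with a toy store. FakeZooKeeper is a hypothetical stand-in written for this example, not the ZooKeeper or kazoo client API: each ephemeral node remembers the session that created it, and closing that session removes it, which is why /brokers/ids/2 further down carries a non-zero ephemeralOwner.

```python
class FakeZooKeeper:
    """Toy stand-in for ZooKeeper's node store (not the ZooKeeper or kazoo API)."""

    def __init__(self):
        self.nodes = {}  # path -> (data, owning session id, or None if persistent)

    def create(self, path, data, session=None, ephemeral=False):
        if path in self.nodes:
            raise KeyError(f"node already exists: {path}")
        self.nodes[path] = (data, session if ephemeral else None)

    def children(self, parent):
        """Names of the direct children of parent that exist in this toy store."""
        prefix = parent.rstrip("/") + "/"
        return sorted(p[len(prefix):] for p in self.nodes
                      if p.startswith(prefix) and "/" not in p[len(prefix):])

    def close_session(self, session):
        # Ending a session deletes every ephemeral node it created.
        self.nodes = {p: v for p, v in self.nodes.items() if v[1] != session}


zk = FakeZooKeeper()
for broker_id in range(3):
    zk.create(f"/brokers/ids/{broker_id}", b"broker info", session=f"s{broker_id}", ephemeral=True)
zk.create("/brokers/topics/first_topic", b"partition map")  # persistent node
print(zk.children("/brokers/ids"))  # ['0', '1', '2']
zk.close_session("s2")              # broker 2 goes down and its session expires
print(zk.children("/brokers/ids"))  # ['0', '1']
```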

[zk: ip:2181(CONNECTED) 0] ls /brokers
[seqid, topics, ids]
[zk: ip:2181(CONNECTED) 1] ls /brokers/topics
[testKafka, __consumer_offsets, test, consumer_offsets, myfirstTopic, first_topic, kafka_first_topic]
[zk: ip:2181(CONNECTED) 2] ls /brokers/ids
[2, 1, 0]

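As you can see, /brokers/ids lists the brokers currently registered and /brokers/topics lists the topics in the cluster. The details of each broker can be fetched with get: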

[zk: ip:2181(CONNECTED) 0] get /brokers/ids/2

{"jmx_port":-1,"timestamp":"1461828196728","endpoints":["PLAINTEXT://ip:9094"],"host":"ip","version":2,"port":9094}

cZxid = 0x490000006b

ctime = Thu Apr 28 19:23:16 GMT+12:00 2016

mZxid = 0x490000006b

mtime = Thu Apr 28 19:23:16 GMT+12:00 2016

pZxid = 0x490000006b

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x3545bbe83450003

dataLength = 135

numChildren = 0

[zk: ip:2181(CONNECTED) 1]

This contains each broker's IP, port, version and other information. The partitions of each topic are spread across different brokers:

[zk: ip:2181(CONNECTED) 1] get /brokers/topics/kafka_first_topic

{"version":1,"partitions":{"2":[0,1,2],"1":[2,0,1],"0":[1,2,0]}}

cZxid = 0x4100000236

ctime = Sat Apr 16 04:48:10 GMT+12:00 2016

mZxid = 0x4100000236

mtime = Sat Apr 16 04:48:10 GMT+12:00 2016

pZxid = 0x410000023a

cversion = 1

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 64

numChildren = 1

[zk: 192.168.8.88:2181(CONNECTED) 2]

This means the topic kafka_first_topic has 3 partitions, and each partition has 3 replicas, placed on the brokers with ids 0, 1 and 2.
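The replica lists in this JSON can also be read programmatically. A small sketch using only the standard json module; describe_assignment is a hypothetical helper. It relies on the convention that the first broker in each list is the partition's preferred leader, which here spreads leadership over all three brokers; the leader actually in charge at a given moment is what kafka-topics.sh --describe reports.

```python
import json


def describe_assignment(znode_data):
    """Turn /brokers/topics/<topic> JSON into (partition, replicas, preferred_leader) rows."""
    partitions = json.loads(znode_data)["partitions"]
    rows = []
    for partition in sorted(partitions, key=int):
        replicas = partitions[partition]
        # The first broker in a replica list is that partition's preferred leader.
        rows.append((int(partition), replicas, replicas[0]))
    return rows


data = '{"version":1,"partitions":{"2":[0,1,2],"1":[2,0,1],"0":[1,2,0]}}'
for partition, replicas, leader in describe_assignment(data):
    print(f"partition {partition}: replicas on brokers {replicas}, preferred leader {leader}")
# partition 0: replicas on brokers [1, 2, 0], preferred leader 1
# partition 1: replicas on brokers [2, 0, 1], preferred leader 2
# partition 2: replicas on brokers [0, 1, 2], preferred leader 0
```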

6.2 Consumer registration

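As mentioned earlier, consumers belong to consumer groups. Each consumer group has a unique ID (the console consumer generates one automatically, such as console-consumer-85351), and each consumer in the group is also assigned its own ID: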

[zk: 192.168.8.88:2181(CONNECTED) 40] ls /consumers/console-consumer-85351/ids
[console-consumer-85351_hadoop-1461831147033-3265fdb3]

This means the consumer group console-consumer-85351 contains one consumer with the ID shown above.

Which consumer in a group is consuming which partition is recorded in ZooKeeper like this:

[zk: ip:2181(CONNECTED) 4] get /consumers/console-consumer-85351/owners/kafka_first_topic/2
console-consumer-85351_hadoop-1461831147033-3265fdb3-0
cZxid = 0x490000010f
ctime = Thu Apr 28 20:12:28 GMT+12:00 2016
mZxid = 0x490000010f
mtime = Thu Apr 28 20:12:28 GMT+12:00 2016
pZxid = 0x490000010f
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x1545bbe6fb80015
dataLength = 54
numChildren = 0

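This shows that in consumer group console-consumer-85351, partition 2 of topic kafka_first_topic is being consumed by the consumer console-consumer-85351_hadoop-1461831147033-3265fdb3-0.

Each topic has several partitions, and ZooKeeper stores the consumed offset of each partition, so a consumer that restarts can continue from the value recorded in that node: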

[zk: ip:2181(CONNECTED) 37] get /consumers/console-consumer-85351/offsets/kafka_first_topic/0
38
cZxid = 0x4900000117
ctime = Thu Apr 28 20:13:27 GMT+12:00 2016
mZxid = 0x4900000117
mtime = Thu Apr 28 20:13:27 GMT+12:00 2016
pZxid = 0x4900000117
cversion = 0
dataVersion = 0
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 2
numChildren = 0

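This means consumption of partition 0 has reached offset 38. Note that this offsets node is persistent (its ephemeralOwner is 0x0), which is what allows the consumer to resume after a restart. The consumer's registration nodes under ids and owners, by contrast, are ephemeral (the owners node above has a non-zero ephemeralOwner): when I stop the consumer, you will find that it no longer appears in the consumer group.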
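Resuming from the offsets node can be sketched as below. OffsetStore and consume are hypothetical; the path layout follows the nodes shown above, and the convention that the stored value is the offset of the next message to read is an assumption of this sketch.

```python
class OffsetStore:
    """Toy model of the persistent /consumers/<group>/offsets/<topic>/<partition> nodes."""

    def __init__(self):
        self.offsets = {}  # znode path -> stored offset

    @staticmethod
    def path(group, topic, partition):
        return f"/consumers/{group}/offsets/{topic}/{partition}"

    def commit(self, group, topic, partition, offset):
        self.offsets[self.path(group, topic, partition)] = offset

    def fetch(self, group, topic, partition, default=0):
        return self.offsets.get(self.path(group, topic, partition), default)


def consume(messages, store, group, topic, partition, max_messages):
    """Read from the stored offset, then store the offset of the next message to read."""
    start = store.fetch(group, topic, partition)
    batch = messages[start:start + max_messages]
    store.commit(group, topic, partition, start + len(batch))
    return batch


partition_log = [f"msg-{i}" for i in range(50)]
store = OffsetStore()
g, t = "console-consumer-85351", "kafka_first_topic"
print(consume(partition_log, store, g, t, 0, 38)[-1])  # msg-37
# The consumer now stops and restarts: its ephemeral ids/owners nodes are gone,
# but the persistent offsets node still says where to resume.
print(store.fetch(g, t, 0))                       # 38
print(consume(partition_log, store, g, t, 0, 3))  # ['msg-38', 'msg-39', 'msg-40']
```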

6.3 controller

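The ZooKeeper node /controller stores which broker is currently the controller: a single broker elected to manage the cluster, for example to elect new partition leaders when brokers fail (you can think of it as the cluster's leader). The output below shows that the controller is the broker with id 0: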

[zk: ip:2181(CONNECTED) 33] get /controller

{"version":1,"brokerid":0,"timestamp":"1461828122648"}

cZxid = 0x4900000007

ctime = Thu Apr 28 19:22:02 GMT+12:00 2016

mZxid = 0x4900000007

mtime = Thu Apr 28 19:22:02 GMT+12:00 2016

pZxid = 0x4900000007

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x2545bbe6fda0000

dataLength = 54

numChildren = 0

6.4: controller_epoch

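The /controller_epoch node in ZooKeeper stores how many times a controller has been elected. Each time a new controller is elected, for example because the current controller broker went down or the whole cluster was restarted, the value goes up by 1. Simply adding another broker while the current controller stays alive does not trigger a new election: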

[zk: ip:2181(CONNECTED) 2] get /controller_epoch
72
cZxid = 0x3700000014
ctime = Tue Apr 05 19:09:47 GMT+12:00 2016
mZxid = 0x4900000008
mtime = Thu Apr 28 19:22:02 GMT+12:00 2016
pZxid = 0x3700000014
cversion = 0
dataVersion = 71
aclVersion = 0
ephemeralOwner = 0x0
dataLength = 2
numChildren = 0
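Controller election and the epoch counter can be modelled as a race to create the ephemeral /controller node. ControllerElection is a hypothetical toy; letting the lowest surviving broker id win is a simplification (in reality whichever broker creates the node first becomes controller). It also shows that only a change of controller bumps the epoch.

```python
class ControllerElection:
    """Toy model: brokers race to create the ephemeral /controller node; the
    winner becomes controller and /controller_epoch is incremented."""

    def __init__(self):
        self.controller = None  # broker id holding /controller, or None
        self.epoch = 0          # value of /controller_epoch

    def try_become_controller(self, broker_id):
        if self.controller is not None:
            return False        # node already exists: someone else is controller
        self.controller = broker_id
        self.epoch += 1
        return True

    def broker_died(self, broker_id, live_brokers):
        if broker_id != self.controller:
            return              # a non-controller broker leaving changes nothing here
        self.controller = None  # the ephemeral /controller node disappears
        for b in sorted(live_brokers):  # survivors race; lowest id "wins" in this toy
            if self.try_become_controller(b):
                break


e = ControllerElection()
for b in (0, 1, 2):
    e.try_become_controller(b)
print(e.controller, e.epoch)  # 0 1
e.broker_died(1, live_brokers=[0, 2])  # not the controller: epoch unchanged
print(e.controller, e.epoch)  # 0 1
e.broker_died(0, live_brokers=[1, 2])  # controller died: new election
print(e.controller, e.epoch)  # 1 2
```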

6.5 Dynamic load balancing

6.5.1 Consumer load balancing

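When a consumer registers, it uses ZooKeeper's watcher mechanism to watch the other consumers in its consumer group as well as the broker nodes (this is how the ZooKeeper-based consumer used in this article works).

As soon as a consumer in the group or a broker goes down, the consumers receive the watch event and trigger a consumer rebalance if needed.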
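A rebalance on membership change can be sketched as below, using a range-style assignment (the default strategy of the ZooKeeper-based consumer). range_assign and Group are hypothetical; the join and leave calls stand in for the watcher events fired when a consumer's ephemeral ids node is created or deleted.

```python
def range_assign(partitions, consumers):
    """Range-style assignment: sort both lists and give each consumer a contiguous
    block; the first len(partitions) % len(consumers) consumers get one extra."""
    ps, cs = sorted(partitions), sorted(consumers)
    per, extra = divmod(len(ps), len(cs))
    result, start = {}, 0
    for i, c in enumerate(cs):
        n = per + (1 if i < extra else 0)
        result[c] = ps[start:start + n]
        start += n
    return result


class Group:
    """Toy consumer group that rebalances whenever its membership changes."""

    def __init__(self, partitions):
        self.partitions = partitions
        self.members = set()
        self.assignment = {}

    def _rebalance(self):
        self.assignment = range_assign(self.partitions, self.members) if self.members else {}

    def join(self, consumer):   # e.g. a new ids node appeared
        self.members.add(consumer)
        self._rebalance()

    def leave(self, consumer):  # e.g. the consumer's ephemeral ids node was deleted
        self.members.discard(consumer)
        self._rebalance()


g = Group(partitions=[0, 1, 2])
g.join("c1")
g.join("c2")
print(g.assignment)  # {'c1': [0, 1], 'c2': [2]}
g.leave("c1")        # c1 crashed: the watcher fires and the group rebalances
print(g.assignment)  # {'c2': [0, 1, 2]}
```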

6.5.2 Producer load balancing

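In early Kafka (0.7), the producer registered ZooKeeper watchers on the broker nodes and on topics being added or removed, so that it could distribute messages across the brokers sensibly. Since 0.8 the producer no longer connects to ZooKeeper: it fetches cluster metadata (which broker leads each partition) from the brokers given in --broker-list and refreshes it when brokers or partition leaders change.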