How to Add a Compute Node to OpenStack

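Background:

The company already had OpenStack deployed: one controller node (controller) and one compute node (compute1). compute1 has a 6-core CPU with Hyper-Threading, 64 GB of RAM, and two 3 TB disks in RAID 1. (An odd configuration, I know. It was pieced together from whatever hardware was available.)

compute1 ran a little over 40 Windows 7 instances, mainly so that customer service colleagues could log in to the back-office web system and edit documents. Each instance had 2 vCPUs, 2 GB of RAM, and a 40 GB disk. After a while, several colleagues reported that their desktops felt sluggish. It was easy to guess that CPU and disk were the bottleneck, but I prefer to let data speak, so I added both OpenStack servers to Nagios and watched them for a while. Sure enough, iowait on compute1 frequently exceeded 20%, and the 15-minute load average was also over the limit, peaking above 20.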
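To put numbers on the guess above, here is a rough back-of-the-envelope sketch of how heavily compute1 was overcommitted. It assumes exactly 40 instances and that Hyper-Threading gives 2 logical CPUs per core; the `Host` class and `overcommit` function are hypothetical names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    physical_cores: int
    threads_per_core: int  # 2 when Hyper-Threading is enabled (assumption)
    ram_gb: int


def overcommit(host, instances, vcpus_each, ram_gb_each):
    """Return (vCPU ratio, RAM ratio) of allocated to physical resources."""
    logical_cpus = host.physical_cores * host.threads_per_core
    cpu_ratio = instances * vcpus_each / logical_cpus
    ram_ratio = instances * ram_gb_each / host.ram_gb
    return cpu_ratio, ram_ratio


# compute1 as described in the post; 40 instances is an approximation.
compute1 = Host(physical_cores=6, threads_per_core=2, ram_gb=64)
cpu_ratio, ram_ratio = overcommit(compute1, instances=40, vcpus_each=2, ram_gb_each=2)
print(f"vCPU overcommit: {cpu_ratio:.2f}x, RAM overcommit: {ram_ratio:.2f}x")
```

Under these assumptions the instances were allocated 80 vCPUs on 12 logical CPUs and 80 GB of RAM on a 64 GB host, which fits the sluggishness users reported.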

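An upgrade was unavoidable; the user experience had to improve. I came up with two options:

1. Upgrade compute1: add CPU cores and beef up the disks. The CPU part is easy, because the server still has an empty CPU socket, so buying one more CPU and plugging it in would do. The disks are harder. Add two more and build RAID 10? Wouldn't that mean reinstalling the system? It would certainly involve backing up data, and above all the service might have to be suspended.

2. Add another compute node. This option scales out the existing service without affecting it. The only catch is persuading my manager to approve one more server.

In the end I persuaded my manager to go with the second option. My argument was that a second node is also necessary as protection against compute1 going down.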

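Procedure (these steps are for the Liberty release; do not follow them blindly for other releases):

The new server has 12 cores with Hyper-Threading, 32 GB of RAM, and four 1 TB disks in RAID 10. I installed CentOS 7.0 and disabled SELinux. In my hosts file the machine is named compute2.

Set up the environment (compute2)

Install the EPEL repository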

yum install http://dl.fedoraproject.org/pub/epel/7/x86_64/e/epel-release-7-5.noarch.rpm

Install the OpenStack repository

yum install centos-release-openstack-liberty

Upgrade the system

yum upgrade

Install the OpenStack client

yum install python-openstackclient

Install the Nova compute service (compute2)

Install the Nova packages

yum install openstack-nova-compute sysfsutils

Edit /etc/nova/nova.conf

Configure RabbitMQ

[DEFAULT]
...
rpc_backend = rabbit

[oslo_messaging_rabbit]
...
rabbit_host = controller
rabbit_userid = openstack
rabbit_password = RABBIT_PASS

Configure Keystone authentication

[DEFAULT]
...
auth_strategy = keystone

[keystone_authtoken]
...
auth_uri = http://controller:5000
auth_url = http://controller:35357
auth_plugin = password
project_domain_id = default
user_domain_id = default
project_name = service
username = nova
password = NOVA_PASS

Configure this host's IP address (the address of the management network interface on compute2)

[DEFAULT]
...
my_ip = MANAGEMENT_INTERFACE_IP_ADDRESS

Configure the networking service

[DEFAULT]
...
network_api_class = nova.network.neutronv2.api.API
security_group_api = neutron
linuxnet_interface_driver = nova.network.linux_net.NeutronLinuxBridgeInterfaceDriver
firewall_driver = nova.virt.firewall.NoopFirewallDriver

Configure VNC

[vnc]
...
enabled = True
vncserver_listen = 0.0.0.0
vncserver_proxyclient_address = $my_ip
novncproxy_base_url = http://controller:6080/vnc_auto.html

Configure Glance

[glance]
...
host = controller

Configure the lock path

[oslo_concurrency]
...
lock_path = /var/lib/nova/tmp

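Choose QEMU or KVM

Check whether the CPU supports hardware virtualization:

egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 1 or greater, hardware acceleration is available and no configuration change is needed.

Otherwise, configure libvirt to use QEMU:

[libvirt]
...
virt_type = qemu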
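The decision above can be sketched in a few lines of Python. This is only an illustration of the rule, not part of the install procedure: `count_virt_flags` and `choose_virt_type` are hypothetical helper names, the sample `flags` lines are made up, and returning `kvm` for the accelerated case assumes that KVM is what Nova uses when the setting is left unchanged.

```python
import re


def count_virt_flags(cpuinfo_text):
    """Count lines mentioning vmx or svm, like `egrep -c '(vmx|svm)'`."""
    pattern = re.compile(r"vmx|svm")
    return sum(1 for line in cpuinfo_text.splitlines() if pattern.search(line))


def choose_virt_type(cpuinfo_text):
    """Return 'kvm' when hardware virtualization flags are present, else 'qemu'."""
    return "kvm" if count_virt_flags(cpuinfo_text) >= 1 else "qemu"


# Made-up excerpts standing in for the contents of /proc/cpuinfo.
sample_with_vmx = "flags\t\t: fpu vme de pse vmx ssse3\nflags\t\t: fpu vme de pse vmx ssse3\n"
sample_without = "flags\t\t: fpu vme de pse ssse3\n"

print(choose_virt_type(sample_with_vmx))
print(choose_virt_type(sample_without))
```

On a real host, the text would come from reading `/proc/cpuinfo` instead of the sample strings.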

Save /etc/nova/nova.conf
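With this many options spread over several sections, a typo or a forgotten key is easy to miss. The sketch below checks a nova.conf-style text for some of the options set in the steps above, using Python's standard `configparser`. The `REQUIRED` table and the `missing_options` function are hypothetical names chosen for this example, the list is not exhaustive, and the IP address in the sample is a placeholder.

```python
import configparser

# A subset of the options configured above (illustrative, not exhaustive).
REQUIRED = {
    "DEFAULT": ["rpc_backend", "auth_strategy", "my_ip"],
    "oslo_messaging_rabbit": ["rabbit_host", "rabbit_userid", "rabbit_password"],
    "keystone_authtoken": ["auth_uri", "auth_url", "username", "password"],
    "vnc": ["enabled", "vncserver_proxyclient_address"],
    "glance": ["host"],
    "oslo_concurrency": ["lock_path"],
}


def missing_options(conf_text, required=REQUIRED):
    """Return 'section/option' strings for required options that are absent."""
    # A dummy default_section makes [DEFAULT] behave like an ordinary section,
    # so its keys do not leak into the lookups for other sections.
    parser = configparser.ConfigParser(
        default_section="__unused__", interpolation=None, strict=False
    )
    parser.read_string(conf_text)
    missing = []
    for section, options in required.items():
        for option in options:
            if not (parser.has_section(section) and parser.has_option(section, option)):
                missing.append(f"{section}/{option}")
    return missing


# Deliberately incomplete sample; 10.0.0.32 is a placeholder address.
sample = """
[DEFAULT]
rpc_backend = rabbit
auth_strategy = keystone
my_ip = 10.0.0.32

[oslo_messaging_rabbit]
rabbit_host = controller
rabbit_userid = openstack
rabbit_password = RABBIT_PASS

[glance]
host = controller
"""

for item in missing_options(sample):
    print("missing:", item)
```

To check the real file, one could pass the contents of `/etc/nova/nova.conf` to `missing_options` instead of the sample string.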

Start the compute service

systemctl enable libvirtd.service openstack-nova-compute.service
systemctl start libvirtd.service openstack-nova-compute.service

Verify on the controller node

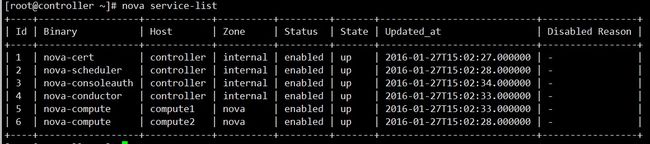

nova service-list

compute2 shows up! But don't celebrate yet, the job is not finished. Networking still needs to be configured.
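If this check needs to be scripted rather than eyeballed, the table printed by `nova service-list` can be parsed as plain text. The sketch below is a minimal, hypothetical parser (`parse_table` and `compute_hosts_up` are names made up here), and the sample table is a trimmed, illustrative stand-in for real output, which has more columns and services.

```python
def parse_table(text):
    """Parse an ASCII table with '|' separated cells into a list of dicts."""
    header = None
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not (line.startswith("|") and line.endswith("|")):
            continue  # skip +----+ border lines and blank lines
        cells = [cell.strip() for cell in line[1:-1].split("|")]
        if header is None:
            header = cells  # the first data line holds the column names
        else:
            rows.append(dict(zip(header, cells)))
    return rows


def compute_hosts_up(rows):
    """Return hosts whose nova-compute service reports state 'up'."""
    return [
        row["Host"]
        for row in rows
        if row.get("Binary") == "nova-compute" and row.get("State") == "up"
    ]


# Illustrative sample only; not captured from a real deployment.
sample_output = """
+----+----------------+------------+----------+---------+-------+
| Id | Binary         | Host       | Zone     | Status  | State |
+----+----------------+------------+----------+---------+-------+
| 1  | nova-scheduler | controller | internal | enabled | up    |
| 2  | nova-compute   | compute1   | nova     | enabled | up    |
| 3  | nova-compute   | compute2   | nova     | enabled | up    |
+----+----------------+------------+----------+---------+-------+
"""

print(compute_hosts_up(parse_table(sample_output)))
```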

Configure the networking service (compute2)

Install the networking components

yum install openstack-neutron openstack-neutron-linuxbridge ebtables ipset

Edit /etc/neutron/neutron.conf

Configure RabbitMQ

[DEFAULT]
...
rpc_backend = rabbit

[oslo_messaging_rabbit]
...
rabbit_host = controller
rabbit_userid = openstack
rabbit_password = RABBIT_PASS

Configure Keystone authentication

[DEFAULT]
...
auth_strategy = keystone

[keystone_authtoken]
...
auth_uri = http://controller:5000
auth_url = http://controller:35357
auth_plugin = password
project_domain_id = default
user_domain_id = default
project_name = service
username = neutron
password = NEUTRON_PASS

Configure the lock path

[oslo_concurrency]
...
lock_path = /var/lib/neutron/tmp

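Configure Nova to use Neutron. Note that the [neutron] section is read by the compute service, so it goes in /etc/nova/nova.conf rather than /etc/neutron/neutron.conf:

[neutron]
...
url = http://controller:9696
auth_url = http://controller:35357
auth_plugin = password
project_domain_id = default
user_domain_id = default
region_name = RegionOne
project_name = service
username = neutron
password = NEUTRON_PASS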

Edit /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[linux_bridge]
physical_interface_mappings = public:PUBLIC_INTERFACE_NAME
...

[vxlan]
enable_vxlan = False
...

[agent]
...
prevent_arp_spoofing = True
...

[securitygroup]
...
enable_security_group = True
firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver
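A common slip in this file is a `physical_interface_mappings` value that names an interface the host does not have. The sketch below parses the `network:interface` format and reports interfaces that are not in a given list. The function names `parse_mappings` and `unknown_interfaces` are hypothetical, and `eth0`/`eth1` are example names, not values from this deployment.

```python
def parse_mappings(value):
    """Parse 'physnet:iface[,physnet:iface...]' into a dict."""
    mappings = {}
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        physnet, sep, iface = item.partition(":")
        physnet, iface = physnet.strip(), iface.strip()
        if not sep or not physnet or not iface:
            raise ValueError(f"malformed mapping: {item!r}")
        mappings[physnet] = iface
    return mappings


def unknown_interfaces(mappings, available):
    """Return mapped interface names that are not in `available`."""
    return sorted(set(mappings.values()) - set(available))


# Example values; on a real Linux host `available` could come from
# os.listdir("/sys/class/net").
available = ["lo", "eth0", "eth1"]
print(unknown_interfaces(parse_mappings("public:eth1"), available))
print(unknown_interfaces(parse_mappings("public:eth9"), available))
```

An empty result means every mapped interface was found in the list.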

Restart the Nova compute service

systemctl restart openstack-nova-compute.service

Enable and start the Linux bridge agent

systemctl enable neutron-linuxbridge-agent.service
systemctl start neutron-linuxbridge-agent.service

At this point the second compute node is basically configured. Open the dashboard and take a look: compute2 is there, and its state is up!

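Summary

The second node is configured, the load on the servers has dropped, and the setup now meets business needs. That feels like an accomplishment, but it is not perfect. First, my migration tests have still not succeeded. Second, I cannot explicitly choose which compute node a new instance lands on; OpenStack places it automatically based on the resources of the two nodes. More exploring to do!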
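To give a feel for what "automatic placement based on resources" can mean, here is a deliberately simplified sketch of a filter-then-weigh decision based on RAM only. It is not Nova's scheduler code. The `pick_host` function is hypothetical, the 1.5 RAM overcommit ratio is an assumption, and the usage figures assume all 40 original instances still sit on compute1.

```python
def pick_host(hosts, requested_ram_gb, ram_allocation_ratio=1.5):
    """Pick the host with the most free (overcommitted) RAM, or None.

    `hosts` maps a host name to (total_ram_gb, used_ram_gb).
    """
    candidates = []
    for name, (total_ram_gb, used_ram_gb) in hosts.items():
        free = total_ram_gb * ram_allocation_ratio - used_ram_gb
        if free >= requested_ram_gb:  # filter step: drop hosts that cannot fit
            candidates.append((free, name))
    if not candidates:
        return None
    return max(candidates)[1]  # weigh step: the most free RAM wins


# compute1: 64 GB with 40 instances x 2 GB; compute2: 32 GB, empty (assumed).
hosts = {"compute1": (64, 80), "compute2": (32, 0)}
print(pick_host(hosts, requested_ram_gb=2))
```

Under these assumptions a new 2 GB instance would land on compute2, which matches the intuition that new instances drift toward the emptier node.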