Understanding Spark Streaming Thoroughly Through Examples, Part 1 of 3: Demystifying Spark Streaming with an Unconventional Experiment

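Of all Spark programs, streaming applications are the most error-prone, and stream processing is currently one of Spark's technical bottlenecks. Anyone who wants to build an excellent Spark distribution must therefore optimize stream processing.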

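Judging by how Spark has evolved, Spark GraphX and machine learning are already very mature. Improving them matters, but it is not the top priority. The most important area is stream processing, and the heart of stream processing is its integrated use with machine learning and graph computation, truly achieving "one stack to rule them all".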

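1. Stream processing is the most error-prone area.

2. Stream processing combined with graph computation and machine learning has enormous potential.

3. It lets us build complex real-time data processing applications.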

Stream processing is in fact just an application built on top of Spark Core.

I. Code example:

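```scala
import org.apache.spark.SparkConf
import org.apache.spark.storage.StorageLevel
import org.apache.spark.streaming.{Seconds, StreamingContext}

/**
 * Created by hadoop on 2016/4/18.
 * Background: in an ad-click billing system, we filter out clicks from blacklisted
 * users online, to protect the advertisers' interests.
 * Only valid ad clicks are billed.
 * Sina Weibo: http://www.weibo.com/ilovepains
 */
object OnlineBlanckListFilter {

  // Define main() instead of extending scala.App: the Spark documentation warns that
  // subclasses of scala.App may not work correctly.
  def main(args: Array[String]): Unit = {
    //val basePath = "hdfs://master:9000/streaming"
    val conf = new SparkConf().setAppName("SparkStreamingOnHDFS")
    // With no arguments, submit to the standalone cluster master
    if (args.length == 0) conf.setMaster("spark://Master:7077")

    // One batch every 30 seconds
    val ssc = new StreamingContext(conf, Seconds(30))

    // (userName, isBlacklisted) pairs: only entries flagged true are actually blocked
    val blackList = Array(("hadoop", true), ("mahout", true), ("spark", false))
    val blackListRDD = ssc.sparkContext.parallelize(blackList)

    // Each input line is assumed to look like "<time> <userName>"; key each click by user name
    val adsClickStream = ssc.socketTextStream("192.168.74.132", 9000, StorageLevel.MEMORY_AND_DISK_SER_2)
    val rdd = adsClickStream.map(ads => (ads.split(" ")(1), ads))

    // transform exposes each batch's RDD, so it can be joined with the static blacklist RDD
    val validClicked = rdd.transform(userClickRDD => {
      val joinedBlackRDD = userClickRDD.leftOuterJoin(blackListRDD)
      // Keep a click unless its user appears in the blacklist with flag = true
      joinedBlackRDD.filter { case (_, (_, isBlack)) => !isBlack.getOrElse(false) }
    })

    // Print the original click lines that survived the filter
    validClicked.map { case (_, (click, _)) => click }.print()

    ssc.start()
    ssc.awaitTermination()
  }
}
```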

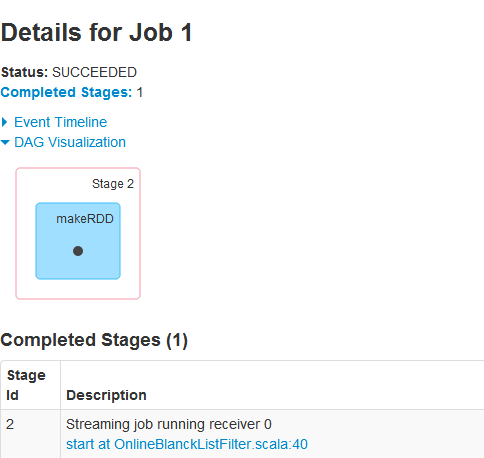
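To see what the `leftOuterJoin` + `filter` step does to a single batch, here is a minimal sketch on plain Scala collections, with no Spark required. It is a model, not Spark code: `leftOuterJoinLocal` and `filterBatch` are hypothetical helpers that mimic the semantics of `PairRDDFunctions.leftOuterJoin` on in-memory sequences, and the sample lines assume the `"<time> <userName>"` format implied by `ads.split(" ")(1)`.

```scala
object BlackListFilterModel {
  // Hypothetical local stand-in for leftOuterJoin: every left record is kept;
  // the right side is Some(w) for each matching key, or None when nothing matches.
  def leftOuterJoinLocal[K, V, W](left: Seq[(K, V)], right: Seq[(K, W)]): Seq[(K, (V, Option[W]))] = {
    val rightByKey: Map[K, Seq[W]] =
      right.groupBy(_._1).map { case (k, kvs) => (k, kvs.map(_._2)) }
    left.flatMap { case (k, v) =>
      val matches: Seq[Option[W]] = rightByKey.get(k) match {
        case Some(ws) => ws.map(w => Some(w))
        case None     => Seq(None)
      }
      matches.map(w => (k, (v, w)))
    }
  }

  // One batch of the blacklist filter: key by user, join, drop users flagged true
  def filterBatch(lines: Seq[String], blackList: Seq[(String, Boolean)]): Seq[String] = {
    val keyed = lines.map(line => (line.split(" ")(1), line))
    leftOuterJoinLocal(keyed, blackList)
      .filter { case (_, (_, isBlack)) => !isBlack.getOrElse(false) }
      .map { case (_, (line, _)) => line }
  }

  def main(args: Array[String]): Unit = {
    val blackList = Seq(("hadoop", true), ("mahout", true), ("spark", false))
    val batch = Seq("1001 hadoop", "1002 spark", "1003 flink", "1004 mahout")
    filterBatch(batch, blackList).foreach(println)
  }
}
```

Here "spark" is in the list with flag `false` and "flink" is not in the list at all; both pass the `getOrElse(false)` test, so only the "hadoop" and "mahout" clicks are dropped.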

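II. Observing job status in the Spark Streaming UI:

Question: there are 5 jobs in total, and jobs 2, 3 and 4 are triggered by our code. So where do jobs 0 and 1 come from?

Let's look at the UI for job 0:

This job exists as soon as the application starts. Question: what is it for?

Let's start from the source code: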

```scala
// Class: ReceiverTracker
/**
 * Get the receivers from the ReceiverInputDStreams, distributes them to the
 * worker nodes as a parallel collection, and runs them.
 */
private def launchReceivers(): Unit = {
  val receivers = receiverInputStreams.map(nis => {
    val rcvr = nis.getReceiver()
    rcvr.setReceiverId(nis.id)
    rcvr
  })

  runDummySparkJob()

  logInfo("Starting " + receivers.length + " receivers")
  endpoint.send(StartAllReceivers(receivers))
}
```

Let's focus on the `runDummySparkJob` method:

```scala
/**
 * Run the dummy Spark job to ensure that all slaves have registered. This avoids all the
 * receivers to be scheduled on the same node.
 *
 * TODO Should poll the executor number and wait for executors according to
 * "spark.scheduler.minRegisteredResourcesRatio" and
 * "spark.scheduler.maxRegisteredResourcesWaitingTime" rather than running a dummy job.
 */
private def runDummySparkJob(): Unit = {
  if (!ssc.sparkContext.isLocal) {
    ssc.sparkContext.makeRDD(1 to 50, 50).map(x => (x, 1)).reduceByKey(_ + _, 20).collect()
  }
  assert(getExecutors.nonEmpty)
}
```

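The comment states the purpose of this job clearly: running a dummy job first ensures that all executors have registered before the receivers are scheduled, so that the cluster's resources are used as fully as possible and the receivers do not all end up on the same node. (In local mode the job is skipped, per the `isLocal` check.)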
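The dummy job's result carries no information; what matters is the side effect described in the comment. To make that concrete, the sketch below reproduces on plain Scala collections what `makeRDD(1 to 50, 50).map(x => (x, 1)).reduceByKey(_ + _, 20)` computes. It assumes, as Spark's `HashPartitioner` does for `reduceByKey(func, numPartitions)`, that a key goes to partition `key.hashCode` modulo `numPartitions`, made non-negative. `DummyJobModel` and its methods are hypothetical names.

```scala
object DummyJobModel {
  // Mirrors HashPartitioner: non-negative (hashCode mod numPartitions)
  def reducePartitionOf(key: Int, numPartitions: Int): Int = {
    val raw = key.hashCode % numPartitions
    if (raw < 0) raw + numPartitions else raw
  }

  // Local equivalent of makeRDD(1 to 50, 50).map(x => (x, 1)).reduceByKey(_ + _, 20).collect()
  def dummyResult(): Map[Int, Int] =
    (1 to 50).map(x => (x, 1)).groupBy(_._1).map { case (k, pairs) => (k, pairs.map(_._2).sum) }

  // How many of the 50 keys land in each reduce partition
  def keysPerPartition(numPartitions: Int): Map[Int, Int] =
    (1 to 50).groupBy(k => reducePartitionOf(k, numPartitions)).map { case (p, ks) => (p, ks.size) }

  def main(args: Array[String]): Unit = {
    println(s"distinct keys: ${dummyResult().size}")
    println(keysPerPartition(20).toSeq.sorted.mkString(", "))
  }
}
```

Every key ends up with the value 1, and each of the 20 reduce partitions receives two or three keys, so the job is pure scheduling work: 50 map tasks followed by a 20-task shuffle stage.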

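Now let's turn to job 1:

Let's look at the details of this job:

In this experiment, the data source is `nc -lk 9000`, running on the worker2 node.

We suspect this job is where the data is received. Is that right?

Let's look for the explanation in the source code: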

```scala
/**
 * Start a receiver along with its scheduled executors
 */
private def startReceiver(
    receiver: Receiver[_],
    scheduledLocations: Seq[TaskLocation]): Unit = {
  def shouldStartReceiver: Boolean = {
    // It's okay to start when trackerState is Initialized or Started
    !(isTrackerStopping || isTrackerStopped)
  }

  val receiverId = receiver.streamId
  if (!shouldStartReceiver) {
    onReceiverJobFinish(receiverId)
    return
  }

  val checkpointDirOption = Option(ssc.checkpointDir)
  val serializableHadoopConf =
    new SerializableConfiguration(ssc.sparkContext.hadoopConfiguration)

  // Function to start the receiver on the worker node
  val startReceiverFunc: Iterator[Receiver[_]] => Unit =
    (iterator: Iterator[Receiver[_]]) => {
      if (!iterator.hasNext) {
        throw new SparkException(
          "Could not start receiver as object not found.")
      }
      if (TaskContext.get().attemptNumber() == 0) {
        val receiver = iterator.next()
        assert(iterator.hasNext == false)
        val supervisor = new ReceiverSupervisorImpl(
          receiver, SparkEnv.get, serializableHadoopConf.value, checkpointDirOption)
        supervisor.start()
        supervisor.awaitTermination()
      } else {
        // It's restarted by TaskScheduler, but we want to reschedule it again. So exit it.
      }
    }

  // Create the RDD using the scheduledLocations to run the receiver in a Spark job
  val receiverRDD: RDD[Receiver[_]] =
    if (scheduledLocations.isEmpty) {
      ssc.sc.makeRDD(Seq(receiver), 1)
    } else {
      val preferredLocations = scheduledLocations.map(_.toString).distinct
      ssc.sc.makeRDD(Seq(receiver -> preferredLocations))
    }
  receiverRDD.setName(s"Receiver $receiverId")
  ssc.sparkContext.setJobDescription(s"Streaming job running receiver $receiverId")
  ssc.sparkContext.setCallSite(Option(ssc.getStartSite()).getOrElse(Utils.getCallSite()))

  val future = ssc.sparkContext.submitJob[Receiver[_], Unit, Unit](
    receiverRDD, startReceiverFunc, Seq(0), (_, _) => Unit, ())
  // We will keep restarting the receiver job until ReceiverTracker is stopped
  future.onComplete {
    case Success(_) =>
      if (!shouldStartReceiver) {
        onReceiverJobFinish(receiverId)
      } else {
        logInfo(s"Restarting Receiver $receiverId")
        self.send(RestartReceiver(receiver))
      }
    case Failure(e) =>
      if (!shouldStartReceiver) {
        onReceiverJobFinish(receiverId)
      } else {
        logError("Receiver has been stopped. Try to restart it.", e)
        logInfo(s"Restarting Receiver $receiverId")
        self.send(RestartReceiver(receiver))
      }
  }(submitJobThreadPool)
  logInfo(s"Receiver ${receiver.streamId} started")
}
```

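The source contains this comment: "Create the RDD using the scheduledLocations to run the receiver in a Spark job".

This shows that the data receiver runs as a task on a Worker node. Spark Streaming can therefore make the most of the cluster's resources, since any node can receive data. Once received, the data is stored in the BlockManager (to be analyzed in the source in a later post).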
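The `future.onComplete` handler in `startReceiver` resubmits the receiver job whether it finished successfully or failed, and stops only once the tracker is stopping or stopped. The following plain-Scala model of that restart policy is a sketch: `Outcome`, `ReceiverJobModel.drive` and the log strings are hypothetical, and the real code resubmits asynchronously by sending `RestartReceiver` messages rather than looping.

```scala
object ReceiverJobModel {
  sealed trait Outcome
  case object Succeeded extends Outcome
  final case class Failed(reason: String) extends Outcome

  // Replays how successive receiver jobs end. trackerStopped(n) says whether the tracker
  // is stopping or stopped when job n (0-based) completes, mirroring shouldStartReceiver.
  def drive(outcomes: List[Outcome], trackerStopped: Int => Boolean): List[String] = {
    @annotation.tailrec
    def loop(remaining: List[Outcome], n: Int, log: List[String]): List[String] =
      remaining match {
        case Nil => log.reverse
        case outcome :: rest =>
          // Success and failure are treated alike: both lead to a restart unless stopping
          val note = outcome match {
            case Succeeded      => s"job $n finished"
            case Failed(reason) => s"job $n failed: $reason"
          }
          if (trackerStopped(n)) (s"receiver done after job $n" :: note :: log).reverse
          else loop(rest, n + 1, s"restart receiver as job ${n + 1}" :: note :: log)
      }
    loop(outcomes, 0, Nil)
  }
}
```

The model leaves out the `attemptNumber() == 0` check; per the source comment, that check makes a TaskScheduler retry exit immediately so that rescheduling stays in `ReceiverTracker`'s hands.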

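Question: the data is being collected, but where does the real computation happen? Let's check the web UI first:

Here we see the logic of our own code. Jobs are still submitted to the cluster by the `JobScheduler` class, through a thread pool.

Here is the job-submission code (the `run()` method of `JobHandler` in `JobScheduler`):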

```scala
def run() {
  try {
    val formattedTime = UIUtils.formatBatchTime(
      job.time.milliseconds, ssc.graph.batchDuration.milliseconds, showYYYYMMSS = false)
    val batchUrl = s"/streaming/batch/?id=${job.time.milliseconds}"
    val batchLinkText = s"[output operation ${job.outputOpId}, batch time ${formattedTime}]"

    ssc.sc.setJobDescription(
      s"""Streaming job from <a href="$batchUrl">$batchLinkText</a>""")
    ssc.sc.setLocalProperty(BATCH_TIME_PROPERTY_KEY, job.time.milliseconds.toString)
    ssc.sc.setLocalProperty(OUTPUT_OP_ID_PROPERTY_KEY, job.outputOpId.toString)

    // We need to assign `eventLoop` to a temp variable. Otherwise, because
    // `JobScheduler.stop(false)` may set `eventLoop` to null when this method is running, then
    // it's possible that when `post` is called, `eventLoop` happens to null.
    var _eventLoop = eventLoop
    if (_eventLoop != null) {
      _eventLoop.post(JobStarted(job, clock.getTimeMillis()))
      // Disable checks for existing output directories in jobs launched by the streaming
      // scheduler, since we may need to write output to an existing directory during checkpoint
      // recovery; see SPARK-4835 for more details.
      PairRDDFunctions.disableOutputSpecValidation.withValue(true) {
        job.run()
      }
      _eventLoop = eventLoop
      if (_eventLoop != null) {
        _eventLoop.post(JobCompleted(job, clock.getTimeMillis()))
      }
    } else {
      // JobScheduler has been stopped.
    }
  } finally {
    ssc.sc.setLocalProperty(JobScheduler.BATCH_TIME_PROPERTY_KEY, null)
    ssc.sc.setLocalProperty(JobScheduler.OUTPUT_OP_ID_PROPERTY_KEY, null)
  }
}
```
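The pattern in `run()` is: the scheduler hands each job to a thread pool, and the handler brackets `job.run()` with `JobStarted` and `JobCompleted` events. A minimal sketch of that pattern with `java.util.concurrent`, assuming a pool of one thread (Spark sizes its pool from `spark.streaming.concurrentJobs`, default 1) and recording events in a list instead of an event loop. `SimpleJob` and `runBatch` are hypothetical names; like Spark's `Job.run`, the body is wrapped in a `Try` so a failing job still gets a completion event.

```scala
import java.util.concurrent.{Executors, TimeUnit}
import scala.collection.mutable.ListBuffer
import scala.util.Try

object JobHandlerModel {
  // Hypothetical stand-in for streaming's Job: batch time, output-op id and body
  final case class SimpleJob(time: Long, outputOpId: Int, func: () => Unit)

  def runBatch(jobs: Seq[SimpleJob], numConcurrentJobs: Int = 1): List[String] = {
    val events = ListBuffer.empty[String]
    def post(event: String): Unit = events.synchronized { events += event }

    // One worker thread by default, so jobs of a batch run one after another
    val pool = Executors.newFixedThreadPool(numConcurrentJobs)
    try {
      jobs.foreach { job =>
        pool.execute(new Runnable {
          // Like JobHandler.run: post JobStarted, run the job, post JobCompleted
          def run(): Unit = {
            post(s"JobStarted(${job.time}, op ${job.outputOpId})")
            // Capture the outcome in a Try instead of letting an exception escape
            val status = if (Try(job.func()).isSuccess) "ok" else "failed"
            post(s"JobCompleted(${job.time}, op ${job.outputOpId}, $status)")
          }
        })
      }
    } finally {
      pool.shutdown()
      pool.awaitTermination(10, TimeUnit.SECONDS)
    }
    events.synchronized(events.toList)
  }
}
```

With a single thread the event order is deterministic; raising `numConcurrentJobs` would let jobs of different output operations overlap, and the events would interleave.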

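Tracing the source further, we find that `job.run` simply calls a function passed in from outside, created by `DStream.generateJob`: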

```scala
/**
 * Generate a SparkStreaming job for the given time. This is an internal method that
 * should not be called directly. This default implementation creates a job
 * that materializes the corresponding RDD. Subclasses of DStream may override this
 * to generate their own jobs.
 */
private[streaming] def generateJob(time: Time): Option[Job] = {
  getOrCompute(time) match {
    case Some(rdd) => {
      val jobFunc = () => {
        val emptyFunc = { (iterator: Iterator[T]) => {} }
        context.sparkContext.runJob(rdd, emptyFunc)
      }
      Some(new Job(time, jobFunc))
    }
    case None => None
  }
}
```

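In other words, jobs are submitted to the cluster through DStream's `generateJob` method: `DStreamGraph.generateJobs` calls `generateJob` on each output DStream. `DStreamGraph` records the logical transformation relationships between DStreams; when jobs are generated, those transformations are traced backward to produce RDD instances, which together form the RDD DAG. Generation is triggered once per batch interval, which we define ourselves. The backtracking is done by `getOrCompute`, which walks back through the DStream transformations to create the RDD instances and their DAG.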
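The chain just described (`generateJobs` on the graph, `generateJob` on each output stream, `getOrCompute` backtracking to the parents) can be modeled in a few dozen lines. This is a toy, not Spark's classes: `ToyDStream`, `Source`, `Mapped`, `Output` and `generateJobs` are hypothetical, an "RDD" is an eagerly computed `Seq[Int]` (real RDDs are lazy), the batch duration is assumed to be 30 s as in the example, and `getOrCompute` memoizes per batch time in the spirit of `DStream.generatedRDDs`.

```scala
import scala.collection.mutable

object DStreamJobModel {
  val batchMs = 30000L // assumed batch duration, matching Seconds(30) in the example

  // Toy DStream: an "RDD" is just a Seq[Int], memoized per batch time
  abstract class ToyDStream {
    var computeCalls = 0 // how many times compute() really ran
    private val generatedRDDs = mutable.Map.empty[Long, Seq[Int]]
    protected def compute(time: Long): Seq[Int]

    // Like getOrCompute: None for non-batch times, otherwise cached or freshly computed
    final def getOrCompute(time: Long): Option[Seq[Int]] =
      if (time % batchMs != 0) None
      else Some(generatedRDDs.getOrElseUpdate(time, { computeCalls += 1; compute(time) }))
  }

  final class Source(data: Long => Seq[Int]) extends ToyDStream {
    protected def compute(time: Long): Seq[Int] = data(time)
  }

  // A transformation backtracks to its parent for the same batch time
  final class Mapped(parent: ToyDStream, f: Int => Int) extends ToyDStream {
    protected def compute(time: Long): Seq[Int] =
      parent.getOrCompute(time).getOrElse(Seq.empty).map(f)
  }

  // Output operation: build the "RDD" now, return a job that runs the action later
  final class Output(parent: ToyDStream, sink: mutable.Buffer[String]) {
    def generateJob(time: Long): Option[() => Unit] =
      parent.getOrCompute(time).map { rdd =>
        val job: () => Unit = () => sink.append(s"$time: ${rdd.mkString(",")}")
        job
      }
  }

  // Like DStreamGraph.generateJobs: one job per output stream for this batch time
  def generateJobs(outputs: Seq[Output], time: Long): Seq[() => Unit] =
    outputs.flatMap(_.generateJob(time))

  // Two output operations sharing one mapped stream, for the batch at t = 30000
  def demo(): (List[String], Int, Int, Int) = {
    val log = mutable.ListBuffer.empty[String]
    val src = new Source(t => Seq(1, 2, 3).map(_ + (t / batchMs).toInt))
    val doubled = new Mapped(src, _ * 2)
    val outputs = Seq(new Output(doubled, log), new Output(doubled, log))
    val jobs = generateJobs(outputs, 30000L)
    jobs.foreach(job => job())
    (log.toList, jobs.size, src.computeCalls, generateJobs(outputs, 45000L).size)
  }
}
```

In `demo()`, two output operations yield two jobs for the batch, while the shared source is computed only once thanks to the per-time memo table; a time that is not a batch boundary (45000) yields no jobs.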

Below is an overall flow diagram of Spark Streaming:

What conclusions can we draw from all this?

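1. Data collection happens on the Worker nodes of the Spark cluster: the data receiver (Receiver) receives data by running as a job!

2. The actual computation also happens on the Workers, to which the Spark cluster distributes tasks. In this experiment, the data was received on one node while the computation ran on another.

3. The jobs in Spark Streaming cooperate with one another; why it is designed this way will be analyzed in later posts.

4. Data locality: the task locality shown above is PROCESS_LOCAL, which means the data is in memory in the same executor process as the task. Since our data arrives on a single node, it still has to travel over the network (a shuffle is needed to move it to the nodes doing the computation). Each time data is received, it is split into blocks, and the data is distributed to the computing nodes.

5. Spark Streaming generates jobs per unit of time (per batch); in essence, it is batch processing with an added time dimension.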
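Conclusions 4 and 5 both come down to two time units: a receiver cuts incoming data into blocks every `spark.streaming.blockInterval` (200 ms by default), and each batch interval the blocks received so far become the partitions of that batch's RDD, so a receiver contributes roughly batch interval / block interval tasks per batch. A back-of-the-envelope sketch for this article's `Seconds(30)` batch, assuming the default block interval and a single receiver; `blocksPerBatch`, `batchOf` and `groupIntoBatches` are hypothetical helpers, and the boundary rule in `batchOf` is a simplification, not Spark's exact block-allocation logic.

```scala
object BatchTimingModel {
  // Approximate partitions (tasks) per receiver per batch
  def blocksPerBatch(batchMs: Long, blockIntervalMs: Long): Long = batchMs / blockIntervalMs

  // Simplified rule: a record received at tsMs belongs to the batch ending at the
  // next multiple of batchMs (batches are labeled by their end time)
  def batchOf(tsMs: Long, batchMs: Long): Long = (tsMs / batchMs + 1) * batchMs

  // Group timestamped records into batches, keyed by batch end time
  def groupIntoBatches[A](records: Seq[(Long, A)], batchMs: Long): Map[Long, Seq[A]] =
    records.groupBy { case (ts, _) => batchOf(ts, batchMs) }.map { case (t, rs) => (t, rs.map(_._2)) }
}
```

With the defaults, `blocksPerBatch(30000L, 200L)` is 150: each 30-second batch from the single socket receiver is split into roughly 150 partitions, which is what "the data is split into blocks" in conclusion 4 amounts to.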

III. Grasping the essence of Spark Streaming in an instant

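A DStream is an unbounded collection with no size limit.

A DStream represents both time and space: as time passes, it keeps producing RDDs.

Once the time is fixed (a single batch), we can focus on the operations in the spatial dimension.

The spatial dimension is the processing logic itself.

The operations on DStreams make up the DStreamGraph, as illustrated below:

In the figure above, each foreach (output operation) triggers a job, which backtracks along the dependencies from the end to the beginning to form a DAG, as shown below:

Once the spatial dimension is fixed, as time keeps advancing, the RDD graph is instantiated over and over again and jobs are triggered to execute it; this is the `generateJobs` method mentioned above.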
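The "time plus space" view can be captured by treating a DStream as an unbounded, lazily produced sequence of (batch time, RDD) pairs, with the spatial logic written once and applied to whatever RDD each batch time produces. A minimal sketch, assuming an RDD is modeled as a plain `Seq`; `Batch`, `discretize`, `spatialLogic` and `transform` are hypothetical names, not Spark APIs.

```scala
object DStreamAsRDDSequence {
  // One batch: the batch time and the contents of the "RDD" produced for it
  final case class Batch[A](time: Long, rdd: Seq[A])

  // An unbounded, lazily evaluated sequence of batches, one per batch interval
  def discretize[A](batchMs: Long, source: Long => Seq[A]): Iterator[Batch[A]] =
    Iterator.iterate(batchMs)(_ + batchMs).map(t => Batch(t, source(t)))

  // The "spatial" logic, written once and applied to every batch's RDD
  def spatialLogic(rdd: Seq[String]): Seq[String] = rdd.filter(_.nonEmpty).map(_.toUpperCase)

  // Lifting a per-RDD function to the whole stream, batch by batch
  def transform[A, B](stream: Iterator[Batch[A]])(f: Seq[A] => Seq[B]): Iterator[Batch[B]] =
    stream.map(b => Batch(b.time, f(b.rdd)))
}
```

The iterator never ends; only something like `take(2)` forces batches into existence, mirroring a DStream that has no fixed size and yields a new RDD every batch interval.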

Let's reread a passage from the official documentation:

Spark Streaming provides a high-level abstraction called discretized stream or DStream, which represents a continuous stream of data. DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.