HTML&CSS

Introduction

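Much of the information on the internet is not stored in a database or exposed through an API; it lives in web pages. One technique for extracting this data is web scraping.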

In Python, scraping roughly works like this: load the page with the requests library, then use the BeautifulSoup library to extract the relevant information from it.
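
As a rough sketch of that two-step flow, the snippet below separates fetching from parsing. The helper names `fetch_html` and `extract_title` and the 10-second timeout are illustrative assumptions, not part of the original tutorial.

```python
import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a page and return its raw bytes (hypothetical helper)."""
    response = requests.get(url, timeout=timeout)
    # Raise an exception for 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.content


def extract_title(html):
    """Return the text of the first <title> tag, or None if there is none."""
    parser = BeautifulSoup(html, "html.parser")
    return parser.title.text if parser.title else None


# Typical use (requires network access):
# html = fetch_html("http://dataquestio.github.io/web-scraping-pages/simple.html")
# print(extract_title(html))
```

Keeping the parsing step separate from the download makes it possible to try the parsing logic on a saved or hand-written HTML string.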

Webpage Structure

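Web pages are written in HyperText Markup Language (HTML). HTML is a markup language with its own syntax rules; the browser downloads a page and follows those rules to render the right content for the user. A full list of HTML tags can be found in any HTML reference.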
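
To make the tag structure concrete, here is a minimal sketch that prints the tags of a small page as an indented outline. It uses Python's standard-library `html.parser` rather than BeautifulSoup, and the `TagLister` class is a hypothetical name introduced only for this illustration.

```python
from html.parser import HTMLParser

SIMPLE_PAGE = """<!DOCTYPE html>
<html>
 <head>
  <title>A simple example page</title>
 </head>
 <body>
  <p>Here is some simple content for this page.</p>
 </body>
</html>"""


class TagLister(HTMLParser):
    """Collect opening tags, indented by how deeply they are nested."""

    def __init__(self):
        super().__init__()
        self.depth = 0
        self.outline = []

    def handle_starttag(self, tag, attrs):
        self.outline.append("  " * self.depth + tag)
        self.depth += 1

    def handle_endtag(self, tag):
        self.depth -= 1


lister = TagLister()
lister.feed(SIMPLE_PAGE)
print("\n".join(lister.outline))
# html
#   head
#     title
#   body
#     p
```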

- Send a GET request to http://dataquestio.github.io/web-scraping-pages/simple.html; response.content then holds the content of the page:

import requests

response = requests.get("http://dataquestio.github.io/web-scraping-pages/simple.html")

content = response.content

'''

bytes (<class 'bytes'>)

b'<!DOCTYPE html>\n<html>\n <head>\n <title>A simple example page</title>\n </head>\n <body>\n <p>Here is some simple content for this page.</p>\n </body>\n</html>'

'''

Retrieving Elements From A Page

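Once we have the full HTML, we need to parse the page. The BeautifulSoup library can extract tags from HTML. Tags are nested inside one another, so they can be organized into a tree.

- Extract the title of the page. Because the tags are nested, we work down through the layers to reach the title tag.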

from bs4 import BeautifulSoup

# Initialize the parser, and pass in the content we grabbed earlier.

parser = BeautifulSoup(content, 'html.parser')

# Looking at content, we can see that the p tag is inside the body tag.

body = parser.body

p = body.p

# Text is a property that gets the inside text of a tag.

print(p.text)

# The title tag is inside the head tag.

head = parser.head

title = head.title

title_text = title.text

Using Find All

Accessing tags as attributes, as above, is intuitive but not robust. The find_all method instead returns every occurrence of a tag.
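
One way to see the difference is with a page that lacks the tag you expect. The sketch below uses a made-up HTML string with no head tag; it is an illustration under that assumption, not one of the tutorial's pages.

```python
from bs4 import BeautifulSoup

# Hypothetical page: two paragraphs, but no <head> or <title>.
html = "<html><body><p>First.</p><p>Second.</p></body></html>"
parser = BeautifulSoup(html, "html.parser")

# Attribute access returns None for a missing tag, so chaining
# (parser.head.title) would raise AttributeError on this page.
missing_head = parser.head

# find_all always returns a list, which is simply empty when nothing matches.
titles = parser.find_all("title")
title_text = titles[0].text if titles else None

# It also returns every match, not only the first one.
paragraph_texts = [p.text for p in parser.find_all("p")]
print(missing_head, title_text, paragraph_texts)
```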

- Use find_all to get the title. We know the title is inside the first head, so we get the head first and then take the text of the first title inside it:

parser = BeautifulSoup(content, 'html.parser')

# Get a list of all occurrences of the body tag in the document.

body = parser.find_all("body")

# Get the paragraph tag

p = body[0].find_all("p")

# body may contain several p tags; take the first one.

print(p[0].text)

head = parser.find_all("head")

title = head[0].find_all("title")

title_text = title[0].text

Element Ids

In HTML, an element (tag) can have an id that is unique within the page, so an element can be looked up by its id. For example:

<title>A simple example page</title>

<div>

<p id="first">

First paragraph.

</p>

</div>

<p id="second">

<b>

Second paragraph.

</b>

</p>

- div is a division tag that splits the page into logical units. To get the content of the second paragraph on this page, look it up by its id:

# Get the page content and set up a new parser.

response = requests.get("http://dataquestio.github.io/web-scraping-pages/simple_ids.html")

content = response.content

parser = BeautifulSoup(content, 'html.parser')

# Pass in the id attribute to only get elements with a certain id.

first_paragraph = parser.find_all("p", id="first")[0]

print(first_paragraph.text)

''' First paragraph. '''

second_paragraph = parser.find_all("p", id="second")[0]

second_paragraph_text = second_paragraph.text

Element Classes

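HTML elements can also have a class attribute. Unlike an id, a class is not unique: elements that share a class share some characteristic, and a single element can have several classes. For example: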

<title>A simple example page</title>

<div>

<p class="inner-text">

First inner paragraph.

</p>

<p class="inner-text">

Second inner paragraph.

</p>

</div>

<p class="outer-text">

<b>

First outer paragraph.

</b>

</p>

<p class="outer-text">

<b>

Second outer paragraph.

</b>

</p>

- We can use the class to get the content of these tags:

# Get the website that contains classes.

response = requests.get("http://dataquestio.github.io/web-scraping-pages/simple_classes.html")

content = response.content

parser = BeautifulSoup(content, 'html.parser')

# Get the first inner paragraph.

# Find all the paragraph tags with the class inner-text.

# Then take the first element in that list.

first_inner_paragraph = parser.find_all("p", class_="inner-text")[0]

print(first_inner_paragraph.text)

''' First inner paragraph. '''

second_inner_paragraph = parser.find_all("p", class_="inner-text")[1]

second_inner_paragraph_text = second_inner_paragraph.text

first_outer_paragraph = parser.find_all("p", class_="outer-text")[0]

first_outer_paragraph_text = first_outer_paragraph.text

CSS Selectors

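Cascading Style Sheets (CSS) is a way of adding style to HTML pages. The pages shown so far are plain and unstyled: the paragraph text is black and all the same size. Most web pages, however, use a variety of colors and fonts, and that styling comes from CSS. CSS uses selectors to pick elements by tag, class, or id and so decide where to apply a style such as a color or a font size.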

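- This makes the text inside all p tags red:

p{ color: red }

- This makes the text of p tags with the class inner-text red:

p.inner-text{ color: red }

- This makes the text of the element with the id first red; # refers to an id:

#first{ color: red }

- This makes the text of any element with the class inner-text red:

.inner-text{ color: red }

CSS is not only for styling pages; when scraping, we often use CSS selectors to pick out elements as well.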
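
The four selectors listed above can be tried directly with BeautifulSoup's `.select` method. This is a minimal sketch against a made-up HTML snippet (the `span` element is added here only to show that `.inner-text` is not limited to p tags).

```python
from bs4 import BeautifulSoup

# Hypothetical snippet written for this illustration.
html = """
<div>
<p class="inner-text" id="first">First paragraph.</p>
<p class="inner-text">Second paragraph.</p>
</div>
<span class="inner-text">A span sharing the class.</span>
<p class="outer-text">Outer paragraph.</p>
"""
parser = BeautifulSoup(html, "html.parser")

all_paragraphs = parser.select("p")               # every p tag
inner_paragraphs = parser.select("p.inner-text")  # p tags with class inner-text
first_element = parser.select("#first")           # the element with id first
inner_anything = parser.select(".inner-text")     # any tag with class inner-text

print(len(all_paragraphs), len(inner_paragraphs),
      len(first_element), len(inner_anything))
# 3 2 1 3
```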

Using CSS Selectors

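- In BeautifulSoup, the .select method selects elements using CSS selectors. In the example below, note that an element can have both an id and a class, and that an element can have several classes separated by spaces, while an id is unique: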

<title>A simple example page</title>

<div>

<p class="inner-text first-item" id="first">

First paragraph.

</p>

<p class="inner-text">

Second paragraph.

</p>

</div>

<p class="outer-text first-item" id="second">

<b>

First outer paragraph.

</b>

</p>

<p class="outer-text">

<b>

Second outer paragraph.

</b>

</p>

- Select all elements on the page above that have the class outer-text, then select the element with the id second:

# Get the website that contains classes and ids

response = requests.get("http://dataquestio.github.io/web-scraping-pages/ids_and_classes.html")

content = response.content

parser = BeautifulSoup(content, 'html.parser')

print(parser.select(".outer-text"))

print(parser.select("#second"))

'''

[<p class="outer-text first-item" id="second">

<b>

First outer paragraph.

</b>

</p>, <p class="outer-text">

<b>

Second outer paragraph.

</b>

</p>]

[<p class="outer-text first-item" id="second">

<b>

First outer paragraph.

</b>

</p>]

'''

Nesting CSS Selectors

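CSS selectors can also find nested tags, as we did earlier with find_all, which lets us handle more complex scraping tasks:

- This CSS selector selects all paragraphs inside a div:

div p

- This CSS selector selects all items with the class first-item inside a div tag:

div .first-item

- This CSS selector selects all items with the id first that are inside an item with the class first-item:

.first-item #first

Using Nested CSS Selectors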
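
As a quick check of nested selectors such as `div p`, `div .first-item`, and `.first-item #first`, the sketch below runs them against an inline copy of the ids-and-classes markup shown earlier. The comparison with `.first-item#first` (no space) is an addition for illustration: a space means "descendant of", so it matters whether the id and the class sit on the same element.

```python
from bs4 import BeautifulSoup

html = """
<div>
<p class="inner-text first-item" id="first">First paragraph.</p>
<p class="inner-text">Second paragraph.</p>
</div>
<p class="outer-text first-item" id="second"><b>First outer paragraph.</b></p>
<p class="outer-text"><b>Second outer paragraph.</b></p>
"""
parser = BeautifulSoup(html, "html.parser")

# All paragraphs nested inside a div.
div_paragraphs = [p.text for p in parser.select("div p")]

# Elements with class first-item nested inside a div.
div_first_items = [el["id"] for el in parser.select("div .first-item")]

# With a space, #first must be INSIDE a .first-item element. On this page
# the id and the class are on the same element, so nothing matches.
nested = parser.select(".first-item #first")

# Without a space, both conditions apply to the same element.
same_element = parser.select(".first-item#first")

print(div_paragraphs, div_first_items, len(nested), len(same_element))
```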

- First, look at this HTML:

<meta charset="UTF-8">

<title>2014 Superbowl Team Stats</title>

<table class="stats_table nav_table" id="team_stats">

<tbody>

<tr id="teams">

<th></th>

<th>SEA</th>

<th>NWE</th>

</tr>

<tr id="first-downs">

<td>First downs</td>

<td>20</td>

<td>25</td>

</tr>

<tr id="total-yards">

<td>Total yards</td>

<td>396</td>

<td>377</td>

</tr>

<tr id="turnovers">

<td>Turnovers</td>

<td>1</td>

<td>2</td>

</tr>

<tr id="penalties">

<td>Penalties-yards</td>

<td>7-70</td>

<td>5-36</td>

</tr>

<tr id="total-plays">

<td>Total Plays</td>

<td>53</td>

<td>72</td>

</tr>

<tr id="time-of-possession">

<td>Time of Possession</td>

<td>26:14</td>

<td>33:46</td>

</tr>

</tbody>

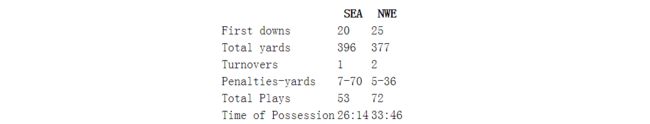

</table>- 网页呈现的内容如下:

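This is an excerpt of the box score from the 2014 Super Bowl. It holds statistics for each team: how many yards each team gained, how many turnovers each had, and so on. The page renders as a table, as shown above: after the label column, the first column is the Seattle Seahawks and the second is the New England Patriots, and each row is a different statistic.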

- Now get the total number of plays for the New England Patriots and the total yards for the Seahawks:

# Get the super bowl box score data.

response = requests.get("http://dataquestio.github.io/web-scraping-pages/2014_super_bowl.html")

content = response.content

parser = BeautifulSoup(content, 'html.parser')

# #total-plays holds each team's total plays; the third td (index 2) is the New England Patriots.

patriots_total_plays_count = parser.select("#total-plays")[0].select("td")[2].text

# #total-yards holds each team's total yards; the second td (index 1) is the Seahawks.

seahawks_total_yards_count = parser.select("#total-yards")[0].select("td")[1].text

Beyond The Basics

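We can find the information we want by reading a page's HTML ourselves, but for a question such as how many yards each National Football League team gained in each season, checking by hand would be tedious. Instead, we can write a web scraping script that automates the task.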
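
As a small step toward that kind of automation, the sketch below reads every row of the stats table into a nested dictionary instead of picking out one cell at a time. It runs on an inline, shortened copy of the Super Bowl table so that no network access is needed; the function name `table_to_stats` is a hypothetical helper introduced for illustration.

```python
from bs4 import BeautifulSoup

# Shortened inline copy of the table shown earlier.
html = """
<table class="stats_table nav_table" id="team_stats">
<tbody>
<tr id="teams"><th></th><th>SEA</th><th>NWE</th></tr>
<tr id="first-downs"><td>First downs</td><td>20</td><td>25</td></tr>
<tr id="total-yards"><td>Total yards</td><td>396</td><td>377</td></tr>
<tr id="total-plays"><td>Total Plays</td><td>53</td><td>72</td></tr>
</tbody>
</table>
"""


def table_to_stats(html):
    """Return {team: {statistic: value}} for the #team_stats table."""
    parser = BeautifulSoup(html, "html.parser")
    rows = parser.select("#team_stats tr")
    # The header row holds an empty cell followed by the team names.
    teams = [th.text for th in rows[0].select("th")][1:]
    stats = {team: {} for team in teams}
    for row in rows[1:]:
        cells = [td.text for td in row.select("td")]
        label, values = cells[0], cells[1:]
        for team, value in zip(teams, values):
            stats[team][label] = value
    return stats


stats = table_to_stats(html)
print(stats["NWE"]["Total Plays"])  # 72
print(stats["SEA"]["Total yards"])  # 396
```

The values stay as strings because some statistics, such as penalties or time of possession, are not plain numbers. The same function could be applied to the content of each page fetched in a loop over seasons.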