CombineTextInputFormat Usage

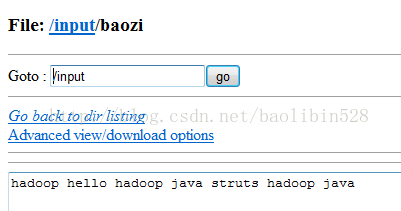

Input data:

Code:

package inputformat;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.CombineTextInputFormat;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import org.apache.hadoop.mapreduce.lib.partition.HashPartitioner;

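/*

* The data source consists of multiple small files.

* The small files are combined for processing: if their combined size is below the split size limit (128 MB is the figure given here; the effective limit depends on the configured maximum split size), they are handled as a single InputSplit.

* This differs from SequenceFileInputFormat, whose data source is data that has already been merged into a SequenceFile.

*/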

public class CombineTextInputFormatTest {

public static class MyMapper extends

Mapper<LongWritable, Text, Text, LongWritable> {

final Text k2 = new Text();

final LongWritable v2 = new LongWritable();

protected void map(LongWritable key, Text value,

Mapper<LongWritable, Text, Text, LongWritable>.Context context)

throws InterruptedException, IOException {

final String line = value.toString();

final String[] splited = line.split("\\s");

for (String word : splited) {

k2.set(word);

v2.set(1);

context.write(k2, v2);

}

}

}

public static class MyReducer extends

Reducer<Text, LongWritable, Text, LongWritable> {

LongWritable v3 = new LongWritable();

protected void reduce(Text k2, Iterable<LongWritable> v2s,

Reducer<Text, LongWritable, Text, LongWritable>.Context context)

throws IOException, InterruptedException {

long count = 0L;

for (LongWritable v2 : v2s) {

count += v2.get();

}

v3.set(count);

context.write(k2, v3);

}

}

public static void main(String[] args) throws Exception {

final Configuration conf = new Configuration();

final Job job = Job.getInstance(conf, CombineTextInputFormatTest.class.getSimpleName());

// 1.1 Set the input path and the input format

FileInputFormat.setInputPaths(job,

"hdfs://192.168.1.10:9000/input");

// Changed here: use CombineTextInputFormat instead of the default TextInputFormat

job.setInputFormatClass(CombineTextInputFormat.class);

// 1.2

job.setMapperClass(MyMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(LongWritable.class);

// 1.3 Partitioning: by default there is only one partition

job.setPartitionerClass(HashPartitioner.class);

job.setNumReduceTasks(1);

// 1.4 omitted

// 1.5 omitted

// 2.2

job.setReducerClass(MyReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(LongWritable.class);

// 2.3

FileOutputFormat.setOutputPath(job, new Path(

"hdfs://192.168.1.10:9000/out2"));

job.setOutputFormatClass(TextOutputFormat.class);

// When running the program packaged as a jar, the following method must be called

job.setJarByClass(CombineTextInputFormatTest.class);

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}
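Step 1.3 in the driver sets HashPartitioner together with a single reduce task. As a minimal sketch of why that yields one partition: HashPartitioner takes the key's non-negative hash modulo the number of reduce tasks, so with one reduce task every key lands in partition 0. The class below is a hypothetical stand-in that uses String keys instead of Text so that it runs without Hadoop on the classpath.

```java
// Hypothetical stand-in for Hadoop's HashPartitioner, using String keys
// instead of Text so that it runs without Hadoop on the classpath.
class HashPartitionSketch {

    /** Same formula as HashPartitioner: non-negative hash modulo the reducer count. */
    static int partitionFor(String key, int numReduceTasks) {
        return (key.hashCode() & Integer.MAX_VALUE) % numReduceTasks;
    }

    public static void main(String[] args) {
        // With one reduce task, every key maps to partition 0.
        for (String word : new String[] {"hadoop", "hello", "java"}) {
            System.out.println(word + " -> " + partitionFor(word, 1));
        }
    }
}
```

With more than one reduce task the same formula spreads keys across partitions 0 to numReduceTasks - 1.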

Console output:

[root@i-love-you hadoop]# bin/hadoop jar data/ConbineText.jar

15/04/16 15:27:02 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

15/04/16 15:27:06 WARN mapreduce.JobSubmitter: Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

15/04/16 15:27:07 INFO input.FileInputFormat: Total input paths to process : 2

15/04/16 15:27:07 INFO input.CombineFileInputFormat: DEBUG: Terminated node allocation with : CompletedNodes: 1, size left: 79

15/04/16 15:27:07 INFO mapreduce.JobSubmitter: number of splits:1

15/04/16 15:27:08 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1429167587909_0003

15/04/16 15:27:08 INFO impl.YarnClientImpl: Submitted application application_1429167587909_0003

15/04/16 15:27:08 INFO mapreduce.Job: The url to track the job: http://i-love-you:8088/proxy/application_1429167587909_0003/

15/04/16 15:27:08 INFO mapreduce.Job: Running job: job_1429167587909_0003

15/04/16 15:27:23 INFO mapreduce.Job: Job job_1429167587909_0003 running in uber mode : false

15/04/16 15:27:23 INFO mapreduce.Job: map 0% reduce 0%

15/04/16 15:27:39 INFO mapreduce.Job: map 100% reduce 0%

15/04/16 15:28:07 INFO mapreduce.Job: map 100% reduce 100%

15/04/16 15:28:17 INFO mapreduce.Job: Job job_1429167587909_0003 completed successfully

15/04/16 15:28:18 INFO mapreduce.Job: Counters: 49

File System Counters

FILE: Number of bytes read=215

FILE: Number of bytes written=212395

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=259

HDFS: Number of bytes written=38

HDFS: Number of read operations=7

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Launched reduce tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=14359

Total time spent by all reduces in occupied slots (ms)=22113

Total time spent by all map tasks (ms)=14359

Total time spent by all reduce tasks (ms)=22113

Total vcore-seconds taken by all map tasks=14359

Total vcore-seconds taken by all reduce tasks=22113

Total megabyte-seconds taken by all map tasks=14703616

Total megabyte-seconds taken by all reduce tasks=22643712

Map-Reduce Framework

Map input records=4

Map output records=13

Map output bytes=183

Map output materialized bytes=215

Input split bytes=180

Combine input records=0

Combine output records=0

Reduce input groups=5

Reduce shuffle bytes=215

Reduce input records=13

Reduce output records=5

Spilled Records=26

Shuffled Maps =1

Failed Shuffles=0

Merged Map outputs=1

GC time elapsed (ms)=209

CPU time spent (ms)=2860

Physical memory (bytes) snapshot=313401344

Virtual memory (bytes) snapshot=1687605248

Total committed heap usage (bytes)=136450048

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=38

Result:

[root@i-love-you hadoop]# bin/hdfs dfs -text /out2/part-*

hadoop 6

hello 2

java 3

me 1

struts 1
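The counts above come from the logic in MyMapper and MyReducer: split each line on whitespace, emit 1 per word, then sum per word. A plain-Java sketch of the same logic can help check it locally without a cluster. The class name and the input lines are hypothetical; the original input files are only shown as an image, so this sample does not reproduce them.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical local equivalent of MyMapper + MyReducer, without Hadoop.
class LocalWordCountSketch {

    /** Splits each line on single whitespace characters and sums a count of 1 per token. */
    static Map<String, Long> count(List<String> lines) {
        // TreeMap keeps keys sorted, similar to the sorted reducer output.
        Map<String, Long> counts = new TreeMap<>();
        for (String line : lines) {
            for (String word : line.split("\\s")) { // same regex as MyMapper
                counts.merge(word, 1L, Long::sum);  // "map" emits 1, "reduce" sums
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        // Made-up sample lines, not the article's input data.
        List<String> lines = Arrays.asList("hello hadoop", "hello java");
        System.out.println(count(lines)); // prints {hadoop=1, hello=2, java=1}
    }
}
```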

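As the log shows (Total input paths to process : 2, number of splits:1), the data from the two small files was combined and processed as a single InputSplit.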
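The idea of packing small files into one split while their combined size stays under a limit can be sketched in plain Java. This is a simplified greedy illustration only, not Hadoop's actual algorithm (CombineFileInputFormat also groups blocks by node and rack); the class name, method name, and file sizes are hypothetical, and 128 MB is taken from the figure in the code comment.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration: greedy packing of file sizes into splits.
class SplitPackingSketch {

    /** Packs file sizes (bytes) into groups whose totals do not exceed maxSplitBytes. */
    static List<List<Long>> pack(List<Long> fileSizes, long maxSplitBytes) {
        List<List<Long>> splits = new ArrayList<>();
        List<Long> current = new ArrayList<>();
        long currentBytes = 0;
        for (long size : fileSizes) {
            // Close the current split if adding this file would exceed the limit.
            if (!current.isEmpty() && currentBytes + size > maxSplitBytes) {
                splits.add(current);
                current = new ArrayList<>();
                currentBytes = 0;
            }
            current.add(size);
            currentBytes += size;
        }
        if (!current.isEmpty()) {
            splits.add(current);
        }
        return splits;
    }

    public static void main(String[] args) {
        long mb = 1024L * 1024;
        long max = 128 * mb; // assumed limit
        // Two tiny files (made-up sizes) fit into a single split.
        System.out.println(pack(Arrays.asList(40L, 39L), max).size()); // prints 1
        // Three 60 MB files need two splits under a 128 MB limit.
        System.out.println(pack(Arrays.asList(60 * mb, 60 * mb, 60 * mb), max).size()); // prints 2
    }
}
```

In a real job the limit would come from the configured maximum split size rather than a constant in the code.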