OpenCV Using Python——基于SURF特征提取和金字塔LK光流法的单目视觉三维重建

基于SURF特征提取和金字塔LK光流法的单目视觉三维重建

1. 单目视觉三维重建问题

在前面的文章中,笔者用SIFT提取特征后用radio测试剔除了匹配中异常的特征点,然后根据匹配合格的特征点计算基础矩阵和本征矩阵,对本征矩阵SVD分解来估计和构造透视矩阵,根据透视矩阵和齐次坐标变换后的特征点三角化获得特征点在三维空间中的坐标。

(1)找不到外极线

对于运动范围过大的两幅图像,有可能计算不出外极线。经过试验发现,运动范围过大的两帧图像由于SIFT特征点检测后特征点的个数大幅下降,或句话说,SIFT检测特征点没什么问题,但radio测试踢掉了好多异常特征点,特征点个数的减少造成基础矩阵计算得不准确,所以计算外极线时会出现找不到的情况。

(2)仿射结构变化敏感

SIFT检测特征点是很精确的,但为什么检测出的特征点在估计仿射结构时会出现外极点和外极线跳动大的情况呢?个人认为有以下几个方面原因:

a)SIFT检测过精确:SIFT的精确检测剔除了很多本可以匹配的特征点,特征点过少会造成外极线检测误差大,换句话说,SIFT的精确检测结果有可能造成“过拟合”问题;不过可以试试改改SIFT库函数的输入参数,可能会解决;

b)摄像头标定的参数不准确:径向畸变略大也会导致出现扭曲的图像特征点,SIFT检测时出现误检测;

c)图像噪声未补偿:高速运动中的图像需要适当的运动补偿,如果摄像机和跟踪的对象以不同的速度运动,前景和背景同时运动,必然会产生模糊的图像使SIFT特征点检测不准确。

主要出现的问题在a)。b)多次标定摄像头可以解决;c)肉眼观察得到的图像即可判断是否出现问题。

2. 解决单目视觉三维重建问题

主要问题在SIFT的特征提取。下面两种方法谈原理的博客有好多,并且笔者没从底层写过它俩,所以在此不赘述。那么改进在什么地方呢?

(1)SURF特征提取

SURF特征提取和SIFT差不多,只是用计算海塞(还是海森?反正是Hessian)矩阵代替了拉普拉斯滤波。SURF不会像基于浮点核的SIFT的特征点计算得过于精确,所以,SURF计算速度更快但降低了一点精确度。不过主要问题是在SIFT提取特征点后的radio测试,既然不用SIFT提取特征了,配套的radio测试也不要了。不客气地说,这一点笔者是在逃避特征点过分删除的问题。

(2)金字塔Lucas-Kanade光流法

Lucas-Kanade光流法的经典应用场合是稳定背景的运动前景提取。这里考虑到Lucas-Kanade光流法在运动范围过大造成大光流时计算偏差大,所以用金字塔的结构,自上而下修正运动量。同时,用光流法会匹配更多的特征点,使基础矩阵计算更加准确,重建也会有更多的空间点。

3. 代码实现和改进

(1)代码改进

a)基于上一篇文章封装源码,修正上一篇文章中代码出现的错误;

b)添加特征点匹配的图片方便检验特征点匹配结果;

c)在三维重建的结果中添加左视图,主视图和俯视图;

d)删除以数字为标记的难以辨认的形式;

e)匹配图片,外极线图片,三维重建的三视图以及三维重建的三维视图中的特征点采用同一种颜色,便于在不同的图中查找重建特征点的位置;

f )基于上一篇文章作对比试验,暴露上一篇出现的重建问题。

(2)代码实现

import cv2

import math

import numpy as np

from match import *

################################################################################

print 'Load Image'

img1 = cv2.imread('images/cat_1.bmp') #query image

img2 = cv2.imread('images/cat_2.bmp') #train image

rows, cols, channels = img1.shape

img1 = cv2.cvtColor(img1, cv2.COLOR_BGR2RGB)

img2 = cv2.cvtColor(img2, cv2.COLOR_BGR2RGB)

imgGray1 = cv2.cvtColor(img1, cv2.COLOR_RGB2GRAY)

imgGray2 = cv2.cvtColor(img2, cv2.COLOR_RGB2GRAY)

################################################################################

print 'SURF Feature Detection'

# initialize ORB object with default values

surf = cv2.SURF(800)

# find keypoints

keypoint1, descriptor1 = surf.detectAndCompute(imgGray1, None)

keypoint2, descriptor2 = surf.detectAndCompute(imgGray2, None)

################################################################################

def keypointToPoint(keypoint):

'''

from keypoints to points

'''

point = np.zeros(len(keypoint) * 2, np.float32)

for i in range(len(keypoint)):

point[i * 2] = keypoint[i].pt[0]

point[i * 2 + 1] = keypoint[i].pt[1]

point = point.reshape(-1,2)

return point

point1 = keypointToPoint(keypoint1)

rightFeatures = keypointToPoint(keypoint2)

################################################################################

print 'Calculate the Optical Flow Field'

# how each left points moved across the 2 images

lkParams = dict(winSize=(15,15), maxLevel=2, criteria=(3L,10,0.03))

point2, status, error = cv2.calcOpticalFlowPyrLK(imgGray1, imgGray2, point1, None, **lkParams)

# filter out points with high error

rightLowErrPoints = {}

for i in range(len(point2)):

if status[i][0] == 1 and error[i][0] < 12:

rightLowErrPoints[i] = point2[i]

else:

status[i] = 0

bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)

matches = bf.match(descriptor1, descriptor2)

print 'matches:', len(matches)

dist = []

for m in matches:

dist.append(m.distance)

# distance threshold

thresDist = np.median(dist)

good = []

for m in matches:

if m.distance < thresDist:

good.append(m)

print 'Good Matches:', len(good)

################################################################################

# select keypoints from good matches

points1 = []

points2 = []

for m in good:

points1.append(keypoint1[m.queryIdx].pt)

points2.append(keypoint2[m.trainIdx].pt)

points1 = np.float32(points1)

points2 = np.float32(points2)

################################################################################

# combine two images into one

view = drawMatches(img1, img2, points1, points2, colors)

img5, img3 = drawEpilines(img1, img2, points1, points2)

displayMatchImage(view, img5, img3)

# camera matrix from calibration

K = np.array([[517.67386649, 0.0, 268.65952163], [0.0, 519.75461699, 215.58959128], [0.0, 0.0, 1.0]])

P, P1, E = calcPespectiveMat(K, F)

pointCloudX, pointCloudY, pointCloudZ, reprojError = triangulatePoints(points1, points2, K, E, P, P1)

positionX, positionY, positionZ = transformToPosition(pointCloudX, pointCloudY, pointCloudZ, P1, K, scale=10.0)

plotPointCloud(positionX, positionY, positionZ, colors)

################################################################################

print 'Goodbye!'

4. 实验结果

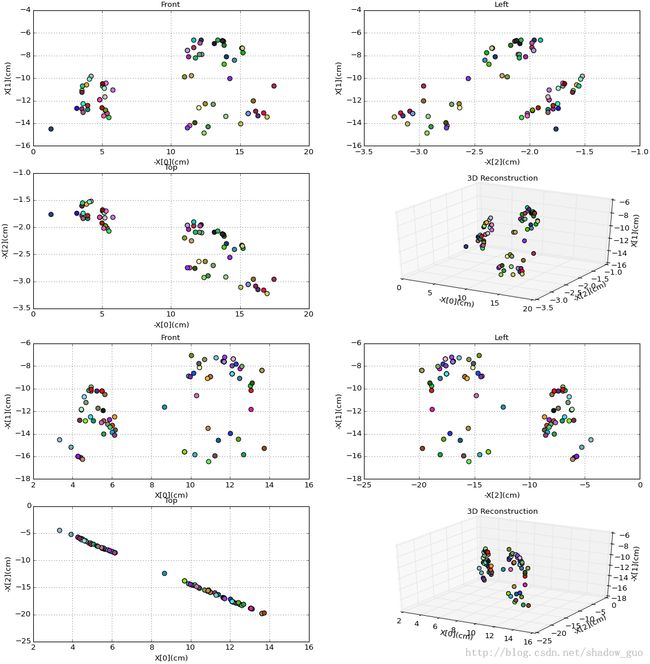

(1)基于SIFT特征提取的重建结果

先给出SIFT特征提取和外极线的计算结果,匹配很完美,外极线的计算也暂时看不出来什么问题。

再给出特征点三维重建后的结果。上图左半部分的特征点对应下图上半部分的特征点;上图右半部分的特征点对应下图下半部分的特征点。机器猫实际测量的高度约为10cm,宽度约为7cm。重投影误差为1.058632472和8.405183629个像素点。

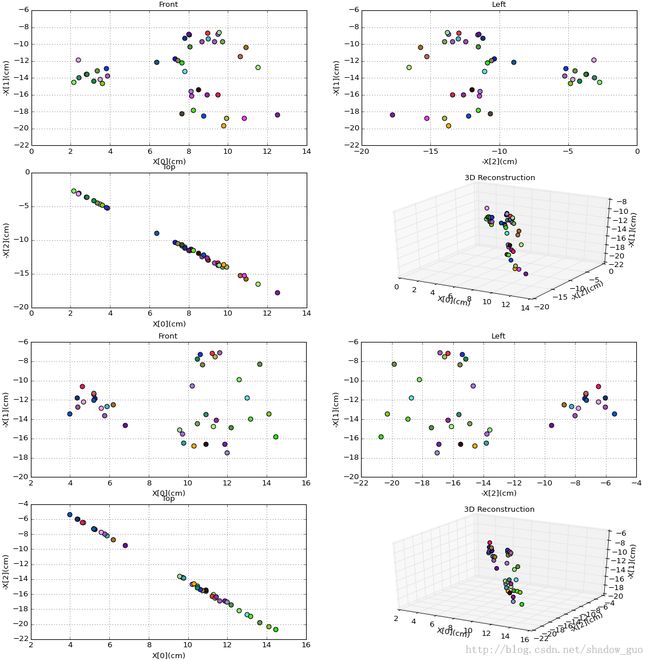

(2)基于SURF特征点提取和金字塔LK光流法的重建结果

继续给出改进后的特征点匹配和外极线计算结果。特征点匹配也很完美,外极线计算的结果和上面不一样。实际上,笔者采集这四张图片摄像头的运动范围很小;但上图上半部分最后的俯视图特征点的深度信息完全不接近一条直线,和实物对应的特征点聚类的情况不一致;上图外极点从中间跳跃到左边,可见SIFT特征提取对外极线计算的敏感。然而,下图外极点和外极线变化都不大。

最后给出改进后的三维重建结果。重建后的俯视图特征点接近一条直线,特征点分布更加符合实际情况。重投影误差分别为15.65128912和9.086802621个像素点,所以SIFT给出的重投影误差更小,说明仿射结构的准确性和重投影误差有关系,但没有那种你准我就一定小的关系。图片大小为394*524个像素点,所以改进后在更加贴近实际仿射结构的同时,重投影后的误差也挺小。

结语

本篇给出的是当前经典的单目视觉三维重建的思路,前一篇提到结果不太准,后来想想,从01年开始就有了从运动估计仿射结构的算法,当时不准可能是因为摄像头的制作工艺还不够,很多摄像头的模型没有今天的摄像头更加贴近理论假设;笔者采集的图像运动范围不大;采集的图像中选择的物体特征也算清晰。总之,结果不至于不准到不可用的地步。笔者觉得结果不准一方面是自己在编写代码时有些细节没理清,另一方面是对OpenCV库函数的参数的单位没了解,导致单位换算的问题出现。全文都在讲改进的地方,但本质上个人认为没有改进一说,只是根据现实的要求,在特征点过匹配和仿射结构的合理性之间将权衡点往后者移动了一点点。