UFLDL Tutorial_Sparse Autoencoder

Neural Networks

Consider a supervised learning problem where we have access to labeled training examples (x(i),y(i)). Neural networks give a way of defining a complex, non-linear form of hypotheses hW,b(x), with parameters W,b that we can fit to our data.

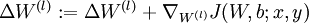

To describe neural networks, we will begin by describing the simplest possible neural network, one which comprises a single "neuron." We will use the following diagram to denote a single neuron:

This "neuron" is a computational unit that takes as input x1,x2,x3 (and a +1 intercept term), and outputs ![]() , where

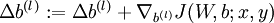

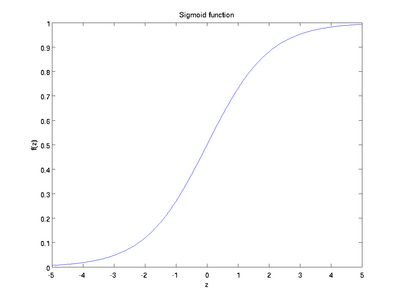

, where ![]() is called the activation function. In these notes, we will choose

is called the activation function. In these notes, we will choose ![]() to be the sigmoid function:

to be the sigmoid function:

Thus, our single neuron corresponds exactly to the input-output mapping defined by logistic regression.

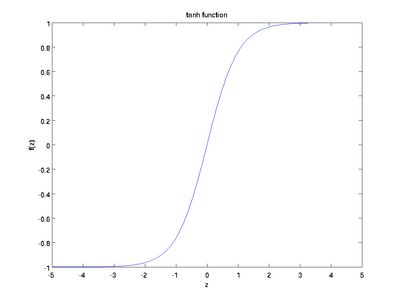

Although these notes will use the sigmoid function, it is worth noting that another common choice for f is the hyperbolic tangent, or tanh, function:

Here are plots of the sigmoid and tanh functions:

The tanh(z) function is a rescaled version of the sigmoid, and its output range is [ − 1,1] instead of [0,1].

Note that unlike some other venues (including the OpenClassroom videos, and parts of CS229), we are not using the convention here of x0 = 1. Instead, the intercept term is handled separately by the parameter b.

Finally, one identity that'll be useful later: If f(z) = 1 / (1 + exp( − z)) is the sigmoid function, then its derivative is given by f'(z) = f(z)(1 − f(z)). (If f is the tanh function, then its derivative is given by f'(z) = 1 − (f(z))2.) You can derive this yourself using the definition of the sigmoid (or tanh) function.

Neural Network model

A neural network is put together by hooking together many of our simple "neurons," so that the output of a neuron can be the input of another. For example, here is a small neural network:

In this figure, we have used circles to also denote the inputs to the network. The circles labeled "+1" are called bias units, and correspond to the intercept term. The leftmost layer of the network is called the input layer, and the rightmost layer the output layer (which, in this example, has only one node). The middle layer of nodes is called the hidden layer, because its values are not observed in the training set. We also say that our example neural network has 3 input units (not counting the bias unit), 3hidden units, and 1 output unit.

We will let nl denote the number of layers in our network; thus nl = 3 in our example. We label layer l as Ll, so layer L1 is the input layer, and layer ![]() the output layer. Our neural network has parameters (W,b) = (W(1),b(1),W(2),b(2)), where we write

the output layer. Our neural network has parameters (W,b) = (W(1),b(1),W(2),b(2)), where we write ![]() to denote the parameter (or weight) associated with the connection between unit j in layer l, and unit i in layer l + 1. (Note the order of the indices.) Also,

to denote the parameter (or weight) associated with the connection between unit j in layer l, and unit i in layer l + 1. (Note the order of the indices.) Also, ![]() is the bias associated with unit i in layer l + 1. Thus, in our example, we have

is the bias associated with unit i in layer l + 1. Thus, in our example, we have ![]() , and

, and ![]() . Note that bias units don't have inputs or connections going into them, since they always output the value +1. We also let sl denote the number of nodes in layer l (not counting the bias unit).

. Note that bias units don't have inputs or connections going into them, since they always output the value +1. We also let sl denote the number of nodes in layer l (not counting the bias unit).

We will write ![]() to denote the activation (meaning output value) of unit i in layer l. For l = 1, we also use

to denote the activation (meaning output value) of unit i in layer l. For l = 1, we also use ![]() to denote the i-th input. Given a fixed setting of the parameters W,b, our neural network defines a hypothesis hW,b(x) that outputs a real number. Specifically, the computation that this neural network represents is given by:

to denote the i-th input. Given a fixed setting of the parameters W,b, our neural network defines a hypothesis hW,b(x) that outputs a real number. Specifically, the computation that this neural network represents is given by:

In the sequel, we also let ![]() denote the total weighted sum of inputs to unit i in layer l, including the bias term (e.g.,

denote the total weighted sum of inputs to unit i in layer l, including the bias term (e.g., ![]() ), so that

), so that ![]() .

.

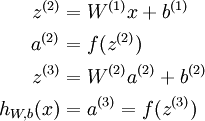

Note that this easily lends itself to a more compact notation. Specifically, if we extend the activation function ![]() to apply to vectors in an element-wise fashion (i.e., f([z1,z2,z3]) = [f(z1),f(z2),f(z3)]), then we can write the equations above more compactly as:

to apply to vectors in an element-wise fashion (i.e., f([z1,z2,z3]) = [f(z1),f(z2),f(z3)]), then we can write the equations above more compactly as:

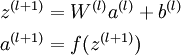

We call this step forward propagation. More generally, recalling that we also use a(1) = x to also denote the values from the input layer, then given layer l's activations a(l), we can compute layer l + 1's activations a(l + 1) as:

By organizing our parameters in matrices and using matrix-vector operations, we can take advantage of fast linear algebra routines to quickly perform calculations in our network.

We have so far focused on one example neural network, but one can also build neural networks with other architectures (meaning patterns of connectivity between neurons), including ones with multiple hidden layers. The most common choice is a ![]() -layered network where layer

-layered network where layer ![]() is the input layer, layer

is the input layer, layer ![]() is the output layer, and each layer

is the output layer, and each layer ![]() is densely connected to layer

is densely connected to layer ![]() . In this setting, to compute the output of the network, we can successively compute all the activations in layer

. In this setting, to compute the output of the network, we can successively compute all the activations in layer ![]() , then layer

, then layer ![]() , and so on, up to layer

, and so on, up to layer ![]() , using the equations above that describe the forward propagation step. This is one example of a feedforwardneural network, since the connectivity graph does not have any directed loops or cycles.

, using the equations above that describe the forward propagation step. This is one example of a feedforwardneural network, since the connectivity graph does not have any directed loops or cycles.

Neural networks can also have multiple output units. For example, here is a network with two hidden layers layers L2 and L3 and two output units in layer L4:

To train this network, we would need training examples (x(i),y(i)) where ![]() . This sort of network is useful if there're multiple outputs that you're interested in predicting. (For example, in a medical diagnosis application, the vector x might give the input features of a patient, and the different outputs yi's might indicate presence or absence of different diseases.)

. This sort of network is useful if there're multiple outputs that you're interested in predicting. (For example, in a medical diagnosis application, the vector x might give the input features of a patient, and the different outputs yi's might indicate presence or absence of different diseases.)

Backpropagation Algorithm

Suppose we have a fixed training set ![]() of m training examples. We can train our neural network using batch gradient descent. In detail, for a single training example (x,y), we define the cost function with respect to that single example to be:

of m training examples. We can train our neural network using batch gradient descent. In detail, for a single training example (x,y), we define the cost function with respect to that single example to be:

This is a (one-half) squared-error cost function. Given a training set of m examples, we then define the overall cost function to be:

The first term in the definition of J(W,b) is an average sum-of-squares error term. The second term is a regularization term (also called a weight decay term) that tends to decrease the magnitude of the weights, and helps prevent overfitting.

[Note: Usually weight decay is not applied to the bias terms ![]() , as reflected in our definition for J(W,b). Applying weight decay to the bias units usually makes only a small difference to the final network, however. If you've taken CS229 (Machine Learning) at Stanford or watched the course's videos on YouTube, you may also recognize this weight decay as essentially a variant of the Bayesian regularization method you saw there, where we placed a Gaussian prior on the parameters and did MAP (instead of maximum likelihood) estimation.]

, as reflected in our definition for J(W,b). Applying weight decay to the bias units usually makes only a small difference to the final network, however. If you've taken CS229 (Machine Learning) at Stanford or watched the course's videos on YouTube, you may also recognize this weight decay as essentially a variant of the Bayesian regularization method you saw there, where we placed a Gaussian prior on the parameters and did MAP (instead of maximum likelihood) estimation.]

The weight decay parameter λ controls the relative importance of the two terms. Note also the slightly overloaded notation:J(W,b;x,y) is the squared error cost with respect to a single example; J(W,b) is the overall cost function, which includes the weight decay term.

This cost function above is often used both for classification and for regression problems. For classification, we let y = 0 or 1represent the two class labels (recall that the sigmoid activation function outputs values in [0,1]; if we were using a tanh activation function, we would instead use -1 and +1 to denote the labels). For regression problems, we first scale our outputs to ensure that they lie in the [0,1] range (or if we were using a tanh activation function, then the [ − 1,1] range).

Our goal is to minimize J(W,b) as a function of W and b. To train our neural network, we will initialize each parameter ![]() and each

and each ![]() to a small random value near zero (say according to a Normal(0,ε2) distribution for some small ε, say 0.01), and then apply an optimization algorithm such as batch gradient descent. Since J(W,b) is a non-convex function, gradient descent is susceptible to local optima; however, in practice gradient descent usually works fairly well. Finally, note that it is important to initialize the parameters randomly, rather than to all 0's. If all the parameters start off at identical values, then all the hidden layer units will end up learning the same function of the input (more formally,

to a small random value near zero (say according to a Normal(0,ε2) distribution for some small ε, say 0.01), and then apply an optimization algorithm such as batch gradient descent. Since J(W,b) is a non-convex function, gradient descent is susceptible to local optima; however, in practice gradient descent usually works fairly well. Finally, note that it is important to initialize the parameters randomly, rather than to all 0's. If all the parameters start off at identical values, then all the hidden layer units will end up learning the same function of the input (more formally, ![]() will be the same for all values of i, so that

will be the same for all values of i, so that ![]() for any input x). The random initialization serves the purpose of symmetry breaking.

for any input x). The random initialization serves the purpose of symmetry breaking.

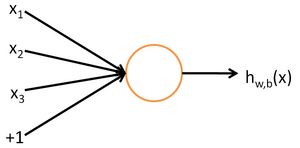

One iteration of gradient descent updates the parameters W,b as follows:

where α is the learning rate. The key step is computing the partial derivatives above. We will now describe the backpropagationalgorithm, which gives an efficient way to compute these partial derivatives.

We will first describe how backpropagation can be used to compute ![]() and

and ![]() , the partial derivatives of the cost function J(W,b;x,y) defined with respect to a single example (x,y). Once we can compute these, we see that the derivative of the overall cost function J(W,b) can be computed as:

, the partial derivatives of the cost function J(W,b;x,y) defined with respect to a single example (x,y). Once we can compute these, we see that the derivative of the overall cost function J(W,b) can be computed as:

The two lines above differ slightly because weight decay is applied to W but not b.

The intuition behind the backpropagation algorithm is as follows. Given a training example (x,y), we will first run a "forward pass" to compute all the activations throughout the network, including the output value of the hypothesis hW,b(x). Then, for each node i in layer l, we would like to compute an "error term" ![]() that measures how much that node was "responsible" for any errors in our output. For an output node, we can directly measure the difference between the network's activation and the true target value, and use that to define

that measures how much that node was "responsible" for any errors in our output. For an output node, we can directly measure the difference between the network's activation and the true target value, and use that to define ![]() (where layer nl is the output layer). How about hidden units? For those, we will compute

(where layer nl is the output layer). How about hidden units? For those, we will compute ![]() based on a weighted average of the error terms of the nodes that uses

based on a weighted average of the error terms of the nodes that uses ![]() as an input. In detail, here is the backpropagation algorithm:

as an input. In detail, here is the backpropagation algorithm:

- Perform a feedforward pass, computing the activations for layers L2, L3, and so on up to the output layer

.

. - For each output unit i in layer nl (the output layer), set

- For

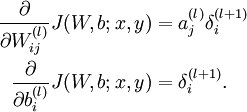

- Compute the desired partial derivatives, which are given as:

Finally, we can also re-write the algorithm using matrix-vectorial notation. We will use "![]() " to denote the element-wise product operator (denoted ".*" in Matlab or Octave, and also called the Hadamard product), so that if

" to denote the element-wise product operator (denoted ".*" in Matlab or Octave, and also called the Hadamard product), so that if ![]() , then

, then ![]() . Similar to how we extended the definition of

. Similar to how we extended the definition of ![]() to apply element-wise to vectors, we also do the same for

to apply element-wise to vectors, we also do the same for ![]() (so that

(so that ![]() ).

).

The algorithm can then be written:

- Perform a feedforward pass, computing the activations for layers

,

,  , up to the output layer

, up to the output layer  , using the equations defining the forward propagation steps

, using the equations defining the forward propagation steps - For the output layer (layer

), set

), set

-

- For

-

Set

-

-

Set

- Compute the desired partial derivatives:

Implementation note: In steps 2 and 3 above, we need to compute ![]() for each value of

for each value of ![]() . Assuming

. Assuming ![]() is the sigmoid activation function, we would already have

is the sigmoid activation function, we would already have ![]() stored away from the forward pass through the network. Thus, using the expression that we worked out earlier for

stored away from the forward pass through the network. Thus, using the expression that we worked out earlier for ![]() , we can compute this as

, we can compute this as ![]() .

.

Finally, we are ready to describe the full gradient descent algorithm. In the pseudo-code below, ![]() is a matrix (of the same dimension as

is a matrix (of the same dimension as ![]() ), and

), and ![]() is a vector (of the same dimension as

is a vector (of the same dimension as ![]() ). Note that in this notation, "

). Note that in this notation, "![]() " is a matrix, and in particular it isn't "

" is a matrix, and in particular it isn't "![]() times

times ![]() ." We implement one iteration of batch gradient descent as follows:

." We implement one iteration of batch gradient descent as follows:

- Set

,

,  (matrix/vector of zeros) for all

(matrix/vector of zeros) for all  .

. - For

to

to  ,

,

- Use backpropagation to compute

and

and  .

. - Set

.

. - Set

.

.

- Use backpropagation to compute

- Update the parameters:

To train our neural network, we can now repeatedly take steps of gradient descent to reduce our cost function ![]() .

.

Gradient checking and advanced optimization

Backpropagation is a notoriously difficult algorithm to debug and get right, especially since many subtly buggy implementations of it—for example, one that has an off-by-one error in the indices and that thus only trains some of the layers of weights, or an implementation that omits the bias term—will manage to learn something that can look surprisingly reasonable (while performing less well than a correct implementation). Thus, even with a buggy implementation, it may not at all be apparent that anything is amiss. In this section, we describe a method for numerically checking the derivatives computed by your code to make sure that your implementation is correct. Carrying out the derivative checking procedure described here will significantly increase your confidence in the correctness of your code.

Suppose we want to minimize ![]() as a function of

as a function of ![]() . For this example, suppose

. For this example, suppose ![]() , so that

, so that ![]() . In this 1-dimensional case, one iteration of gradient descent is given by

. In this 1-dimensional case, one iteration of gradient descent is given by

Suppose also that we have implemented some function ![]() that purportedly computes

that purportedly computes ![]() , so that we implement gradient descent using the update

, so that we implement gradient descent using the update ![]() . How can we check if our implementation of

. How can we check if our implementation of ![]() is correct?

is correct?

Recall the mathematical definition of the derivative as

Thus, at any specific value of ![]() , we can numerically approximate the derivative as follows:

, we can numerically approximate the derivative as follows:

In practice, we set EPSILON to a small constant, say around ![]() . (There's a large range of values of EPSILON that should work well, but we don't set EPSILON to be "extremely" small, say

. (There's a large range of values of EPSILON that should work well, but we don't set EPSILON to be "extremely" small, say ![]() , as that would lead to numerical roundoff errors.)

, as that would lead to numerical roundoff errors.)

Thus, given a function ![]() that is supposedly computing

that is supposedly computing ![]() , we can now numerically verify its correctness by checking that

, we can now numerically verify its correctness by checking that

The degree to which these two values should approximate each other will depend on the details of ![]() . But assuming

. But assuming ![]() , you'll usually find that the left- and right-hand sides of the above will agree to at least 4 significant digits (and often many more).

, you'll usually find that the left- and right-hand sides of the above will agree to at least 4 significant digits (and often many more).

Now, consider the case where ![]() is a vector rather than a single real number (so that we have

is a vector rather than a single real number (so that we have ![]() parameters that we want to learn), and

parameters that we want to learn), and ![]() . In our neural network example we used "

. In our neural network example we used "![]() ," but one can imagine "unrolling" the parameters

," but one can imagine "unrolling" the parameters ![]() into a long vector

into a long vector ![]() . We now generalize our derivative checking procedure to the case where

. We now generalize our derivative checking procedure to the case where ![]() may be a vector.

may be a vector.

Suppose we have a function ![]() that purportedly computes

that purportedly computes ![]() ; we'd like to check if

; we'd like to check if ![]() is outputting correct derivative values. Let

is outputting correct derivative values. Let ![]() , where

, where

is the ![]() -th basis vector (a vector of the same dimension as

-th basis vector (a vector of the same dimension as ![]() , with a "1" in the

, with a "1" in the ![]() -th position and "0"s everywhere else). So,

-th position and "0"s everywhere else). So, ![]() is the same as

is the same as ![]() , except its

, except its ![]() -th element has been incremented by EPSILON. Similarly, let

-th element has been incremented by EPSILON. Similarly, let ![]() be the corresponding vector with the

be the corresponding vector with the ![]() -th element decreased by EPSILON. We can now numerically verify

-th element decreased by EPSILON. We can now numerically verify ![]() 's correctness by checking, for each

's correctness by checking, for each ![]() , that:

, that:

When implementing backpropagation to train a neural network, in a correct implementation we will have that

This result shows that the final block of psuedo-code in Backpropagation Algorithm is indeed implementing gradient descent. To make sure your implementation of gradient descent is correct, it is usually very helpful to use the method described above to numerically compute the derivatives of ![]() , and thereby verify that your computations of

, and thereby verify that your computations of ![]() and

and ![]() are indeed giving the derivatives you want.

are indeed giving the derivatives you want.

Finally, so far our discussion has centered on using gradient descent to minimize ![]() . If you have implemented a function that computes

. If you have implemented a function that computes ![]() and

and ![]() , it turns out there are more sophisticated algorithms than gradient descent for trying to minimize

, it turns out there are more sophisticated algorithms than gradient descent for trying to minimize ![]() . For example, one can envision an algorithm that uses gradient descent, but automatically tunes the learning rate

. For example, one can envision an algorithm that uses gradient descent, but automatically tunes the learning rate ![]() so as to try to use a step-size that causes

so as to try to use a step-size that causes ![]() to approach a local optimum as quickly as possible. There are other algorithms that are even more sophisticated than this; for example, there are algorithms that try to find an approximation to the Hessian matrix, so that it can take more rapid steps towards a local optimum (similar to Newton's method). A full discussion of these algorithms is beyond the scope of these notes, but one example is the L-BFGS algorithm. (Another example is the conjugate gradient algorithm.) You will use one of these algorithms in the programming exercise. The main thing you need to provide to these advanced optimization algorithms is that for any

to approach a local optimum as quickly as possible. There are other algorithms that are even more sophisticated than this; for example, there are algorithms that try to find an approximation to the Hessian matrix, so that it can take more rapid steps towards a local optimum (similar to Newton's method). A full discussion of these algorithms is beyond the scope of these notes, but one example is the L-BFGS algorithm. (Another example is the conjugate gradient algorithm.) You will use one of these algorithms in the programming exercise. The main thing you need to provide to these advanced optimization algorithms is that for any ![]() , you have to be able to compute

, you have to be able to compute ![]() and

and ![]() . These optimization algorithms will then do their own internal tuning of the learning rate/step-size

. These optimization algorithms will then do their own internal tuning of the learning rate/step-size ![]() (and compute its own approximation to the Hessian, etc.) to automatically search for a value of

(and compute its own approximation to the Hessian, etc.) to automatically search for a value of ![]() that minimizes

that minimizes ![]() . Algorithms such as L-BFGS and conjugate gradient can often be much faster than gradient descent.

. Algorithms such as L-BFGS and conjugate gradient can often be much faster than gradient descent.

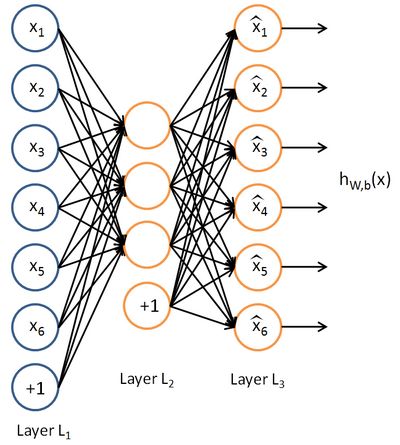

Autoencoders and Sparsity

So far, we have described the application of neural networks to supervised learning, in which we have labeled training examples. Now suppose we have only a set of unlabeled training examples ![]() , where

, where ![]() . An autoencoder neural network is an unsupervised learning algorithm that applies backpropagation, setting the target values to be equal to the inputs. I.e., it uses

. An autoencoder neural network is an unsupervised learning algorithm that applies backpropagation, setting the target values to be equal to the inputs. I.e., it uses![]() .

.

Here is an autoencoder:

The autoencoder tries to learn a function ![]() . In other words, it is trying to learn an approximation to the identity function, so as to output

. In other words, it is trying to learn an approximation to the identity function, so as to output ![]() that is similar to

that is similar to ![]() . The identity function seems a particularly trivial function to be trying to learn; but by placing constraints on the network, such as by limiting the number of hidden units, we can discover interesting structure about the data. As a concrete example, suppose the inputs

. The identity function seems a particularly trivial function to be trying to learn; but by placing constraints on the network, such as by limiting the number of hidden units, we can discover interesting structure about the data. As a concrete example, suppose the inputs ![]() are the pixel intensity values from a

are the pixel intensity values from a ![]() image (100 pixels) so

image (100 pixels) so ![]() , and there are

, and there are ![]() hidden units in layer

hidden units in layer ![]() . Note that we also have

. Note that we also have ![]() . Since there are only 50 hidden units, the network is forced to learn a compressed representation of the input. I.e., given only the vector of hidden unit activations

. Since there are only 50 hidden units, the network is forced to learn a compressed representation of the input. I.e., given only the vector of hidden unit activations ![]() , it must try to reconstruct the 100-pixel input

, it must try to reconstruct the 100-pixel input ![]() . If the input were completely random---say, each

. If the input were completely random---say, each ![]() comes from an IID Gaussian independent of the other features---then this compression task would be very difficult. But if there is structure in the data, for example, if some of the input features are correlated, then this algorithm will be able to discover some of those correlations. In fact, this simple autoencoder often ends up learning a low-dimensional representation very similar to PCAs.

comes from an IID Gaussian independent of the other features---then this compression task would be very difficult. But if there is structure in the data, for example, if some of the input features are correlated, then this algorithm will be able to discover some of those correlations. In fact, this simple autoencoder often ends up learning a low-dimensional representation very similar to PCAs.

Our argument above relied on the number of hidden units ![]() being small. But even when the number of hidden units is large (perhaps even greater than the number of input pixels), we can still discover interesting structure, by imposing other constraints on the network. In particular, if we impose a sparsity constraint on the hidden units, then the autoencoder will still discover interesting structure in the data, even if the number of hidden units is large.

being small. But even when the number of hidden units is large (perhaps even greater than the number of input pixels), we can still discover interesting structure, by imposing other constraints on the network. In particular, if we impose a sparsity constraint on the hidden units, then the autoencoder will still discover interesting structure in the data, even if the number of hidden units is large.

Informally, we will think of a neuron as being "active" (or as "firing") if its output value is close to 1, or as being "inactive" if its output value is close to 0. We would like to constrain the neurons to be inactive most of the time. This discussion assumes a sigmoid activation function. If you are using a tanh activation function, then we think of a neuron as being inactive when it outputs values close to -1.

Recall that ![]() denotes the activation of hidden unit

denotes the activation of hidden unit ![]() in the autoencoder. However, this notation doesn't make explicit what was the input

in the autoencoder. However, this notation doesn't make explicit what was the input ![]() that led to that activation. Thus, we will write

that led to that activation. Thus, we will write ![]() to denote the activation of this hidden unit when the network is given a specific input

to denote the activation of this hidden unit when the network is given a specific input ![]() . Further, let

. Further, let

be the average activation of hidden unit ![]() (averaged over the training set). We would like to (approximately) enforce the constraint

(averaged over the training set). We would like to (approximately) enforce the constraint

where ![]() is a sparsity parameter, typically a small value close to zero (say

is a sparsity parameter, typically a small value close to zero (say ![]() ). In other words, we would like the average activation of each hidden neuron

). In other words, we would like the average activation of each hidden neuron ![]() to be close to 0.05 (say). To satisfy this constraint, the hidden unit's activations must mostly be near 0.

to be close to 0.05 (say). To satisfy this constraint, the hidden unit's activations must mostly be near 0.

To achieve this, we will add an extra penalty term to our optimization objective that penalizes ![]() deviating significantly from

deviating significantly from ![]() . Many choices of the penalty term will give reasonable results. We will choose the following:

. Many choices of the penalty term will give reasonable results. We will choose the following:

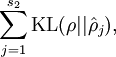

Here, ![]() is the number of neurons in the hidden layer, and the index

is the number of neurons in the hidden layer, and the index ![]() is summing over the hidden units in our network. If you are familiar with the concept of KL divergence, this penalty term is based on it, and can also be written

is summing over the hidden units in our network. If you are familiar with the concept of KL divergence, this penalty term is based on it, and can also be written

where ![]() is the Kullback-Leibler (KL) divergence between a Bernoulli random variable with mean

is the Kullback-Leibler (KL) divergence between a Bernoulli random variable with mean ![]() and a Bernoulli random variable with mean

and a Bernoulli random variable with mean ![]() . KL-divergence is a standard function for measuring how different two different distributions are. (If you've not seen KL-divergence before, don't worry about it; everything you need to know about it is contained in these notes.)

. KL-divergence is a standard function for measuring how different two different distributions are. (If you've not seen KL-divergence before, don't worry about it; everything you need to know about it is contained in these notes.)

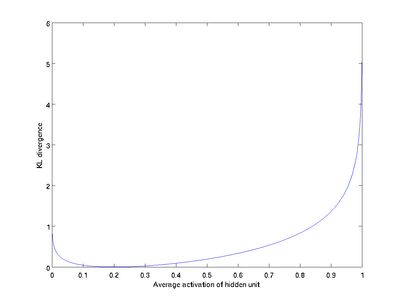

This penalty function has the property that ![]() if

if ![]() , and otherwise it increases monotonically as

, and otherwise it increases monotonically as ![]() diverges from

diverges from ![]() . For example, in the figure below, we have set

. For example, in the figure below, we have set ![]() , and plotted

, and plotted ![]() for a range of values of

for a range of values of ![]() :

:

We see that the KL-divergence reaches its minimum of 0 at ![]() , and blows up (it actually approaches

, and blows up (it actually approaches ![]() ) as

) as ![]() approaches 0 or 1. Thus, minimizing this penalty term has the effect of causing

approaches 0 or 1. Thus, minimizing this penalty term has the effect of causing ![]() to be close to

to be close to ![]() .

.

Our overall cost function is now

where ![]() is as defined previously, and

is as defined previously, and ![]() controls the weight of the sparsity penalty term. The term

controls the weight of the sparsity penalty term. The term ![]() (implicitly) depends on

(implicitly) depends on![]() also, because it is the average activation of hidden unit

also, because it is the average activation of hidden unit ![]() , and the activation of a hidden unit depends on the parameters

, and the activation of a hidden unit depends on the parameters ![]() .

.

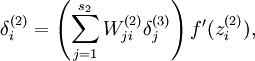

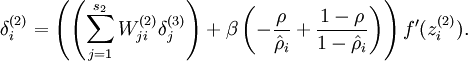

To incorporate the KL-divergence term into your derivative calculation, there is a simple-to-implement trick involving only a small change to your code. Specifically, where previously for the second layer (![]() ), during backpropagation you would have computed

), during backpropagation you would have computed

now instead compute

One subtlety is that you'll need to know ![]() to compute this term. Thus, you'll need to compute a forward pass on all the training examples first to compute the average activations on the training set, before computing backpropagation on any example. If your training set is small enough to fit comfortably in computer memory (this will be the case for the programming assignment), you can compute forward passes on all your examples and keep the resulting activations in memory and compute the

to compute this term. Thus, you'll need to compute a forward pass on all the training examples first to compute the average activations on the training set, before computing backpropagation on any example. If your training set is small enough to fit comfortably in computer memory (this will be the case for the programming assignment), you can compute forward passes on all your examples and keep the resulting activations in memory and compute the ![]() s. Then you can use your precomputed activations to perform backpropagation on all your examples. If your data is too large to fit in memory, you may have to scan through your examples computing a forward pass on each to accumulate (sum up) the activations and compute

s. Then you can use your precomputed activations to perform backpropagation on all your examples. If your data is too large to fit in memory, you may have to scan through your examples computing a forward pass on each to accumulate (sum up) the activations and compute ![]() (discarding the result of each forward pass after you have taken its activations

(discarding the result of each forward pass after you have taken its activations ![]() into account for computing

into account for computing ![]() ). Then after having computed

). Then after having computed![]() , you'd have to redo the forward pass for each example so that you can do backpropagation on that example. In this latter case, you would end up computing a forward pass twice on each example in your training set, making it computationally less efficient.

, you'd have to redo the forward pass for each example so that you can do backpropagation on that example. In this latter case, you would end up computing a forward pass twice on each example in your training set, making it computationally less efficient.

The full derivation showing that the algorithm above results in gradient descent is beyond the scope of these notes. But if you implement the autoencoder using backpropagation modified this way, you will be performing gradient descent exactly on the objective![]() . Using the derivative checking method, you will be able to verify this for yourself as well.

. Using the derivative checking method, you will be able to verify this for yourself as well.

Visualizing a Trained Autoencoder

Having trained a (sparse) autoencoder, we would now like to visualize the function learned by the algorithm, to try to understand what it has learned. Consider the case of training an autoencoder on ![]() images, so that

images, so that ![]() . Each hidden unit

. Each hidden unit ![]() computes a function of the input:

computes a function of the input:

We will visualize the function computed by hidden unit ![]() ---which depends on the parameters

---which depends on the parameters ![]() (ignoring the bias term for now)---using a 2D image. In particular, we think of

(ignoring the bias term for now)---using a 2D image. In particular, we think of ![]() as some non-linear feature of the input

as some non-linear feature of the input ![]() . We ask: What input image

. We ask: What input image ![]() would cause

would cause ![]() to be maximally activated? (Less formally, what is the feature that hidden unit

to be maximally activated? (Less formally, what is the feature that hidden unit ![]() is looking for?) For this question to have a non-trivial answer, we must impose some constraints on

is looking for?) For this question to have a non-trivial answer, we must impose some constraints on ![]() . If we suppose that the input is norm constrained by

. If we suppose that the input is norm constrained by ![]() , then one can show (try doing this yourself) that the input which maximally activates hidden unit

, then one can show (try doing this yourself) that the input which maximally activates hidden unit ![]() is given by setting pixel

is given by setting pixel ![]() (for all 100 pixels,

(for all 100 pixels, ![]() ) to

) to

By displaying the image formed by these pixel intensity values, we can begin to understand what feature hidden unit ![]() is looking for.

is looking for.

If we have an autoencoder with 100 hidden units (say), then we our visualization will have 100 such images---one per hidden unit. By examining these 100 images, we can try to understand what the ensemble of hidden units is learning.

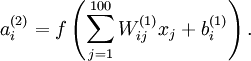

When we do this for a sparse autoencoder (trained with 100 hidden units on 10x10 pixel inputs1 we get the following result:

Each square in the figure above shows the (norm bounded) input image ![]() that maximally actives one of 100 hidden units. We see that the different hidden units have learned to detect edges at different positions and orientations in the image.

that maximally actives one of 100 hidden units. We see that the different hidden units have learned to detect edges at different positions and orientations in the image.

These features are, not surprisingly, useful for such tasks as object recognition and other vision tasks. When applied to other input domains (such as audio), this algorithm also learns useful representations/features for those domains too.

1 The learned features were obtained by training on whitened natural images. Whitening is a preprocessing step which removes redundancy in the input, by causing adjacent pixels to become less correlated.

Sparse Autoencoder Notation Summary

Here is a summary of the symbols used in our derivation of the sparse autoencoder:

| Symbol | Meaning |

|---|---|

| Input features for a training example, |

|

| Output/target values. Here, |

|

| The |

|

| Output of our hypothesis on input the same dimension as the target value |

|

| The parameter associated with the connection between unit unit |

|

| The bias term associated with unit |

|

| Our parameter vector. It is useful to think of this as the result of taking the parameters |

|

| Activation (output) of unit In addition, since layer |

|

| The activation function. Throughout these notes, we used |

|

| Total weighted sum of inputs to unit |

|

| Learning rate parameter | |

| Number of units in layer |

|

| Number layers in the network. Layer |

|

| Weight decay parameter. | |

| For an autoencoder, its output; i.e., its reconstruction of the input |

|

| Sparsity parameter, which specifies our desired level of sparsity | |

| The average activation of hidden unit |

|

| Weight of the sparsity penalty term (in the sparse autoencoder objective). |

Exercise:Sparse Autoencoder

Contents[hide]

|

Download Related Reading

- sparseae_reading.pdf

- sparseae_exercise.pdf

Sparse autoencoder implementation

In this problem set, you will implement the sparse autoencoder algorithm, and show how it discovers that edges are a good representation for natural images. (Images provided by Bruno Olshausen.) The sparse autoencoder algorithm is described in the lecture notes found on the course website.

In the file sparseae_exercise.zip, we have provided some starter code in Matlab. You should write your code at the places indicated in the files ("YOUR CODE HERE"). You have to complete the following files: sampleIMAGES.m, sparseAutoencoderCost.m, computeNumericalGradient.m. The starter code in train.m shows how these functions are used.

Specifically, in this exercise you will implement a sparse autoencoder, trained with 8×8 image patches using the L-BFGS optimization algorithm.

A note on the software: The provided .zip file includes a subdirectory minFunc with 3rd party software implementing L-BFGS, that is licensed under a Creative Commons, Attribute, Non-Commercial license. If you need to use this software for commercial purposes, you can download and use a different function (fminlbfgs) that can serve the same purpose, but runs ~3x slower for this exercise (and thus is less recommended). You can read more about this in the Fminlbfgs_Details page.

Step 1: Generate training set

The first step is to generate a training set. To get a single training example x, randomly pick one of the 10 images, then randomly sample an 8×8 image patch from the selected image, and convert the image patch (either in row-major order or column-major order; it doesn't matter) into a 64-dimensional vector to get a training example ![]()

Complete the code in sampleIMAGES.m. Your code should sample 10000 image patches and concatenate them into a 64×10000 matrix.

To make sure your implementation is working, run the code in "Step 1" of train.m. This should result in a plot of a random sample of 200 patches from the dataset.

Implementational tip: When we run our implemented sampleImages(), it takes under 5 seconds. If your implementation takes over 30 seconds, it may be because you are accidentally making a copy of an entire 512×512 image each time you're picking a random image. By copying a 512×512 image 10000 times, this can make your implementation much less efficient. While this doesn't slow down your code significantly for this exercise (because we have only 10000 examples), when we scale to much larger problems later this quarter with 106 or more examples, this will significantly slow down your code. Please implement sampleIMAGES so that you aren't making a copy of an entire 512×512 image each time you need to cut out an 8x8 image patch.

Step 2: Sparse autoencoder objective

Implement code to compute the sparse autoencoder cost function Jsparse(W,b) (Section 3 of the lecture notes) and the corresponding derivatives of Jsparse with respect to the different parameters. Use the sigmoid function for the activation function,![]() . In particular, complete the code in sparseAutoencoderCost.m.

. In particular, complete the code in sparseAutoencoderCost.m.

The sparse autoencoder is parameterized by matrices ![]() ,

, ![]() vectors

vectors ![]() ,

, ![]() . However, for subsequent notational convenience, we will "unroll" all of these parameters into a very long parameter vector θ with s1s2 + s2s3 +s2 + s3 elements. The code for converting between the (W(1),W(2),b(1),b(2)) and the θ parameterization is already provided in the starter code.

. However, for subsequent notational convenience, we will "unroll" all of these parameters into a very long parameter vector θ with s1s2 + s2s3 +s2 + s3 elements. The code for converting between the (W(1),W(2),b(1),b(2)) and the θ parameterization is already provided in the starter code.

Implementational tip: The objective Jsparse(W,b) contains 3 terms, corresponding to the squared error term, the weight decay term, and the sparsity penalty. You're welcome to implement this however you want, but for ease of debugging, you might implement the cost function and derivative computation (backpropagation) only for the squared error term first (this corresponds to setting λ = β = 0), and implement the gradient checking method in the next section to first verify that this code is correct. Then only after you have verified that the objective and derivative calculations corresponding to the squared error term are working, add in code to compute the weight decay and sparsity penalty terms and their corresponding derivatives.

Step 3: Gradient checking

Following Section 2.3 of the lecture notes, implement code for gradient checking. Specifically, complete the code incomputeNumericalGradient.m. Please use EPSILON = 10-4 as described in the lecture notes.

We've also provided code in checkNumericalGradient.m for you to test your code. This code defines a simple quadratic function ![]() given by

given by ![]() , and evaluates it at the point x = (4,10)T. It allows you to verify that your numerically evaluated gradient is very close to the true (analytically computed) gradient.

, and evaluates it at the point x = (4,10)T. It allows you to verify that your numerically evaluated gradient is very close to the true (analytically computed) gradient.

After using checkNumericalGradient.m to make sure your implementation is correct, next use computeNumericalGradient.m to make sure that yoursparseAutoencoderCost.m is computing derivatives correctly. For details, see Steps 3 in train.m. We strongly encourage you not to proceed to the next step until you've verified that your derivative computations are correct.

Implementational tip: If you are debugging your code, performing gradient checking on smaller models and smaller training sets (e.g., using only 10 training examples and 1-2 hidden units) may speed things up.

Step 4: Train the sparse autoencoder

Now that you have code that computes Jsparse and its derivatives, we're ready to minimize Jsparse with respect to its parameters, and thereby train our sparse autoencoder.

We will use the L-BFGS algorithm. This is provided to you in a function called minFunc (code provided by Mark Schmidt) included in the starter code. (For the purpose of this assignment, you only need to call minFunc with the default parameters. You do not need to know how L-BFGS works.) We have already provided code in train.m (Step 4) to call minFunc. The minFunc code assumes that the parameters to be optimized are a long parameter vector; so we will use the "θ" parameterization rather than the "(W(1),W(2),b(1),b(2))" parameterization when passing our parameters to it.

Train a sparse autoencoder with 64 input units, 25 hidden units, and 64 output units. In our starter code, we have provided a function for initializing the parameters. We initialize the biases ![]() to zero, and the weights

to zero, and the weights ![]() to random numbers drawn uniformly from the interval

to random numbers drawn uniformly from the interval ![\left[-\sqrt{\frac{6}{n_{\rm in}+n_{\rm out}+1}},\sqrt{\frac{6}{n_{\rm in}+n_{\rm out}+1}}\,\right]](http://img.e-com-net.com/image/info5/d7a2f5b579f14579991e13ab9993d1db.png) , where nin is the fan-in (the number of inputs feeding into a node) and nout is the fan-in (the number of units that a node feeds into).

, where nin is the fan-in (the number of inputs feeding into a node) and nout is the fan-in (the number of units that a node feeds into).

The values we provided for the various parameters (λ,β,ρ, etc.) should work, but feel free to play with different settings of the parameters as well.

Implementational tip: Once you have your backpropagation implementation correctly computing the derivatives (as verified using gradient checking in Step 3), when you are now using it with L-BFGS to optimize Jsparse(W,b), make sure you're not doing gradient-checking on every step. Backpropagation can be used to compute the derivatives of Jsparse(W,b) fairly efficiently, and if you were additionally computing the gradient numerically on every step, this would slow down your program significantly.

Step 5: Visualization

After training the autoencoder, use display_network.m to visualize the learned weights. (See train.m, Step 5.) Run "print -djpeg weights.jpg" to save the visualization to a file "weights.jpg" (which you will submit together with your code).

Results

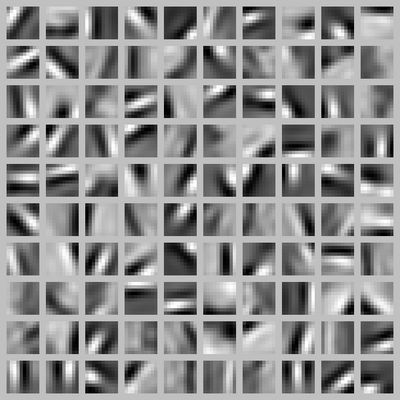

To successfully complete this assignment, you should demonstrate your sparse autoencoder algorithm learning a set of edge detectors. For example, this was the visualization we obtained:

Our implementation took around 5 minutes to run on a fast computer. In case you end up needing to try out multiple implementations or different parameter values, be sure to budget enough time for debugging and to run the experiments you'll need.

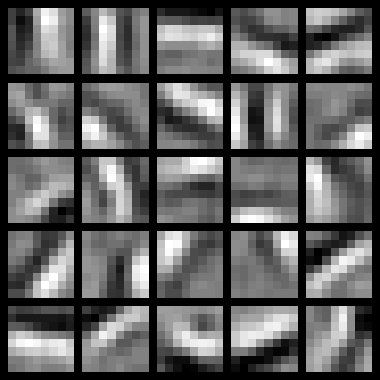

Also, by way of comparison, here are some visualizations from implementations that we do not consider successful (either a buggy implementation, or where the parameters were poorly tuned):

Contents

- CS294A/CS294W Programming Assignment Starter Code

- STEP 0: Here we provide the relevant parameters values that will

- STEP 1: Implement sampleIMAGES

- STEP 2: Implement sparseAutoencoderCost

- STEP 3: Gradient Checking

- STEP 4: After verifying that your implementation of

- STEP 5: Visualization

CS294A/CS294W Programming Assignment Starter Code

% Instructions % ------------ % % This file contains code that helps you get started on the % programming assignment. You will need to complete the code in sampleIMAGES.m, % sparseAutoencoderCost.m and computeNumericalGradient.m. % For the purpose of completing the assignment, you do not need to % change the code in this file. % %%======================================================================

STEP 0: Here we provide the relevant parameters values that will

allow your sparse autoencoder to get good filters; you do not need to change the parameters below.

visibleSize = 8*8; % number of input units hiddenSize = 25; % number of hidden units sparsityParam = 0.01; % desired average activation of the hidden units. % (This was denoted by the Greek alphabet rho, which looks like a lower-case "p", % in the lecture notes). lambda = 0.0001; % weight decay parameter beta = 3; % weight of sparsity penalty term %%======================================================================

STEP 1: Implement sampleIMAGES

After implementing sampleIMAGES, the display_network command should display a random sample of 200 patches from the dataset

patches = sampleIMAGES; display_network(patches(:,randi(size(patches,2),204,1)),8);%randi(size(patches,2),204,1) %为产生一个204维的列向量,每一维的值为0~10000 %中的随机数,说明是随机取204个patch来显示 % Obtain random parameters theta theta = initializeParameters(hiddenSize, visibleSize); %%======================================================================

Error using load Unable to read file 'IMAGES': no such file or directory. Error in sampleIMAGES (line 5) load IMAGES; % load images from disk Error in train (line 31) patches = sampleIMAGES;

STEP 2: Implement sparseAutoencoderCost

You can implement all of the components (squared error cost, weight decay term, sparsity penalty) in the cost function at once, but it may be easier to do it step-by-step and run gradient checking (see STEP 3) after each step. We suggest implementing the sparseAutoencoderCost function using the following steps:

(a) Implement forward propagation in your neural network, and implement the

squared error term of the cost function. Implement backpropagation to

compute the derivatives. Then (using lambda=beta=0), run Gradient Checking

to verify that the calculations corresponding to the squared error cost

term are correct.

(b) Add in the weight decay term (in both the cost function and the derivative

calculations), then re-run Gradient Checking to verify correctness.

(c) Add in the sparsity penalty term, then re-run Gradient Checking to

verify correctness.

Feel free to change the training settings when debugging your code. (For example, reducing the training set size or number of hidden units may make your code run faster; and setting beta and/or lambda to zero may be helpful for debugging.) However, in your final submission of the visualized weights, please use parameters we gave in Step 0 above.

[cost, grad] = sparseAutoencoderCost(theta, visibleSize, hiddenSize, lambda, ... sparsityParam, beta, patches); %%======================================================================

STEP 3: Gradient Checking

Hint: If you are debugging your code, performing gradient checking on smaller models and smaller training sets (e.g., using only 10 training examples and 1-2 hidden units) may speed things up.

% First, lets make sure your numerical gradient computation is correct for a % simple function. After you have implemented computeNumericalGradient.m, % run the following: %checkNumericalGradient(); % Now we can use it to check your cost function and derivative calculations % for the sparse autoencoder. % numgrad = computeNumericalGradient( @(x) sparseAutoencoderCost(x, visibleSize, ... % hiddenSize, lambda, ... % sparsityParam, beta, ... % patches), theta); % Use this to visually compare the gradients side by side %disp([numgrad grad]); % Compare numerically computed gradients with the ones obtained from backpropagation % diff = norm(numgrad-grad)/norm(numgrad+grad); % disp(diff); % Should be small. In our implementation, these values are % usually less than 1e-9. % When you got this working, Congratulations!!! %%======================================================================

STEP 4: After verifying that your implementation of

sparseAutoencoderCost is correct, You can start training your sparse autoencoder with minFunc (L-BFGS).

% Randomly initialize the parameters theta = initializeParameters(hiddenSize, visibleSize); % Use minFunc to minimize the function addpath minFunc/ options.Method = 'cg'; % Here, we use L-BFGS to optimize our cost % function. Generally, for minFunc to work, you % need a function pointer with two outputs: the % function value and the gradient. In our problem, % sparseAutoencoderCost.m satisfies this. options.maxIter = 400; % Maximum number of iterations of L-BFGS to run options.display = 'on'; [opttheta, cost] = minFunc( @(p) sparseAutoencoderCost(p, ... visibleSize, hiddenSize, ... lambda, sparsityParam, ... beta, patches), ... theta, options); %%======================================================================

STEP 5: Visualization

W1 = reshape(opttheta(1:hiddenSize*visibleSize), hiddenSize, visibleSize); b1 = opttheta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize); aaa = logsig(W1*patches+repmat(b1,1,10000)); ind=find(abs(aaa)<0.01); aaa2=aaa; aaa2(ind)=0; featureMatrix=aaa2; figure; display_network(W1', 12); print -djpeg weights.jpg % save the visualization to a file

Contents

- ---------- YOUR CODE HERE --------------------------------------

- ---------------------------------------------------------------

- ---------------------------------------------------------------

function patches = sampleIMAGES()

% sampleIMAGES % Returns 10000 patches for training load IMAGES; % load images from disk patchsize = 8; % we'll use 8x8 patches numpatches = 1000; % Initialize patches with zeros. Your code will fill in this matrix--one % column per patch, 10000 columns. patches = zeros(patchsize*patchsize, numpatches);

Error using load Unable to read file 'IMAGES': no such file or directory. Error in sampleIMAGES (line 5) load IMAGES; % load images from disk

---------- YOUR CODE HERE --------------------------------------

Instructions: Fill in the variable called "patches" using data from IMAGES.

IMAGES is a 3D array containing 10 images For instance, IMAGES(:,:,6) is a 512x512 array containing the 6th image, and you can type "imagesc(IMAGES(:,:,6)), colormap gray;" to visualize it. (The contrast on these images look a bit off because they have been preprocessed using using "whitening." See the lecture notes for more details.) As a second example, IMAGES(21:30,21:30,1) is an image patch corresponding to the pixels in the block (21,21) to (30,30) of Image 1

for imageNum = 1:10%在每张图片中随机选取1000个patch,共1000个patch [rowNum colNum] = size(IMAGES(:,:,imageNum)); for patchNum = 1:1000%实现每张图片选取1000个patch xPos = randi([1,rowNum-patchsize+1]); yPos = randi([1, colNum-patchsize+1]); patches(:,(imageNum-1)*1000+patchNum) = reshape(IMAGES(xPos:xPos+7,yPos:yPos+7,... imageNum),64,1); end end

---------------------------------------------------------------

For the autoencoder to work well we need to normalize the data Specifically, since the output of the network is bounded between [0,1] (due to the sigmoid activation function), we have to make sure the range of pixel values is also bounded between [0,1]

patches = normalizeData(patches);

end

---------------------------------------------------------------

function patches = normalizeData(patches) % Squash data to [0.1, 0.9] since we use sigmoid as the activation % function in the output layer % Remove DC (mean of images). patches = bsxfun(@minus, patches, mean(patches)); % Truncate to +/-3 standard deviations and scale to -1 to 1 pstd = 3 * std(patches(:)); patches = max(min(patches, pstd), -pstd) / pstd;%因为根据3sigma法则,95%以上的数据都在该区域内 % 这里转换后将数据变到了-1到1之间 % Rescale from [-1,1] to [0.1,0.9] patches = (patches + 1) * 0.4 + 0.1; end

function [h, array] = display_network(A, opt_normalize, opt_graycolor, cols, opt_colmajor) % This function visualizes filters in matrix A. Each column of A is a % filter. We will reshape each column into a square image and visualizes % on each cell of the visualization panel. % All other parameters are optional, usually you do not need to worry % about it. % opt_normalize: whether we need to normalize the filter so that all of % them can have similar contrast. Default value is true. % opt_graycolor: whether we use gray as the heat map. Default is true. % cols: how many columns are there in the display. Default value is the % squareroot of the number of columns in A. % opt_colmajor: you can switch convention to row major for A. In that % case, each row of A is a filter. Default value is false. warning off all if ~exist('opt_normalize', 'var') || isempty(opt_normalize) opt_normalize= true; end if ~exist('opt_graycolor', 'var') || isempty(opt_graycolor) opt_graycolor= true; end if ~exist('opt_colmajor', 'var') || isempty(opt_colmajor) opt_colmajor = false; end % rescale A = A - mean(A(:)); if opt_graycolor, colormap(gray); end % compute rows, cols [L M]=size(A); sz=sqrt(L); buf=1; if ~exist('cols', 'var') if floor(sqrt(M))^2 ~= M n=ceil(sqrt(M)); while mod(M, n)~=0 && n<1.2*sqrt(M), n=n+1; end m=ceil(M/n); else n=sqrt(M); m=n; end else n = cols; m = ceil(M/n); end array=-ones(buf+m*(sz+buf),buf+n*(sz+buf)); if ~opt_graycolor array = 0.1.* array; end if ~opt_colmajor k=1; for i=1:m for j=1:n if k>M, continue; end clim=max(abs(A(:,k))); if opt_normalize array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/clim; else array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/max(abs(A(:))); end k=k+1; end end else k=1; for j=1:n for i=1:m if k>M, continue; end clim=max(abs(A(:,k))); if opt_normalize array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz)/clim; else array(buf+(i-1)*(sz+buf)+(1:sz),buf+(j-1)*(sz+buf)+(1:sz))=reshape(A(:,k),sz,sz); end k=k+1; end end end if opt_graycolor h=imagesc(array,'EraseMode','none',[-1 1]); else h=imagesc(array,'EraseMode','none',[-1 1]); end axis image off drawnow; warning on all

Contents

- Initialize parameters randomly based on layer sizes.

function theta = initializeParameters(hiddenSize, visibleSize)

Initialize parameters randomly based on layer sizes.

r = sqrt(6) / sqrt(hiddenSize+visibleSize+1); % we'll choose weights uniformly from the interval [-r, r] W1 = rand(hiddenSize, visibleSize) * 2 * r - r; W2 = rand(visibleSize, hiddenSize) * 2 * r - r; b1 = zeros(hiddenSize, 1); b2 = zeros(visibleSize, 1); % Convert weights and bias gradients to the vector form. % This step will "unroll" (flatten and concatenate together) all % your parameters into a vector, which can then be used with minFunc. theta = [W1(:) ; W2(:) ; b1(:) ; b2(:)];

Error using initializeParameters (line 4) Not enough input arguments.

end

Contents

- ---------- YOUR CODE HERE --------------------------------------

function [cost,grad] = sparseAutoencoderCost(theta, visibleSize, hiddenSize, ... lambda, sparsityParam, beta, data)

% visibleSize: the number of input units (probably 64) % hiddenSize: the number of hidden units (probably 25) % lambda: weight decay parameter % sparsityParam: The desired average activation for the hidden units (denoted in the lecture % notes by the greek alphabet rho, which looks like a lower-case "p"). % beta: weight of sparsity penalty term % data: Our 64x10000 matrix containing the training data. So, data(:,i) is the i-th training example. % The input theta is a vector (because minFunc expects the parameters to be a vector). % We first convert theta to the (W1, W2, b1, b2) matrix/vector format, so that this % follows the notation convention of the lecture notes. %将长向量转换成每一层的权值矩阵和偏置向量值 W1 = reshape(theta(1:hiddenSize*visibleSize), hiddenSize, visibleSize); W2 = reshape(theta(hiddenSize*visibleSize+1:2*hiddenSize*visibleSize), visibleSize, hiddenSize); b1 = theta(2*hiddenSize*visibleSize+1:2*hiddenSize*visibleSize+hiddenSize); b2 = theta(2*hiddenSize*visibleSize+hiddenSize+1:end); % Cost and gradient variables (your code needs to compute these values). % Here, we initialize them to zeros. cost = 0; W1grad = zeros(size(W1)); W2grad = zeros(size(W2)); b1grad = zeros(size(b1)); b2grad = zeros(size(b2));

Error using sparseAutoencoderCost (line 17) Not enough input arguments.

---------- YOUR CODE HERE --------------------------------------

Instructions: Compute the cost/optimization objective J_sparse(W,b) for the Sparse Autoencoder,

and the corresponding gradients W1grad, W2grad, b1grad, b2grad.

W1grad, W2grad, b1grad and b2grad should be computed using backpropagation. Note that W1grad has the same dimensions as W1, b1grad has the same dimensions as b1, etc. Your code should set W1grad to be the partial derivative of J_sparse(W,b) with respect to W1. I.e., W1grad(i,j) should be the partial derivative of J_sparse(W,b) with respect to the input parameter W1(i,j). Thus, W1grad should be equal to the term [(1/m) \Delta W^{(1)} + \lambda W^{(1)}] in the last block of pseudo-code in Section 2.2 of the lecture notes (and similarly for W2grad, b1grad, b2grad).

Stated differently, if we were using batch gradient descent to optimize the parameters, the gradient descent update to W1 would be W1 := W1 - alpha * W1grad, and similarly for W2, b1, b2.

Jcost = 0;%直接误差 Jweight = 0;%权值惩罚 Jsparse = 0;%稀疏性惩罚 [n m] = size(data);%m为样本的个数,n为样本的特征数 %前向算法计算各神经网络节点的线性组合值和active值 z2 = W1*data+repmat(b1,1,m);%注意这里一定要将b1向量复制扩展成m列的矩阵 a2 = sigmoid(z2); z3 = W2*a2+repmat(b2,1,m); a3 = sigmoid(z3); % 计算预测产生的误差 Jcost = (0.5/m)*sum(sum((a3-data).^2)); %计算权值惩罚项 Jweight = (1/2)*(sum(sum(W1.^2))+sum(sum(W2.^2))); %计算稀释性规则项 rho = (1/m).*sum(a2,2);%求出第一个隐含层的平均值向量 Jsparse = sum(sparsityParam.*log(sparsityParam./rho)+ ... (1-sparsityParam).*log((1-sparsityParam)./(1-rho))); %损失函数的总表达式 cost = Jcost+lambda*Jweight+beta*Jsparse; %反向算法求出每个节点的误差值 d3 = -(data-a3).*sigmoidInv(z3); sterm = beta*(-sparsityParam./rho+(1-sparsityParam)./(1-rho));%因为加入了稀疏规则项,所以 %计算偏导时需要引入该项 d2 = (W2'*d3+repmat(sterm,1,m)).*sigmoidInv(z2); %计算W1grad W1grad = W1grad+d2*data'; W1grad = (1/m)*W1grad+lambda*W1; %计算W2grad W2grad = W2grad+d3*a2'; W2grad = (1/m).*W2grad+lambda*W2; %计算b1grad b1grad = b1grad+sum(d2,2); b1grad = (1/m)*b1grad;%注意b的偏导是一个向量,所以这里应该把每一行的值累加起来 %计算b2grad b2grad = b2grad+sum(d3,2); b2grad = (1/m)*b2grad; % %%方法二,每次处理1个样本,速度慢 % m=size(data,2); % rho=zeros(size(b1)); % for i=1:m % %feedforward % a1=data(:,i); % z2=W1*a1+b1; % a2=sigmoid(z2); % z3=W2*a2+b2; % a3=sigmoid(z3); % %cost=cost+(a1-a3)'*(a1-a3)*0.5; % rho=rho+a2; % end % rho=rho/m; % sterm=beta*(-sparsityParam./rho+(1-sparsityParam)./(1-rho)); % %sterm=beta*2*rho; % for i=1:m % %feedforward % a1=data(:,i); % z2=W1*a1+b1; % a2=sigmoid(z2); % z3=W2*a2+b2; % a3=sigmoid(z3); % cost=cost+(a1-a3)'*(a1-a3)*0.5; % %backpropagation % delta3=(a3-a1).*a3.*(1-a3); % delta2=(W2'*delta3+sterm).*a2.*(1-a2); % W2grad=W2grad+delta3*a2'; % b2grad=b2grad+delta3; % W1grad=W1grad+delta2*a1'; % b1grad=b1grad+delta2; % end % % kl=sparsityParam*log(sparsityParam./rho)+(1-sparsityParam)*log((1-sparsityParam)./(1-rho)); % %kl=rho.^2; % cost=cost/m; % cost=cost+sum(sum(W1.^2))*lambda/2.0+sum(sum(W2.^2))*lambda/2.0+beta*sum(kl); % W2grad=W2grad./m+lambda*W2; % b2grad=b2grad./m; % W1grad=W1grad./m+lambda*W1; % b1grad=b1grad./m; %------------------------------------------------------------------- % After computing the cost and gradient, we will convert the gradients back % to a vector format (suitable for minFunc). Specifically, we will unroll % your gradient matrices into a vector. grad = [W1grad(:) ; W2grad(:) ; b1grad(:) ; b2grad(:)];

end %------------------------------------------------------------------- % Here's an implementation of the sigmoid function, which you may find useful % in your computation of the costs and the gradients. This inputs a (row or % column) vector (say (z1, z2, z3)) and returns (f(z1), f(z2), f(z3)). function sigm = sigmoid(x) sigm = 1 ./ (1 + exp(-x)); end %sigmoid函数的逆函数 function sigmInv = sigmoidInv(x) sigmInv = sigmoid(x).*(1-sigmoid(x)); end

![\begin{align}\hat\rho_j = \frac{1}{m} \sum_{i=1}^m \left[ a^{(2)}_j(x^{(i)}) \right]\end{align}](http://img.e-com-net.com/image/info5/dffd98986a654ca2a6e86eabe318c1d6.png)