Android binder from Top to Bottom

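This article introduces the Android binder mechanism and structure in six parts. The understanding and the writing inevitably contain mistakes; the code is always the final authority.

Please credit the source when reposting.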

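Android Binder Mechanism: RefBase & smart pointer

Any component technology needs a reference-counting mechanism to control the lifetime of both the local proxy object that a pointer refers to and the corresponding component object in the remote process.

Common LPC behavior and smart pointers

As with Windows COM, a binary-compatible mechanism for calling interfaces across processes, LPC (Local Procedure Call) has three essential elements: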

QueryInterface

AddRef/ReleaseRef

Method Invoke

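In addition, an implementation must let components in the same process be referenced directly, because this affects runtime efficiency. This is a point that LPC implementations generally have to consider (especially when they are built on sockets).

In the Android Binder framework, interface query is handled by ServiceManager; AddRef/ReleaseRef is handled by RefBase counting; and method invocation is handled by the Interface and Binder of the corresponding service.

It is precisely because components can be counted that the "smart" in smart pointer has something to rest on: the lifetime of the pointer object itself increments and decrements the count of the referenced component, and thereby controls the component's lifetime.

In Android, android::RefBase and its derived classes hold the counts, while the smart pointers (sp<T> and wp<T>) are responsible for calling the increment and decrement operations on the object they reference. The following sections examine the counting mechanism that Android binder requires, first from the object's point of view and then from the pointer's point of view.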
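
The idea that a pointer object's own lifetime drives the count can be sketched with a toy model. The names Counted, StrongPtr and demoScopedLifetime are hypothetical and far simpler than android::RefBase and sp<T>; this is only an illustration under those assumptions, not the framework code.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical stand-in for a counted component (not android::RefBase).
class Counted {
public:
    explicit Counted(int* destroyed) : mCount(0), mDestroyed(destroyed) {}
    void incStrong() { mCount.fetch_add(1); }
    void decStrong() {
        // fetch_sub returns the previous value; 1 means this was the last reference.
        if (mCount.fetch_sub(1) == 1) delete this;
    }
    int count() const { return mCount.load(); }
private:
    ~Counted() { ++*mDestroyed; }  // only decStrong may destroy the object
    std::atomic<int> mCount;
    int* mDestroyed;
};

// Hypothetical stand-in for sp<T>: construction and destruction adjust the count.
template <typename T>
class StrongPtr {
public:
    explicit StrongPtr(T* p) : mPtr(p) { if (mPtr) mPtr->incStrong(); }
    StrongPtr(const StrongPtr& o) : mPtr(o.mPtr) { if (mPtr) mPtr->incStrong(); }
    ~StrongPtr() { if (mPtr) mPtr->decStrong(); }
    StrongPtr& operator=(const StrongPtr&) = delete;  // kept minimal on purpose
    T* operator->() const { return mPtr; }
private:
    T* mPtr;
};

// Returns how many objects were destroyed once every pointer left scope.
int demoScopedLifetime() {
    int destroyed = 0;
    {
        StrongPtr<Counted> a(new Counted(&destroyed));
        {
            StrongPtr<Counted> b(a);   // copy: count goes 1 -> 2
            assert(a->count() == 2);
        }                              // b destroyed: count goes 2 -> 1
        assert(a->count() == 1 && destroyed == 0);
    }                                  // a destroyed: count reaches 0, object deleted
    return destroyed;
}
```

No explicit delete appears in the calling code: the object goes away exactly when the last pointer object does.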

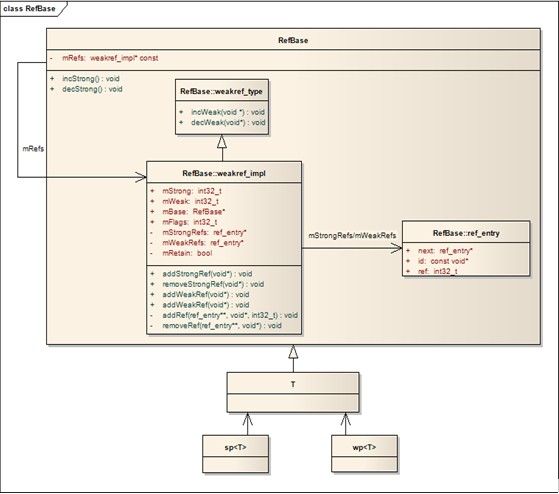

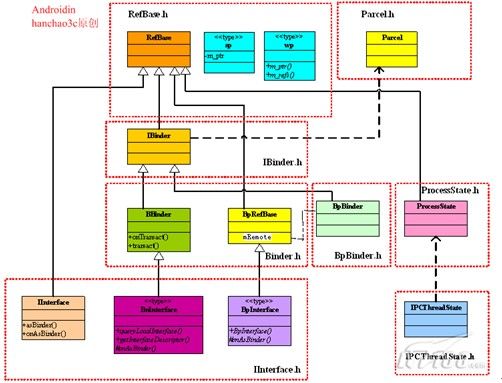

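The static relationship between RefBase and sp/wp is shown in the figure (taken from the web).

The ancestors of counting --- the base classes RefBase and LightRefBase

The classes that implement counted objects are RefBase and LightRefBase. In the vast majority of cases a class derives from RefBase.

Although RefBase implements both a strong and a weak reference count with the help of an associated nested class object, it directly exposes only the methods that adjust the strong count (such as incStrong and decStrong).

The "Light" in LightRefBase means that it implements only a strong reference count and no weak one. LightRefBase is mainly used in the FrameBuffer NativeWindow implementation needed by OpenGL ES.

RefBase design principles

1. Every call to incStrong/decStrong has to be accompanied by a matching incWeak/decWeak. If incWeak/decWeak were exposed, outside code would have to follow different calling rules in different situations, which could corrupt the counts. So incWeak/decWeak are kept away from external use as far as possible and are maintained by the internal implementation class.

2. incStrong/decStrong are exposed only because in some situations they are called directly through a plain pointer. Otherwise they should not be exposed either; access through the friend class sp<T> would be enough.

3. RefBase needs both a strong and a weak count for an object. Because strong and weak pointers can refer to and be assigned to each other, and a weak reference can be promoted to a strong one, the internal implementations of the two counts and their operations cannot be too independent of each other, for the sake of design convenience and runtime efficiency.

4. The weak-count interface (android::RefBase::weakref_type) is used by the weak reference counting implementation and needs to be separated out.

Following these principles gives the somewhat odd-looking implementation of Android RefBase: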

In frameworks/base/include/utils/RefBase.h

class RefBase

{

public:

void incStrong(const void* id) const;

void decStrong(const void* id) const;

void forceIncStrong(const void* id) const;

//! DEBUGGING ONLY: Get current strong ref count.

int32_t getStrongCount() const;

class weakref_type

{

public:

RefBase* refBase() const;

void incWeak(const void* id);

void decWeak(const void* id);

// acquires a strong reference if there is already one.

bool attemptIncStrong(const void* id);

// acquires a weak reference if there is already one.

// This is not always safe. see ProcessState.cpp and BpBinder.cpp

// for proper use.

bool attemptIncWeak(const void* id);

//! DEBUGGING ONLY: Get current weak ref count.

int32_t getWeakCount() const;

//! DEBUGGING ONLY: Print references held on object.

void printRefs() const;

//! DEBUGGING ONLY: Enable tracking for this object.

// enable -- enable/disable tracking

// retain -- when tracking is enable, if true, then we save a stack trace

// for each reference and dereference; when retain == false, we

// match up references and dereferences and keep only the

// outstanding ones.

void trackMe(bool enable, bool retain);

};

weakref_type* createWeak(const void* id) const;

weakref_type* getWeakRefs() const;

//! DEBUGGING ONLY: Print references held on object.

inline void printRefs() const { getWeakRefs()->printRefs(); }

//! DEBUGGING ONLY: Enable tracking of object.

inline void trackMe(bool enable, bool retain)

{

getWeakRefs()->trackMe(enable, retain);

}

typedef RefBase basetype;

protected:

RefBase();

virtual ~RefBase();

//! Flags for extendObjectLifetime()

enum {

OBJECT_LIFETIME_STRONG = 0x0000,

OBJECT_LIFETIME_WEAK = 0x0001,

OBJECT_LIFETIME_MASK = 0x0001

};

void extendObjectLifetime(int32_t mode);

//! Flags for onIncStrongAttempted()

enum {

FIRST_INC_STRONG = 0x0001

};

virtual void onFirstRef();

virtual void onLastStrongRef(const void* id);

virtual bool onIncStrongAttempted(uint32_t flags, const void* id);

virtual void onLastWeakRef(const void* id);

private:

friend class ReferenceMover;

static void moveReferences(void* d, void const* s, size_t n,

const ReferenceConverterBase& caster);

private:

friend class weakref_type;

class weakref_impl;

RefBase(const RefBase& o); //copy constructor

RefBase& operator=(const RefBase& o); //assignment operator overload

weakref_impl* const mRefs;

};

In frameworks/base/libs/utils/RefBase.cpp

class RefBase::weakref_impl : public RefBase::weakref_type

{

public:

volatile int32_t mStrong;

volatile int32_t mWeak;

RefBase* const mBase; //de-refer to RefBase

volatile int32_t mFlags;

#if !DEBUG_REFS

weakref_impl(RefBase* base)

: mStrong(INITIAL_STRONG_VALUE)

, mWeak(0)

, mBase(base)

, mFlags(0)

{

}

void addStrongRef(const void* /*id*/) { }

void removeStrongRef(const void* /*id*/) { }

void renameStrongRefId(const void* /*old_id*/, const void* /*new_id*/) { }

void addWeakRef(const void* /*id*/) { }

void removeWeakRef(const void* /*id*/) { }

void renameWeakRefId(const void* /*old_id*/, const void* /*new_id*/) { }

void printRefs() const { }

void trackMe(bool, bool) { }

#else

………

mutable Mutex mMutex;

ref_entry* mStrongRefs;

ref_entry* mWeakRefs;

bool mTrackEnabled;

// Collect stack traces on addref and removeref, instead of deleting the stack references

// on removeref that match the address ones.

bool mRetain;

#endif

};

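As can be seen, RefBase::weakref_type is split out as a nested class so that the weak-reference interface stands on its own. class RefBase::weakref_impl derives from RefBase::weakref_type and implements the interfaces the weak count needs, incWeak/decWeak. When RefBase implements its weak counting, it calls the weak-count interfaces of weakref_impl. The RefBase::weakref_impl object is kept separate from the counted DerivedRefBase object because weak counting requires it: when the lifetime of a RefBase object is controlled by strong references only, wp<T>.m_ptr may already be a dangling pointer. The memory of the RefBase::weakref_impl object therefore has to be separate from the counted object, so that the weakref_impl object can be consulted to decide whether wp<T>.promote() can succeed.

The RefBase::weakref_impl functions addStrongRef/removeStrongRef, addWeakRef/removeWeakRef and so on track which pointers hold strong or weak references to the object, and can be used for debugging.

RefBase::incStrong/RefBase::decStrong are implemented by RefBase itself and call RefBase::weakref_type::incWeak/RefBase::weakref_type::decWeak respectively, so a strong count change is always accompanied by a weak count change.

In short, readability is not great, but the code does reflect the design principles above.

RefBase::weakref_impl is by now more than a weak-reference implementation: apart from not implementing incStrong/decStrong directly, it is effectively a combined strong/weak counting implementation, a RefBase::ref_impl. Conversely, mStrong must definitely not be placed in the RefBase base class.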
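
Why the counts must live outside the counted object can be sketched with a small model. Counts, Object, attemptPromote and demoPromoteAfterDeath are hypothetical names, the model covers only the strong-lifetime mode, and it omits the INITIAL_STRONG_VALUE handling of the real code; it is a sketch under those assumptions.

```cpp
#include <atomic>
#include <cassert>

class Object;

// Hypothetical counterpart of weakref_impl: lives in its own allocation.
struct Counts {
    std::atomic<int> strong{0};
    std::atomic<int> weak{0};
    Object* base;
    explicit Counts(Object* b) : base(b) {}
};

// Hypothetical counterpart of a RefBase-derived object (strong-lifetime mode only).
class Object {
public:
    Object() : mRefs(new Counts(this)) {}
    Counts* refs() const { return mRefs; }
    void incStrong() { incWeak(mRefs); mRefs->strong.fetch_add(1); }
    void decStrong() {
        Counts* refs = mRefs;                     // copy first: 'this' may be deleted below
        if (refs->strong.fetch_sub(1) == 1) delete this;
        decWeak(refs);
    }
    static void incWeak(Counts* r) { r->weak.fetch_add(1); }
    static void decWeak(Counts* r) {
        if (r->weak.fetch_sub(1) == 1) delete r;  // last weak ref frees the counts block
    }
    // Promotion consults only the counts block, never the object itself.
    static Object* attemptPromote(Counts* r) {
        int c = r->strong.load();
        while (c > 0) {
            if (r->strong.compare_exchange_weak(c, c + 1)) {
                incWeak(r);
                return r->base;
            }
        }
        return nullptr;
    }
private:
    ~Object() {}
    Counts* const mRefs;
};

bool demoPromoteAfterDeath() {
    Object* obj = new Object;
    obj->incStrong();                  // strong=1, weak=1
    Counts* weakHandle = obj->refs();
    Object::incWeak(weakHandle);       // a wp-like holder: weak=2

    Object* alive = Object::attemptPromote(weakHandle);  // strong=2, weak=3
    bool promotedWhileAlive = (alive == obj);
    alive->decStrong();                // strong=1, weak=2
    obj->decStrong();                  // strong=0: object deleted; weak=1
    // The object is gone, but the counts block is still valid memory to inspect.
    bool promotedAfterDeath = (Object::attemptPromote(weakHandle) != nullptr);
    Object::decWeak(weakHandle);       // weak=0: counts block freed
    return promotedWhileAlive && !promotedAfterDeath;
}
```

If strong and weak lived inside Object, the second attemptPromote call would read freed memory; with a separate block it reads a valid zero and fails cleanly.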

Below are two example functions from the strong and weak counting implementation.

void RefBase::incStrong(const void* id) const

{

weakref_impl* const refs = mRefs;

refs->incWeak(id);

refs->addStrongRef(id);

const int32_t c =android_atomic_inc(&refs->mStrong);

LOG_ASSERT(c > 0, "incStrong() called on %p after last strong ref", refs);

#if PRINT_REFS

LOGD("incStrong of %p from %p: cnt=%d\n", this, id, c);

#endif

if (c != INITIAL_STRONG_VALUE) {

return;

}

android_atomic_add(-INITIAL_STRONG_VALUE, &refs->mStrong);

refs->mBase->onFirstRef();

}

void RefBase::weakref_type::incWeak(const void* id)

{

weakref_impl* const impl = static_cast<weakref_impl*>(this);

impl->addWeakRef(id);

const int32_t c = android_atomic_inc(&impl->mWeak);

LOG_ASSERT(c >= 0, "incWeak called on %p after last weak ref", this);

}

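The weakref_impl code shows that mStrong lives there and that weakref_impl also tracks strong references. So weakref_impl could implement the strong-count interfaces directly and serve as a general strong/weak counting implementation, ref_impl. Accessing members such as mStrong only inside weakref_impl would also fit the principle of data encapsulation. RefBase would then simply call the corresponding strong-count interface, for example (a hypothetical refactoring, not the actual code):

inline void RefBase::incStrong(const void* id) const

{

ref_impl* const refs = mRefs;

refs->incStrong(id);

}

In addition, the initial value of mStrong (INITIAL_STRONG_VALUE) is non-zero so that the initial state can be told apart from a count that has been decremented to 0. No system will ever hold as many as INITIAL_STRONG_VALUE references to one object.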
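
The sentinel trick can be sketched in isolation. StrongCount, kInitialStrong and demoSentinel are hypothetical names, and the value 1 << 28 is an assumption made for this sketch rather than something taken from the excerpt above.

```cpp
#include <atomic>
#include <cassert>

constexpr int kInitialStrong = 1 << 28;  // assumed sentinel value for this sketch

struct StrongCount {
    std::atomic<int> strong{kInitialStrong};
    int firstRefCalls = 0;

    void incStrong() {
        int previous = strong.fetch_add(1);       // returns the value before the increment
        if (previous != kInitialStrong) return;   // not the first strong reference
        strong.fetch_sub(kInitialStrong);         // remove the sentinel offset
        ++firstRefCalls;                          // where onFirstRef() would run
    }
    // Returns true when the count dropped to zero.
    bool decStrong() { return strong.fetch_sub(1) == 1; }
    bool neverReferenced() const { return strong.load() == kInitialStrong; }
};

int demoSentinel() {
    StrongCount c;
    assert(c.neverReferenced());
    c.incStrong();               // first reference: sentinel removed, count becomes 1
    c.incStrong();               // count becomes 2, no second "first ref"
    assert(c.strong.load() == 2);
    c.decStrong();
    bool last = c.decStrong();   // count reaches 0, which differs from "never referenced"
    assert(last && !c.neverReferenced());
    return c.firstRefCalls;
}
```

A count of 0 therefore always means "had references and lost them", while the sentinel means "never strongly referenced".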

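RefBase lifetime

The function extendObjectLifetime changes the object's default lifetime mode. The default is OBJECT_LIFETIME_STRONG, where the object is controlled by the strong count only. After changing to OBJECT_LIFETIME_WEAK, the object that m_ptr points to is released only when the weak count has also dropped to zero. Also, attemptIncStrong is used when a weak pointer is promoted.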
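
The difference between the two modes can be sketched with a single-threaded model that tracks only when the object would be destroyed. LifetimeModel, the k-prefixed constants and aliveAfterLastStrong are hypothetical names; the real code uses atomics and also manages the weakref_impl block.

```cpp
#include <cassert>

// Mirrors the two lifetime flags quoted above (names are local to this sketch).
enum LifetimeMode { kLifetimeStrong = 0, kLifetimeWeak = 1 };

// Single-threaded toy model: tracks only *when* the object would be destroyed.
struct LifetimeModel {
    LifetimeMode mode;
    int strong = 0;
    int weak = 0;
    bool objectAlive = true;

    explicit LifetimeModel(LifetimeMode m) : mode(m) {}

    void incStrong() { ++weak; ++strong; }  // a strong ref always implies a weak ref
    void incWeak() { ++weak; }
    void decStrong() {
        if (--strong == 0 && mode == kLifetimeStrong) objectAlive = false;
        decWeak();
    }
    void decWeak() {
        if (--weak == 0 && mode == kLifetimeWeak) objectAlive = false;
    }
};

// One sp-like holder and one wp-like holder; the sp-like holder goes away first.
bool aliveAfterLastStrong(LifetimeMode mode) {
    LifetimeModel m(mode);
    m.incStrong();
    m.incWeak();
    m.decStrong();
    return m.objectAlive;
}
```

In the strong mode the object dies with its last strong reference; in the weak mode it survives as long as any weak reference remains.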

BpBinder::BpBinder(int32_t handle)

: mHandle(handle)

, mAlive(1)

, mObitsSent(0)

, mObituaries(NULL)

{

LOGV("Creating BpBinder %p handle %d\n", this, mHandle);

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

IPCThreadState::self()->incWeakHandle(handle);

}

BpRefBase::BpRefBase(const sp<IBinder>& o)

: mRemote(o.get()), mRefs(NULL), mState(0)

{

extendObjectLifetime(OBJECT_LIFETIME_WEAK);

if (mRemote) {

mRemote->incStrong(this); // Removed on first IncStrong().

mRefs = mRemote->createWeak(this); // Held for our entire lifetime.

}

}

void BpRefBase::onFirstRef()

{

android_atomic_or(kRemoteAcquired, &mState);

}

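Here is a brief explanation of why BpBinder and BpRefBase call extendObjectLifetime.

Both BpBinder and BpRefBase (the base class of BpSubInterface) use the OBJECT_LIFETIME_WEAK mode, so the BpBinder and BpSubInterface objects themselves are controlled by their weak counts.

The BpRefBase at the BpSubInterface level adjusts the strong and weak counts of BpBinder, and BpBinder adjusts the counts of the remote component. More precisely, it adjusts the strong and weak counts of the binder_ref in the binder driver, which in turn controls the counts of the binder_node and so indirectly the strong and weak counts of the BBinder subclass. On destruction the same chain runs in reverse. BpBinder controls at the Binder level, while BpRefBase controls at the Interface level.

For example: sp<ISubInterface> intf = interface_cast<ISubInterface>(sm->getService(servicename));

When the binder handle returned by getService is used to construct a BpBinder, incWeakHandle increases the weak count of the binder_ref. When the BpSubInterface is constructed, the BpBinder becomes referenced: its strong count is increased, which triggers its onFirstRef, and that increases the strong count of the binder_ref through incStrongHandle. When the result is assigned to sp<ISubInterface>, the BpSubInterface becomes referenced: BpSubInterface::incStrong is called, and since this is the first reference onFirstRef is called, which sets the BpSubInterface state to kRemoteAcquired.

A flow diagram should be added here.

The effect of this separation on lifetime control is worth examining (this looks ahead to later material). One way to explore it is to trace the execution of the following statements (ignoring compiler optimization; the binder driver has to be taken into account).

sp<ISubInterface> intf0 = interface_cast<ISubInterface>(sm->getService(servicename));

sp<ISubInterface> intf1 = interface_cast<ISubInterface>(sm->getService(servicename));

sp<ISubInterface> intf2 = interface_cast<ISubInterface>(sm->getService(servicename));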

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)

{

sp<IBinder> result;

AutoMutex _l(mLock);

handle_entry* e = lookupHandleLocked(handle);

if (e != NULL) {

// We need to create a new BpBinder if there isn't currently one, OR we

// are unable to acquire a weak reference on this current one. See comment

// in getWeakProxyForHandle() for more info about this.

IBinder* b = e->binder;

if (b == NULL || !e->refs->attemptIncWeak(this)) {

b = new BpBinder(handle);

e->binder = b;

if (b) e->refs = b->getWeakRefs();

result = b;

} else {

// This little bit of nastyness is to allow us to add a primary

// reference to the remote proxy when this team doesn't have one

// but another team is sending the handle to us.

result.force_set(b);

e->refs->decWeak(this);

}

}

return result;

}

wp<IBinder> ProcessState::getWeakProxyForHandle(int32_t handle)

{

wp<IBinder> result;

AutoMutex _l(mLock);

handle_entry* e = lookupHandleLocked(handle);

if (e != NULL) {

// We need to create a new BpBinder if there isn't currently one, OR we

// are unable to acquire a weak reference on this current one. The

// attemptIncWeak() is safe because we know the BpBinder destructor will always

// call expungeHandle(), which acquires the same lock we are holding now.

// We need to do this because there is a race condition between someone

// releasing a reference on this BpBinder, and a new reference on its handle

// arriving from the driver.

IBinder* b = e->binder;

if (b == NULL || !e->refs->attemptIncWeak(this)) {

b = new BpBinder(handle);

result = b;

e->binder = b;

if (b) e->refs = b->getWeakRefs();

} else {

result = b;

e->refs->decWeak(this);

}

}

return result;

}

How does this relate to a binder object registering itself with the service manager?

Three important virtual functions of class RefBase:

virtual void RefBase::onFirstRef() {}

virtual void RefBase::onLastStrongRef(const void* /*id*/) {}

virtual void RefBase::onLastWeakRef(const void* /*id*/) {}

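The default implementations of these three virtual functions are empty. They provide hooks for the moments when strong counting starts, when the strong count drops to 0, and when the weak count drops to 0. One important use is to give a proxy class a place to forward count changes to the component class, roughly as follows.

BpBinder overrides these hooks (BBinder itself does not, as its class declaration later in this article shows). BpBinder's overrides of onFirstRef and onLastStrongRef are: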

void BpBinder::onFirstRef()

{

LOGV("onFirstRef BpBinder %p handle %d\n", this, mHandle);

IPCThreadState* ipc = IPCThreadState::self();

if (ipc) ipc->incStrongHandle(mHandle);

}

void BpBinder::onLastStrongRef(const void* id)

{

LOGV("onLastStrongRef BpBinder %p handle %d\n", this, mHandle);

IF_LOGV() {

printRefs();

}

IPCThreadState* ipc = IPCThreadState::self();

if (ipc) ipc->decStrongHandle(mHandle);

}

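BBinder leaves these hooks for the concrete service class to override. onFirstRef is mainly used to perform service initialization. A Binder component object derives from BnSubInterface (a subclass of BBinder). After receiving BR_ACQUIRE and BR_RELEASE it responds using RefBase's counting functions such as incStrong/decStrong. The difference is that after android_atomic_inc/android_atomic_dec has updated the count, the onFirstRef and onLastStrongRef that get called are the two empty implementations inherited from RefBase, which do nothing. This is because the count of the object itself is adjusted directly, so the operation is already complete.

When client and service live in the same process, the IBinder pointer returned by getService points directly at an object of type BBinder. So incStrong, decStrong, incWeak and decWeak call straight into BBinder and hence into the RefBase implementations, in the same way as the remote component responds when client and service are in different processes.

Summary: through incStrong/decStrong/incWeak/decWeak, RefBase implements reference counting on the local proxy object that a pointer refers to directly, and the proxy subclass BpBinder overrides RefBase's default empty onFirstRef and onLastStrongRef.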
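
The way a proxy forwards only its first and last strong reference to the remote side can be sketched as follows. RemoteCount, Base, Proxy and demoHooks are hypothetical names; RemoteCount merely stands in for the driver-side count and no IPC takes place.

```cpp
#include <cassert>

// Hypothetical remote side: a plain counter standing in for the driver's binder_ref.
struct RemoteCount { int strong = 0; };

class Base {
public:
    virtual ~Base() {}
    void incStrong() { if (mStrong++ == 0) onFirstRef(); }
    void decStrong() { if (--mStrong == 0) onLastStrongRef(); }
protected:
    virtual void onFirstRef() {}        // default: do nothing
    virtual void onLastStrongRef() {}   // default: do nothing
private:
    int mStrong = 0;
};

// The proxy overrides the hooks to forward the edge events to the remote count.
class Proxy : public Base {
public:
    explicit Proxy(RemoteCount* r) : mRemote(r) {}
protected:
    void onFirstRef() override { ++mRemote->strong; }
    void onLastStrongRef() override { --mRemote->strong; }
private:
    RemoteCount* mRemote;
};

int demoHooks() {
    RemoteCount remote;
    Proxy proxy(&remote);
    proxy.incStrong();            // first local ref: one remote ref taken
    proxy.incStrong();            // further local refs are not forwarded
    assert(remote.strong == 1);
    proxy.decStrong();
    assert(remote.strong == 1);
    proxy.decStrong();            // last local ref: remote ref released
    return remote.strong;
}
```

However many local pointers share the proxy, the remote side sees exactly one acquire and one release.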

Why is there only onFirstRef, rather than separate onFirstStrongRef and onFirstWeakRef? The precise meaning of onFirstRef is onFirstStrongRef(). This is explained at the end of the sp<T> and wp<T> section.

smart pointer ---sp<T> & wp<T>

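Smart pointers in Android C++ come in two kinds: the strong pointer sp and the weak pointer wp. There is no garbage-collection module of the kind a virtual machine provides; smart pointers are simply a technique that uses C++ language features to manage memory use and automatic release.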

wp in frameworks/base/include/utils/RefBase.h

template <typename T>

class wp

{

public:

typedef typename RefBase::weakref_type weakref_type;

inline wp() : m_ptr(0) { }

wp(T* other);

wp(const wp<T>& other);

wp(const sp<T>& other);

template<typename U> wp(U* other);

template<typename U> wp(const sp<U>& other);

template<typename U> wp(const wp<U>& other);

~wp();

// Assignment

wp& operator = (T* other);

wp& operator = (const wp<T>& other);

wp& operator = (const sp<T>& other);

template<typename U> wp& operator = (U* other);

template<typename U> wp& operator = (const wp<U>& other);

template<typename U> wp& operator = (const sp<U>& other);

void set_object_and_refs(T* other, weakref_type* refs);

// promotion to sp

sp<T> promote() const;

// Reset

void clear();

// Accessors

inline weakref_type* get_refs() const { return m_refs; }

inline T* unsafe_get() const { return m_ptr; }

// Operators

COMPARE_WEAK(==)

COMPARE_WEAK(!=)

COMPARE_WEAK(>)

COMPARE_WEAK(<)

COMPARE_WEAK(<=)

COMPARE_WEAK(>=)

inline bool operator == (const wp<T>& o) const {

return (m_ptr == o.m_ptr) && (m_refs == o.m_refs);

}

template<typename U>

inline bool operator == (const wp<U>& o) const {

return m_ptr == o.m_ptr;

}

inline bool operator > (const wp<T>& o) const {

return (m_ptr == o.m_ptr) ? (m_refs > o.m_refs) : (m_ptr > o.m_ptr);

}

template<typename U>

inline bool operator > (const wp<U>& o) const {

return (m_ptr == o.m_ptr) ? (m_refs > o.m_refs) : (m_ptr > o.m_ptr);

}

inline bool operator < (const wp<T>& o) const {

return (m_ptr == o.m_ptr) ? (m_refs < o.m_refs) : (m_ptr < o.m_ptr);

}

template<typename U>

inline bool operator < (const wp<U>& o) const {

return (m_ptr == o.m_ptr) ? (m_refs < o.m_refs) : (m_ptr < o.m_ptr);

}

inline bool operator != (const wp<T>& o) const { return m_refs != o.m_refs; }

template<typename U> inline bool operator != (const wp<U>& o) const { return !operator == (o); }

inline bool operator <= (const wp<T>& o) const { return !operator > (o); }

template<typename U> inline bool operator <= (const wp<U>& o) const { return !operator > (o); }

inline bool operator >= (const wp<T>& o) const { return !operator < (o); }

template<typename U> inline bool operator >= (const wp<U>& o) const { return !operator < (o); }

private:

template<typename Y> friend class sp;

template<typename Y> friend class wp;

T* m_ptr;

weakref_type* m_refs;

};

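promote attempts to promote the wp<T> to an sp<T>; the returned sp<T> has to be compared with NULL to check whether promotion succeeded. The field weakref_type* m_refs is what weak counting and promotion work on. The relevant code is:

template<typename T>

wp<T>::wp(T* other)

: m_ptr(other)

{

if (other) m_refs = other->createWeak(this);

}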

template<typename T>

sp<T> wp<T>::promote() const

{

sp<T> result;

if (m_ptr && m_refs->attemptIncStrong(&result)) {

result.set_pointer(m_ptr);

}

return result;

}

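When promote() fails, it returns an empty sp<T>, which is what the caller's variable receives. The m_ptr of the returned sp<T> is non-NULL only when wp<T>.m_ptr still holds the original pointer value and the attempt to increase the strong count succeeds.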

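attemptIncStrong is used when a weak pointer is promoted to a strong pointer. attemptIncWeak is used when trying to add a weak reference to an object that may already have lost its last one (in the code quoted here, by ProcessState; the OBJECT_LIFETIME_FOREVER mode mentioned in connection with it does not appear in the enum quoted above).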

bool RefBase::weakref_type::attemptIncWeak(const void* id)

{

weakref_impl* const impl = static_cast<weakref_impl*>(this);

int32_t curCount = impl->mWeak;

LOG_ASSERT(curCount >= 0, "attemptIncWeak called on %p after underflow",

this);

while (curCount > 0) {

if (android_atomic_cmpxchg(curCount, curCount+1, &impl->mWeak) == 0) {

break;

}

curCount = impl->mWeak;

}

if (curCount > 0) {

impl->addWeakRef(id);

}

return curCount > 0;

}

bool RefBase::weakref_type::attemptIncStrong(const void* id)

{

incWeak(id);

weakref_impl* const impl = static_cast<weakref_impl*>(this);

int32_t curCount = impl->mStrong;

LOG_ASSERT(curCount >= 0, "attemptIncStrong called on %p after underflow",

this);

while (curCount > 0 && curCount != INITIAL_STRONG_VALUE) {

if (android_atomic_cmpxchg(curCount, curCount+1, &impl->mStrong) == 0) {

break;

}

curCount = impl->mStrong;

}

if (curCount <= 0 || curCount == INITIAL_STRONG_VALUE) {

bool allow;

if (curCount == INITIAL_STRONG_VALUE) {

// Attempting to acquire first strong reference... this is allowed

// if the object does NOT have a longer lifetime (meaning the

// implementation doesn't need to see this), or if the implementation

// allows it to happen.

allow = (impl->mFlags&OBJECT_LIFETIME_WEAK) != OBJECT_LIFETIME_WEAK

|| impl->mBase->onIncStrongAttempted(FIRST_INC_STRONG, id);

} else {

// Attempting to revive the object... this is allowed

// if the object DOES have a longer lifetime (so we can safely

// call the object with only a weak ref) and the implementation

// allows it to happen.

allow = (impl->mFlags&OBJECT_LIFETIME_WEAK) == OBJECT_LIFETIME_WEAK

&& impl->mBase->onIncStrongAttempted(FIRST_INC_STRONG, id);

}

if (!allow) {

decWeak(id);

return false;

}

curCount = android_atomic_inc(&impl->mStrong);

// If the strong reference count has already been incremented by

// someone else, the implementor of onIncStrongAttempted() is holding

// an unneeded reference. So call onLastStrongRef() here to remove it.

// (No, this is not pretty.) Note that we MUST NOT do this if we

// are in fact acquiring the first reference.

if (curCount > 0 && curCount < INITIAL_STRONG_VALUE) {

impl->mBase->onLastStrongRef(id);

}

}

impl->addStrongRef(id);

#if PRINT_REFS

LOGD("attemptIncStrong of %p from %p: cnt=%d\n", this, id, curCount);

#endif

if (curCount == INITIAL_STRONG_VALUE) {

android_atomic_add(-INITIAL_STRONG_VALUE, &impl->mStrong);

impl->mBase->onFirstRef();

}

return true;

}

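Note:

android_atomic_cmpxchg returns 0 on success, not on failure. So the attemptInc* functions keep retrying the increment for as long as the compare-and-swap has not succeeded (and the count is still positive), until it does.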
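
The same retry loop can be sketched with std::atomic. attemptInc is a hypothetical helper name. Note the opposite convention: compare_exchange_weak returns true on success, whereas android_atomic_cmpxchg returns 0 on success.

```cpp
#include <atomic>
#include <cassert>

// Increment 'count' only while it is still positive.
// Returns whether a reference was actually taken.
bool attemptInc(std::atomic<int>& count) {
    int cur = count.load();
    while (cur > 0) {
        // On failure compare_exchange_weak reloads 'cur' with the current value,
        // so the loop condition is re-checked against fresh data.
        if (count.compare_exchange_weak(cur, cur + 1)) return true;
    }
    return false;  // the count had already dropped to zero (or below)
}
```

A plain fetch_add would not do here, because it would resurrect a count that another thread has just driven to zero.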

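forceIncStrong is used by sp<T>::force_set. It expresses that, when sp<T>::m_ptr is guaranteed not to be a dangling pointer, that is, when the RefBase object is guaranteed to exist (BpBinder and BpRefBase extend their lifetime with OBJECT_LIFETIME_WEAK), the reference count can be incremented directly. onFirstRef is called both when the object's count is INITIAL_STRONG_VALUE and when it is 0.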

void RefBase::forceIncStrong(const void* id) const

{

weakref_impl* const refs = mRefs;

refs->incWeak(id);

refs->addStrongRef(id);

const int32_t c = android_atomic_inc(&refs->mStrong);

LOG_ASSERT(c >= 0, "forceIncStrong called on %p after ref count underflow",

refs);

#if PRINT_REFS

LOGD("forceIncStrong of %p from %p: cnt=%d\n", this, id, c);

#endif

switch (c) {

case INITIAL_STRONG_VALUE:

android_atomic_add(-INITIAL_STRONG_VALUE, &refs->mStrong);

// fall through...

case 0:

refs->mBase->onFirstRef();

}

}

sp in frameworks/base/include/utils/StrongPointer.h

template <typename T>

class sp

{

public:

inline sp() : m_ptr(0) { }

sp(T* other);

sp(const sp<T>& other);

template<typename U> sp(U* other);

template<typename U> sp(const sp<U>& other);

~sp();

// Assignment

sp& operator = (T* other);

sp& operator = (const sp<T>& other);

template<typename U> sp& operator = (const sp<U>& other);

template<typename U> sp& operator = (U* other);

//! Special optimization for use by ProcessState (and nobody else).

void force_set(T* other);

// Reset

void clear();

// Accessors

inline T& operator* () const { return *m_ptr; }

inline T* operator-> () const { return m_ptr; }

inline T* get() const { return m_ptr; }

// Operators

COMPARE(==)

COMPARE(!=)

COMPARE(>)

COMPARE(<)

COMPARE(<=)

COMPARE(>=)

private:

template<typename Y> friend class sp;

template<typename Y> friend class wp;

void set_pointer(T* ptr);

T* m_ptr;

};

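The member T* m_ptr of sp<T> and wp<T> is a pointer to a subclass of RefBase; polymorphism is obtained through templates.

wp<T> and sp<T> trigger the object's counting functions, incrementing and decrementing, through their constructors, copy constructors (including initialization), overloaded assignment operators, destructors, and the clear() method.

sp<T> overloads the dereference and member-access operators:

T& operator*() const { return *m_ptr; }

T* operator->() const { return m_ptr; }

This does more than make a smart pointer behave like a pointer. Because '->' returns T*, the members and methods of T can be accessed through sp<T>, and only in pointer form. wp<T> overloads neither operator, so only its own members and methods can be reached, with the '.' operator. This also shows that to access T, a wp must first be promote()d to an sp.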
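
The C++ standard library makes the same design choice, which gives a compact way to illustrate it: std::weak_ptr has no operator->, and lock() plays the role of wp<T>::promote(). The sketch uses std::shared_ptr/std::weak_ptr as an analogy rather than the Android classes; Service and nameIfAlive are hypothetical names.

```cpp
#include <cassert>
#include <memory>
#include <string>

struct Service {
    std::string name() const { return "svc"; }
};

// Returns the service name if the object is still alive, otherwise an empty string.
std::string nameIfAlive(const std::weak_ptr<Service>& weak) {
    // weak->name() would not compile: the weak pointer must be promoted first.
    if (std::shared_ptr<Service> strong = weak.lock()) {
        return strong->name();   // safe: 'strong' keeps the object alive here
    }
    return std::string();
}
```

The promoted pointer both proves the object exists and pins it for the duration of the access.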

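Beyond that, both sp<T> and wp<T> overload a set of comparison operators. Clearly == and != are needed to compare whether two pointers refer to the same object, whether a pointer is NULL, and so on.

sp<T>::clear and wp<T>::clear decrement the count and set m_ptr to zero.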

template<typename T>

void wp<T>::clear()

{

if (m_ptr) {

m_refs->decWeak(this);

m_ptr = 0;

}

}

template<typename T>

void sp<T>::clear()

{

if (m_ptr) {

m_ptr->decStrong(this);

m_ptr = 0;

}

}

The reference relationships in the object memory layout are as follows:

+--------+  m_refs
|   wp   |------------------------------+
|        |  m_ptr    +-----------+      |     +----------------+
+--------+---------->|           |      +---->|                |
                     |  RefBase  |  mRefs     |  weakref_impl  |
+--------+  m_ptr    |           |----------->|                |
|   sp   |---------->|           |            +----------------+
+--------+           +-----------+

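Now back to the question from the previous section: why is RefBase's onFirstRef() really onFirstStrongRef, and why can there be no onFirstWeakRef? With only a weak count, that is, when the object is referenced only through wp<T>, only m_refs may be accessed and not m_ptr (the object m_ptr points to may no longer exist), and the virtual function onFirstRef() can only be reached through the object's virtual method table (vtable). So after assignment to a wp<T>, m_ptr->onFirstRef() will never be called. The following fragment illustrates this:

void** mem = (void**)malloc(sizeof(DerivedRefBase));

DerivedRefBase* embryo = (DerivedRefBase*)mem;

mem[OFF_REFS] = new RefBase::weakref_impl(embryo); // to access the private RefBase::mRefs

// The object is not constructed yet; nothing goes wrong because the object is not accessed through wp<T>::m_ptr, and onFirstRef is not called

wp<DerivedRefBase> wobj(embryo);

// Construct the object in that memory; note: DerivedRefBase makes its constructor public; this creates a new mRefs and overwrites the old one

DerivedRefBase* p = new (mem) DerivedRefBase;

// Replace the newly created mRefs, which has no reference counts, with the one recorded in wobj

delete((RefBase::weakref_impl*)mem[OFF_REFS]);

mem[OFF_REFS] = wobj.get_refs();

// If attemptIncStrong succeeds, it calls onFirstRef

sp<DerivedRefBase> sobj = wobj.promote();

/* Reference code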

template<typename T>

wp<T>::wp(T* other) : m_ptr(other)

{

if (other) m_refs = other->createWeak(this);

}

RefBase::weakref_type* RefBase::createWeak(const void* id) const

{

mRefs->incWeak(id);

return mRefs;

}

RefBase::RefBase() : mRefs(new weakref_impl(this))

{

}

*/

Android Binder Mechanism: IBinder & IInterface

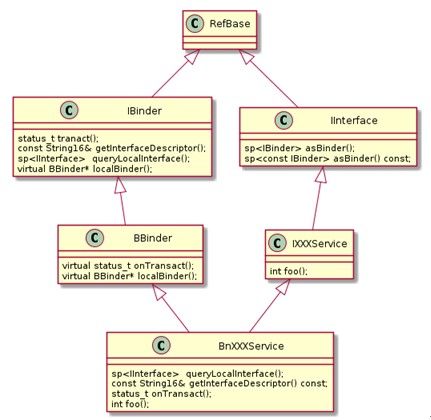

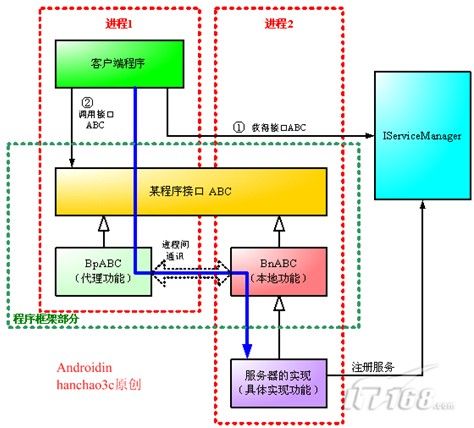

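A schematic of how Binder works is shown in the figure (taken from the web; the source is marked on it).

The IBinder interface provides the basic communication capability for IInterface, while the IInterface interface defines the specific functionality. Of the three elements of a general cross-process call mechanism, Query[Remote]Interface is managed by ServiceManager; AddRef/ReleaseRef is implemented by the base class RefBase and forms a daisy chain of references through onFirstRef/onLastXxxxRef; and method invocation is implemented by the IBinder::transact(…) function.

The following sections analyze binder and interface by reading the code.

IBinder & BpBinder & BBinder

The definition of the IBinder interface is excerpted below: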

/**

* Base class and low-level protocol for a remotable object.

* You can derive from this class to create an object for which other

* processes can hold references to it. Communication between processes

* (method calls, property get and set) is down through a low-level

* protocol implemented on top of the transact() API.

*/

class IBinder : public virtual RefBase

{

public:

enum {

FIRST_CALL_TRANSACTION = 0x00000001,

LAST_CALL_TRANSACTION = 0x00ffffff,

PING_TRANSACTION = B_PACK_CHARS('_','P','N','G'),

DUMP_TRANSACTION = B_PACK_CHARS('_','D','M','P'),

INTERFACE_TRANSACTION = B_PACK_CHARS('_', 'N', 'T', 'F'),

// Corresponds to TF_ONE_WAY -- an asynchronous call.

FLAG_ONEWAY = 0x00000001

};

IBinder();

/**

* Check if this IBinder implements the interface named by

* @a descriptor. If it does, the base pointer to it is returned,

* which you can safely static_cast<> to the concrete C++ interface.

*/

virtual sp<IInterface> queryLocalInterface(const String16& descriptor);

/**

* Return the canonical name of the interface provided by this IBinder

* object.

*/

virtual const String16& getInterfaceDescriptor() const = 0;

virtual bool isBinderAlive() const = 0;

virtual status_t pingBinder() = 0;

virtual status_t dump(int fd, const Vector<String16>& args) = 0;

virtual status_t transact( uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0) = 0;

/**

* This method allows you to add data that is transported through

* IPC along with your IBinder pointer. When implementing a Binder

* object, override it to write your desired data in to @a outData.

* You can then call getConstantData() on your IBinder to retrieve

* that data, from any process. You MUST return the number of bytes

* written in to the parcel (including padding).

*/

class DeathRecipient : public virtual RefBase

{

public:

virtual void binderDied(const wp<IBinder>& who) = 0;

};

/**

* Register the @a recipient for a notification if this binder

* goes away. If this binder object unexpectedly goes away

* (typically because its hosting process has been killed),

* then DeathRecipient::binderDied() will be called with a reference

* to this.

*

* The @a cookie is optional -- if non-NULL, it should be a

* memory address that you own (that is, you know it is unique).

*

* @note You will only receive death notifications for remote binders,

* as local binders by definition can't die without you dying as well.

* Trying to use this function on a local binder will result in an

* INVALID_OPERATION code being returned and nothing happening.

*

* @note This link always holds a weak reference to its recipient.

*

* @note You will only receive a weak reference to the dead

* binder. You should not try to promote this to a strong reference. (the binder node has died. lzz)

* (Nor should you need to, as there is nothing useful you can

* directly do with it now that it has passed on.)

*/

virtual status_t linkToDeath(const sp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0) = 0;

/**

* Remove a previously registered death notification.

* The @a recipient will no longer be called if this object

* dies. The @a cookie is optional. If non-NULL, you can

* supply a NULL @a recipient, and the recipient previously

* added with that cookie will be unlinked.

*/

virtual status_t unlinkToDeath( const wp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0,

wp<DeathRecipient>* outRecipient = NULL) = 0;

virtual bool checkSubclass(const void* subclassID) const;

typedef void (*object_cleanup_func)(const void* id, void* obj, void* cleanupCookie);

virtual void attachObject( const void* objectID,

void* object,

void* cleanupCookie,

object_cleanup_func func) = 0;

virtual void* findObject(const void* objectID) const = 0;

virtual void detachObject(const void* objectID) = 0;

virtual BBinder* localBinder();

virtual BpBinder* remoteBinder();

protected:

virtual ~IBinder();

private:

};

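As can be seen, these functions are all either virtual or pure virtual, which by design means the subclasses BpBinder and BBinder will override them. The default implementations of the three virtual functions queryLocalInterface, localBinder and remoteBinder all return NULL and are essentially empty; a subclass overrides only the ones it needs. transact is where a subclass implements the actual cross-process method call, so in the base class (the interface) transact is naturally pure virtual.

localBinder is used to determine whether a Binder is a service in the same process as the client; if so, it returns a pointer to the BBinder object. remoteBinder is used to determine whether the service is remote; if so, it returns a pointer to the local proxy object BpBinder. BpBinder overrides remoteBinder as { return this; } and BBinder overrides localBinder as { return this; }. This gives an IBinder object a very basic ability to tell whether the actual component is local or remote. It is used in flatten_binder_object(...)/unflatten_binder_object(...).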
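
The "base returns NULL, subclass returns this" pattern can be sketched in miniature. BinderBase, LocalBinder, RemoteProxy, Kind and classify are hypothetical names that only mimic the shape of IBinder, BBinder and BpBinder.

```cpp
#include <cassert>

class LocalBinder;
class RemoteProxy;

// Hypothetical miniature of the IBinder pattern: the base returns null for both queries.
class BinderBase {
public:
    virtual ~BinderBase() {}
    virtual LocalBinder* localBinder() { return nullptr; }
    virtual RemoteProxy* remoteBinder() { return nullptr; }
};

// Counterpart of BBinder: answers the "local" query with itself.
class LocalBinder : public BinderBase {
public:
    LocalBinder* localBinder() override { return this; }
};

// Counterpart of BpBinder: answers the "remote" query with itself and carries a handle.
class RemoteProxy : public BinderBase {
public:
    explicit RemoteProxy(int handle) : mHandle(handle) {}
    RemoteProxy* remoteBinder() override { return this; }
    int handle() const { return mHandle; }
private:
    int mHandle;
};

enum class Kind { Local, Remote, Unknown };

// The decision a flatten step has to make: send a local object or a handle.
Kind classify(BinderBase& b) {
    if (b.localBinder() != nullptr) return Kind::Local;
    if (b.remoteBinder() != nullptr) return Kind::Remote;
    return Kind::Unknown;
}
```

This avoids RTTI: one virtual call answers the question and returns a pointer of the right static type at the same time.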

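The virtual function queryLocalInterface() returns NULL by default and is used when converting from a Binder to an Interface. Neither BBinder nor BpBinder overrides it; the BBinder subclass BnInterface<INTERFACE> does. Read it together with getInterfaceDescriptor().

getInterfaceDescriptor() is a pure virtual function used to check the interface token. BBinder and BpBinder each implement it: BpBinder obtains the BBinder's interface descriptor through Binder communication, while BBinder's implementation is basically empty. As shown later, the subclass BnInterface<INTERFACE> overrides the BBinder implementation to return the interface's own descriptor; if a subclass does not override it, a warning is logged.

// In Binder.cpp

const String16& BBinder::getInterfaceDescriptor() const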

{

// This is a local static rather than a global static,

// to avoid static initializer ordering issues.

static String16 sEmptyDescriptor;

LOGW("reached BBinder::getInterfaceDescriptor (this=%p)", this);

return sEmptyDescriptor;

}

// In BpBinder.cpp

const String16& BpBinder::getInterfaceDescriptor() const

{

if (isDescriptorCached() == false) {

Parcel send, reply;

// do the IPC without a lock held.

status_t err = const_cast<BpBinder*>(this)->transact(

INTERFACE_TRANSACTION, send, &reply);

if (err == NO_ERROR) {

String16 res(reply.readString16());

Mutex::Autolock _l(mLock);

// mDescriptorCache could have been assigned while the lock was

// released.

if (mDescriptorCache.size() == 0)

mDescriptorCache = res;

}

}

// we're returning a reference to a non-static object here. Usually this

// is not something smart to do, however, with binder objects it is

// (usually) safe because they are reference-counted.

return mDescriptorCache;

}

status_t BBinder::onTransact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

switch (code) {

case INTERFACE_TRANSACTION:

reply->writeString16(getInterfaceDescriptor());

return NO_ERROR;

case DUMP_TRANSACTION: {

.......

}

default:

return UNKNOWN_TRANSACTION;

}

}

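linkToDeath/unlinkToDeath/DeathRecipient::binderDied are used to register for binder death notifications, because a client needs to know whether the binder service component, and its process, still exist.

As the names suggest, attachObject/findObject/detachObject are used on the client side to bind a BpBinder to an existing object. They are mainly used for Java binder objects.

BpBinder handles communication on the client side. The class is declared as follows: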

In frameworks/base/include/binder/BpBinder.h

class BpBinder : public IBinder

{

public:

BpBinder(int32_t handle);

inline int32_t handle() const { return mHandle; }

virtual const String16& getInterfaceDescriptor() const;

virtual bool isBinderAlive() const;

virtual status_t pingBinder();

virtual status_t dump(int fd, const Vector<String16>& args);

virtual status_t transact( uint32_t code,

const Parcel& data,

Parcel* reply,

uint32_t flags = 0);

virtual status_t linkToDeath(const sp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0);

virtual status_t unlinkToDeath( const wp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0,

wp<DeathRecipient>* outRecipient = NULL);

virtual void attachObject( const void* objectID,

void* object,

void* cleanupCookie,

object_cleanup_func func);

virtual void* findObject(const void* objectID) const;

virtual void detachObject(const void* objectID);

virtual BpBinder* remoteBinder();

status_t setConstantData(const void* data, size_t size);

void sendObituary();

class ObjectManager

{

public:

ObjectManager();

~ObjectManager();

void attach( const void* objectID,

void* object,

void* cleanupCookie,

IBinder::object_cleanup_func func);

void* find(const void* objectID) const;

void detach(const void* objectID);

void kill();

private:

ObjectManager(const ObjectManager&);

ObjectManager& operator=(const ObjectManager&);

struct entry_t

{

void* object;

void* cleanupCookie;

IBinder::object_cleanup_func func;

};

KeyedVector<const void*, entry_t> mObjects;

};

protected:

virtual ~BpBinder();

virtual void onFirstRef();

virtual void onLastStrongRef(const void* id);

virtual bool onIncStrongAttempted(uint32_t flags, const void* id);

private:

const int32_t mHandle;

struct Obituary {

wp<DeathRecipient> recipient;

void* cookie;

uint32_t flags;

};

void reportOneDeath(const Obituary& obit);

bool isDescriptorCached() const;

mutable Mutex mLock; // lock used to operate Obituaries

volatile int32_t mAlive;

volatile int32_t mObitsSent; // if obituary is sent

Vector<Obituary>* mObituaries; // Obituaries registered to receive binder death notification

ObjectManager mObjects; // Java objects attached to

Parcel* mConstantData;

mutable String16 mDescriptorCache;

};

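The most essential content of BpBinder is mHandle, the handle to the binder ref. The other members are described in the comments, and the functions behave as described for the IBinder interface.

BBinder handles communication on the service side. The class is declared as follows: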

In frameworks/base/include/binder/Binder.h

class BBinder : public IBinder

{

public:

BBinder();

virtual const String16& getInterfaceDescriptor() const;

virtual bool isBinderAlive() const;

virtual status_t pingBinder();

virtual status_t dump(int fd, const Vector<String16>& args);

virtual status_t transact( uint32_t code,

const Parcel& data,

Parcel* reply,

uint32_t flags = 0);

virtual status_t linkToDeath(const sp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0);

virtual status_t unlinkToDeath( const wp<DeathRecipient>& recipient,

void* cookie = NULL,

uint32_t flags = 0,

wp<DeathRecipient>* outRecipient = NULL);

virtual void attachObject( const void* objectID,

void* object,

void* cleanupCookie,

object_cleanup_func func);

virtual void* findObject(const void* objectID) const;

virtual void detachObject(const void* objectID);

virtual BBinder* localBinder();

protected:

virtual ~BBinder();

virtual status_t onTransact( uint32_t code,

const Parcel& data,

Parcel* reply,

uint32_t flags = 0);

private:

BBinder(const BBinder& o);

BBinder& operator=(const BBinder& o);

class Extras;

Extras* mExtras;

void* mReserved0;

};

class BBinder::Extras

{

public:

Mutex mLock;

BpBinder::ObjectManager mObjects;

};

mExtras plays the same role as mObjects in BpBinder: it is used on the service side to bind the Java binder node object.

IInterface & BpInterface & BnInterface

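IInterface is used to define the cross-process methods of an interface (the specific Interface), and is the base class of every user-defined ISubInterface interface (IBinder is of course the exception). It is declared in frameworks/base/include/binder/IInterface.h as follows:

class IInterface : public virtual RefBase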

{

public:

sp<IBinder> asBinder();

sp<const IBinder> asBinder() const;

protected:

virtual IBinder* onAsBinder() = 0;

};

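As the declaration shows, the functions in this base class mainly convert an IInterface to an IBinder, i.e. they obtain the corresponding binder. Example:

sp<ISubInterface> intf = interface_cast<ISubInterface>(servicemgr->getService(servicename)); // getService() returns sp<IBinder>

sp<IBinder> binder = intf->asBinder();

Also in IInterface.h, the template function interface_cast is defined to convert an IBinder to an IInterface.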

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

Example:

sp<ICamera> camera = interface_cast<ICamera>(data.readStrongBinder());

The BnInterface and BpInterface class templates inherit from the user-defined ISubInterface (written INTERFACE in the templates). They are declared in IInterface.h:

template<typename INTERFACE>

class BnInterface : public INTERFACE, public BBinder

{

public:

virtual sp<IInterface> queryLocalInterface(const String16& _descriptor);

virtual const String16& getInterfaceDescriptor() const;

protected:

virtual IBinder* onAsBinder();

};

template<typename INTERFACE>

class BpInterface : public INTERFACE, public BpRefBase

{

public:

BpInterface(const sp<IBinder>& remote);

protected:

virtual IBinder* onAsBinder();

};

class BpRefBase : public virtual RefBase

{

protected:

BpRefBase(const sp<IBinder>& o);

virtual ~BpRefBase();

virtual void onFirstRef();

virtual void onLastStrongRef(const void* id);

virtual bool onIncStrongAttempted(uint32_t flags, const void* id);

inline IBinder* remote() { return mRemote; }

inline IBinder* remote() const { return mRemote; }

private:

BpRefBase(const BpRefBase& o);

BpRefBase& operator=(const BpRefBase& o);

IBinder* const mRemote; // note: a raw pointer

RefBase::weakref_type* mRefs; // BpRefBase's mRefs, not the private mRefs of the base class.

volatile int32_t mState;

};

The inheritance diagram is as follows:

        RefBase                                   RefBase
        /     \                                   /     \
   IBinder   IInterface         BpBinder<--BpRefBase   IInterface
      |          |                            |            |
   BBinder   ISubInterface                    |       ISubInterface
        \     /                                \          /
  BnInterface<ISubInterface>            BpInterface<ISubInterface>
        |                                         |
  BnSubInterface                            BpSubInterface

Consider the object memory layout (note the virtual inheritance).

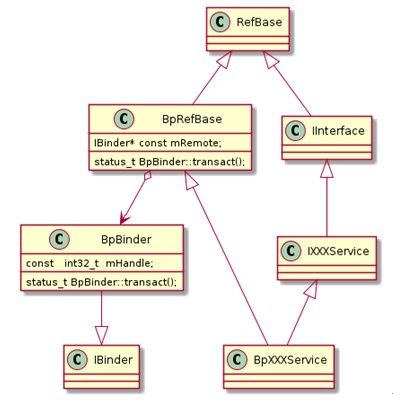

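Two more polished diagrams (taken from the web) follow:

BnInterface and BpInterface both use multiple inheritance. BnInterface<INTERFACE> adds the interface-specific customization of INTERFACE on top of the communication ability of BBinder, and BpInterface adds it on top of BpRefBase, which rounds out the functionality. Readers familiar with ATL will find this code familiar; it reflects the same design idea.

A design question: why does BpInterface<INTERFACE> not inherit from BpBinder, the way BnInterface<INTERFACE> inherits from BBinder, but instead inherit from BpRefBase, which holds the BpBinder pointer by association?

The answer is that the lifetimes of BpBinder and BpInterface<INTERFACE> are clearly different. Each has its own RefBase instance and matching weakref_impl instance controlling its lifetime (think about the memory layout), so the BpBinder is associated in BpRefBase, the parent class of BpInterface<INTERFACE>, concretely as a BpBinder pointer. ProcessState maintains a Vector of IBinder (more precisely, of struct handle_entry). A binder handle (which is actually generated by the binder driver and is binder_ref.desc) is the index into this Vector, and the Vector maps the handle to its BpBinder; the binder that corresponds to a given handle is unique. Each time a handle is read from the binder device and its BpBinder has been looked up, the BpBinder must go through interface_cast to become a BpInterface<ISubInterface> before it is used.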
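The handle-to-proxy lookup described here can be sketched in standalone C++. This is a simplified illustration, not the ProcessState code: HandleTableSketch and ProxySketch are hypothetical names, std::shared_ptr and std::weak_ptr stand in for sp and wp, and the table is assumed to be indexed directly by the handle value.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical stand-in for BpBinder: it only remembers its handle.
struct ProxySketch {
    explicit ProxySketch(int32_t h) : handle(h) {}
    int32_t handle;
};

// Hypothetical stand-in for the per-process handle table.
class HandleTableSketch {
public:
    // Return the unique proxy for `handle`, creating it on first use.
    std::shared_ptr<ProxySketch> proxyForHandle(int32_t handle) {
        assert(handle >= 0);
        const std::size_t index = static_cast<std::size_t>(handle);
        if (index >= entries_.size()) {
            entries_.resize(index + 1);  // grow the table with empty slots
        }
        std::shared_ptr<ProxySketch> proxy = entries_[index].lock();
        if (!proxy) {
            proxy = std::make_shared<ProxySketch>(handle);
            entries_[index] = proxy;     // the table keeps only a weak reference
        }
        return proxy;
    }

private:
    std::vector<std::weak_ptr<ProxySketch>> entries_;  // index == handle
};
```

Asking twice for the same handle yields the same proxy object as long as some caller still holds it, which is the uniqueness property the paragraph relies on.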

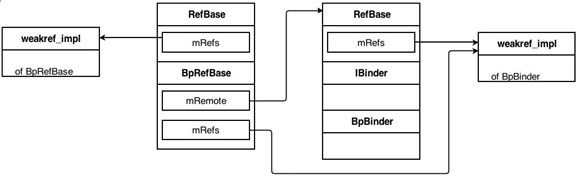

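The two BpRefBase fields mRemote and mRefs act as the strong and weak references in the cross-process binder sense, much as m_ptr and m_refs do in wp. The memory layout is as follows.

When the associated binder is obtained from an IInterface, IInterface::asBinder() calls the pure virtual IInterface::onAsBinder(), so BpInterface<INTERFACE> and BnInterface<INTERFACE> must each override onAsBinder. This is the logic behind the interface-to-binder conversion.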

In frameworks/base/include/binder/IInterface.h

template<typename INTERFACE>

IBinder* BnInterface<INTERFACE>::onAsBinder()

{

return this; // the BBinder itself.

}

template<typename INTERFACE>

inline IBinder* BpInterface<INTERFACE>::onAsBinder()

{

return remote(); // mRemote, a BpBinder that encapsulates the binder handle.

}

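For BpInterface and BpBinder, the object memory layout shows that the conversion yields a separate, associated object rather than an upcast or downcast of the same object.

The static class diagram after IInterface is introduced (taken from the web):

The flow of a cross-process method call is shown below (taken from the web):

Next, let us examine the logic behind interface_cast (binder to interface) in detail for two cases: client and service in the same process, and in different processes.

First, the declaration of ISubInterface uses the macro DECLARE_META_INTERFACE(INTERFACE), and the implementation file must use the macro IMPLEMENT_META_INTERFACE(INTERFACE, NAME) to provide the definitions.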

#define DECLARE_META_INTERFACE(INTERFACE) \

static const android::String16 descriptor; \

static android::sp<I##INTERFACE> asInterface( \

const android::sp<android::IBinder>& obj); \

virtual const android::String16& getInterfaceDescriptor() const; \

I##INTERFACE(); \

virtual ~I##INTERFACE(); \

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \

const android::String16 I##INTERFACE::descriptor(NAME); \

const android::String16& \

I##INTERFACE::getInterfaceDescriptor() const { \

return I##INTERFACE::descriptor; \

} \

android::sp<I##INTERFACE> I##INTERFACE::asInterface( \

const android::sp<android::IBinder>& obj) \

{ \

android::sp<I##INTERFACE> intr; \

if (obj != NULL) { \

intr = static_cast<I##INTERFACE*>( \

obj->queryLocalInterface( \

I##INTERFACE::descriptor).get()); \

if (intr == NULL) { \

intr = new Bp##INTERFACE(obj); \

} \

} \

return intr; \

} \

I##INTERFACE::I##INTERFACE() { } \

I##INTERFACE::~I##INTERFACE() { } \

#define CHECK_INTERFACE(interface, data, reply) \

if (!data.checkInterface(this)) { return PERMISSION_DENIED; } \

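These macros make INTERFACE look rather like MFC. They give ISubInterface a static member descriptor, a static method asInterface() and a virtual method getInterfaceDescriptor(); set getInterfaceDescriptor() aside for now.

interface_cast calls ISubInterface::asInterface(sp<IBinder>), and the key step is the binder's queryLocalInterface(const String16&).

BnInterface<INTERFACE> overrides this virtual function, replacing the default implementation IBinder::queryLocalInterface() { return NULL; }: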

template<typename INTERFACE>

inline sp<IInterface> BnInterface<INTERFACE>::queryLocalInterface(

const String16& _descriptor)

{

if (_descriptor == INTERFACE::descriptor) return this;

return NULL;

}

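Consequently, if the sp<IBinder> argument refers to a BnInterface<ISubInterface> (which is a BBinder), queryLocalInterface returns the object itself and .get() { return m_ptr; } yields the raw pointer to that same object; this is the same-process case. If the sp<> refers to a BpBinder, NULL is returned and a BpSubInterface object is constructed around that BpBinder; this is the cross-process case.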
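The two outcomes of asInterface can be reproduced in a small standalone model. This is only a sketch under stated assumptions: all type names below (BinderSketch, IThingSketch, LocalThing, RemoteBinder, ThingProxy) are hypothetical stand-ins for IBinder, ISubInterface, BnSubInterface, BpBinder and BpSubInterface, std::shared_ptr replaces sp, and the descriptor is a plain std::string.

```cpp
#include <memory>
#include <string>
#include <utility>

struct IThingSketch {                       // plays ISubInterface
    virtual ~IThingSketch() = default;
    virtual bool isProxy() const = 0;
};

struct BinderSketch {                       // plays IBinder
    virtual ~BinderSketch() = default;
    // Default: no local implementation, like IBinder::queryLocalInterface().
    virtual IThingSketch* queryLocalInterface(const std::string&) { return nullptr; }
};

const std::string kDescriptor = "sketch.IThing";

struct LocalThing : BinderSketch, IThingSketch {    // plays BnSubInterface
    IThingSketch* queryLocalInterface(const std::string& d) override {
        return d == kDescriptor ? this : nullptr;
    }
    bool isProxy() const override { return false; }
};

struct RemoteBinder : BinderSketch {};      // plays BpBinder

struct ThingProxy : IThingSketch {          // plays BpSubInterface
    explicit ThingProxy(std::shared_ptr<BinderSketch> r) : remote(std::move(r)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<BinderSketch> remote;   // association, not inheritance
};

std::shared_ptr<IThingSketch> asInterfaceSketch(const std::shared_ptr<BinderSketch>& obj) {
    if (!obj) return nullptr;
    if (IThingSketch* local = obj->queryLocalInterface(kDescriptor)) {
        // Same process: hand back the object itself, sharing its ownership.
        return std::shared_ptr<IThingSketch>(obj, local);
    }
    // Different process: wrap the remote binder in a new proxy.
    return std::make_shared<ThingProxy>(obj);
}
```

The local branch never allocates a proxy, which is why in-process calls stay direct virtual calls.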

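As a preview: while a BpSubInterface is constructed, the BpBinder pointer is assigned to BpRefBase's IBinder* mRemote member. incStrong increases the reference count of the remote binder, which is carried out by sending the BC_ACQUIRE command.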

template<typename INTERFACE>

inline BpInterface<INTERFACE>::BpInterface(const sp<IBinder>& remote): BpRefBase(remote) {}

Next, getInterfaceDescriptor():

template<typename INTERFACE>

inline const String16& BnInterface<INTERFACE>::getInterfaceDescriptor() const

{

return INTERFACE::getInterfaceDescriptor();

}

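IBinder and ISubInterface each have their own virtual getInterfaceDescriptor(), so a call must be qualified with the class name. In BnSubInterface, for example, use this->BBinder::getInterfaceDescriptor() or this->ISubInterface::getInterfaceDescriptor(). In BpSubInterface, a plain getInterfaceDescriptor() is equivalent to this->ISubInterface::getInterfaceDescriptor(); to call BpBinder's version, use this->remote()->getInterfaceDescriptor().

The CHECK_INTERFACE macro performs the interface check and is currently used in BnSubInterface implementations. It reads the interface descriptor from the Parcel and compares it with this (converted to IBinder*)->getInterfaceDescriptor(); on mismatch, processing stops and the function returns PERMISSION_DENIED.

Next, consider how BBinder and BpBinder implement transact, the important pure virtual function of IBinder that provides the LPC capability: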

status_t BpBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

status_t BBinder::transact(uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

data.setDataPosition(0);

status_t err = NO_ERROR;

switch (code) {

case PING_TRANSACTION:

reply->writeInt32(pingBinder());

break;

default:

err = onTransact(code, data, reply, flags); // virtual, override it in the derived class!

break;

}

if (reply != NULL) {

reply->setDataPosition(0);

}

return err;

}

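The flags argument is 0 for a synchronous call and FLAG_ONEWAY for an asynchronous call. FLAG_ONEWAY is an enumerator of an anonymous enum declared inside class IBinder, so in C++ it is written IBinder::FLAG_ONEWAY (IBinder.FLAG_ONEWAY in Java); it corresponds to TF_ONE_WAY in the driver.

Finally, classes with "Client" in their names, such as ISubInterfaceClient/BpSubInterfaceClient/BnSubInterfaceClient, serve two purposes. First, they let the service side manage its clients: the BnSubInterfaceClient implementation is used on the client side and the BpSubInterfaceClient implementation on the service side. More importantly, they implement an asynchronous event sink mechanism.

Android Binder Mechanism: defaultServiceManager

Before going further into binder communication, we first take a rough look at defaultServiceManager, which introduces ahead of time some binder driver structures and the BC/BR commands. This section is a general overview; its purpose is to understand the structure of binder communication packets from the servicemanager's point of view.

defaultServiceManager provides adding, getting and listing of services. It starts before system_init launches the various services, because the services started in system_init must register with it. Binder components are divided into named binders and anonymous binders, whose roles resemble a well-known listening port and the ordinary port obtained after accept in sockets. A named binder registers with ServiceManager so that the service can be looked up.

defaultServiceManager is implemented differently on the phone and emulator platforms (TARGET_SIMULATOR=false) and on the simulator platform (TARGET_SIMULATOR=true). Note, however, that TARGET_SIMULATOR=true is practically extinct.

Should TARGET_SIMULATOR be set to true when using QEMU?

No, the simulator is a special target for running the Dalvik VM on Linux, and it has not been maintained for a long time. It has nothing to do with QEMU.

On phones and QEMU, built with TARGET_SIMULATOR=false, ServiceManager is started from init.rc as the following service:

service servicemanager /system/bin/servicemanager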

class core

user system

group system

critical

onrestart restart zygote

onrestart restart media

onrestart restart surfaceflinger

onrestart restart drm

The build is controlled by frameworks/base/cmds/servicemanager/Android.mk:

LOCAL_PATH:= $(call my-dir)

#include $(CLEAR_VARS)

#LOCAL_SRC_FILES := bctest.c binder.c

#LOCAL_MODULE := bctest

#include $(BUILD_EXECUTABLE)

include $(CLEAR_VARS)

LOCAL_SHARED_LIBRARIES := liblog

LOCAL_SRC_FILES := service_manager.c binder.c

LOCAL_MODULE := servicemanager

ifeq ($(BOARD_USE_LVMX),true)

LOCAL_CFLAGS += -DLVMX

endif

include $(BUILD_EXECUTABLE)

This builds the servicemanager executable. In service_manager.c, servicemanager does not use the IServiceManager interface; it is implemented directly in C following the communication protocol between binder and servicemanager. It starts as follows:

int main(int argc, char **argv) // service_manager.c, for servicemanager

{

struct binder_state *bs;

void *svcmgr = BINDER_SERVICE_MANAGER;

bs = binder_open(128*1024);

// open /dev/binder and mmap it

/* the only binder_become_context_manager call*/

if (binder_become_context_manager(bs))

// send the BINDER_SET_CONTEXT_MGR command to the binder driver via ioctl; the driver handles it

{

LOGE("cannot become context manager (%s)\n", strerror(errno));

return -1;

}

svcmgr_handle = svcmgr;

binder_loop(bs, svcmgr_handler);

// BC_ENTER_LOOPER tells the driver that this thread has entered the thread pool; then enter the main service loop, blocking on reads from the fd

return 0;

}

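ServiceManager's job is fairly simple: it blocks reading on the binder fd and handles requests from other processes. Because there are only a few requests (GET_SERVICE_TRANSACTION, CHECK_SERVICE_TRANSACTION, ADD_SERVICE_TRANSACTION and LIST_SERVICES_TRANSACTION) and each is simple to handle, no complex model such as multi-threaded parallel processing is needed. The code lives in just two files, service_manager.c and binder.c. After listing several important data structures, we examine svcmgr_handler in detail.

In frameworks/base/cmds/servicemanager/binder.h (except struct binder_write_read)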

struct binder_object

{

uint32_t type;

uint32_t flags;

void *pointer;

void *cookie;

};

This describes a binder object.

struct binder_write_read {

signed long write_size;

signed long write_consumed;

unsigned long write_buffer;

signed long read_size;

signed long read_consumed;

unsigned long read_buffer;

};

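This is the input/output buffer argument passed to ioctl for data I/O. It is passed when the ioctl code sent to the binder driver is BINDER_WRITE_READ, and can be viewed simply as the ioctl's I/O data buffer.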

struct binder_txn

{

void *target;

void *cookie;

uint32_t code;

uint32_t flags;

uint32_t sender_pid;

uint32_t sender_euid;

uint32_t data_size;

uint32_t offs_size;

void *data;

void *offs;

};

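This mirrors the driver's binder_transaction_data structure. It carries the binder routing and permission-control information and the address of the serialized data buffer, and it is the payload of the binder commands BC_TRANSACTION and BC_REPLY.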

struct binder_io

{

char *data; /* pointer to read/write from */

uint32_t *offs; /* array of offsets */

uint32_t data_avail; /* bytes available in data buffer */

uint32_t offs_avail; /* entries available in offsets array */

char *data0; /* start of data buffer */

uint32_t *offs0; /* start of offsets buffer */

uint32_t flags;

uint32_t unused;

};

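It mainly describes the buffers pointed to by the offs and data fields of binder_txn. After binder_txn/binder_transaction_data has routed the call to a specific binder, this is the LPC method ID and serialized argument data handed to that binder. It plays the same role as the Parcel class and as binder_buffer in the driver.

binder_io.offs/binder_txn.offs records where the struct flat_binder_object objects of the cross-process message are: the driver uses the offsets in offs to locate each struct flat_binder_object within data and translates the binder object. If the binder lives in the target process, the driver sets binder.type to BINDER_TYPE_BINDER/BINDER_TYPE_WEAK_BINDER and the object can be used to address the BnSubInterface/BBinder directly. Otherwise (passed from the service process to a client process, or from the ServiceManager process to a client process), binder.type is BINDER_TYPE_HANDLE/BINDER_TYPE_WEAK_HANDLE.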
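How an offsets array picks embedded objects out of a flat data buffer can be illustrated with a short standalone sketch. It is not driver code: FlatObjectSketch is a hypothetical, simplified record (the real struct flat_binder_object has a different layout), and collectObjects is an invented helper that only copies the records out, without any type translation.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, simplified object record embedded in the data buffer.
struct FlatObjectSketch {
    uint32_t type;
    uint32_t handle;
};

// Copy out every object whose byte offset is listed in `offsets`.
// Offsets that would run past the end of `data` are skipped.
std::vector<FlatObjectSketch> collectObjects(const std::vector<uint8_t>& data,
                                             const std::vector<std::size_t>& offsets) {
    std::vector<FlatObjectSketch> found;
    for (std::size_t off : offsets) {
        if (off > data.size() || data.size() - off < sizeof(FlatObjectSketch)) {
            continue;  // offset does not leave room for a whole record
        }
        FlatObjectSketch obj;
        std::memcpy(&obj, data.data() + off, sizeof(obj));  // safe for unaligned offsets
        found.push_back(obj);
    }
    return found;
}
```

Everything in the data buffer that is not named by an offset is opaque payload; only the listed positions are inspected, which is what lets the driver rewrite binder objects without understanding the rest of the message.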

struct binder_death {

void (*func)(struct binder_state *bs, void *ptr);

void *ptr;

};

/* the one magic object */

#define BINDER_SERVICE_MANAGER ((void*) 0)

#define SVC_MGR_NAME "android.os.IServiceManager"

enum {

SVC_MGR_GET_SERVICE = 1,

SVC_MGR_CHECK_SERVICE,

SVC_MGR_ADD_SERVICE,

SVC_MGR_LIST_SERVICES,

};

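Although they are not described at length, it is worth fixing the layering of these data structures firmly in mind at this point; see the figure below (x).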

int binder_write(struct binder_state *bs, void *data, unsigned len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (unsigned) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

}

int binder_read(struct binder_state *bs, void *data, unsigned len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

bwr.read_size = len;

bwr.read_consumed = 0;

bwr.read_buffer = (unsigned) data;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr,"binder_read: ioctl failed (%s)\n",

strerror(errno));

return res; // report the failure so the caller's res < 0 check can fire

}

return bwr.read_consumed;

}

void binder_loop(struct binder_state *bs, binder_handler func)

{

int res; int len;

unsigned readbuf[32]; // unsigned int default.

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(unsigned)); // Enter thread pool as main work thread

for (;;) {

res = binder_read(bs, readbuf, sizeof(readbuf)); // block read the request

if (res < 0) {

LOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));

break;

}

len = res;

res = binder_parse(bs, 0, readbuf, len, func); // parse request and handle, callback func if BR_TRANSACTION

if (res == 0) {

LOGE("binder_loop: unexpected reply?!\n");

break;

}

if (res < 0) {

LOGE("binder_loop: io error %d %s\n", res, strerror(errno));

break;

}

}

}

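svcmgr_handler is the callback through which ServiceManager implements its functionality; it plays the same role as BBinder::onTransact(). For BR_TRANSACTION, binder_parse invokes the callback func to handle the request.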

int svcmgr_handler(struct binder_state *bs,

struct binder_txn *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

unsigned len;

void *ptr;

uint32_t strict_policy;

// LOGI("target=%p code=%d pid=%d uid=%d\n",

// txn->target, txn->code, txn->sender_pid, txn->sender_euid);

if (txn->target != svcmgr_handle)

return -1;

// Equivalent to Parcel::enforceInterface(), reading the RPC

// header with the strict mode policy mask and the interface name.

// Note that we ignore the strict_policy and don't propagate it

// further (since we do no outbound RPCs anyway).

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n", str8(s));

return -1;

}

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

ptr = do_find_service(bs, s, len);

if (!ptr)

break;

bio_put_ref(reply, ptr);

return 0;

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

ptr = bio_get_ref(msg);

if (do_add_service(bs, s, len, ptr, txn->sender_euid))

return -1;

break;

case SVC_MGR_LIST_SERVICES: {

unsigned n = bio_get_uint32(msg);

si = svclist;

while ((n-- > 0) && si)

si = si->next;

if (si) {

bio_put_string16(reply, si->name);

return 0;

}

return -1;

}

default:

LOGE("unknown code %d\n", txn->code);

return -1;

}

bio_put_uint32(reply, 0);

return 0;

}

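As can be seen, the callback handles SVC_MGR_[GET/CHECK/ADD/LIST]_SERVICE, which amounts to inserting, finding and deleting nodes of a linked list.

For SVC_MGR_ADD_SERVICE, ServiceManager ends up in the function below. Note that the binder_object in binder_io has already been translated by the binder driver into a binder ref, i.e. it is in handle form.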

int do_add_service(struct binder_state *bs,

uint16_t *s, unsigned len,

void *ptr, unsigned uid)

{

struct svcinfo *si;

// LOGI("add_service('%s',%p) uid=%d\n", str8(s), ptr, uid);

if (!ptr || (len == 0) || (len > 127))

return -1;

if (!svc_can_register(uid, s)) {

LOGE("add_service('%s',%p) uid=%d - PERMISSION DENIED\n",

str8(s), ptr, uid);

return -1;

}

si = find_svc(s, len);

if (si) {

if (si->ptr) {

LOGE("add_service('%s',%p) uid=%d - ALREADY REGISTERED, OVERRIDE\n",

str8(s), ptr, uid);

svcinfo_death(bs, si);

}

si->ptr = ptr;

} else {

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

if (!si) {

LOGE("add_service('%s',%p) uid=%d - OUT OF MEMORY\n",

str8(s), ptr, uid);

return -1;

}

si->ptr = ptr;

si->len = len;

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = svcinfo_death;

si->death.ptr = si;

si->next = svclist;

svclist = si;

}

binder_acquire(bs, ptr);

binder_link_to_death(bs, ptr, &si->death);

return 0;

}

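service_manager immediately sends BC_ACQUIRE to the binder driver to increment the strong count (binder_ref.strong) of its binder_ref to the service's binder_node, but it does not send BC_INCREFS to increment binder_ref.weak. This differs from an ordinary client of the service, and the difference is visible in the binder_ref entries for servicemanager under debugfs/binder/proc. Details follow in the later protocol analysis.

Note that before sm->addService(name, new BnSubInterface) returns, the service implementation receives BR_INCREFS and BR_ACQUIRE, which increment the weak and strong counts (this BR_ACQUIRE is not caused by the BC_ACQUIRE above). This pins the component, so that when the temporary sp<ISubInterface> is destroyed and decrements the strong count of the BnSubInterface object, the component itself is not destroyed.

The same need and logic apply when a worker thread returns an anonymous binder node to a requester.

Question (looking ahead): when sm->addService(name, new BnSubInterface) is called, the user-space main thread usually has not yet called joinThreadPool and the service process's worker threads have not been spawned. How does the kernel get BR_INCREFS and BR_ACQUIRE handled in user space? Answer: the BINDER_LOOPER_STATE_ENTERED check in the driver applies to binder worker threads. Here, although we are in the service process, the thread is acting as a requester towards sm, and a requester thread is not subject to that check, so the component's counts can be incremented before addService returns. The question conflates how a requester thread and how a worker thread handle BR_INCREFS and BR_ACQUIRE. Details follow later.

Then BC_REQUEST_DEATH_NOTIFICATION registers for the binder node's death notification.

After func has been called for BR_TRANSACTION, BC_REPLY is sent by the following function (note that BC_FREE_BUFFER is sent at the same time):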

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

void *buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

void *buffer;

uint32_t cmd_reply;

struct binder_txn txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offs_size = 0;

data.txn.data = &status;

data.txn.offs = 0;

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offs_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data = reply->data0;

data.txn.offs = reply->offs0;

}

binder_write(bs, &data, sizeof(data));

}

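When a service is registered with ServiceManager, the permission of the registering process is checked by svc_can_register. As the code shows, registration is allowed only for callers whose uid is 0 (root) or AID_SYSTEM (system_server), or whose uid and service name match an entry in the allowed array (AID_MEDIA, AID_RADIO, etc.).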

int svc_can_register(unsigned uid, uint16_t *name)

{

unsigned n;

if ((uid == 0) || (uid == AID_SYSTEM))

return 1;

for (n = 0; n < sizeof(allowed) / sizeof(allowed[0]); n++)

if ((uid == allowed[n].uid) && str16eq(name, allowed[n].name))

return 1;

return 0;

}

static struct {

unsigned uid;

const char *name;

} allowed[] = {

#ifdef LVMX

{ AID_MEDIA, "com.lifevibes.mx.ipc" },

#endif

{ AID_MEDIA, "media.audio_flinger" },

{ AID_MEDIA, "media.player" },

{ AID_MEDIA, "media.camera" },

{ AID_MEDIA, "media.audio_policy" },

{ AID_DRM, "drm.drmManager" },

{ AID_NFC, "nfc" },

{ AID_RADIO, "radio.phone" },

{ AID_RADIO, "radio.sms" },

{ AID_RADIO, "radio.phonesubinfo" },

{ AID_RADIO, "radio.simphonebook" },

/* TODO: remove after phone services are updated: */

{ AID_RADIO, "phone" },

{ AID_RADIO, "sip" },

{ AID_RADIO, "isms" },

{ AID_RADIO, "iphonesubinfo" },

{ AID_RADIO, "simphonebook" },

{ AID_RADIO, "phone_msim" },

{ AID_RADIO, "simphonebook_msim" },

{ AID_RADIO, "iphonesubinfo_msim" },

{ AID_RADIO, "isms_msim" },

};

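For the services in system_server, see "Android的系统服务一览" (an overview of Android system services):

http://blog.csdn.net/freshui/article/details/5993195

For more detail on which services init and system_server register, see the Android boot process.

On the simulator, built with TARGET_SIMULATOR=true, the runtime service is used instead. Its init.rc entry is: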

service runtime /system/bin/runtime

user system

group system

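The source is under frameworks/base/cmds/runtime/ and builds the runtime module. This service performs some setup, starts its built-in servicemanager, then starts Zygote and system_server. On hardware platforms and QEMU these are separate and live in their own directories under frameworks/base/cmds/; system_server, for example, is under system_server/.

Here defaultServiceManager is implemented the same way as an ordinary service, with the inheritance chain BServiceManager -> BnServiceManager -> BnInterface<IServiceManager>. It starts as follows:

static void boot_init() // called from main() in Main_Runtime.cpp (runtime)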

{

LOGI("Entered boot_init()!\n");

sp<ProcessState> proc(ProcessState::self());

// ProcessState::self() is a singleton; its constructor opens the /dev/binder device

// and mmaps the binder fd to obtain a region of user-space memory.

LOGD("ProcessState: %p\n", proc.get());

proc->becomeContextManager(contextChecker, NULL);

// Calls ioctl(mDriverFD, BINDER_SET_CONTEXT_MGR, &dummy) directly.

// On the BINDER_SET_CONTEXT_MGR command the driver creates a binder_node

// and makes it the context manager.

if (proc->supportsProcesses()) {

LOGI("Binder driver opened. Multiprocess enabled.\n"); //taken

} else {

LOGI("Binder driver not found. Processes not supported.\n");

}

sp<BServiceManager> sm = new BServiceManager;

// BServiceManager derives from BnServiceManager and actually implements the manager.

// It maintains a table of services to implement getService/checkService/addService/listService.

// BnServiceManager::onTransact dispatches GET_SERVICE_TRANSACTION,

// CHECK_SERVICE_TRANSACTION, ADD_SERVICE_TRANSACTION and

// LIST_SERVICES_TRANSACTION to those four functions respectively.

proc->setContextObject(sm);

// first registers sm in the KeyedVector table under the name "default"

}

After boot_init():

static void finish_system_init(sp<ProcessState>& proc)

{

// If we are running multiprocess, we now need to have the

// thread pool started here. We don't do this in boot_init()

// because when running single process we need to start the

// thread pool after the Android runtime has been started (so

// the pool uses Dalvik threads).

if (proc->supportsProcesses()) {

proc->startThreadPool(); // --> spawnPooledThread() spawns a new thread to handle service requests

}

}

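The simulator is rarely used and its communication protocol is the same, so it is not discussed further. The code uses BServiceManager : BnServiceManager and ProcessState; consult it if needed.

Android Binder Mechanism: IPCThreadState & Parcel

This section describes, for the remote case at the application layer, how transact is implemented on the client side and how the service's onTransact gets called, along with binder's threading model at the framework layer. It tries to convey the overall structure and design ideas without going into protocol details, but still lists the important code.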

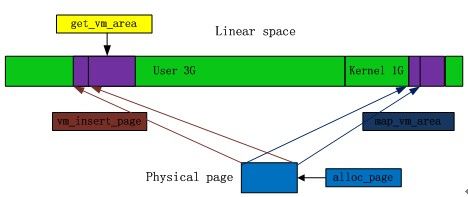

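The implementation mainly involves three important classes: ProcessState, IPCThreadState and Parcel. ProcessState mainly opens /dev/binder, performs the mmap, and maintains per-process state. We focus on the other two: IPCThreadState implements binder's threading model and communication protocol, and Parcel handles data serialization.

Data serialization

Parcel is the class that wraps communication data; it is the counterpart of binder_io in servicemanager. Because binder objects must be managed in the kernel, each binder within a process needs a unique key. More precisely, for BINDER_TYPE_BINDER the binder driver keeps a corresponding binder_node, and for BINDER_TYPE_HANDLE it keeps a corresponding binder_ref. A binder_node must be attached, under a unique key, to an rb_tree maintained by binder_proc in the kernel; flattening ensures that the pointer field is unique for BINDER_TYPE_BINDER/BINDER_TYPE_HANDLE. This is done in user space with struct flat_binder_object, which uniquely identifies each binder component within a process's address space.

Several helper functions from Parcel.cpp are listed below.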

status_t flatten_binder(const sp<ProcessState>& proc, const sp<IBinder>& binder, Parcel* out)

{

flat_binder_object obj;

obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

if (binder != NULL) {

IBinder *local = binder->localBinder();

if (!local) {

BpBinder *proxy = binder->remoteBinder();

if (proxy == NULL) {

LOGE("null proxy");

}

const int32_t handle = proxy ? proxy->handle() : 0;

obj.type = BINDER_TYPE_HANDLE;

obj.handle = handle;

obj.cookie = NULL;

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = local->getWeakRefs();

obj.cookie = local;

}

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = NULL;

obj.cookie = NULL;

}

return finish_flatten_binder(binder, obj, out);

}

status_t flatten_binder(const sp<ProcessState>& proc, const wp<IBinder>& binder, Parcel* out)

{

flat_binder_object obj;

obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

if (binder != NULL) {

sp<IBinder> real = binder.promote();

if (real != NULL) {

IBinder *local = real->localBinder();

if (!local) {

BpBinder *proxy = real->remoteBinder();

if (proxy == NULL) {

LOGE("null proxy");

}

const int32_t handle = proxy ? proxy->handle() : 0;

obj.type = BINDER_TYPE_WEAK_HANDLE;

obj.handle = handle;

obj.cookie = NULL;

} else {

obj.type = BINDER_TYPE_WEAK_BINDER;

obj.binder = binder.get_refs();

obj.cookie = binder.unsafe_get();

}

return finish_flatten_binder(real, obj, out);

}

// XXX How to deal? In order to flatten the given binder,

// we need to probe it for information, which requires a primary

// reference... but we don't have one.

//

// The OpenBinder implementation uses a dynamic_cast<> here,

// but we can't do that with the different reference counting

// implementation we are using.

LOGE("Unable to unflatten Binder weak reference!");

obj.type = BINDER_TYPE_BINDER;

obj.binder = NULL;

obj.cookie = NULL;

return finish_flatten_binder(NULL, obj, out);

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = NULL;

obj.cookie = NULL;

return finish_flatten_binder(NULL, obj, out);

}

}

status_t unflatten_binder(const sp<ProcessState>& proc, const Parcel& in, sp<IBinder>* out)

{

const flat_binder_object* flat = in.readObject(false);

if (flat) {

switch (flat->type) {

case BINDER_TYPE_BINDER:

*out = static_cast<IBinder*>(flat->cookie);

return finish_unflatten_binder(NULL, *flat, in);

case BINDER_TYPE_HANDLE:

*out = proc->getStrongProxyForHandle(flat->handle);

return finish_unflatten_binder(

static_cast<BpBinder*>(out->get()), *flat, in);

}

}

return BAD_TYPE;

}

status_t unflatten_binder(const sp<ProcessState>& proc, const Parcel& in, wp<IBinder>* out)

{

const flat_binder_object* flat = in.readObject(false);

if (flat) {

switch (flat->type) {

case BINDER_TYPE_BINDER:

*out = static_cast<IBinder*>(flat->cookie);

return finish_unflatten_binder(NULL, *flat, in);

case BINDER_TYPE_WEAK_BINDER:

if (flat->binder != NULL) {

out->set_object_and_refs(

static_cast<IBinder*>(flat->cookie),

static_cast<RefBase::weakref_type*>(flat->binder));

} else {

*out = NULL;

}

return finish_unflatten_binder(NULL, *flat, in);

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE:

*out = proc->getWeakProxyForHandle(flat->handle);

return finish_unflatten_binder(

static_cast<BpBinder*>(out->unsafe_get()), *flat, in);

}

}

return BAD_TYPE;

}

void acquire_object(const sp<ProcessState>& proc, const flat_binder_object& obj, const void* who)

{

switch (obj.type) {

case BINDER_TYPE_BINDER:

if (obj.binder) {

LOG_REFS("Parcel %p acquiring reference on local %p", who, obj.cookie);

static_cast<IBinder*>(obj.cookie)->incStrong(who);

}

return;

case BINDER_TYPE_WEAK_BINDER:

if (obj.binder)

static_cast<RefBase::weakref_type*>(obj.binder)->incWeak(who);

return;

case BINDER_TYPE_HANDLE: {

const sp<IBinder> b = proc->getStrongProxyForHandle(obj.handle);

if (b != NULL) {

LOG_REFS("Parcel %p acquiring reference on remote %p", who, b.get());

b->incStrong(who);

}

return;

}

case BINDER_TYPE_WEAK_HANDLE: {

const wp<IBinder> b = proc->getWeakProxyForHandle(obj.handle);

if (b != NULL) b.get_refs()->incWeak(who);

return;

}

case BINDER_TYPE_FD: {

// intentionally blank -- nothing to do to acquire this, but we do

// recognize it as a legitimate object type.

return;

}

}

LOGD("Invalid object type 0x%08lx", obj.type);

}

void release_object(const sp<ProcessState>& proc, const flat_binder_object& obj, const void* who)

{

switch (obj.type) {

case BINDER_TYPE_BINDER:

if (obj.binder) {

LOG_REFS("Parcel %p releasing reference on local %p", who, obj.cookie);

static_cast<IBinder*>(obj.cookie)->decStrong(who);

}

return;

case BINDER_TYPE_WEAK_BINDER:

if (obj.binder)

static_cast<RefBase::weakref_type*>(obj.binder)->decWeak(who);

return;

case BINDER_TYPE_HANDLE: {

const sp<IBinder> b = proc->getStrongProxyForHandle(obj.handle);

if (b != NULL) {

LOG_REFS("Parcel %p releasing reference on remote %p", who, b.get());

b->decStrong(who);

}

return;

}

case BINDER_TYPE_WEAK_HANDLE: {

const wp<IBinder> b = proc->getWeakProxyForHandle(obj.handle);

if (b != NULL) b.get_refs()->decWeak(who);

return;

}

case BINDER_TYPE_FD: {

if (obj.cookie != (void*)0) close(obj.handle);

return;

}

}

LOGE("Invalid object type 0x%08lx", obj.type);

}

struct flat_binder_object is defined as follows:

struct flat_binder_object{

unsigned long type;

unsigned long flags;

union {

void *binder; /* local object */

signed long handle; /* remote object */

};

void *cookie; /* address of bbinder if local binder*/

};

| .type | TYPE_BINDER | TYPE_HANDLE | TYPE_WEAK_BINDER | TYPE_WEAK_HANDLE |
| --- | --- | --- | --- | --- |
| union {binder; handle} (pointer) | mRefs | handle | mRefs | handle |
| .cookie | BBinder | NULL | BBinder | NULL |

When binder.type is BINDER_TYPE_BINDER/BINDER_TYPE_WEAK_BINDER, the purpose of keeping both pointers, mRefs and BBinder, is evident; recall how the RefBase and RefBase::weakref_impl objects relate in memory.
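The field assignments in the table can be restated as a small standalone sketch. It is illustrative only: FlatRecordSketch, LocalObjectSketch, flattenLocal and flattenRemote are hypothetical names, and the record uses two separate fields where the real struct flat_binder_object uses a union.

```cpp
#include <cstdint>

enum class FlatType { Binder, Handle };

struct LocalObjectSketch {   // plays BBinder
    int weakRefs;            // plays the weak-reference block (mRefs)
};

struct FlatRecordSketch {    // simplified flat_binder_object
    FlatType type;
    const void* binder;      // local object: address of the weak-reference block
    int32_t handle;          // remote object: driver-assigned handle
    const void* cookie;      // local object: address of the object itself
};

// A local object is identified by two addresses in the owner's address space.
FlatRecordSketch flattenLocal(const LocalObjectSketch& obj) {
    return FlatRecordSketch{FlatType::Binder, &obj.weakRefs, 0, &obj};
}

// A remote object is identified only by its handle; there is no local address.
FlatRecordSketch flattenRemote(int32_t handle) {
    return FlatRecordSketch{FlatType::Handle, nullptr, handle, nullptr};
}
```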

Threading model

The table below shows the threading model on the service side.

| interface |

| binder component pool |

| binder worker thread pool |

| binder driver |

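Thread pool and object pool

Because all binder objects, BBinder and BpBinder alike, are routable, a binder worker thread is not bound to a particular binder component. It locates the target binder and executes its code; when executing a transaction, it dispatches execution to the specific target function according to the interface function code.

The thread pool can be given a minimum and a maximum number of worker threads. The maximum number of threads that can be spawned, either proactively or at the driver's request, defaults to 0x10; these threads are named Binder Thread #num (ICS) or Binder_num (JB). To avoid slowing program startup, only one isMain thread is created when the pool is created; the main thread then also joins the pool if it has nothing more to do, and it does not count against the 0x10 limit. When there are not enough worker threads, the binder driver tells user space to create non-isMain worker threads and add them to the pool. A thread created proactively by user space has isMain set to true and notifies the driver with BC_ENTER_LOOPER that it is entering the work loop; a thread spawned at the driver's request has isMain set to false and notifies the driver with BC_REGISTER_LOOPER. A non-isMain worker thread may exit after being idle for a long time, although Android binder does not fully implement this; isMain threads do not exit.

Worker thread priority model

The binder driver sets a worker thread's priority according to the priority of the requesting thread, so a high-priority requester is served at high priority, which protects the user experience. A binder node can set a minimum priority for the worker threads that run its logic, and the binder driver also uses this priority to set the nice value of the worker thread running that logic. When the worker thread finishes serving the request, its default priority is restored, which is usually the priority of its main thread.

(To be added here: FOREGROUND/BACKGROUND scheduling groups and thread priorities.)

I/O efficiency

Threads perform data I/O through the ioctl system call. Given the cost of frequent system calls, several commands are packed into a single I/O; apart from transactions, commands are usually not urgent. talkWithDriver uses further techniques to reduce the number of I/O operations and the system-call overhead; see the code for details.

Synchronous and asynchronous models

A two-way synchronous mode (send, block, receive) is supported, as is a ONE_WAY mode that sends without receiving: the call returns without blocking after sending, and an event sink notification mechanism can be used.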

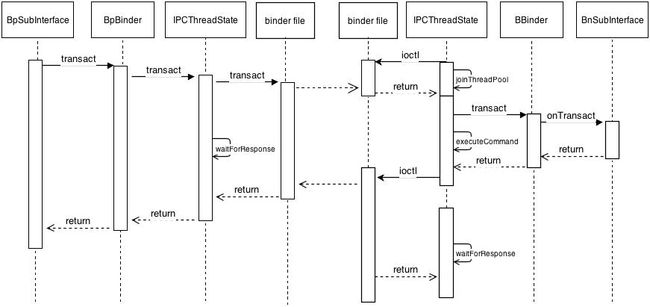

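waitForResponse and executeCommand

executeCommand handles the command set S = { BR_ERROR/BR_OK, BR_INCREFS/BR_ACQUIRE/BR_DECREFS/BR_RELEASE, BR_TRANSACTION, BR_... } (note that BR_REPLY is not included). After a worker thread calls joinThreadPool it blocks reading for commands, so whatever talkWithDriver returns must be a command in S and only needs to be passed to executeCommand.

When a requester thread sends a command or a worker thread sends a reply, the result of the write must be checked after the data is written; waitForResponse checks the explicitly listed BR commands, the set W = { BR_DEAD_REPLY, BR_FAILED_REPLY, BR_ACQUIRE_RESULT, BR_REPLY }.

BR_NOOP may serve as a marker to delimit something, or perhaps as a future hook.

The response a transaction requester thread waits for has two parts: whether the transaction write succeeded (take this loosely for now), and the BR_REPLY packet. Two cases are more complicated. First, when ServiceManager::addService is called to register a binder node, or an event sink binder component is passed to the service side, waitForResponse -> executeCommand(BR_INCREFS/BR_ACQUIRE) is needed. Second, for a crossed (nested) transaction, waitForResponse -> executeCommand(BR_TRANSACTION) is needed.

A worker thread that returns an anonymous binder component to the requester obviously also needs executeCommand(BR_INCREFS/BR_ACQUIRE), and for a crossed transaction it also needs waitForResponse { handle BR_REPLY }. Normally BR_TRANSACTION is handled in binder worker thread W1 and BR_REPLY in requester thread T1. In a crossed, nested binder call the roles are swapped: BR_TRANSACTION is handled in T1 and BR_REPLY in W1. This of course relies on the binder driver finding and waking the right thread; only then does the nested transaction handling in user space take place.

Because of these cases, the source contains places where executeCommand and waitForResponse call each other in a nested fashion. For the exact flow in each case, refer to the source.

Brief notes on important functions

This section keeps the description from the initial version of this document, without additions.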

IPCThreadState* IPCThreadState::self()

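Returns the object that represents the calling thread; static code locates the per-thread instance. Implementation: the first call within the thread group allocates a TLS slot, and each thread stores its IPCThreadState* this in it. Every later self() simply fetches and returns it.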
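The per-thread singleton idea can be shown in a few lines of standalone C++. Note the swapped-in technique: this sketch uses the C++11 thread_local keyword instead of an explicitly allocated TLS slot, and ThreadStateSketch with its pendingCommands field is a hypothetical stand-in, not the IPCThreadState class.

```cpp
// Hypothetical per-thread state object.
class ThreadStateSketch {
public:
    // Each thread gets its own instance, constructed on that thread's first call.
    static ThreadStateSketch* self() {
        thread_local ThreadStateSketch instance;
        return &instance;
    }

    int pendingCommands = 0;  // example of state that must not be shared across threads

private:
    ThreadStateSketch() = default;  // only self() may create instances
};
```

Because every thread sees only its own instance, the input and output buffers kept in such an object need no locking.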

void IPCThreadState::joinThreadPool(bool isMain)

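Worker threads use this function to join the thread pool. Inside the loop, it first processes the deferred reference-count decrements. Why are decrements deferred? Because when executeCommand handles a decrement command it does not decrement immediately but postpones the operation.

The main part is then talkWithDriver; if data is received, executeCommand is called to process it.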
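The deferred-decrement pattern can be sketched independently of the binder classes. The names DerefQueueSketch and CountedSketch are hypothetical, and the "input fully consumed" test is modelled on the comparison of read position and data size that the loop performs.

```cpp
#include <cstddef>
#include <vector>

struct CountedSketch {
    int weakCount = 1;  // stand-in for a reference count
};

class DerefQueueSketch {
public:
    // Called while a decrement command is being handled: only remember the target.
    void defer(CountedSketch* target) { pending_.push_back(target); }

    // Called at the top of the loop. Applies the decrements only when every
    // command already received has been consumed; returns how many were applied.
    std::size_t flushIfIdle(std::size_t readPos, std::size_t dataSize) {
        if (readPos < dataSize) return 0;  // commands still waiting to be processed
        const std::size_t n = pending_.size();
        for (CountedSketch* t : pending_) --t->weakCount;
        pending_.clear();
        return n;
    }

private:
    std::vector<CountedSketch*> pending_;
};
```

Postponing the decrement keeps an object alive while later commands in the same batch may still refer to it.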

void setTheContextObject(sp<BBinder> obj)

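Calling this function makes the caller the system-wide unique ServiceManager; the_context_object is a global variable. However, the system's ServiceManager is not set through this function but through ProcessState::setContextObject(const sp<IBinder>& object); this function is never called. How then should the if (tr.target.ptr) branch for BR_TRANSACTION in executeCommand() be explained? Even for ServiceManager, tr.target.ptr always points to a valid BBinder object at that point and is never zero, unless perhaps ProcessState::setContextObject(NULL) has been called.

After run is entered, the joinThreadPool loop is entered. If, after one pass of the loop,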

// Let this thread exit the thread pool if it is no longer

// needed and it is not the main process thread.

if(result == TIMED_OUT && !isMain)

break;

then the loop exits, proc->setContextObject(NULL); is reached, and a new ServiceManager has to be set. In practice this does not happen, because the break requires both TIMED_OUT and !isMain, while run() calls IPCThreadState::self()->joinThreadPool(), whose default argument is isMain = true.

status_t IPCThreadState::talkWithDriver(bool doReceive)

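Calls ioctl to send and receive data. When doReceive is true and the data in the input buffer has been used up, it reads data and piggybacks any pending outgoing data. When doReceive is true and the input buffer still holds enough unread data, it neither reads nor sends and returns immediately. To send data right away, call it with doReceive=false, as flushCommands does.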
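The three cases can be captured in a small pure function. This is a sketch of the decision only, under the assumption that "input used up" means the read position has reached the data size; IoPlanSketch, planIo and shouldCallDriver are hypothetical names and no ioctl is issued.

```cpp
#include <cstddef>

struct IoPlanSketch {
    std::size_t writeSize;  // bytes of queued output handed to the driver
    std::size_t readSize;   // bytes of input buffer offered to the driver
};

IoPlanSketch planIo(bool doReceive, std::size_t inPos, std::size_t inSize,
                    std::size_t outSize, std::size_t inCapacity) {
    const bool needRead = inPos >= inSize;  // all buffered input has been consumed
    IoPlanSketch plan;
    // Send queued output unless unread input is still waiting to be processed.
    plan.writeSize = (!doReceive || needRead) ? outSize : 0;
    // Read only when the caller wants data and nothing is left in the buffer.
    plan.readSize = (doReceive && needRead) ? inCapacity : 0;
    return plan;
}

// With nothing to write and nothing to read, the system call can be skipped.
bool shouldCallDriver(const IoPlanSketch& plan) {
    return plan.writeSize != 0 || plan.readSize != 0;
}
```

Skipping the call when both sizes are zero is one of the ways the number of system calls is kept down.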

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

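Called after a transaction has written its data, to wait for the reply. When the initiator calls it after writeTransactionData, responses from the binder driver are handled directly; commands received while acting as a responder to another initiator are passed to executeCommand. Note that it can be nested inside executeCommand.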

status_t IPCThreadState::executeCommand(int32_t cmd)

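Handles the BR_TRANSACTION command, which the binder driver delivers after converting the BC_TRANSACTION sent by the initiator, as well as the BR_XXXXX commands issued by the binder driver itself; the command set is as described earlier. For BR_TRANSACTION it calls BBinder::transact(), which in turn calls BBinder::onTransact().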
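
The transact() to onTransact() hand-off is a fixed entry point forwarding to an overridable hook. The sketch below shows only that shape; `LocalObject`, `EchoService`, the status constants, and the use of std::string in place of Parcel are all assumptions made for illustration and do not reproduce the BBinder interface.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical status codes for this sketch only.
const int kOk = 0;
const int kUnknownTransaction = -1;

// Hypothetical stand-in for a local binder object.
class LocalObject {
public:
    virtual ~LocalObject() = default;

    // Fixed entry point used by the dispatcher; forwards to the overridable hook.
    int transact(std::uint32_t code, const std::string& data, std::string* reply) {
        assert(reply != nullptr);
        reply->clear();
        return onTransact(code, data, reply);
    }

protected:
    // Default hook: no transaction codes are understood.
    virtual int onTransact(std::uint32_t, const std::string&, std::string*) {
        return kUnknownTransaction;
    }
};

// A service overrides only the hook and handles the codes it knows.
class EchoService : public LocalObject {
public:
    enum : std::uint32_t { kEcho = 1 };

protected:
    int onTransact(std::uint32_t code, const std::string& data,
                   std::string* reply) override {
        if (code == kEcho) {
            *reply = data;  // echo the request back
            return kOk;
        }
        return LocalObject::onTransact(code, data, reply);
    }
};
```

The dispatcher only ever calls transact(); which onTransact() runs is decided by the concrete service type, and unknown codes fall through to the base class.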

void IPCThreadState::freeBuffer(……)

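Releases the buffer inside the mmap region that the kernel writes and user space reads (binder_transaction_data). The buffer is either released once it has been used while handling BR_TRANSACTION and BR_REPLY, or freeBuffer is installed as the Parcel's mOwner callback (set when the data is attached to the Parcel through Parcel::ipcSetDataReference()) and is then called automatically when the Parcel cleans up its data, which keeps the data in the Parcel in sync with the data in the mmap buffer. It has one more important role: when the kernel frees the buffer, it decrements the binder reference counts.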
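
The owner-callback arrangement can be sketched as follows. This is a simplified model, not the Parcel API: `BorrowedParcel`, `ReleaseFunc`, `setDataReference`, and `countRelease` are hypothetical names, and the callback signature is reduced to the minimum needed to show when the release happens.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical release callback type, loosely modelled on the Parcel owner hook.
using ReleaseFunc = void (*)(const std::uint8_t* data, std::size_t size, void* cookie);

// Hypothetical parcel that borrows a buffer it does not own.
class BorrowedParcel {
public:
    BorrowedParcel() = default;
    BorrowedParcel(const BorrowedParcel&) = delete;
    BorrowedParcel& operator=(const BorrowedParcel&) = delete;
    ~BorrowedParcel() { freeData(); }

    // Attach externally owned data; `owner` runs when the parcel lets go of it.
    void setDataReference(const std::uint8_t* data, std::size_t size,
                          ReleaseFunc owner, void* cookie) {
        freeData();  // hand back any buffer attached earlier
        data_ = data;
        size_ = size;
        owner_ = owner;
        cookie_ = cookie;
    }

    // Give the buffer back to its owner exactly once.
    void freeData() {
        if (owner_ != nullptr) owner_(data_, size_, cookie_);
        data_ = nullptr;
        size_ = 0;
        owner_ = nullptr;
        cookie_ = nullptr;
    }

    std::size_t dataSize() const { return size_; }

private:
    const std::uint8_t* data_ = nullptr;
    std::size_t size_ = 0;
    ReleaseFunc owner_ = nullptr;
    void* cookie_ = nullptr;
};

// Example owner: counts how many buffers were handed back.
inline void countRelease(const std::uint8_t*, std::size_t, void* cookie) {
    ++*static_cast<int*>(cookie);
}
```

Tying the release to the parcel's cleanup means the borrowed buffer cannot outlive, or be released before, the object that reads from it.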

status_t IPCThreadState::writeTransactionData(int32_t cmd, ……)

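Sends data to the peer. When this side is the initiator of the exchange it uses the BC_TRANSACTION cmd; when it is the responder answering the initiator (sendReply()) it uses the BC_REPLY cmd. writeTransactionData only writes the outgoing data into the Parcel mOut output buffer, to be sent on the next round of talkWithDriver.

Reference-count commands that control object lifetime and one-way commands that expect no reply (TF_ONE_WAY) do not need to be sent immediately. For a command that needs a reply, waitForResponse is called right after writeTransactionData and calls talkWithDriver(); because it has to receive, the data is sent out immediately and the thread blocks waiting for the peer's reply.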

A rough block diagram of client and server communication follows:

Appendix: annotated code

void IPCThreadState::joinThreadPool(bool isMain) // joinThreadPool is the body of a PoolThread

{

LOG_THREADPOOL("**** THREAD %p (PID %d) IS JOINING THE THREAD POOL\n", (void*)pthread_self(), getpid());

mOut.writeInt32(isMain ? BC_ENTER_LOOPER : BC_REGISTER_LOOPER);

// The main pool thread and spawned pool threads notify the driver with different commands.

// This thread may have been spawned by a thread that was in the background

// scheduling group, so first we will make sure it is in the default/foreground

// one to avoid performing an initial transaction in the background.

androidSetThreadSchedulingGroup(mMyThreadId, ANDROID_TGROUP_DEFAULT);

// Put the thread in the default (foreground) scheduling group so that user-facing work is served first.

status_t result;

do {

int32_t cmd;

//When we've cleared the incoming command queue, process any pending derefs

if (mIn.dataPosition() >= mIn.dataSize()) {

size_t numPending = mPendingWeakDerefs.size();

if (numPending > 0) {

for (size_t i = 0; i < numPending; i++) {

RefBase::weakref_type* refs = mPendingWeakDerefs[i];

refs->decWeak(mProcess.get());

}

mPendingWeakDerefs.clear();

}

numPending = mPendingStrongDerefs.size();

if (numPending > 0) {

for (size_t i = 0; i < numPending; i++) {

BBinder* obj = mPendingStrongDerefs[i];

obj->decStrong(mProcess.get());

}

mPendingStrongDerefs.clear();

}

}

// now get the next command to be processed, waiting if necessary

result = talkWithDriver();

if (result >= NO_ERROR) {

size_t IN = mIn.dataAvail();

if (IN < sizeof(int32_t)) continue;

cmd = mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing top-level Command: "

<< getReturnString(cmd) << endl;

}

result = executeCommand(cmd);

}

// After executing the command, ensure that the thread is returned to the

// default cgroup before rejoining the pool. The driver takes care of

// restoring the priority, but doesn't do anything with cgroups so we

// need to take care of that here in userspace. Note that we do make

// sure to go in the foreground after executing a transaction, but

// there are other callbacks into user code that could have changed

// our group so we want to make absolutely sure it is put back.

androidSetThreadSchedulingGroup(mMyThreadId, ANDROID_TGROUP_DEFAULT);

// Let this thread exit the thread pool if it is no longer

// needed and it is not the main process thread.

if(result == TIMED_OUT && !isMain) {

break;

}

} while (result != -ECONNREFUSED && result != -EBADF);

LOG_THREADPOOL("**** THREAD %p (PID %d) IS LEAVING THE THREAD POOL err=%p\n",

(void*)pthread_self(), getpid(), (void*)result);

mOut.writeInt32(BC_EXIT_LOOPER);

talkWithDriver(false);

}

status_t IPCThreadState::talkWithDriver(bool doReceive /* = true */) // I/O; the parameter selects the I/O direction.

{

LOG_ASSERT(mProcess->mDriverFD >= 0, "Binder driver is not opened");

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

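// Read only when the read position has passed the end of the buffered data, which is more efficient.

// Normally a command code never arrives with half a packet; the buffer holds whole command packets, enough for one command-processing loop.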

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

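// Data is written when either of two conditions holds:

// 1. doReceive is false, i.e. this is a write operation;

// 2. needRead is true: commands are written out together with the read (a deferred, piggybacked write),

// which saves ioctl system calls; conversely, reading a response requires that all earlier commands have been sent.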

bwr.write_size = outAvail;

bwr.write_buffer = (long unsigned int)mOut.data();

// This is what we'll read.

// A read was requested; combine that with whether a read is actually needed.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (long unsigned int)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

if (outAvail != 0) {

alog << "Sending commands to driver: " << indent;

const void* cmds = (const void*)bwr.write_buffer;

const void* end = ((const uint8_t*)cmds)+bwr.write_size;

alog << HexDump(cmds, bwr.write_size) << endl;

while (cmds < end) cmds = printCommand(alog, cmds);

alog << dedent;

}

alog << "Size of receive buffer: " << bwr.read_size

<< ", needRead: " << needRead << ", doReceive: " << doReceive << endl;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(HAVE_ANDROID_OS)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

IF_LOG_COMMANDS() {

alog << "Our err: " << (void*)err << ", write consumed: "

<< bwr.write_consumed << " (of " << mOut.dataSize()

<< "), read consumed: " << bwr.read_consumed << endl;

}

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < (ssize_t)mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

alog << "Remaining data size: " << mOut.dataSize() << endl;

alog << "Received commands from driver: " << indent;

const void* cmds = mIn.data();

const void* end = mIn.data() + mIn.dataSize();

alog << HexDump(cmds, mIn.dataSize()) << endl;