MediaPlayer analysis

mediaplayer.cpp:

154 status_t MediaPlayer::setDataSource(

155 const char *url, const KeyedVector<String8, String8> *headers)

156 {

157 LOGV("setDataSource(%s)", url);

158 status_t err = BAD_VALUE;

159 if (url != NULL) {

160 const sp<IMediaPlayerService>& service(getMediaPlayerService());

161 if (service != 0) {

162 sp<IMediaPlayer> player(service->create(getpid(), this, mAudioSessionId));

163 if (NO_ERROR != player->setDataSource(url, headers)) {

164 player.clear();

165 }

166 /* add by Gary. start {{----------------------------------- */

167 /* 2011-9-28 16:28:24 */

168 /* save properties before creating the real player */

169 if(player != 0) {

170 //player->setSubGate(mSubGate);

171 player->setSubColor(mSubColor);

172 player->setSubFrameColor(mSubFrameColor);

173 player->setSubPosition(mSubPosition);

174 player->setSubDelay(mSubDelay);

175 player->setSubFontSize(mSubFontSize);

176 player->setSubCharset(mSubCharset);

177 player->switchSub(mSubIndex);

178 player->switchTrack(mTrackIndex);

179 player->setChannelMuteMode(mMuteMode); // 2012-03-07, set audio channel mute

180 }

181 /* add by Gary. end -----------------------------------}} */

182 err = attachNewPlayer(player);

Line 162 assigns player by calling create on the remote MediaPlayerService (over Binder), so let's look at MediaPlayerService.cpp:

395 sp<IMediaPlayer> MediaPlayerService::create(pid_t pid, const sp<IMediaPlayerClient>& client,

396 int audioSessionId)

397 {

398 int32_t connId = android_atomic_inc(&mNextConnId);

399

400 sp<Client> c = new Client(

401 this, pid, connId, client, audioSessionId,

402 IPCThreadState::self()->getCallingUid());

403

404 LOGV("Create new client(%d) from pid %d, uid %d, ", connId, pid,

405 IPCThreadState::self()->getCallingUid());

406 /* add by Gary. start {{----------------------------------- */

407 c->setScreen(mScreen);

408 /* add by Gary. end -----------------------------------}} */

409

410 /* add by Gary. start {{----------------------------------- */

411 /* 2011-11-14 */

412 /* support adjusting colors while playing video */

413 c->setVppGate(mVppGate);

414 c->setLumaSharp(mLumaSharp);

415 c->setChromaSharp(mChromaSharp);

416 c->setWhiteExtend(mWhiteExtend);

417 c->setBlackExtend(mBlackExtend);

418 c->setBlackExtend(mBlackExtend);

419 c->setSubGate(mGlobalSubGate); // 2012-03-12, add the global interfaces to control the subtitle gate

420 /* add by Gary. end -----------------------------------}} */

421

422

423 wp<Client> w = c;

424 {

425 Mutex::Autolock lock(mLock);

426 mClients.add(w);

427 }

428 return c;

429 }

Line 400 creates a Client. But what exactly is client, the fourth argument passed in here?

627 MediaPlayerService::Client::Client(

628 const sp<MediaPlayerService>& service, pid_t pid,

629 int32_t connId, const sp<IMediaPlayerClient>& client,

630 int audioSessionId, uid_t uid)

631 {

632 LOGV("Client(%d) constructor", connId);

633 mPid = pid;

634 mConnId = connId;

635 mService = service;

636 mClient = client;

637 mLoop = false;

638 mStatus = NO_INIT;

639 mAudioSessionId = audioSessionId;

640 mUID = uid;

641

642 /* add by Gary. start {{----------------------------------- */

643 mHasSurface = 0;

644 LOGV("mHasSurface is inited as 0");

645 /* add by Gary. end -----------------------------------}} */

646 /* add by Gary. start {{----------------------------------- */

647 /* 2011-9-28 16:28:24 */

648 /* save properties before creating the real player */

649 mSubGate = true;

650 mSubColor = 0xFFFFFFFF;

651 mSubFrameColor = 0xFF000000;

652 mSubPosition = 0;

653 mSubDelay = 0;

654 mSubFontSize = 24;

655 strcpy(mSubCharset, CHARSET_GBK);

656 mSubIndex = 0;

657 mTrackIndex = 0;

658 mMuteMode = AUDIO_CHANNEL_MUTE_NONE; // 2012-03-07, set audio channel mute

659 /* add by Gary. end -----------------------------------}} */

660

661 /* add by Gary. start {{----------------------------------- */

662 /* 2011-11-14 */

663 /* support scale mode */

664 mEnableScaleMode = false;

It looks like we have to go into IMediaPlayerService.cpp to find out what this client parameter is:

333 status_t BnMediaPlayerService::onTransact(

334 uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

335 {

336 switch(code) {

337 case CREATE: {

338 CHECK_INTERFACE(IMediaPlayerService, data, reply);

339 pid_t pid = data.readInt32();

340 sp<IMediaPlayerClient> client =

341 interface_cast<IMediaPlayerClient>(data.readStrongBinder());

342 int audioSessionId = data.readInt32();

343 sp<IMediaPlayer> player = create(pid, client, audioSessionId);

344 reply->writeStrongBinder(player->asBinder());

345 return NO_ERROR;

346 } break;

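Line 341 is Binder communication again (tedious!). Expanded, it ends up as new BpMediaPlayerClient(new BpBinder(handle)), where handle refers to the Binder object of the app-side MediaPlayer. So besides IMediaPlayerService.cpp (and IMediaPlayer.cpp) there is also IMediaPlayerClient.cpp. What does that tell us? MediaPlayer calls into MediaPlayerService, and conversely MediaPlayerService also calls into MediaPlayer.

To confirm, check whether MediaPlayer inherits from a Bnxxx class: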

172 class MediaPlayer : public BnMediaPlayerClient,

173 public virtual IMediaDeathNotifier

174 {

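Sure enough, it inherits from BnMediaPlayerClient. To be precise, then: calls that MediaPlayerService makes on its proxy arrive in BnMediaPlayerClient's onTransact method on the MediaPlayer side.

So, back to the question above: the client parameter is a BpMediaPlayerClient proxy.

Isn't this like a callback in a driver? The client registers itself with the service and at the same time hands the service a handle. The service coordinates the resources and, in the end, uses that handle to drive the client in return.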
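Leaving Binder out, the two-way relationship can be sketched in plain C++: the app-side object passes itself to a create() call, the service-side object stores that interface, and later calls back through it. All names here (PlayerClientCallback, ServiceSideClient, createClient, AppPlayer) are hypothetical stand-ins, and notify() is only loosely modeled on IMediaPlayerClient; in the real code the stored pointer is a BpMediaPlayerClient proxy and each call crosses a process boundary.

```cpp
#include <cassert>
#include <memory>
#include <vector>

// Hypothetical stand-in for IMediaPlayerClient: the callback interface the
// service uses to talk back to the app-side player.
struct PlayerClientCallback {
    virtual ~PlayerClientCallback() = default;
    virtual void notify(int msg, int ext1, int ext2) = 0;
};

// Hypothetical stand-in for MediaPlayerService::Client: it keeps the
// callback it was handed at creation time (like mClient = client).
class ServiceSideClient {
public:
    explicit ServiceSideClient(std::shared_ptr<PlayerClientCallback> cb)
        : mClient(std::move(cb)) {}
    // Something happens inside the service (e.g. prepare finished) and the
    // service calls back into the app through the stored interface.
    void onPlayerEvent(int msg) {
        if (mClient) mClient->notify(msg, 0, 0);
    }
private:
    std::shared_ptr<PlayerClientCallback> mClient;
};

// Hypothetical stand-in for MediaPlayerService::create(): the app passes
// itself in and gets its service-side counterpart back.
std::shared_ptr<ServiceSideClient> createClient(
        std::shared_ptr<PlayerClientCallback> self) {
    return std::make_shared<ServiceSideClient>(std::move(self));
}

// Hypothetical app-side player, analogous to MediaPlayer : BnMediaPlayerClient.
struct AppPlayer : PlayerClientCallback {
    std::vector<int> received;
    void notify(int msg, int, int) override { received.push_back(msg); }
};
```

The app registers once and never polls: every later event flows from the service back through the handle it was given.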

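Back to the beginning: in MediaPlayer::setDataSource, player ends up referring to a MediaPlayerService::Client object (through a Binder proxy on the app side). Next, line 163 calls player->setDataSource, which lands in: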

919 status_t MediaPlayerService::Client::setDataSource(

920 const char *url, const KeyedVector<String8, String8> *headers)

921 {

922 LOGV("setDataSource(%s)", url);

923 if (url == NULL)

924 return UNKNOWN_ERROR;

925

926 if ((strncmp(url, "http://", 7) == 0) ||

927 (strncmp(url, "https://", 8) == 0) ||

928 (strncmp(url, "rtsp://", 7) == 0)) {

929 if (!checkPermission("android.permission.INTERNET")) {

930 return PERMISSION_DENIED;

931 }

932 }

933

934 if (strncmp(url, "content://", 10) == 0) {

935 // get a filedescriptor for the content Uri and

936 // pass it to the setDataSource(fd) method

937

938 String16 url16(url);

939 int fd = android::openContentProviderFile(url16);

940 if (fd < 0)

941 {

942 LOGE("Couldn't open fd for %s", url);

943 return UNKNOWN_ERROR;

944 }

945 setDataSource(fd, 0, 0x7fffffffffLL); // this sets mStatus

946 close(fd);

947 return mStatus;

948 } else {

949 player_type playerType = getPlayerType(url);

950 LOGV("player type = %d", playerType);

951

952 // create the right type of player

953 sp<MediaPlayerBase> p = createPlayer(playerType);

954 if (p == NULL) return NO_INIT;

955

956 if (!p->hardwareOutput()) {

957 mAudioOutput = new AudioOutput(mAudioSessionId);

958 static_cast<MediaPlayerInterface*>(p.get())->setAudioSink(mAudioOutput);

959 }

........................................

992 p->setScreen(mScreen);

993 p->setVppGate(mVppGate);

994 p->setLumaSharp(mLumaSharp);

995 p->setChromaSharp(mChromaSharp);

996 p->setWhiteExtend(mWhiteExtend);

997 p->setBlackExtend(mBlackExtend);

998 /* add by Gary. end -----------------------------------}} */

999

1000 // now set data source

1001 LOGV(" setDataSource");

1002 mStatus = p->setDataSource(url, headers);

1003 if (mStatus == NO_ERROR) {

1004 mPlayer = p;

1005 } else {

1006 LOGE(" error: %d", mStatus);

1007 }

1008 return mStatus;

1009 }

1010 }
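The branch order of Client::setDataSource above (network schemes need the INTERNET permission, content:// URIs are turned into a file descriptor, everything else goes straight to a player) can be summarized as a small routing function. This is a sketch only: the SourceRoute enum and routeDataSource name are hypothetical, and the permission check is reduced to a boolean parameter.

```cpp
#include <cassert>
#include <cstring>

// Hypothetical outcomes for this sketch only.
enum class SourceRoute { Rejected, PermissionDenied, ContentFd, DirectUrl };

// Mirrors the order of checks in Client::setDataSource(url, headers).
SourceRoute routeDataSource(const char* url, bool hasInternetPermission) {
    if (url == nullptr) return SourceRoute::Rejected;          // UNKNOWN_ERROR
    const bool isNetwork = std::strncmp(url, "http://", 7) == 0 ||
                           std::strncmp(url, "https://", 8) == 0 ||
                           std::strncmp(url, "rtsp://", 7) == 0;
    if (isNetwork && !hasInternetPermission)
        return SourceRoute::PermissionDenied;                   // PERMISSION_DENIED
    if (std::strncmp(url, "content://", 10) == 0)
        return SourceRoute::ContentFd;                          // open fd, setDataSource(fd, ...)
    return SourceRoute::DirectUrl;                              // getPlayerType + createPlayer
}
```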

Line 949, getPlayerType, looks up the file extension of the resource in a predefined array:

237 extmap FILE_EXTS [] = {

238 {".ogg", STAGEFRIGHT_PLAYER},

239 {".mp3", STAGEFRIGHT_PLAYER},

240 {".wav", STAGEFRIGHT_PLAYER},

241 {".amr", STAGEFRIGHT_PLAYER},

242 {".flac", STAGEFRIGHT_PLAYER},

243 {".m4a", STAGEFRIGHT_PLAYER},

244 {".m4r", STAGEFRIGHT_PLAYER},

245 {".out", STAGEFRIGHT_PLAYER},

246 {".mp3?nolength", STAGEFRIGHT_PLAYER},

247 {".ogg?nolength", STAGEFRIGHT_PLAYER},

248 //{".3gp", STAGEFRIGHT_PLAYER},

...................................

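If a match is found, its player type is returned; otherwise a default type is returned. In this tree the default is CEDARX_PLAYER, with STAGEFRIGHT_PLAYER commented out: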

714 static player_type getDefaultPlayerType() {

715 return CEDARX_PLAYER;

716 //return STAGEFRIGHT_PLAYER;

717 }

Next, line 953, createPlayer:

900 sp<MediaPlayerBase> MediaPlayerService::Client::createPlayer(player_type playerType)

901 {

902 // determine if we have the right player type

903 sp<MediaPlayerBase> p = mPlayer;

904 if ((p != NULL) && (p->playerType() != playerType)) {

905 LOGV("delete player");

906 p.clear();

907 }

908 if (p == NULL) {

909 p = android::createPlayer(playerType, this, notify);

910 }

911

912 if (p != NULL) {

913 p->setUID(mUID);

914 }

915

916 return p;

917 }

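On the first call mPlayer is still empty, so android::createPlayer is executed. Despite the name, this is not a member of the MediaPlayerService class but a file-level static function in MediaPlayerService.cpp (note the static keyword and the missing class qualifier):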

854 static sp<MediaPlayerBase> createPlayer(player_type playerType, void* cookie,

855 notify_callback_f notifyFunc)

856 {

857 sp<MediaPlayerBase> p;

858 switch (playerType) {

859 case CEDARX_PLAYER:

860 LOGV(" create CedarXPlayer");

861 p = new CedarPlayer;

862 break;

863 case CEDARA_PLAYER:

864 LOGV(" create CedarAPlayer");

865 p = new CedarAPlayerWrapper;

866 break;

867 case SONIVOX_PLAYER:

868 LOGV(" create MidiFile");

869 p = new MidiFile();

870 break;

871 case STAGEFRIGHT_PLAYER:

872 LOGV(" create StagefrightPlayer");

873 p = new StagefrightPlayer;

874 break;

875 case NU_PLAYER:

876 LOGV(" create NuPlayer");

877 p = new NuPlayerDriver;

878 break;

879 case TEST_PLAYER:

880 LOGV("Create Test Player stub");

881 p = new TestPlayerStub();

882 break;

883 default:

884 LOGE("Unknown player type: %d", playerType);

885 return NULL;

886 }

887 if (p != NULL) {

888 if (p->initCheck() == NO_ERROR) {

889 p->setNotifyCallback(cookie, notifyFunc);

890 } else {

891 p.clear();

892 }
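Together, Client::createPlayer and android::createPlayer implement "reuse the current player if it already has the requested type, otherwise build one with a switch-based factory and discard it if initCheck() fails". A minimal sketch of that logic follows; PlayerType, PlayerBase, SimplePlayer, makePlayer and createOrReuse are hypothetical names, and std::shared_ptr stands in for Android's sp<>.

```cpp
#include <cassert>
#include <memory>

// Hypothetical, trimmed-down player types for illustration.
enum PlayerType { STAGEFRIGHT, NU, SONIVOX, TEST, UNKNOWN };

struct PlayerBase {
    virtual ~PlayerBase() = default;
    virtual PlayerType playerType() const = 0;
    virtual bool initCheck() const { return true; }  // true plays the role of NO_ERROR
};

template <PlayerType T>
struct SimplePlayer : PlayerBase {
    PlayerType playerType() const override { return T; }
};

// Counterpart of the file-static android::createPlayer(): a switch-based
// factory that returns null for unknown types or a failed initCheck().
std::shared_ptr<PlayerBase> makePlayer(PlayerType type) {
    std::shared_ptr<PlayerBase> p;
    switch (type) {
        case STAGEFRIGHT: p = std::make_shared<SimplePlayer<STAGEFRIGHT>>(); break;
        case NU:          p = std::make_shared<SimplePlayer<NU>>(); break;
        case SONIVOX:     p = std::make_shared<SimplePlayer<SONIVOX>>(); break;
        case TEST:        p = std::make_shared<SimplePlayer<TEST>>(); break;
        default:          return nullptr;  // "Unknown player type"
    }
    if (!p->initCheck()) p.reset();
    return p;
}

// Counterpart of Client::createPlayer(): keep the current player if its type
// matches, otherwise drop it and build a new one.
std::shared_ptr<PlayerBase> createOrReuse(std::shared_ptr<PlayerBase> current,
                                          PlayerType type) {
    if (current && current->playerType() != type) current.reset();
    if (!current) current = makePlayer(type);
    return current;
}
```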

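The player types in createPlayer are as follows. This describes the stock Android rules; in this tree, files that match nothing fall back to CEDARX_PLAYER instead, as getDefaultPlayerType above shows.

TEST_PLAYER: URLs that start with test:, e.g. test:xxx

NU_PLAYER: URLs that start with http:// or https:// and either end with .m3u8 or contain the string m3u8

SONIVOX_PLAYER: media files whose URL extension is .mid, .midi, .smf, .xmf, .imy, .rtttl, .rtx or .ota

STAGEFRIGHT_PLAYER: the good Samaritan. Anything the three above can't handle is handed to it; whether it is really that capable remains to be seen.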
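The four rules above can be sketched as a single classification function. This is an illustration of the rules as described, not the real getPlayerType: the enum, helper names and the case-insensitive extension match are assumptions, and the real code also consults the FILE_EXTS table shown earlier.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>

enum PlayerKind { TEST_KIND, NU_KIND, SONIVOX_KIND, STAGEFRIGHT_KIND };

static bool startsWith(const std::string& s, const std::string& prefix) {
    return s.compare(0, prefix.size(), prefix) == 0;
}

// Assumption: extension matching ignores case.
static bool endsWithIgnoreCase(const std::string& s, const std::string& suffix) {
    if (suffix.size() > s.size()) return false;
    return std::equal(suffix.rbegin(), suffix.rend(), s.rbegin(),
                      [](char a, char b) {
                          return std::tolower(static_cast<unsigned char>(a)) ==
                                 std::tolower(static_cast<unsigned char>(b));
                      });
}

PlayerKind classifyUrl(const std::string& url) {
    if (startsWith(url, "test:")) return TEST_KIND;
    if ((startsWith(url, "http://") || startsWith(url, "https://")) &&
        url.find("m3u8") != std::string::npos)  // "contains m3u8" also covers ".m3u8" endings
        return NU_KIND;
    static const char* const kMidiExts[] = {".mid", ".midi", ".smf", ".xmf",
                                            ".imy", ".rtttl", ".rtx", ".ota"};
    for (const char* ext : kMidiExts)
        if (endsWithIgnoreCase(url, ext)) return SONIVOX_KIND;
    return STAGEFRIGHT_KIND;  // stock fallback; this tree defaults to CEDARX_PLAYER
}
```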

Let's take STAGEFRIGHT_PLAYER as the example. Its constructor in StagefrightPlayer.cpp:

30 StagefrightPlayer::StagefrightPlayer()

31 : mPlayer(new AwesomePlayer) {

32 LOGV("StagefrightPlayer");

33

34 mPlayer->setListener(this);

35 }

Line 31 creates an AwesomePlayer; in AwesomePlayer.cpp:

182 AwesomePlayer::AwesomePlayer()

183 : mQueueStarted(false),

184 mUIDValid(false),

185 mTimeSource(NULL),

186 mVideoRendererIsPreview(false),

187 mAudioPlayer(NULL),

188 mDisplayWidth(0),

189 mDisplayHeight(0),

190 mFlags(0),

191 mExtractorFlags(0),

192 mVideoBuffer(NULL),

193 mDecryptHandle(NULL),

194 mLastVideoTimeUs(-1),

195 mTextPlayer(NULL) {

196 CHECK_EQ(mClient.connect(), (status_t)OK);

197

198 DataSource::RegisterDefaultSniffers();

199

200 mVideoEvent = new AwesomeEvent(this, &AwesomePlayer::onVideoEvent);

201 mVideoEventPending = false;

202 mStreamDoneEvent = new AwesomeEvent(this, &AwesomePlayer::onStreamDone);

203 mStreamDoneEventPending = false;

204 mBufferingEvent = new AwesomeEvent(this, &AwesomePlayer::onBufferingUpdate);

205 mBufferingEventPending = false;

206 mVideoLagEvent = new AwesomeEvent(this, &AwesomePlayer::onVideoLagUpdate);

207 mVideoEventPending = false;

208

209 mCheckAudioStatusEvent = new AwesomeEvent(

210 this, &AwesomePlayer::onCheckAudioStatus);

211

212 mAudioStatusEventPending = false;

213

214 reset();

215 }

We'll skip the functions called in here for now and come back to them when they are used. Continuing, line 34 calls setListener:

244 void AwesomePlayer::setListener(const wp<MediaPlayerBase> &listener) {

245 Mutex::Autolock autoLock(mLock);

246 mListener = listener;

247 }
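Note the ownership shape here: StagefrightPlayer holds its AwesomePlayer strongly (mPlayer), while AwesomePlayer holds its listener only as a wp<> (weak pointer), so the two objects do not keep each other alive. A sketch of the same idea with std::shared_ptr/std::weak_ptr follows; Listener, Engine and Wrapper are hypothetical names. One difference from the original: with standard smart pointers shared_from_this() cannot be used inside the constructor, so the sketch registers the listener in a separate attach() step.

```cpp
#include <cassert>
#include <memory>

struct Listener {
    virtual ~Listener() = default;
    virtual void sendEvent(int msg) = 0;
};

// Plays the role of AwesomePlayer: only a weak reference back to its owner.
class Engine {
public:
    void setListener(const std::weak_ptr<Listener>& l) { mListener = l; }
    // Returns false if the owner has already been destroyed.
    bool notify(int msg) {
        if (auto l = mListener.lock()) { l->sendEvent(msg); return true; }
        return false;
    }
private:
    std::weak_ptr<Listener> mListener;
};

// Plays the role of StagefrightPlayer: owns the engine strongly.
struct Wrapper : Listener, std::enable_shared_from_this<Wrapper> {
    std::shared_ptr<Engine> engine = std::make_shared<Engine>();
    int lastMsg = 0;
    void attach() { engine->setListener(std::weak_ptr<Listener>(shared_from_this())); }
    void sendEvent(int msg) override { lastMsg = msg; }
};
```

Because the back reference is weak, destroying the wrapper really frees it, and the engine simply finds no listener afterwards.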

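Back in player->setDataSource: at line 957, if the player has no hardware output, new AudioOutput(mAudioSessionId) creates an audio sink and hands it to the player through setAudioSink, so this is where the audio output is prepared.

Line 1002, p->setDataSource: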

56 status_t StagefrightPlayer::setDataSource(

57 const char *url, const KeyedVector<String8, String8> *headers) {

58 return mPlayer->setDataSource(url, headers);

59 }

----------------->

256 status_t AwesomePlayer::setDataSource(

257 const char *uri, const KeyedVector<String8, String8> *headers) {

258 Mutex::Autolock autoLock(mLock);

259 return setDataSource_l(uri, headers);

260 }

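With that, setDataSource is done. Note that AwesomePlayer::setDataSource_l mostly just records the URI and headers; the real work (finishSetDataSource_l) is deferred to the prepare stage, as the mUri check in onPrepareAsyncEvent shows.

Next comes MediaPlayer::prepare: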

277 status_t MediaPlayer::prepare()

278 {

279 LOGV("prepare");

280 Mutex::Autolock _l(mLock);

281 mLockThreadId = getThreadId();

282 if (mPrepareSync) {

283 mLockThreadId = 0;

284 return -EALREADY;

285 }

286 mPrepareSync = true;

287 status_t ret = prepareAsync_l();

288 if (ret != NO_ERROR) {

289 mLockThreadId = 0;

290 return ret;

291 }

292

293 if (mPrepareSync) {

294 mSignal.wait(mLock); // wait for prepare done

295 mPrepareSync = false;

296 }

297 LOGV("prepare complete - status=%d", mPrepareStatus);

298 mLockThreadId = 0;

299 return mPrepareStatus;

300 }
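MediaPlayer::prepare() is a synchronous wrapper around the asynchronous path: it sets mPrepareSync, calls prepareAsync_l(), and then blocks on mSignal until the "prepare done" notification wakes it. The pattern can be sketched with standard threads; SyncOverAsync is a hypothetical class, the worker thread stands in for the player reporting completion, and the result it reports is passed in as a parameter purely so the sketch can be tested.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

class SyncOverAsync {
public:
    ~SyncOverAsync() { if (mWorker.joinable()) mWorker.join(); }

    // Blocking prepare(): kick off the async work, then wait until the
    // completion callback has stored a status and signalled us.
    int prepare(int resultFromWorker) {
        std::unique_lock<std::mutex> lock(mLock);
        if (mPrepareSync) return -1;        // a prepare is already in flight (-EALREADY)
        mPrepareSync = true;
        mDone = false;
        prepareAsync(resultFromWorker);     // returns immediately
        mSignal.wait(lock, [this] { return mDone; });  // releases mLock while waiting
        mPrepareSync = false;
        return mPrepareStatus;
    }

private:
    void prepareAsync(int result) {
        if (mWorker.joinable()) mWorker.join();
        mWorker = std::thread([this, result] { onPrepared(result); });
    }
    // Plays the role of the "prepared" notification coming back to MediaPlayer.
    void onPrepared(int status) {
        std::lock_guard<std::mutex> lock(mLock);
        mPrepareStatus = status;
        mDone = true;
        mSignal.notify_one();
    }

    std::mutex mLock;
    std::condition_variable mSignal;
    std::thread mWorker;
    bool mPrepareSync = false;
    bool mDone = false;
    int mPrepareStatus = 0;
};
```

The wait uses a predicate (mDone) so that a spurious wakeup cannot return before the status has actually been set.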

Line 287, prepareAsync_l:

262 status_t MediaPlayer::prepareAsync_l()

263 {

264 if ( (mPlayer != 0) && ( mCurrentState & ( MEDIA_PLAYER_INITIALIZED | MEDIA_PLAYER_STOPPED) ) ) {

265 mPlayer->setAudioStreamType(mStreamType);

266 mCurrentState = MEDIA_PLAYER_PREPARING;

267 return mPlayer->prepareAsync();

268 }

269 LOGE("prepareAsync called in state %d", mCurrentState);

270 return INVALID_OPERATION;

271 }

Line 265 sets the audio stream type; line 267 calls prepareAsync on the service side:

1318 status_t MediaPlayerService::Client::prepareAsync()

1319 {

1320 LOGV("[%d] prepareAsync", mConnId);

1321 sp<MediaPlayerBase> p = getPlayer();

1322 if (p == 0) return UNKNOWN_ERROR;

1323 status_t ret = p->prepareAsync();

1324 #if CALLBACK_ANTAGONIZER

1325 LOGV("start Antagonizer");

1326 if (ret == NO_ERROR) mAntagonizer->start();

1327 #endif

1328 return ret;

1329 }

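getPlayer() here simply returns mPlayer, the object created earlier by createPlayer(playerType). So line 1323 goes through StagefrightPlayer::prepareAsync, which forwards to AwesomePlayer: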

1913 status_t AwesomePlayer::prepareAsync() {

1914 Mutex::Autolock autoLock(mLock);

1915

1916 if (mFlags & PREPARING) {

1917 return UNKNOWN_ERROR; // async prepare already pending

1918 }

1919

1920 mIsAsyncPrepare = true;

1921 return prepareAsync_l();

1922 }

---------------------->

1924 status_t AwesomePlayer::prepareAsync_l() {

1925 if (mFlags & PREPARING) {

1926 return UNKNOWN_ERROR; // async prepare already pending

1927 }

1928

1929 if (!mQueueStarted) {

1930 mQueue.start();

1931 mQueueStarted = true;

1932 }

1933

1934 modifyFlags(PREPARING, SET);

1935 mAsyncPrepareEvent = new AwesomeEvent(

1936 this, &AwesomePlayer::onPrepareAsyncEvent);

1937

1938 mQueue.postEvent(mAsyncPrepareEvent);

1939

1940 return OK;

1941 }

这样就返回了一个extractor。

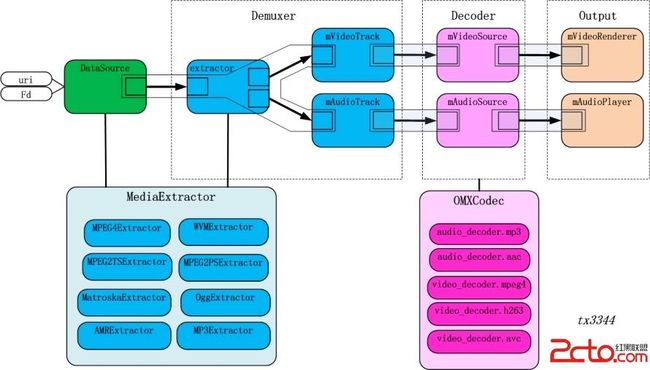

mVideoSource、mAudioSource组成了播放器模型中的decoder部分。给出一个网上的流程图:

这里启动线程ThreadWrapper:

回调的是event->fire函数,这里的event是什么时候加入队列中的呢,就是在前面mQueue.postEvent(mAsyncPrepareEvent),所以调用的是mAsyncPrepareEvent的fire函数, 还可以用另外一个postEventWithDelay函数,表示延时执行的。

看来果然没有错,就是调用了mMethod指向的函数,而这个函数就是onPrepareAsyncEvent啦
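The mechanism just described (a queue thread that calls event->fire(), and an AwesomeEvent that stores an object plus a member-function pointer in mMethod) can be sketched in standard C++. This is not TimedEventQueue's actual implementation: SimpleTimedQueue and MemberEvent are hypothetical names, and a std::multimap ordered by due time is simply one reasonable way to keep delayed events in order.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <map>
#include <memory>
#include <mutex>
#include <thread>

// Base event: fire() is what the queue thread calls.
struct Event {
    virtual ~Event() = default;
    virtual void fire() = 0;
};

// Binds an object and a member-function pointer, in the spirit of
// AwesomeEvent(this, &AwesomePlayer::onPrepareAsyncEvent).
template <typename T>
class MemberEvent : public Event {
public:
    MemberEvent(T* obj, void (T::*method)()) : mObj(obj), mMethod(method) {}
    void fire() override { (mObj->*mMethod)(); }
private:
    T* mObj;
    void (T::*mMethod)();
};

// Minimal timed event queue: one worker thread pops events in due-time
// order and calls fire() without holding the queue lock.
class SimpleTimedQueue {
public:
    using Clock = std::chrono::steady_clock;
    ~SimpleTimedQueue() { stop(); }
    void start() { mThread = std::thread([this] { threadEntry(); }); }
    void stop() {
        { std::lock_guard<std::mutex> l(mLock); mStopped = true; }
        mCond.notify_all();
        if (mThread.joinable()) mThread.join();
    }
    void postEvent(std::shared_ptr<Event> e) { postEventWithDelay(std::move(e), 0); }
    void postEventWithDelay(std::shared_ptr<Event> e, long delayUs) {
        {
            std::lock_guard<std::mutex> l(mLock);
            mQueue.emplace(Clock::now() + std::chrono::microseconds(delayUs), std::move(e));
        }
        mCond.notify_all();
    }
private:
    void threadEntry() {
        std::unique_lock<std::mutex> l(mLock);
        while (!mStopped) {
            if (mQueue.empty()) { mCond.wait(l); continue; }
            auto due = mQueue.begin()->first;
            if (Clock::now() < due) { mCond.wait_until(l, due); continue; }
            auto e = mQueue.begin()->second;
            mQueue.erase(mQueue.begin());
            l.unlock();
            e->fire();  // the registered callback, e.g. onPrepareAsyncEvent
            l.lock();
        }
    }
    std::mutex mLock;
    std::condition_variable mCond;
    std::multimap<Clock::time_point, std::shared_ptr<Event>> mQueue;
    std::thread mThread;
    bool mStopped = false;
};
```

Calling fire() outside the lock lets a callback post follow-up events (as onVideoEvent does) without deadlocking the queue.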

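Once prepare has completed, the application calls MediaPlayer::start:

309 status_t MediaPlayer::start()

310 {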

311 LOGV("start");

312 Mutex::Autolock _l(mLock);

313 if (mCurrentState & MEDIA_PLAYER_STARTED)

314 return NO_ERROR;

315 if ( (mPlayer != 0) && ( mCurrentState & ( MEDIA_PLAYER_PREPARED |

316 MEDIA_PLAYER_PLAYBACK_COMPLETE | MEDIA_PLAYER_PAUSED ) ) ) {

317 mPlayer->setLooping(mLoop);

318 mPlayer->setVolume(mLeftVolume, mRightVolume);

319 mPlayer->setAuxEffectSendLevel(mSendLevel);

320 mCurrentState = MEDIA_PLAYER_STARTED;

321 status_t ret = mPlayer->start();

322 if (ret != NO_ERROR) {

323 mCurrentState = MEDIA_PLAYER_STATE_ERROR;

324 } else {

325 if (mCurrentState == MEDIA_PLAYER_PLAYBACK_COMPLETE) {

326 LOGV("playback completed immediately following start()");

327 }

328 }

329 return ret;

330 }

331 LOGE("start called in state %d", mCurrentState);

332 return INVALID_OPERATION;

333 }

After setting looping, volume and the aux effect send level, it calls mPlayer->start:

1331 status_t MediaPlayerService::Client::start()

1332 {

1333 LOGV("[%d] start", mConnId);

1334 sp<MediaPlayerBase> p = getPlayer();

1335 if (p == 0) return UNKNOWN_ERROR;

1336 p->setLooping(mLoop);

1337 return p->start();

1338 }

--------------------->

87 status_t StagefrightPlayer::start() {

88 LOGV("start");

89

90 return mPlayer->play();

91 }

--------------------->

832 status_t AwesomePlayer::play() {

833 Mutex::Autolock autoLock(mLock);

834

835 modifyFlags(CACHE_UNDERRUN, CLEAR);

836

837 return play_l();

838 }

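play_l is a long function. It starts audio playback and, most importantly, calls postVideoEvent_l(); the video event it posts is what drives decoding (software or hardware) and keeps reading decoded frames in a loop for display: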

1803 void AwesomePlayer::postVideoEvent_l(int64_t delayUs) {

1804 if (mVideoEventPending) {

1805 return;

1806 }

1807

1808 mVideoEventPending = true;

1809 mQueue.postEventWithDelay(mVideoEvent, delayUs < 0 ? 10000 : delayUs);

1810 }

postEventWithDelay schedules mVideoEvent (10 ms later by default, per line 1809), whose handler is AwesomePlayer::onVideoEvent. Its core is this for loop:

1608 for (;;) {

1609 status_t err = mVideoSource->read(&mVideoBuffer, &options);

1610 options.clearSeekTo();

1611

1612 if (err != OK) {

1613 CHECK(mVideoBuffer == NULL);

1614

1615 if (err == INFO_FORMAT_CHANGED) {

1616 LOGV("VideoSource signalled format change.");

1617

1618 notifyVideoSize_l();

1619

1620 if (mVideoRenderer != NULL) {

1621 mVideoRendererIsPreview = false;

1622 initRenderer_l();

1623 }

1624 continue;

1625 }

1626

1627 // So video playback is complete, but we may still have

1628 // a seek request pending that needs to be applied

1629 // to the audio track.

1630 if (mSeeking != NO_SEEK) {

1631 LOGV("video stream ended while seeking!");

1632 }

1633 finishSeekIfNecessary(-1);
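The error handling in this loop follows a simple shape: a format change is handled (video size notified, renderer re-initialized) and the read is retried with continue, while any other error means the stream has ended. A sketch with a scripted fake source follows; the Status values, FakeVideoSource and readOneFrame are hypothetical and do not use the real media_errors.h codes.

```cpp
#include <cassert>
#include <deque>

// Hypothetical status codes for this sketch only.
enum Status { OK_STATUS = 0, FORMAT_CHANGED = 1, END_OF_STREAM = 2 };

// Returns a scripted sequence of statuses, then END_OF_STREAM.
struct FakeVideoSource {
    std::deque<Status> script;
    Status read(int* frame) {
        if (script.empty()) return END_OF_STREAM;
        Status s = script.front();
        script.pop_front();
        if (s == OK_STATUS) *frame = 1;
        return s;
    }
};

struct LoopResult { int framesRendered = 0; int formatChanges = 0; bool ended = false; };

// One pass of the loop: read until a frame arrives. A format change is
// handled and the read retried; any other error ends the stream.
LoopResult readOneFrame(FakeVideoSource& src) {
    LoopResult r;
    for (;;) {
        int frame = 0;
        Status err = src.read(&frame);
        if (err != OK_STATUS) {
            if (err == FORMAT_CHANGED) {
                ++r.formatChanges;  // cf. notifyVideoSize_l() + initRenderer_l()
                continue;
            }
            r.ended = true;         // cf. finishSeekIfNecessary(-1), stream done
            return r;
        }
        ++r.framesRendered;         // a decoded frame is ready to render
        return r;
    }
}
```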

References:

http://blog.csdn.net/myarrow/article/details/7067574

http://blog.csdn.net/mci2004/article/details/7629146


Now for onPrepareAsyncEvent, the handler wrapped into mAsyncPrepareEvent at line 1936 of AwesomePlayer::prepareAsync_l:

void AwesomePlayer::onPrepareAsyncEvent() {

Mutex::Autolock autoLock(mLock);

if (mFlags & PREPARE_CANCELLED) {

LOGI("prepare was cancelled before doing anything");

abortPrepare(UNKNOWN_ERROR);

return;

}

if (mUri.size() > 0) {

status_t err = finishSetDataSource_l();

if (err != OK) {

abortPrepare(err);

return;

}

}

if (mVideoTrack != NULL && mVideoSource == NULL) {

status_t err = initVideoDecoder();

if (err != OK) {

abortPrepare(err);

return;

}

}

if (mAudioTrack != NULL && mAudioSource == NULL) {

status_t err = initAudioDecoder();

if (err != OK) {

abortPrepare(err);

return;

}

}

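finishSetDataSource_l() mainly creates an extractor that matches the type of the data source. Since there are many audio and video formats, there are different extractors; the source tree contains the following: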

AACExtractor.cpp AVIExtractor.cpp FLACExtractor.cpp MP3Extractor.cpp OggExtractor.cpp WVMExtractor.cpp

AMRExtractor.cpp DRMExtractor.cpp MediaExtractor.cpp MPEG4Extractor.cpp WAVExtractor.cpp

In the end, finishSetDataSource_l() calls MediaExtractor::Create(), which chooses an extractor by MIME type:

sp<MediaExtractor> MediaExtractor::Create(
        const sp<DataSource> &source, const char *mime) {
    ....................................

    MediaExtractor *ret = NULL;
    if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_MPEG4)
            || !strcasecmp(mime, "audio/mp4")) {
        ret = new MPEG4Extractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_MPEG)) {
        ret = new MP3Extractor(source, meta);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AMR_NB)
            || !strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AMR_WB)) {
        ret = new AMRExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_FLAC)) {
        ret = new FLACExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_WAV)) {
        ret = new WAVExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_OGG)) {
        ret = new OggExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_MATROSKA)) {
        ret = new MatroskaExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_MPEG2TS)) {
        ret = new MPEG2TSExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_WVM)) {
        ret = new WVMExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_AAC_ADTS)) {
        ret = new AACExtractor(source);
    } else if (!strcasecmp(mime, MEDIA_MIMETYPE_CONTAINER_MPEG2PS)) {
        ret = new MPEG2PSExtractor(source);
    }

    if (ret != NULL) {
        if (isDrm) {
            ret->setDrmFlag(true);
        } else {
            ret->setDrmFlag(false);
        }
    }

    return ret;
}

This returns an extractor (or NULL if no extractor matches the MIME type).
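MediaExtractor::Create() is a factory keyed on a case-insensitive MIME comparison. The same idea can be written as a lookup table; the sketch below is only an illustration with hypothetical stand-in classes, a hand-written case-insensitive compare in place of strcasecmp(), and MIME strings assumed to match the MEDIA_MIMETYPE_* constants for MPEG-4, MP3 and WAV:

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <functional>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-ins for the real extractor classes.
struct Extractor {
    virtual ~Extractor() = default;
    virtual std::string name() const = 0;
};
struct Mpeg4Extractor : Extractor { std::string name() const override { return "MPEG4"; } };
struct Mp3Extractor : Extractor { std::string name() const override { return "MP3"; } };
struct WavExtractor : Extractor { std::string name() const override { return "WAV"; } };

// Case-insensitive equality, playing the role of strcasecmp(a, b) == 0.
bool equalsIgnoreCase(const std::string &a, const std::string &b) {
    return a.size() == b.size() &&
           std::equal(a.begin(), a.end(), b.begin(), [](char x, char y) {
               return std::tolower(static_cast<unsigned char>(x)) ==
                      std::tolower(static_cast<unsigned char>(y));
           });
}

// Returns nullptr for an unknown MIME type, like Create() returning NULL.
std::unique_ptr<Extractor> createExtractor(const std::string &mime) {
    using Factory = std::function<std::unique_ptr<Extractor>()>;
    static const std::vector<std::pair<std::string, Factory>> kTable = {
        {"video/mp4",   [] { return std::unique_ptr<Extractor>(new Mpeg4Extractor); }},
        {"audio/mp4",   [] { return std::unique_ptr<Extractor>(new Mpeg4Extractor); }},
        {"audio/mpeg",  [] { return std::unique_ptr<Extractor>(new Mp3Extractor); }},
        {"audio/x-wav", [] { return std::unique_ptr<Extractor>(new WavExtractor); }},
    };
    for (const auto &entry : kTable) {
        if (equalsIgnoreCase(mime, entry.first)) {
            return entry.second();
        }
    }
    return nullptr;
}
```

The if/else chain in the original and the table here behave the same way; the table just keeps the MIME-to-class mapping in one place.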

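Back in onPrepareAsyncEvent, initVideoDecoder() and initAudioDecoder() are called next. Working from the mVideoTrack (video) and mAudioTrack (audio) streams obtained from the extractor above, they create mVideoSource and mAudioSource, the video and audio decoders. Different media types are matched with different decoders; raw audio needs no decoder at all.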

// initAudioDecoder() (excerpt); mime is the audio track's MIME type
if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_RAW)) {
    mAudioSource = mAudioTrack;
} else {
    mAudioSource = OMXCodec::Create(
            mClient.interface(), mAudioTrack->getFormat(),
            false, // createEncoder
            mAudioTrack);
}

// initVideoDecoder() (excerpt)
mVideoSource = OMXCodec::Create(
        mClient.interface(), mVideoTrack->getFormat(),
        false, // createEncoder
        mVideoTrack,
        NULL, flags, USE_SURFACE_ALLOC ? mNativeWindow : NULL);
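The decoder returned by OMXCodec::Create() wraps the track it was given, and data flows by pulling: the player calls read() on the decoder, which in turn reads from the track. The sketch below shows that pull chain with hypothetical classes (not the real MediaSource/OMXCodec API), and mirrors the raw-audio shortcut by returning the track itself when the MIME type is "audio/raw" (compared case-sensitively here for simplicity):

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <utility>
#include <vector>

enum ReadStatus { READ_OK, READ_EOS };

// Hypothetical, simplified source interface: a stage yields a buffer only
// when read() is called on it.
struct Source {
    virtual ~Source() = default;
    virtual ReadStatus read(std::string *out) = 0;
};

// Plays the role of an extractor track: yields encoded units in order.
class FakeTrack : public Source {
public:
    explicit FakeTrack(std::vector<std::string> units) : mUnits(std::move(units)) {}
    ReadStatus read(std::string *out) override {
        if (mNext >= mUnits.size()) return READ_EOS;
        *out = mUnits[mNext++];
        return READ_OK;
    }
private:
    std::vector<std::string> mUnits;
    std::size_t mNext = 0;
};

// Plays the role of the decoder: wraps its upstream source and pulls from it.
class FakeDecoder : public Source {
public:
    explicit FakeDecoder(std::shared_ptr<Source> upstream) : mUpstream(std::move(upstream)) {}
    ReadStatus read(std::string *out) override {
        std::string encoded;
        ReadStatus st = mUpstream->read(&encoded);
        if (st != READ_OK) return st;       // propagate end of stream
        *out = "decoded(" + encoded + ")";  // stand-in for real decoding
        return READ_OK;
    }
private:
    std::shared_ptr<Source> mUpstream;
};

// Mirrors the RAW check in initAudioDecoder(): raw PCM bypasses the decoder.
std::shared_ptr<Source> makeAudioSource(const std::string &mime,
                                        std::shared_ptr<Source> track) {
    if (mime == "audio/raw") return track;
    return std::make_shared<FakeDecoder>(track);
}
```

Because each stage only knows its upstream, the player can treat a decoder and a raw track the same way: both are just something it calls read() on.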

mVideoSource and mAudioSource make up the decoder stage of the player model. Here is a flowchart found online:

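Having read onPrepareAsyncEvent, we still need to find out when it is actually called, since it was only passed in as a member-function pointer argument. For that, look at new AwesomeEvent() and mQueue.postEvent(mAsyncPrepareEvent):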

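First we need to understand how mQueue works. It is a TimedEventQueue, which essentially creates a thread that keeps taking events off the queue and, once an event is due, calls back the function registered with it. Let's start from its entry point, start():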

void TimedEventQueue::start() {
    if (mRunning) {
        return;
    }

    mStopped = false;

    pthread_attr_t attr;
    pthread_attr_init(&attr);
    pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE);

    pthread_create(&mThread, &attr, ThreadWrapper, this);

    pthread_attr_destroy(&attr);

    mRunning = true;
}

This starts a thread that runs ThreadWrapper:

void *TimedEventQueue::ThreadWrapper(void *me) {
    .....................................
    androidSetThreadPriority(0, ANDROID_PRIORITY_FOREGROUND);

    static_cast<TimedEventQueue *>(me)->threadEntry();
    .....................................
    return NULL;
}

ThreadWrapper then enters threadEntry():

void TimedEventQueue::threadEntry() {
    prctl(PR_SET_NAME, (unsigned long)"TimedEventQueue", 0, 0, 0);

    for (;;) {
        int64_t now_us = 0;
        sp<Event> event;
        ....................................................
            status_t err = mQueueHeadChangedCondition.waitRelative(
                    mLock, delay_us * 1000ll);
        ....................................................
            event = removeEventFromQueue_l(eventID);
        }

        if (event != NULL) {
            // Fire event with the lock NOT held.
            event->fire(this, now_us);
        }
    }
}
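The loop above waits on a condition variable until the event at the head of the queue is due, removes it, and fires it with the lock released. A minimal sketch of the same pattern in standard C++11, assuming a hypothetical class name, std::function events instead of sp<Event>, std::thread instead of pthread_create(), and that stop() simply drops any events still pending:

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <functional>
#include <map>
#include <mutex>
#include <thread>
#include <utility>

class TimedQueueSketch {
public:
    using Clock = std::chrono::steady_clock;
    using Event = std::function<void()>;

    ~TimedQueueSketch() { stop(); }

    void start() {
        std::lock_guard<std::mutex> lock(mMutex);
        if (mRunning) return;
        mStopped = false;
        mRunning = true;
        mThread = std::thread(&TimedQueueSketch::threadEntry, this);
    }

    void stop() {
        std::unique_lock<std::mutex> lock(mMutex);
        if (!mRunning) return;
        mStopped = true;
        lock.unlock();
        mCond.notify_all();
        mThread.join();
        lock.lock();
        mRunning = false;
    }

    void postEvent(Event e) {
        postEventWithDelay(std::move(e), std::chrono::microseconds(0));
    }

    void postEventWithDelay(Event e, std::chrono::microseconds delay) {
        {
            std::lock_guard<std::mutex> lock(mMutex);
            Clock::time_point due =
                Clock::now() + std::chrono::duration_cast<Clock::duration>(delay);
            mQueue.emplace(due, std::move(e));  // multimap keeps events sorted by due time
        }
        mCond.notify_all();  // the head of the queue may have changed
    }

private:
    void threadEntry() {
        std::unique_lock<std::mutex> lock(mMutex);
        for (;;) {
            if (mStopped) return;
            if (mQueue.empty()) {
                mCond.wait(lock);
                continue;
            }
            auto head = mQueue.begin();
            Clock::time_point due = head->first;
            if (Clock::now() < due) {
                // Sleep until the head is due, or until someone posts/stops.
                mCond.wait_until(lock, due);
                continue;
            }
            Event e = std::move(head->second);
            mQueue.erase(head);
            lock.unlock();
            e();  // fire the event with the lock NOT held
            lock.lock();
        }
    }

    std::mutex mMutex;
    std::condition_variable mCond;
    std::multimap<Clock::time_point, Event> mQueue;
    std::thread mThread;
    bool mRunning = false;
    bool mStopped = false;
};
```

Firing outside the lock matters for the same reason as in the original: a handler such as onPrepareAsyncEvent may itself post new events, which needs the queue's lock.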

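What gets called back is event->fire(). When was this event added to the queue? In the earlier mQueue.postEvent(mAsyncPrepareEvent) call, so the fire() being called is mAsyncPrepareEvent's. There is also postEventWithDelay(), which runs an event after a given delay. AwesomeEvent is defined as: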

struct AwesomeEvent : public TimedEventQueue::Event {
    AwesomeEvent(
            AwesomePlayer *player,
            void (AwesomePlayer::*method)())
        : mPlayer(player),
          mMethod(method) {
    }

protected:
    virtual ~AwesomeEvent() {}

    virtual void fire(TimedEventQueue *queue, int64_t /* now_us */) {
        (mPlayer->*mMethod)();
    }

private:
    AwesomePlayer *mPlayer;
    void (AwesomePlayer::*mMethod)();
};

Sure enough, fire() just calls the function that mMethod points to, and here that function is onPrepareAsyncEvent.
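The trick in AwesomeEvent is to store an object pointer plus a pointer to one of its member functions and invoke it with ->*. The sketch below generalizes that into a template for any class; EventBase, MemberEvent and FakePlayer are hypothetical names, and the real AwesomeEvent is a non-template class tied to AwesomePlayer:

```cpp
#include <cassert>

// Hypothetical base class standing in for TimedEventQueue::Event.
struct EventBase {
    virtual ~EventBase() = default;
    virtual void fire() = 0;
};

// Remembers an object and one of its member functions, and calls it
// through ->* when the event fires (the AwesomeEvent idea).
template <typename T>
class MemberEvent : public EventBase {
public:
    MemberEvent(T *obj, void (T::*method)()) : mObj(obj), mMethod(method) {}
    void fire() override { (mObj->*mMethod)(); }

private:
    T *mObj;
    void (T::*mMethod)();
};

// Hypothetical player with two handlers, like onPrepareAsyncEvent/onVideoEvent.
struct FakePlayer {
    int prepared = 0;
    int videoEvents = 0;
    void onPrepareAsyncEvent() { ++prepared; }
    void onVideoEvent() { ++videoEvents; }
};
```

One event class per handler method is enough: AwesomePlayer creates several AwesomeEvent objects (mAsyncPrepareEvent, mVideoEvent, ...) that differ only in the member function they hold.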

Next comes MediaPlayer::start():

status_t MediaPlayer::start()
{
    LOGV("start");
    Mutex::Autolock _l(mLock);
    if (mCurrentState & MEDIA_PLAYER_STARTED)
        return NO_ERROR;
    if ( (mPlayer != 0) && ( mCurrentState & ( MEDIA_PLAYER_PREPARED |
                    MEDIA_PLAYER_PLAYBACK_COMPLETE | MEDIA_PLAYER_PAUSED ) ) ) {
        mPlayer->setLooping(mLoop);
        mPlayer->setVolume(mLeftVolume, mRightVolume);
        mPlayer->setAuxEffectSendLevel(mSendLevel);
        mCurrentState = MEDIA_PLAYER_STARTED;
        status_t ret = mPlayer->start();
        if (ret != NO_ERROR) {
            mCurrentState = MEDIA_PLAYER_STATE_ERROR;
        } else {
            if (mCurrentState == MEDIA_PLAYER_PLAYBACK_COMPLETE) {
                LOGV("playback completed immediately following start()");
            }
        }
        return ret;
    }
    LOGE("start called in state %d", mCurrentState);
    return INVALID_OPERATION;
}
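The guard at the top of MediaPlayer::start() is a bit-mask state check: calling start() while already started is a no-op, and start() is only legal from PREPARED, PLAYBACK_COMPLETE or PAUSED. A minimal sketch of just that check, with hypothetical state names whose numeric values are illustrative only (the real media_player_states values differ) and without the Binder call or error-state handling:

```cpp
#include <cassert>

// Hypothetical state bits; values are illustrative, not Android's.
enum PlayerState : unsigned {
    STATE_IDLE              = 1u << 0,
    STATE_PREPARED          = 1u << 1,
    STATE_STARTED           = 1u << 2,
    STATE_PAUSED            = 1u << 3,
    STATE_PLAYBACK_COMPLETE = 1u << 4,
};

enum StartResult { START_OK, START_INVALID_OPERATION };

// Mirrors the guard in MediaPlayer::start().
StartResult checkedStart(unsigned &state) {
    if (state & STATE_STARTED) return START_OK;  // already started: nothing to do
    const unsigned allowed = STATE_PREPARED | STATE_PLAYBACK_COMPLETE | STATE_PAUSED;
    if (state & allowed) {
        state = STATE_STARTED;
        return START_OK;
    }
    return START_INVALID_OPERATION;  // e.g. start() before prepare()
}
```

Using one bit per state lets a single & test whether the current state is in an allowed set.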

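After setting looping, volume and the aux effect send level, it calls mPlayer->start(). mPlayer is the IMediaPlayer Binder proxy, so the call arrives in MediaPlayerService::Client::start():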

status_t MediaPlayerService::Client::start()
{
    LOGV("[%d] start", mConnId);
    sp<MediaPlayerBase> p = getPlayer();
    if (p == 0) return UNKNOWN_ERROR;
    p->setLooping(mLoop);
    return p->start();
}

--------------------->

status_t StagefrightPlayer::start() {
    LOGV("start");

    return mPlayer->play();
}

--------------------->

status_t AwesomePlayer::play() {
    Mutex::Autolock autoLock(mLock);

    modifyFlags(CACHE_UNDERRUN, CLEAR);

    return play_l();
}
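The start() call thus passes through three layers: the service-side client, the StagefrightPlayer adapter that implements MediaPlayerBase, and the AwesomePlayer engine, where start() becomes play(). The sketch below reduces that layering to plain classes; all names are hypothetical stand-ins, and -1 stands in for UNKNOWN_ERROR:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

struct PlayerBase {                        // role of MediaPlayerBase
    virtual ~PlayerBase() = default;
    virtual int start() = 0;
    virtual void setLooping(bool loop) = 0;
};

class Engine {                             // role of AwesomePlayer
public:
    int play() {
        mCalls.push_back(mLooping ? "play(loop)" : "play");
        return 0;
    }
    void setLooping(bool loop) { mLooping = loop; }
    const std::vector<std::string> &calls() const { return mCalls; }
private:
    bool mLooping = false;
    std::vector<std::string> mCalls;       // records calls for inspection
};

class EngineAdapter : public PlayerBase {  // role of StagefrightPlayer
public:
    int start() override { return mEngine.play(); }  // start() forwards to play()
    void setLooping(bool loop) override { mEngine.setLooping(loop); }
    const Engine &engine() const { return mEngine; }
private:
    Engine mEngine;
};

class ServiceClient {                      // role of MediaPlayerService::Client
public:
    explicit ServiceClient(std::shared_ptr<PlayerBase> p) : mPlayer(std::move(p)) {}
    void setLooping(bool loop) { mLoop = loop; }
    int start() {
        if (!mPlayer) return -1;           // no player created yet
        mPlayer->setLooping(mLoop);
        return mPlayer->start();
    }
private:
    std::shared_ptr<PlayerBase> mPlayer;
    bool mLoop = false;
};
```

The adapter layer is what lets the service swap playback engines by player type without changing the client code.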

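play_l() is a long function. It sets up audio playback and, most importantly, calls postVideoEvent_l(). The video event posted there pulls decoded frames from the (software or hardware) decoder in a loop and displays them: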

void AwesomePlayer::postVideoEvent_l(int64_t delayUs) {
    if (mVideoEventPending) {
        return;
    }

    mVideoEventPending = true;
    mQueue.postEventWithDelay(mVideoEvent, delayUs < 0 ? 10000 : delayUs);
}
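postVideoEvent_l() coalesces requests: while a video event is pending, further posts are ignored, and a negative delay means "use 10000 us (10 ms)". A minimal sketch, assuming a hypothetical class that records the delay instead of calling mQueue.postEventWithDelay(), and assuming the handler clears the pending flag when it runs, as onVideoEvent does:

```cpp
#include <cassert>
#include <cstdint>

class VideoEventPoster {
public:
    void postVideoEvent(int64_t delayUs = -1) {
        if (mVideoEventPending) {
            return;  // one video event at a time
        }
        mVideoEventPending = true;
        mLastDelayUs = delayUs < 0 ? 10000 : delayUs;  // default: 10 ms
        ++mPostCount;  // stands in for mQueue.postEventWithDelay(...)
    }

    // Called at the start of the (simulated) onVideoEvent handler.
    void onVideoEvent() { mVideoEventPending = false; }

    int postCount() const { return mPostCount; }
    int64_t lastDelayUs() const { return mLastDelayUs; }

private:
    bool mVideoEventPending = false;
    int mPostCount = 0;
    int64_t mLastDelayUs = 0;
};
```

The flag guarantees at most one video event sits in the queue, so repeated calls from different code paths cannot pile up duplicate render passes.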

postEventWithDelay() schedules mVideoEvent (after 10 ms when no delay is given), whose handler is AwesomePlayer::onVideoEvent. Its core is this for loop:

for (;;) {
    status_t err = mVideoSource->read(&mVideoBuffer, &options);
    options.clearSeekTo();

    if (err != OK) {
        CHECK(mVideoBuffer == NULL);

        if (err == INFO_FORMAT_CHANGED) {
            LOGV("VideoSource signalled format change.");

            notifyVideoSize_l();

            if (mVideoRenderer != NULL) {
                mVideoRendererIsPreview = false;
                initRenderer_l();
            }
            continue;
        }

        // So video playback is complete, but we may still have
        // a seek request pending that needs to be applied
        // to the audio track.
        if (mSeeking != NO_SEEK) {
            LOGV("video stream ended while seeking!");
        }
        finishSeekIfNecessary(-1);
        ...
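The loop treats read() results in three ways: INFO_FORMAT_CHANGED re-initializes the renderer and retries, any other error ends video playback, and OK yields a frame to render. A simplified sketch of that control flow, assuming a hypothetical scripted source and status enum (the real loop also skips empty buffers and handles seeking, which is omitted here):

```cpp
#include <cassert>
#include <deque>
#include <utility>

enum ReadResult { RES_OK, RES_FORMAT_CHANGED, RES_END_OF_STREAM };

// Scripted source: returns the queued results in order, then end of stream.
class ScriptedSource {
public:
    explicit ScriptedSource(std::deque<ReadResult> script) : mScript(std::move(script)) {}
    ReadResult read() {
        if (mScript.empty()) return RES_END_OF_STREAM;
        ReadResult r = mScript.front();
        mScript.pop_front();
        return r;
    }
private:
    std::deque<ReadResult> mScript;
};

struct LoopOutcome {
    int renderersReinitialized = 0;
    bool gotFrame = false;  // true: a frame is ready; false: stream ended
};

LoopOutcome readNextFrame(ScriptedSource &source) {
    LoopOutcome out;
    for (;;) {
        ReadResult err = source.read();
        if (err != RES_OK) {
            if (err == RES_FORMAT_CHANGED) {
                ++out.renderersReinitialized;  // notifyVideoSize_l + initRenderer_l
                continue;                      // retry the read
            }
            return out;                        // end of stream or error
        }
        out.gotFrame = true;
        return out;
    }
}
```

A format change is therefore not an error from the player's point of view; only the next read decides whether a frame arrives or the stream is over.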

References: http://blog.csdn.net/myarrow/article/details/7067574

http://blog.csdn.net/mci2004/article/details/7629146