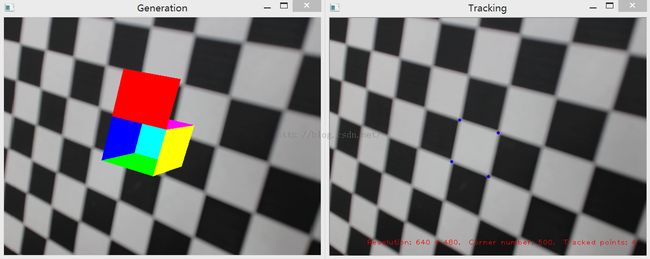

Things About OpenCV: Drawing AR Objects (Monocular Control)

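I have recently finished the rest of the project, including the improved corner detection and tracking recovery. This section continues with drawing AR objects using OpenGL.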

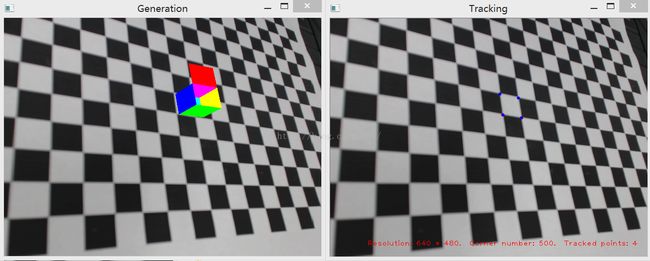

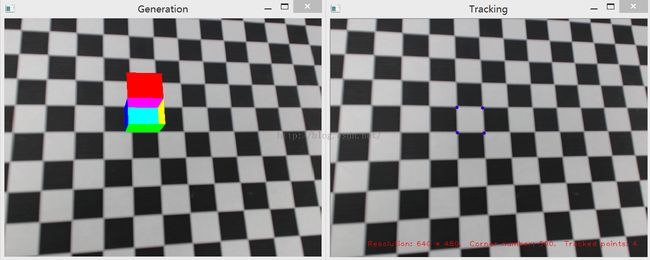

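In the previous section we used solvePnP to get the camera pose (rotation and translation). From this pose we can build a model-view matrix and load it with glLoadMatrixd to control the camera in OpenGL.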
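The idea can be checked without OpenCV or OpenGL. The sketch below builds the 16-element array that glLoadMatrixd expects from a rotation matrix R and translation t. It assumes the usual convention gap between the two libraries. The OpenCV camera has y pointing down and looks along +z. The OpenGL camera has y pointing up and looks along -z. For that reason the second and third rows of [R|t] are negated. OpenGL reads the array column by column. The helper names `pose_to_gl_modelview` and `transform_point` are hypothetical.

```cpp
#include <array>
#include <cassert>

// Hypothetical helper: turn an OpenCV pose (R, t) into an OpenGL model-view
// matrix stored column-major, ready for glLoadMatrixd().
// Rows y and z are negated: OpenCV's camera looks along +z with y down,
// OpenGL's camera looks along -z with y up.
std::array<double, 16> pose_to_gl_modelview(const double R[3][3], const double t[3])
{
    const double flip[3] = {1.0, -1.0, -1.0};
    std::array<double, 16> m{};                           // all zeros
    for (int col = 0; col < 3; ++col)
        for (int row = 0; row < 3; ++row)
            m[col * 4 + row] = flip[row] * R[row][col];   // column-major index
    for (int row = 0; row < 3; ++row)
        m[12 + row] = flip[row] * t[row];                 // 4th column = translation
    m[15] = 1.0;                                          // m[3], m[7], m[11] stay 0
    return m;
}

// Apply a column-major 4x4 matrix to a point (w = 1) and return x, y, z.
std::array<double, 3> transform_point(const std::array<double, 16>& m, const double p[3])
{
    std::array<double, 3> out{};
    for (int row = 0; row < 3; ++row)
        out[row] = m[row] * p[0] + m[4 + row] * p[1] + m[8 + row] * p[2] + m[12 + row];
    return out;
}
```

With R = I and t = (0, 0, 5), a point that OpenCV sees 5 units in front of the camera lands at z = -5 in OpenGL, which is also "in front" there. The array layout matches the hand-written model_view_matrix in draw_map() below.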

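My first attempt was to rebuild OpenCV with CMake using the WITH_OPENGL option, but the build failed. Later, after I had successfully built OpenCV with WITH_OPENNI, I came back and the WITH_OPENGL build worked too. By then the project was already written and switching over would have been too much work, so I may change it when I get the chance.

Instead, I used the pthread library to create a second thread that runs the tracking code from before, while the main thread runs the OpenGL rendering. Running two threads in parallel is also a core idea of the project. Tracking and rendering no longer have to wait for each other, which greatly cuts the time per frame and speeds up the whole system. There are plenty of guides online for setting up and using pthread and OpenGL, so I won't cover them here.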
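The two-thread layout can be sketched like this. It is a minimal sketch under assumptions: `Pose`, `tracking_loop`, `snapshot_pose` and the mutex are hypothetical names, and a loop counter stands in for the real solvePnP result. The tracking thread publishes only its newest pose under a lock. The render thread copies that pose under the same lock, so it always sees a consistent rvec/tvec pair.

```cpp
#include <pthread.h>
#include <cassert>

// Hypothetical state shared between the tracking thread and the render thread.
struct Pose {
    double rvec[3];
    double tvec[3];
    bool valid;
};

static Pose g_pose = {{0, 0, 0}, {0, 0, 0}, false};
static pthread_mutex_t g_pose_mutex = PTHREAD_MUTEX_INITIALIZER;

// Tracking thread: the real program would grab a frame, track the corners and
// call solvePnP here; a frame counter stands in for the result.
void* tracking_loop(void* arg)
{
    int frames = *static_cast<int*>(arg);
    for (int i = 1; i <= frames; ++i) {
        Pose p = {{0, 0, 0}, {0, 0, static_cast<double>(i)}, true};
        pthread_mutex_lock(&g_pose_mutex);
        g_pose = p;                          // publish the newest pose
        pthread_mutex_unlock(&g_pose_mutex);
    }
    return nullptr;
}

// Render side (the GLUT display callback on the main thread): take a
// consistent copy of the pose, then draw with it outside the lock.
Pose snapshot_pose()
{
    pthread_mutex_lock(&g_pose_mutex);
    Pose copy = g_pose;
    pthread_mutex_unlock(&g_pose_mutex);
    return copy;
}
```

In a GLUT program, main() would call pthread_create(&tid, nullptr, tracking_loop, &frames) before glutMainLoop(), so the main thread stays with OpenGL. Classic GLUT's glutMainLoop() never returns, so a pthread_join() placed after it is never reached.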

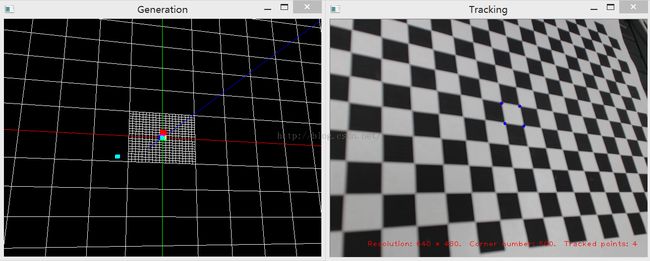

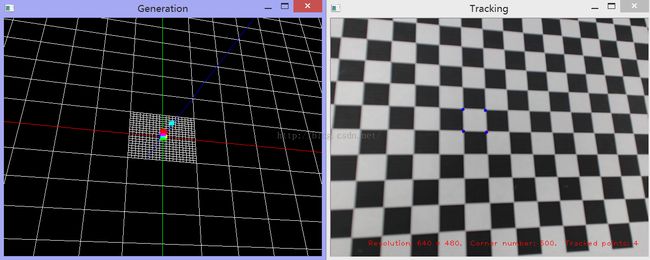

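The OpenGL window in this project has two modes, selected by the drawmap flag in display(). draw_map() draws the AR object over the real scene. draw_camera() draws a 3D coordinate frame so we can see where the OpenGL camera is. The first view cannot be controlled with the mouse; in the second, the viewing angle can be adjusted with the mouse.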

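```cpp
void display(void)
{
    glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
    glLoadIdentity();  // load the identity matrix

    if (drawmap)
    {
        // AR view: fixed eye at (0, 0, 2) looking at the origin
        gluLookAt(0, 0, 2,
                  0, 0, 0,
                  0, 1, 0);
        draw_map();
    }
    else
    {
        // debug view: the eye position is updated from the mouse
        CalEyePostion();
        gluLookAt(eye[0], eye[1], eye[2],
                  center[0], center[1], center[2],
                  0, 1, 0);
        draw_camera();
    }

    // ~15 ms pause; per the OpenCV docs, waitKey only works while a HighGUI window exists
    waitKey(15);
    glutSwapBuffers();
}
```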
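The body of CalEyePostion() is not shown in this post. One common way to let the mouse orbit the view keeps two angles and a radius, then places the eye on a sphere around center. The sketch below assumes that approach and is only one possible body for CalEyePostion(). The globals `yaw_deg`, `pitch_deg` and `radius` are hypothetical. A mouse callback such as glutMotionFunc would update them in a real program.

```cpp
#include <cmath>
#include <cassert>

// Hypothetical orbit-camera state; a mouse-drag callback would change
// yaw_deg / pitch_deg, and a zoom control would change radius.
double eye[3];
double center[3] = {0.0, 0.0, 0.0};
double yaw_deg = 0.0;     // rotation around the world y axis
double pitch_deg = 0.0;   // elevation above the x-z plane
double radius = 5.0;      // distance from eye to center

// Place the eye on a sphere around center; y is up, matching the
// up vector (0, 1, 0) passed to gluLookAt in display().
void CalEyePostion()
{
    const double kPi = 3.14159265358979323846;
    // clamp so the view direction never becomes parallel to the up vector
    if (pitch_deg > 89.0) pitch_deg = 89.0;
    if (pitch_deg < -89.0) pitch_deg = -89.0;
    double yaw = yaw_deg * kPi / 180.0;
    double pitch = pitch_deg * kPi / 180.0;
    eye[0] = center[0] + radius * std::cos(pitch) * std::sin(yaw);
    eye[1] = center[1] + radius * std::sin(pitch);
    eye[2] = center[2] + radius * std::cos(pitch) * std::cos(yaw);
}
```

The pitch clamp matters because gluLookAt degenerates when the viewing direction is parallel to the up vector.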

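```cpp
void draw_map()
{
    if (image.data != NULL)
    {
        // OpenCV frames are BGR, top row first; OpenGL wants RGB, bottom row first
        cvtColor(image, texttmp, CV_BGR2RGB);
        flip(texttmp, texttmp, 0);  // flip around the x axis

        glEnable(GL_TEXTURE_2D);
        // upload the 640x480 frame as the background texture; this assumes
        // GL_TEXTURE_MIN_FILTER was set to a non-mipmap filter (e.g. GL_LINEAR)
        // during initialisation (not shown), otherwise the texture is incomplete
        glTexImage2D(GL_TEXTURE_2D, 0, GL_RGB, 640, 480, 0,
                     GL_RGB, GL_UNSIGNED_BYTE, texttmp.data);

        glPushMatrix();
        // a 31 x 31 quad, centred on the view axis, 30 units behind the origin
        glTranslated(0, 0, -30);
        glScaled(1.0 / 640.0, 1.0 / 480.0, 1.0);
        glScaled(31, 31, 1);
        glTranslated(-320, -240, 0.0);
        glBegin(GL_QUADS);
        glTexCoord2i(0, 0); glVertex2i(0, 0);
        glTexCoord2i(1, 0); glVertex2i(640, 0);
        glTexCoord2i(1, 1); glVertex2i(640, 480);
        glTexCoord2i(0, 1); glVertex2i(0, 480);
        glEnd();
        glPopMatrix();
    }
    glDisable(GL_TEXTURE_2D);

    // only draw the AR object when all 4 tracked points are available
    if (needtomap && trackingpoints == 4)
    {
        /* Use depth buffering for hidden surface elimination. */
        glEnable(GL_DEPTH_TEST);
        Mat rotM(3, 3, CV_64FC1);
        Rodrigues(rvec, rotM);  // rotation vector -> 3x3 rotation matrix
        glPushMatrix();
        // [R|t] with the y and z rows negated (OpenCV: y down, looking along +z;
        // OpenGL: y up, looking along -z), written column by column for OpenGL
        double model_view_matrix[16] = {
            rotM.at<double>(0,0), -rotM.at<double>(1,0), -rotM.at<double>(2,0), 0,
            rotM.at<double>(0,1), -rotM.at<double>(1,1), -rotM.at<double>(2,1), 0,
            rotM.at<double>(0,2), -rotM.at<double>(1,2), -rotM.at<double>(2,2), 0,
            tv[0],                -tv[1],                -tv[2],                1
        };
        glLoadMatrixd(model_view_matrix);  // replaces the gluLookAt matrix
        glRotated(180.0, 1.0, 0.0, 0.0);   // flip the object's y and z axes
        draw_object();
        /* Depth testing off again before the next background pass. */
        glDisable(GL_DEPTH_TEST);
        glPopMatrix();
    }
}
```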
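The cvtColor + flip pair is needed because of a layout mismatch. OpenCV stores a frame as BGR with the top row first. glTexImage2D treats the first row of data as the bottom of the texture (t = 0), and in this quad t = 0 sits at the bottom edge. The dependency-free sketch below performs the same conversion on a raw interleaved 8-bit buffer. `bgr_to_gl_rgb` is a hypothetical name used only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper mirroring cvtColor(..., CV_BGR2RGB) + flip(..., 0):
// swap the B and R channels and reverse the row order, so the image's top
// row ends up last (OpenGL's t = 0 is the bottom of the texture).
std::vector<std::uint8_t> bgr_to_gl_rgb(const std::vector<std::uint8_t>& bgr,
                                        int width, int height)
{
    assert(bgr.size() == static_cast<std::size_t>(width) * height * 3);
    std::vector<std::uint8_t> rgb(bgr.size());
    const std::size_t row_bytes = static_cast<std::size_t>(width) * 3;
    for (int y = 0; y < height; ++y) {
        const std::uint8_t* src = &bgr[y * row_bytes];
        std::uint8_t* dst = &rgb[(height - 1 - y) * row_bytes];  // flipped row
        for (int x = 0; x < width; ++x) {
            dst[3 * x + 0] = src[3 * x + 2];  // R
            dst[3 * x + 1] = src[3 * x + 1];  // G
            dst[3 * x + 2] = src[3 * x + 0];  // B
        }
    }
    return rgb;
}
```

At 640 x 480 each RGB row is 1920 bytes, a multiple of 4, so OpenGL's default GL_UNPACK_ALIGNMENT of 4 is satisfied. For widths where width * 3 is not a multiple of 4, glPixelStorei(GL_UNPACK_ALIGNMENT, 1) would be needed before the upload.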

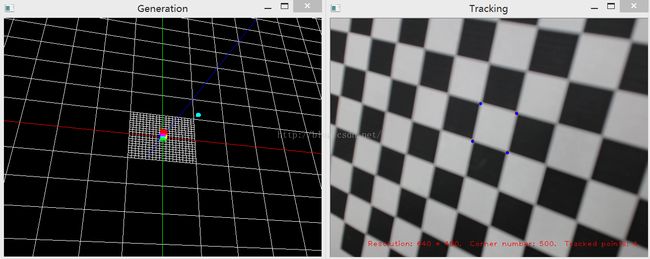

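draw_camera() draws a grid, the coordinate axes and the object. It then uses the camera pose from OpenCV to compute where the OpenGL camera sits relative to the origin. The calculation transposes the rotation matrix and multiplies it by the translation vector; negated, this gives the camera centre -R^T t. Finally, the function draws the camera itself. The code is as follows: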

```cpp
void draw_camera()
{
    glColor3f(1.0, 1.0, 1.0);
    glPushMatrix();
    glScalef(0.25, 0.25, 0.25);

    // fine grid on the z = 0 plane: -5..5 in steps of 0.5
    glBegin(GL_LINES);
    for (float i = -5; i < 5.1; i += 0.5)
    {
        glVertex3f(-5, i, 0); glVertex3f(5, i, 0);
        glVertex3f(i, -5, 0); glVertex3f(i, 5, 0);
    }
    glEnd();

    // coarse grid: -50..50 in steps of 5
    glBegin(GL_LINES);
    for (int i = -50; i < 51; i += 5)
    {
        glVertex3i(-50, i, 0); glVertex3i(50, i, 0);
        glVertex3i(i, -50, 0); glVertex3i(i, 50, 0);
    }
    glEnd();

    // axes: x red, y green, z blue
    glColor3f(1.0, 0.0, 0.0);
    glBegin(GL_LINES); glVertex3i(-50, 0, 0); glVertex3i(100, 0, 0); glEnd();
    glColor3f(0.0, 1.0, 0.0);
    glBegin(GL_LINES); glVertex3i(0, -50, 0); glVertex3i(0, 100, 0); glEnd();
    glColor3f(0.0, 0.0, 1.0);
    glBegin(GL_LINES); glVertex3i(0, 0, -10); glVertex3i(0, 0, 50); glEnd();

    glEnable(GL_DEPTH_TEST);
    draw_object();
    glDisable(GL_DEPTH_TEST);

    if (needtomap && trackingpoints == num_track)
    {
        glPushMatrix();
        Mat rotM;
        Rodrigues(rvecm, rotM);  // rotation vector -> 3x3 matrix (CV_64F)
        // camera centre in OpenCV world coordinates: C = -R^T * t
        // (tvecm is assumed to be a 3x1 CV_64F Mat, as solvePnP returns)
        Mat camPos = -rotM.t() * tvecm;
        // the GL scene is the OpenCV world rotated 180 degrees about x
        // (cf. glRotated in draw_map), so y and z change sign
        glTranslated(camPos.at<double>(0), -camPos.at<double>(1), -camPos.at<double>(2));
        glutSolidCube(0.5);  // draw the camera as a small cube
        glPopMatrix();
    }
    glPopMatrix();
}
```
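The pose-to-camera-position step in draw_camera() can be checked outside OpenCV. The sketch below evaluates the standard Rodrigues formula, the conversion cv::Rodrigues performs for a rotation vector, and then computes the camera centre C = -R^T t in the OpenCV world frame. `rodrigues` and `camera_center` are hypothetical helpers, not OpenCV functions.

```cpp
#include <cmath>
#include <cassert>

// Hypothetical helper: rotation vector (axis * angle, radians) -> 3x3 matrix
// using R = cos(th) I + (1 - cos(th)) k k^T + sin(th) [k]_x.
void rodrigues(const double r[3], double R[3][3])
{
    double th = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    if (th < 1e-12) {  // (near) zero rotation: identity
        for (int i = 0; i < 3; ++i)
            for (int j = 0; j < 3; ++j)
                R[i][j] = (i == j) ? 1.0 : 0.0;
        return;
    }
    double k[3] = {r[0] / th, r[1] / th, r[2] / th};  // unit axis
    double c = std::cos(th), s = std::sin(th);
    double K[3][3] = {{0, -k[2], k[1]}, {k[2], 0, -k[0]}, {-k[1], k[0], 0}};  // [k]_x
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            R[i][j] = (i == j ? c : 0.0) + (1 - c) * k[i] * k[j] + s * K[i][j];
}

// solvePnP's pose maps x_cam = R x_world + t; the camera centre is the world
// point with x_cam = 0, i.e. C = -R^T t.
void camera_center(const double R[3][3], const double t[3], double C[3])
{
    for (int i = 0; i < 3; ++i)
        C[i] = -(R[0][i] * t[0] + R[1][i] * t[1] + R[2][i] * t[2]);  // (R^T t)_i
}
```

For a 90 degree rotation about z and t = (1, 2, 3), this gives C = (-2, 1, -3). In draw_camera() that centre would be drawn at (C_x, -C_y, -C_z) = (-2, -1, 3) because of the 180 degree flip about x.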

Results (from different viewing angles):

Click here for the complete code.

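That more or less wraps up the project. The next section will improve the ORB algorithm and try to recover tracking after feature-point tracking fails.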