Memcached Source Code Reading, Part 2

Memcached Source Code Reading: Thread Interaction

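As the earlier analysis showed, Memcached uses a classic Master-Worker thread model. The Master thread binds the port, listens for network connections, and accepts them; it then wakes a Worker thread through inter-thread communication, and the Worker thread performs the reads and writes on the connected descriptor. This design keeps the overall communication model simple. The walkthrough below follows that process.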

- case conn_listening:

- addrlen = sizeof(addr);

- // The Master thread (main) enters the state machine and calls accept, which is also non-blocking.

- if ((sfd = accept(c->sfd, (struct sockaddr *) &addr, &addrlen)) == -1)

- {

- // Non-blocking socket: on these error codes, stop and wait for the next event

- if (errno == EAGAIN || errno == EWOULDBLOCK)

- {

- stop = true;

- }

- // Too many open descriptors: stop accepting new connections for now

- else if (errno == EMFILE)

- {

- if (settings.verbose > 0)

- fprintf(stderr, "Too many open connections\n");

- accept_new_conns(false);

- stop = true;

- }

- else

- {

- perror("accept()");

- stop = true;

- }

- break;

- }

- // accept succeeded; put the accepted descriptor into non-blocking mode

- if ((flags = fcntl(sfd, F_GETFL, 0)) < 0

- || fcntl(sfd, F_SETFL, flags | O_NONBLOCK) < 0)

- {

- perror("setting O_NONBLOCK");

- close(sfd);

- break;

- }

- // Check whether the maximum number of connections has been reached

- if (settings.maxconns_fast

- && stats.curr_conns + stats.reserved_fds

- >= settings.maxconns - 1)

- {

- str = "ERROR Too many open connections\r\n";

- res = write(sfd, str, strlen(str));

- close(sfd);

- STATS_LOCK();

- stats.rejected_conns++;

- STATS_UNLOCK();

- }

- else

- { // Dispatch the connection to a Worker thread

- dispatch_conn_new(sfd, conn_new_cmd, EV_READ | EV_PERSIST,

- DATA_BUFFER_SIZE, tcp_transport);

- }

- stop = true;

- break;

- void dispatch_conn_new(int sfd, enum conn_states init_state, int event_flags,

- int read_buffer_size, enum network_transport transport) {

- CQ_ITEM *item = cqi_new(); // Allocate a connection queue item

- char buf[1];

- int tid = (last_thread + 1) % settings.num_threads; // Pick a thread by round-robin

- LIBEVENT_THREAD *thread = threads + tid; // The threads array holds all Worker threads

- last_thread = tid; // Remember this index for the next dispatch

- item->sfd = sfd; // sfd is the descriptor returned by accept

- item->init_state = init_state;

- item->event_flags = event_flags;

- item->read_buffer_size = read_buffer_size;

- item->transport = transport;

- cq_push(thread->new_conn_queue, item); // Push the item onto the Worker thread's connection queue

- MEMCACHED_CONN_DISPATCH(sfd, thread->thread_id);

- buf[0] = 'c';

- // Write the character c to the Worker thread's notify_send_fd to signal a new connection

- if (write(thread->notify_send_fd, buf, 1) != 1) {

- perror("Writing to thread notify pipe");

- }

- }
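The round-robin choice in dispatch_conn_new can be isolated into a few lines. The following is a minimal sketch, not memcached's code: the `dispatcher` struct and `pick_worker` function are hypothetical names, and the sketch assumes the previous index starts at -1 so that the first connection goes to worker 0.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the dispatcher state used in the article. */
struct dispatcher {
    int num_threads;  /* number of worker threads */
    int last_thread;  /* index chosen last time; assumed to start at -1 */
};

/* Return the next worker index in round-robin order and remember it. */
int pick_worker(struct dispatcher *d)
{
    assert(d != NULL && d->num_threads > 0);
    int tid = (d->last_thread + 1) % d->num_threads;
    d->last_thread = tid;
    return tid;
}
```

With three workers, successive calls return 0, 1, 2 and then wrap back to 0, so connections are spread evenly regardless of how busy each worker is.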

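Once the item has been pushed onto the child thread's connection queue, the Master also writes the character c into that thread's pipe. Here is how the child thread handles it.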

- // The child thread registers a libevent read event on the read end of the pipe; the callback is thread_libevent_process

- event_set(&me->notify_event, me->notify_receive_fd,

- EV_READ | EV_PERSIST, thread_libevent_process, me);

- static void thread_libevent_process(int fd, short which, void *arg) {

- LIBEVENT_THREAD *me = arg;

- CQ_ITEM *item;

- char buf[1];

- if (read(fd, buf, 1) != 1) // Read one byte from the pipe

- if (settings.verbose > 0)

- fprintf(stderr, "Can't read from libevent pipe\n");

- switch (buf[0]) {

- case 'c': // c means there is a new connection to handle

- item = cq_pop(me->new_conn_queue); // Take the item queued by the Master thread

- if (NULL != item) {

- conn *c = conn_new(item->sfd, item->init_state, item->event_flags,

- item->read_buffer_size, item->transport, me->base); // Create the connection

- if (c == NULL) {

- if (IS_UDP(item->transport)) {

- fprintf(stderr, "Can't listen for events on UDP socket\n");

- exit(1);

- } else {

- if (settings.verbose > 0) {

- fprintf(stderr, "Can't listen for events on fd %d\n",

- item->sfd);

- }

- close(item->sfd);

- }

- } else {

- c->thread = me;

- }

- cqi_free(item);

- }

- break;

- }

- }

- event_set(&c->event, sfd, event_flags, event_handler, (void *) c);

- event_base_set(base, &c->event);

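conn_new registers event_handler on the new connection's descriptor, as the two calls above show, and event_handler's execution eventually enters the business-logic state machine, which is analyzed in later articles.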
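The wakeup mechanism described in this section is a one-byte message over a pipe. The sketch below shows only that mechanism with plain POSIX calls and without libevent; the struct and function names are hypothetical, though the two field names follow the ones used in the article.

```c
#include <assert.h>
#include <unistd.h>

/* Hypothetical pair of pipe ends, named after the fields in the article. */
struct notify_pipe {
    int notify_receive_fd; /* read end, watched by the worker */
    int notify_send_fd;    /* write end, used by the master */
};

/* Create the pipe; returns 0 on success, -1 on failure. */
int notify_pipe_open(struct notify_pipe *p)
{
    int fds[2];
    if (pipe(fds) != 0)
        return -1;
    p->notify_receive_fd = fds[0];
    p->notify_send_fd = fds[1];
    return 0;
}

/* Master side: send a one-byte command; returns 0 on success. */
int notify_send(const struct notify_pipe *p, char cmd)
{
    return write(p->notify_send_fd, &cmd, 1) == 1 ? 0 : -1;
}

/* Worker side: read one command byte; returns it, or -1 on failure. */
int notify_receive(const struct notify_pipe *p)
{
    char buf[1];
    if (read(p->notify_receive_fd, buf, 1) != 1)
        return -1;
    return (unsigned char) buf[0];
}
```

Unlike the callback shown earlier, this sketch returns early when the read fails, so the command byte is never inspected uninitialized. The byte itself carries no payload; the connection details travel through the queue, and the pipe only makes the worker's event loop wake up.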

Memcached Source Code Analysis: The State Machine (Part 1)

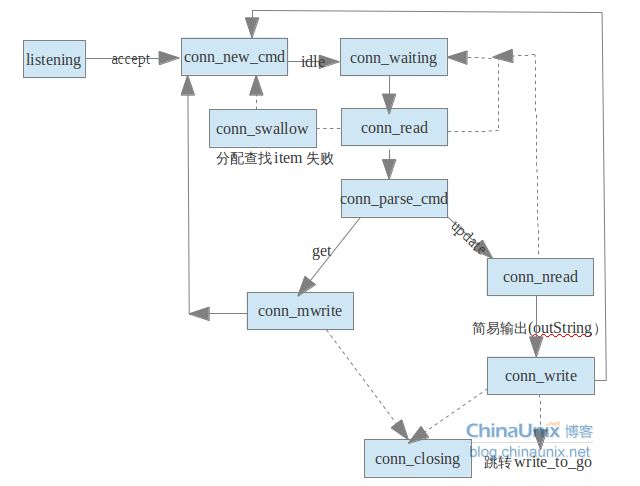

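As described earlier, the Master thread accepts a connection and hands it to a Worker thread, and the Worker thread enters the state machine to handle the business logic, with each state handling one part of it. While analyzing connection dispatch we already saw the Master thread enter the state machine when a new connection arrives; every later state belongs to the business logic. The figure shows the processing flow.

There are 10 states in total (the code defines more, but some are of little use and are omitted here). The conn_listening state is where the Master establishes connections, which has already been covered; the remaining 9 states are analyzed across the following articles.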

- enum conn_states {

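- conn_listening, // Listening for connections

- conn_new_cmd, // Prepare the connection for a new command

- conn_waiting, // Wait for readable data

- conn_read, // Read data from the network

- conn_parse_cmd, // Parse the data in the buffer

- conn_write, // Write a simple reply

- conn_nread, // Read a fixed number of bytes from the network

- conn_swallow, // Discard incoming data that is not needed

- conn_closing, // Close the connection

- conn_mwrite, // Write several items out sequentially

- conn_max_state // Upper bound, used in assertions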

- };

This article starts with the conn_new_cmd and conn_waiting states. conn_new_cmd is the first state a child thread enters, and its main job is cleanup.

- case conn_new_cmd:

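- --nreqs; // Request budget for this libevent callback, set from a startup option

- if (nreqs >= 0) // Budget remains, keep handling requests

- {

- reset_cmd_handler(c); // Reset state for the next command

- }

- else // Budget exhausted: yield so other connections get a turn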

- {

- pthread_mutex_lock(&c->thread->stats.mutex);

- c->thread->stats.conn_yields++; // Update the statistics

- pthread_mutex_unlock(&c->thread->stats.mutex);

- if (c->rbytes > 0) // Buffered data still needs to be processed

- {

- if (!update_event(c, EV_WRITE | EV_PERSIST)) // Switch to a write event so the connection is called back again

- {

- if (settings.verbose > 0)

- fprintf(stderr, "Couldn't update event\n");

- conn_set_state(c, conn_closing); // Close the connection

- }

- }

- stop = true;

- }

- break;

- // Reset state for the next command

- static void reset_cmd_handler(conn *c)

- {

- c->cmd = -1;

- c->substate = bin_no_state;

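- if (c->item != NULL) // An item is still attached

- {

- item_remove(c->item); // Release the item; its implementation is covered in a later article

- c->item = NULL;

- }

- conn_shrink(c); // Shrink oversized buffers

- if (c->rbytes > 0) // Unparsed data remains in the buffer

- {

- conn_set_state(c, conn_parse_cmd); // Go parse it

- }

- else // No data

- {

- conn_set_state(c, conn_waiting); // Nothing to process, so wait for more data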

- }

- }

- // Shrink the buffers

- static void conn_shrink(conn *c)

- {

- assert(c != NULL);

- if (IS_UDP(c->transport)) // UDP connections skip buffer management

- return;

- // Shrink only if the read buffer is larger than READ_BUFFER_HIGHWAT and the unparsed data is smaller than DATA_BUFFER_SIZE

- if (c->rsize > READ_BUFFER_HIGHWAT && c->rbytes < DATA_BUFFER_SIZE)

- {

- char *newbuf;

- if (c->rcurr != c->rbuf)

- memmove(c->rbuf, c->rcurr, (size_t) c->rbytes); // Data starts at rcurr; move it to the front of rbuf

- newbuf = (char *) realloc((void *) c->rbuf, DATA_BUFFER_SIZE); // Shrink the buffer back to DATA_BUFFER_SIZE

- if (newbuf)

- {

- c->rbuf = newbuf; // Point at the resized read buffer

- c->rsize = DATA_BUFFER_SIZE; // Record the new read buffer size

- }

- c->rcurr = c->rbuf;

- }

- if (c->isize > ITEM_LIST_HIGHWAT) // The list of items to send to the client has grown too large

- {

- item **newbuf = (item**) realloc((void *) c->ilist,ITEM_LIST_INITIAL * sizeof(c->ilist[0])); // Shrink the item list back to its initial size

- if (newbuf)

- {

- c->ilist = newbuf; // Point at the resized list

- c->isize = ITEM_LIST_INITIAL; // Record the new capacity

- }

- }

- if (c->msgsize > MSG_LIST_HIGHWAT) // Number of msghdr slots; memcached sends replies in batches with sendmsg

- {

- struct msghdr *newbuf = (struct msghdr *) realloc((void *) c->msglist,MSG_LIST_INITIAL * sizeof(c->msglist[0])); // Shrink the msghdr list back to its initial size

- if (newbuf)

- {

- c->msglist = newbuf; // Point at the resized list

- c->msgsize = MSG_LIST_INITIAL; // Record the new capacity

- }

- }

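- if (c->iovsize > IOV_LIST_HIGHWAT) // Number of iovec slots referenced by the msghdr entries

- {

- struct iovec *newbuf = (struct iovec *) realloc((void *) c->iov,IOV_LIST_INITIAL * sizeof(c->iov[0])); // Shrink the iovec list back to its initial size

- if (newbuf)

- {

- c->iov = newbuf; // Point at the resized list

- c->iovsize = IOV_LIST_INITIAL; // Record the new capacity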

- }

- }

- }
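The read-buffer branch of conn_shrink can be tried in isolation. The sketch below is an approximation under stated assumptions: the `read_buf` struct and `read_buf_shrink` function are hypothetical, and the two `SKETCH_` constants are illustrative values standing in for DATA_BUFFER_SIZE and READ_BUFFER_HIGHWAT.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Illustrative sizes; the real constants are defined by memcached. */
#define SKETCH_INITIAL_SIZE 2048
#define SKETCH_HIGHWAT      8192

struct read_buf {
    char  *rbuf;   /* start of the allocation */
    char  *rcurr;  /* first unparsed byte */
    size_t rsize;  /* allocated size */
    size_t rbytes; /* unparsed bytes starting at rcurr */
};

/* Shrink an oversized buffer back to the initial size when the pending
 * data fits. Returns 1 if the buffer was resized, 0 otherwise. */
int read_buf_shrink(struct read_buf *b)
{
    if (b->rsize <= SKETCH_HIGHWAT || b->rbytes >= SKETCH_INITIAL_SIZE)
        return 0;
    if (b->rcurr != b->rbuf)
        memmove(b->rbuf, b->rcurr, b->rbytes); /* compact to the front */
    b->rcurr = b->rbuf;
    char *newbuf = realloc(b->rbuf, SKETCH_INITIAL_SIZE);
    if (newbuf == NULL)
        return 0; /* keep the old, larger buffer */
    b->rbuf = b->rcurr = newbuf;
    b->rsize = SKETCH_INITIAL_SIZE;
    return 1;
}
```

Compacting before the realloc matters: the unparsed bytes may sit beyond the first SKETCH_INITIAL_SIZE bytes of the allocation, and shrinking first would discard them.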

From conn_new_cmd the connection moves to conn_parse_cmd (if there is data) or conn_waiting (if there is none). Next, the conn_waiting state.

- case conn_waiting:

- if (!update_event(c, EV_READ | EV_PERSIST)) // Switch the libevent registration to a read event

- {

- if (settings.verbose > 0)

- fprintf(stderr, "Couldn't update event\n");

- conn_set_state(c, conn_closing);

- break;

- }

- conn_set_state(c, conn_read); // Move to the read state

- stop = true;

- break;

- // Update the libevent registration: delete the existing event, then register it again with the new flags

- static bool update_event(conn *c, const int new_flags)

- {

- assert(c != NULL);

- struct event_base *base = c->event.ev_base;

- if (c->ev_flags == new_flags)

- return true;

- if (event_del(&c->event) == -1) // Remove the old event

- return false;

- event_set(&c->event, c->sfd, new_flags, event_handler, (void *) c); // Register the new event

- event_base_set(base, &c->event);

- c->ev_flags = new_flags;

- if (event_add(&c->event, 0) == -1)

- return false;

- return true;

- }

Note: image source: http://blog.chinaunix.net/uid-27767798-id-3415510.html

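Memcached Source Code Analysis: The State Machine (Part 2)

As the previous article showed, conn_waiting waits for data to read: it switches the libevent event to a read event and then moves to conn_read, the state that reads data from the network. The conn_read state is analyzed in detail below.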

- case conn_read:

- res = IS_UDP(c->transport) ? try_read_udp(c) : try_read_network(c); // Choose the UDP or TCP read path

- switch (res)

- {

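- case READ_NO_DATA_RECEIVED: // Nothing was read

- conn_set_state(c, conn_waiting); // Keep waiting

- break;

- case READ_DATA_RECEIVED: // Data was read

- conn_set_state(c, conn_parse_cmd); // Start parsing it

- break;

- case READ_ERROR: // The read failed

- conn_set_state(c, conn_closing); // Close the connection

- break;

- case READ_MEMORY_ERROR: // Memory allocation failed; try_read_network has already arranged the error reply and the next state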

- break;

- }

- break;

- // Read data from the network over TCP

- static enum try_read_result try_read_network(conn *c)

- {

- enum try_read_result gotdata = READ_NO_DATA_RECEIVED;

- int res;

- int num_allocs = 0;

- assert(c != NULL);

- // rcurr marks where the unparsed data starts; if it is not at the start of the buffer, move the data there with memmove

- if (c->rcurr != c->rbuf)

- {

- if (c->rbytes != 0)

- memmove(c->rbuf, c->rcurr, c->rbytes);

- c->rcurr = c->rbuf; // rcurr now points to the start of the read buffer

- }

- while (1) // Read in a loop

- {

- if (c->rbytes >= c->rsize) // The read buffer is full

- {

- if (num_allocs == 4)

- {

- return gotdata;

- }

- ++num_allocs;

- char *new_rbuf = realloc(c->rbuf, c->rsize * 2); // Double the buffer

- if (!new_rbuf) // realloc failed: out of memory

- {

- if (settings.verbose > 0)

- fprintf(stderr, "Couldn't realloc input buffer\n");

- c->rbytes = 0; // Discard the data read so far

- out_string(c, "SERVER_ERROR out of memory reading request");

- c->write_and_go = conn_closing; // After the error reply is written, go to conn_closing

- return READ_MEMORY_ERROR; // Report the failure

- }

- c->rcurr = c->rbuf = new_rbuf; // Point the read buffer at the new allocation

- c->rsize *= 2; // The read buffer is now twice as large

- }

- int avail = c->rsize - c->rbytes; // Free space left in the read buffer

- res = read(c->sfd, c->rbuf + c->rbytes, avail); // Non-blocking read from the socket

- if (res > 0) // Some data was read

- {

- pthread_mutex_lock(&c->thread->stats.mutex);

- c->thread->stats.bytes_read += res; // Update the per-thread statistics

- pthread_mutex_unlock(&c->thread->stats.mutex);

- gotdata = READ_DATA_RECEIVED; // Remember that data was received

- c->rbytes += res; // Count the bytes just read

- if (res == avail) // The buffer was filled completely, so more data may be pending; try again

- {

- continue;

- }

- else // Fewer than avail bytes came back, so nothing more is pending; leave the loop.

- {

- break;

- }

- }

- if (res == 0) // The peer has closed the connection

- {

- return READ_ERROR;

- }

- if (res == -1) // The socket is non-blocking, so the two error codes below are expected

- {

- if (errno == EAGAIN || errno == EWOULDBLOCK)

- {

- break;

- }

- return READ_ERROR; // Any other error code is a real failure

- }

- }

- return gotdata;

- }
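The loop in try_read_network combines three ideas: read until the descriptor would block, double the buffer when it fills, and cap the number of doublings per call so one connection cannot monopolize the thread. The following sketch reproduces that shape on a generic descriptor. The names `grow_buf`, `drain_fd`, `set_nonblocking`, and the `DRAIN_` results are hypothetical, and statistics and error replies are left out.

```c
#include <assert.h>
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <unistd.h>

enum drain_result { DRAIN_NO_DATA, DRAIN_GOT_DATA, DRAIN_ERROR, DRAIN_NO_MEMORY };

struct grow_buf {
    char  *data;
    size_t size;  /* allocated bytes */
    size_t used;  /* bytes filled so far */
};

/* Put a descriptor into non-blocking mode; returns 0 on success. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL, 0);
    if (flags < 0)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK) < 0 ? -1 : 0;
}

/* Read what is currently available, doubling the buffer at most 4 times. */
enum drain_result drain_fd(int fd, struct grow_buf *b)
{
    enum drain_result got = DRAIN_NO_DATA;
    int num_allocs = 0;

    for (;;) {
        if (b->used >= b->size) {
            if (num_allocs == 4)
                return got;            /* cap growth per call */
            ++num_allocs;
            char *bigger = realloc(b->data, b->size * 2);
            if (bigger == NULL)
                return DRAIN_NO_MEMORY;
            b->data = bigger;
            b->size *= 2;
        }
        size_t avail = b->size - b->used;
        ssize_t res = read(fd, b->data + b->used, avail);
        if (res > 0) {
            got = DRAIN_GOT_DATA;
            b->used += (size_t) res;
            if ((size_t) res == avail)
                continue;              /* buffer filled: more may be pending */
            break;
        }
        if (res == 0)
            return DRAIN_ERROR;        /* peer closed */
        if (errno == EAGAIN || errno == EWOULDBLOCK)
            break;                     /* nothing more right now */
        return DRAIN_ERROR;
    }
    return got;
}
```

A short read is treated as "drained" to save one extra system call that would only return EAGAIN; the next readiness notification picks up anything that arrives afterwards.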

That covers reading over TCP. UDP reads are simpler: UDP is datagram-based, so each read returns one complete datagram.

- // Read network data over UDP

- static enum try_read_result try_read_udp(conn *c)

- {

- int res;

- assert(c != NULL);

- c->request_addr_size = sizeof(c->request_addr);

- res = recvfrom(c->sfd, c->rbuf, c->rsize, 0, &c->request_addr,

- &c->request_addr_size); // Perform the UDP read

- if (res > 8) // Longer than the 8-byte frame header, so it may carry a request

- {

- unsigned char *buf = (unsigned char *) c->rbuf;

- pthread_mutex_lock(&c->thread->stats.mutex);

- c->thread->stats.bytes_read += res; // Update the per-thread statistics

- pthread_mutex_unlock(&c->thread->stats.mutex);

- /* Beginning of UDP packet is the request ID; save it. */

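- c->request_id = buf[0] * 256 + buf[1]; // Keep the request ID so the reply can carry it back to the client

- /* If this is a multi-packet request, drop it. */

- if (buf[4] != 0 || buf[5] != 1) // Bytes 4-5 hold the datagram count; anything other than 1 is a multi-packet request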

- {

- out_string(c, "SERVER_ERROR multi-packet request not supported");

- return READ_NO_DATA_RECEIVED;

- }

- /* Don't care about any of the rest of the header. */

- res -= 8;

- memmove(c->rbuf, c->rbuf + 8, res); // Drop the header by moving the payload to the front

- c->rbytes = res; // Record the payload length

- c->rcurr = c->rbuf;

- return READ_DATA_RECEIVED;

- }

- return READ_NO_DATA_RECEIVED;

- }
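The byte offsets checked in try_read_udp correspond to the 8-byte frame header that memcached's UDP protocol places in front of each datagram: four 16-bit big-endian fields holding the request ID, the sequence number, the total number of datagrams, and a reserved value. A minimal sketch of decoding it follows; the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define UDP_FRAME_HEADER_SIZE 8

struct udp_frame_header {
    uint16_t request_id; /* bytes 0-1 */
    uint16_t sequence;   /* bytes 2-3 */
    uint16_t total;      /* bytes 4-5: datagrams in this message */
    uint16_t reserved;   /* bytes 6-7 */
};

/* Decode a 16-bit big-endian value. */
static uint16_t read_be16(const unsigned char *p)
{
    return (uint16_t) ((p[0] << 8) | p[1]);
}

/* Returns 0 on success, -1 if the datagram holds no payload after the header. */
int parse_udp_frame_header(const unsigned char *buf, size_t len,
                           struct udp_frame_header *out)
{
    if (len <= UDP_FRAME_HEADER_SIZE)
        return -1;
    out->request_id = read_be16(buf);
    out->sequence   = read_be16(buf + 2);
    out->total      = read_be16(buf + 4);
    out->reserved   = read_be16(buf + 6);
    return 0;
}

/* Equivalent to the buf[4] != 0 || buf[5] != 1 rejection in the article. */
int is_single_datagram(const struct udp_frame_header *h)
{
    return h->total == 1;
}
```

The length check uses `<=` rather than `<` to match the `res > 8` condition above: a datagram that contains only the header has nothing to parse.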

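Memcached Source Code Analysis: The State Machine (Part 3)

As the previous two articles showed, once data has been read from the network the connection enters the conn_parse_cmd state, which parses that data according to the protocol.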

- case conn_parse_cmd:

- // Parse the data

- if (try_read_command(c) == 0)

- {

- // Not enough data yet: keep waiting and parse once enough has arrived

- conn_set_state(c, conn_waiting);

- }

- break;

- // memcached supports both a binary protocol and a text protocol

- static int try_read_command(conn *c)

- {

- assert(c != NULL);

- assert(c->rcurr <= (c->rbuf + c->rsize));

- assert(c->rbytes > 0);

- if (c->protocol == negotiating_prot || c->transport == udp_transport)

- {

- // The binary protocol starts with a marker byte, which is used to tell the two apart

- if ((unsigned char) c->rbuf[0] == (unsigned char) PROTOCOL_BINARY_REQ)

- {

- c->protocol = binary_prot; // Binary protocol

- }

- else

- {

- c->protocol = ascii_prot; // Text protocol

- }

- if (settings.verbose > 1)

- {

- fprintf(stderr, "%d: Client using the %s protocol\n", c->sfd,

- prot_text(c->protocol));

- }

- }

- // Binary protocol

- if (c->protocol == binary_prot)

- {

- // Fewer bytes have arrived than the binary protocol header needs

- if (c->rbytes < sizeof(c->binary_header))

- {

- // Go back and read more data

- return 0;

- }

- else

- {

- #ifdef NEED_ALIGN

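- // When alignment is required, keep the header 8-byte aligned; aligned access is more efficient for the CPU

- if (((long)(c->rcurr)) % 8 != 0)

- {

- // Move the data to the start of the buffer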

- memmove(c->rbuf, c->rcurr, c->rbytes);

- c->rcurr = c->rbuf;

- if (settings.verbose > 1)

- {

- fprintf(stderr, "%d: Realign input buffer\n", c->sfd);

- }

- }

- #endif

- protocol_binary_request_header* req; // Binary protocol header

- req = (protocol_binary_request_header*) c->rcurr;

- // Debug output

- if (settings.verbose > 1)

- {

- /* Dump the packet before we convert it to host order */

- int ii;

- fprintf(stderr, "<%d Read binary protocol data:", c->sfd);

- for (ii = 0; ii < sizeof(req->bytes); ++ii)

- {

- if (ii % 4 == 0)

- {

- fprintf(stderr, "\n<%d ", c->sfd);

- }

- fprintf(stderr, " 0x%02x", req->bytes[ii]);

- }

- fprintf(stderr, "\n");

- }

- c->binary_header = *req;

- c->binary_header.request.keylen = ntohs(req->request.keylen); // Convert the multi-byte header fields to host byte order

- c->binary_header.request.bodylen = ntohl(req->request.bodylen);

- c->binary_header.request.cas = ntohll(req->request.cas);

- // Check that the magic byte is valid, which guards against a corrupted or misaligned stream

- if (c->binary_header.request.magic != PROTOCOL_BINARY_REQ)

- {

- if (settings.verbose)

- {

- fprintf(stderr, "Invalid magic: %x\n",

- c->binary_header.request.magic);

- }

- conn_set_state(c, conn_closing);

- return -1;

- }

- c->msgcurr = 0;

- c->msgused = 0;

- c->iovused = 0;

- if (add_msghdr(c) != 0)

- {

- out_string(c, "SERVER_ERROR out of memory");

- return 0;

- }

- c->cmd = c->binary_header.request.opcode;

- c->keylen = c->binary_header.request.keylen;

- c->opaque = c->binary_header.request.opaque;

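- // Clear the CAS value passed by the client

- c->cas = 0;

- dispatch_bin_command(c); // Handle the command

- c->rbytes -= sizeof(c->binary_header); // Account for the consumed header bytes

- c->rcurr += sizeof(c->binary_header); // Advance the read cursor past the header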

- }

- }
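The header handling above can be summarized as: wait for 24 bytes, convert the multi-byte fields from network byte order, and reject anything whose first byte is not the request magic. The sketch below decodes the same 24-byte layout byte by byte, which avoids both the alignment concern and the ntohs/ntohl/ntohll calls. The struct and function names are hypothetical, and the sketch decodes opaque as a big-endian integer purely for convenience.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define BIN_HEADER_SIZE   24
#define BIN_MAGIC_REQUEST 0x80

struct bin_request_header {
    uint8_t  magic;    /* byte 0 */
    uint8_t  opcode;   /* byte 1 */
    uint16_t keylen;   /* bytes 2-3 */
    uint8_t  extlen;   /* byte 4 */
    uint8_t  datatype; /* byte 5 */
    uint16_t reserved; /* bytes 6-7 */
    uint32_t bodylen;  /* bytes 8-11: extras + key + value */
    uint32_t opaque;   /* bytes 12-15 */
    uint64_t cas;      /* bytes 16-23 */
};

/* Decode an n-byte big-endian unsigned integer (n <= 8). */
static uint64_t read_be(const unsigned char *p, size_t n)
{
    uint64_t v = 0;
    for (size_t i = 0; i < n; i++)
        v = (v << 8) | p[i];
    return v;
}

/* Returns 1 when a header was parsed, 0 when more data is needed,
 * and -1 when the magic byte is not a request magic. */
int parse_bin_header(const unsigned char *buf, size_t len,
                     struct bin_request_header *h)
{
    if (len < BIN_HEADER_SIZE)
        return 0;
    if (buf[0] != BIN_MAGIC_REQUEST)
        return -1;
    h->magic    = buf[0];
    h->opcode   = buf[1];
    h->keylen   = (uint16_t) read_be(buf + 2, 2);
    h->extlen   = buf[4];
    h->datatype = buf[5];
    h->reserved = (uint16_t) read_be(buf + 6, 2);
    h->bodylen  = (uint32_t) read_be(buf + 8, 4);
    h->opaque   = (uint32_t) read_be(buf + 12, 4);
    h->cas      = read_be(buf + 16, 8);
    return 1;
}
```

The three return values map onto the three outcomes in try_read_command: continue processing, go back to waiting for data, or close the connection.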

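The text protocol follows a similar path and is not analyzed here. dispatch_bin_command dispatches the specific operation (get, set, and so on) and is specific to the binary protocol. Once the header has been parsed, the connection enters the conn_nread flow, which reads a specified number of bytes; when that read completes, the concrete operation, such as get, add, or set, is carried out.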

- case bin_read_set_value:

- complete_update_bin(c); // Perform the update operation

- break;

- case bin_reading_get_key:

- process_bin_get(c); // Perform the get operation

- break;

Source: http://blog.csdn.net/lcli2009?viewmode=contents