CentOS 6.5 Zabbix HA

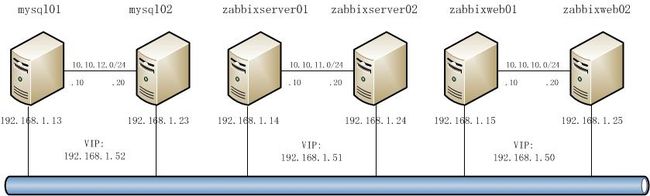

Network Topology:

zabbix mysql server

1. Make sure DNS resolution and NTP time synchronization are set up on both Linux cluster nodes.

vi /etc/sysconfig/network

Set the hostname to the short name, not the FQDN.

Here, I use /etc/hosts to keep name resolution simple.

vi /etc/hosts

# eth0 network for production

192.168.1.13 mysql01

192.168.1.23 mysql02

# for dedicated heartbeat network to avoid problems

# eth1 network

10.10.12.10 mysql01p

10.10.12.20 mysql02p
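As a quick sanity check for step 1, the sketch below reports any cluster name that has no entry in a hosts file. The function name check_hosts is hypothetical (it is not part of any package), and it only reads the file; it does not test DNS or NTP.

```shell
# check_hosts FILE NAME...
# Prints "missing: NAME" for every NAME that has no entry in FILE
# and returns non-zero if any name is missing.
check_hosts() {
    hosts_file=$1
    shift
    status=0
    for name in "$@"; do
        # Skip comment lines; compare NAME against every field after the address.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file"; then
            echo "missing: $name"
            status=1
        fi
    done
    return "$status"
}
```

On each node it could be run as: check_hosts /etc/hosts mysql01 mysql02 mysql01p mysql02p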

2. wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/network:ha-clustering:Stable.repo -O /etc/yum.repos.d/ha.repo

3. on two nodes

yum -y install pacemaker cman crmsh

4. on two nodes

vi /etc/sysconfig/cman

CMAN_QUORUM_TIMEOUT=0

5. on two nodes

vi /etc/cluster/cluster.conf

<?xml version="1.0"?>

<cluster config_version="1" name="mycluster">

<logging debug="off"/>

<clusternodes>

<clusternode name="mysql01p" nodeid="1">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="mysql01p"/>

</method>

</fence>

</clusternode>

<clusternode name="mysql02p" nodeid="2">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="mysql02p"/>

</method>

</fence>

</clusternode>

</clusternodes>

<fencedevices>

<fencedevice name="pcmk" agent="fence_pcmk"/>

</fencedevices>

</cluster>
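The same cluster.conf is reused for all three clusters with only the node names changed, so it can be generated instead of typed. The following is a minimal sketch, assuming the layout shown above; gen_cluster_conf is a hypothetical helper name, not a cman tool.

```shell
# gen_cluster_conf CLUSTER NODE...
# Prints a cluster.conf that redirects fencing to Pacemaker (fence_pcmk)
# for each NODE, numbering the nodes from 1 in the order given.
gen_cluster_conf() {
    cluster_name=$1
    shift
    printf '%s\n' '<?xml version="1.0"?>'
    printf '<cluster config_version="1" name="%s">\n' "$cluster_name"
    printf '%s\n' '<logging debug="off"/>' '<clusternodes>'
    node_id=1
    for node in "$@"; do
        printf '<clusternode name="%s" nodeid="%d">\n' "$node" "$node_id"
        printf '%s\n' '<fence>' '<method name="pcmk-redirect">'
        printf '<device name="pcmk" port="%s"/>\n' "$node"
        printf '%s\n' '</method>' '</fence>' '</clusternode>'
        node_id=$((node_id + 1))
    done
    printf '%s\n' '</clusternodes>' '<fencedevices>'
    printf '%s\n' '<fencedevice name="pcmk" agent="fence_pcmk"/>'
    printf '%s\n' '</fencedevices>' '</cluster>'
}
```

For example: gen_cluster_conf mycluster mysql01p mysql02p > /etc/cluster/cluster.conf, followed by ccs_config_validate as in the next step.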

6. on two nodes

ccs_config_validate

7. on node1 then node2

service cman start

check nodes status with cman_tool nodes

8. on node1 then node2

service pacemaker start

chkconfig cman on

chkconfig pacemaker on

9. for a two-node cluster, run from one node

crm configure property stonith-enabled="false"

crm configure property no-quorum-policy="ignore"

#crm configure rsc_defaults resource-stickiness="100"

10. crm_verify -LV

crm status

11. on two nodes

yum -y install mysql mysql-server mysql-devel

chkconfig mysqld off

12. on two nodes

mkdir -p /new/mysql; chown -R mysql:mysql /new/mysql

vi /etc/my.cnf

[mysqld]

datadir=/new/mysql

socket=/new/mysql/mysql.sock

default-storage-engine=INNODB

[client]

socket=/new/mysql/mysql.sock

13. on two nodes

Install DRBD 8.3

rpm -ivh http://www.elrepo.org/elrepo-release-6-5.el6.elrepo.noarch.rpm

yum -y install drbd83-utils kmod-drbd83

chkconfig drbd off

14. on two nodes

Create a new 100 MB disk (/dev/sdb in the resource below), just for testing purposes.

vi /etc/drbd.d/zabbixdata.res

resource zabbixdata {

device /dev/drbd0;

disk /dev/sdb;

meta-disk internal;

syncer {

verify-alg sha1;

}

# the name after "on" must match the output of uname -n (the short hostname from step 1)

on mysql01 {

address 192.168.1.13:7789;

}

on mysql02 {

address 192.168.1.23:7789;

}

}
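DRBD picks the "on" section whose name equals the node's uname -n, so the names in zabbixdata.res have to agree with the hostnames set in step 1. The sketch below lists the names found in a resource file and checks one against them; drbd_on_hosts and host_in_resource are hypothetical helper names, and the parsing assumes each section opens on a single line of the form "on <host> {".

```shell
# drbd_on_hosts FILE
# Prints the host name of every "on <host> {" section in a DRBD resource file.
drbd_on_hosts() {
    awk '$1 == "on" && $3 == "{" { print $2 }' "$1"
}

# host_in_resource FILE [HOST]
# Succeeds if HOST (default: this node's `uname -n`) has an "on" section.
host_in_resource() {
    drbd_on_hosts "$1" | grep -qxF -- "${2:-$(uname -n)}"
}
```

On each node: host_in_resource /etc/drbd.d/zabbixdata.res || echo "hostname not listed in resource file"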

15. on mysql01

drbdadm create-md zabbixdata

modprobe drbd

drbdadm up zabbixdata

16. on mysql02

drbdadm create-md zabbixdata

modprobe drbd

drbdadm up zabbixdata

17. make mysql01 primary

drbdadm -- --overwrite-data-of-peer primary zabbixdata

cat /proc/drbd

Note: wait until the initial sync has finished (both sides show UpToDate in /proc/drbd) before continuing.
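Waiting for the sync can be scripted rather than watched by hand. This is a rough sketch, assuming the DRBD 8.3 /proc/drbd layout in which each device line carries cs: (connection state) and ds: (disk states) fields; drbd_synced is a hypothetical helper name.

```shell
# drbd_synced [STATUS_FILE]
# Succeeds only if STATUS_FILE (default /proc/drbd) lists at least one device
# and every device is Connected with both disks UpToDate.
drbd_synced() {
    awk '
        /^[[:space:]]*[0-9]+:[[:space:]]+cs:/ {
            devices++
            if ($0 ~ /cs:Connected/ && $0 ~ /ds:UpToDate\/UpToDate/) synced++
        }
        END { exit !(devices > 0 && devices == synced) }
    ' "${1:-/proc/drbd}"
}
```

On mysql01, a loop such as: until drbd_synced; do sleep 10; done could then run before the filesystem is created in the next step.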

18. on mysql01

mkfs.ext4 /dev/drbd0

19. at the crm(live)configure# prompt (enter it with crm configure)

primitive clusterip ocf:heartbeat:IPaddr2 params ip="192.168.1.52" cidr_netmask="24" nic="eth0"

commit

run crm status to check

primitive zabbixdata ocf:linbit:drbd params drbd_resource="zabbixdata"

ms zabbixdataclone zabbixdata meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

primitive zabbixfs ocf:heartbeat:Filesystem params device="/dev/drbd/by-res/zabbixdata" directory="/new/mysql" fstype="ext4"

group myzabbix zabbixfs clusterip

colocation myzabbix_on_drbd inf: myzabbix zabbixdataclone:Master

order myzabbix_after_drbd inf: zabbixdataclone:promote myzabbix:start

op_defaults timeout=240s

commit

20. ensure resource is on mysql01

on mysql01

run mount to confirm that /dev/drbd0 is mounted on /new/mysql

service mysqld start

Create zabbix database and user on MySQL.

mysql -u root

mysql> create database zabbix character set utf8;

mysql> grant all privileges on zabbix.* to zabbix@'%' identified by 'zabbix';

mysql> flush privileges;

mysql> exit

Import initial schema and data.

Copy /usr/share/doc/zabbix-server-mysql-2.2.1/create/* from the Zabbix server to the local machine.

mysql -uroot zabbix < schema.sql

mysql -uroot zabbix < images.sql

mysql -uroot zabbix < data.sql

service mysqld stop

21. at the crm(live)configure# prompt

primitive mysqld lsb:mysqld

edit myzabbix

so that the group reads: group myzabbix zabbixfs clusterip mysqld

commit

22. put mysql01 into standby

crm node standby mysql01p

23. on mysql02, check the mount and connect with mysql -uroot

24. bring mysql01 back online

crm node online mysql01p
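The mount check in the failover test (step 23) can be expressed as a small helper. This is a sketch only: mount_source is a hypothetical name, and it reads the standard /proc/mounts format (device, mount point, type, options).

```shell
# mount_source DIR [MOUNTS_FILE]
# Prints the device mounted on DIR according to MOUNTS_FILE (default
# /proc/mounts); prints nothing and fails if DIR is not a mount point.
mount_source() {
    awk -v dir="$1" '
        $2 == dir { src = $1 }      # keep the last (topmost) mount on DIR
        END { if (src == "") exit 1; print src }
    ' "${2:-/proc/mounts}"
}
```

After putting mysql01 into standby, running mount_source /new/mysql on mysql02 should print the DRBD device, and on mysql01 it should fail.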

zabbix server

1. Make sure DNS resolution and NTP time synchronization are set up on both Linux cluster nodes.

vi /etc/sysconfig/network

Set the hostname to the short name, not the FQDN.

Here, I use /etc/hosts to keep name resolution simple.

vi /etc/hosts

# eth0 network for production

192.168.1.14 zabbixserver01

192.168.1.24 zabbixserver02

# for dedicated heartbeat network to avoid problems

# eth1 network

10.10.11.10 zabbixserver01p

10.10.11.20 zabbixserver02p

2. wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/network:ha-clustering:Stable.repo -O /etc/yum.repos.d/ha.repo

3. on two nodes

yum -y install pacemaker cman crmsh

4. on two nodes

vi /etc/sysconfig/cman

CMAN_QUORUM_TIMEOUT=0

5. on two nodes

vi /etc/cluster/cluster.conf

<?xml version="1.0"?>

<cluster config_version="1" name="mycluster">

<logging debug="off"/>

<clusternodes>

<clusternode name="zabbixserver01p" nodeid="1">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="zabbixserver01p"/>

</method>

</fence>

</clusternode>

<clusternode name="zabbixserver02p" nodeid="2">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="zabbixserver02p"/>

</method>

</fence>

</clusternode>

</clusternodes>

<fencedevices>

<fencedevice name="pcmk" agent="fence_pcmk"/>

</fencedevices>

</cluster>

6. on two nodes

ccs_config_validate

7. on two nodes

service cman start

check nodes status with cman_tool nodes

8. on two nodes

service pacemaker start

chkconfig cman on

chkconfig pacemaker on

9. for a two-node cluster, run from one node

crm configure property stonith-enabled="false"

crm configure property no-quorum-policy="ignore"

#crm configure rsc_defaults resource-stickiness="100"

10. crm_verify -LV

crm status

11. on two nodes

yum -y install zlib-devel glibc-devel libcurl-devel OpenIPMI-devel libssh2-devel net-snmp-devel openldap-devel

rpm -ivh http://mirrors.sohu.com/fedora-epel/6Server/x86_64/epel-release-6-8.noarch.rpm

yum -y install fping iksemel-devel

12. on two nodes

rpm -ivh http://repo.zabbix.com/zabbix/2.2/rhel/6/x86_64/zabbix-release-2.2-1.el6.noarch.rpm

yum -y install zabbix-server-mysql

chkconfig zabbix-server off

vi /etc/zabbix/zabbix_server.conf

# MySQL cluster IP (Zabbix only treats '#' as a comment at the start of a line)

DBHost=192.168.1.52

DBName=zabbix

DBUser=zabbix

DBPassword=zabbix

DBSocket=/new/mysql/mysql.sock

SourceIP=192.168.1.51

ListenIP=192.168.1.51
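Because zabbix_server.conf is edited by hand on both nodes, it can help to print the values the file actually contains and compare them across nodes. The following is a minimal sketch; conf_get is a hypothetical helper, and it simply returns whatever follows the first '=' on the last uncommented line for the key.

```shell
# conf_get FILE KEY
# Prints the value of the last uncommented KEY=value line in FILE,
# exactly as written (nothing after '=' is stripped).
conf_get() {
    awk -v key="$2" '
        /^[[:space:]]*#/ { next }
        {
            eq = index($0, "=")
            if (eq == 0) next
            name = substr($0, 1, eq - 1)
            gsub(/^[[:space:]]+|[[:space:]]+$/, "", name)
            if (name == key) { value = substr($0, eq + 1); found = 1 }
        }
        END { if (!found) exit 1; print value }
    ' "$1"
}
```

For example: conf_get /etc/zabbix/zabbix_server.conf DBHost should print the MySQL cluster IP and nothing else.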

13. at the crm(live)configure# prompt (enter it with crm configure)

primitive clusterip ocf:heartbeat:IPaddr2 params ip="192.168.1.51" cidr_netmask="24" nic="eth0"

commit

run crm status to check

14. ensure resource is on zabbixserver01

on zabbixserver01

service zabbix-server start

check /var/log/zabbix/zabbix_server.log

service zabbix-server stop

15. put zabbixserver01 into standby

crm node standby zabbixserver01p

16. on zabbixserver02

service zabbix-server start

check /var/log/zabbix/zabbix_server.log

service zabbix-server stop

17. bring zabbixserver01 back online

crm node online zabbixserver01p

18. at the crm(live)configure# prompt

primitive myzabbixserver lsb:zabbix-server

group myzabbix clusterip myzabbixserver

commit

19. install the Zabbix agent on both zabbix server nodes

yum -y install zabbix-agent

chkconfig zabbix-agent off

20. for zabbix agent configuration

vi /etc/zabbix/zabbix_agentd.conf

# Server=[zabbix server ip]: used for passive checks (agent listens on port 10050)

# ServerActive=[zabbix server ip]: used for active checks (server listens on port 10051)

# Hostname=[hostname of the client system]

# The Hostname value set on the agent side must exactly match the "Host name" configured for the host in the web frontend

Server=192.168.1.51

ServerActive=192.168.1.51

Hostname=Zabbix server

21. ensure resource is on zabbixserver01

on zabbixserver01

service zabbix-agent start

check /var/log/zabbix/zabbix_agentd.log

service zabbix-agent stop

22. put zabbixserver01 into standby

crm node standby zabbixserver01p

23. on zabbixserver02

service zabbix-agent start

check /var/log/zabbix/zabbix_agentd.log

service zabbix-agent stop

24. bring zabbixserver01 back online

crm node online zabbixserver01p

25. at the crm(live)configure# prompt

primitive zabbixagent lsb:zabbix-agent

edit myzabbix

so that the group reads: group myzabbix clusterip myzabbixserver zabbixagent

commit

zabbix web

1. Make sure DNS resolution and NTP time synchronization are set up on both Linux cluster nodes.

vi /etc/sysconfig/network

Set the hostname to the short name, not the FQDN.

Here, I use /etc/hosts to keep name resolution simple.

vi /etc/hosts

# eth0 network for production

192.168.1.15 zabbixweb01

192.168.1.25 zabbixweb02

# for dedicated heartbeat network to avoid problems

# eth1 network

10.10.10.10 zabbixweb01p

10.10.10.20 zabbixweb02p

2. wget http://download.opensuse.org/repositories/network:/ha-clustering:/Stable/CentOS_CentOS-6/network:ha-clustering:Stable.repo -O /etc/yum.repos.d/ha.repo

3. on two nodes

yum -y install pacemaker cman crmsh

4. on two nodes

vi /etc/sysconfig/cman

CMAN_QUORUM_TIMEOUT=0

5. on two nodes

vi /etc/cluster/cluster.conf

<?xml version="1.0"?>

<cluster config_version="1" name="mycluster">

<logging debug="off"/>

<clusternodes>

<clusternode name="zabbixweb01p" nodeid="1">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="zabbixweb01p"/>

</method>

</fence>

</clusternode>

<clusternode name="zabbixweb02p" nodeid="2">

<fence>

<method name="pcmk-redirect">

<device name="pcmk" port="zabbixweb02p"/>

</method>

</fence>

</clusternode>

</clusternodes>

<fencedevices>

<fencedevice name="pcmk" agent="fence_pcmk"/>

</fencedevices>

</cluster>

6. on two nodes

ccs_config_validate

7. on two nodes

service cman start

check nodes status with cman_tool nodes

8. on two nodes

service pacemaker start

chkconfig cman on

chkconfig pacemaker on

9. for a two-node cluster, run from one node

crm configure property stonith-enabled="false"

crm configure property no-quorum-policy="ignore"

#crm configure rsc_defaults resource-stickiness="100"

10. crm_verify -LV

crm status

11. on two nodes

yum -y install httpd httpd-devel php php-cli php-common php-devel php-pear php-gd php-bcmath php-mbstring php-mysql php-xml wget

chkconfig httpd off

12. on two nodes

rpm -ivh http://repo.zabbix.com/zabbix/2.2/rhel/6/x86_64/zabbix-release-2.2-1.el6.noarch.rpm

yum -y install zabbix-web-mysql

13. on two nodes

The Apache configuration file for the Zabbix frontend is /etc/httpd/conf.d/zabbix.conf. Some PHP settings are already configured there; set date.timezone to your own time zone.

php_value max_execution_time 300

php_value memory_limit 128M

php_value post_max_size 16M

php_value upload_max_filesize 2M

php_value max_input_time 300

php_value date.timezone Asia/Shanghai

14. on two nodes

vi /etc/httpd/conf/httpd.conf

<Location /server-status>

SetHandler server-status

Order deny,allow

Deny from all

Allow from 127.0.0.1 192.168.1.0/24

</Location>

15. on zabbixweb01

service httpd start

open http://192.168.1.15/zabbix in a browser to finish the Zabbix setup; enter 192.168.1.52 as the MySQL database address

service httpd stop

16. on zabbixweb02

service httpd start

open http://192.168.1.25/zabbix in a browser to finish the Zabbix setup; enter 192.168.1.52 as the MySQL database address

service httpd stop

17. at the crm(live)configure# prompt (enter it with crm configure)

op_defaults timeout=240s

primitive clusterip ocf:heartbeat:IPaddr2 params ip="192.168.1.50" cidr_netmask="24" nic="eth0"

commit

run crm status to check

primitive website ocf:heartbeat:apache params configfile="/etc/httpd/conf/httpd.conf"

group myweb clusterip website

commit

Note:

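1. After the cluster has been built on the dedicated heartbeat NIC (eth1), you must give the node name when putting a node into standby or bringing it back online:

crm node standby node_name

crm node online node_name

2. If a machine goes down, reinstall the OS and then follow steps 1-8 to rejoin the cluster.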