Python, dlib, OpenCV: face swapping in action

From: http://matthewearl.github.io/2015/07/28/switching-eds-with-python/

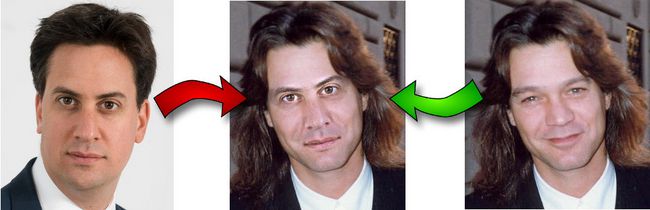

Switching Eds: Face swapping with Python, dlib, and OpenCV


Introduction

In this post I’ll describe how I wrote a short (200 line) Python script to automatically replace facial features on an image of a face with the facial features from a second image of a face.

The process breaks down into four steps:

- Detecting facial landmarks.

- Rotating, scaling, and translating the second image to fit over the first.

- Adjusting the colour balance in the second image to match that of the first.

- Blending features from the second image on top of the first.

The full source code for the script is linked from the original post (URL above).

1. Using dlib to extract facial landmarks

The script uses dlib’s Python bindings to extract facial landmarks:

[Figure: facial landmarks detected by dlib]

Dlib implements the algorithm described in the paper *One Millisecond Face Alignment with an Ensemble of Regression Trees*, by Vahid Kazemi and Josephine Sullivan. The algorithm itself is very complex, but dlib’s interface for using it is incredibly simple:

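```python
import dlib
import numpy

# Path to the pre-trained 68-point landmark model (adjust to your download location).
PREDICTOR_PATH = "/home/matt/dlib-18.16/shape_predictor_68_face_landmarks.dat"

detector = dlib.get_frontal_face_detector()
predictor = dlib.shape_predictor(PREDICTOR_PATH)


class TooManyFaces(Exception):
    pass


class NoFaces(Exception):
    pass


def get_landmarks(im):
    # Detect faces, upsampling the image once to help find smaller faces.
    rects = detector(im, 1)

    if len(rects) > 1:
        raise TooManyFaces
    if len(rects) == 0:
        raise NoFaces

    # Fit the 68 landmarks inside the single detected face rectangle.
    return numpy.matrix([[p.x, p.y] for p in predictor(im, rects[0]).parts()])
```

The function `get_landmarks()` takes an image in the form of a numpy array, and returns a 68x2 element matrix, each row of which corresponds to the x, y coordinates of a particular feature point in the input image.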

The feature extractor (`predictor`) requires a rough bounding box as input to the algorithm. This is provided by a traditional face detector (`detector`), which returns a list of rectangles, each of which corresponds to a face in the image.

The predictor requires a pre-trained model. Such a model can be downloaded from the dlib sourceforge repository.
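For reference, the 68 rows returned by `get_landmarks()` follow the standard 68-point landmark layout that the `shape_predictor_68_face_landmarks.dat` model is trained for. The table below is a sketch of that layout as 0-based row indices; the names `LANDMARK_GROUPS` and `group_of` are hypothetical, and "left"/"right" follow the same convention as the `LEFT_EYE_POINTS`/`RIGHT_EYE_POINTS` constants used later in this post.

```python
# Hypothetical lookup table for the standard 68-point landmark layout.
# Values are 0-based row indices into the 68x2 landmark matrix.
LANDMARK_GROUPS = {
    "jaw": list(range(0, 17)),
    "right_brow": list(range(17, 22)),
    "left_brow": list(range(22, 27)),
    "nose": list(range(27, 36)),
    "right_eye": list(range(36, 42)),
    "left_eye": list(range(42, 48)),
    "mouth": list(range(48, 68)),
}


def group_of(index):
    """Return the name of the feature group that a landmark index belongs to."""
    for name, indices in LANDMARK_GROUPS.items():
        if index in indices:
            return name
    raise ValueError("landmark index out of range: %d" % index)


print(group_of(30))
```

With this layout, row 30 falls in the nose group (it is the nose tip). The constants used later in this post select subsets of these groups; `MOUTH_POINTS`, for example, stops at index 60.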

2. Aligning faces with a Procrustes analysis

So at this point we have our two landmark matrices, each row having the coordinates of a particular facial feature (e.g. row 30, counting from zero, gives the coordinates of the tip of the nose). We’re now going to work out how to rotate, translate, and scale the points of the first matrix such that they fit as closely as possible to the points in the second matrix, the idea being that the same transformation can be used to overlay the second image over the first.

To put it more mathematically, we seek $T$, $s$, and $R$ such that:

$$\sum_{i=1}^{68} \left\lVert s R p_i^T + T - q_i^T \right\rVert^2$$

is minimized, where $R$ is an orthogonal 2x2 matrix, $s$ is a scalar, $T$ is a 2-vector, and $p_i$ and $q_i$ are the rows of the landmark matrices calculated above.
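As a worked summary in the same notation, the closed-form procedure used in the code that follows can be written out as below. Here $c_1, c_2$ are the centroids and $\sigma_1, \sigma_2$ the standard deviations of the two landmark sets (`c1`, `c2`, `s1`, `s2` in the code), and $\hat p_i = (p_i - c_1)/\sigma_1$, $\hat q_i = (q_i - c_2)/\sigma_2$ are the centred, normalised rows:

$$
\sum_{i=1}^{68} \hat p_i^T \hat q_i = U S V^T, \qquad
R = V U^T, \qquad
s = \frac{\sigma_2}{\sigma_1}, \qquad
T = c_2^T - s R\, c_1^T,
$$

packed into a single affine matrix

$$
M = \begin{pmatrix} sR & T \\ \mathbf{0}^T & 1 \end{pmatrix}.
$$

Note that $R = VU^T$ can come out as a reflection ($\det R = -1$) rather than a rotation, and that $s = \sigma_2/\sigma_1$ equals the least-squares optimal scale only when the landmarks are related by an exact similarity transform; with noisy landmarks it somewhat overestimates that optimum.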

It turns out that this sort of problem can be solved with an Ordinary Procrustes Analysis:

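```python
import numpy


def transformation_from_points(points1, points2):
    # Inputs are numpy.matrix objects (as returned by get_landmarks),
    # so * below is matrix multiplication.
    # Convert to floats; the in-place operations below need a float dtype.
    points1 = points1.astype(numpy.float64)
    points2 = points2.astype(numpy.float64)

    # Centroids of each landmark set (1x2 matrices).
    c1 = numpy.mean(points1, axis=0)
    c2 = numpy.mean(points2, axis=0)
    points1 -= c1
    points2 -= c2

    # Normalise each set to unit standard deviation to remove scale.
    s1 = numpy.std(points1)
    s2 = numpy.std(points2)
    points1 /= s1
    points2 /= s2

    # Rotation from the SVD of the 2x2 matrix points1^T * points2.
    U, S, Vt = numpy.linalg.svd(points1.T * points2)
    R = (U * Vt).T

    # 3x3 affine matrix: [sR | c2^T - sR c1^T] on top of [0 0 1].
    return numpy.vstack([numpy.hstack(((s2 / s1) * R,
                                       c2.T - (s2 / s1) * R * c1.T)),
                         numpy.matrix([0., 0., 1.])])
```

Stepping through the code: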

- Convert the input matrices into floats. This is required for the operations that are to follow.

- Subtract the centroid from each of the point sets. Once an optimal scaling and rotation has been found for the resulting point sets, the centroids `c1` and `c2` can be used to find the full solution.

- Similarly, divide each point set by its standard deviation. This removes the scaling component of the problem.

- Calculate the rotation portion using the Singular Value Decomposition. See the Wikipedia article on the Orthogonal Procrustes Problem for details of how this works.

- Return the complete transformation as an affine transformation matrix.
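`transformation_from_points` depends on `numpy.matrix` semantics (`*` is matrix multiplication), and NumPy now recommends plain arrays instead. Below is a sketch of the same steps written for ordinary arrays with the `@` operator; the name `transformation_from_points_arr` is hypothetical. It ends with a sanity check on synthetic data: apply a known rotation, scale and translation to random points and see whether the function recovers them.

```python
import numpy as np


def transformation_from_points_arr(points1, points2):
    """Sketch: 3x3 affine matrix M such that M @ [x, y, 1] maps rows of
    points1 approximately onto the matching rows of points2."""
    points1 = np.asarray(points1, dtype=np.float64)
    points2 = np.asarray(points2, dtype=np.float64)

    # Remove translation: centre each set on its centroid.
    c1 = points1.mean(axis=0)
    c2 = points2.mean(axis=0)
    p1 = points1 - c1
    p2 = points2 - c2

    # Remove scale: normalise each set to unit standard deviation.
    s1 = p1.std()
    s2 = p2.std()
    p1 /= s1
    p2 /= s2

    # Rotation from the SVD of the 2x2 cross-covariance matrix.
    U, _, Vt = np.linalg.svd(p1.T @ p2)
    R = (U @ Vt).T

    # Assemble [sR | c2 - sR c1] with a homogeneous bottom row.
    sR = (s2 / s1) * R
    M = np.eye(3)
    M[:2, :2] = sR
    M[:2, 2] = c2 - sR @ c1
    return M


# Synthetic landmarks: rotate by 30 degrees, scale by 1.5, translate by (10, -5).
rng = np.random.default_rng(0)
pts = rng.uniform(0, 100, size=(68, 2))
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta), np.cos(theta)]])
moved = 1.5 * pts @ R_true.T + np.array([10.0, -5.0])

M = transformation_from_points_arr(pts, moved)
print(np.round(M, 6))
```

On exact, noise-free data like this, `M[:2, :2]` matches `1.5 * R_true` and `M[:2, 2]` matches `(10, -5)` up to floating-point error; real landmarks only fit approximately.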

The result can then be plugged into OpenCV’s `cv2.warpAffine` function to map the second image onto the first:

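```python
import cv2
import numpy


def warp_im(im, M, dshape):
    # Start from an all-black image with the destination shape.
    output_im = numpy.zeros(dshape, dtype=im.dtype)
    # WARP_INVERSE_MAP: M maps destination coordinates (image 1) to source
    # coordinates (image 2). BORDER_TRANSPARENT leaves destination pixels
    # that map outside the source untouched, i.e. black.
    cv2.warpAffine(im,
                   M[:2],
                   (dshape[1], dshape[0]),  # dsize is (width, height)
                   dst=output_im,
                   borderMode=cv2.BORDER_TRANSPARENT,
                   flags=cv2.WARP_INVERSE_MAP)
    return output_im
```

Which produces the following alignment: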

[Figure: the second image aligned over the first]
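With `cv2.WARP_INVERSE_MAP`, OpenCV treats `M` as the mapping from destination pixels to source pixels: each output pixel (x, y) takes the value of the source image at `M @ [x, y, 1]`. The sketch below spells that out in plain NumPy. It is an illustration only: the name `warp_inverse_nearest` is hypothetical, it uses nearest-neighbour sampling (OpenCV’s default is bilinear interpolation), and it leaves out-of-range pixels at zero, which mirrors the zero-filled `output_im` combined with `BORDER_TRANSPARENT` in `warp_im`.

```python
import numpy as np


def warp_inverse_nearest(src, M, dshape):
    """Illustrative inverse-map warp: output pixel (x, y) takes the value of
    src at M @ [x, y, 1], rounded to the nearest pixel. Output pixels whose
    source position falls outside src stay zero."""
    M = np.asarray(M, dtype=np.float64)
    out = np.zeros(dshape, dtype=src.dtype)
    h, w = dshape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    xs = xs.ravel()
    ys = ys.ravel()

    # Map every destination pixel into source coordinates.
    dst_pts = np.stack([xs, ys, np.ones_like(xs)]).astype(np.float64)
    src_x, src_y = M[:2] @ dst_pts
    sx = np.rint(src_x).astype(int)
    sy = np.rint(src_y).astype(int)

    # Copy only the pixels whose source location lies inside src.
    valid = (sx >= 0) & (sx < src.shape[1]) & (sy >= 0) & (sy < src.shape[0])
    out[ys[valid], xs[valid]] = src[sy[valid], sx[valid]]
    return out


# M samples one pixel to the right, so the content moves one pixel left
# and the last column has no source pixel.
src = np.arange(12).reshape(3, 4)
M_shift = np.array([[1.0, 0.0, 1.0],
                    [0.0, 1.0, 0.0],
                    [0.0, 0.0, 1.0]])
shifted = warp_inverse_nearest(src, M_shift, src.shape)
print(shifted)
```

This is why the transformation is computed from image 1’s landmarks to image 2’s: used as an inverse map, it pulls image 2’s pixels into image 1’s frame.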

3. Colour correcting the second image

If we tried to overlay facial features at this point, we’d soon see we have a problem:

[Figure: features overlaid without colour correction]

The issue is that differences in skin tone and lighting between the two images are causing a discontinuity around the edges of the overlaid region. Let’s try to correct that:

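```python
import cv2
import numpy

COLOUR_CORRECT_BLUR_FRAC = 0.6
LEFT_EYE_POINTS = list(range(42, 48))
RIGHT_EYE_POINTS = list(range(36, 42))


def correct_colours(im1, im2, landmarks1):
    # Kernel size: a fraction of the distance between the two eye centres.
    blur_amount = COLOUR_CORRECT_BLUR_FRAC * numpy.linalg.norm(
        numpy.mean(landmarks1[LEFT_EYE_POINTS], axis=0) -
        numpy.mean(landmarks1[RIGHT_EYE_POINTS], axis=0))
    blur_amount = int(blur_amount)
    # cv2.GaussianBlur requires an odd kernel size.
    if blur_amount % 2 == 0:
        blur_amount += 1

    # Convert the blurs to float so the in-place update and the division
    # below are done in floating point, whatever the input dtype.
    ksize = (blur_amount, blur_amount)
    im1_blur = cv2.GaussianBlur(im1, ksize, 0).astype(numpy.float64)
    im2_blur = cv2.GaussianBlur(im2, ksize, 0).astype(numpy.float64)

    # Avoid divide-by-zero errors.
    im2_blur += 128 * (im2_blur <= 1.0)

    # Scale each pixel of im2 by the local ratio blur(im1) / blur(im2).
    return im2.astype(numpy.float64) * im1_blur / im2_blur
```

And the result: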

[Figure: the second image after colour correction]

This function attempts to change the colouring of `im2` to match that of `im1`. It does this by dividing `im2` by a Gaussian blur of `im2`, and then multiplying by a Gaussian blur of `im1`. The idea here is that of an RGB scaling colour correction, but instead of a constant scale factor across all of the image, each pixel has its own localised scale factor.
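For comparison, the constant-scale-factor version that this method generalises can be sketched in a few lines: each colour channel of `im2` is multiplied by the ratio of the channel means. The helper `correct_colours_global` is hypothetical and only meant as a contrast with the per-pixel factors used by `correct_colours`.

```python
import numpy as np


def correct_colours_global(im1, im2):
    """Hypothetical baseline: scale each colour channel of im2 by one
    constant so that its mean matches the same channel of im1."""
    im1 = im1.astype(np.float64)
    im2 = im2.astype(np.float64)
    # One factor per channel, computed over the whole image.
    means1 = im1.mean(axis=(0, 1))
    means2 = np.maximum(im2.mean(axis=(0, 1)), 1e-6)  # avoid dividing by zero
    return im2 * (means1 / means2)


# A uniformly darker copy of an image is restored exactly.
im1 = np.full((4, 4, 3), [200.0, 100.0, 50.0])
im2 = im1 * 0.5
restored = correct_colours_global(im1, im2)
print(restored[0, 0])
```

Because every pixel shares the same three factors, this baseline cannot follow lighting that varies across the face; the blurred ratio in `correct_colours` lets the factor vary from pixel to pixel.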

With this approach, differences in lighting between the two images can be accounted for, to some degree. For example, if image 1 is lit from one side but image 2 has uniform lighting, then the colour-corrected image 2 will appear darker on the unlit side as well.

That said, this is a fairly crude solution to the problem, and an appropriately sized Gaussian kernel is key. Too small, and facial features from the first image will show up in the second. Too large, and the kernel strays outside the face area for the pixels being overlaid, and discolouration occurs. Here a kernel of 0.6 times the pupillary distance is used.
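To make the kernel-size rule concrete, here is the same arithmetic as the start of `correct_colours`, pulled out into a hypothetical helper (`colour_blur_kernel_size`) that takes the two groups of eye landmarks as plain arrays. The distance between the eye centres stands in for the pupillary distance.

```python
import numpy as np

COLOUR_CORRECT_BLUR_FRAC = 0.6


def colour_blur_kernel_size(left_eye, right_eye, frac=COLOUR_CORRECT_BLUR_FRAC):
    """Return an odd Gaussian kernel size of roughly frac times the
    distance between the centres of the two eye landmark groups."""
    left_centre = np.mean(np.asarray(left_eye, dtype=np.float64), axis=0)
    right_centre = np.mean(np.asarray(right_eye, dtype=np.float64), axis=0)
    size = int(frac * np.linalg.norm(left_centre - right_centre))
    # cv2.GaussianBlur needs an odd kernel size, so bump even sizes up by one.
    if size % 2 == 0:
        size += 1
    return size


# Eye centres at (205, 100) and (100, 100): 105 pixels apart.
left = [[200.0, 100.0], [210.0, 100.0]]
right = [[100.0, 100.0], [100.0, 100.0]]
print(colour_blur_kernel_size(left, right))
```

For eye centres 105 pixels apart, 0.6 × 105 = 63, which is already odd, so a 63x63 kernel is used. A face twice as large in the frame gets a kernel roughly twice as wide, which keeps the blur proportional to the face.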

4. Blending features from the second image onto the first

A mask is used to select which parts of image 2 and which parts of image 1 should be shown in the final image:

Regions with value 1 (shown white here) correspond to areas where image 2 should show, and regions with value 0 (shown black here) correspond to areas where image 1 should show. Values between 0 and 1 correspond to a mixture of image 1 and image 2.

Here’s the code to generate the above:

```python
import cv2
import numpy

LEFT_EYE_POINTS = list(range(42, 48))
RIGHT_EYE_POINTS = list(range(36, 42))
LEFT_BROW_POINTS = list(range(22, 27))
RIGHT_BROW_POINTS = list(range(17, 22))
NOSE_POINTS = list(range(27, 35))
MOUTH_POINTS = list(range(48, 61))

# Each group is filled as one convex polygon: eyes and brows, then nose and mouth.
OVERLAY_POINTS = [
    LEFT_EYE_POINTS + RIGHT_EYE_POINTS + LEFT_BROW_POINTS + RIGHT_BROW_POINTS,
    NOSE_POINTS + MOUTH_POINTS,
]

# Gaussian kernel size (pixels) used to feather the mask edges.
FEATHER_AMOUNT = 11


def draw_convex_hull(im, points, color):
    points = cv2.convexHull(points)
    cv2.fillConvexPoly(im, points, color=color)


def get_face_mask(im, landmarks):
    im = numpy.zeros(im.shape[:2], dtype=numpy.float64)

    for group in OVERLAY_POINTS:
        draw_convex_hull(im,
                         landmarks[group],
                         color=1)

    # Stack into three identical channels so the mask matches a colour image.
    im = numpy.array([im, im, im]).transpose((1, 2, 0))

    # Grow the mask outwards (any pixel reached by the blur becomes 1),
    # then blur again to give it a soft edge.
    im = (cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0) > 0) * 1.0
    im = cv2.GaussianBlur(im, (FEATHER_AMOUNT, FEATHER_AMOUNT), 0)

    return im


mask = get_face_mask(im2, landmarks2)
warped_mask = warp_im(mask, M, im1.shape)
combined_mask = numpy.max([get_face_mask(im1, landmarks1), warped_mask],
                          axis=0)
```

Let’s break this down:

- A routine `get_face_mask()` is defined to generate a mask for an image and a landmark matrix. It draws two convex polygons in white: one surrounding the eyes and eyebrows, and one surrounding the nose and mouth. It then feathers the edge of the mask outwards using an 11x11 Gaussian kernel (`FEATHER_AMOUNT`). The feathering helps hide any remaining discontinuities.

- Such a face mask is generated for both images. The mask for the second is transformed into image 1’s coordinate space, using the same transformation as in step 2.

- The masks are then combined into one by taking an element-wise maximum. Combining both masks ensures that the features from image 1 are covered up, and that the features from image 2 show through.
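A toy illustration of the element-wise maximum, using small hand-made one-row masks (the values are made up): wherever either mask is 1 the combined mask is 1, so image 1’s own features stay covered even where the warped mask from image 2 does not quite reach them.

```python
import numpy as np

# Made-up one-row masks: image 1's own mask and the warped mask from image 2.
# 1 means "show image 2", 0 means "show image 1".
mask1 = np.array([[0.0, 1.0, 1.0, 0.2, 0.0]])
warped_mask2 = np.array([[0.0, 0.0, 0.6, 1.0, 0.5]])

# Element-wise maximum: each pixel takes the larger of the two values,
# so anything covered by either mask is covered in the result.
combined_mask = np.max([mask1, warped_mask2], axis=0)
print(combined_mask)
```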

Finally, the mask is applied to give the final image:

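```python
# warped_corrected_im2 is image 2 after alignment and colour correction.
output_im = im1 * (1.0 - combined_mask) + warped_corrected_im2 * combined_mask
```

[Figure: the final face-swapped image]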
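The final expression is a per-pixel linear blend. A toy version with made-up constant images shows how mask values of 1, 0.5 and 0 select image 2, an even mixture, and image 1 respectively; `blend` is a hypothetical helper wrapping the same formula, and the 8-bit conversion at the end is an optional extra, since the blended result is floating point.

```python
import numpy as np


def blend(im1, im2, mask):
    """Hypothetical helper for the final step: mask value 0 keeps im1,
    1 takes im2, and values in between mix the two linearly."""
    return im1.astype(np.float64) * (1.0 - mask) + im2.astype(np.float64) * mask


im1 = np.full((2, 2, 3), 200, dtype=np.uint8)
im2 = np.full((2, 2, 3), 100, dtype=np.uint8)
mask = np.zeros((2, 2, 3))
mask[0, 0] = 1.0   # show image 2 only
mask[0, 1] = 0.5   # equal mixture of both images
out = blend(im1, im2, mask)

# The result is floating point; round and clip if an 8-bit image is wanted.
out_8bit = np.clip(np.rint(out), 0, 255).astype(np.uint8)
print(out_8bit[:, :, 0])
```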

Credits

Original Ed Miliband image by the Department of Energy, licensed under the Open Government License v1.0.

Original Eddie Van Halen image by Alan Light, licensed under the Creative Commons Attribution 2.0 Generic license.