Flume: Common Errors


1 Closing file failed. Will retry again in 120 seconds.

1.1 Symptoms and fix

The detailed error log is as follows:

    09 Aug 2019 17:00:31,787 WARN [SinkRunner-PollingRunner-DefaultSinkProcessor] (org.apache.flume.sink.hdfs.BucketWriter$CloseHandler.close:348) - Closing file: hdfs://xxx/20190813/.aaa.lzo.tmp failed. Will retry again in 120 seconds.
    java.io.IOException: Callable timed out after 300000 ms on file: hdfs://xxx/20190813/.aaa.lzo.tmp
        at org.apache.flume.sink.hdfs.BucketWriter.callWithTimeout(BucketWriter.java:741)
        at org.apache.flume.sink.hdfs.BucketWriter.access$1000(BucketWriter.java:60)
        at org.apache.flume.sink.hdfs.BucketWriter$CloseHandler.close(BucketWriter.java:345)
        at org.apache.flume.sink.hdfs.BucketWriter.doClose(BucketWriter.java:440)
        at org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:426)
        at org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:410)
        at org.apache.flume.sink.hdfs.BucketWriter.close(BucketWriter.java:312)
        at org.apache.flume.sink.hdfs.BucketWriter.append(BucketWriter.java:597)
        at org.apache.flume.sink.hdfs.HDFSEventSink.process(HDFSEventSink.java:412)
        at org.apache.flume.sink.DefaultSinkProcessor.process(DefaultSinkProcessor.java:67)
        at org.apache.flume.SinkRunner$PollingRunner.run(SinkRunner.java:145)
        at java.lang.Thread.run(Thread.java:748)
    Caused by: java.util.concurrent.TimeoutException
        at java.util.concurrent.FutureTask.get(FutureTask.java:205)
        at org.apache.flume.sink.hdfs.BucketWriter.callWithTimeout(BucketWriter.java:734)
        ... 11 more
    09 Aug 2019 17:00:31,787 WARN [DataStreamer for file /xxx/20190813/.aaa.lzo.tmp block BP-3264412962-192.168.1.1-1476857514193:blk_1770743824_711658976] (org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder:869) - Caught exception
    java.lang.InterruptedException
        at java.lang.Object.wait(Native Method)
        at java.lang.Thread.join(Thread.java:1252)
        at java.lang.Thread.join(Thread.java:1326)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeResponder(DFSOutputStream.java:867)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.closeInternal(DFSOutputStream.java:835)
        at org.apache.hadoop.hdfs.DFSOutputStream$DataStreamer.run(DFSOutputStream.java:831)
    09 Aug 2019 17:00:31,792 INFO [hdfs-sink-2-call-runner-2] (org.apache.flume.sink.hdfs.BucketWriter$7.call:681) - Renaming hdfs://xxx/20190813/.aaa.lzo.tmp to hdfs://xxx/20190813/aaa.lzo
    09 Aug 2019 17:04:31,789 ERROR [hdfs-sink-2-call-runner-6] (org.apache.flume.sink.hdfs.AbstractHDFSWriter.hflushOrSync:269) - Error while trying to hflushOrSync!
    09 Aug 2019 17:04:31,789 WARN [hdfs-sink-2-call-runner-6] (org.apache.hadoop.security.UserGroupInformation.doAs:1696) - PriviledgedActionException as:tom (auth:PROXY) via tom (auth:SIMPLE) cause:java.nio.channels.ClosedChannelException
    09 Aug 2019 17:02:31,788 WARN [hdfs-sink-2-roll-timer-0] (org.apache.flume.sink.hdfs.BucketWriter$CloseHandler.close:348) - Closing file: hdfs://xxx/20190813/.aaa.lzo.tmp failed. Will retry again in 120 seconds.
    java.nio.channels.ClosedChannelException
        at org.apache.hadoop.hdfs.DFSOutputStream.checkClosed(DFSOutputStream.java:1866)
        at org.apache.hadoop.hdfs.DFSOutputStream.flushOrSync(DFSOutputStream.java:2277)
        at org.apache.hadoop.hdfs.DFSOutputStream.hflush(DFSOutputStream.java:2222)
        at org.apache.hadoop.fs.FSDataOutputStream.hflush(FSDataOutputStream.java:130)
        at sun.reflect.GeneratedMethodAccessor8.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.flume.sink.hdfs.AbstractHDFSWriter.hflushOrSync(AbstractHDFSWriter.java:266)
        at org.apache.flume.sink.hdfs.HDFSCompressedDataStream.close(HDFSCompressedDataStream.java:153)
        at org.apache.flume.sink.hdfs.BucketWriter$3.call(BucketWriter.java:319)
        at org.apache.flume.sink.hdfs.BucketWriter$3.call(BucketWriter.java:316)
        at org.apache.flume.sink.hdfs.BucketWriter$8$1.run(BucketWriter.java:727)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:422)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)
        at org.apache.flume.auth.UGIExecutor.execute(UGIExecutor.java:46)
        at org.apache.flume.sink.hdfs.BucketWriter$8.call(BucketWriter.java:724)
        at java.util.concurrent.FutureTask.run(FutureTask.java:266)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
        at java.lang.Thread.run(Thread.java:748)
    09 Aug 2019 17:04:31,789 ERROR [hdfs-sink-2-call-runner-6] (org.apache.flume.sink.hdfs.AbstractHDFSWriter.hflushOrSync:269) - Error while trying to hflushOrSync!
    09 Aug 2019 17:04:31,789 WARN [hdfs-sink-2-call-runner-6] (org.apache.hadoop.security.UserGroupInformation.doAs:1696) - PriviledgedActionException as:tom (auth:PROXY) via tom (auth:SIMPLE) cause:java.nio.channels.ClosedChannelException
    09 Aug 2019 17:02:31,788 WARN [hdfs-sink-2-roll-timer-0] (org.apache.flume.sink.hdfs.BucketWriter$CloseHandler.close:348) - Closing file: hdfs://xxx/20190813/.aaa.lzo.tmp failed. Will retry again in 120 seconds.
    java.nio.channels.ClosedChannelException

    # The errors above then repeat indefinitely

We noticed that data was missing from Hive, and flume.log contained the errors above.

We then checked the state of the HDFS files with the following command:

    hdfs fsck /xxx/20190813 -openforwrite | grep OPENFORWRITE | awk -F ' ' '{print $1}'

The output looked roughly like this:

    ............/xxx/20190813/aaa.lzo 2091 bytes, 1 block(s), OPENFORWRITE: .......................................................................................
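The `grep | awk` pipeline above keeps only the first whitespace-separated field of each OPENFORWRITE line. As the sample output shows, that field still carries the progress dots fsck prints before the path. To get the same list from Java, for example inside a maintenance job, the following minimal sketch works on captured fsck output. The class name `OpenForWriteParser` is hypothetical. It also assumes that each open file is reported on a line containing `OPENFORWRITE`, with the path (plus leading dots) as the first field, as in the sample above.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: extracts OPENFORWRITE paths from captured `hdfs fsck -openforwrite` output. */
final class OpenForWriteParser {

    /** Mirrors `grep OPENFORWRITE | awk '{print $1}'`, then strips fsck's progress dots. */
    static List<String> parseOpenFiles(String fsckOutput) {
        List<String> paths = new ArrayList<>();
        for (String line : fsckOutput.split("\\R")) {
            if (!line.contains("OPENFORWRITE")) {
                continue; // same filter as grep OPENFORWRITE
            }
            String first = line.trim().split("\\s+")[0]; // same as awk '{print $1}'
            int slash = first.indexOf('/');
            if (slash < 0) {
                continue; // no path in the first field
            }
            // Drop everything before the first '/' (fsck's dots); dot-files like .aaa.lzo.tmp keep their name.
            paths.add(first.substring(slash));
        }
        return paths;
    }

    public static void main(String[] args) {
        String sample = "............/xxx/20190813/aaa.lzo 2091 bytes, 1 block(s), OPENFORWRITE: ......\n"
                + "Status: HEALTHY\n";
        System.out.println(parseOpenFiles(sample)); // [/xxx/20190813/aaa.lzo]
    }
}
```

Only the dots before the first `/` are removed, so in-use files whose names start with a dot (such as `.aaa.lzo.tmp`) are reported with their real names.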

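In other words, .aaa.lzo.tmp had been successfully renamed to aaa.lzo, but the file was still in the OPENFORWRITE state: it had never been closed properly. We closed it manually by recovering its lease: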

    hdfs debug recoverLease -path /xxx/20190813/aaa.lzo

After that, the file was no longer in the OPENFORWRITE state, and rerunning the Hive query showed that the missing data was back.

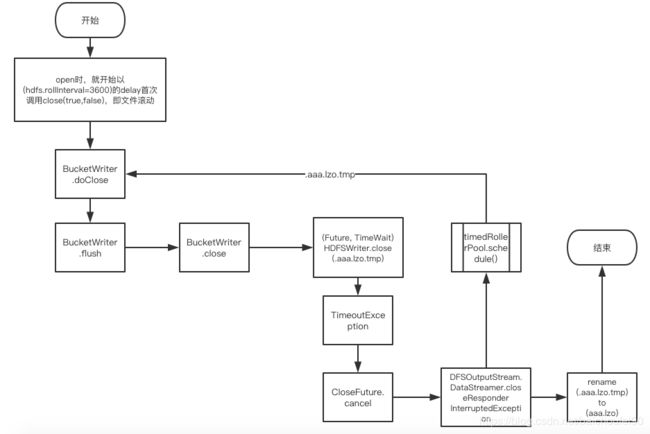

1.2 Root-cause analysis

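The figure above shows the exception flow when the Flume HDFSSink rolls a file on rollInterval. When HDFSWriter.close(.aaa.lzo.tmp) hits a TimeoutException, the pending call is cancelled, which causes the InterruptedException, and a close retry is handed to a thread pool. The rename step, however, still runs. The close retry is put into the thread pool with submit() and executes asynchronously, so nothing waits for it to succeed before renaming.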

In other words, the file has already been renamed from .aaa.lzo.tmp to aaa.lzo, yet Flume keeps retrying the close under the old name .aaa.lzo.tmp.
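The timeout half of this flow can be reproduced with nothing but `java.util.concurrent`. The sketch below is an approximation of the pattern visible in the stack trace (`BucketWriter.callWithTimeout` → `FutureTask.get` → `TimeoutException`), not Flume's actual code. The caller waits with `Future.get(timeout)`, cancels the hung close with `cancel(true)` (which interrupts the worker thread), and schedules a retry. It then carries on to the rename without waiting for that retry. The class name, the tiny timeouts and the event strings are hypothetical.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical demo: timeout -> cancel(true) -> async retry, while the caller renames anyway. */
final class CallWithTimeoutDemo {

    static List<String> run() throws Exception {
        List<String> events = new CopyOnWriteArrayList<>();
        CountDownLatch started = new CountDownLatch(1);
        CountDownLatch interrupted = new CountDownLatch(1);
        ExecutorService callRunner = Executors.newSingleThreadExecutor();
        ScheduledExecutorService retryPool = Executors.newSingleThreadScheduledExecutor();
        String tmpName = ".aaa.lzo.tmp";

        // Stand-in for an HDFS close() that hangs far longer than the call timeout.
        Future<Void> close = callRunner.submit(() -> {
            started.countDown();
            try {
                Thread.sleep(10_000);
                events.add("closed " + tmpName);
            } catch (InterruptedException e) {
                events.add("close interrupted"); // analogous to the InterruptedException in the log
                interrupted.countDown();
            }
            return null;
        });
        started.await();

        try {
            close.get(100, TimeUnit.MILLISECONDS); // plays the role of hdfs.callTimeout
        } catch (TimeoutException e) {
            events.add("timeout");
            close.cancel(true); // interrupts the worker thread
            // The retry is only scheduled; nothing below waits for it.
            retryPool.schedule(() -> {
                events.add("retry close " + tmpName);
            }, 200, TimeUnit.MILLISECONDS);
        }

        // Runs immediately, even though the file was never closed.
        events.add("rename " + tmpName + " -> aaa.lzo");

        interrupted.await(1, TimeUnit.SECONDS);
        retryPool.shutdown(); // delayed tasks already scheduled still run by default
        retryPool.awaitTermination(1, TimeUnit.SECONDS);
        callRunner.shutdownNow();
        return events;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run());
    }
}
```

In the resulting event list, "rename" always comes before "retry close". This is the same ordering problem as in the log, where the retried close still uses the old `.tmp` name.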

I wrote the following code to simulate this error:

    import com.google.common.util.concurrent.ThreadFactoryBuilder;
    import org.slf4j.Logger;
    import org.slf4j.LoggerFactory;

    import java.util.concurrent.Executors;
    import java.util.concurrent.ScheduledExecutorService;
    import java.util.concurrent.ScheduledFuture;

    import static java.util.concurrent.TimeUnit.SECONDS;

    /**
     * @Author: chengc
     * @Date: 2019-08-16 14:45
     */
    public class FlumeBucketWriterErrorSimulation {

        private static final Logger logger = LoggerFactory.getLogger(FlumeBucketWriterErrorSimulation.class);

        private static int rollTimerPoolSize = 20;

        private static ScheduledExecutorService timedRollerPool = Executors.newScheduledThreadPool(rollTimerPoolSize,
                new ThreadFactoryBuilder().setNameFormat("hdfs-sink-2-roll-timer-%d").build());

        private static volatile ScheduledFuture<Void> timedRollFuture;

        /** Simulates a close that always fails and reschedules itself under the same (old) file name. */
        public static void close(String fileName, boolean first) {
            logger.info("start to close file:{}", fileName);
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            if (first) {
                logger.warn("TimeoutException happened, will retry, file:{}", fileName);
            } else {
                logger.warn("ClosedChannelException happened, will retry, file:{}", fileName);
            }
            // The retry is scheduled asynchronously; the caller does not wait for it.
            timedRollerPool.schedule(() -> {
                close(fileName, false);
                return null;
            }, 5, SECONDS);
        }

        /** Schedules a one-shot task after the given delay. */
        public static void doClose(String fileName) {
            timedRollFuture = timedRollerPool.schedule(() -> {
                logger.info("doClose file:{}", fileName);
                close(fileName, true);
                if (timedRollFuture != null && !timedRollFuture.isDone()) {
                    logger.info("timedRollFuture.cancel");
                    // do not cancel myself if running!
                    timedRollFuture.cancel(false);
                    timedRollFuture = null;
                }
                // The rename runs as soon as close() returns, whether or not the close succeeded.
                String newName = "aaa.lzo";
                logger.info(" Renaming file:{} to {}", fileName, newName);
                return null;
            }, 5, SECONDS);
        }

        public static void main(String[] args) {
            doClose(".aaa.lzo.tmp");
        }
    }

Part of the output after running it:

    2019-08-16 14:46:35.912 INFO [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,50 - doClose file:.aaa.lzo.tmp
    2019-08-16 14:46:35.915 INFO [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,28 - start to close file:.aaa.lzo.tmp
    2019-08-16 14:46:36.922 WARN [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,35 - TimeoutException happened, will retry, file:.aaa.lzo.tmp
    2019-08-16 14:46:36.923 INFO [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,55 - timedRollFuture.cancel
    2019-08-16 14:46:36.923 INFO [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,62 - Renaming file:.aaa.lzo.tmp to aaa.lzo
    2019-08-16 14:46:41.927 INFO [hdfs-sink-2-roll-timer-1] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,28 - start to close file:.aaa.lzo.tmp
    2019-08-16 14:46:42.930 WARN [hdfs-sink-2-roll-timer-1] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,37 - ClosedChannelException happened, will retry, file:.aaa.lzo.tmp
    2019-08-16 14:46:47.936 INFO [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,28 - start to close file:.aaa.lzo.tmp
    2019-08-16 14:46:48.939 WARN [hdfs-sink-2-roll-timer-0] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,37 - ClosedChannelException happened, will retry, file:.aaa.lzo.tmp
    2019-08-16 14:46:53.942 INFO [hdfs-sink-2-roll-timer-1] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,28 - start to close file:.aaa.lzo.tmp
    2019-08-16 14:46:54.948 WARN [hdfs-sink-2-roll-timer-1] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,37 - ClosedChannelException happened, will retry, file:.aaa.lzo.tmp
    2019-08-16 14:46:59.950 INFO [hdfs-sink-2-roll-timer-2] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,28 - start to close file:.aaa.lzo.tmp
    2019-08-16 14:47:00.954 WARN [hdfs-sink-2-roll-timer-2] concurrent.thread.executorservice.scheduled.FlumeBucketWriterErrorSimulation,37 - ClosedChannelException happened, will retry, file:.aaa.lzo.tmp

1.3 Fix

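I originally wanted to patch the source code, but we needed a quick fix, so the current workaround is to change two Flume parameters: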

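    # Timeout (ms) for HDFS calls such as open/write/flush/close; doubled here (the log above shows 300000 ms)
    test.sinks.sink.hdfs.callTimeout=600000

    # Number of retries when closing an HDFS file fails; it was 0 before, which means retry forever. Now set to 20
    test.sinks.sink.hdfs.closeTries=20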

We also wrote a script that periodically closes, by hand, any files in the directory that were left open.
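The script itself is not shown. As a rough illustration only, a periodic job along those lines could run `hdfs fsck <dir> -openforwrite` and then call `hdfs debug recoverLease -path <file>` for every file still reported as OPENFORWRITE. The following sketch assumes the `hdfs` CLI is on the PATH. The class name, the `CommandRunner` interface, the 10-minute interval and the default directory are hypothetical placeholders. The command runner is injectable so the logic can be exercised without a cluster. Recovering the lease of a file that a live writer still holds can break that writer. The sketch therefore skips names ending in `.tmp`, on the assumption that Flume's in-use suffix is the default `.tmp`.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.stream.Collectors;

/** Hypothetical periodic job: recover the lease of files fsck still reports as OPENFORWRITE. */
final class OpenFileRecoveryJob {

    /** Runs a command and returns its combined output; injectable for offline testing. */
    interface CommandRunner {
        String run(List<String> command) throws IOException, InterruptedException;
    }

    /** Real runner: executes the command locally (assumes the hdfs CLI is on the PATH). */
    static final CommandRunner PROCESS_RUNNER = command -> {
        Process process = new ProcessBuilder(command).redirectErrorStream(true).start();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(process.getInputStream(), StandardCharsets.UTF_8))) {
            String output = reader.lines().collect(Collectors.joining("\n"));
            process.waitFor();
            return output;
        }
    };

    /** One pass over dir; returns the paths for which recoverLease was invoked. */
    static List<String> recoverOnce(String dir, CommandRunner runner)
            throws IOException, InterruptedException {
        String fsckOutput = runner.run(Arrays.asList("hdfs", "fsck", dir, "-openforwrite"));
        List<String> recovered = new ArrayList<>();
        for (String line : fsckOutput.split("\\R")) {
            if (!line.contains("OPENFORWRITE")) {
                continue;
            }
            String first = line.trim().split("\\s+")[0];
            int slash = first.indexOf('/');
            if (slash < 0) {
                continue;
            }
            String path = first.substring(slash); // drop fsck's leading progress dots
            if (path.endsWith(".tmp")) {
                continue; // assumption: .tmp = in-use suffix, Flume may still be writing this file
            }
            runner.run(Arrays.asList("hdfs", "debug", "recoverLease", "-path", path));
            recovered.add(path);
        }
        return recovered;
    }

    public static void main(String[] args) {
        String dir = args.length > 0 ? args[0] : "/xxx/20190813"; // placeholder directory
        ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
        scheduler.scheduleWithFixedDelay(() -> {
            try {
                System.out.println("recoverLease called for: " + recoverOnce(dir, PROCESS_RUNNER));
            } catch (IOException e) {
                e.printStackTrace(); // log and keep the schedule alive
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, 0, 10, TimeUnit.MINUTES);
    }
}
```

Exceptions are caught inside the scheduled task because `scheduleWithFixedDelay` suppresses all later runs once a run throws.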

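This is only a temporary workaround. The source code will eventually need to change so that rename happens only after the file has been closed successfully.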
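The close-before-rename ordering described above can be sketched as follows. This is not a patch to Flume's `BucketWriter`. It is a minimal stdlib-only illustration in which a hypothetical `FileOps` interface stands in for the HDFS writer. Each call is bounded by a timeout, playing the role of `hdfs.callTimeout`. Close attempts are capped at `closeTries`, borrowing that parameter's convention that 0 means unlimited. Rename is attempted only once a close has actually succeeded.

```java
import java.io.IOException;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

/** Hypothetical sketch of "close first, rename only after the close succeeded". */
final class CloseThenRename {

    /** Stand-in for the HDFS writer; not a Flume or Hadoop API. */
    interface FileOps {
        void close(String path) throws Exception;
        void rename(String from, String to) throws Exception;
    }

    private final ExecutorService callRunner = Executors.newSingleThreadExecutor();

    /** Runs one call with a timeout, cancelling (interrupting) it if it takes too long. */
    private <T> T callWithTimeout(Callable<T> call, long timeoutMs)
            throws InterruptedException, ExecutionException, TimeoutException {
        Future<T> future = callRunner.submit(call);
        try {
            return future.get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException e) {
            future.cancel(true); // interrupt the hung call
            throw e;
        }
    }

    /**
     * Retries close up to closeTries times (0 = no limit) and renames only after a successful
     * close. Returns false if every attempt failed; the file then keeps its in-use name.
     */
    boolean closeAndRename(FileOps ops, String tmpPath, String finalPath,
                           int closeTries, long timeoutMs, long retryIntervalMs) throws Exception {
        for (int attempt = 1; closeTries == 0 || attempt <= closeTries; attempt++) {
            if (attempt > 1) {
                Thread.sleep(retryIntervalMs);
            }
            try {
                callWithTimeout(() -> { ops.close(tmpPath); return null; }, timeoutMs);
            } catch (ExecutionException | TimeoutException e) {
                continue; // close failed or timed out: retry, but do NOT rename yet
            }
            callWithTimeout(() -> { ops.rename(tmpPath, finalPath); return null; }, timeoutMs);
            return true;
        }
        return false;
    }

    void shutdown() {
        callRunner.shutdownNow();
    }

    public static void main(String[] args) throws Exception {
        int[] closeCalls = {0};
        FileOps flaky = new FileOps() {
            @Override
            public void close(String path) throws Exception {
                if (++closeCalls[0] < 3) {
                    throw new IOException("simulated close failure #" + closeCalls[0]);
                }
            }

            @Override
            public void rename(String from, String to) {
                System.out.println("renamed " + from + " -> " + to + " after " + closeCalls[0] + " close attempts");
            }
        };
        CloseThenRename writer = new CloseThenRename();
        try {
            System.out.println(writer.closeAndRename(flaky, ".aaa.lzo.tmp", "aaa.lzo", 20, 1_000, 100));
        } finally {
            writer.shutdown();
        }
    }
}
```

A file that cannot be closed keeps its `.tmp` name instead of being published under its final name while still open. That makes it easy to spot, and it avoids the silent data gap seen in Hive.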