Fabric 1.4.0-rc2 Quick Start

Software environment

VMware 10.0.4

CentOS-7-x86_64-Minimal-1708

Setup process

Install Go

Install Docker

Install docker-compose

I. Compiling and installing Fabric

1. Create the directory (the GOPATH variable was already configured when Go was installed)

mkdir -p $GOPATH/src/github.com/hyperledger
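If GOPATH happens to be empty, the mkdir above quietly creates /src/github.com/hyperledger at the filesystem root. A minimal sketch of a guard, assuming a POSIX shell; the function name `fabric_parent_dir` is hypothetical and not part of Fabric or Go:

```shell
# Print the directory that will hold the fabric checkout.
# Returns non-zero with a message if GOPATH is unset or empty.
fabric_parent_dir() {
    if [ -z "${GOPATH:-}" ]; then
        echo "GOPATH is not set; configure Go first" >&2
        return 1
    fi
    printf '%s\n' "$GOPATH/src/github.com/hyperledger"
}
```

It could be used as `dir=$(fabric_parent_dir) && mkdir -p "$dir" && cd "$dir"`, so nothing is created when the variable is missing.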

2. Download the Fabric source code

Change into the directory created above, then download the source:

git clone https://github.com/hyperledger/fabric.git
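The clone above checks out the repository's default branch, which may be newer than 1.4.0-rc2. A minimal sketch for pinning the checkout to a release tag instead, assuming the upstream repository carries a `v1.4.0-rc2` tag; the function name `clone_fabric_at_tag` and its optional third argument are hypothetical:

```shell
# Clone a repository at a given tag (detached HEAD) into a destination directory.
# $1 = tag, $2 = destination, $3 = repository URL (defaults to upstream fabric).
clone_fabric_at_tag() (
    tag=$1
    dest=$2
    repo=${3:-https://github.com/hyperledger/fabric.git}
    git clone --quiet --branch "$tag" "$repo" "$dest"
)
```

A call such as `clone_fabric_at_tag v1.4.0-rc2 "$GOPATH/src/github.com/hyperledger/fabric"` would replace the plain clone; `git describe --tags` inside the checkout then shows which tag was built.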

3. Install the required dependencies

go get github.com/golang/protobuf/protoc-gen-go

(go get installs the compiled binary into the directory named by $GOBIN; if GOBIN is not set, it goes into $GOPATH/bin.)

Create the directory:

mkdir -p $GOPATH/src/github.com/hyperledger/fabric/.build/docker/gotools/bin

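(Note: there is a dot "." in front of build. If it is left out, make docker fails because protoc-gen-go cannot be found.)

Copy the binary into the directory created in the previous step: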

cp $GOPATH/bin/protoc-gen-go $GOPATH/src/github.com/hyperledger/fabric/.build/docker/gotools/bin
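The mkdir and cp steps above assume the binary landed in $GOPATH/bin. A minimal sketch that also honours GOBIN, as described in the note on go get; the function name `stage_protoc_gen_go` is hypothetical:

```shell
# Copy protoc-gen-go into the directory that make docker reads it from.
# The binary is looked up in $GOBIN when that is set, otherwise in $GOPATH/bin.
stage_protoc_gen_go() (
    if [ -z "${GOPATH:-}" ]; then
        echo "GOPATH is not set" >&2
        exit 1
    fi
    bin_dir=${GOBIN:-$GOPATH/bin}
    # Note the leading dot in .build
    target=$GOPATH/src/github.com/hyperledger/fabric/.build/docker/gotools/bin
    if [ ! -x "$bin_dir/protoc-gen-go" ]; then
        echo "protoc-gen-go not found in $bin_dir" >&2
        exit 1
    fi
    mkdir -p "$target" && cp "$bin_dir/protoc-gen-go" "$target/"
)
```

Because the body runs in a subshell, the `exit 1` calls only end the function, not the calling shell.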

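4. Build the Fabric modules

First change into the Fabric source directory ($GOPATH/src/github.com/hyperledger/fabric).

Then run make release. If the following error appears, gcc is not installed and must be installed first: yum install gcc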

[root@master1 fabric]# make release

Building release/linux-amd64/bin/configtxgen for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxgen

# runtime/cgo

exec: "gcc": executable file not found in $PATH

make: *** [release/linux-amd64/bin/configtxgen] Error 2
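The gcc failure above only shows up partway into the build. A minimal sketch of a check that can be run first, using the shell's `command -v`; the function name `missing_tools` and the list of tools passed to it are assumptions based on the steps in this guide:

```shell
# Print the name of every listed command that is not on PATH, one per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Example: check the tools this guide relies on before running make.
missing=$(missing_tools gcc make git go docker docker-compose)
if [ -n "$missing" ]; then
    echo "Install these before building:"
    echo "$missing"
fi
```

An empty result means every listed command was found; it does not check versions.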

Output of a successful make release:

[root@master1 fabric]# make release

Building release/linux-amd64/bin/configtxgen for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxgen

Building release/linux-amd64/bin/cryptogen for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/cryptogen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/cryptogen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/cryptogen

Building release/linux-amd64/bin/idemixgen for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/idemixgen -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/idemixgen/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/idemixgen

Building release/linux-amd64/bin/discover for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/discover -tags "" -ldflags "-X github.com/hyperledger/fabric/cmd/discover/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/cmd/discover

Building release/linux-amd64/bin/configtxlator for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/configtxlator -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxlator/metadata.CommitSHA=e91c57c" github.com/hyperledger/fabric/common/tools/configtxlator

Building release/linux-amd64/bin/peer for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/peer -tags "" -ldflags "-X github.com/hyperledger/fabric/common/metadata.Version=1.4.1 -X github.com/hyperledger/fabric/common/metadata.CommitSHA=e91c57c -X github.com/hyperledger/fabric/common/metadata.BaseVersion=0.4.14 -X github.com/hyperledger/fabric/common/metadata.BaseDockerLabel=org.hyperledger.fabric -X github.com/hyperledger/fabric/common/metadata.DockerNamespace=hyperledger -X github.com/hyperledger/fabric/common/metadata.BaseDockerNamespace=hyperledger" github.com/hyperledger/fabric/peer

Building release/linux-amd64/bin/orderer for linux-amd64

mkdir -p release/linux-amd64/bin

CGO_CFLAGS=" " GOOS=linux GOARCH=amd64 go build -o /root/gopath/src/github.com/hyperledger/fabric/release/linux-amd64/bin/orderer -tags "" -ldflags "-X github.com/hyperledger/fabric/common/metadata.Version=1.4.1 -X github.com/hyperledger/fabric/common/metadata.CommitSHA=e91c57c -X github.com/hyperledger/fabric/common/metadata.BaseVersion=0.4.14 -X github.com/hyperledger/fabric/common/metadata.BaseDockerLabel=org.hyperledger.fabric -X github.com/hyperledger/fabric/common/metadata.DockerNamespace=hyperledger -X github.com/hyperledger/fabric/common/metadata.BaseDockerNamespace=hyperledger" github.com/hyperledger/fabric/orderer

mkdir -p release/linux-amd64/bin

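After make release, run make docker. Its output is shown below. (The stage name model in the log is one I renamed; it was originally builder, and it does not need to be changed.)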

[root@master1 fabric]# make docker

mkdir -p .build/image/peer/payload

cp .build/docker/bin/peer .build/sampleconfig.tar.bz2 .build/image/peer/payload

mkdir -p .build/image/orderer/payload

cp .build/docker/bin/orderer .build/sampleconfig.tar.bz2 .build/image/orderer/payload

mkdir -p .build/image/ccenv/payload

cp .build/docker/gotools/bin/protoc-gen-go .build/bin/chaintool .build/goshim.tar.bz2 .build/image/ccenv/payload

mkdir -p .build/image/buildenv/payload

cp .build/gotools.tar.bz2 .build/docker/gotools/bin/protoc-gen-go .build/image/buildenv/payload

Building docker tools-image

docker build -t hyperledger/fabric-tools -f .build/image/tools/Dockerfile .

Sending build context to Docker daemon 389.7MB

Step 1/15 : FROM hyperledger/fabric-baseimage:amd64-0.4.14 AS model

amd64-0.4.14: Pulling from hyperledger/fabric-baseimage

Digest: sha256:d7760dcfbff0c7072627bf1acc9bc9b88213d7467ea071f68c7f8ccde124ab2b

Status: Downloaded newer image for hyperledger/fabric-baseimage:amd64-0.4.14

---> 8c01cc0574ab

Step 2/15 : WORKDIR /opt/gopath

---> Using cache

---> f5c9c2a0fd1e

Step 3/15 : RUN mkdir src && mkdir pkg && mkdir bin

---> Using cache

---> d81b2e2a2e76

Step 4/15 : ADD . src/github.com/hyperledger/fabric

---> df3fe354f7a9

Step 5/15 : WORKDIR /opt/gopath/src/github.com/hyperledger/fabric

---> Running in 8ebe1db720c8

Removing intermediate container 8ebe1db720c8

---> f32f8b0e57d8

Step 6/15 : ENV EXECUTABLES go git curl

---> Running in afafcd4cab19

Removing intermediate container afafcd4cab19

---> 69281d9fef0e

Step 7/15 : RUN make configtxgen configtxlator cryptogen peer discover idemixgen

---> Running in 30ea735a3d14

.build/bin/configtxgen

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxgen/metadata.CommitSHA=b87ec80" github.com/hyperledger/fabric/common/tools/configtxgen

Binary available as .build/bin/configtxgen

.build/bin/configtxlator

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/configtxlator/metadata.CommitSHA=b87ec80" github.com/hyperledger/fabric/common/tools/configtxlator

Binary available as .build/bin/configtxlator

.build/bin/cryptogen

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/cryptogen/metadata.CommitSHA=b87ec80" github.com/hyperledger/fabric/common/tools/cryptogen

Binary available as .build/bin/cryptogen

.build/bin/peer

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/common/metadata.Version=1.4.0-rc2 -X github.com/hyperledger/fabric/common/metadata.CommitSHA=b87ec80 -X github.com/hyperledger/fabric/common/metadata.BaseVersion=0.4.14 -X github.com/hyperledger/fabric/common/metadata.BaseDockerLabel=org.hyperledger.fabric -X github.com/hyperledger/fabric/common/metadata.DockerNamespace=hyperledger -X github.com/hyperledger/fabric/common/metadata.BaseDockerNamespace=hyperledger" github.com/hyperledger/fabric/peer

Binary available as .build/bin/peer

.build/bin/discover

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/cmd/discover/metadata.Version=1.4.0-rc2-snapshot-b87ec80" github.com/hyperledger/fabric/cmd/discover

Binary available as .build/bin/discover

.build/bin/idemixgen

CGO_CFLAGS=" " GOBIN=/opt/gopath/src/github.com/hyperledger/fabric/.build/bin go install -tags "" -ldflags "-X github.com/hyperledger/fabric/common/tools/idemixgen/metadata.CommitSHA=b87ec80" github.com/hyperledger/fabric/common/tools/idemixgen

Binary available as .build/bin/idemixgen

Removing intermediate container 30ea735a3d14

---> 7291cefca676

Step 8/15 : FROM hyperledger/fabric-baseimage:amd64-0.4.14

---> 8c01cc0574ab

Step 9/15 : ENV FABRIC_CFG_PATH /etc/hyperledger/fabric

---> Using cache

---> 160d2d3c28d1

Step 10/15 : ENV DEBIAN_FRONTEND noninteractive

---> Running in cc7aecc2daf3

Removing intermediate container cc7aecc2daf3

---> 5db0cb9fa088

Step 11/15 : RUN apt-get update && apt-get install -y jq

---> Running in ad2c21ba47bf

Get:1 http://security.ubuntu.com/ubuntu xenial-security InRelease [109 kB]

Get:2 http://archive.ubuntu.com/ubuntu xenial InRelease [247 kB]

Get:3 http://archive.ubuntu.com/ubuntu xenial-updates InRelease [109 kB]

Get:4 http://archive.ubuntu.com/ubuntu xenial-backports InRelease [107 kB]

Get:5 http://security.ubuntu.com/ubuntu xenial-security/universe Sources [125 kB]

Get:6 http://archive.ubuntu.com/ubuntu xenial/universe Sources [9802 kB]

Get:7 http://security.ubuntu.com/ubuntu xenial-security/main amd64 Packages [785 kB]

Get:8 http://archive.ubuntu.com/ubuntu xenial/main amd64 Packages [1558 kB]

Get:9 http://archive.ubuntu.com/ubuntu xenial/restricted amd64 Packages [14.1 kB]

Get:10 http://archive.ubuntu.com/ubuntu xenial/universe amd64 Packages [9827 kB]

Get:11 http://archive.ubuntu.com/ubuntu xenial/multiverse amd64 Packages [176 kB]

Get:12 http://archive.ubuntu.com/ubuntu xenial-updates/universe Sources [309 kB]

Get:13 http://archive.ubuntu.com/ubuntu xenial-updates/main amd64 Packages [1179 kB]

Get:14 http://archive.ubuntu.com/ubuntu xenial-updates/restricted amd64 Packages [13.1 kB]

Get:15 http://archive.ubuntu.com/ubuntu xenial-updates/universe amd64 Packages [938 kB]

Get:16 http://archive.ubuntu.com/ubuntu xenial-updates/multiverse amd64 Packages [19.1 kB]

Get:17 http://archive.ubuntu.com/ubuntu xenial-backports/main amd64 Packages [7942 B]

Get:18 http://archive.ubuntu.com/ubuntu xenial-backports/universe amd64 Packages [8532 B]

Get:19 http://security.ubuntu.com/ubuntu xenial-security/restricted amd64 Packages [12.7 kB]

Get:20 http://security.ubuntu.com/ubuntu xenial-security/universe amd64 Packages [540 kB]

Get:21 http://security.ubuntu.com/ubuntu xenial-security/multiverse amd64 Packages [6116 B]

Fetched 25.9 MB in 29s (887 kB/s)

Reading package lists...

Reading package lists...

Building dependency tree...

Reading state information...

The following additional packages will be installed:

libonig2

The following NEW packages will be installed:

jq libonig2

0 upgraded, 2 newly installed, 0 to remove and 77 not upgraded.

Need to get 231 kB of archives.

After this operation, 797 kB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu xenial-updates/universe amd64 libonig2 amd64 5.9.6-1ubuntu0.1 [86.7 kB]

Get:2 http://archive.ubuntu.com/ubuntu xenial-updates/universe amd64 jq amd64 1.5+dfsg-1ubuntu0.1 [144 kB]

Fetched 231 kB in 8s (25.9 kB/s)

Selecting previously unselected package libonig2:amd64.

(Reading database ... 22650 files and directories currently installed.)

Preparing to unpack .../libonig2_5.9.6-1ubuntu0.1_amd64.deb ...

Unpacking libonig2:amd64 (5.9.6-1ubuntu0.1) ...

Selecting previously unselected package jq.

Preparing to unpack .../jq_1.5+dfsg-1ubuntu0.1_amd64.deb ...

Unpacking jq (1.5+dfsg-1ubuntu0.1) ...

Processing triggers for libc-bin (2.23-0ubuntu10) ...

Setting up libonig2:amd64 (5.9.6-1ubuntu0.1) ...

Setting up jq (1.5+dfsg-1ubuntu0.1) ...

Processing triggers for libc-bin (2.23-0ubuntu10) ...

Removing intermediate container ad2c21ba47bf

---> 1f40cf22ab98

Step 12/15 : VOLUME /etc/hyperledger/fabric

---> Running in 653c51cf2a2b

Removing intermediate container 653c51cf2a2b

---> ecec94de3119

Step 13/15 : COPY --from=model /opt/gopath/src/github.com/hyperledger/fabric/.build/bin /usr/local/bin

---> 73ee783e6400

Step 14/15 : COPY --from=model /opt/gopath/src/github.com/hyperledger/fabric/sampleconfig $FABRIC_CFG_PATH

---> 25647b585ef9

Step 15/15 : LABEL org.hyperledger.fabric.version=1.4.0-rc2 org.hyperledger.fabric.base.version=0.4.14

---> Running in 52c3d478c83b

Removing intermediate container 52c3d478c83b

---> f45ddffeb1be

Successfully built f45ddffeb1be

Successfully tagged hyperledger/fabric-tools:latest

docker tag hyperledger/fabric-tools hyperledger/fabric-tools:amd64-1.4.0-rc2-snapshot-b87ec80

docker tag hyperledger/fabric-tools hyperledger/fabric-tools:amd64-latest

I ran into a lot of problems during make docker and nearly gave up. The main problems and their solutions are recorded here for reference.

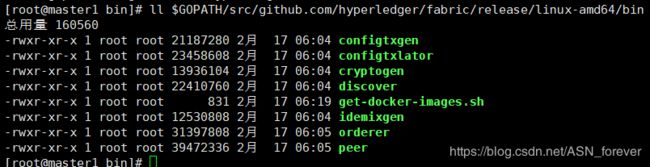

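Once make release and make docker have finished, the compiled binaries are in the following path (this directory is produced by make release):

$GOPATH/src/github.com/hyperledger/fabric/release/linux-amd64/bin

It contains the binaries built in the make release log above: configtxgen, configtxlator, cryptogen, discover, idemixgen, orderer and peer.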

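5. Install the Fabric modules

Once compiled, the modules can be run, but only from the directory that holds the compiled files, which is inconvenient. To run them from any path on the system, copy the executables into a system directory with the following command: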

[root@master1 bin]# cp $GOPATH/src/github.com/hyperledger/fabric/release/linux-amd64/bin/* /usr/local/bin/
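Copying works, but the copies in /usr/local/bin go stale after the next rebuild. An alternative sketch that puts the release directory on PATH instead; the function name `prepend_path` is hypothetical, and making the change permanent (for example in a shell profile) is left as an assumption:

```shell
# Prepend a directory to PATH once; repeated calls leave PATH unchanged.
prepend_path() {
    case ":$PATH:" in
        *":$1:"*) ;;                        # already present, nothing to do
        *) PATH="$1:$PATH"; export PATH ;;
    esac
}
```

For this guide the call would be `prepend_path "$GOPATH/src/github.com/hyperledger/fabric/release/linux-amd64/bin"`.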

6. Check the installation

peer module

[root@master1 bin]# peer version

peer:

Version: 1.4.0-rc2

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

Chaincode:

Base Image Version: 0.4.14

Base Docker Namespace: hyperledger

Base Docker Label: org.hyperledger.fabric

Docker Namespace: hyperledger

orderer module

[root@master1 bin]# orderer version

orderer:

Version: 1.4.0-rc2

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

cryptogen module

[root@master1 bin]# cryptogen version

cryptogen:

Version: 1.4.0

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

configtxgen module

[root@master1 bin]# configtxgen -version

configtxgen:

Version: 1.4.0

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

configtxlator module

[root@master1 bin]# configtxlator version

configtxlator:

Version: 1.4.0

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

If every command prints its version as shown, Fabric has been installed successfully!
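The five checks above can be run in one pass. A minimal sketch, assuming the binaries are on PATH; the function name `check_fabric_tools` is hypothetical. As in the commands above, configtxgen takes a -version flag while the other tools use a version subcommand:

```shell
# Run the version command of each Fabric binary.
# Returns non-zero if any binary is missing from PATH or its version command fails.
check_fabric_tools() {
    rc=0
    for tool in peer orderer cryptogen configtxgen configtxlator; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool: not found on PATH" >&2
            rc=1
            continue
        fi
        case $tool in
            configtxgen) "$tool" -version ;;
            *)           "$tool" version ;;
        esac || rc=1
    done
    return "$rc"
}
```

Running `check_fabric_tools && echo "all Fabric tools found"` gives a single pass/fail answer in addition to the individual version output.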

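II. Quickly running a simple Fabric network

The steps below run the module executables directly rather than using Docker.

1. Create a directory for the files generated while running the commands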

[root@master1 bin]# mkdir -p /opt/hyperledger

2. Generate the certificates Fabric needs

First create a folder for the certificates:

[root@master1 fabricconfig]# mkdir -p /opt/hyperledger/fabricconfig

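In the fabricconfig folder, use the cryptogen showtemplate command to print the template of the configuration file that cryptogen requires, edit it as needed, and save the result as crypto-config.yaml. My crypto-config.yaml is as follows: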

# ---------------------------------------------------------------------------
# "OrdererOrgs" - Definition of organizations managing orderer nodes
# ---------------------------------------------------------------------------
OrdererOrgs:
  # ---------------------------------------------------------------------------
  # Orderer
  # ---------------------------------------------------------------------------
  - Name: Orderer
    Domain: asn417.com
    # ---------------------------------------------------------------------------
    # "Specs" - See PeerOrgs below for complete description
    # ---------------------------------------------------------------------------
    Specs:
      - Hostname: orderer
# ---------------------------------------------------------------------------
# "PeerOrgs" - Definition of organizations managing peer nodes
# ---------------------------------------------------------------------------
PeerOrgs:
  # ---------------------------------------------------------------------------
  # Org1
  # ---------------------------------------------------------------------------
  - Name: Org1
    Domain: org1.asn417.com
    EnableNodeOUs: false
    # ---------------------------------------------------------------------------
    # "CA"
    # ---------------------------------------------------------------------------
    # Uncomment this section to enable the explicit definition of the CA for this
    # organization. This entry is a Spec. See "Specs" section below for details.
    # ---------------------------------------------------------------------------
    # CA:
    #    Hostname: ca # implicitly ca.org1.example.com
    #    Country: US
    #    Province: California
    #    Locality: San Francisco
    #    OrganizationalUnit: Hyperledger Fabric
    #    StreetAddress: address for org # default nil
    #    PostalCode: postalCode for org # default nil
    # ---------------------------------------------------------------------------
    # "Specs"
    # ---------------------------------------------------------------------------
    # Uncomment this section to enable the explicit definition of hosts in your
    # configuration. Most users will want to use Template, below
    #
    # Specs is an array of Spec entries. Each Spec entry consists of two fields:
    #   - Hostname:   (Required) The desired hostname, sans the domain.
    #   - CommonName: (Optional) Specifies the template or explicit override for
    #                 the CN. By default, this is the template:
    #
    #                              "{{.Hostname}}.{{.Domain}}"
    #
    #                 which obtains its values from the Spec.Hostname and
    #                 Org.Domain, respectively.
    #   - SANS:       (Optional) Specifies one or more Subject Alternative Names
    #                 to be set in the resulting x509. Accepts template
    #                 variables {{.Hostname}}, {{.Domain}}, {{.CommonName}}. IP
    #                 addresses provided here will be properly recognized. Other
    #                 values will be taken as DNS names.
    #                 NOTE: Two implicit entries are created for you:
    #                     - {{ .CommonName }}
    #                     - {{ .Hostname }}
    # ---------------------------------------------------------------------------
    # Specs:
    #   - Hostname: foo # implicitly "foo.org1.example.com"
    #     CommonName: foo27.org5.example.com # overrides Hostname-based FQDN set above
    #     SANS:
    #       - "bar.{{.Domain}}"
    #       - "altfoo.{{.Domain}}"
    #       - "{{.Hostname}}.org6.net"
    #       - 172.16.10.31
    #   - Hostname: bar
    #   - Hostname: baz
    # ---------------------------------------------------------------------------
    # "Template"
    # ---------------------------------------------------------------------------
    # Allows for the definition of 1 or more hosts that are created sequentially
    # from a template. By default, this looks like "peer%d" from 0 to Count-1.
    # You may override the number of nodes (Count), the starting index (Start)
    # or the template used to construct the name (Hostname).
    #
    # Note: Template and Specs are not mutually exclusive. You may define both
    # sections and the aggregate nodes will be created for you. Take care with
    # name collisions
    # ---------------------------------------------------------------------------
    Template:
      Count: 2
      # Start: 5
      # Hostname: {{.Prefix}}{{.Index}} # default
      # SANS:
      #   - "{{.Hostname}}.alt.{{.Domain}}"
    # ---------------------------------------------------------------------------
    # "Users"
    # ---------------------------------------------------------------------------
    # Count: The number of user accounts _in addition_ to Admin
    # ---------------------------------------------------------------------------
    Users:
      Count: 3
  # ---------------------------------------------------------------------------
  # Org2: See "Org1" for full specification
  # ---------------------------------------------------------------------------
  - Name: Org2
    Domain: org2.asn417.com
    EnableNodeOUs: false
    Template:
      Count: 2
    Users:
      Count: 2
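The template step that produced the file above can be sketched as follows, assuming cryptogen is on PATH. The function name `write_crypto_template` is hypothetical; the default directory is the fabricconfig folder used in this guide:

```shell
# Write cryptogen's built-in template to crypto-config.yaml in the given directory,
# refusing to overwrite a file that may already have been edited.
write_crypto_template() (
    dir=${1:-/opt/hyperledger/fabricconfig}
    out=$dir/crypto-config.yaml
    if [ -e "$out" ]; then
        echo "$out already exists; not overwriting" >&2
        exit 1
    fi
    mkdir -p "$dir" || exit 1
    # On failure, remove the partial file so a retry starts clean.
    cryptogen showtemplate > "$out" || { rm -f "$out"; exit 1; }
)
```

The generated file still has the example.com defaults; the organization names, domains and counts have to be edited by hand as shown above.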

Then run the following command to generate the certificate files in the crypto-config directory:

[root@slave1 fabricconfig]# cryptogen generate --config=crypto-config.yaml --output ./crypto-config

org1.asn417.com

org2.asn417.com

Then add the following entries to /etc/hosts:

192.168.89.132 orderer.asn417.com

192.168.89.132 peer0.org1.asn417.com

192.168.89.132 peer1.org1.asn417.com

192.168.89.132 peer0.org2.asn417.com

192.168.89.132 peer1.org2.asn417.com
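Appending these lines by hand more than once leaves duplicates in /etc/hosts. A minimal sketch of an idempotent helper; the function name `add_host_entry` and its optional third argument (the hosts file to edit) are hypothetical:

```shell
# Append "IP name" to a hosts file unless the name is already mapped there.
# $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts).
add_host_entry() (
    ip=$1
    name=$2
    hosts_file=${3:-/etc/hosts}
    # Succeed without changes if any non-comment line already lists the name.
    if awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$hosts_file" 2>/dev/null; then
        exit 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
)
```

Run as root, a loop such as `for h in orderer.asn417.com peer0.org1.asn417.com peer1.org1.asn417.com peer0.org2.asn417.com peer1.org2.asn417.com; do add_host_entry 192.168.89.132 "$h"; done` adds the five entries above. The helper does not update an existing name that points at a different IP.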

3. Generate the genesis block

First create a directory for the orderer node's files:

[root@master1 fabricconfig]# mkdir -p /opt/hyperledger/order

Then copy the template file configtx.yaml from $GOPATH/src/github.com/hyperledger/fabric/sampleconfig/ into the newly created order directory:

[root@master1 fabricconfig]# cp -r $GOPATH/src/github.com/hyperledger/fabric/sampleconfig/configtx.yaml /opt/hyperledger/order/

Then edit configtx.yaml in the order directory. After editing, it looks like this:

# Copyright IBM Corp. All Rights Reserved.
#
# SPDX-License-Identifier: Apache-2.0
#

---
################################################################################
#
# ORGANIZATIONS
#
# This section defines the organizational identities that can be referenced
# in the configuration profiles.
#
################################################################################
Organizations:

    # SampleOrg defines an MSP using the sampleconfig. It should never be used
    # in production but may be used as a template for other definitions.
    - &OrdererOrg
        # Name is the key by which this org will be referenced in channel
        # configuration transactions.
        # Name can include alphanumeric characters as well as dots and dashes.
        Name: OrdererMSP

        # ID is the key by which this org's MSP definition will be referenced.
        # ID can include alphanumeric characters as well as dots and dashes.
        ID: OrdererMSP

        # MSPDir is the filesystem path which contains the MSP configuration.
        MSPDir: /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/msp

        # Policies defines the set of policies at this level of the config tree
        # For organization policies, their canonical path is usually
        # /Channel/<Application|Orderer>/<OrgName>/<PolicyName>
        Policies: &SampleOrgPolicies
            Readers:
                Type: Signature
                Rule: "OR('OrdererMSP.member', 'OrdererMSP.admin', 'OrdererMSP.peer')"
                # If your MSP is configured with the new NodeOUs, you might
                # want to use a more specific rule like the following:
                # Rule: "OR('SampleOrg.admin', 'SampleOrg.peer', 'SampleOrg.client')"
            Writers:
                Type: Signature
                Rule: "OR('OrdererMSP.member', 'OrdererMSP.admin', 'OrdererMSP.peer')"
                # If your MSP is configured with the new NodeOUs, you might
                # want to use a more specific rule like the following:
                # Rule: "OR('SampleOrg.admin', 'SampleOrg.client')"
            Admins:
                Type: Signature
                Rule: "OR('OrdererMSP.admin')"
            Endorsement:
                Type: Signature
                Rule: "OR('OrdererMSP.member')"

        # AnchorPeers defines the location of peers which can be used for
        # cross-org gossip communication. Note, this value is only encoded in
        # the genesis block in the Application section context.
        AnchorPeers:
            - Host: 127.0.0.1
              Port: 7051

    - &Org1
        Name: Org1MSP
        ID: Org1MSP
        MSPDir: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/msp
        AnchorPeers:
            - Host: peer0.org1.asn417.com
              Port: 7051

    - &Org2
        Name: Org2MSP
        ID: Org2MSP
        MSPDir: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org2.asn417.com/msp
        AnchorPeers:
            - Host: peer0.org2.asn417.com
              Port: 7051

################################################################################
#
# CAPABILITIES
#
# This section defines the capabilities of fabric network. This is a new
# concept as of v1.1.0 and should not be utilized in mixed networks with
# v1.0.x peers and orderers. Capabilities define features which must be
# present in a fabric binary for that binary to safely participate in the
# fabric network. For instance, if a new MSP type is added, newer binaries
# might recognize and validate the signatures from this type, while older
# binaries without this support would be unable to validate those
# transactions. This could lead to different versions of the fabric binaries
# having different world states. Instead, defining a capability for a channel
# informs those binaries without this capability that they must cease
# processing transactions until they have been upgraded. For v1.0.x if any
# capabilities are defined (including a map with all capabilities turned off)
# then the v1.0.x peer will deliberately crash.
#
################################################################################
Capabilities:
    # Channel capabilities apply to both the orderers and the peers and must be
    # supported by both.
    # Set the value of the capability to true to require it.
    Channel: &ChannelCapabilities
        # V1.3 for Channel is a catchall flag for behavior which has been
        # determined to be desired for all orderers and peers running at the v1.3.x
        # level, but which would be incompatible with orderers and peers from
        # prior releases.
        # Prior to enabling V1.3 channel capabilities, ensure that all
        # orderers and peers on a channel are at v1.3.0 or later.
        V1_3: true

    # Orderer capabilities apply only to the orderers, and may be safely
    # used with prior release peers.
    # Set the value of the capability to true to require it.
    Orderer: &OrdererCapabilities
        # V1.1 for Orderer is a catchall flag for behavior which has been
        # determined to be desired for all orderers running at the v1.1.x
        # level, but which would be incompatible with orderers from prior releases.
        # Prior to enabling V1.1 orderer capabilities, ensure that all
        # orderers on a channel are at v1.1.0 or later.
        V1_1: true

    # Application capabilities apply only to the peer network, and may be safely
    # used with prior release orderers.
    # Set the value of the capability to true to require it.
    Application: &ApplicationCapabilities
        # V1.3 for Application enables the new non-backwards compatible
        # features and fixes of fabric v1.3.
        V1_3: true
        # V1.2 for Application enables the new non-backwards compatible
        # features and fixes of fabric v1.2 (note, this need not be set if
        # later version capabilities are set)
        V1_2: false
        # V1.1 for Application enables the new non-backwards compatible
        # features and fixes of fabric v1.1 (note, this need not be set if
        # later version capabilities are set).
        V1_1: false

################################################################################
#
# APPLICATION
#
# This section defines the values to encode into a config transaction or
# genesis block for application-related parameters.
#
################################################################################
Application: &ApplicationDefaults
    ACLs: &ACLsDefault
        # This section provides defaults for policies for various resources
        # in the system. These "resources" could be functions on system chaincodes
        # (e.g., "GetBlockByNumber" on the "qscc" system chaincode) or other resources
        # (e.g.,who can receive Block events). This section does NOT specify the resource's
        # definition or API, but just the ACL policy for it.
        #
        # User's can override these defaults with their own policy mapping by defining the
        # mapping under ACLs in their channel definition

        #---Lifecycle System Chaincode (lscc) function to policy mapping for access control---#

        # ACL policy for lscc's "getid" function
        lscc/ChaincodeExists: /Channel/Application/Readers

        # ACL policy for lscc's "getdepspec" function
        lscc/GetDeploymentSpec: /Channel/Application/Readers

        # ACL policy for lscc's "getccdata" function
        lscc/GetChaincodeData: /Channel/Application/Readers

        # ACL Policy for lscc's "getchaincodes" function
        lscc/GetInstantiatedChaincodes: /Channel/Application/Readers

        #---Query System Chaincode (qscc) function to policy mapping for access control---#

        # ACL policy for qscc's "GetChainInfo" function
        qscc/GetChainInfo: /Channel/Application/Readers

        # ACL policy for qscc's "GetBlockByNumber" function
        qscc/GetBlockByNumber: /Channel/Application/Readers

        # ACL policy for qscc's "GetBlockByHash" function
        qscc/GetBlockByHash: /Channel/Application/Readers

        # ACL policy for qscc's "GetTransactionByID" function
        qscc/GetTransactionByID: /Channel/Application/Readers

        # ACL policy for qscc's "GetBlockByTxID" function
        qscc/GetBlockByTxID: /Channel/Application/Readers

        #---Configuration System Chaincode (cscc) function to policy mapping for access control---#

        # ACL policy for cscc's "GetConfigBlock" function
        cscc/GetConfigBlock: /Channel/Application/Readers

        # ACL policy for cscc's "GetConfigTree" function
        cscc/GetConfigTree: /Channel/Application/Readers

        # ACL policy for cscc's "SimulateConfigTreeUpdate" function
        cscc/SimulateConfigTreeUpdate: /Channel/Application/Readers

        #---Miscellanesous peer function to policy mapping for access control---#

        # ACL policy for invoking chaincodes on peer
        peer/Propose: /Channel/Application/Writers

        # ACL policy for chaincode to chaincode invocation
        peer/ChaincodeToChaincode: /Channel/Application/Readers

        #---Events resource to policy mapping for access control###---#

        # ACL policy for sending block events
        event/Block: /Channel/Application/Readers

        # ACL policy for sending filtered block events
        event/FilteredBlock: /Channel/Application/Readers

    # Organizations lists the orgs participating on the application side of the
    # network.
    Organizations:

    # Policies defines the set of policies at this level of the config tree
    # For Application policies, their canonical path is
    # /Channel/Application/<PolicyName>
    Policies: &ApplicationDefaultPolicies
        LifecycleEndorsement:
            Type: ImplicitMeta
            Rule: "MAJORITY Endorsement"
        Endorsement:
            Type: ImplicitMeta
            Rule: "MAJORITY Endorsement"
        Readers:
            Type: ImplicitMeta
            Rule: "ANY Readers"
        Writers:
            Type: ImplicitMeta
            Rule: "ANY Writers"
        Admins:
            Type: ImplicitMeta
            Rule: "MAJORITY Admins"

    # Capabilities describes the application level capabilities, see the
    # dedicated Capabilities section elsewhere in this file for a full
    # description
    Capabilities:
        <<: *ApplicationCapabilities

################################################################################
#
# ORDERER
#
# This section defines the values to encode into a config transaction or
# genesis block for orderer related parameters.
#
################################################################################
Orderer: &OrdererDefaults

    # Orderer Type: The orderer implementation to start.
    # Available types are "solo" and "kafka".
    OrdererType: solo

    # Addresses here is a nonexhaustive list of orderers the peers and clients can
    # connect to. Adding/removing nodes from this list has no impact on their
    # participation in ordering.
    # NOTE: In the solo case, this should be a one-item list.
    Addresses:
        - orderer.asn417.com:7050

    # Batch Timeout: The amount of time to wait before creating a batch.
    BatchTimeout: 2s

    # Batch Size: Controls the number of messages batched into a block.
    # The orderer views messages opaquely, but typically, messages may
    # be considered to be Fabric transactions. The 'batch' is the group
    # of messages in the 'data' field of the block. Blocks will be a few kb
    # larger than the batch size, when signatures, hashes, and other metadata
    # is applied.
    BatchSize:

        # Max Message Count: The maximum number of messages to permit in a
        # batch. No block will contain more than this number of messages.
        MaxMessageCount: 10

        # Absolute Max Bytes: The absolute maximum number of bytes allowed for
        # the serialized messages in a batch. The maximum block size is this value
        # plus the size of the associated metadata (usually a few KB depending
        # upon the size of the signing identities). Any transaction larger than
        # this value will be rejected by ordering. If the "kafka" OrdererType is
        # selected, set 'message.max.bytes' and 'replica.fetch.max.bytes' on
        # the Kafka brokers to a value that is larger than this one.
        AbsoluteMaxBytes: 10 MB

        # Preferred Max Bytes: The preferred maximum number of bytes allowed
        # for the serialized messages in a batch. Roughly, this field may be considered
        # the best effort maximum size of a batch. A batch will fill with messages
        # until this size is reached (or the max message count, or batch timeout is
        # exceeded). If adding a new message to the batch would cause the batch to
        # exceed the preferred max bytes, then the current batch is closed and written
        # to a block, and a new batch containing the new message is created. If a
        # message larger than the preferred max bytes is received, then its batch
        # will contain only that message. Because messages may be larger than
        # preferred max bytes (up to AbsoluteMaxBytes), some batches may exceed
        # the preferred max bytes, but will always contain exactly one transaction.
        PreferredMaxBytes: 512 KB

    # Max Channels is the maximum number of channels to allow on the ordering
    # network. When set to 0, this implies no maximum number of channels.
    MaxChannels: 0

    Kafka:
        # Brokers: A list of Kafka brokers to which the orderer connects. Edit
        # this list to identify the brokers of the ordering service.
        # NOTE: Use IP:port notation.
        Brokers:
            - kafka0:9092
            - kafka1:9092
            - kafka2:9092

    # Organizations lists the orgs participating on the orderer side of the
    # network.
    Organizations:

    # Policies defines the set of policies at this level of the config tree
    # For Orderer policies, their canonical path is
    # /Channel/Orderer/<PolicyName>
    Policies:
        Readers:
            Type: ImplicitMeta
            Rule: "ANY Readers"
        Writers:
            Type: ImplicitMeta
            Rule: "ANY Writers"
        Admins:
            Type: ImplicitMeta
            Rule: "MAJORITY Admins"
        # BlockValidation specifies what signatures must be included in the block
        # from the orderer for the peer to validate it.
        BlockValidation:
            Type: ImplicitMeta
            Rule: "ANY Writers"

    # Capabilities describes the orderer level capabilities, see the
    # dedicated Capabilities section elsewhere in this file for a full
    # description
    Capabilities:
        <<: *OrdererCapabilities

################################################################################

#

# CHANNEL

#

# This section defines the values to encode into a config transaction or

# genesis block for channel related parameters.

#

################################################################################

Channel: &ChannelDefaults

# Policies defines the set of policies at this level of the config tree

# For Channel policies, their canonical path is

# /Channel/

Policies:

# Who may invoke the 'Deliver' API

Readers:

Type: ImplicitMeta

Rule: "ANY Readers"

# Who may invoke the 'Broadcast' API

Writers:

Type: ImplicitMeta

Rule: "ANY Writers"

# By default, who may modify elements at this config level

Admins:

Type: ImplicitMeta

Rule: "MAJORITY Admins"

# Capabilities describes the channel level capabilities, see the

# dedicated Capabilities section elsewhere in this file for a full

# description

Capabilities:

<<: *ChannelCapabilities

################################################################################

#

# PROFILES

#

# Different configuration profiles may be encoded here to be specified as

# parameters to the configtxgen tool. The profiles which specify consortiums

# are to be used for generating the orderer genesis block. With the correct

# consortium members defined in the orderer genesis block, channel creation

# requests may be generated with only the org member names and a consortium

# name.

#

################################################################################

Profiles:

# TestTwoOrgsOrdererGenesis defines a configuration which uses the Solo orderer

# and contains the orderer organization's MSP definition.

# The consortium SampleConsortium has two members, Org1 and Org2.

TestTwoOrgsOrdererGenesis:

<<: *ChannelDefaults

Orderer:

<<: *OrdererDefaults

Organizations:

- *OrdererOrg

Consortiums:

SampleConsortium:

Organizations:

- *Org1

- *Org2

# Note: for channel creation profiles, only the 'Application' section and

# consortium name are considered.

TestTwoOrgsChannel:

Consortium: SampleConsortium

Application:

<<: *ApplicationDefaults

Organizations:

- *Org1

- *Org2
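The BatchSize comments in the Orderer section describe when a batch is closed and written to a block. A minimal Python sketch of the count and size rules follows. It is not Fabric code: the function name `cut_batches` and the sample sizes are illustrative assumptions, and BatchTimeout and the AbsoluteMaxBytes rejection are deliberately left out.

```python
from typing import List


def cut_batches(sizes: List[int], max_count: int, preferred_max: int) -> List[List[int]]:
    """Group message sizes (in bytes) into batches, following the commented rules.

    Hypothetical helper for illustration only; BatchTimeout is not modelled.
    """
    batches: List[List[int]] = []
    current: List[int] = []
    current_bytes = 0
    for size in sizes:
        # A message larger than the preferred size gets a batch of its own.
        if size > preferred_max:
            if current:
                batches.append(current)
                current, current_bytes = [], 0
            batches.append([size])
            continue
        # Adding this message would exceed the preferred size: close the batch first.
        if current and current_bytes + size > preferred_max:
            batches.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
        # Reaching MaxMessageCount closes the batch immediately.
        if len(current) >= max_count:
            batches.append(current)
            current, current_bytes = [], 0
    if current:
        # In a running orderer this remainder would be cut when BatchTimeout fires.
        batches.append(current)
    return batches


if __name__ == "__main__":
    # MaxMessageCount: 10 and PreferredMaxBytes: 512 KB, as in the file above.
    print(cut_batches([200_000, 200_000, 200_000], 10, 512 * 1024))
```

With the values from this file, three 200 000-byte messages end up in two batches, because the third message would push the first batch past 512 KB.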

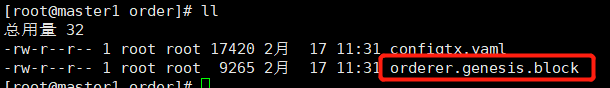
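The Policies blocks in this file use ImplicitMeta rules such as "ANY Readers" and "MAJORITY Admins". As far as I understand Fabric's behaviour, such a rule is satisfied when enough of the same-named sub-policies one level down are satisfied. The Python sketch below illustrates that threshold logic only; the function names are hypothetical and the empty-case handling is an assumption to verify against the Fabric documentation.

```python
from typing import List


def implicit_meta_threshold(quantifier: str, sub_policy_count: int) -> int:
    """Number of sub-policies that must be satisfied (illustrative sketch)."""
    if sub_policy_count == 0:
        # Assumption: with no sub-policies, nothing needs to be satisfied.
        return 0
    if quantifier == "ANY":
        return 1
    if quantifier == "ALL":
        return sub_policy_count
    if quantifier == "MAJORITY":
        return sub_policy_count // 2 + 1
    raise ValueError(f"unknown quantifier: {quantifier}")


def evaluate_rule(rule: str, sub_results: List[bool]) -> bool:
    """Evaluate a rule such as 'MAJORITY Admins' against per-organization results."""
    quantifier, _sub_policy_name = rule.split()
    needed = implicit_meta_threshold(quantifier, len(sub_results))
    return sum(sub_results) >= needed


if __name__ == "__main__":
    # Two organizations, only one Admins sub-policy satisfied.
    print(evaluate_rule("MAJORITY Admins", [True, False]))
    print(evaluate_rule("ANY Readers", [True, False]))
```

With two organizations, "MAJORITY Admins" needs both organizations' Admins policies to be satisfied, while "ANY Readers" needs only one.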

After modifying the configuration file, run the following command to generate the genesis block file:

[root@master1 order]# configtxgen -profile TestTwoOrgsOrdererGenesis -outputBlock ./orderer.genesis.block

2019-02-17 11:31:40.312 CST [common.tools.configtxgen] main -> WARN 001 Omitting the channel ID for configtxgen for output operations is deprecated. Explicitly passing the channel ID will be required in the future, defaulting to 'testchainid'.

2019-02-17 11:31:40.312 CST [common.tools.configtxgen] main -> INFO 002 Loading configuration

2019-02-17 11:31:40.348 CST [common.tools.configtxgen.localconfig] completeInitialization -> INFO 003 orderer type: solo

2019-02-17 11:31:40.349 CST [common.tools.configtxgen.localconfig] Load -> INFO 004 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:31:40.387 CST [common.tools.configtxgen.localconfig] completeInitialization -> INFO 005 orderer type: solo

2019-02-17 11:31:40.387 CST [common.tools.configtxgen.localconfig] LoadTopLevel -> INFO 006 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:31:40.389 CST [common.tools.configtxgen.encoder] NewOrdererOrgGroup -> WARN 007 Default policy emission is deprecated, please include policy specifications for the orderer org group Org1MSP in configtx.yaml

2019-02-17 11:31:40.389 CST [common.tools.configtxgen.encoder] NewOrdererOrgGroup -> WARN 008 Default policy emission is deprecated, please include policy specifications for the orderer org group Org2MSP in configtx.yaml

2019-02-17 11:31:40.389 CST [common.tools.configtxgen] doOutputBlock -> INFO 009 Generating genesis block

2019-02-17 11:31:40.390 CST [common.tools.configtxgen] doOutputBlock -> INFO 00a Writing genesis block

Once the command completes, the genesis block file orderer.genesis.block is generated in the order folder.
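The first WARN line in the log above says that omitting the channel ID is deprecated and that configtxgen falls back to 'testchainid'. A small Python sketch of building the same command with an explicit `-channelID` is shown below. The channel name `sys-channel` and the helper name are assumptions for illustration; the rest of this walkthrough keeps the default 'testchainid'.

```python
import shlex
from typing import List


def genesis_block_command(profile: str, output_path: str, channel_id: str) -> List[str]:
    """Build the configtxgen argument list with an explicit system channel ID."""
    return [
        "configtxgen",
        "-profile", profile,
        "-channelID", channel_id,
        "-outputBlock", output_path,
    ]


# "sys-channel" is a hypothetical name; it must differ from the application channel.
cmd = genesis_block_command("TestTwoOrgsOrdererGenesis", "./orderer.genesis.block", "sys-channel")
print(" ".join(shlex.quote(part) for part in cmd))
# To actually run it: subprocess.run(cmd, check=True) from the order folder.
```

If a different system channel ID is used, the orderer startup log will show that name instead of 'testchainid'.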

4. Generating the ledger genesis block

In Fabric, each channel is its own ledger. The configuration file used to create a channel is also configtx.yaml.

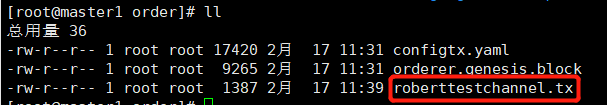

The command that generates the channel creation transaction is as follows:

[root@master1 order]# configtxgen -profile TestTwoOrgsChannel -outputCreateChannelTx ./roberttestchannel.tx -channelID roberttestchannel

2019-02-17 11:39:56.360 CST [common.tools.configtxgen] main -> INFO 001 Loading configuration

2019-02-17 11:39:56.402 CST [common.tools.configtxgen.localconfig] Load -> INFO 002 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:39:56.436 CST [common.tools.configtxgen.localconfig] completeInitialization -> INFO 003 orderer type: solo

2019-02-17 11:39:56.436 CST [common.tools.configtxgen.localconfig] LoadTopLevel -> INFO 004 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:39:56.436 CST [common.tools.configtxgen] doOutputChannelCreateTx -> INFO 005 Generating new channel configtx

2019-02-17 11:39:56.437 CST [common.tools.configtxgen.encoder] NewApplicationOrgGroup -> WARN 006 Default policy emission is deprecated, please include policy specifications for the application org group Org1MSP in configtx.yaml

2019-02-17 11:39:56.437 CST [common.tools.configtxgen.encoder] NewApplicationOrgGroup -> WARN 007 Default policy emission is deprecated, please include policy specifications for the application org group Org2MSP in configtx.yaml

2019-02-17 11:39:56.438 CST [common.tools.configtxgen] doOutputChannelCreateTx -> INFO 008 Writing new channel tx

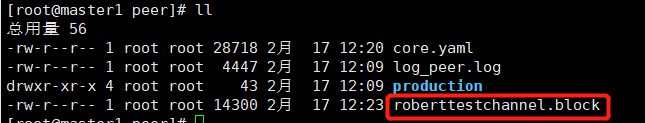

Once the command completes, the file roberttestchannel.tx is generated in the order folder. This file is used to create the channel:

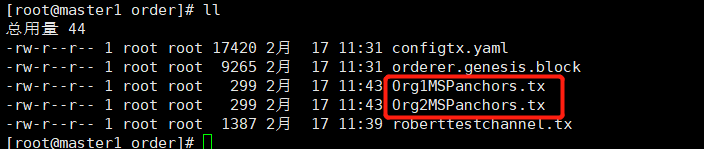
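The channel name passed via `-channelID` cannot be arbitrary. As far as I recall, Fabric 1.4 accepts only IDs that start with a lowercase letter, contain only lowercase letters, digits, dots and dashes, and are shorter than 250 characters. The Python check below encodes that rule as an assumption to verify against the Fabric documentation; the function name is hypothetical.

```python
import re

# Assumed rule: lowercase letter first, then lowercase letters, digits, '.' or '-'.
_CHANNEL_ID_PATTERN = re.compile(r"[a-z][a-z0-9.-]*")
_MAX_CHANNEL_ID_LENGTH = 249  # assumed limit: shorter than 250 characters


def is_valid_channel_id(channel_id: str) -> bool:
    """Return True if the ID satisfies the assumed Fabric naming rule."""
    if len(channel_id) > _MAX_CHANNEL_ID_LENGTH:
        return False
    return _CHANNEL_ID_PATTERN.fullmatch(channel_id) is not None


if __name__ == "__main__":
    for name in ("roberttestchannel", "RobertTestChannel", "1channel"):
        print(name, is_valid_channel_id(name))
```

The name used in this walkthrough, roberttestchannel, passes this check; a name with uppercase letters would not.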

Next, generate the anchor peer files by running the following two commands:

[root@master1 order]# configtxgen -profile TestTwoOrgsChannel -outputAnchorPeersUpdate ./Org1MSPanchors.tx -channelID roberttestchannel -asOrg Org1MSP

2019-02-17 11:43:37.583 CST [common.tools.configtxgen] main -> INFO 001 Loading configuration

2019-02-17 11:43:37.619 CST [common.tools.configtxgen.localconfig] Load -> INFO 002 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:43:37.656 CST [common.tools.configtxgen.localconfig] completeInitialization -> INFO 003 orderer type: solo

2019-02-17 11:43:37.657 CST [common.tools.configtxgen.localconfig] LoadTopLevel -> INFO 004 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:43:37.657 CST [common.tools.configtxgen] doOutputAnchorPeersUpdate -> INFO 005 Generating anchor peer update

2019-02-17 11:43:37.658 CST [common.tools.configtxgen] doOutputAnchorPeersUpdate -> INFO 006 Writing anchor peer update

[root@master1 order]# configtxgen -profile TestTwoOrgsChannel -outputAnchorPeersUpdate ./Org2MSPanchors.tx -channelID roberttestchannel -asOrg Org2MSP

2019-02-17 11:43:47.306 CST [common.tools.configtxgen] main -> INFO 001 Loading configuration

2019-02-17 11:43:47.342 CST [common.tools.configtxgen.localconfig] Load -> INFO 002 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:43:47.379 CST [common.tools.configtxgen.localconfig] completeInitialization -> INFO 003 orderer type: solo

2019-02-17 11:43:47.380 CST [common.tools.configtxgen.localconfig] LoadTopLevel -> INFO 004 Loaded configuration: /opt/hyperledger/order/configtx.yaml

2019-02-17 11:43:47.380 CST [common.tools.configtxgen] doOutputAnchorPeersUpdate -> INFO 005 Generating anchor peer update

2019-02-17 11:43:47.380 CST [common.tools.configtxgen] doOutputAnchorPeersUpdate -> INFO 006 Writing anchor peer update

After both commands complete, two anchor peer files, Org1MSPanchors.tx and Org2MSPanchors.tx, are generated in the order folder.
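At this point the order folder should hold four generated artifacts. A short Python sketch for checking that none is missing before starting the orderer is given below; the helper name `missing_artifacts` is an illustrative assumption, while the file names and the folder /opt/hyperledger/order come from the steps above.

```python
import os
from typing import Iterable, List

# File names produced by the configtxgen commands in the previous steps.
EXPECTED_ARTIFACTS = (
    "orderer.genesis.block",
    "roberttestchannel.tx",
    "Org1MSPanchors.tx",
    "Org2MSPanchors.tx",
)


def missing_artifacts(folder: str, names: Iterable[str] = EXPECTED_ARTIFACTS) -> List[str]:
    """Return the expected file names that do not exist in the folder."""
    return [name for name in names if not os.path.isfile(os.path.join(folder, name))]


if __name__ == "__main__":
    missing = missing_artifacts("/opt/hyperledger/order")
    print("all artifacts present" if not missing else f"missing: {missing}")
```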

5. Starting the orderer node

First, copy the orderer template configuration file into the order folder:

[root@master1 order]# cp -r $GOPATH/src/github.com/hyperledger/fabric/sampleconfig/orderer.yaml /opt/hyperledger/order/

Then edit the orderer.yaml file in the order folder. The modified configuration file is as follows:

# Copyright IBM Corp. All Rights Reserved.

#

# SPDX-License-Identifier: Apache-2.0

#

---

################################################################################

#

# Orderer Configuration

#

# - This controls the type and configuration of the orderer.

#

################################################################################

General:

# Ledger Type: The ledger type to provide to the orderer.

# Two non-production ledger types are provided for test purposes only:

# - ram: An in-memory ledger whose contents are lost on restart.

# - json: A simple file ledger that writes blocks to disk in JSON format.

# Only one production ledger type is provided:

# - file: A production file-based ledger.

LedgerType: file

# Listen address: The IP on which to bind to listen.

ListenAddress: 0.0.0.0

# Listen port: The port on which to bind to listen.

ListenPort: 7050

# TLS: TLS settings for the GRPC server.

TLS:

Enabled: false

# PrivateKey governs the file location of the private key of the TLS certificate.

PrivateKey: /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/server.key

# Certificate governs the file location of the server TLS certificate.

Certificate: /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/server.crt

RootCAs:

- /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/ca.crt

ClientAuthRequired: false

ClientRootCAs:

# Keepalive settings for the GRPC server.

Keepalive:

# ServerMinInterval is the minimum permitted time between client pings.

# If clients send pings more frequently, the server will

# disconnect them.

ServerMinInterval: 60s

# ServerInterval is the time between pings to clients.

ServerInterval: 7200s

# ServerTimeout is the duration the server waits for a response from

# a client before closing the connection.

ServerTimeout: 20s

# Cluster settings for ordering service nodes that communicate with other ordering service nodes

# such as Raft based ordering service.

Cluster:

# ClientCertificate governs the file location of the client TLS certificate

# used to establish mutual TLS connections with other ordering service nodes.

ClientCertificate:

# ClientPrivateKey governs the file location of the private key of the client TLS certificate.

ClientPrivateKey:

# DialTimeout governs the maximum duration of time after which connection

# attempts are considered as failed.

DialTimeout: 5s

# RPCTimeout governs the maximum duration of time after which RPC

# attempts are considered as failed.

RPCTimeout: 7s

# RootCAs governs the file locations of certificates of the Certificate Authorities

# which authorize connections to remote ordering service nodes.

RootCAs:

- /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/ca.crt

# ReplicationBufferSize is the maximum number of bytes that can be allocated

# for each in-memory buffer used for block replication from other cluster nodes.

# Each channel has its own memory buffer.

ReplicationBufferSize: 20971520 # 20MB

# ReplicationPullTimeout is the maximum duration the ordering node will wait for a block

# to be received before it aborts.

ReplicationPullTimeout: 5s

# ReplicationRetryTimeout is the maximum duration the ordering node will wait

# between 2 consecutive attempts.

ReplicationRetryTimeout: 5s

# Genesis method: The method by which the genesis block for the orderer

# system channel is specified. Available options are "provisional", "file":

# - provisional: Utilizes a genesis profile, specified by GenesisProfile,

# to dynamically generate a new genesis block.

# - file: Uses the file provided by GenesisFile as the genesis block.

GenesisMethod: file

# Genesis profile: The profile to use to dynamically generate the genesis

# block to use when initializing the orderer system channel and

# GenesisMethod is set to "provisional". See the configtx.yaml file for the

# descriptions of the available profiles. Ignored if GenesisMethod is set to

# "file".

GenesisProfile: TestOrgsOrdererGenesis

# Genesis file: The file containing the genesis block to use when

# initializing the orderer system channel and GenesisMethod is set to

# "file". Ignored if GenesisMethod is set to "provisional".

GenesisFile: /opt/hyperledger/order/orderer.genesis.block

# LocalMSPDir is where to find the private crypto material needed by the

# orderer. It is set relative here as a default for dev environments but

# should be changed to the real location in production.

LocalMSPDir: /opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/msp

# LocalMSPID is the identity to register the local MSP material with the MSP

# manager. IMPORTANT: The local MSP ID of an orderer needs to match the MSP

# ID of one of the organizations defined in the orderer system channel's

# /Channel/Orderer configuration. The sample organization defined in the

# sample configuration provided has an MSP ID of "SampleOrg".

LocalMSPID: OrdererMSP

# Enable an HTTP service for Go "pprof" profiling as documented at:

# https://golang.org/pkg/net/http/pprof

Profile:

Enabled: false

Address: 0.0.0.0:6060

# BCCSP configures the blockchain crypto service providers.

BCCSP:

# Default specifies the preferred blockchain crypto service provider

# to use. If the preferred provider is not available, the software

# based provider ("SW") will be used.

# Valid providers are:

# - SW: a software based crypto provider

# - PKCS11: a CA hardware security module crypto provider.

Default: SW

# SW configures the software based blockchain crypto provider.

SW:

# TODO: The default Hash and Security level needs refactoring to be

# fully configurable. Changing these defaults requires coordination

# SHA2 is hardcoded in several places, not only BCCSP

Hash: SHA2

Security: 256

# Location of key store. If this is unset, a location will be

# chosen using: 'LocalMSPDir'/keystore

FileKeyStore:

KeyStore:

# Authentication contains configuration parameters related to authenticating

# client messages

Authentication:

# the acceptable difference between the current server time and the

# client's time as specified in a client request message

TimeWindow: 15m

################################################################################

#

# SECTION: File Ledger

#

# - This section applies to the configuration of the file or json ledgers.

#

################################################################################

FileLedger:

# Location: The directory to store the blocks in.

# NOTE: If this is unset, a new temporary location will be chosen every time

# the orderer is restarted, using the prefix specified by Prefix.

Location: /opt/hyperledger/order/production/orderer

# The prefix to use when generating a ledger directory in temporary space.

# Otherwise, this value is ignored.

Prefix: hyperledger-fabric-ordererledger

################################################################################

#

# SECTION: RAM Ledger

#

# - This section applies to the configuration of the RAM ledger.

#

################################################################################

RAMLedger:

# History Size: The number of blocks that the RAM ledger is set to retain.

# WARNING: Appending a block to the ledger might cause the oldest block in

# the ledger to be dropped in order to limit the total number of blocks

# to HistorySize. For example, if history size is 10, when appending block

# 10, block 0 (the genesis block!) will be dropped to make room for block 10.

HistorySize: 1000

################################################################################

#

# SECTION: Kafka

#

# - This section applies to the configuration of the Kafka-based orderer, and

# its interaction with the Kafka cluster.

#

################################################################################

Kafka:

# Retry: What to do if a connection to the Kafka cluster cannot be established,

# or if a metadata request to the Kafka cluster needs to be repeated.

Retry:

# When a new channel is created, or when an existing channel is reloaded

# (in case of a just-restarted orderer), the orderer interacts with the

# Kafka cluster in the following ways:

# 1. It creates a Kafka producer (writer) for the Kafka partition that

# corresponds to the channel.

# 2. It uses that producer to post a no-op CONNECT message to that

# partition

# 3. It creates a Kafka consumer (reader) for that partition.

# If any of these steps fail, they will be re-attempted every

# ShortInterval for a total of ShortTotal, and then every

# LongInterval for a total of LongTotal until they succeed.

# Note that the orderer will be unable to write to or read from a

# channel until all of the steps above have been completed successfully.

ShortInterval: 5s

ShortTotal: 10m

LongInterval: 5m

LongTotal: 12h

# Affects the socket timeouts when waiting for an initial connection, a

# response, or a transmission. See Config.Net for more info:

# https://godoc.org/github.com/Shopify/sarama#Config

NetworkTimeouts:

DialTimeout: 10s

ReadTimeout: 10s

WriteTimeout: 10s

# Affects the metadata requests when the Kafka cluster is in the middle

# of a leader election. See Config.Metadata for more info:

# https://godoc.org/github.com/Shopify/sarama#Config

Metadata:

RetryBackoff: 250ms

RetryMax: 3

# What to do if posting a message to the Kafka cluster fails. See

# Config.Producer for more info:

# https://godoc.org/github.com/Shopify/sarama#Config

Producer:

RetryBackoff: 100ms

RetryMax: 3

# What to do if reading from the Kafka cluster fails. See

# Config.Consumer for more info:

# https://godoc.org/github.com/Shopify/sarama#Config

Consumer:

RetryBackoff: 2s

# Settings to use when creating Kafka topics. Only applies when

# Kafka.Version is v0.10.1.0 or higher

Topic:

# The number of Kafka brokers across which to replicate the topic

ReplicationFactor: 3

# Verbose: Enable logging for interactions with the Kafka cluster.

Verbose: false

# TLS: TLS settings for the orderer's connection to the Kafka cluster.

TLS:

# Enabled: Use TLS when connecting to the Kafka cluster.

Enabled: false

# PrivateKey: PEM-encoded private key the orderer will use for

# authentication.

PrivateKey:

# As an alternative to specifying the PrivateKey here, uncomment the

# following "File" key and specify the file name from which to load the

# value of PrivateKey.

#File: path/to/PrivateKey

# Certificate: PEM-encoded signed public key certificate the orderer will

# use for authentication.

Certificate:

# As an alternative to specifying the Certificate here, uncomment the

# following "File" key and specify the file name from which to load the

# value of Certificate.

#File: path/to/Certificate

# RootCAs: PEM-encoded trusted root certificates used to validate

# certificates from the Kafka cluster.

RootCAs:

# As an alternative to specifying the RootCAs here, uncomment the

# following "File" key and specify the file name from which to load the

# value of RootCAs.

#File: path/to/RootCAs

# SASLPlain: Settings for using SASL/PLAIN authentication with Kafka brokers

SASLPlain:

# Enabled: Use SASL/PLAIN to authenticate with Kafka brokers

Enabled: false

# User: Required when Enabled is set to true

User:

# Password: Required when Enabled is set to true

Password:

# Kafka protocol version used to communicate with the Kafka cluster brokers

# (defaults to 0.10.2.0 if not specified)

Version:

################################################################################

#

# Debug Configuration

#

# - This controls the debugging options for the orderer

#

################################################################################

Debug:

# BroadcastTraceDir when set will cause each request to the Broadcast service

# for this orderer to be written to a file in this directory

BroadcastTraceDir:

# DeliverTraceDir when set will cause each request to the Deliver service

# for this orderer to be written to a file in this directory

DeliverTraceDir:

################################################################################

#

# Operations Configuration

#

# - This configures the operations server endpoint for the orderer

#

################################################################################

Operations:

# host and port for the operations server

ListenAddress: 127.0.0.1:8443

# TLS configuration for the operations endpoint

TLS:

# TLS enabled

Enabled: false

# Certificate is the location of the PEM encoded TLS certificate

Certificate:

# PrivateKey points to the location of the PEM-encoded key

PrivateKey:

# Require client certificate authentication to access all resources

ClientAuthRequired: false

# Paths to PEM encoded ca certificates to trust for client authentication

RootCAs: []

################################################################################

#

# Metrics Configuration

#

# - This configures metrics collection for the orderer

#

################################################################################

Metrics:

# The metrics provider is one of statsd, prometheus, or disabled

Provider: disabled

# The statsd configuration

Statsd:

# network type: tcp or udp

Network: udp

# the statsd server address

Address: 127.0.0.1:8125

# The interval at which locally cached counters and gauges are pushed

# to statsd; timings are pushed immediately

WriteInterval: 30s

# The prefix is prepended to all emitted statsd metrics

Prefix:

################################################################################

#

# Consensus Configuration

#

# - This section contains config options for a consensus plugin. It is opaque

# to orderer, and completely up to consensus implementation to make use of.

#

################################################################################

Consensus:

# The allowed key-value pairs here depend on consensus plugin. For etcd/raft,

# we use following options:

# WALDir specifies the location at which Write Ahead Logs for etcd/raft are

# stored. Each channel will have its own subdir named after channel ID.

WALDir: /var/hyperledger/production/orderer/etcdraft/wal

# SnapDir specifies the location at which snapshots for etcd/raft are

# stored. Each channel will have its own subdir named after channel ID.

SnapDir: /var/hyperledger/production/orderer/etcdraft/snapshot
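Settings in orderer.yaml do not have to be changed by editing the file. To my knowledge the orderer also reads environment variables that use the prefix ORDERER and the upper-cased key path with dots replaced by underscores. The Python sketch below shows only that naming convention; the helper name is hypothetical, and the convention itself should be checked against the Fabric documentation.

```python
def env_var_for(key_path: str, prefix: str = "ORDERER") -> str:
    """Map a dotted orderer.yaml key path to the assumed environment variable name."""
    return f"{prefix}_{key_path.replace('.', '_').upper()}"


if __name__ == "__main__":
    for key in ("General.ListenPort", "General.GenesisFile", "FileLedger.Location"):
        print(key, "->", env_var_for(key))
```

Under that assumption, exporting ORDERER_GENERAL_LISTENPORT before starting the orderer would take precedence over the ListenPort value in the file.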

Next, start the orderer:

[root@master1 order]# orderer start

2019-02-17 11:58:02.236 CST [localconfig] completeInitialization -> INFO 001 Kafka.Version unset, setting to 0.10.2.0

2019-02-17 11:58:02.251 CST [orderer.common.server] prettyPrintStruct -> INFO 002 Orderer config values:

General.LedgerType = "file"

General.ListenAddress = "0.0.0.0"

General.ListenPort = 7050

General.TLS.Enabled = false

General.TLS.PrivateKey = "/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/server.key"

General.TLS.Certificate = "/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/server.crt"

General.TLS.RootCAs = [/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/ca.crt]

General.TLS.ClientAuthRequired = false

General.TLS.ClientRootCAs = []

General.Cluster.RootCAs = [/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/tls/ca.crt]

General.Cluster.ClientCertificate = ""

General.Cluster.ClientPrivateKey = ""

General.Cluster.DialTimeout = 5s

General.Cluster.RPCTimeout = 7s

General.Cluster.ReplicationBufferSize = 20971520

General.Cluster.ReplicationPullTimeout = 5s

General.Cluster.ReplicationRetryTimeout = 5s

General.Keepalive.ServerMinInterval = 1m0s

General.Keepalive.ServerInterval = 2h0m0s

General.Keepalive.ServerTimeout = 20s

General.GenesisMethod = "file"

General.GenesisProfile = "TestOrgsOrdererGenesis"

General.SystemChannel = "test-system-channel-name"

General.GenesisFile = "/opt/hyperledger/order/orderer.genesis.block"

General.Profile.Enabled = false

General.Profile.Address = "0.0.0.0:6060"

General.LocalMSPDir = "/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/msp"

General.LocalMSPID = "OrdererMSP"

General.BCCSP.ProviderName = "SW"

General.BCCSP.SwOpts.SecLevel = 256

General.BCCSP.SwOpts.HashFamily = "SHA2"

General.BCCSP.SwOpts.Ephemeral = false

General.BCCSP.SwOpts.FileKeystore.KeyStorePath = "/opt/hyperledger/fabricconfig/crypto-config/ordererOrganizations/asn417.com/orderers/orderer.asn417.com/msp/keystore"

General.BCCSP.SwOpts.DummyKeystore =

General.BCCSP.SwOpts.InmemKeystore =

General.BCCSP.PluginOpts =

General.Authentication.TimeWindow = 15m0s

FileLedger.Location = "/opt/hyperledger/order/production/orderer"

FileLedger.Prefix = "hyperledger-fabric-ordererledger"

RAMLedger.HistorySize = 1000

Kafka.Retry.ShortInterval = 5s

Kafka.Retry.ShortTotal = 10m0s

Kafka.Retry.LongInterval = 5m0s

Kafka.Retry.LongTotal = 12h0m0s

Kafka.Retry.NetworkTimeouts.DialTimeout = 10s

Kafka.Retry.NetworkTimeouts.ReadTimeout = 10s

Kafka.Retry.NetworkTimeouts.WriteTimeout = 10s

Kafka.Retry.Metadata.RetryMax = 3

Kafka.Retry.Metadata.RetryBackoff = 250ms

Kafka.Retry.Producer.RetryMax = 3

Kafka.Retry.Producer.RetryBackoff = 100ms

Kafka.Retry.Consumer.RetryBackoff = 2s

Kafka.Verbose = false

Kafka.Version = 0.10.2.0

Kafka.TLS.Enabled = false

Kafka.TLS.PrivateKey = ""

Kafka.TLS.Certificate = ""

Kafka.TLS.RootCAs = []

Kafka.TLS.ClientAuthRequired = false

Kafka.TLS.ClientRootCAs = []

Kafka.SASLPlain.Enabled = false

Kafka.SASLPlain.User = ""

Kafka.SASLPlain.Password = ""

Kafka.Topic.ReplicationFactor = 3

Debug.BroadcastTraceDir = ""

Debug.DeliverTraceDir = ""

Consensus = map[WALDir:/var/hyperledger/production/orderer/etcdraft/wal SnapDir:/var/hyperledger/production/orderer/etcdraft/snapshot]

Operations.ListenAddress = "127.0.0.1:8443"

Operations.TLS.Enabled = false

Operations.TLS.PrivateKey = ""

Operations.TLS.Certificate = ""

Operations.TLS.RootCAs = []

Operations.TLS.ClientAuthRequired = false

Operations.TLS.ClientRootCAs = []

Metrics.Provider = "disabled"

Metrics.Statsd.Network = "udp"

Metrics.Statsd.Address = "127.0.0.1:8125"

Metrics.Statsd.WriteInterval = 30s

Metrics.Statsd.Prefix = ""

2019-02-17 11:58:02.268 CST [fsblkstorage] newBlockfileMgr -> INFO 003 Getting block information from block storage

2019-02-17 11:58:02.284 CST [orderer.commmon.multichannel] Initialize -> INFO 004 Starting system channel 'testchainid' with genesis block hash 569be2315ff00b113f5de9cdb399f2d71c8164f919f4e7013997a33bd1fa3f7a and orderer type solo

2019-02-17 11:58:02.284 CST [orderer.common.server] Start -> INFO 005 Starting orderer:

Version: 1.4.0-rc2

Commit SHA: b87ec80

Go version: go1.11.5

OS/Arch: linux/amd64

2019-02-17 11:58:02.284 CST [orderer.common.server] Start -> INFO 006 Beginning to serve requests
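The durations in the startup log look different from those in orderer.yaml (for example, ServerMinInterval: 60s is printed as 1m0s and ServerInterval: 7200s as 2h0m0s) because the orderer prints them in Go's duration format. The Python sketch below mimics that format for whole seconds only, which can help when comparing the log against the file; the function name is an illustrative assumption.

```python
def go_duration(seconds: int) -> str:
    """Format whole seconds the way Go prints a time.Duration (e.g. 60 -> '1m0s')."""
    hours, remainder = divmod(seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    if hours:
        return f"{hours}h{minutes}m{secs}s"
    if minutes:
        return f"{minutes}m{secs}s"
    return f"{secs}s"


if __name__ == "__main__":
    # Values taken from the Keepalive and Authentication settings above.
    for value in (60, 7200, 20, 15 * 60):
        print(value, "->", go_duration(value))
```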

(The orderer service is now running in this terminal, so open a new terminal for the following steps.)
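From the new terminal, one way to confirm that the orderer is accepting connections is to open a TCP connection to its listen port (7050 in the orderer.yaml above). The Python sketch below does only that; the helper name is hypothetical, and a successful connection shows that something is listening on the port, not that it is a healthy orderer.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and unreachable hosts.
        return False


# Example (run on the orderer host): is_listening("127.0.0.1", 7050)
```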

6. Starting the peer node

Likewise, first create a folder to hold the peer module's configuration file and block data:

[root@master1 ~]# mkdir /opt/hyperledger/peer

Then copy the template configuration file core.yaml into the peer folder and edit it. The modified content is as follows:

# Copyright IBM Corp. All Rights Reserved.

#

# SPDX-License-Identifier: Apache-2.0

#

###############################################################################

#

# Peer section

#

###############################################################################

peer:

# The Peer id is used for identifying this Peer instance.

id: peer0.org1.asn417.com

# The networkId allows for logical separation of networks

networkId: dev

# The Address at local network interface this Peer will listen on.

# By default, it will listen on all network interfaces

listenAddress: 0.0.0.0:7051

# The endpoint this peer uses to listen for inbound chaincode connections.

# If this is commented-out, the listen address is selected to be

# the peer's address (see below) with port 7052

# chaincodeListenAddress: 0.0.0.0:7052

# The endpoint the chaincode for this peer uses to connect to the peer.

# If this is not specified, the chaincodeListenAddress address is selected.

# And if chaincodeListenAddress is not specified, address is selected from

# peer listenAddress.

# chaincodeAddress: 0.0.0.0:7052

# When used as peer config, this represents the endpoint to other peers

# in the same organization. For peers in other organization, see

# gossip.externalEndpoint for more info.

# When used as CLI config, this means the peer's endpoint to interact with

address: peer0.org1.asn417.com:7051

# Whether the Peer should programmatically determine its address

# This case is useful for docker containers.

addressAutoDetect: false

# Setting for runtime.GOMAXPROCS(n). If n < 1, it does not change the

# current setting

gomaxprocs: -1

# Keepalive settings for peer server and clients

keepalive:

# MinInterval is the minimum permitted time between client pings.

# If clients send pings more frequently, the peer server will

# disconnect them

minInterval: 60s

# Client keepalive settings for communicating with other peer nodes

client:

# Interval is the time between pings to peer nodes. This must be

# greater than or equal to the minInterval specified by peer

# nodes

interval: 60s

# Timeout is the duration the client waits for a response from

# peer nodes before closing the connection

timeout: 20s

# DeliveryClient keepalive settings for communication with ordering

# nodes.

deliveryClient:

# Interval is the time between pings to ordering nodes. This must be

# greater than or equal to the minInterval specified by ordering

# nodes.

interval: 60s

# Timeout is the duration the client waits for a response from

# ordering nodes before closing the connection

timeout: 20s

# Gossip related configuration

gossip:

# Bootstrap set to initialize gossip with.

# This is a list of other peers that this peer reaches out to at startup.

# Important: The endpoints here have to be endpoints of peers in the same

# organization, because the peer would refuse connecting to these endpoints

# unless they are in the same organization as the peer.

bootstrap: 127.0.0.1:7051

# NOTE: orgLeader and useLeaderElection parameters are mutually exclusive.

# Setting both to true would result in the termination of the peer

# since this is undefined state. If the peers are configured with

# useLeaderElection=false, make sure there is at least 1 peer in the

# organization whose orgLeader is set to true.

# Defines whether the peer will initialize a dynamic algorithm for

# "leader" selection, where leader is the peer to establish

# connection with ordering service and use delivery protocol

# to pull ledger blocks from ordering service. It is recommended to

# use leader election for large networks of peers.

useLeaderElection: true

# Statically defines the peer to be an organization "leader",

# which means that the current peer will maintain a connection

# with the ordering service and disseminate blocks across peers in

# its own organization

orgLeader: false
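
# Illustrative alternative (an assumption for comparison, not part of this setup):

# to pin the leader statically instead of using election, one peer in the

# organization would carry the two values below, and the remaining peers would

# keep orgLeader: false. Both values must never be true at the same time.

# useLeaderElection: false

# orgLeader: true

# These keys are commonly overridden through the environment variables

# CORE_PEER_GOSSIP_USELEADERELECTION and CORE_PEER_GOSSIP_ORGLEADER.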

# Interval for membershipTracker polling

membershipTrackerInterval: 5s

# Overrides the endpoint that the peer publishes to peers

# in its organization. For peers in foreign organizations

# see 'externalEndpoint'

endpoint:

# Maximum count of blocks stored in memory

maxBlockCountToStore: 100

# Max time between consecutive message pushes(unit: millisecond)

maxPropagationBurstLatency: 10ms

# Max number of messages stored until a push is triggered to remote peers

maxPropagationBurstSize: 10

# Number of times a message is pushed to remote peers

propagateIterations: 1

# Number of peers selected to push messages to

propagatePeerNum: 3

# Determines frequency of pull phases(unit: second)

# Must be greater than digestWaitTime + responseWaitTime

pullInterval: 4s

# Number of peers to pull from

pullPeerNum: 3

# Determines frequency of pulling state info messages from peers(unit: second)

requestStateInfoInterval: 4s

# Determines frequency of pushing state info messages to peers(unit: second)

publishStateInfoInterval: 4s

# Maximum time a stateInfo message is kept until expired

stateInfoRetentionInterval:

# Time from startup during which certificates are included in Alive messages (unit: second)

publishCertPeriod: 10s

# Should we skip verifying block messages or not (currently not in use)

skipBlockVerification: false

# Dial timeout(unit: second)

dialTimeout: 3s

# Connection timeout(unit: second)

connTimeout: 2s

# Buffer size of received messages

recvBuffSize: 20

# Buffer size of sending messages

sendBuffSize: 200

# Time to wait before pull engine processes incoming digests (unit: second)

# Should be slightly smaller than requestWaitTime

digestWaitTime: 1s

# Time to wait before pull engine removes incoming nonce (unit: milliseconds)

# Should be slightly bigger than digestWaitTime

requestWaitTime: 1500ms

# Time to wait before pull engine ends pull (unit: second)

responseWaitTime: 2s
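
# Worked check of the pull timing constraints, using only the values in this file

# (a reading aid, not an upstream comment):

# digestWaitTime (1s) < requestWaitTime (1500ms), as recommended above

# digestWaitTime (1s) + responseWaitTime (2s) = 3s < pullInterval (4s), as required

# If any of these three values is raised, pullInterval should be rechecked

# against the sum so that it stays strictly larger.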

# Alive check interval(unit: second)

aliveTimeInterval: 5s

# Alive expiration timeout(unit: second)

aliveExpirationTimeout: 25s

# Reconnect interval(unit: second)

reconnectInterval: 25s

# This is an endpoint that is published to peers outside of the organization.

# If this isn't set, the peer will not be known to other organizations.

externalEndpoint:

# Leader election service configuration

election:

# Longest time peer waits for stable membership during leader election startup (unit: second)

startupGracePeriod: 15s

# Interval at which gossip membership is sampled to check its stability (unit: second)

membershipSampleInterval: 1s

# Time that must pass since the last declaration message before the peer decides to perform leader election (unit: second)

leaderAliveThreshold: 10s

# Time between the peer sending a propose message and declaring itself leader (sending a declaration message) (unit: second)

leaderElectionDuration: 5s

pvtData:

# pullRetryThreshold determines the maximum time the peer keeps trying to pull the private data for a given block

# from other peers before the block is committed without that private data

pullRetryThreshold: 60s

# As private data enters the transient store, it is associated with the peer's ledger's height at that time.

# transientstoreMaxBlockRetention defines the maximum difference between the current ledger's height upon commit,

# and the private data residing inside the transient store that is guaranteed not to be purged.

# Private data is purged from the transient store when blocks with sequences that are multiples

# of transientstoreMaxBlockRetention are committed.

transientstoreMaxBlockRetention: 1000
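
# Worked example with the value above, as read from the comment (a reading aid,

# not an upstream comment): with transientstoreMaxBlockRetention: 1000, purging

# of the transient store is triggered when blocks 1000, 2000, 3000, ... are

# committed. Entries associated with a ledger height no more than 1000 blocks

# below the committed height are guaranteed to be kept.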

# pushAckTimeout is the maximum time to wait for an acknowledgement from each peer

# at private data push at endorsement time.

pushAckTimeout: 3s

# Block-to-live pulling margin, used as a buffer

# to prevent the peer from trying to pull private data

# from other peers when that data is soon to be purged (within the next N blocks).

# This helps a newly joined peer catch up to current

# blockchain height quicker.

btlPullMargin: 10

# Reconciliation runs in an endless loop; in each iteration the reconciler tries to

# pull the most recent missing blocks from other peers, up to a maximum batch size.

# reconcileBatchSize determines the maximum batch size of missing private data that will be reconciled in a

# single iteration.

reconcileBatchSize: 10

# reconcileSleepInterval determines the time the reconciler sleeps from the end of an iteration until the beginning

# of the next reconciliation iteration.

reconcileSleepInterval: 1m

# reconciliationEnabled is a flag that indicates whether private data reconciliation is enabled or not.

reconciliationEnabled: true

# TLS Settings

# Note that peer-chaincode connections through chaincodeListenAddress do

# not use mutual TLS auth. See comments on chaincodeListenAddress for more info

tls:

# Require server-side TLS

enabled: false

# Require client certificates / mutual TLS.

# Note that clients that are not configured to use a certificate will

# fail to connect to the peer.

clientAuthRequired: false

# X.509 certificate used for TLS server

cert:

file: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/peers/peer0.org1.asn417.com/tls/server.crt

# Private key used for TLS server (and client if clientAuthRequired

# is set to true)

key:

file: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/peers/peer0.org1.asn417.com/tls/server.key

# Trusted root certificate chain for tls.cert

rootcert:

file: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/peers/peer0.org1.asn417.com/tls/ca.crt

# Set of root certificate authorities used to verify client certificates

clientRootCAs:

files:

- /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/peers/peer0.org1.asn417.com/tls/ca.crt
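
# Illustrative sketch (an assumption, not part of the original setup): this file

# leaves TLS off (enabled: false, clientAuthRequired: false). Assuming the

# certificate, key and CA files listed above exist at those paths, server-side

# TLS would be switched on with the first value below; the second value is only

# needed when client certificates (mutual TLS) should also be required.

# enabled: true

# clientAuthRequired: true

# Server-side TLS is often toggled with the environment variable

# CORE_PEER_TLS_ENABLED instead of editing this file.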

# Private key used for TLS when making client connections. If

# not set, peer.tls.key.file will be used instead

clientKey:

file:

# X.509 certificate used for TLS when making client connections.

# If not set, peer.tls.cert.file will be used instead

clientCert:

file:

# Authentication contains configuration parameters related to authenticating

# client messages

authentication:

# the acceptable difference between the current server time and the

# client's time as specified in a client request message

timewindow: 15m

# Path on the file system where the peer will store data (e.g. ledger). This

# location must be access control protected to prevent unintended

# modification that might corrupt the peer operations.

fileSystemPath: /opt/hyperledger/peer/production

# BCCSP (Blockchain crypto provider): Select which crypto implementation or

# library to use

BCCSP:

Default: SW

# Settings for the SW crypto provider (i.e. when DEFAULT: SW)

SW:

# TODO: The default Hash and Security level need refactoring to be

# fully configurable. Changing these defaults requires coordination;

# SHA2 is hardcoded in several places, not only BCCSP

Hash: SHA2

Security: 256

# Location of Key Store

FileKeyStore:

# If "", defaults to 'mspConfigPath'/keystore

KeyStore:

# Settings for the PKCS#11 crypto provider (i.e. when DEFAULT: PKCS11)

PKCS11:

# Location of the PKCS11 module library

Library:

# Token Label

Label:

# User PIN

Pin:

Hash:

Security:

FileKeyStore:

KeyStore:

# Path on the file system where peer will find MSP local configurations

mspConfigPath: /opt/hyperledger/fabricconfig/crypto-config/peerOrganizations/org1.asn417.com/peers/peer0.org1.asn417.com/msp

# Identifier of the local MSP

# ----!!!!IMPORTANT!!!-!!!IMPORTANT!!!-!!!IMPORTANT!!!!----

# Deployers need to change the value of the localMspId string.

# In particular, the name of the local MSP ID of a peer needs

# to match the name of one of the MSPs in each of the channels

# that this peer is a member of. Otherwise this peer's messages

# will not be identified as valid by other nodes.

localMspId: Org1MSP

# CLI common client config options

client:

# connection timeout

connTimeout: 3s

# Delivery service related config

deliveryclient: