Introduction to Self-Driving Car Systems (29): Training a Traffic Light Detection Network with the TensorFlow Object Detection API on a GPU and on Google Cloud TPU

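This article walks through training a traffic light detection network with the TensorFlow Object Detection API. We use the LISA dataset, convert it to TFRecord format with a short script, train both on a local GPU and on a TPU provided by Google Cloud, and finally export the frozen protobuf graph and validate the model in a Jupyter notebook.

First, thanks to Google's TensorFlow Research Cloud team for providing a free Cloud TPU. In this article I use a Cloud TPU v2, which has 8 cores with 64 GB of memory in total (the counterpart of GPU memory) and delivers up to 180 TFLOPS.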

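Environment Setup

Local GPU Environment

We start with the environment for training on a local GPU. Make sure your machine has a reasonably powerful GPU (a GTX 1060 or better is recommended), runs Ubuntu or another Linux distribution, and has CUDA and cuDNN installed. The code in this article targets TensorFlow 1.x (the notebook used later requires 1.9 or newer). Install tensorflow-gpu and the other dependencies: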

pip install tensorflow-gpu

sudo apt-get install protobuf-compiler python-pil python-lxml python-tk

pip install --user Cython

pip install --user contextlib2

pip install --user jupyter

pip install --user matplotlib

Install the COCO API:

git clone https://github.com/cocodataset/cocoapi.git

cd cocoapi/PythonAPI

make

cp -r pycocotools <path_to_tensorflow>/models/research/

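Clone the models repository (the cp command above needs this directory to exist, so clone it first if you have not already):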

git clone https://github.com/tensorflow/models.git

cd into models/research/ and run:

protoc object_detection/protos/*.proto --python_out=.

Add the current directory (research) and its slim subdirectory to the Python path:

export PYTHONPATH=$PYTHONPATH:`pwd`:`pwd`/slim

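All of the steps above follow the official installation guide: https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/installation.md

The local environment is now ready. Later in the article we show how to set up the same environment on Google Cloud.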
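Before moving on, it can help to confirm that the packages installed above are visible to the interpreter. The following is a minimal sketch, assuming Python 3; `check_modules` is a hypothetical helper, not part of the Object Detection API, and it only checks that each top-level module can be located, not that it works.

```python
import importlib.util


def check_modules(names):
    """Return {module_name: bool} telling whether each top-level module can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    # Top-level packages the steps above are expected to provide.
    required = ["tensorflow", "object_detection", "pycocotools", "PIL", "lxml", "matplotlib"]
    for name, found in check_modules(required).items():
        print("{:<18} {}".format(name, "ok" if found else "MISSING"))
```

If object_detection is reported as missing, the usual cause is that the PYTHONPATH export was run in a different shell session.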

Preparing the Traffic Light Detection Dataset

First download a traffic light dataset. This article uses the LISA traffic light dataset, available at:

http://cvrr.ucsd.edu/vivachallenge/index.php/traffic-light/traffic-light-detection/

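We train on the Day Train Set (12.4 GB) and the Night Train Set (0.8 GB). After downloading and extracting them you get the raw images (stored under the frames folder) and the annotation CSV file (named frameAnnotationsBOX.csv).

Write a script, create_lisa_tf_record.py, that saves the LISA dataset in TFRecord format: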

#!/usr/bin/env python
import os
import csv
import io
import itertools
import hashlib
import random

import PIL.Image
import tensorflow as tf
import contextlib2

from object_detection.utils import label_map_util
from object_detection.utils import dataset_util
from object_detection.dataset_tools import tf_record_creation_util

ANNOTATION = 'frameAnnotationsBOX.csv'
FRAMES = 'frames'

# Collapse the six LISA annotation tags into three colour classes.
MAP = {
    'go': 'green',
    'goLeft': 'green',
    'stop': 'red',
    'stopLeft': 'red',
    'warning': 'yellow',
    'warningLeft': 'yellow'
}

flags = tf.app.flags
flags.DEFINE_string('data_dir', '', 'Root directory to LISA dataset.')
flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
flags.DEFINE_string('label_map_path', 'data/lisa_label_map.pbtxt',
                    'Path to label map proto')
FLAGS = flags.FLAGS

# Image size of the first frame; every later frame must match it.
width = None
height = None


def process_frame(label_map_dict, frame):
    """Build a tf.train.Example for one frame, or return None if it is unusable."""
    global width
    global height

    filename, xmin, ymin, xmax, ymax, classes = frame

    if not os.path.exists(filename):
        tf.logging.error("File %s not found", filename)
        return

    with tf.gfile.GFile(filename, 'rb') as img:
        encoded_png = img.read()

    png = PIL.Image.open(io.BytesIO(encoded_png))
    if png.format != 'PNG':
        tf.logging.error("File %s has unexpected image format '%s'", filename, png.format)
        return

    if width is None and height is None:
        width = png.width
        height = png.height
        tf.logging.info('Expected image size: %dx%d', width, height)

    if width != png.width or height != png.height:
        tf.logging.error('File %s has unexpected size', filename)
        return

    print(filename)
    print(classes)

    key = hashlib.sha256(encoded_png).hexdigest()
    labels = [ label_map_dict[c] for c in classes ]
    # Box coordinates are stored normalized to [0, 1].
    xmin = [ float(x)/width for x in xmin ]
    xmax = [ float(x)/width for x in xmax ]
    ymin = [ float(y)/height for y in ymin ]
    ymax = [ float(y)/height for y in ymax ]
    classes = [ c.encode('utf8') for c in classes ]

    example = tf.train.Example(features=tf.train.Features(feature={
        'image/height': dataset_util.int64_feature(height),
        'image/width': dataset_util.int64_feature(width),
        'image/filename': dataset_util.bytes_feature(filename.encode('utf8')),
        'image/source_id': dataset_util.bytes_feature(filename.encode('utf8')),
        'image/key/sha256': dataset_util.bytes_feature(key.encode('utf8')),
        'image/encoded': dataset_util.bytes_feature(encoded_png),
        'image/format': dataset_util.bytes_feature('png'.encode('utf8')),
        'image/object/bbox/xmin': dataset_util.float_list_feature(xmin),
        'image/object/bbox/xmax': dataset_util.float_list_feature(xmax),
        'image/object/bbox/ymin': dataset_util.float_list_feature(ymin),
        'image/object/bbox/ymax': dataset_util.float_list_feature(ymax),
        'image/object/class/text': dataset_util.bytes_list_feature(classes),
        'image/object/class/label': dataset_util.int64_list_feature(labels)
    }))
    return example


def create_frame(root, frame, records):
    """Yield one (filename, boxes..., classes) tuple for all annotations of a frame."""
    filename = os.path.join(root, FRAMES, os.path.basename(frame))
    if not os.path.exists(filename):
        tf.logging.error("File %s not found", filename)
        return

    xmin = []
    ymin = []
    xmax = []
    ymax = []
    classes = []

    for r in records:
        if r['Annotation tag'] not in MAP:
            continue
        classes.append(MAP[r['Annotation tag']])
        xmin.append(r['Upper left corner X'])
        xmax.append(r['Lower right corner X'])
        ymin.append(r['Upper left corner Y'])
        ymax.append(r['Lower right corner Y'])

    yield (filename, xmin, ymin, xmax, ymax, classes)


def process_annotation(root):
    """Read the annotation CSV under root and yield one entry per frame."""
    tf.logging.info('Processing %s', os.path.join(root, ANNOTATION))
    with tf.gfile.GFile(os.path.join(root, ANNOTATION)) as a:
        annotation = a.read()
    # Python 2 returns bytes here, Python 3 returns text.
    if isinstance(annotation, bytes):
        annotation = annotation.decode('utf-8')

    with io.StringIO(annotation) as a:
        data = csv.DictReader(a, delimiter=';')
        # groupby only merges consecutive rows, so rows must be ordered by filename.
        for key, group in itertools.groupby(data, lambda r: r['Filename']):
            for e in create_frame(root, key, group):
                yield e


def main(_):
    label_map_dict = label_map_util.get_label_map_dict(FLAGS.label_map_path)

    frames = []
    for r, d, f in tf.gfile.Walk(FLAGS.data_dir, in_order=True):
        if ANNOTATION in f:
            del d[:]
            for e in process_annotation(r):
                frames.append(e)

    # random.shuffle(frames)
    num_shards = 30
    with contextlib2.ExitStack() as tf_record_close_stack:
        output_tfrecords = tf_record_creation_util.open_sharded_output_tfrecords(
            tf_record_close_stack, FLAGS.output_path, num_shards)
        for index, f in enumerate(frames):
            tf_example = process_frame(label_map_dict, f)
            if tf_example is None:
                # process_frame returns None for missing or unexpected files.
                continue
            output_shard_index = index % num_shards
            output_tfrecords[output_shard_index].write(tf_example.SerializeToString())


if __name__ == '__main__':
    tf.logging.set_verbosity(tf.logging.INFO)
    tf.app.run()

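Copy this script into the models/research directory and run it as follows:

python create_lisa_tf_record.py \
    --data_dir={path to the LISA dataset} \
    --output_path={path for the output record files, including the record file name} \
    --label_map_path={path to lisa_label_map.pbtxt}

The label_map_path flag points to the file that maps class names to integer label ids. We only detect red, green, and yellow lights, so there are just three classes. The content of lisa_label_map.pbtxt is: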

item {
  id: 1
  name: 'green'
}

item {
  id: 2
  name: 'red'
}

item {
  id: 3
  name: 'yellow'
}
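To make the name-to-id mapping concrete, here is a small sketch that parses a label map of this flat form using only the standard library. In the script above the real work is done by `label_map_util.get_label_map_dict`; `parse_label_map` below is a hypothetical stand-in that handles only simple `id` and `name` fields.

```python
import re

LABEL_MAP_TEXT = """
item {
  id: 1
  name: 'green'
}
item {
  id: 2
  name: 'red'
}
item {
  id: 3
  name: 'yellow'
}
"""

ITEM_RE = re.compile(r"item\s*\{(.*?)\}", re.DOTALL)


def parse_label_map(text):
    """Parse a flat label map into {name: id}; nested fields are not supported."""
    mapping = {}
    for body in ITEM_RE.findall(text):
        item_id = re.search(r"\bid\s*:\s*(\d+)", body)
        name = re.search(r"\bname\s*:\s*['\"]([^'\"]+)['\"]", body)
        if item_id is None or name is None:
            raise ValueError("item is missing id or name: %r" % body)
        mapping[name.group(1)] = int(item_id.group(1))
    return mapping


label_map_dict = parse_label_map(LABEL_MAP_TEXT)
print(label_map_dict)  # {'green': 1, 'red': 2, 'yellow': 3}
```

The ids start at 1 because the Object Detection API reserves 0 for the background class.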

Note that the LISA dataset has six annotation tags: go, goLeft, stop, stopLeft, warning, and warningLeft. The script above maps them onto three classes: green, red, and yellow.
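The per-frame logic of the script (grouping CSV rows by file, collapsing six tags into three classes, and normalizing box coordinates) can be tried without TensorFlow. This is a sketch under stated assumptions: the column names are the ones the script reads, but the sample rows and file names are made up, and the 1280x960 image size is hard-coded here instead of being read from the image.

```python
import csv
import io
import itertools

MAP = {
    'go': 'green', 'goLeft': 'green',
    'stop': 'red', 'stopLeft': 'red',
    'warning': 'yellow', 'warningLeft': 'yellow',
}

# Hypothetical sample rows; only the column names come from the script.
SAMPLE_CSV = (
    "Filename;Annotation tag;Upper left corner X;Upper left corner Y;"
    "Lower right corner X;Lower right corner Y\n"
    "dayTraining/a--00000.png;go;640;240;672;288\n"
    "dayTraining/a--00000.png;stopLeft;320;480;352;528\n"
    "dayTraining/a--00001.png;warning;0;0;128;96\n"
)

WIDTH, HEIGHT = 1280, 960  # assumed image size


def frames_from_csv(text):
    """Return [(filename, [box, ...]), ...] with classes mapped and boxes normalized."""
    rows = csv.DictReader(io.StringIO(text), delimiter=';')
    frames = []
    # groupby merges consecutive rows only, so the CSV must be ordered by filename.
    for filename, group in itertools.groupby(rows, key=lambda r: r['Filename']):
        boxes = []
        for r in group:
            tag = r['Annotation tag']
            if tag not in MAP:
                continue  # ignore tags outside the six known ones
            boxes.append({
                'class': MAP[tag],
                'xmin': float(r['Upper left corner X']) / WIDTH,
                'ymin': float(r['Upper left corner Y']) / HEIGHT,
                'xmax': float(r['Lower right corner X']) / WIDTH,
                'ymax': float(r['Lower right corner Y']) / HEIGHT,
            })
        frames.append((filename, boxes))
    return frames


frames = frames_from_csv(SAMPLE_CSV)
for filename, boxes in frames:
    print(filename, [b['class'] for b in boxes])
```

With the sample rows, the first frame carries two boxes (green and red) and the second carries one (yellow).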

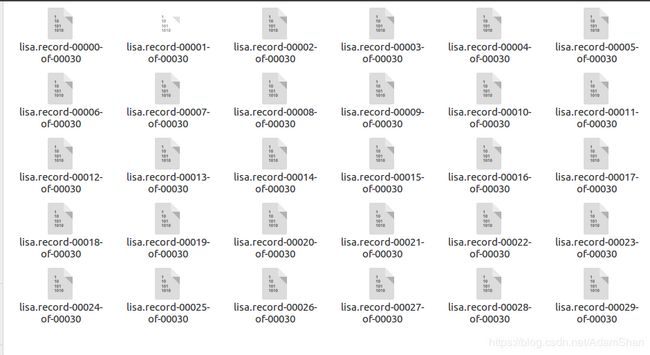

Because the dataset is large, we do not store everything in a single TFRecord file. The following part of the script splits the output into 30 shards:

num_shards = 30
with contextlib2.ExitStack() as tf_record_close_stack:
    output_tfrecords = tf_record_creation_util.open_sharded_output_tfrecords(
        tf_record_close_stack, FLAGS.output_path, num_shards)
    for index, f in enumerate(frames):
        tf_example = process_frame(label_map_dict, f)
        if tf_example is None:
            # process_frame returns None for missing or unexpected files.
            continue
        output_shard_index = index % num_shards
        output_tfrecords[output_shard_index].write(tf_example.SerializeToString())

The resulting TFRecord files are shown in the figure below. This completes the dataset preparation.
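The round-robin sharding can be illustrated in isolation. This is a minimal sketch: `shard_name` mirrors the `lisa.record-00029-of-00030` naming pattern that appears later in this article, and both helper names are hypothetical.

```python
def shard_name(base_path, index, num_shards):
    """Build a shard file name such as lisa.record-00000-of-00030."""
    return "{}-{:05d}-of-{:05d}".format(base_path, index, num_shards)


def assign_shards(num_examples, num_shards):
    """Distribute example indices round-robin, as `index % num_shards` does."""
    shards = [[] for _ in range(num_shards)]
    for index in range(num_examples):
        shards[index % num_shards].append(index)
    return shards


names = [shard_name("lisa.record", i, 30) for i in range(30)]
print(names[0], names[-1])

shards = assign_shards(100, 30)
print([len(s) for s in shards])  # the first 10 shards hold 4 examples, the rest 3
```

Because consecutive frames go to different shards, every shard samples the whole dataset, which is what makes it reasonable to hold one shard out for evaluation later.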

Model Training Configuration

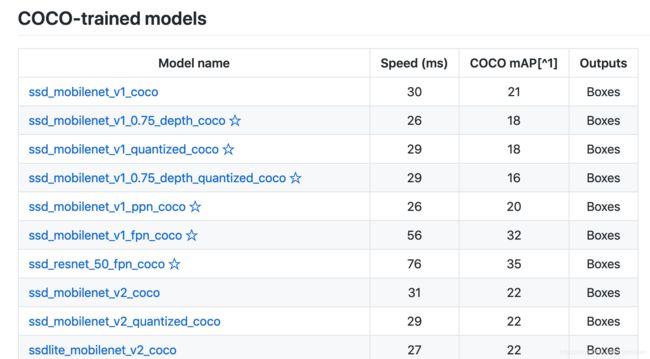

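With the TFRecords ready, the next step is to adjust the model's configuration file. First pick a model to train from the Object Detection Model Zoo, as shown in the figure, and download its pre-trained weights. Modern deep learning models often have a hundred million or even several hundred million parameters, and training them from scratch takes an enormous amount of compute. So we start from a model already pre-trained on a dataset such as COCO or PASCAL and fine-tune its weights, which quickly yields a model suited to the new task. For the underlying theory, search for the keyword "transfer learning".

As shown in the figure, we choose ssd_resnet_50_fpn_coco for training on the local GPU and ssd_mobilenet_v1_fpn_coco for training on the Cloud TPU. A star next to a model name means the model supports TPU training. After downloading the weights, copy the matching config file from models/research/object_detection/samples/configs. Taking ssd_resnet_50_fpn_coco as an example, set the number of classes to 3: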

num_classes: 3

All images in the LISA dataset are 1280x960, so change the input resizer parameters as follows:

image_resizer {
  fixed_shape_resizer {
    height: 960
    width: 1280
  }
}

Replace every PATH_TO_BE_CONFIGURED in the config with the path of the corresponding file, as shown below:

fine_tune_checkpoint: "{path to the downloaded pre-trained model}/model.ckpt"

train_input_reader: {
  tf_record_input_reader {
    input_path: "{training record directory}/lisa.record-?????-of-00029"
  }
  label_map_path: "lisa_label_map.pbtxt"
}

eval_input_reader: {
  tf_record_input_reader {
    input_path: "eval/lisa.record-00029-of-00030"
  }
  label_map_path: "lisa_label_map.pbtxt"
  shuffle: false
  num_readers: 1
}
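The edits above are easy to get wrong by hand, so a small script that applies them and lists any placeholder left behind can be useful. This is only a sketch: `SAMPLE_CONFIG` is a made-up, heavily trimmed stand-in for a real sample config, `set_field` is a hypothetical helper, and the regex approach replaces every occurrence of a field, so it suits values shared by the train and eval readers but not fields such as input_path that differ between them.

```python
import re

# Hypothetical, heavily trimmed stand-in for a sample pipeline config.
SAMPLE_CONFIG = """\
model {
  ssd {
    num_classes: 90
  }
}
train_config {
  fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"
}
train_input_reader {
  tf_record_input_reader {
    input_path: "PATH_TO_BE_CONFIGURED/train.record"
  }
  label_map_path: "PATH_TO_BE_CONFIGURED/label_map.pbtxt"
}
"""


def set_field(text, field, value, quoted=True):
    """Replace every `field: <old value>` occurrence with the new value."""
    new = '{}: "{}"'.format(field, value) if quoted else "{}: {}".format(field, value)
    pattern = r'\b{}:\s*("[^"]*"|\S+)'.format(re.escape(field))
    # A function replacement avoids backslash escapes being interpreted in paths.
    return re.sub(pattern, lambda _match: new, text)


patched = set_field(SAMPLE_CONFIG, "num_classes", 3, quoted=False)
patched = set_field(patched, "fine_tune_checkpoint", "pretrained/model.ckpt")
patched = set_field(patched, "label_map_path", "lisa_label_map.pbtxt")

# Anything still carrying the placeholder needs a manual edit.
remaining = [line.strip() for line in patched.splitlines() if "PATH_TO_BE_CONFIGURED" in line]
print(remaining)
```

In this sample the only line left over is the training input_path, which has to be set per reader.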

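Earlier we split the dataset into 30 TFRecord files. We use the last one, lisa.record-00029-of-00030, as the validation set and the first 29 as the training set. Move the last shard into the eval directory first (it keeps its original name, which matches the eval input_path above). Then use the Linux rename command to rename lisa.record-00000-of-00030 through lisa.record-00028-of-00030 so that they end in -of-00029:

rename 's/-of-00030$/-of-00029/' lisa.record-*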
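The same split can be done in Python, which avoids depending on which variant of rename is installed. The sketch below is an assumption-laden illustration: `split_train_eval` is a hypothetical helper, and the demo runs on empty placeholder files in a temporary directory instead of real TFRecords.

```python
import os
import re
import shutil
import tempfile

SHARD_RE = re.compile(r"^(?P<base>.+)-(?P<index>\d{5})-of-(?P<total>\d{5})$")


def split_train_eval(record_dir, eval_dir):
    """Move the last shard to eval_dir and relabel the rest as the training set."""
    names = sorted(n for n in os.listdir(record_dir) if SHARD_RE.match(n))
    if len(names) < 2:
        raise ValueError("need at least two shards to split")
    os.makedirs(eval_dir, exist_ok=True)
    eval_name = names[-1]
    shutil.move(os.path.join(record_dir, eval_name), os.path.join(eval_dir, eval_name))
    train_total = len(names) - 1
    for name in names[:-1]:
        match = SHARD_RE.match(name)
        new_name = "{}-{}-of-{:05d}".format(
            match.group("base"), match.group("index"), train_total)
        os.rename(os.path.join(record_dir, name), os.path.join(record_dir, new_name))
    return train_total


# Demo on empty placeholder files in a temporary directory.
workdir = tempfile.mkdtemp()
for i in range(30):
    open(os.path.join(workdir, "lisa.record-{:05d}-of-00030".format(i)), "w").close()
eval_dir = os.path.join(workdir, "eval")
split_train_eval(workdir, eval_dir)
eval_files = sorted(os.listdir(eval_dir))
train_files = sorted(n for n in os.listdir(workdir) if n != "eval")
print(len(train_files), train_files[0], train_files[-1])
print(eval_files)
shutil.rmtree(workdir)
```

After the split, the glob lisa.record-?????-of-00029 in the training reader matches exactly the 29 training shards and nothing in the eval directory.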

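The config file also exposes many other training parameters, such as batch_size and the learning rate. On a GPU, memory is limited, so a batch_size of 2 or 4 is recommended. TPUs behave quite differently from GPUs, so their settings differ as well. Once the config is ready, cd into models/research/ and start training with the command below, where --num_train_steps is the number of training iterations:

python object_detection/model_main.py \
    --pipeline_config_path={path to the config file} \
    --model_dir={training output directory} \
    --num_train_steps=50000 \
    --sample_1_of_n_eval_examples=1 \
    --alsologtostderr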

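Use TensorBoard to visualize the training process. The result looks like this:

Exporting and Validating the Model

When training finishes, the model is saved as checkpoint files. To export a graph that can be used in an application, the graph structure and the weights have to be fused into a single file, a step known as freezing. Freeze the trained model with the following commands: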

# From the tensorflow/models/research/ directory
INPUT_TYPE=image_tensor
PIPELINE_CONFIG_PATH={path to the config file}
TRAINED_CKPT_PREFIX={path to model.ckpt-50000}
EXPORT_DIR={directory for the exported graph}
python object_detection/export_inference_graph.py \
    --input_type=${INPUT_TYPE} \
    --pipeline_config_path=${PIPELINE_CONFIG_PATH} \
    --trained_checkpoint_prefix=${TRAINED_CKPT_PREFIX} \
    --output_directory=${EXPORT_DIR}
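TRAINED_CKPT_PREFIX is a prefix such as model.ckpt-50000, not a single file: a TensorFlow 1.x checkpoint consists of several files sharing that prefix (.index, .meta, and one or more .data-* files). The sketch below picks the prefix with the highest step number from a directory listing. `latest_checkpoint_prefix` is a hypothetical stdlib-only helper demonstrated on empty placeholder files; with TensorFlow available, `tf.train.latest_checkpoint(model_dir)` serves the same purpose.

```python
import os
import re
import shutil
import tempfile

INDEX_RE = re.compile(r"^model\.ckpt-(\d+)\.index$")


def latest_checkpoint_prefix(model_dir):
    """Return the model.ckpt-<step> prefix with the highest step, or None."""
    steps = [int(m.group(1)) for m in map(INDEX_RE.match, os.listdir(model_dir)) if m]
    if not steps:
        return None
    return os.path.join(model_dir, "model.ckpt-{}".format(max(steps)))


# Demo on empty placeholder files that mimic a training output directory.
model_dir = tempfile.mkdtemp()
for step in (10000, 25000, 50000):
    for suffix in (".index", ".meta", ".data-00000-of-00001"):
        open(os.path.join(model_dir, "model.ckpt-{}{}".format(step, suffix)), "w").close()

prefix = latest_checkpoint_prefix(model_dir)
print(os.path.basename(prefix))  # model.ckpt-50000
shutil.rmtree(model_dir)
```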

With the frozen graph in hand, validate the model. Modify object_detection_tutorial.ipynb under models/research/object_detection/ and run it in Jupyter Notebook. First import the libraries:

import numpy as np
import os
import six.moves.urllib as urllib
import sys
import tarfile
import tensorflow as tf
import zipfile

from distutils.version import StrictVersion
from collections import defaultdict
from io import StringIO
from matplotlib import pyplot as plt
from PIL import Image

# This is needed since the notebook is stored in the object_detection folder.
sys.path.append("..")
from object_detection.utils import ops as utils_ops

if StrictVersion(tf.__version__) < StrictVersion('1.9.0'):
    raise ImportError('Please upgrade your TensorFlow installation to v1.9.* or later!')

# This is needed to display the images.
%matplotlib inline

from utils import label_map_util
from utils import visualization_utils as vis_util

Load the model weights:

# Path to the frozen detection graph (protobuf format). This is the actual model used for traffic light detection.
PATH_TO_FROZEN_GRAPH = '/home/adam/data/tl_detector/new-export/frozen_inference_graph.pb'

# Path to the label map
PATH_TO_LABELS = '/home/adam/data/tl_detector/lisa_label_map.pbtxt'

# Traffic light detection has 3 classes
NUM_CLASSES = 3

detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

Load the label map:

category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS, use_display_name=True)
print(category_index)

def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)

Run traffic light detection on the test images:

import os

PATH_TO_TEST_IMAGES_DIR = '/home/adam/data/tl_detector/test-example/'
image_list = os.listdir(PATH_TO_TEST_IMAGES_DIR)
TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, i) for i in image_list ]
print(TEST_IMAGE_PATHS)

# Size, in inches, of the output images.
IMAGE_SIZE = (12, 8)

def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in [
                'num_detections', 'detection_boxes', 'detection_scores',
                'detection_classes', 'detection_masks'
            ]:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(
                        tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(
                    detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict[
                'detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict

for image_path in TEST_IMAGE_PATHS:
    image = Image.open(image_path)
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Expand dimensions since the model expects images to have shape: [1, None, None, 3]
    image_np_expanded = np.expand_dims(image_np, axis=0)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
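Beyond drawing boxes, a downstream module usually needs the detections as plain values. The sketch below turns an output_dict of the shape returned above into a list of labelled pixel boxes. It assumes detection_boxes are normalized [ymin, xmin, ymax, xmax] rows, uses a 0.5 score threshold as an arbitrary choice, and runs on made-up sample values in plain lists so that it works without TensorFlow; `filter_detections` is a hypothetical helper.

```python
def filter_detections(output_dict, category_index, image_width, image_height, min_score=0.5):
    """Keep detections scoring at least min_score and convert boxes to pixel coordinates."""
    results = []
    for i in range(output_dict['num_detections']):
        score = float(output_dict['detection_scores'][i])
        if score < min_score:
            continue
        # Boxes are assumed to be normalized [ymin, xmin, ymax, xmax].
        ymin, xmin, ymax, xmax = output_dict['detection_boxes'][i]
        class_id = int(output_dict['detection_classes'][i])
        results.append({
            'label': category_index[class_id]['name'],
            'score': score,
            # (left, top, right, bottom) in pixels
            'box_px': (
                int(round(xmin * image_width)),
                int(round(ymin * image_height)),
                int(round(xmax * image_width)),
                int(round(ymax * image_height)),
            ),
        })
    return results


# Made-up sample values with the same keys as the notebook's output_dict.
sample_output = {
    'num_detections': 2,
    'detection_boxes': [[0.25, 0.5, 0.3, 0.525], [0.1, 0.1, 0.2, 0.2]],
    'detection_classes': [2, 1],
    'detection_scores': [0.9, 0.3],
}
sample_category_index = {
    1: {'id': 1, 'name': 'green'},
    2: {'id': 2, 'name': 'red'},
    3: {'id': 3, 'name': 'yellow'},
}

detections = filter_detections(sample_output, sample_category_index, 1280, 960)
print(detections)
```

With the sample values only the red light survives the threshold, at pixel box (640, 240, 672, 288).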

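Detection results:

Google Cloud TPU Setup and Model Training

Cloud TPU Environment Setup

To use Google's TPU resources you first need a network connection that can reach Google services. Then go to https://cloud.google.com, sign up, and create a Project. Every newly registered user has to activate the account before all Cloud features become available. Activation essentially means binding a credit card. After activation you receive a free 300 USD credit, which you can use to complete the experiments in this article.

In the Cloud console, create a Cloud Storage instance (called a Bucket). During training the TPU node reads the dataset (in TFRecord format) from the Bucket and writes the training checkpoints and the event files used by TensorBoard back to the Bucket. The official Cloud documentation explains how to create a Bucket: https://cloud.google.com/tpu/docs/quickstart (see the section "Create a Cloud Storage bucket").

Download and install the ctpu tool. Instructions are in the official repository: https://github.com/tensorflow/tpu/tree/master/tools/ctpu. Note that you should follow the Local Machine installation, not the Cloud Shell one. After installation, create the virtual machine and the TPU with: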

ctpu up

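During this process a web page opens asking you to log in to your Google account. Once you have logged in and configuration completes, the following information is shown: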

ctpu will use the following configuration:

Name: [your TPU's name]

Zone: [your project's zone]

GCP Project: [your project's name]

TensorFlow Version: 1.13

VM:

Machine Type: [your machine type]

Disk Size: [your disk size]

Preemptible: [true or false]

Cloud TPU:

Size: [your TPU size]

Preemptible: [true or false]

OK to create your Cloud TPU resources with the above configuration? [Yn]:

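Confirm to proceed. Here VM is your virtual machine in the cloud and Cloud TPU is your TPU node. Once setup finishes, download and install the gcloud tool: https://cloud.google.com/sdk/gcloud/. gcloud is the primary command-line interface to Google Cloud Platform. With it you can perform many common platform tasks from the command line or from scripts and other automation. After installing gcloud, run gcloud init to initialize the environment, then set the current project and zone (use the same zone as the VM and the TPU):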

gcloud config set project [YOUR-CLOUD-PROJECT]

gcloud config set compute/zone [YOUR-ZONE]

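Log in to the virtual machine with gcloud:

gcloud compute ssh [name of your VM]

The VM and the TPU node created by ctpu up usually share the same name. After logging in to the remote VM, install the Object Detection API exactly as for the local GPU environment (see above). The VM already ships with a TPU-enabled TensorFlow, so there is no need to install it again. The rest of the installation and environment setup is the same as for local training.

A TPU cannot directly use data stored on the VM. All TPU input data and training output go through a Google Cloud Storage Bucket. We already created a Bucket above, so now we configure it to give the TPU read and write permission. To do that we look up the TPU service account from the VM, which first requires setting up the VM's environment. Start by setting the project: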

gcloud config set project YOUR_PROJECT_NAME

Add the project id and the Bucket name to .bashrc:

export PROJECT="YOUR_PROJECT_ID"

export YOUR_GCS_BUCKET="YOUR_UNIQUE_BUCKET_NAME"

Query the service account of the current TPU with:

curl -H "Authorization: Bearer $(gcloud auth print-access-token)" https://ml.googleapis.com/v1/projects/${PROJECT}:getConfig

The response contains the TPU service account, which looks something like [email protected]. Copy it and add the following environment variable to .bashrc:

export TPU_ACCOUNT=your-service-account
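The curl command returns JSON, so the account can also be extracted programmatically instead of being copied by hand. The sketch below assumes the account is stored under config.tpuServiceAccount in the response, which is my recollection of the API and should be checked against the actual output. The sample response and its placeholder address are made up, and `tpu_service_account` is a hypothetical helper.

```python
import json

# Made-up response; the field layout is an assumption, the values are placeholders.
SAMPLE_RESPONSE = """
{
  "serviceAccount": "ml-service-account@example.com",
  "serviceAccountProject": "000000000000",
  "config": {
    "tpuServiceAccount": "tpu-service-account@example.com"
  }
}
"""


def tpu_service_account(response_text):
    """Extract config.tpuServiceAccount from a getConfig response, or raise KeyError."""
    response = json.loads(response_text)
    try:
        return response["config"]["tpuServiceAccount"]
    except KeyError:
        raise KeyError("response has no config.tpuServiceAccount field")


account = tpu_service_account(SAMPLE_RESPONSE)
print("export TPU_ACCOUNT={}".format(account))
```

If the lookup raises KeyError on a real response, print the response and read the account from it manually as described above.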

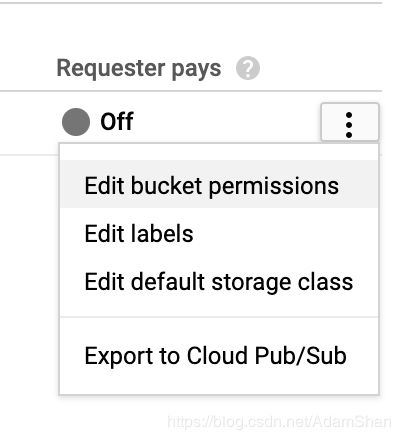

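In the Google Cloud console, go to the Bucket Browser, select the Bucket, and click Edit bucket permissions, as shown below:

Paste the TPU service account and grant it Bucket read/write and Object read/write permissions. When that is done, log in to the VM with gcloud and build the record files the same way as above. The LISA dataset can be downloaded with wget. Once the record files are ready, use gsutil to copy the dataset from the VM to the Bucket: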

gsutil cp -r record/ gs://${YOUR_GCS_BUCKET}/

Likewise, use gsutil to copy the pre-trained weights downloaded from the Model Zoo and the label map to the Bucket:

gsutil cp -r ssd_mobilenet_fpn/ gs://${YOUR_GCS_BUCKET}/

gsutil cp lisa_label_map.pbtxt gs://${YOUR_GCS_BUCKET}/

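Now edit the config file. The TPU v2 we use is Google's second-generation Cloud TPU. It has 8 cores with 8 GB of memory each, so training on a TPU allows a larger batch size, and a larger batch size means we can use relatively fewer training steps. In this article the batch size is 32 and the number of training steps is 25000. All file paths in the config must also be changed to Cloud Storage paths, for example:

train_input_reader: {
  tf_record_input_reader {
    input_path: "gs://adamshan/record/lisa.record-?????-of-00029"
  }
  label_map_path: "gs://adamshan/lisa_label_map.pbtxt"
}

Here adamshan is the name of my Bucket. Again use gsutil to copy the config file to the Bucket, then cd into models/research/ on the VM and start training with:

python object_detection/model_tpu_main.py \
    --gcp_project={your cloud project} \
    --tpu_zone={the zone of your TPU, for example us-central1-f} \
    --tpu_name={the name of your TPU, which you can look up with gcloud or in the cloud console} \
    --mode=train \
    --num_train_steps=25000 \
    --model_dir={output path for the model and the TensorBoard event files, which must be a bucket path such as gs://adamshan/saved/} \
    --pipeline_config_path={path of the config file stored in the bucket, for example gs://adamshan/ssd_mobilenet_fpn.config}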
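The trade-off between batch size and step count can be made concrete with a little arithmetic on the numbers used in this article: batch size 2 or 4 for 50000 steps on the GPU, and batch size 32 for 25000 steps on the TPU. The per-core figure assumes the configured batch size is a global batch split evenly across the 8 TPU cores.

```python
def examples_seen(batch_size, num_steps):
    """Total number of training examples processed (with repetition)."""
    return batch_size * num_steps


gpu_low = examples_seen(2, 50000)    # GPU run with batch size 2
gpu_high = examples_seen(4, 50000)   # GPU run with batch size 4
tpu = examples_seen(32, 25000)       # TPU run with batch size 32

# Assumption: the global batch of 32 is divided evenly over 8 cores.
per_core_batch = 32 // 8

print("GPU: {} to {} examples".format(gpu_low, gpu_high))
print("TPU: {} examples, {} per core per step".format(tpu, per_core_batch))
```

So although the TPU run uses half as many steps, it processes four to eight times as many examples as the GPU run, while each core handles a batch no larger than the GPU's.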

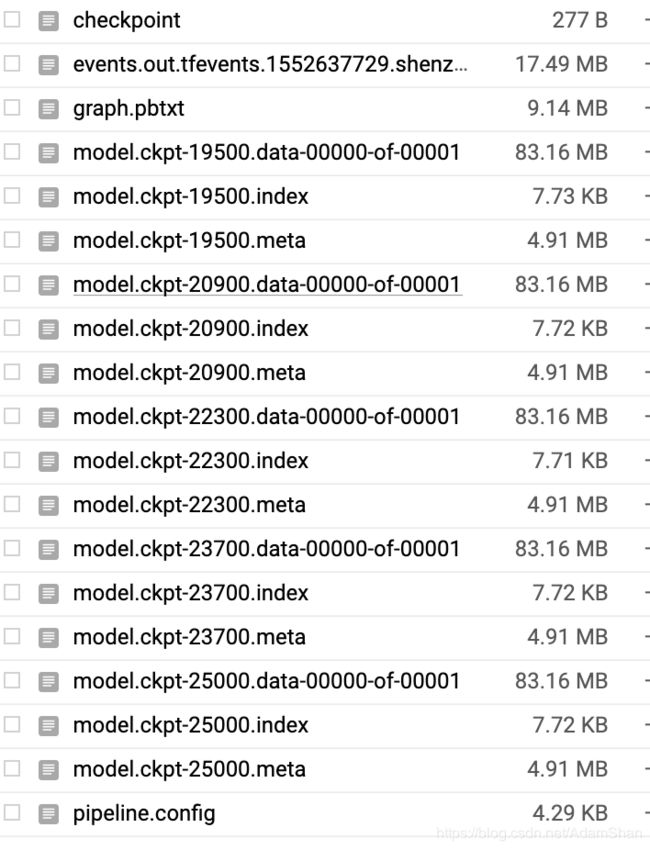

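The TPU trains very efficiently: fine-tuning a model on a Cloud TPU v2 typically takes only about 1 to 2 hours. The final results are saved to the specified directory in the Bucket, as shown below:

Freezing and using the model works the same way as for the locally trained model described above.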