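Setting up Eclipse / IDEA with Maven, Scala and Spark, and successfully running a SparkContext

It took me several days to get Eclipse into a usable state. All sorts of errors came up along the way and held up my progress. The archetype and parts of the pom are borrowed from other people; unfortunately I went through so many rounds of debugging that I did not record the authors, so my apologies and thanks to them here.

Environment: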

Hadoop–>2.6.4

Scala–>2.11.8

Spark–>2.2.0

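IDE:

Eclipse EE + Scala IDE plugin –> Oxygen: the pom shows errors, but it is usable.

Scala IDE –> 4.7-RC: Spark jobs currently run both locally and on the cluster.

IDEA –> still has some issues; it runs, but not perfectly. It is covered at the end, and the setup is somewhat similar to Eclipse EE.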

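Notes:

1. Keep versions consistent. The Scala and Spark versions must match; otherwise you may get errors mentioning Product$class, class-not-found errors, or … there are many such errors and they give little clue to the cause.

For example:

spark.version, scala.version and scala.binary.version in the pom below, and the version in the Scala Library Container, must all match.

2. Once scala-maven-plugin is added to the pom, there is no need to add a separate Scala dependency unless you have a special requirement. Also watch the version of the imported Scala Library Container.

3. Make good use of Maven's Update Project, Project > Clean, and the functions in the Configure menu of the project's right-click menu.

4. This is a beginner-level setup and some problems remain unsolved. If you use Eclipse, go with the Scala IDE build. If IDEA works for you, it is quite comfortable for editing Scala, except that tabbing out of brackets and quotes feels awkward.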
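One way to confirm the version match from note 1 is to print the Scala version that is actually on the classpath and compare it with the binary version used in the pom. The following is only a minimal sketch: the object name `VersionCheck`, its helper methods, and the hard-coded `"2.11"` (copied by hand from `scala.binary.version`) are assumptions made for illustration, not part of the original setup.

```scala
import scala.util.Properties

object VersionCheck {
  // Derive the binary version ("2.11") from a full version ("2.11.8").
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  // True when the Scala library version matches the binary version from the pom.
  def matchesPom(runtimeScala: String, pomBinary: String): Boolean =
    binaryVersion(runtimeScala) == pomBinary

  def main(args: Array[String]): Unit = {
    // Version of the scala-library jar that was actually loaded.
    val runtimeScala = Properties.versionNumberString
    // Assumption: copied by hand from scala.binary.version in the pom.
    val pomBinary = "2.11"
    println(s"Scala on classpath: $runtimeScala, pom expects: $pomBinary")
    if (!matchesPom(runtimeScala, pomBinary))
      println("Mismatch: check the Scala Library Container and the _2.xx artifact suffixes")
  }
}
```

Running it as a Scala application inside the project shows which Scala library the IDE really put on the build path, which may differ from what the pom declares.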

Eclipse part:

Details follow. They apply to both Eclipse EE and Scala IDE; differences are noted where relevant.

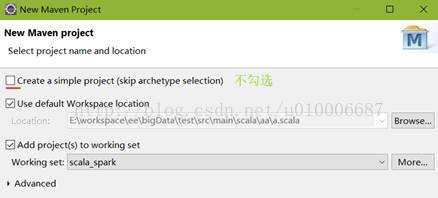

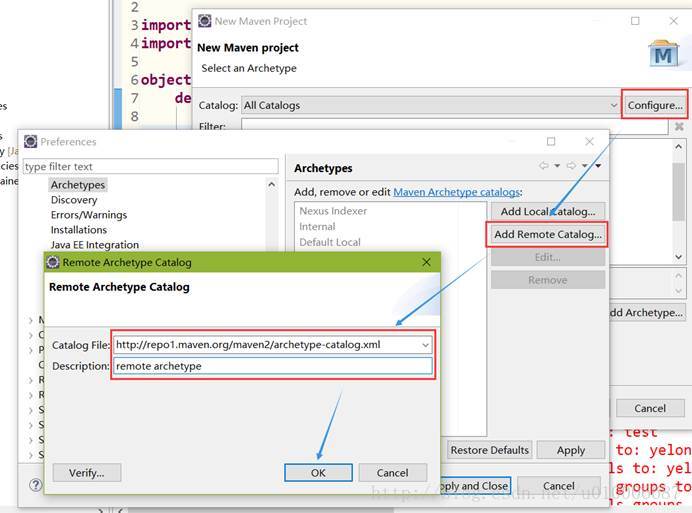

next

http://repo1.maven.org/maven2/archetype-catalog.xml

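After the project has been created from the remote archetype, rename:

src/main/java–>src/main/scala

src/test/java–>src/test/scala

Then modify pom.xml. The remote archetype above generates a pom automatically; that generated pom is not used here.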

    <properties>
      <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
      <spark.version>2.2.0</spark.version>
      <scala.version>2.11.8</scala.version>
      <scala.binary.version>2.11</scala.binary.version>
      <hadoop.version>2.6.4</hadoop.version>
    </properties>

    <dependencies>
      <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
        <scope>provided</scope>
      </dependency>
      <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
        <scope>provided</scope>
      </dependency>
      <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
      </dependency>
      <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <version>4.12</version>
      </dependency>
    </dependencies>

    <repositories>
      <repository>
        <id>central</id>
        <name>Maven Repository Switchboard</name>
        <layout>default</layout>
        <url>http://repo2.maven.org/maven2</url>
        <snapshots>
          <enabled>false</enabled>
        </snapshots>
      </repository>
    </repositories>

    <build>
      <sourceDirectory>src/main/scala</sourceDirectory>
      <testSourceDirectory>src/test/scala</testSourceDirectory>
      <plugins>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-compiler-plugin</artifactId>
          <version>3.7.0</version>
          <configuration>
            <source>1.8</source>
            <target>1.8</target>
            <encoding>UTF-8</encoding>
          </configuration>
        </plugin>
        <plugin>
          <groupId>net.alchim31.maven</groupId>
          <artifactId>scala-maven-plugin</artifactId>
          <version>3.3.1</version>
          <executions>
            <execution>
              <goals>
                <goal>compile</goal>
                <goal>testCompile</goal>
              </goals>
              <configuration>
                <args>
                  <arg>-make:transitive</arg>
                  <arg>-dependencyfile</arg>
                  <arg>${project.build.directory}/.scala_dependencies</arg>
                </args>
              </configuration>
            </execution>
          </executions>
        </plugin>
      </plugins>
    </build>

If a "-make:transitive" error appears at run time, comment out that <arg>.

Optionally, also add the following to dependencies:

    <dependency>
      <groupId>org.specs2</groupId>
      <artifactId>specs2-junit_2.11</artifactId>
      <version>3.9.4</version>
      <scope>test</scope>
    </dependency>

Notes:

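1. In Eclipse EE, errors are still reported even after installing the Scala IDE plugin from the Marketplace. Ignore them; it works.

2. Make sure the dependency versions in the pom match the versions you actually have installed.

3. Use Maven > Update Project from the project's right-click menu, and Project > Clean and Build Automatically from the Eclipse menu bar, as needed. It may take several tries.

4. Once Maven > Update Project has picked up the Scala plugin configuration in the pom, a Scala entry appears in the project's right-click menu. If Update Project has no effect in Eclipse EE, use Configure > "Add Scala Nature" from the right-click menu, which adds the Scala library container to the project.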

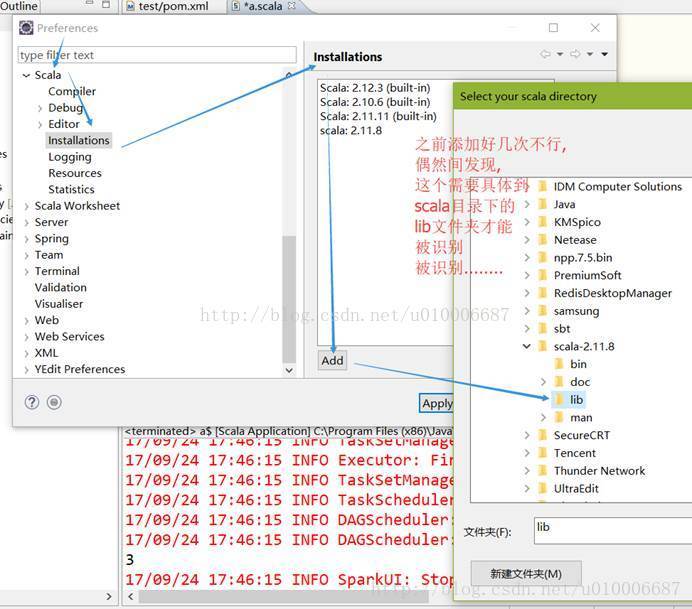

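5. With the Scala IDE plugin or the latest Scala IDE, only the three (built-in) versions shown in the figure are available by default. To add your own Scala version, go to Window –> Preferences –>

6. To change the Scala version, right-click the Scala Library Container –> Build Path –> Configure Build Path –> Libraries tab, remove the current version, then Add Library –> Scala Library –> select the version you just added.

5. Create an object and run it as a test. Note that when Eclipse EE runs a Spark job, I had to set the heap memory, under the corresponding entry of Scala Application in Run Configurations...

Test code: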

    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object a {
      def main(args: Array[String]): Unit = {
        val conf = new SparkConf()
        // conf.setMaster("spark://mini2:7077") // cluster mode
        conf.setMaster("local[4]") // local mode with 4 worker threads
        conf.setAppName("test")
        val sc = new SparkContext(conf)
        // Distribute a small list over 2 partitions and count its elements.
        val rdd = sc.parallelize(List(1, 2, 3), 2)
        println(rdd.count()) // 3
        sc.stop()
      }
    }

**IDEA part**

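1. Create a Maven project and rename:

src/main/java-->src/main/scala

src/test/java-->src/test/scala

2. Modify the pom. The configuration differs somewhat from the one above.

I tried it, and the two poms are not interchangeable; at least the Eclipse one cannot be dropped into this IDEA setup. What each setting means is easy for those who already know Maven; if you don't, just follow this one, and it will at least run.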

    <properties>
      <maven.compiler.source>1.8</maven.compiler.source>
      <maven.compiler.target>1.8</maven.compiler.target>
      <encoding>UTF-8</encoding>
      <scala.version>2.11.8</scala.version>
      <spark.version>2.2.0</spark.version>
      <hadoop.version>2.6.4</hadoop.version>
    </properties>

    <dependencies>
      <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.11</artifactId>
        <version>${spark.version}</version>
      </dependency>
      <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
      </dependency>
    </dependencies>

    <build>
      <sourceDirectory>src/main/scala</sourceDirectory>
      <testSourceDirectory>src/test/scala</testSourceDirectory>
      <plugins>
        <plugin>
          <groupId>net.alchim31.maven</groupId>
          <artifactId>scala-maven-plugin</artifactId>
          <version>3.2.2</version>
          <executions>
            <execution>
              <goals>
                <goal>compile</goal>
                <goal>testCompile</goal>
              </goals>
              <configuration>
                <args>
                  <arg>-dependencyfile</arg>
                  <arg>${project.build.directory}/.scala_dependencies</arg>
                </args>
              </configuration>
            </execution>
          </executions>
        </plugin>
        <plugin>
          <groupId>org.apache.maven.plugins</groupId>
          <artifactId>maven-shade-plugin</artifactId>
          <version>3.1.0</version>
          <executions>
            <execution>
              <phase>package</phase>
              <goals>
                <goal>shade</goal>
              </goals>
              <configuration>
                <filters>
                  <filter>
                    <artifact>*:*</artifact>
                    <excludes>
                      <exclude>META-INF/*.SF</exclude>
                      <exclude>META-INF/*.DSA</exclude>
                      <exclude>META-INF/*.RSA</exclude>
                    </excludes>
                  </filter>
                </filters>
              </configuration>
            </execution>
          </executions>
        </plugin>
      </plugins>
    </build>

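Here I ran into the -make:transitive problem and commented that line out. No junit dependency was added, and it still runs.

3. In Project Structure, under Global Libraries, change the Scala version for this project, similar to adding it in Eclipse. See the Eclipse part.

4. Make good use of the refresh button at the top left of the Maven Projects side panel, and of Build and Rebuild in the project's right-click menu.

5. When I ran it, I got the same out-of-memory error as in Eclipse EE. Set the memory in Run Configurations…, as shown in the figure:

For Eclipse with the plugin I did not find a comparable global setting, so it has to be set once per object;

Scala IDE has no memory problem.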
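To check whether a memory setting in a run configuration actually took effect, it can help to print the maximum heap the JVM was started with. The sketch below assumes the memory is raised through a VM argument such as -Xmx in the run configuration (the post does not state the exact option or value used); the object name `HeapCheck` and the helper `toMiB` are made up for illustration.

```scala
object HeapCheck {
  // Convert a byte count to whole mebibytes.
  def toMiB(bytes: Long): Long = bytes / (1024L * 1024L)

  def main(args: Array[String]): Unit = {
    // Upper bound on the heap, as set by -Xmx or by the JVM default.
    val maxHeap = Runtime.getRuntime.maxMemory()
    println(s"Max heap: ${toMiB(maxHeap)} MiB")
  }
}
```

Running this with the same run configuration as the Spark job shows the heap limit that job will see. If the printed value does not change after editing the configuration, the setting was probably applied to a different entry.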