A First Look at TensorFlow 2.0

Creating a virtual environment for TensorFlow 2.0

Mac or Linux

conda create --name tf2 python=3.6

Windows

conda create --name tf2 python=3.6

Activate the environment with the activate command

Mac or Linux

source activate tf2

Windows

activate tf2

Likewise, deactivate exits the environment.

Installing TensorFlow

First, point pip at the Tsinghua mirror

sudo pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

Or

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

Next, install TensorFlow 2.0

sudo pip install tensorflow==2.0.0-alpha

Or

pip install tensorflow==2.0.0-alpha

Setting up the Jupyter Notebook environment

The tf2 environment needs to be registered as a kernel in Jupyter

conda install notebook ipykernel

sudo python -m ipykernel install --name tf2

Or

conda install notebook ipykernel

python -m ipykernel install --name tf2

Start Jupyter with the jupyter notebook command, then switch the kernel to tf2.
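
A quick way to confirm that the notebook is really running on the tf2 kernel is to look at the interpreter path from inside a cell. The sketch below uses only the standard library. The expectation that the path points inside an environment called tf2 is an assumption based on the name used above.

```python
import sys

# Path of the Python interpreter backing the current kernel.
# With the tf2 kernel selected, this would normally point inside the
# conda environment created earlier (assumption: it is named tf2).
interpreter = sys.executable
print(interpreter)

# Python version of the kernel; the environment above was created with 3.6.
version = "{}.{}".format(sys.version_info.major, sys.version_info.minor)
print(version)
```

If the printed path belongs to a different environment, the kernel was probably registered from the wrong shell or the wrong kernel is selected in the notebook.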

Import tensorflow and keras and check their versions

import tensorflow as tf

from tensorflow.keras import layers

print(tf.__version__)

print(tf.keras.__version__)

2.0.0-alpha0

2.2.4-tf

Two ways to build a neural network

- Building the network with tf.keras.Sequential

- Building the network with the Keras functional API

# Download and load the MNIST dataset

(x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()

x_train = x_train.reshape([x_train.shape[0], -1])

x_test = x_test.reshape([x_test.shape[0], -1])

print(x_train.shape, ' ', y_train.shape)

print(x_test.shape, ' ', y_test.shape)

print(x_train.dtype)

(60000, 784) (60000,)

(10000, 784) (10000,)

uint8
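
The reshape calls above flatten each 28 x 28 image into a 784-element vector in row-major order. As a small plain-Python illustration (a toy 2 x 3 "image" rather than real MNIST data), the pixel at row r, column c ends up at index r * width + c:

```python
# Toy stand-in for one image: 2 rows x 3 columns (MNIST images are 28 x 28).
image = [
    [10, 20, 30],
    [40, 50, 60],
]
height, width = len(image), len(image[0])

# Row-major flattening, the same ordering NumPy's reshape uses by default.
flat = [pixel for row in image for pixel in row]

# The pixel at (r, c) lands at index r * width + c in the flat vector.
r, c = 1, 2
assert flat[r * width + c] == image[r][c]

print(flat)      # [10, 20, 30, 40, 50, 60]
print(28 * 28)   # 784, the flattened length of one MNIST image
```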

Two approaches to defining the network architecture

Approach 1: building the network with tf.keras.Sequential

Method 1

model = tf.keras.Sequential()

model.add(layers.Dense(64,activation='relu',input_shape=(784,)))

model.add(layers.Dense(32,activation='relu',kernel_initializer=tf.keras.initializers.glorot_normal))

model.add(layers.Dense(32,activation='relu',kernel_regularizer=tf.keras.regularizers.l2(0.01)))

model.add(layers.Dense(10,activation='softmax'))

Method 2

# model = tf.keras.Sequential([

# layers.Dense(64, activation='relu', kernel_initializer='he_normal', input_shape=(784,)),

# layers.Dense(64, activation='relu', kernel_initializer='he_normal'),

# layers.Dense(64, activation='relu', kernel_initializer='he_normal',kernel_regularizer=tf.keras.regularizers.l2(0.01)),

# layers.Dense(10, activation='softmax')

# ])

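The compile function configures the model for training

Arguments

- The first is the optimizer;

- The second is the loss function, here sparse_categorical_crossentropy. It can also be written as loss=tf.keras.losses.SparseCategoricalCrossentropy(), but with the 2.0.0-alpha0 release used here that form raises an error when the model is saved with save and loaded again, so the string form is recommended;

- The third is the evaluation metric. Accuracy is used here, and other metrics can be used as well.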
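
To make the loss concrete: sparse_categorical_crossentropy takes integer class labels (as in y_train) rather than one-hot vectors, and averages the negative log of the probability assigned to the true class. Below is a minimal plain-Python sketch of that computation with made-up probabilities. It illustrates the formula and is not the Keras implementation.

```python
import math

def sparse_categorical_crossentropy(labels, probs):
    """Mean of -log(p[true class]) over the batch.

    labels: integer class indices, one per sample
    probs:  per-sample probability vectors (e.g. softmax outputs)
    """
    losses = [-math.log(p[y]) for y, p in zip(labels, probs)]
    return sum(losses) / len(losses)

# Illustrative values only: three samples, three classes.
labels = [0, 2, 1]
probs = [
    [0.7, 0.2, 0.1],   # true class 0 gets probability 0.7
    [0.1, 0.1, 0.8],   # true class 2 gets probability 0.8
    [0.3, 0.5, 0.2],   # true class 1 gets probability 0.5
]

loss = sparse_categorical_crossentropy(labels, probs)
print(round(loss, 4))
```

The labels are used directly as indices into each probability vector, which is why no one-hot encoding of y_train is needed.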

Training the model with fit

A validation split is used, batch_size is set to 256, and the return value is kept in history

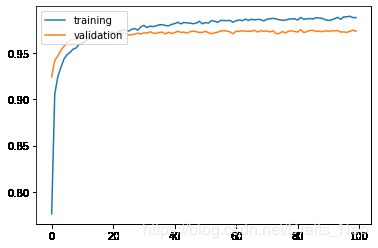
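
For reference, validation_split holds out the trailing fraction of the samples passed to fit, taken before any shuffling. The sketch below mimics that slicing in plain Python. The helper name split_for_validation is hypothetical, and this is an illustration of the documented behavior rather than Keras's own code.

```python
def split_for_validation(samples, validation_split):
    """Return (train, val), holding out the trailing fraction for validation."""
    n_val = int(len(samples) * validation_split)
    split_at = len(samples) - n_val
    return samples[:split_at], samples[split_at:]

# Ten toy samples with validation_split=0.3, as in the fit call.
train, val = split_for_validation(list(range(10)), 0.3)
print(train)   # [0, 1, 2, 3, 4, 5, 6]
print(val)     # [7, 8, 9]

# For the 60000 MNIST training images this leaves 42000 for training
# and 18000 for validation.
n_val = int(60000 * 0.3)
print(60000 - n_val, n_val)
```

Because the held-out samples come from the end of the arrays, data that is sorted by class should be shuffled before calling fit with validation_split.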

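Plotting the accuracy curves

Inspect history.__dict__. One important key there is history, which stores the loss, accuracy, val_loss, and val_accuracy recorded for each epoch.

The training accuracy for each epoch can be read directly from history.history['accuracy'].

accuracy is present because metrics=['accuracy'] was passed to compile; val_loss and val_accuracy are present because validation_split=0.3 was passed to fit.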
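
Since history.history is an ordinary dictionary of per-epoch lists, it can be inspected without plotting. The sketch below uses a hand-written stand-in dictionary with invented numbers (not results from this model) to show how one might locate the epoch with the best validation accuracy:

```python
# Stand-in for history.history; the values are invented for illustration.
fake_history = {
    "loss":         [1.20, 0.60, 0.40, 0.30],
    "accuracy":     [0.70, 0.85, 0.90, 0.93],
    "val_loss":     [0.90, 0.55, 0.50, 0.52],
    "val_accuracy": [0.75, 0.86, 0.89, 0.88],
}

# One entry per epoch for every recorded quantity.
num_epochs = len(fake_history["accuracy"])

# Index of the epoch with the highest validation accuracy (0-based).
val_acc = fake_history["val_accuracy"]
best_epoch = max(range(num_epochs), key=lambda i: val_acc[i])

print(sorted(fake_history))   # the four recorded keys
print(best_epoch + 1, val_acc[best_epoch])
```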

model.compile(optimizer=tf.keras.optimizers.Adam(0.001),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

history = model.fit(x_train, y_train, batch_size=256, epochs=100, validation_split=0.3, verbose=0)

import matplotlib.pyplot as plt

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.legend(['training', 'validation'], loc='upper left')

plt.show()

# Use model.summary() to inspect the model that was built

model.summary()

Model: "sequential"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense (Dense)                (None, 64)                50240
_________________________________________________________________
dense_1 (Dense)              (None, 32)                2080
_________________________________________________________________
dense_2 (Dense)              (None, 32)                1056
_________________________________________________________________
dense_3 (Dense)              (None, 10)                330
=================================================================
Total params: 53,706
Trainable params: 53,706
Non-trainable params: 0
_________________________________________________________________
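
The Param # column can be checked by hand: a Dense layer with n_in inputs and n_out units has n_in * n_out weights plus n_out biases. A short check of the four layers above (dense_params is a helper name introduced here for illustration):

```python
def dense_params(n_in, n_out):
    """Weights (n_in * n_out) plus one bias per output unit."""
    return n_in * n_out + n_out

# (inputs, units) for each Dense layer in the Sequential model above.
layer_shapes = [(784, 64), (64, 32), (32, 32), (32, 10)]

counts = [dense_params(n_in, n_out) for n_in, n_out in layer_shapes]
print(counts)        # [50240, 2080, 1056, 330]
print(sum(counts))   # 53706
```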

Evaluating the model with evaluate

results = model.evaluate(x_test,y_test)

10000/10000 [==============================] - 1s 76us/sample - loss: 0.2339 - accuracy: 0.9655

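Approach 2: building the network with the Keras functional API

tf.keras.Sequential is a simple stack of layers and cannot represent arbitrary models, such as those with non-sequential data flow (for example, residual connections). The Keras functional API can. With the functional API, layer instances are callable and return tensors, and the input and output tensors are then used to define a tf.keras.Model instance.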
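
As a library-free illustration of why a plain stack is not enough, the sketch below treats layers as ordinary Python callables. A Sequential-style model is just function composition, while a residual connection needs the input again after the layer has run, which is the kind of wiring the functional API allows. The names scale, sequential, and residual_block are hypothetical stand-ins, not Keras APIs.

```python
def scale(factor):
    """Toy 'layer': multiplies every element by a constant."""
    return lambda x: [factor * v for v in x]

def sequential(layers):
    """Compose layers one after another, like a plain stack."""
    def model(x):
        for layer in layers:
            x = layer(x)
        return x
    return model

def residual_block(layer):
    """Non-sequential data flow: output = x + layer(x)."""
    def model(x):
        y = layer(x)
        return [a + b for a, b in zip(x, y)]  # skip connection
    return model

x = [1.0, 2.0, 3.0]
stacked = sequential([scale(2.0), scale(3.0)])
skipped = residual_block(scale(2.0))

print(stacked(x))   # [6.0, 12.0, 18.0]
print(skipped(x))   # [3.0, 6.0, 9.0]
```

In the stacked model each layer only ever sees the previous layer's output, so there is no place to reuse the original x. The residual block keeps a reference to it and adds it back.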

Building the model

input_x = tf.keras.Input(shape=(784,))

hidden1 = layers.Dense(64, activation='relu', kernel_initializer='he_normal')(input_x)

hidden2 = layers.Dense(64, activation='relu', kernel_initializer='he_normal')(hidden1)

hidden3 = layers.Dense(64, activation='relu', kernel_initializer='he_normal',kernel_regularizer=tf.keras.regularizers.l2(0.01))(hidden2)

output = layers.Dense(10, activation='softmax')(hidden3)

model2 = tf.keras.Model(inputs = input_x,outputs = output)

Training the model

model2.compile(optimizer=tf.keras.optimizers.Adam(0.001),

#loss=tf.keras.losses.SparseCategoricalCrossentropy(),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

history = model2.fit(x_train, y_train, batch_size=256, epochs=100, validation_split=0.3, verbose=0)

import matplotlib.pyplot as plt

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.legend(['training', 'validation'], loc='upper left')

plt.show()

Saving and loading the model

Use save and tf.keras.models.load_model to save and load the model

model2.save('model.h5')

model3 = tf.keras.models.load_model('model.h5')

results = model3.evaluate(x_test,y_test)

10000/10000 [==============================] - 1s 85us/sample - loss: 0.2075 - accuracy: 0.9704

Adding BN and Dropout

Note the order of ReLU, BN, and Dropout!

model4 = tf.keras.Sequential([

layers.Dense(64, activation='relu', input_shape=(784,)),

layers.BatchNormalization(),

layers.Dropout(0.2),

layers.Dense(64, activation='relu'),

layers.BatchNormalization(),

layers.Dropout(0.2),

layers.Dense(64, activation='relu'),

layers.BatchNormalization(),

layers.Dropout(0.2),

layers.Dense(10, activation='softmax')

])

model4.compile(optimizer=tf.keras.optimizers.Adam(0.001),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

history = model4.fit(x_train, y_train, batch_size=256, epochs=100, validation_split=0.3, verbose=0)

import matplotlib.pyplot as plt

plt.plot(history.history['accuracy'])

plt.plot(history.history['val_accuracy'])

plt.legend(['training', 'validation'], loc='upper left')

plt.show()

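Food for thought: BN first or ReLU first?

The commonly assumed order is ReLU -> BN -> Dropout. That Dropout comes last is hardly in dispute; the real question is the order of ReLU and BN, or more generally, how BN relates to the nonlinear activation.

On this point, the original Batch Normalization paper places BN first, followed by the nonlinear activation. In practice, however, some argue for applying the nonlinear activation first and BN afterward.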
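
The two orderings are not equivalent, which a tiny numeric example makes visible. The sketch below is plain Python with a simplified batch normalization (no learned scale or shift, and an epsilon value chosen here as an assumption), applied to a single feature across a toy batch:

```python
import math

def relu(values):
    return [max(0.0, v) for v in values]

def batch_norm(values, eps=1e-5):
    """Normalize one feature over the batch to zero mean, unit variance.

    Simplified: omits the learnable scale (gamma) and shift (beta).
    """
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + eps) for v in values]

# Toy pre-activations for a single unit across a batch of four samples.
batch = [-2.0, -1.0, 1.0, 2.0]

bn_then_relu = relu(batch_norm(batch))   # BN first, then the activation
relu_then_bn = batch_norm(relu(batch))   # activation first, as in model4

print([round(v, 3) for v in bn_then_relu])
print([round(v, 3) for v in relu_then_bn])
```

BN followed by ReLU yields non-negative outputs, while ReLU followed by BN yields zero-mean outputs that can be negative, so the next layer sees differently distributed inputs depending on the order.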

Recommended reading:

- https://www.zhihu.com/question/283715823

- https://zhuanlan.zhihu.com/c_1091021863043624960