Implementing Word2Vec with TensorFlow

I. First, what is Word2Vec

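Word2Vec is also referred to as word embeddings; in Chinese it goes by several names, such as "词向量" (word vectors) and "词嵌入" (word embeddings). Word2Vec converts the words of a language into dense vectors that a computer can work with, much as an image is represented as a dense matrix of pixels and audio can be converted into spectral data of the sound signal. These vectors then feed other natural language processing tasks such as text classification, part-of-speech tagging and machine translation. Before Word2Vec, words were usually converted into discrete, isolated symbols, which is one-hot encoding. Every word in a document becomes one vector, so the whole document becomes a sparse matrix. Sparse data needs more training data, trains less efficiently and is awkward to compute with. The index that one-hot encoding assigns to each word is also typically arbitrary, so it carries no information about relationships that may exist between words.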
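To make the contrast concrete, here is a minimal sketch comparing one-hot vectors with dense vectors. The three-word vocabulary and the hand-picked 3-dimensional vectors are assumptions made up for illustration; they are not trained embeddings.

```python
# Toy vocabulary (hypothetical, for illustration only).
vocab = ["king", "queen", "apple"]

def one_hot(word, vocab):
    """Return a list with a single 1 at the word's index and 0 elsewhere."""
    return [1 if w == word else 0 for w in vocab]

def dot(u, v):
    """Plain dot product of two equal-length lists."""
    return sum(a * b for a, b in zip(u, v))

# Hypothetical dense vectors, hand-picked rather than learned.
dense = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.9, 0.05],
    "apple": [0.1, 0.0, 0.95],
}

# Two different one-hot vectors are always orthogonal,
# so the encoding says nothing about how similar the words are.
print(dot(one_hot("king", vocab), one_hot("queen", vocab)))

# Dense vectors can place related words closer together than unrelated ones.
print(dot(dense["king"], dense["queen"]) > dot(dense["king"], dense["apple"]))
```

With a real vocabulary of tens of thousands of words, each one-hot vector would have that many entries, almost all of them zero, while a dense vector stays at a fixed, small dimension.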

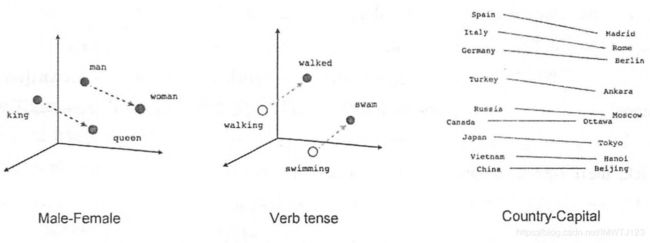

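Vector representations solve this problem. A vector space model converts words into continuous-valued vectors, and words with similar meanings are mapped to nearby positions in the vector space, as shown in Figure 1. Vector space models fall roughly into two classes. The first is count-based models, such as latent semantic analysis, which count how often words appear next to each other in the corpus and then turn those counts into a small, dense matrix. The second is predictive models, such as neural probabilistic language models, which infer a word, and its vector, from the words around it. Predictive models are usually trained by maximum likelihood: given the context h, maximize the probability of the target word w_t. The problem is the computational cost, since the probability of every word in the vocabulary has to be evaluated.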
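For reference, the maximum-likelihood objective described above is commonly written as a softmax over a compatibility score. The notation here is an assumption for illustration: w_t is the target word, h the context, V the vocabulary, and score(w, h) some function measuring how well word w fits context h.

```latex
P(w_t \mid h) = \frac{\exp\{\mathrm{score}(w_t, h)\}}{\sum_{w' \in V} \exp\{\mathrm{score}(w', h)\}}

J_{\mathrm{ML}} = \log P(w_t \mid h)
               = \mathrm{score}(w_t, h) - \log \sum_{w' \in V} \exp\{\mathrm{score}(w', h)\}
```

The sum over every word w' in V is the expensive part, because it has to be recomputed at each training step.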

Figure 1

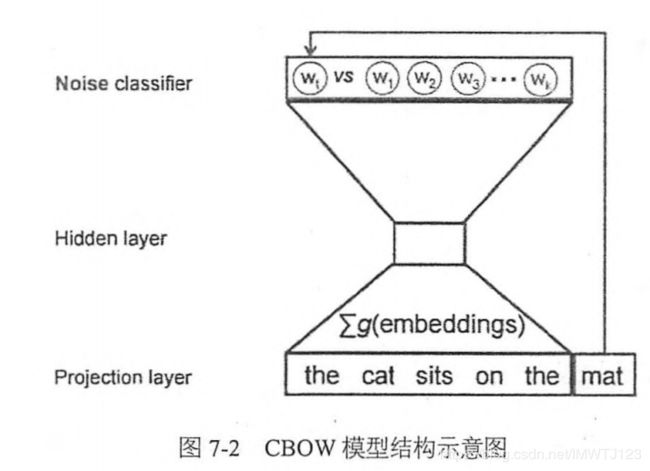

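Word2Vec is a computationally efficient predictive model that learns word vectors from raw text. It comes in two variants, CBOW (Continuous Bag of Words) and Skip-Gram. CBOW predicts the target word from its context, for example predicting "Beijing" from "the capital of China is", and it suits smaller datasets. Skip-Gram does the reverse, predicting the context from the target word, and it performs well on large corpora. Neither variant has to compute the full probability model during training: it is enough to train a binary classifier, as in Figure 2, that separates real target words from made-up (noise) words.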

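The training objective is optimized when the model assigns high probability to the real target word and low probability to the noise words. Training with made-up noise words is called negative sampling. Computing the loss function this way is very efficient: only k randomly selected words are evaluated instead of the whole vocabulary, so training is fast.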

In practice we use the Noise-Contrastive Estimation (NCE) loss, which TensorFlow implements directly as tf.nn.nce_loss().
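The idea of scoring one real word against k noise words can be sketched in a few lines. This is the simplified negative-sampling form of the loss, written as an assumption for illustration; it is not the exact computation inside tf.nn.nce_loss(), which additionally corrects for the noise distribution. The scores passed in are made-up numbers.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def negative_sampling_loss(score_true, scores_noise):
    """Binary logistic loss: the real word is labelled 1, each noise word 0.

    score_true   -- model score for the real target word
    scores_noise -- model scores for the k sampled noise words
    """
    loss = -math.log(sigmoid(score_true))
    for s in scores_noise:
        # log(1 - sigmoid(s)) equals log(sigmoid(-s)); the latter is more stable.
        loss -= math.log(sigmoid(-s))
    return loss

# Hypothetical scores with k = 5 noise words.
good = negative_sampling_loss(5.0, [-5.0] * 5)   # real word high, noise low
bad = negative_sampling_loss(-5.0, [5.0] * 5)    # real word low, noise high
print(good, bad)
```

Only k + 1 scores enter the loss, regardless of how large the vocabulary is, which is where the speed-up over the full softmax comes from.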

The rest of this article uses the Skip-Gram form of Word2Vec, starting with how its training samples are constructed.

II. Word2Vec in Skip-Gram mode

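Take the sentence "the quick brown fox jumped over the lazy dog" as an example. We need to build a mapping between contexts and target words, where the context consists of the words to the left and right of a word. Assume a window size of 1. Because Skip-Gram predicts the context from the target word, a training sample is no longer ([the, brown] -> quick) but (quick -> the) and (quick -> brown). The dataset is therefore (quick, the), (quick, brown), (brown, quick), (brown, fox), and so on. During training we want to predict the context word "the" from the target word "quick", and we also generate random words as negative samples. The predicted probability should be as large as possible on the positive sample "the" and small on the randomly generated negative samples.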
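The pair construction described above can be sketched as follows. The helper name skipgram_pairs is hypothetical, and unlike the list in the text this sketch also emits pairs for the words at the sentence edges, which have only one neighbour.

```python
def skipgram_pairs(tokens, window=1):
    """Return (target, context) pairs for every word within `window` positions."""
    pairs = []
    for i, target in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:                      # a word is not its own context
                pairs.append((target, tokens[j]))
    return pairs

sentence = "the quick brown fox jumped over the lazy dog".split()
pairs = skipgram_pairs(sentence, window=1)
print(pairs[:5])
# [('the', 'quick'), ('quick', 'the'), ('quick', 'brown'), ('brown', 'quick'), ('brown', 'fox')]
```

The generate_batch function later in this article does the same thing on word indices, but samples num_skips context words per target at random instead of listing all of them.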

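The approach is to use an optimization algorithm such as SGD to update the word embedding parameters of the model so that the loss over the probability distribution becomes as small as possible. Each word's embedded vector is adjusted continuously during training until it reaches the position in the space that best fits the corpus. At that point the loss is at its minimum, the model fits the corpus best, and the probability of predicting the correct word is highest.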
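A single SGD update on one (target, context) pair can be sketched like this. It assumes the simple logistic loss on the dot product of two vectors; the 2-dimensional starting vectors and the learning rate are made-up values, and the real model updates 128-dimensional rows of the embedding matrix through TensorFlow's optimizer instead.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sgd_step(v_in, u_out, label, lr=0.1):
    """One SGD step on the logistic loss for a single pair.

    label is 1 for a real context word and 0 for a noise word.
    Returns updated copies of both vectors.
    """
    g = sigmoid(dot(v_in, u_out)) - label           # d(loss) / d(score)
    new_v = [a - lr * g * b for a, b in zip(v_in, u_out)]
    new_u = [b - lr * g * a for a, b in zip(v_in, u_out)]
    return new_v, new_u

v = [0.1, -0.2]   # hypothetical embedding of the target word
u = [0.3, 0.4]    # hypothetical output vector of a real context word
before = sigmoid(dot(v, u))
for _ in range(200):
    v, u = sgd_step(v, u, label=1)
after = sigmoid(dot(v, u))
print(before, after)
```

Repeated updates on a positive pair push the predicted probability toward 1; updates with label=0 on noise words push it toward 0.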

III. Training Word2Vec with TensorFlow

import collections
import math
import os
import random
import zipfile
import urllib.request  # "import urllib" alone does not load the request submodule

import numpy as np
import tensorflow as tf
from sklearn.manifold import TSNE
import matplotlib.pyplot as plt

# Download the text data if it is not already present, then verify the file size.
url = 'http://mattmahoney.net/dc/'

def maybe_download(filename, expected_bytes):
    if not os.path.exists(filename):
        filename, _ = urllib.request.urlretrieve(url + filename, filename)
    statinfo = os.stat(filename)
    if statinfo.st_size == expected_bytes:
        print("Found and verified", filename)
    else:
        print(statinfo.st_size)
        raise Exception(
            "Failed to verify " + filename + ". Can you get to it with a browser?")
    return filename

filename = maybe_download('text8.zip', 31344016)

# Unzip the archive and split its first file into a list of words.
def read_data(filename):
    with zipfile.ZipFile(filename) as f:
        data = tf.compat.as_str(f.read(f.namelist()[0])).split()
    return data

words = read_data(filename)
print('Data size', len(words))

# Build the vocabulary. collections.Counter counts how often each word occurs,
# and most_common keeps the top 50000 words, which are stored in a dict
# (dict lookup is O(1)). Every word then gets an index: words outside the
# top 50000 map to 0 (UNK), all others map to their index in the dictionary.
# The function returns the encoded data, the frequency of each word (count),
# the vocabulary (dictionary) and its inverse (reverse_dictionary).
vocabulary_size = 50000

def build_dataset(words):
    count = [['UNK', -1]]
    count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
    dictionary = dict()
    for word, _ in count:
        dictionary[word] = len(dictionary)
    data = list()
    unk_count = 0
    for word in words:
        if word in dictionary:
            index = dictionary[word]
        else:
            index = 0  # dictionary['UNK']
            unk_count += 1
        data.append(index)
    count[0][1] = unk_count
    reverse_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
    return data, count, dictionary, reverse_dictionary

data, count, dictionary, reverse_dictionary = build_dataset(words)

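# Delete the original word list to save memory, then print the most frequent
# words with their counts and a sample of the encoded data.
del words
print('Most common words (+UNK)', count[:5])
print('Sample data', data[:10], [reverse_dictionary[i] for i in data[:10]])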
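To see what the vocabulary step produces without downloading text8, the same logic can be run on a tiny corpus. The function name build_toy_dataset, the seven-word corpus and the vocabulary size of 3 are assumptions chosen for illustration.

```python
import collections

def build_toy_dataset(words, vocabulary_size):
    """Same idea as build_dataset above, with the vocabulary size as a parameter."""
    count = [["UNK", -1]]
    count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
    dictionary = {word: idx for idx, (word, _) in enumerate(count)}
    # Words missing from the dictionary fall back to index 0 (UNK).
    data = [dictionary.get(word, 0) for word in words]
    count[0][1] = data.count(0)
    reverse_dictionary = {idx: word for word, idx in dictionary.items()}
    return data, count, dictionary, reverse_dictionary

toy_words = "the cat sat the cat the mat".split()
toy_data, toy_count, toy_dict, toy_reverse = build_toy_dataset(toy_words, vocabulary_size=3)
print(toy_data)     # [1, 2, 0, 1, 2, 1, 0]
print(toy_count)    # [['UNK', 2], ('the', 3), ('cat', 2)]
print([toy_reverse[i] for i in toy_data])
```

The two rare words "sat" and "mat" both collapse to index 0, which is how the full script handles everything outside the top 50000 words.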

# Generate training samples.
data_index = 0

def generate_batch(batch_size, num_skips, skip_window):
    global data_index
    assert batch_size % num_skips == 0
    assert num_skips <= 2 * skip_window
    batch = np.ndarray(shape=(batch_size), dtype=np.int32)
    labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
    span = 2 * skip_window + 1  # [skip_window, target, skip_window]
    # Sliding window over the data; appending drops the oldest word.
    buffer = collections.deque(maxlen=span)
    for _ in range(span):
        buffer.append(data[data_index])
        data_index = (data_index + 1) % len(data)
    for i in range(batch_size // num_skips):
        target = skip_window  # the target word sits at the centre of the buffer
        targets_to_avoid = [skip_window]
        for j in range(num_skips):
            # Pick a context position that has not been used for this target yet.
            while target in targets_to_avoid:
                target = random.randint(0, span - 1)
            targets_to_avoid.append(target)
            batch[i * num_skips + j] = buffer[skip_window]
            labels[i * num_skips + j, 0] = buffer[target]
        # Slide the window one word forward.
        buffer.append(data[data_index])
        data_index = (data_index + 1) % len(data)
    return batch, labels

# Call generate_batch for a quick sanity check.
batch, labels = generate_batch(batch_size=8, num_skips=2, skip_window=1)
for i in range(8):
    print(batch[i], reverse_dictionary[batch[i]], '->', labels[i, 0], reverse_dictionary[labels[i, 0]])
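The sliding window inside generate_batch relies on collections.deque with a maxlen: once the deque is full, each append discards the oldest element. A small stand-alone sketch with a made-up four-word sequence shows the behaviour:

```python
import collections

span = 3                                   # 2 * skip_window + 1 with skip_window = 1
window = collections.deque(maxlen=span)
for token in ["the", "quick", "brown", "fox"]:
    window.append(token)                   # drops the leftmost item when full
    print(list(window))

# The centre element is the target word; its neighbours are the context.
print(window[1])
```

After the last append the window holds ['quick', 'brown', 'fox'], so the target word is 'brown'.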

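# Define the training parameters.
batch_size = 128
embedding_size = 128  # dimension of each word vector
skip_window = 1       # how many words to consider on each side of the target
num_skips = 2         # how many samples to generate per target word
valid_size = 16       # number of words used for validation
valid_window = 100    # validation words are drawn from the 100 most frequent words
valid_examples = np.random.choice(valid_window, valid_size, replace=False)
num_sampled = 64      # number of noise words used as negative samples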

# Define the network structure of the Skip-Gram Word2Vec model.
# This code uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session).
graph = tf.Graph()
with graph.as_default():
    train_inputs = tf.placeholder(tf.int32, shape=[batch_size])
    train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])
    valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

    # Use '/cpu:0' instead if no GPU is available.
    with tf.device('/gpu:0'):
        # Randomly initialized word vectors, one row per word.
        embeddings = tf.Variable(
            tf.random_uniform([vocabulary_size, embedding_size], -1.0, 1.0))
        embed = tf.nn.embedding_lookup(embeddings, train_inputs)
        nce_weights = tf.Variable(
            tf.truncated_normal([vocabulary_size, embedding_size],
                                stddev=1.0 / math.sqrt(embedding_size)))
        nce_biases = tf.Variable(tf.zeros([vocabulary_size]))

    loss = tf.reduce_mean(tf.nn.nce_loss(weights=nce_weights,
                                         biases=nce_biases,
                                         labels=train_labels,
                                         inputs=embed,
                                         num_sampled=num_sampled,
                                         num_classes=vocabulary_size))
    optimizer = tf.train.GradientDescentOptimizer(1.0).minimize(loss)

    # L2-normalize the embeddings so that a dot product is a cosine similarity.
    norm = tf.sqrt(tf.reduce_sum(tf.square(embeddings), 1, keep_dims=True))
    normalized_embeddings = embeddings / norm
    valid_embeddings = tf.nn.embedding_lookup(normalized_embeddings, valid_dataset)
    similarity = tf.matmul(valid_embeddings, normalized_embeddings, transpose_b=True)
    init = tf.global_variables_initializer()

# Set the maximum number of iterations, then create the session and train.
num_steps = 100001

with tf.Session(graph=graph) as session:
    init.run()
    print("Initialized")
    average_loss = 0
    for step in range(num_steps):
        batch_inputs, batch_labels = generate_batch(batch_size, num_skips, skip_window)
        feed_dict = {train_inputs: batch_inputs, train_labels: batch_labels}
        _, loss_val = session.run([optimizer, loss], feed_dict=feed_dict)
        average_loss += loss_val
        # Every 2000 steps, report the average loss over those steps.
        if step % 2000 == 0:
            if step > 0:
                average_loss /= 2000
            print("Average loss at step ", step, ":", average_loss)
            average_loss = 0
        # Every 10000 steps, print the nearest neighbours of the validation words.
        if step % 10000 == 0:
            sim = similarity.eval()
            for i in range(valid_size):
                valid_word = reverse_dictionary[valid_examples[i]]
                top_k = 8
                # Position 0 of the sorted result is the word itself, so skip it.
                nearest = (-sim[i, :]).argsort()[1: top_k + 1]
                log_str = "Nearest to %s:" % valid_word
                for k in range(top_k):
                    close_word = reverse_dictionary[nearest[k]]
                    log_str = "%s %s," % (log_str, close_word)
                print(log_str)
    final_embeddings = normalized_embeddings.eval()
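The nearest-neighbour check in the training loop boils down to cosine similarity between normalized vectors. Below is a minimal pure-Python sketch of that idea; the four words and their 3-dimensional vectors are hypothetical, hand-picked values rather than trained embeddings, and the helper names are made up for this example.

```python
import math

def normalize(v):
    """Scale a vector to unit length, as done for normalized_embeddings."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def nearest_words(word, embeddings, top_k=2):
    """Return the top_k words whose unit vectors have the largest dot product."""
    unit = {w: normalize(v) for w, v in embeddings.items()}
    sims = {
        w: sum(a * b for a, b in zip(unit[word], u))
        for w, u in unit.items()
        if w != word                      # leave out the query word itself
    }
    return sorted(sims, key=sims.get, reverse=True)[:top_k]

# Hypothetical embeddings for illustration.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.9, 0.05],
    "apple": [0.1, 0.0, 0.95],
    "pear": [0.15, 0.05, 0.9],
}
print(nearest_words("king", toy_embeddings))
print(nearest_words("apple", toy_embeddings))
```

The training code gets the same effect with one matrix multiplication and drops the query word by slicing off the first entry of the sorted result.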

# Define a function to visualize the Word2Vec result.

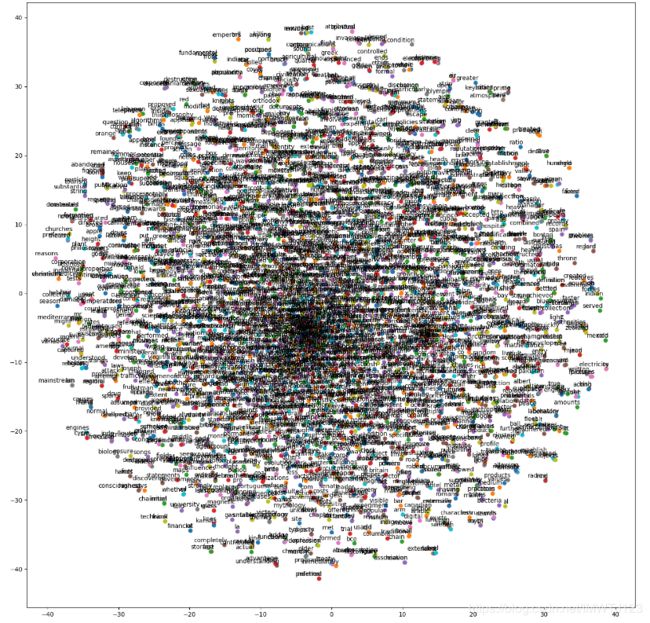

def plot_with_labels(low_dim_embs, labels, filename='tsne.png'):
    assert low_dim_embs.shape[0] >= len(labels), "More labels than embeddings"
    plt.figure(figsize=(18, 18))
    for i, label in enumerate(labels):
        x, y = low_dim_embs[i, :]
        plt.scatter(x, y)
        plt.annotate(label, xy=(x, y), xytext=(5, 2), textcoords='offset points', ha='right', va='bottom')
    plt.savefig(filename)

# Reduce the embeddings of the 100 most frequent words to 2 dimensions with t-SNE and plot them.
# Newer scikit-learn releases name the n_iter parameter max_iter.
tsne = TSNE(perplexity=30, n_components=2, init='pca', n_iter=5000)
plot_only = 100
low_dim_embs = tsne.fit_transform(final_embeddings[:plot_only, :])
labels = [reverse_dictionary[i] for i in range(plot_only)]
plot_with_labels(low_dim_embs, labels)

Reference: 黄文坚 (Huang Wenjian), TensorFlow实战