Convolutional Neural Networks (CNN): Advanced

AlexNet: the origin of modern neural networks

The architecture originated in the ImageNet competition.

1. AlexNet architecture

During convolution, the size of the output feature map follows this formula: (img_size - filter_size + 2 × pad) / stride + 1 = new_feature_size

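- INPUT: 224×224×3 (RGB image); in practice, preprocessing turns it into 227×227×3;

- CONV1: 96 kernels of size 11×11, stride 4, pad 0 (the figure appears to show 48 because the network was split across 2 GPUs, each holding 48 kernels); this yields two 55×55×48 feature maps, 55×55×96 in total, since (227 - 11) / 4 + 1 = 55;

- MAX POOL1: 3×3 filters, stride 2, max pooling, producing a 27×27×96 pooled feature map;

- NORM1: normalization layer (local response normalization);

- CONV2: 256 kernels of size 5×5, stride 1, pad 2, producing two 27×27×128 feature maps (27×27×256 in total);

- MAX POOL2: 3×3 filters, stride 2, max pooling, producing a 13×13×256 pooled feature map;

- NORM2: normalization layer;

- CONV3: 384 kernels of size 3×3, stride 1, pad 1, producing a 13×13×384 feature map;

- CONV4: 384 kernels of size 3×3, stride 1, pad 1, producing a 13×13×384 feature map;

- CONV5: 256 kernels of size 3×3, stride 1, pad 1, producing a 13×13×256 feature map;

- MAX POOL3: 3×3 filters, stride 2, max pooling, producing a 6×6×256 pooled feature map;

- FC6: 4096 neurons;

- FC7: 4096 neurons;

- FC8: 1000 neurons.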
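The spatial sizes in the list above can be checked with a few lines of Python. This is a minimal sketch, not part of the original notes: the names `conv_output_size`, `ALEXNET_LAYERS` and `trace_sizes` are made up for illustration, and the helper accounts for padding as 2 × pad, which the padded layers in the list need.

```python
def conv_output_size(img_size, filter_size, stride, pad=0):
    """Spatial size after one convolution or pooling layer."""
    span = img_size - filter_size + 2 * pad
    if span % stride != 0:
        raise ValueError("filter does not tile the input evenly")
    return span // stride + 1


# (layer name, filter_size, stride, pad), in the order listed above
ALEXNET_LAYERS = [
    ("CONV1", 11, 4, 0),
    ("MAX POOL1", 3, 2, 0),
    ("CONV2", 5, 1, 2),
    ("MAX POOL2", 3, 2, 0),
    ("CONV3", 3, 1, 1),
    ("CONV4", 3, 1, 1),
    ("CONV5", 3, 1, 1),
    ("MAX POOL3", 3, 2, 0),
]


def trace_sizes(input_size=227):
    """Return (layer name, output size) for every layer in sequence."""
    sizes = []
    size = input_size
    for name, filter_size, stride, pad in ALEXNET_LAYERS:
        size = conv_output_size(size, filter_size, stride, pad)
        sizes.append((name, size))
    return sizes


for name, size in trace_sizes():
    print(name, size)
```

Starting from the 227×227 input, the trace ends at 6, matching the 6×6×256 map that feeds FC6.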

2. VGG: an enhanced AlexNet

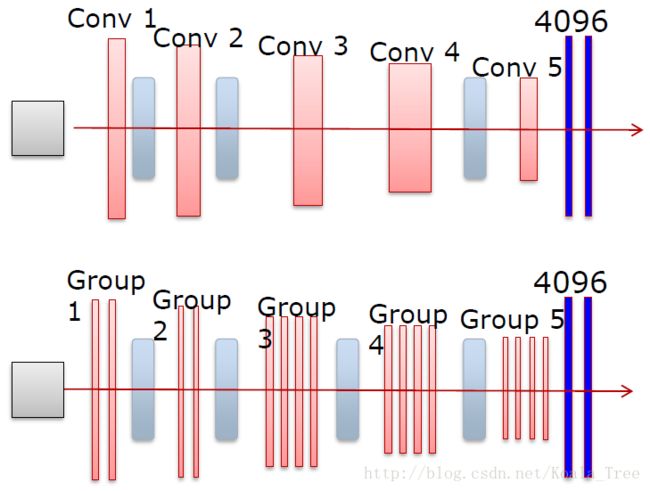

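Comparison of the AlexNet and VGG architectures

A "group" means that several stacked convolutional layers replace a single convolutional layer.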
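One commonly cited reason for stacking small kernels is that two 3×3 layers cover the same 5×5 receptive field as a single 5×5 layer while using fewer weights. The notes do not spell this out, so the sketch below is only an illustration; the channel count of 256 and the helper names are assumptions.

```python
def conv_params(kernel, c_in, c_out):
    """Weights plus biases of one kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + c_out


def stacked_receptive_field(kernel, n_layers):
    """Receptive field of n stacked stride-1 convolutions with the same kernel size."""
    return 1 + n_layers * (kernel - 1)


channels = 256  # illustrative channel count, not taken from the notes

single_5x5 = conv_params(5, channels, channels)
two_3x3 = 2 * conv_params(3, channels, channels)

print("receptive field of two 3x3 layers:", stacked_receptive_field(3, 2))
print("parameters, one 5x5 layer:", single_5x5)
print("parameters, two 3x3 layers:", two_3x3)
```

The stacked version also inserts an extra non-linearity between the two layers, which a single large kernel does not have.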

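VGG architecture

Role of VGG

- Simple structure, similar to AlexNet;

- Strong performance: a clear improvement over AlexNet, and close to GoogLeNet and ResNet;

- The most commonly chosen base model, which makes it convenient for optimizing and designing new architectures.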

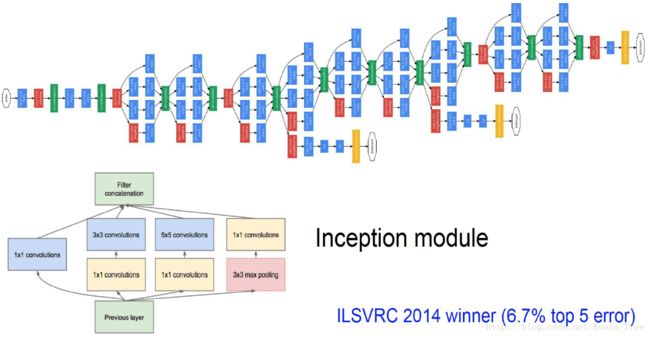

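3. GoogLeNet: multi-scale recognition

Evolution of the architecture

Problem with the naive structure: applying 3×3 and larger kernels directly makes the number of parameters very large;

Benefit of 1×1 kernels: with only a few parameters they reduce the dimensionality of the data, shrinking the "thickness" (channel count) of the feature maps.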
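The saving from a 1×1 reduction can be made concrete by counting weights. The sketch below is a rough illustration under assumed channel counts (256 in, 64 after the 1×1 reduction, 256 out); these numbers and the `conv_weights` helper are hypothetical and do not come from the GoogLeNet configuration.

```python
def conv_weights(kernel, c_in, c_out):
    """Number of weights in one kernel x kernel convolution (biases ignored)."""
    return kernel * kernel * c_in * c_out


# Hypothetical channel counts chosen only for illustration
c_in, c_reduced, c_out = 256, 64, 256

# 3x3 convolution applied directly to the thick input
direct = conv_weights(3, c_in, c_out)

# 1x1 convolution first thins the input, then the 3x3 runs on fewer channels
with_reduction = conv_weights(1, c_in, c_reduced) + conv_weights(3, c_reduced, c_out)

print("direct 3x3:", direct)
print("1x1 then 3x3:", with_reduction)
print("ratio: %.2f" % (direct / with_reduction))
```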

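Architecture details

Fully convolutional network (FCN)

A typical CNN: convolutional layers + fully connected layers;

A fully convolutional network: no fully connected layers (fully connected layers require a large number of parameters).

Characteristics:

- No restriction on the input image size;

- Some spatial information is lost;

- Fewer parameters and stronger expressive power.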
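The first point, that input size is unrestricted, follows from convolution weights being independent of the spatial size of the input. A toy pure-Python 1×1 convolution is enough to show it; the function names and the weight values below are made up for illustration, and a real FCN would of course use a tensor library.

```python
def conv1x1(feature_map, weights):
    """Apply a 1x1 convolution.

    feature_map: H x W x C_in nested lists.
    weights:     C_in x C_out nested lists, shared by every pixel.
    Returns an H x W x C_out nested list.
    """
    c_out = len(weights[0])
    return [
        [
            [sum(pixel[i] * weights[i][o] for i in range(len(pixel)))
             for o in range(c_out)]
            for pixel in row
        ]
        for row in feature_map
    ]


def make_map(height, width, channels, value=1.0):
    """Constant H x W x C feature map used as dummy input."""
    return [[[value] * channels for _ in range(width)] for _ in range(height)]


# 3 input channels -> 2 output channels; the values are arbitrary
weights = [[0.5, -1.0], [0.25, 2.0], [1.0, 0.0]]

small = conv1x1(make_map(2, 2, 3), weights)
large = conv1x1(make_map(5, 7, 3), weights)

# The same six weights handle both input sizes
print(len(small), len(small[0]), len(small[0][0]))
print(len(large), len(large[0]), len(large[0][0]))
```

A fully connected layer, by contrast, has one weight per input element, so its weight matrix fixes the input size in advance.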

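4. ResNet: machine recognition surpasses humans

Structural features

Question: why does ResNet work?

- 1. Forward pass: information from low-level and high-level convolutional layers is fused; the deeper the network, the stronger the model's expressive power;

- 2. Backward pass: gradients propagate more directly, skipping over intermediate layers through the shortcut connections and reaching every layer.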
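The backward-pass argument can be illustrated with a scalar toy model. This is only a sketch under strong simplifying assumptions (one weight per layer, one scalar activation, a numerical derivative), not the actual ResNet block; all names below are hypothetical.

```python
def relu(x):
    return max(0.0, x)


def plain_layer(x, w):
    """Ordinary layer: the input only survives through the weight."""
    return relu(w * x)


def residual_layer(x, w):
    """Residual layer: the shortcut adds the input back to the layer output."""
    return x + relu(w * x)


def stack(layer, x, w, depth):
    """Apply the same layer `depth` times."""
    for _ in range(depth):
        x = layer(x, w)
    return x


def numerical_grad(f, x, eps=1e-6):
    """Central-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


w, depth = 0.1, 10  # small weight, ten layers

g_plain = numerical_grad(lambda x: stack(plain_layer, x, w, depth), 1.0)
g_res = numerical_grad(lambda x: stack(residual_layer, x, w, depth), 1.0)

print("gradient through plain stack:   ", g_plain)
print("gradient through residual stack:", g_res)
```

With small weights the plain stack multiplies the gradient by 0.1 at every layer, so it all but vanishes, while each residual layer contributes a factor of 1 + 0.1 because the shortcut passes the derivative straight through.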

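5. DeepFace: special handling of structured images

Face recognition: determining a person's identity from their face; in applications the more common task is verification (confirming a claimed identity).

Characteristics of face-recognition data

- Structured: all faces share a similar composition and can, in theory, be aligned;

- Differentiated: the same position looks different from face to face;

Local convolution

- Each kernel is fixed to one region and does not slide;

- Kernels are not shared between regions;

- Each kernel's parameters are determined by the data in its fixed region.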
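The difference between an ordinary (shared) convolution and the local convolution described above can be sketched in one dimension. DeepFace works on 2D images; the 1D signal, the kernel values and the function names here are simplifications chosen for illustration.

```python
def shared_conv1d(signal, kernel):
    """Ordinary convolution: one kernel slides over every position."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def local_conv1d(signal, kernels):
    """Local convolution: kernels[i] is used only at output position i."""
    k = len(kernels[0])
    n_out = len(signal) - k + 1
    if len(kernels) != n_out:
        raise ValueError("need exactly one kernel per output position")
    return [
        sum(signal[i + j] * kernels[i][j] for j in range(k))
        for i in range(n_out)
    ]


signal = [1, 2, 3, 4, 5]

shared = shared_conv1d(signal, [1, 0, -1])
local = local_conv1d(signal, [[1, 0, -1], [0, 1, 0], [1, 1, 1]])

print("shared kernel output:", shared, "weights:", 3)
print("local kernels output:", local, "weights:", 3 * 3)
```

The local version needs one kernel per output position, so its weight count grows with the input size.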

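Drawbacks of fully locally connected convolution

- Preprocessing: extensive alignment is needed, the alignment requirements are strict, and original information may be lost;

- The number of convolution parameters is very large, the model is hard to converge, and large amounts of data are required;

- The model scales poorly and is essentially limited to face tasks.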

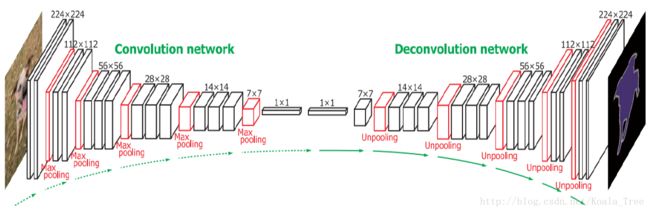

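6. U-Net: image generation network

A convolutional neural network generates a special type of image; every pixel of the output has to be generated, which makes this a multi-target regression problem.

VGG U-Net

Convolution is paired with deconvolution (transposed convolution), and pooling is paired with unpooling.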
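The pooling and unpooling pair can be sketched in pure Python: max pooling remembers where each maximum came from, and unpooling writes the pooled values back to those positions, leaving zeros elsewhere. This is one common way to define unpooling and is shown only as an illustration; the function names and the 4×4 input are made up.

```python
def max_pool_2x2(image):
    """2x2 max pooling with stride 2; also returns the (row, col) of each maximum."""
    pooled, positions = [], []
    for r in range(0, len(image), 2):
        pooled_row, pos_row = [], []
        for c in range(0, len(image[0]), 2):
            window = [
                (image[r + dr][c + dc], (r + dr, c + dc))
                for dr in range(2)
                for dc in range(2)
            ]
            value, pos = max(window)
            pooled_row.append(value)
            pos_row.append(pos)
        pooled.append(pooled_row)
        positions.append(pos_row)
    return pooled, positions


def max_unpool_2x2(pooled, positions, height, width):
    """Place each pooled value back at its recorded position; all other pixels are 0."""
    output = [[0] * width for _ in range(height)]
    for pooled_row, pos_row in zip(pooled, positions):
        for value, (r, c) in zip(pooled_row, pos_row):
            output[r][c] = value
    return output


image = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 7, 2],
    [6, 2, 3, 1],
]

pooled, positions = max_pool_2x2(image)
restored = max_unpool_2x2(pooled, positions, 4, 4)

print(pooled)
for row in restored:
    print(row)
```

The restored map has the original resolution but only keeps the maxima, which is why such decoders follow unpooling with further convolutions to fill in detail.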

Generating image segmentation maps

7. VGG example:

Below is a VGG16 model in TensorFlow.

- Utility functions required:

utils.py:

    import numpy as np
    import skimage
    import skimage.io
    import skimage.transform


    def load_image(path):
        """Load an image, center-crop it to a square and resize it to [224, 224, 3].

        The returned array is [height, width, depth] with values in [0, 1].
        """
        img = skimage.io.imread(path)
        img = img / 255.0
        assert (0 <= img).all() and (img <= 1.0).all()
        # crop the image from the center
        short_edge = min(img.shape[:2])
        yy = int((img.shape[0] - short_edge) / 2)
        xx = int((img.shape[1] - short_edge) / 2)
        crop_img = img[yy: yy + short_edge, xx: xx + short_edge]
        # resize to 224, 224
        resized_img = skimage.transform.resize(crop_img, (224, 224))
        return resized_img


    def print_prob(prob, file_path):
        """Print the top-1 and top-5 labels and return the top-1 label string."""
        with open(file_path) as f:
            synset = [line.strip() for line in f]
        # class indices sorted by descending probability
        pred = np.argsort(prob)[::-1]
        # top-1 label
        top1 = synset[pred[0]]
        print("Top1:", top1, prob[pred[0]])
        # top-5 labels
        top5 = [(synset[pred[i]], prob[pred[i]]) for i in range(5)]
        print("Top5:", top5)
        return top1


    def load_image2(path, height=None, width=None):
        """Load an image and resize it, keeping the aspect ratio if only one side is given."""
        img = skimage.io.imread(path)
        img = img / 255.0
        if height is not None and width is not None:
            ny = height
            nx = width
        elif height is not None:
            ny = height
            nx = int(img.shape[1] * ny / img.shape[0])
        elif width is not None:
            nx = width
            ny = int(img.shape[0] * nx / img.shape[1])
        else:
            ny = img.shape[0]
            nx = img.shape[1]
        return skimage.transform.resize(img, (ny, nx))


    def test():
        img = skimage.io.imread("./test_data/starry_night.jpg")
        ny = 300
        # the output shape must be integers under Python 3
        nx = int(img.shape[1] * ny / img.shape[0])
        img = skimage.transform.resize(img, (ny, nx))
        # resize returns floats in [0, 1]; convert to uint8 before writing a JPEG
        skimage.io.imsave("./test_data/test/output.jpg", skimage.img_as_ubyte(img))


    if __name__ == "__main__":
        test()

- VGG16 model structure:

vgg16.py:

    import inspect
    import os
    import time

    import numpy as np
    import tensorflow as tf  # written against the TensorFlow 1.x graph API

    # per-channel mean pixel values, in BGR order
    VGG_MEAN = [103.939, 116.779, 123.68]


    class Vgg16:
        def __init__(self, vgg16_npy_path=None):
            if vgg16_npy_path is None:
                # default: vgg16.npy next to this source file
                path = inspect.getfile(Vgg16)
                path = os.path.abspath(os.path.join(path, os.pardir))
                path = os.path.join(path, "vgg16.npy")
                vgg16_npy_path = path
                print(path)

            # the .npy file holds a pickled dict: layer name -> [weights, biases];
            # recent NumPy versions need allow_pickle=True to load it
            self.data_dict = np.load(vgg16_npy_path, encoding='latin1', allow_pickle=True).item()
            print("npy file loaded")

        def build(self, rgb):
            """
            load variable from npy to build the VGG

            :param rgb: rgb image [batch, height, width, 3] values scaled [0, 1]
            """
            start_time = time.time()
            print("build model started")
            rgb_scaled = rgb * 255.0

            # Convert RGB to BGR and subtract the channel means
            red, green, blue = tf.split(axis=3, num_or_size_splits=3, value=rgb_scaled)
            assert red.get_shape().as_list()[1:] == [224, 224, 1]
            assert green.get_shape().as_list()[1:] == [224, 224, 1]
            assert blue.get_shape().as_list()[1:] == [224, 224, 1]
            bgr = tf.concat(axis=3, values=[
                blue - VGG_MEAN[0],
                green - VGG_MEAN[1],
                red - VGG_MEAN[2],
            ])
            assert bgr.get_shape().as_list()[1:] == [224, 224, 3]

            self.conv1_1 = self.conv_layer(bgr, "conv1_1")
            self.conv1_2 = self.conv_layer(self.conv1_1, "conv1_2")
            self.pool1 = self.max_pool(self.conv1_2, 'pool1')

            self.conv2_1 = self.conv_layer(self.pool1, "conv2_1")
            self.conv2_2 = self.conv_layer(self.conv2_1, "conv2_2")
            self.pool2 = self.max_pool(self.conv2_2, 'pool2')

            self.conv3_1 = self.conv_layer(self.pool2, "conv3_1")
            self.conv3_2 = self.conv_layer(self.conv3_1, "conv3_2")
            self.conv3_3 = self.conv_layer(self.conv3_2, "conv3_3")
            self.pool3 = self.max_pool(self.conv3_3, 'pool3')

            self.conv4_1 = self.conv_layer(self.pool3, "conv4_1")
            self.conv4_2 = self.conv_layer(self.conv4_1, "conv4_2")
            self.conv4_3 = self.conv_layer(self.conv4_2, "conv4_3")
            self.pool4 = self.max_pool(self.conv4_3, 'pool4')

            self.conv5_1 = self.conv_layer(self.pool4, "conv5_1")
            self.conv5_2 = self.conv_layer(self.conv5_1, "conv5_2")
            self.conv5_3 = self.conv_layer(self.conv5_2, "conv5_3")
            self.pool5 = self.max_pool(self.conv5_3, 'pool5')

            self.fc6 = self.fc_layer(self.pool5, "fc6")
            assert self.fc6.get_shape().as_list()[1:] == [4096]
            self.relu6 = tf.nn.relu(self.fc6)

            self.fc7 = self.fc_layer(self.relu6, "fc7")
            self.relu7 = tf.nn.relu(self.fc7)

            self.fc8 = self.fc_layer(self.relu7, "fc8")

            self.prob = tf.nn.softmax(self.fc8, name="prob")

            # the weights now live in the graph as constants; free the dict
            self.data_dict = None
            print("build model finished: %ds" % (time.time() - start_time))

        def avg_pool(self, bottom, name):
            return tf.nn.avg_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

        def max_pool(self, bottom, name):
            return tf.nn.max_pool(bottom, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

        def conv_layer(self, bottom, name):
            with tf.variable_scope(name):
                filt = self.get_conv_filter(name)
                conv = tf.nn.conv2d(bottom, filt, [1, 1, 1, 1], padding='SAME')

                conv_biases = self.get_bias(name)
                bias = tf.nn.bias_add(conv, conv_biases)

                relu = tf.nn.relu(bias)
                return relu

        def fc_layer(self, bottom, name):
            with tf.variable_scope(name):
                # flatten everything except the batch dimension
                shape = bottom.get_shape().as_list()
                dim = 1
                for d in shape[1:]:
                    dim *= d
                x = tf.reshape(bottom, [-1, dim])

                weights = self.get_fc_weight(name)
                biases = self.get_bias(name)

                # Fully connected layer. tf.nn.bias_add broadcasts the biases
                # over the batch dimension.
                fc = tf.nn.bias_add(tf.matmul(x, weights), biases)
                return fc

        def get_conv_filter(self, name):
            return tf.constant(self.data_dict[name][0], name="filter")

        def get_bias(self, name):
            return tf.constant(self.data_dict[name][1], name="biases")

        def get_fc_weight(self, name):
            return tf.constant(self.data_dict[name][0], name="weights")

- Load the pretrained VGG16 parameters and run the model (inference):

vgg16_test.py:

    import numpy as np
    import tensorflow as tf  # written against the TensorFlow 1.x graph API

    import utils
    import vgg16

    img1 = utils.load_image("./test_data/dog.png")
    print(img1.shape)
    batch = img1.reshape((1, 224, 224, 3))

    # with tf.Session(config=tf.ConfigProto(gpu_options=(tf.GPUOptions(per_process_gpu_memory_fraction=0.7)))) as sess:
    with tf.device('/cpu:0'):
        with tf.Session() as sess:
            images = tf.placeholder("float", [1, 224, 224, 3])
            feed_dict = {images: batch}

            vgg = vgg16.Vgg16()
            with tf.name_scope("content_vgg"):
                vgg.build(images)

            prob = sess.run(vgg.prob, feed_dict=feed_dict)
            # indices of the five most probable classes, highest first
            top5 = np.argsort(prob[0])[-1:-6:-1]
            for label in top5:
                print(label)

            # shapes of two intermediate feature maps
            pool1 = sess.run(vgg.pool1, feed_dict=feed_dict)
            print(pool1.shape)
            conv3_3 = sess.run(vgg.conv3_3, feed_dict=feed_dict)
            print(conv3_3.shape)

            # now let's inspect the model filters;
            # build() cleared data_dict, so load the weights again
            vgg = vgg16.Vgg16()
            # get the saved parameter dict keys
            print(vgg.data_dict.keys())

            # show the first conv layer
            filter_conv1 = vgg.get_conv_filter("conv1_1")
            print('filter_conv1', filter_conv1.shape)
            # tf.Print only creates an op; evaluate the tensor to see its values
            print(sess.run(filter_conv1[:, :, :, :5]))

            filter_conv3 = vgg.get_conv_filter("conv3_3")
            print('filter_conv3', filter_conv3.shape)
            print(sess.run(filter_conv3[:, :, :3, :5]))