sklearn计算准确率和召回率----accuracy_score、metrics.precision_score、metrics.recall_score

转自:http://d0evi1.com/sklearn/model_evaluation/

accuracy_score

** clf.score(X_test, y_test)引用的就是accuracy_score方法(clf为分类器对象)

accuracy_score函数计算了准确率,不管是正确预测的fraction(default),还是count(normalize=False)。

在multilabel分类中,该函数会返回子集的准确率。如果对于一个样本来说,必须严格匹配真实数据集中的label,整个集合的预测标签返回1.0;否则返回0.0.

预测值与真实值的准确率,在n个样本下的计算公式如下:

accuracy(y,ŷ )=1nsamples∑i=0nsamples−1l(ŷ i=yi)accuracy(y,y^)=1nsamples∑i=0nsamples−1l(y^i=yi)

1(x)为指示函数。

>>> import numpy as np

>>> from sklearn.metrics import accuracy_score

>>> y_pred = [0, 2, 1, 3]

>>> y_true = [0, 1, 2, 3]

>>> accuracy_score(y_true, y_pred)

0.5

>>> accuracy_score(y_true, y_pred, normalize=False)

2在多标签的case下,二分类label:

>>> accuracy_score(np.array([[0, 1], [1, 1]]), np.ones((2, 2)))

0.5metrics.precision_score、metrics.recall_score

*注意正确率和召回率的计算方法,跟我理解的有点不一样

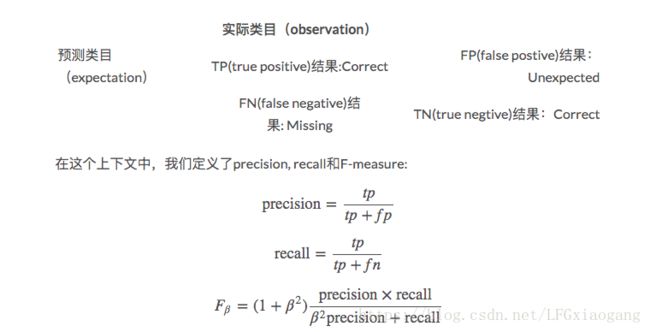

在二元分类中,术语“positive”和“negative”指的是分类器的预测类别(expectation),术语“true”和“false”则指的是预测是否正确(有时也称为:观察observation)。给出如下的定义:

| 实际类目(observation) | ||

|---|---|---|

| 预测类目(expectation) | TP(true positive)结果:Correct | FP(false postive)结果:Unexpected |

| FN(false negative)结果: Missing | TN(true negtive)结果:Correct |

这里是一个二元分类的示例:

>>> from sklearn import metrics

>>> y_pred = [0, 1, 0, 0]

>>> y_true = [0, 1, 0, 1]

>>> metrics.precision_score(y_true, y_pred)

1.0

>>> metrics.recall_score(y_true, y_pred)

0.5

>>> metrics.f1_score(y_true, y_pred)

0.66...

>>> metrics.fbeta_score(y_true, y_pred, beta=0.5)

0.83...

>>> metrics.fbeta_score(y_true, y_pred, beta=1)

0.66...

>>> metrics.fbeta_score(y_true, y_pred, beta=2)

0.55...

>>> metrics.precision_recall_fscore_support(y_true, y_pred, beta=0.5)

(array([ 0.66..., 1. ]), array([ 1. , 0.5]), array([ 0.71..., 0.83...]), array([2, 2]...))

>>> import numpy as np

>>> from sklearn.metrics import precision_recall_curve

>>> from sklearn.metrics import average_precision_score

>>> y_true = np.array([0, 0, 1, 1])

>>> y_scores = np.array([0.1, 0.4, 0.35, 0.8])

>>> precision, recall, threshold = precision_recall_curve(y_true, y_scores)

>>> precision

array([ 0.66..., 0.5 , 1. , 1. ])

>>> recall

array([ 1. , 0.5, 0.5, 0. ])

>>> threshold

array([ 0.35, 0.4 , 0.8 ])

>>> average_precision_score(y_true, y_scores)

0.79...